Feeling uncertain about what to expect in your upcoming interview? We’ve got you covered! This blog highlights the most important Semantic Analysis interview questions and provides actionable advice to help you stand out as the ideal candidate. Let’s pave the way for your success.

Questions Asked in Semantic Analysis Interview

Q 1. Explain the difference between syntax and semantics.

Syntax and semantics are two fundamental aspects of language understanding. Think of it like this: syntax is the grammar – the rules for how words are arranged to form sentences. Semantics, on the other hand, is about meaning – what those sentences actually convey.

For example, the sentence “The cat sat on the mat.” is grammatically correct (syntactically sound), whereas “Mat on the sat cat the.” is syntactically incorrect because it violates the rules of English word order. A human reader might still guess the intended meaning from the familiar words, but a system cannot reliably recover it, because word order is what signals who did what to whom. The reverse also holds: a sentence can be perfectly grammatical yet semantically odd, as in Chomsky’s classic example “Colorless green ideas sleep furiously.”

In short: Syntax focuses on the structure, while semantics focuses on the meaning.

Q 2. Describe different types of semantic relationships (e.g., synonymy, antonymy, hyponymy).

Semantic relationships describe how words and concepts relate to each other in meaning. Several key types exist:

- Synonymy: Words with similar meanings. For example, ‘happy’ and ‘joyful’ are synonyms.

- Antonymy: Words with opposite meanings. ‘Hot’ and ‘cold’ are antonyms.

- Hyponymy: A hierarchical relationship in which one word denotes a more specific kind of what a more general word denotes. ‘Dog’ is a hyponym of ‘animal’, and ‘Golden Retriever’ is a hyponym of ‘dog’. Chaining these links creates a hierarchy of meaning.

- Meronymy: Represents a part-whole relationship. ‘Wheel’ is a meronym of ‘car’.

- Hypernymy: The inverse of hyponymy; the more general term. ‘Animal’ is a hypernym of ‘dog’.

Understanding these relationships is crucial for tasks like information retrieval, text summarization, and question answering, as they allow machines to grasp the nuanced connections between words.
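To make these relations concrete, here is a minimal Python sketch over a tiny hand-built lexicon. The dictionaries `HYPERNYM`, `ANTONYMS`, and `MERONYMS` are hypothetical toy data, not WordNet. The key point it shows is that hyponymy is transitive, so following hypernym links upward recovers the whole hierarchy.

```python
# Hypothetical toy lexicon (not WordNet): each word maps to its direct hypernym.
HYPERNYM = {
    "golden retriever": "dog",
    "dog": "animal",
    "cat": "animal",
}

# Antonym pairs and (part, whole) meronym pairs for the same toy lexicon.
ANTONYMS = {("hot", "cold")}
MERONYMS = {("wheel", "car")}


def hypernym_chain(word):
    """Walk up the hierarchy, returning ever more general terms."""
    chain = []
    while word in HYPERNYM:
        word = HYPERNYM[word]
        chain.append(word)
    return chain


def is_hyponym(specific, general):
    """True if `specific` sits anywhere below `general` (hyponymy is transitive)."""
    return general in hypernym_chain(specific)


def is_antonym(a, b):
    # Antonymy is symmetric, so check both orders.
    return (a, b) in ANTONYMS or (b, a) in ANTONYMS


def is_meronym(part, whole):
    # Meronymy is directional: a wheel is part of a car, not vice versa.
    return (part, whole) in MERONYMS


print(hypernym_chain("golden retriever"))  # ['dog', 'animal']
print(is_hyponym("animal", "dog"))         # False: that direction is hypernymy
```

A real lexical database such as WordNet stores these links between synsets rather than raw strings, but the traversal idea is the same.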

Q 3. What is WordNet, and what are its applications in Semantic Analysis?

WordNet is a large lexical database of English. It organizes words into sets of synonyms called synsets, showing semantic relationships between them (like hyponymy, synonymy, etc.). Think of it as a vast interconnected network of words and their meanings.

Applications in semantic analysis are numerous:

- Word Sense Disambiguation (WSD): WordNet helps determine the correct meaning of a word in context, as many words have multiple meanings.

- Text Summarization: Identifying key concepts and relationships in text to create concise summaries.

- Information Retrieval: Improving search engine accuracy by understanding the semantic relationships between search queries and documents.

- Question Answering: Matching questions to relevant parts of a knowledge base.

Essentially, WordNet provides a structured representation of word meaning that computers can use to perform sophisticated semantic analysis tasks.

Q 4. Explain the concept of Word Sense Disambiguation (WSD).

Word Sense Disambiguation (WSD) is the task of identifying the correct meaning of a word given its context. Many words have multiple senses (meanings), and WSD determines which sense applies in a specific instance. For example, the word ‘bank’ can refer to a financial institution or to the side of a river.

Consider the sentence: “I went to the bank to deposit money.” WSD would correctly identify ‘bank’ as the financial institution, not the riverbank.

Accurate WSD is critical for many Natural Language Processing (NLP) applications because misinterpreting word senses can lead to serious errors in downstream tasks.
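One classic knowledge-based way to do this is the simplified Lesk algorithm: pick the sense whose dictionary definition (gloss) shares the most words with the context. The sketch below assumes a tiny hand-written sense inventory; the glosses in `SENSES` and the stop-word list are illustrative, not taken from WordNet.

```python
import re

# Hypothetical sense inventory with short, hand-written glosses.
SENSES = {
    "bank": {
        "bank/finance": "an institution where people deposit money and take out loans",
        "bank/river": "the sloping land beside a river or stream, often muddy",
    }
}
STOP_WORDS = {"a", "an", "the", "to", "i", "of", "or", "and", "was", "is", "where"}


def content_words(text):
    """Lowercased word tokens with stop words removed."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS}


def simplified_lesk(word, sentence):
    """Return the sense of `word` whose gloss overlaps most with the sentence."""
    context = content_words(sentence) - {word}
    best_sense, best_overlap = None, -1
    for sense, gloss in SENSES[word].items():
        overlap = len(context & content_words(gloss))
        if overlap > best_overlap:  # on a tie, the first listed sense wins
            best_sense, best_overlap = sense, overlap
    return best_sense


print(simplified_lesk("bank", "I went to the bank to deposit money."))  # bank/finance
print(simplified_lesk("bank", "The river bank was muddy."))              # bank/river
```

Because the method only counts shared words, it has no signal when the context uses different vocabulary from the gloss (say, ‘cash’ instead of ‘money’), which is one reason supervised and embedding-based methods usually outperform it.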

Q 5. Describe different approaches to WSD (e.g., supervised, unsupervised).

Various approaches exist for WSD:

- Supervised approaches: These methods use a labeled dataset where each instance of a word is annotated with its correct sense. Machine learning models are trained on this data to learn to predict the correct sense for new instances. This often requires significant amounts of manually annotated data.

- Unsupervised approaches: These methods don’t require labeled data. They usually rely on distributional semantics, the idea that words with similar meanings appear in similar contexts. A common technique is to cluster the contexts in which an ambiguous word occurs (based on the words that co-occur with it), so that each cluster corresponds to an induced sense.

- Knowledge-based approaches: These leverage external knowledge sources, like WordNet, to disambiguate word senses based on their semantic relationships.

The choice of approach depends on the availability of labeled data and the specific application. Supervised methods generally achieve higher accuracy but require more resources, while unsupervised methods are more scalable but may be less accurate.

Q 6. What are ontologies and their role in Semantic Analysis?

Ontologies are formal representations of knowledge. They define concepts, their properties, and the relationships between them. Imagine a dictionary and a thesaurus combined, providing a structured view of a specific domain’s knowledge. They are often represented as graphs.

In semantic analysis, ontologies provide a structured framework for representing meaning. They allow computers to reason about information and make inferences. For instance, an ontology might define the concept of ‘car’ with properties like ‘color,’ ‘make,’ and ‘model,’ and relationships to concepts like ‘vehicle’ and ‘engine’.

Applications include semantic search, knowledge representation, and reasoning in expert systems.
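Here is a minimal sketch of the car example, assuming a hypothetical single-inheritance ontology written as Python objects: each `Concept` has an is-a link to a parent, its own properties, and has-part links. The `max_speed` property on `vehicle` and the `sedan` subclass are invented for illustration. The inference shown is the simplest kind an ontology enables: a subclass inherits its ancestors' properties.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(eq=False)
class Concept:
    name: str
    parent: Optional["Concept"] = None            # is-a (subclass) link
    properties: set = field(default_factory=set)  # properties declared here
    parts: set = field(default_factory=set)       # has-part links, by name

    def all_properties(self):
        """Own properties plus everything inherited from ancestors."""
        inherited = self.parent.all_properties() if self.parent else set()
        return inherited | self.properties

    def is_a(self, other):
        """Follow is-a links upward to test class membership."""
        node = self
        while node is not None:
            if node is other:
                return True
            node = node.parent
        return False


# Hypothetical mini-ontology based on the car example above.
vehicle = Concept("vehicle", properties={"max_speed"})
car = Concept("car", parent=vehicle, properties={"color", "make", "model"}, parts={"engine"})
sedan = Concept("sedan", parent=car)

print(sorted(sedan.all_properties()))  # ['color', 'make', 'max_speed', 'model']
print(sedan.is_a(vehicle))             # True
```

Production ontologies are usually written in a standard language such as OWL and queried with a reasoner, which supports multiple inheritance and far richer inferences than this sketch.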

Q 7. Explain the difference between OWL and RDF.

Both OWL (Web Ontology Language) and RDF (Resource Description Framework) are used for representing knowledge on the Semantic Web, but they differ in their expressiveness and capabilities.

RDF is a basic framework for representing data as triples (subject, predicate, object). It’s simple and flexible, making it suitable for a wide range of applications. However, its expressiveness is limited: even with RDF Schema, it cannot state constraints such as cardinality restrictions, disjoint classes, or transitive and inverse properties.

OWL builds upon RDF, providing a richer vocabulary for expressing more complex ontologies. OWL allows for defining classes, properties, and relationships with greater precision and detail, enabling more sophisticated reasoning and inference. The original OWL specification defines three levels of expressiveness (OWL Lite, OWL DL, OWL Full), and OWL 2 adds profiles (OWL 2 EL, QL, and RL) that trade expressiveness for efficient reasoning, allowing you to choose the level appropriate for your needs.

In essence, RDF is a foundation, while OWL is a more powerful language built on top of it, designed for more complex semantic modeling.
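To illustrate the RDF side, here is a minimal sketch that stores facts as plain (subject, predicate, object) tuples and answers pattern queries, where `None` plays the role of a query variable. The `ex:` prefixed names are hypothetical stand-ins for full IRIs, and this is not a real RDF library; in practice you would use an RDF store and SPARQL.

```python
# Facts as RDF-style triples; "ex:" names stand in for full IRIs.
TRIPLES = {
    ("ex:Paris", "ex:capitalOf", "ex:France"),
    ("ex:Berlin", "ex:capitalOf", "ex:Germany"),
    ("ex:France", "rdf:type", "ex:Country"),
    ("ex:Germany", "rdf:type", "ex:Country"),
}


def match(s=None, p=None, o=None):
    """Return all triples fitting the pattern; None matches anything."""
    return sorted(
        (ts, tp, to)
        for ts, tp, to in TRIPLES
        if (s is None or ts == s) and (p is None or tp == p) and (o is None or to == o)
    )


# Which subject is the capital of France?
print(match(p="ex:capitalOf", o="ex:France"))
# [('ex:Paris', 'ex:capitalOf', 'ex:France')]
```

Plain triples like these can only state facts. OWL layers axioms on top, for example declaring `ex:capitalOf` a functional property (each city is the capital of at most one country), so that a reasoner can draw conclusions the raw data does not state.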

Q 8. How do you evaluate the performance of a semantic analysis system?

Evaluating a semantic analysis system’s performance isn’t a single metric but a multifaceted process. We need to consider various aspects depending on the specific task. For example, if we’re building a system for sentiment analysis, accuracy in classifying positive, negative, or neutral sentiment is paramount. For question answering, we’d look at the precision and recall of the answers generated. Let’s break down some key performance indicators (KPIs):

- Accuracy: This measures the percentage of correctly processed inputs. For instance, if our system classifies 95 out of 100 sentences correctly, its accuracy is 95%. However, accuracy alone can be misleading, especially with imbalanced datasets.

- Precision: Out of all the instances the system identified as belonging to a particular class (e.g., positive sentiment), what percentage was actually correct? A high precision means fewer false positives.

- Recall: Out of all the instances that actually belonged to a particular class, what percentage did the system correctly identify? A high recall means fewer false negatives.

- F1-Score: This is the harmonic mean of precision and recall, providing a balanced measure. It’s particularly useful when dealing with class imbalances.

- Execution Time: In real-world applications, efficiency is crucial. We need to assess how quickly the system processes inputs, especially for large datasets.

Beyond these, we might use more specialized metrics. For instance, in a machine translation context, BLEU score (Bilingual Evaluation Understudy) is commonly used to assess the quality of translation. The choice of evaluation metrics always depends on the specific application and goals of the semantic analysis system.
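The following minimal sketch computes accuracy, and precision, recall, and F1 for one class, from parallel lists of gold and predicted labels. The label names are hypothetical, and the example data is deliberately imbalanced to show why accuracy alone can mislead.

```python
def accuracy(gold, predicted):
    """Fraction of items whose predicted label matches the gold label."""
    return sum(g == p for g, p in zip(gold, predicted)) / len(gold)


def precision_recall_f1(gold, predicted, positive):
    """Precision, recall and F1 for the class `positive`."""
    pairs = list(zip(gold, predicted))
    tp = sum(g == positive and p == positive for g, p in pairs)  # true positives
    fp = sum(g != positive and p == positive for g, p in pairs)  # false positives
    fn = sum(g == positive and p != positive for g, p in pairs)  # false negatives
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Imbalanced toy data: 9 negative reviews, 1 positive, and a lazy
# classifier that always predicts "neg".
gold = ["neg"] * 9 + ["pos"]
predicted = ["neg"] * 10
print(accuracy(gold, predicted))                    # 0.9
print(precision_recall_f1(gold, predicted, "pos"))  # (0.0, 0.0, 0.0)
```

The classifier scores 90% accuracy while never finding a single positive example; precision, recall, and F1 for the positive class expose that.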

Q 9. Describe different semantic similarity measures.

Semantic similarity measures quantify how alike two pieces of text are in meaning, not just in surface form. The measures below range from purely lexical baselines (Jaccard, edit distance) to genuinely semantic ones (knowledge-graph paths, embeddings), each with its strengths and weaknesses:

- Cosine Similarity: This is a common measure that calculates the cosine of the angle between two vectors representing the texts. These vectors are often generated using techniques like TF-IDF (Term Frequency-Inverse Document Frequency) or word embeddings (Word2Vec, GloVe).

- Jaccard Similarity: This measures the overlap between the sets of words (or concepts) in two texts. It’s simple to compute but less sensitive to the importance of individual words.

- Edit Distance (Levenshtein Distance): This measures the minimum number of edits (insertions, deletions, substitutions) needed to transform one text into another. While useful for comparing very similar texts, it’s less effective for semantically similar but lexically different texts.

- Path Similarity (in Knowledge Graphs): If we represent words or concepts as nodes in a knowledge graph or a lexical hierarchy such as WordNet, we can calculate the shortest path between them. The shorter the path, the more semantically similar they are considered to be.

- Word Embedding-based Similarity: Modern approaches leverage word embeddings like Word2Vec or GloVe. These methods represent words as dense vectors in a high-dimensional space, where semantically similar words have vectors closer together. We can then measure the cosine similarity or Euclidean distance between these word vectors to quantify similarity.

The best measure depends on the context. For example, cosine similarity based on word embeddings is frequently used because it handles synonyms and captures semantic relationships effectively, while Jaccard similarity is simpler but less nuanced.
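The sketch below implements three of these measures from scratch for comparison: cosine similarity over raw bag-of-words counts (a simple stand-in for TF-IDF or embedding vectors), Jaccard similarity over word sets, and Levenshtein distance over characters. Whitespace tokenization is a simplifying assumption.

```python
import math
from collections import Counter


def cosine_similarity(a, b):
    """Cosine of the angle between two bag-of-words count vectors."""
    va, vb = Counter(a.lower().split()), Counter(b.lower().split())
    dot = sum(va[w] * vb[w] for w in va)
    norm = math.sqrt(sum(c * c for c in va.values())) * math.sqrt(sum(c * c for c in vb.values()))
    return dot / norm if norm else 0.0


def jaccard_similarity(a, b):
    """Size of the word-set intersection divided by the size of the union."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    return len(sa & sb) / len(sa | sb) if sa | sb else 1.0


def levenshtein(a, b):
    """Minimum number of single-character insertions, deletions and substitutions."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # delete ca
                            curr[j - 1] + 1,            # insert cb
                            prev[j - 1] + (ca != cb)))  # substitute if different
        prev = curr
    return prev[-1]


print(levenshtein("kitten", "sitting"))                          # 3
print(round(cosine_similarity("happy dog", "joyful dog"), 2))    # 0.5
```

‘happy dog’ and ‘joyful dog’ score only 0.5 here because count vectors treat ‘happy’ and ‘joyful’ as unrelated dimensions; replacing counts with word embeddings is what lets cosine similarity credit synonyms.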

Q 10. What are knowledge graphs and how are they used in Semantic Analysis?

Knowledge graphs are structured repositories of information, representing entities (people, places, things) and their relationships. Imagine a network where each node is an entity, and edges represent relationships between them. They’re crucial in semantic analysis because they provide a rich context for understanding text.

In semantic analysis, knowledge graphs are used in various ways:

- Disambiguation: Resolving ambiguities in text by leveraging the relationships in the graph. For instance, if the text refers to ‘Apple,’ the knowledge graph can help determine if it’s the fruit or the technology company.

- Question Answering: Finding answers to complex questions by traversing the graph. The system can extract relevant entities and relationships from the question and then search the graph for the answer.

- Information Retrieval: Improving the accuracy and relevance of information retrieval by considering the semantic relationships between entities.

- Sentiment Analysis: Enriching sentiment analysis by incorporating the knowledge about the entities and their relationships. The sentiment toward an entity can be influenced by the sentiment toward related entities.

For example, consider a question: “What is the capital of France?” A knowledge graph would directly link ‘France’ to ‘Paris’ with a ‘capital’ relationship, allowing for a quick and accurate answer. Without a knowledge graph, answering this would require sophisticated NLP techniques.
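Here is a minimal sketch of two of these uses, assuming a hypothetical knowledge graph stored as a Python dict from each entity to its labeled edges. `answer` follows one edge for a single-hop question; `disambiguate` picks the candidate entity whose graph neighbors share the most words with the text, a simple heuristic rather than a production entity linker.

```python
import re

# Hypothetical knowledge graph: entity -> list of (relation, target) edges.
GRAPH = {
    "France": [("capital", "Paris"), ("continent", "Europe")],
    "Apple Inc.": [("founded_by", "Steve Jobs"), ("product", "iPhone"), ("industry", "technology")],
    "apple (fruit)": [("grows_on", "tree"), ("is_a", "fruit")],
}


def words(text):
    """Lowercased alphabetic tokens."""
    return set(re.findall(r"[a-z]+", text.lower()))


def answer(entity, relation):
    """Single-hop question answering: follow edges labeled `relation`."""
    return [target for rel, target in GRAPH.get(entity, []) if rel == relation]


def disambiguate(candidates, text):
    """Choose the candidate whose neighbors' names overlap most with the text."""
    context = words(text)

    def score(entity):
        neighbor_words = set()
        for _, target in GRAPH.get(entity, []):
            neighbor_words |= words(target)
        return len(neighbor_words & context)

    return max(candidates, key=score)


print(answer("France", "capital"))  # ['Paris']
print(disambiguate(["Apple Inc.", "apple (fruit)"], "Apple unveiled a new iPhone."))  # Apple Inc.
```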

Q 11. Explain the concept of a knowledge representation language.

A knowledge representation language (KRL) is a formal system for encoding knowledge in a computer-processable format. It’s like a language for describing the world’s facts and relationships. Different KRLs exist, each offering its own strengths and weaknesses.

Some prominent KRLs include:

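- RDF (Resource Description Framework): This uses triples (subject, predicate, object) to represent facts. For example, the fact that Paris is the capital of France can be written as the triple (Paris, capitalOf, France).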

- OWL (Web Ontology Language): OWL builds on RDF and adds richer constructs to represent complex relationships and constraints. It’s used for creating ontologies, which are formal specifications of concepts and relationships.

- RDFS (RDF Schema): Provides a vocabulary for defining classes and properties within RDF.

- Frames and Description Logics: These provide alternative ways to represent knowledge, focusing on hierarchical structures and logical axioms.

The choice of KRL depends on the specific application and the complexity of the knowledge being represented. RDF’s simplicity and flexibility make it popular, while OWL is better suited for more sophisticated knowledge modeling.
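To show what a schema layer adds on top of plain triples, here is a minimal sketch of two RDFS entailment rules applied by forward chaining until no new triples appear: `rdfs:subClassOf` is transitive (rule rdfs11), and an instance of a subclass is an instance of its superclass (rule rdfs9). The `ex:` data is hypothetical, and a real system would use an RDF store with a reasoner rather than this quadratic loop.

```python
# Hypothetical triples mixing instance data (rdf:type) with schema (rdfs:subClassOf).
TRIPLES = {
    ("ex:Rex", "rdf:type", "ex:Dog"),
    ("ex:Dog", "rdfs:subClassOf", "ex:Mammal"),
    ("ex:Mammal", "rdfs:subClassOf", "ex:Animal"),
}


def rdfs_closure(triples):
    """Repeatedly apply rdfs9 and rdfs11 until no new triple is derived."""
    inferred = set(triples)
    while True:
        new = set()
        for s, p, o in inferred:
            for s2, p2, o2 in inferred:
                if p2 == "rdfs:subClassOf" and s2 == o:
                    if p == "rdfs:subClassOf":
                        new.add((s, "rdfs:subClassOf", o2))  # rdfs11: transitivity
                    elif p == "rdf:type":
                        new.add((s, "rdf:type", o2))         # rdfs9: type inheritance
        if new <= inferred:  # fixpoint reached
            return inferred
        inferred |= new


closure = rdfs_closure(TRIPLES)
print(("ex:Rex", "rdf:type", "ex:Animal") in closure)  # True
```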

Q 12. Describe different methods for information extraction.

Information extraction (IE) is the task of automatically extracting structured information from unstructured or semi-structured text. Several methods exist:

- Rule-based methods: These use hand-crafted rules and patterns to identify and extract information. While these can be very accurate for specific domains, they are time-consuming to create and maintain and struggle with variations in text.

- Machine learning methods: These train models on labeled data to learn patterns and extract information automatically. They are applied to subtasks such as Named Entity Recognition (NER), Relation Extraction, and Event Extraction. These methods adapt better to variation in text but require substantial labeled data for training.

- Hybrid methods: These combine rule-based and machine learning approaches, leveraging the strengths of both. This often results in more robust and accurate extraction.

- Deep learning methods: These use neural networks, particularly Recurrent Neural Networks (RNNs) and Transformers, to extract information. These methods often outperform traditional machine learning approaches but require significant computational resources.

For example, extracting company names, addresses, and phone numbers from web pages is a classic IE task. Rule-based methods might search for patterns like ‘Inc.’ or ‘Ltd.’ to identify company names, while machine learning methods can learn to identify them based on the surrounding text.
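A rule-based extractor for this task might look like the following minimal sketch. The two regular expressions are hypothetical and deliberately simple: one looks for capitalized words ending in a corporate suffix such as ‘Inc.’ or ‘Ltd.’, the other for North American-style phone numbers.

```python
import re

# One or more capitalized words followed by a corporate suffix.
COMPANY = re.compile(r"\b((?:[A-Z][\w&]*\s)+(?:Inc|Ltd|LLC|Corp)\.?)")
# Phone numbers like 555-123-4567 or (555) 987-6543.
PHONE = re.compile(r"\(?\b\d{3}\)?[-.\s]\d{3}[-.\s]\d{4}\b")


def extract(text):
    """Return the company names and phone numbers found by the patterns."""
    return {"companies": COMPANY.findall(text), "phones": PHONE.findall(text)}


page = "Orders ship from Acme Widgets Inc. and Globex Ltd. Call 555-123-4567 or (555) 987-6543."
print(extract(page))
```

The company pattern is easy to fool: in ‘Contact Acme Inc. today’ it returns ‘Contact Acme Inc.’ because the sentence-initial word is also capitalized. Edge cases like this are why rule-based extractors are costly to maintain and why learned models, which use the surrounding context, are often preferred.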

Q 13. What is named entity recognition (NER), and what are its challenges?

Named Entity Recognition (NER) is the task of identifying and classifying named entities in text into predefined categories like person, organization, location, date, etc. It’s a fundamental task in many NLP applications. Imagine a news article; NER would identify “Barack Obama” as a person, “United States” as a location, and “2023” as a date.
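NER models commonly label each token with a BIO tag: `B-X` begins an entity of type X, `I-X` continues it, and `O` marks tokens outside any entity. The minimal sketch below turns such tags back into entity spans. The tags for the example sentence are written by hand, standing in for the output of a trained tagger, and the PER/LOC/DATE labels are assumed names for the categories above.

```python
def bio_to_spans(tokens, tags):
    """Group BIO-tagged tokens into (entity text, entity type) spans.

    An I- tag whose type differs from the open entity is treated as a new
    entity, a common lenient convention.
    """
    spans, current, label = [], [], None
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-") or (tag.startswith("I-") and tag[2:] != label):
            if current:  # close the previous entity
                spans.append((" ".join(current), label))
            current, label = [token], tag[2:]
        elif tag.startswith("I-"):
            current.append(token)  # continue the open entity
        else:  # "O": outside any entity
            if current:
                spans.append((" ".join(current), label))
            current, label = [], None
    if current:
        spans.append((" ".join(current), label))
    return spans


tokens = ["Barack", "Obama", "visited", "the", "United", "States", "in", "2023", "."]
tags = ["B-PER", "I-PER", "O", "O", "B-LOC", "I-LOC", "O", "B-DATE", "O"]
print(bio_to_spans(tokens, tags))
# [('Barack Obama', 'PER'), ('United States', 'LOC'), ('2023', 'DATE')]
```

Because each token receives exactly one tag, this flat scheme cannot represent nested entities, one of the challenges listed below.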

Challenges in NER include:

- Ambiguity: Words can have multiple meanings. ‘Apple’ could refer to the fruit or the company.

- Nested entities: Entities can be nested within each other. For example, in “University of Washington,” the location “Washington” sits inside the organization name, and a system must decide whether to tag one entity or both.

- Out-of-vocabulary (OOV) entities: The system might encounter new or uncommon entities that weren’t in its training data.

- Varying expressions: The same entity can be expressed in many ways. “Apple Inc.” and “Apple” refer to the same company.

- Lack of labeled data: Training accurate NER models requires large amounts of labeled data, which can be expensive and time-consuming to create.

Addressing these challenges often involves using advanced techniques like contextual embeddings, handling ambiguity with knowledge bases, and employing techniques like transfer learning or active learning to reduce reliance on extensive labeled datasets.

Q 14. How can you handle ambiguity in natural language processing?

Ambiguity is a significant hurdle in NLP because natural language is inherently ambiguous. We need strategies to resolve this. Here are several approaches:

- Word Sense Disambiguation (WSD): This aims to identify the correct meaning of a word based on the context. Approaches range from using dictionaries and thesauri to machine learning models trained on contextual information.

- Part-of-Speech (POS) Tagging: Identifying the grammatical role of each word (noun, verb, adjective, etc.) can help disambiguate sentence structure.

- Syntactic Parsing: Creating a parse tree representing the grammatical structure of a sentence provides context for resolving ambiguities.

- Knowledge-based approaches: Using knowledge graphs or ontologies to resolve ambiguities by considering the semantic relationships between entities and concepts.

- Machine Learning models: Training models (e.g., recurrent neural networks) on large corpora can help them learn to disambiguate text based on patterns and contexts.

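For example, consider the sentence “I saw the bat.” The word ‘bat’ is ambiguous, and the sentence alone does not settle it. In a longer context such as “I saw the bat fly out of the cave,” WSD can use surrounding words like ‘fly’ and ‘cave’ to determine that it refers to the animal rather than the sporting equipment.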

Often, a combination of techniques is necessary to handle the complexity of natural language ambiguity effectively. The chosen method(s) will depend upon the specific application and available resources.

Q 15. Explain the concept of semantic role labeling.

Semantic role labeling (SRL) is a crucial task in natural language processing (NLP) that aims to identify the roles different words play in a sentence, relative to the main verb. Think of it like assigning characters in a play their respective roles. Instead of just understanding the words individually, SRL helps us understand how those words relate to the action described by the verb.

For instance, in the sentence “The dog chased the ball,” SRL would identify ‘dog’ as the agent (the one performing the action), ‘chased’ as the predicate (the verb describing the action), and ‘ball’ as the patient (the thing being acted upon). These roles are often represented formally using PropBank or FrameNet annotations.
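The sketch below is a deliberately naive illustration, not a real SRL system: it assumes a simple active-voice ‘subject verb object’ sentence and a known list of verbs, and labels everything before the verb as ARG0 (the PropBank label for the agent) and everything after it as ARG1 (the patient).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Frame:
    predicate: str
    arg0: str  # PropBank ARG0: the agent
    arg1: str  # PropBank ARG1: the patient or theme


def toy_srl(sentence, verbs) -> Optional[Frame]:
    """Naively label roles in an active 'subject verb object' sentence.

    Everything before the first known verb becomes ARG0 and everything
    after it becomes ARG1. Returns None if no known verb is found.
    """
    words = sentence.rstrip(".").split()
    for i, word in enumerate(words):
        if word.lower() in verbs:
            return Frame(predicate=word, arg0=" ".join(words[:i]), arg1=" ".join(words[i + 1:]))
    return None


print(toy_srl("The dog chased the ball.", {"chased"}))
# Frame(predicate='chased', arg0='The dog', arg1='the ball')
```

The same heuristic breaks on the passive “The ball was chased by the dog.”, labeling ‘The ball was’ as the agent. Word order alone does not determine semantic roles, which is why real SRL systems rely on syntactic parses or neural models trained on PropBank- or FrameNet-annotated data.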

This goes beyond simple part-of-speech tagging, which only identifies word types (noun, verb, etc.). SRL delves deeper into the semantic relationships within a sentence. This detailed understanding is vital for many downstream NLP applications.

Q 16. Discuss the applications of semantic analysis in different domains (e.g., search, healthcare).

Semantic analysis finds applications across diverse domains, significantly enhancing how we interact with and understand information.

- Search Engines: Semantic search goes beyond keyword matching. It understands the meaning behind search queries, providing more relevant results. For example, a search for “best Italian restaurants near me” won’t just find pages mentioning those words, but will understand the user’s intent and prioritize results based on location and cuisine.

- Healthcare: Semantic analysis helps in processing medical records, extracting vital information for diagnoses, treatment planning, and research. It can automatically identify diseases, medications, and symptoms from unstructured text, streamlining clinical workflows and aiding in patient care.

- Customer Service: Chatbots and virtual assistants heavily rely on semantic analysis to understand user queries and provide accurate responses. By correctly interpreting the intent behind the user’s language, these systems can offer personalized and effective solutions.

- Social Media Monitoring: Sentiment analysis, a branch of semantic analysis, allows businesses to monitor public opinion regarding their brand or products. By understanding the emotions expressed in social media posts, companies can adapt their strategies accordingly.

In essence, any application that needs to go beyond a surface-level reading of text to capture its deeper meaning benefits greatly from semantic analysis.

Q 17. What are the challenges of applying semantic analysis to large-scale data?

Scaling semantic analysis to massive datasets presents several challenges:

- Computational Cost: Processing large volumes of text requires significant computational resources, especially for complex semantic models. Training sophisticated models can take days or even weeks.

- Data Sparsity: Many real-world semantic relationships are rare or unseen in training data. This leads to inaccurate predictions for novel or infrequent situations.

- Ambiguity and Polysemy: Words can have multiple meanings (polysemy) and sentences can be ambiguous. Resolving these ambiguities is a significant hurdle in achieving accurate semantic analysis.

- Data Noise: Real-world data is often noisy, containing errors, typos, and inconsistencies. Cleaning and pre-processing this data to ensure accuracy is time-consuming.

- Scalability of Infrastructure: Storing and managing large datasets requires robust and scalable infrastructure, which can be costly and complex to implement.

Addressing these challenges often involves employing techniques like distributed computing, efficient data structures, and robust error handling strategies.

Q 18. How do you handle noisy or incomplete data in semantic analysis?

Handling noisy or incomplete data in semantic analysis is crucial for building reliable systems. Strategies include:

- Data Cleaning and Preprocessing: This involves removing irrelevant characters, correcting typos, handling missing values, and normalizing text. Techniques like stemming, lemmatization, and stop-word removal are commonly used.

- Robust Semantic Models: Developing models that are less sensitive to noise is essential. This may involve regularization techniques that prevent overfitting, where a model learns quirks and noise in the training data instead of patterns that generalize.

- Data Augmentation: Generating synthetic data that resembles the real data can help improve model robustness and handle rare occurrences.

- Error Detection and Correction: Implementing mechanisms to detect and correct errors during the analysis process. This could involve using confidence scores and employing post-processing rules.

- Ensemble Methods: Combining predictions from multiple models can improve overall accuracy and reduce the impact of noise in individual models.

The choice of strategy often depends on the nature and extent of the noise present in the data.
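The minimal sketch below combines a few of these steps: it lowercases text, strips URLs and punctuation, drops stop words, and snaps near-miss spellings to a known vocabulary using the standard library's `difflib.get_close_matches`. The `VOCABULARY` list, the stop-word set, and the 0.8 similarity cutoff are hypothetical choices that would need tuning for real data.

```python
import difflib
import re

STOP_WORDS = {"the", "a", "an", "is", "was", "and", "of", "to"}
# Hypothetical domain vocabulary used for spelling correction.
VOCABULARY = ["service", "delivery", "excellent", "terrible", "product", "slow"]


def clean(text):
    """Lowercase, strip URLs and non-letters, drop stop words, fix likely typos."""
    text = re.sub(r"https?://\S+", " ", text.lower())  # remove URLs
    result = []
    for word in re.findall(r"[a-z]+", text):           # keep letter runs only
        if word in STOP_WORDS:
            continue
        # Replace the word with its closest vocabulary entry if similar enough.
        match = difflib.get_close_matches(word, VOCABULARY, n=1, cutoff=0.8)
        result.append(match[0] if match else word)
    return result


print(clean("The delivry was SLOW!!! see http://example.com"))
```

Words with no close vocabulary match (such as ‘see’ above) pass through unchanged, so the cutoff controls the trade-off between fixing typos and wrongly "correcting" legitimate words.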

Q 19. Describe different techniques for semantic parsing.

Several techniques exist for semantic parsing, the task of converting natural language into a formal representation, like logical forms or meaning representations.

- Rule-Based Parsing: This traditional approach uses hand-crafted rules to map linguistic structures to meaning representations. While precise for limited domains, it lacks scalability and flexibility.

- Statistical Parsing: These methods utilize statistical models trained on annotated data to predict the most probable meaning representation for a given sentence. Examples include probabilistic context-free grammars (PCFGs) and dependency parsing augmented with semantic information.

- Neural Network-Based Parsing: Recent advances in deep learning have led to powerful neural architectures for semantic parsing. These models, such as recurrent neural networks (RNNs) and transformers, can learn complex patterns from data and achieve high accuracy. They are more flexible and scale better than rule-based or earlier statistical methods, though they typically need large amounts of training data.

- Semantic Role Labeling (SRL) based parsing: As discussed previously, SRL forms a foundation for semantic parsing by identifying the roles of different constituents in a sentence. This information is then used to construct a meaning representation.

The choice of technique depends on factors like the availability of annotated data, the complexity of the language, and the desired level of accuracy.
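To illustrate the rule-based approach, here is a minimal sketch that maps two question templates to logical forms. The regular-expression rules and the `capital(France, ?x)` notation are hypothetical; real semantic parsers target formal languages such as SQL, SPARQL, or lambda-calculus expressions.

```python
import re

# Hand-written rules: (question pattern, logical-form template).
RULES = [
    (re.compile(r"what is the capital of (\w+)\??$", re.I), "capital({0}, ?x)"),
    (re.compile(r"who founded (\w+)\??$", re.I), "founded(?x, {0})"),
]


def parse(question):
    """Return the logical form from the first matching rule, or None."""
    for pattern, template in RULES:
        m = pattern.match(question.strip())
        if m:
            return template.format(*m.groups())
    return None


print(parse("What is the capital of France?"))  # capital(France, ?x)
print(parse("Who founded Microsoft?"))          # founded(?x, Microsoft)
```

Rephrasing the first question as “What's the capital of France?” already returns `None`, which illustrates the limited flexibility of hand-written rules.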

Q 20. Explain the difference between lexical semantics and compositional semantics.

Lexical semantics and compositional semantics are two distinct but interconnected approaches to meaning: the first studies the meaning of words, the second the meaning of phrases and sentences.

- Lexical Semantics: This focuses on the meaning of individual words and their relationships to other words in a lexicon (dictionary). It explores concepts like synonymy (words with similar meanings), antonymy (words with opposite meanings), and hyponymy (a hierarchical relationship, e.g., ‘dog’ is a hyponym of ‘animal’). It’s like understanding the individual building blocks of meaning.

- Compositional Semantics: This deals with how the meaning of a phrase or sentence is derived from the meanings of its individual words and their syntactic arrangement. Under the principle of compositionality, the meaning of the whole is a function of the meanings of its parts and the way they are combined, which is why “dog bites man” and “man bites dog” mean different things despite containing the same words. It’s like understanding how those building blocks are assembled to create a complete structure.

Consider the phrase “the big red ball.” Lexical semantics would analyze the meaning of each word individually: ‘big,’ ‘red,’ and ‘ball’. Compositional semantics would then explain how these individual meanings combine to create the overall meaning of the phrase, describing a specific ball that is both big and red.
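One standard way to model this is set-theoretic: each word denotes the set of things it is true of, and an intersective adjective combines with a noun by set intersection. The minimal sketch below uses a hypothetical toy ‘world’ of objects in `DENOTATION`.

```python
# Hypothetical toy world: each word denotes the set of objects it is true of.
DENOTATION = {
    "big": {"ball1", "ball2", "truck1"},
    "red": {"ball1", "truck1", "apple1"},
    "ball": {"ball1", "ball2", "ball3"},
}


def interpret(phrase):
    """Compose meaning by intersecting the head noun's set with each adjective's set."""
    words = [w for w in phrase.lower().split() if w != "the"]  # ignore the article
    meaning = DENOTATION[words[-1]]  # head noun, e.g. "ball"
    for adjective in words[:-1]:
        meaning = meaning & DENOTATION[adjective]
    return meaning


print(interpret("the big red ball"))  # {'ball1'}
```

Intersection works well for adjectives like ‘red’. Treating ‘big’ as a fixed set is itself a simplification, since what counts as big depends on the noun (a big mouse is still a small animal), which is exactly the kind of subtlety compositional semantics has to handle.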

In essence, lexical semantics provides the foundation, while compositional semantics builds upon it to understand sentence-level meaning.

Q 21. What are some common semantic analysis tools and libraries?

Several tools and libraries facilitate semantic analysis. The choice depends on your specific needs and programming language preferences.

- Stanford CoreNLP: A widely used Java toolkit offering various NLP functionalities, including part-of-speech tagging, named entity recognition, dependency parsing, and coreference resolution.

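- spaCy: A Python library known for its efficiency and ease of use. It covers the preprocessing steps that semantic analysis builds on, such as tokenization, part-of-speech tagging, and dependency parsing, as well as named entity recognition and (in its larger pipelines) word vectors.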

- NLTK: Another popular Python library, offering a broad range of NLP tools and resources, including various semantic analysis components.

- AllenNLP: A Python library built on PyTorch that provides research models for various NLP tasks, including semantic role labeling and semantic parsing. Note that the project has been in maintenance mode since December 2022.

- Hugging Face Transformers: This library offers easy access to pre-trained transformer models, which are highly effective for semantic tasks. Many models are readily available for tasks like sentence classification and question answering.

Many other specialized tools and libraries exist for specific semantic analysis tasks, such as sentiment analysis or ontology mapping. Choosing the right tools requires considering your project’s specific requirements and your familiarity with different programming languages and frameworks.

Q 22. How do you choose the appropriate semantic analysis technique for a given task?

Choosing the right semantic analysis technique hinges on understanding the specific task and available resources. It’s like choosing the right tool for a job – a hammer isn’t suitable for screwing in a screw.

- Task Complexity: Simple versions of tasks like sentiment analysis might only need lexicon-based approaches, while complex tasks like relation extraction often require more sophisticated methods like neural networks.

- Data Availability: Large annotated datasets are crucial for supervised learning methods like deep learning. If data is scarce, unsupervised or semi-supervised techniques become more relevant.

- Computational Resources: Deep learning models are powerful but computationally expensive. Simpler techniques like WordNet-based approaches are more computationally efficient for resource-constrained environments.

- Desired Accuracy: The level of accuracy needed dictates the complexity of the chosen method. A quick, rough estimate might suffice for some applications, while others might require extremely high precision.

For example, analyzing customer feedback for sentiment might use a lexicon-based approach, while identifying complex relationships between entities in a scientific paper might necessitate a more advanced method such as a graph-based approach combined with a recurrent neural network.
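As a sketch of the lexicon-based end of the spectrum, the snippet below scores text by summing word polarities from a small hypothetical lexicon, with a crude rule that flips the polarity of a word directly preceded by a negator. Real sentiment lexicons contain thousands of entries and handle negation and intensifiers more carefully.

```python
import re

# Tiny hypothetical sentiment lexicon: word -> polarity score.
LEXICON = {"great": 2, "good": 1, "love": 2, "slow": -1, "bad": -1, "terrible": -2}
NEGATORS = {"not", "never", "no"}


def lexicon_sentiment(text):
    """Sum word polarities, flipping the sign of a word preceded by a negator."""
    words = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, word in enumerate(words):
        polarity = LEXICON.get(word, 0)
        if i > 0 and words[i - 1] in NEGATORS:
            polarity = -polarity  # "not good" counts as negative
        score += polarity
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(lexicon_sentiment("Support was not good and shipping was slow"))  # negative
```

The appeal is that no training data or GPU is needed; the cost is that anything outside the lexicon, including sarcasm and domain-specific phrasing, is invisible to it.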

Q 23. Discuss the ethical implications of semantic analysis.

Ethical implications in semantic analysis are significant and often overlooked. Bias in data can lead to biased outputs, perpetuating harmful stereotypes. Privacy is another major concern; analyzing personal data requires careful consideration of data anonymization and user consent.

- Bias in Data and Algorithms: If the training data reflects societal biases (e.g., gender bias in job descriptions), the resulting model will likely perpetuate those biases. This can have serious consequences in areas like hiring and loan applications.

- Privacy Violations: Analyzing text data can reveal sensitive personal information. Strong data protection measures are essential, including anonymization techniques and adherence to relevant privacy regulations (like GDPR).

- Misinformation and Manipulation: Semantic analysis can be used to create sophisticated fake news or manipulate public opinion. It’s crucial to be aware of these possibilities and develop techniques to detect and mitigate such malicious use.

For instance, a model trained on biased news articles might incorrectly classify opinions or sentiments, leading to skewed results and reinforcing harmful stereotypes. Responsible development and deployment require constant vigilance against these ethical pitfalls.

Q 24. Explain the role of context in semantic analysis.

Context is paramount in semantic analysis. Words and phrases rarely have a single, fixed meaning; their interpretation changes dramatically depending on their surroundings.

Consider the word “bank.” In the sentence “I went to the bank to deposit a check,” it refers to a financial institution. However, in “The river bank was muddy,” it refers to the land beside a river. The surrounding words and the overall sentence structure provide the crucial context that disambiguates the meaning.

Methods to incorporate context include:

- Windowing: Examining a fixed-size window of words around the target word.

- Recurrent Neural Networks (RNNs): RNNs process text sequentially, carrying a hidden state that summarizes the preceding words. Plain RNNs struggle with long-range dependencies, which variants such as LSTMs and GRUs were designed to mitigate.

- Transformer Networks: These models excel at capturing long-range dependencies and relationships between words across a sentence, providing excellent context awareness.
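The windowing approach is simple enough to show directly. This minimal sketch returns up to `size` tokens on each side of a target word; the example shows how the window size determines whether a decisive cue such as ‘river’ is visible at all.

```python
def context_window(tokens, index, size=2):
    """Return up to `size` tokens on each side of tokens[index], excluding the target."""
    left = tokens[max(0, index - size):index]
    right = tokens[index + 1:index + 1 + size]
    return left + right


tokens = "i sat on the bank of the river".split()
print(context_window(tokens, 4, size=2))  # ['on', 'the', 'of', 'the']
print(context_window(tokens, 4, size=3))  # ['sat', 'on', 'the', 'of', 'the', 'river']
```

With a window of 2, the cue ‘river’ falls outside the window and the target ‘bank’ stays ambiguous; widening it to 3 captures the cue. Transformers avoid choosing a fixed size by letting every word attend to the whole sentence.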

Without proper context handling, semantic analysis can lead to significant inaccuracies and misinterpretations. A robust semantic analysis system must effectively utilize contextual information to achieve accurate and reliable results.

Q 25. How do you handle different languages in semantic analysis?

Handling multiple languages presents unique challenges in semantic analysis. Languages differ vastly in their structure, morphology, and semantics. A single method rarely works across all languages.

- Multilingual Models: Recent advances in deep learning have led to multilingual models capable of understanding and processing multiple languages simultaneously. These models are often trained on massive multilingual corpora.

- Machine Translation: Translating text into a single, common language before analysis can simplify the process, but can introduce translation errors that affect accuracy.

- Language-Specific Resources: Leveraging language-specific resources like lexicons, ontologies, and linguistic rules is crucial for achieving high accuracy in each language.

- Cross-lingual Transfer Learning: Knowledge gained from a high-resource language (e.g., English) can be transferred to a low-resource language, improving performance with limited data.
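One well-known way to realize cross-lingual transfer at the word-embedding level is to learn an orthogonal linear map between two languages' embedding spaces from a small seed dictionary of translation pairs (the orthogonal Procrustes solution). The NumPy sketch below uses synthetic random vectors in place of real embeddings, and `procrustes_align` is a hypothetical helper name. It illustrates this one technique only; fine-tuning a multilingual model on high-resource labels and applying it to another language is another common route.

```python
import numpy as np


def procrustes_align(src: np.ndarray, tgt: np.ndarray) -> np.ndarray:
    """Return the orthogonal W that minimizes ||src @ W - tgt|| (Frobenius norm).

    Row i of `src` and row i of `tgt` are embeddings of a word and its
    translation, taken from a seed bilingual dictionary.
    """
    # Closed-form solution: if src.T @ tgt = U S V^T, then W = U V^T.
    u, _, vt = np.linalg.svd(src.T @ tgt)
    return u @ vt


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    dim, n_pairs = 50, 200

    # Synthetic stand-ins for source-language embeddings of seed-dictionary words.
    src = rng.normal(size=(n_pairs, dim))

    # Assume the target space is a rotated, slightly noisy copy of the source space.
    true_rotation, _ = np.linalg.qr(rng.normal(size=(dim, dim)))
    tgt = src @ true_rotation + 0.01 * rng.normal(size=(n_pairs, dim))

    w = procrustes_align(src, tgt)
    print("max deviation from true rotation:", np.abs(w - true_rotation).max())

    # Any other source-language vector can now be projected into the target space,
    # where a model trained on target-space vectors can be applied to it.
    mapped = rng.normal(size=dim) @ w
    print("mapped vector shape:", mapped.shape)
```

Constraining W to be orthogonal preserves distances and angles within the source space, which is why this variant is usually preferred over an unconstrained linear map when the seed dictionary is small.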

For example, sentiment analysis in English might rely on readily available lexicons, whereas analyzing sentiment in a low-resource language like Nepali might require a combination of machine translation, cross-lingual transfer learning, and potentially building a language-specific lexicon from scratch.
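A lexicon-based approach can be sketched in a few lines: sum the polarity scores of known words and flip the sign when a negator directly precedes a word. The six-word lexicon, its scores, and the negator list below are toy assumptions for illustration; real lexicons contain thousands of scored entries and richer rules.

```python
import re

# Hypothetical toy lexicon (word -> polarity score); the scores are illustrative only.
LEXICON = {
    "good": 1.0, "great": 2.0, "love": 2.0,
    "bad": -1.0, "terrible": -2.0, "slow": -1.0,
}
# Words that flip the polarity of the word immediately after them.
NEGATORS = {"not", "never", "no"}


def lexicon_score(text: str, lexicon: dict[str, float] = LEXICON) -> float:
    """Sum lexicon scores, flipping the sign after a negator ("not good")."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0.0
    for i, token in enumerate(tokens):
        polarity = lexicon.get(token)
        if polarity is None:
            continue
        if i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity
        score += polarity
    return score


def polarity_label(score: float) -> str:
    """Map a numeric score to positive / negative / neutral."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    for review in ["I love this phone, the camera is great",
                   "The app is not good and very slow"]:
        s = lexicon_score(review)
        print(f"{s:+.1f} {polarity_label(s)}  {review}")
```

Because the lexicon is a parameter, the same scoring logic could be reused with a lexicon built for another language. The `[a-z']+` tokenizer, however, assumes Latin-script English text; a language written in Devanagari, such as Nepali, would need its own tokenization.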

Q 26. Describe your experience with specific semantic analysis projects.

In a previous role, I led a project to develop a semantic search engine for a large legal firm. The challenge was to extract key concepts and relationships from complex legal documents to enable efficient retrieval of relevant information. We employed a combination of techniques, including named entity recognition (NER), relation extraction, and topic modeling, coupled with a vector space model for similarity search.
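The vector space model for similarity search can be illustrated with a minimal TF-IDF and cosine-similarity sketch. The three short clauses are made-up examples, not data from the project described, and the smoothed IDF formula is one common variant chosen here as an assumption; a production system would more likely rely on a library implementation or dense embeddings.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def build_index(docs: list[str]) -> tuple[list[dict[str, float]], dict[str, float]]:
    """Return one sparse TF-IDF vector per document plus the IDF table."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(docs)
    df = Counter(term for toks in tokenized for term in set(toks))
    # Smoothed IDF: terms found in every document still get a positive weight.
    idf = {t: math.log((1 + n_docs) / (1 + c)) + 1.0 for t, c in df.items()}
    vectors = [{t: tf * idf[t] for t, tf in Counter(toks).items()} for toks in tokenized]
    return vectors, idf


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query: str, docs: list[str], top_k: int = 2) -> list[tuple[float, str]]:
    """Rank documents by cosine similarity to the query's TF-IDF vector."""
    vectors, idf = build_index(docs)
    # Query terms that never occur in the collection have no IDF and are dropped.
    q_vec = {t: tf * idf[t] for t, tf in Counter(tokenize(query)).items() if t in idf}
    scored = [(cosine(q_vec, v), d) for v, d in zip(vectors, docs)]
    return sorted(scored, reverse=True)[:top_k]


if __name__ == "__main__":
    # Made-up clauses standing in for legal documents.
    docs = [
        "The tenant must pay rent on the first day of each month.",
        "Either party may terminate this agreement with thirty days written notice.",
        "The landlord is responsible for structural repairs to the property.",
    ]
    for score, doc in search("terminate the agreement with notice", docs):
        print(f"{score:.3f}  {doc}")
```

IDF down-weights terms that appear in many documents (such as “the”), so matches on rarer, more distinctive terms like “terminate” and “notice” dominate the ranking.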

Another significant project involved building a sentiment analysis system for social media data to monitor public opinion about a product launch. Here, we used deep learning models (specifically, RNNs and transformers) to capture context and nuances in language, providing valuable insights into customer sentiment and potential issues.

These projects highlighted the importance of selecting the appropriate techniques based on the data and the task at hand, as well as the critical need for rigorous evaluation and iterative improvement.

Q 27. How do you stay up-to-date with the latest advancements in semantic analysis?

Staying current in semantic analysis requires a multi-pronged approach.

- Academic Publications: I regularly read the proceedings of top-tier conferences such as ACL, EMNLP, and NAACL, along with journals such as Transactions of the ACL (TACL) and Computational Linguistics.

- Online Courses and Workshops: Platforms like Coursera, edX, and fast.ai offer excellent courses on deep learning and natural language processing.

- Industry Blogs and News: Following influential researchers and companies in the field provides insights into the latest trends and breakthroughs.

- Open-Source Projects: Contributing to or following open-source projects allows for hands-on experience with cutting-edge techniques and tools.

- Conferences and Networking: Attending conferences and workshops offers the opportunity to learn from experts and connect with other researchers.

This continuous learning ensures I’m familiar with the latest advancements in algorithms, techniques, and tools, allowing me to apply the most effective methods to the tasks at hand.

Q 28. What are your future goals in the field of semantic analysis?

My future goals include pushing the boundaries of cross-lingual semantic analysis, particularly for low-resource languages. I’m also interested in applying semantic analysis to ethical challenges, such as detecting and mitigating bias in AI systems. Finally, I am keen to research explainable AI for semantic analysis, to improve the transparency and trustworthiness of semantic models.

Ultimately, my goal is to contribute to the development of more robust, accurate, ethical, and understandable semantic analysis tools that can benefit a wide range of applications and positively impact society.

Key Topics to Learn for Semantic Analysis Interview

- Word Sense Disambiguation (WSD): Determining which meaning of an ambiguous word is intended in a given context, and the algorithms used to resolve that ambiguity. Practical applications include improved search engine results and information retrieval systems.

- Part-of-Speech (POS) Tagging: Identifying the grammatical role of each word in a sentence. This is crucial for syntactic analysis and understanding sentence structure. Practical applications include natural language processing pipelines and machine translation.

- Named Entity Recognition (NER): Identifying and classifying named entities like people, organizations, locations, etc. Practical applications involve information extraction from text, knowledge base construction, and question answering systems.

- Semantic Role Labeling (SRL): Identifying the roles that different words play in a sentence (e.g., agent, patient, instrument). This provides a deeper understanding of the sentence’s meaning. Practical applications include event extraction and text summarization.

- Word Embeddings and Word Vectors: Understanding how words are represented as dense vectors (typically a few hundred dimensions) in which geometric closeness reflects semantic similarity. Practical applications include semantic similarity calculation and recommendation systems.

- Ontologies and Knowledge Graphs: Working with structured representations of knowledge and their application in semantic analysis tasks. Practical applications include knowledge-based question answering and reasoning.

- Sentiment Analysis: Determining the emotional tone (positive, negative, neutral) of text. Practical applications include social media monitoring and customer feedback analysis.

- Problem-solving approaches: Familiarize yourself with common challenges in semantic analysis, such as handling noisy data, dealing with ambiguity, and evaluating performance metrics.
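For the point on evaluating performance metrics, a common convention for NER (used, for example, in CoNLL-style evaluation) is exact-match, entity-level scoring: a predicted entity counts as correct only if its boundaries and label both match a gold entity. The sketch below assumes entities are given as (start, end, label) token spans; the annotations in the example are hypothetical.

```python
# An entity is (start_token, end_token_exclusive, label).
Span = tuple[int, int, str]


def entity_prf1(gold: set[Span], predicted: set[Span]) -> tuple[float, float, float]:
    """Exact-match entity-level precision, recall, and F1.

    A prediction is a true positive only if its start, end, and label all
    match a gold entity; partial overlaps and wrong labels count as errors.
    """
    true_pos = len(gold & predicted)
    precision = true_pos / len(predicted) if predicted else 0.0
    recall = true_pos / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


if __name__ == "__main__":
    # Hypothetical annotations for one sentence.
    gold = {(0, 2, "PER"), (5, 6, "ORG"), (8, 9, "LOC")}
    predicted = {(0, 2, "PER"), (5, 6, "LOC"), (8, 9, "LOC"), (10, 11, "ORG")}
    p, r, f = entity_prf1(gold, predicted)
    print(f"precision={p:.3f} recall={r:.3f} f1={f:.3f}")  # → precision=0.500 recall=0.667 f1=0.571
```

Note that the mislabeled span (5, 6) is penalized twice: it is a false positive as “LOC” and a missed gold “ORG”, which is why exact-match scoring is stricter than token-level accuracy.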

Next Steps

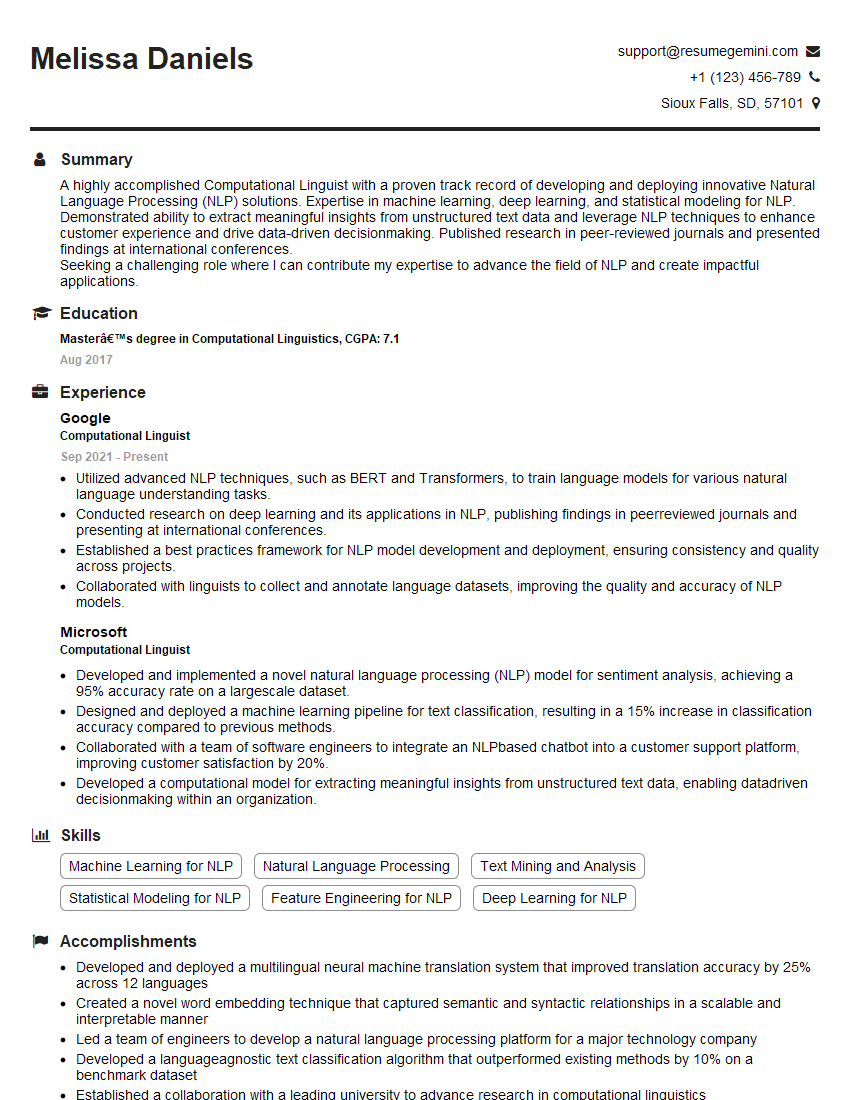

Mastering Semantic Analysis significantly enhances your career prospects in the rapidly growing fields of Natural Language Processing (NLP) and Artificial Intelligence (AI). A strong understanding of these concepts opens doors to exciting roles with high demand. To maximize your job search success, crafting an ATS-friendly resume is crucial. ResumeGemini is a trusted resource to help you build a compelling and effective resume that highlights your skills and experience in Semantic Analysis. Examples of resumes tailored to Semantic Analysis are available within ResumeGemini to provide you with inspiration and guidance.