Every successful interview starts with knowing what to expect. In this blog, we’ll take you through the top Scientific Writing and Data Interpretation interview questions, breaking them down with expert tips to help you deliver impactful answers. Step into your next interview fully prepared and ready to succeed.

Questions Asked in a Scientific Writing and Data Interpretation Interview

Q 1. Explain the importance of clarity and conciseness in scientific writing.

Clarity and conciseness are paramount in scientific writing because they ensure your research findings are easily understood and avoid misinterpretations. Think of it like a meticulously crafted map: a reader needs to quickly grasp the direction and destination without getting bogged down in unnecessary details.

- Clarity: Using precise language, defining all technical terms, and structuring your writing logically (e.g., using clear topic sentences and transitions) allows readers to effortlessly follow your train of thought. Ambiguity can lead to misinterpretations of your research and potentially flawed conclusions from others.

- Conciseness: Avoiding jargon unless essential, eliminating redundant phrases, and focusing on the most impactful information ensures that your message is delivered efficiently. A concise manuscript is easier to read, increasing the likelihood of your research being understood and cited.

For example, instead of writing, ‘The results indicated a statistically significant difference between the two groups, which was quite large in magnitude,’ you could write, ‘The two groups differed significantly (p < 0.05, Cohen’s d = 1.2).’
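The concise version reports an effect size alongside the p-value. As a rough illustration, here is a minimal Python sketch (standard library only) that computes Cohen’s d from two groups and phrases the result in one sentence. The group data and the `concise_result` helper are hypothetical, and the p-value is assumed to come from a separate significance test.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Cohen's d: difference in means divided by the pooled sample SD."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


def concise_result(p_value, d, alpha=0.05):
    """Turn the numbers into one short results sentence (hypothetical helper)."""
    if p_value < alpha:
        return f"The two groups differed significantly (p < {alpha}, Cohen's d = {d:.1f})."
    return f"The two groups did not differ significantly (p = {p_value:.2f}, Cohen's d = {d:.1f})."


# Hypothetical measurements; the p-value would come from a separate test
treatment = [12, 14, 15, 13, 16]
control = [10, 11, 12, 10, 12]
d = cohens_d(treatment, control)
print(concise_result(0.01, d))
```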

Q 2. Describe your experience with different scientific writing styles (e.g., IMRaD, narrative reviews).

I have extensive experience with various scientific writing styles. The IMRaD (Introduction, Methods, Results, and Discussion) format is the cornerstone of most empirical research articles, providing a clear and standardized structure, and I’ve used it across diverse fields such as biology and environmental science. Narrative reviews, on the other hand, allow a more flexible approach, offering space to synthesize existing research and build a cohesive narrative on a specific topic. This requires a different skill set: the ability to weave together multiple studies, highlight key themes, and offer a nuanced perspective.

My experience also extends to writing grant proposals, which demand a persuasive and concise style to justify the need for research funding. I’ve successfully tailored my writing style to suit the audience and purpose, ensuring the effectiveness of the communication. For instance, in a grant proposal, I would focus on the significance and impact of the proposed research, whereas in a research article, I would emphasize the methodology and findings.

Q 3. How do you ensure accuracy and avoid plagiarism in your scientific writing?

Accuracy and originality are non-negotiable in scientific writing. My approach involves a multi-step process:

- Meticulous Data Handling: I carefully review all data points to ensure accuracy in data collection, analysis, and reporting. This includes double-checking calculations, verifying data sources, and using appropriate statistical methods.

- Proper Citation and Referencing: Every piece of information sourced from other works is carefully cited using a consistent referencing style (e.g., APA, Vancouver, or the target journal’s house style). This is crucial for acknowledging the contributions of other researchers and preventing plagiarism.

- Plagiarism Detection Tools: I utilize plagiarism detection software to scan my work and identify any unintentional instances of plagiarism. This provides an extra layer of assurance.

- Peer Review: Before submission, I always seek feedback from colleagues or mentors. A fresh pair of eyes can catch errors or areas needing clarification.

A real-world example involves a recent project where I detected a potential error in a dataset. Through careful investigation and double-checking the original data source, I identified and corrected the error, ensuring the accuracy of my analysis and conclusions.

Q 4. How would you approach writing a complex scientific concept for a non-technical audience?

Communicating complex scientific concepts to a non-technical audience requires a strategic approach focusing on simplicity, relatable analogies, and visual aids:

- Break Down Complexity: Instead of using jargon, I explain concepts using simple language and everyday analogies. For example, instead of ‘mitochondrial respiration,’ I might say ‘the power plants within our cells’.

- Use Visuals: Diagrams, charts, and infographics can dramatically improve understanding. A picture is worth a thousand words, especially for complex data or processes.

- Start with the ‘Why’: Begin by highlighting the relevance and impact of the concept before delving into the details. This keeps the audience engaged and motivated to learn more.

- Tell a Story: Frame the information within a narrative, making it more engaging and memorable. Human stories and relatable examples can help non-technical audiences connect with the topic.

For instance, explaining climate change to a general audience might involve analogies such as a planet running a fever, or greenhouse gases acting like a blanket that traps heat, to help listeners grasp the core principles.

Q 5. Describe your process for interpreting statistical data and presenting key findings.

My process for interpreting statistical data and presenting key findings involves several steps:

- Descriptive Statistics: I start with descriptive statistics (mean, median, standard deviation, etc.) to summarize the data and get a general understanding of its distribution.

- Inferential Statistics: I then apply appropriate inferential statistics (t-tests, ANOVA, regression, etc.) to test specific hypotheses or examine relationships between variables.

- Visualizations: I use visualizations like graphs and charts to communicate the findings effectively. The choice of visualization depends on the type of data and the message I want to convey.

- Interpreting p-values and effect sizes: I carefully consider both statistical significance (p-values) and effect sizes to understand the practical implications of the findings. A statistically significant result may not always be practically meaningful, and vice versa.

- Clear and Concise Writing: I summarize the key findings in a clear, concise, and unbiased manner, avoiding jargon or overly technical language when presenting to non-experts.

In a recent project, we used regression analysis to understand the relationship between environmental factors and plant growth. By carefully examining the regression coefficients, their significance, and R-squared values, we could draw meaningful conclusions and report them clearly in our publication.

Q 6. How familiar are you with different types of statistical analysis (e.g., t-tests, ANOVA, regression)?

I am very familiar with a wide range of statistical analysis techniques. My expertise includes:

- t-tests: For comparing means between two groups.

- ANOVA (Analysis of Variance): For comparing means among three or more groups.

- Regression Analysis (linear, multiple, logistic): For examining relationships between variables and making predictions.

- Correlation analysis: To assess the strength and direction of linear relationships between variables.

- Chi-square tests: To analyze categorical data and test for independence between variables.

The choice of statistical test depends on the research question, the type of data, and the assumptions that can be made about the data. I always carefully consider the appropriateness of each test before applying it.
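To make one of these concrete: a chi-square test of independence on a 2×2 table can be sketched with the standard library alone, because with one degree of freedom the p-value equals `erfc(sqrt(chi2 / 2))`. The counts below are hypothetical, and the sketch omits Yates’ continuity correction; with small expected counts, Fisher’s exact test is usually the safer choice.

```python
from math import erfc, sqrt


def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2x2 table (no Yates correction)."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = [a + b, c + d]
    col_totals = [a + c, b + d]
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # With 1 degree of freedom, P(chi2_1 > x) = erfc(sqrt(x / 2))
    p_value = erfc(sqrt(chi2 / 2))
    return chi2, p_value


# Hypothetical counts: rows = treatment/control, columns = improved/not improved
chi2, p = chi_square_2x2([[30, 10], [20, 20]])
print(f"chi2(1) = {chi2:.2f}, p = {p:.3f}")
```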

Q 7. How would you identify and handle outliers or errors in a dataset?

Identifying and handling outliers or errors in a dataset is crucial for ensuring the accuracy and reliability of the analysis. My approach is:

- Visual Inspection: I begin by visually inspecting the data using histograms, box plots, and scatter plots to identify potential outliers or unusual patterns.

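- Statistical Methods: I use statistical rules such as the Z-score (commonly flagging values more than 3 standard deviations from the mean) or the modified Z-score, which is based on the median and the median absolute deviation and is commonly flagged above 3.5. The modified version is more reliable in small samples, where a single extreme value inflates the standard deviation and can mask itself.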

- Investigate the Cause: I carefully investigate the potential causes of outliers or errors. This might involve reviewing the data collection process, checking for data entry errors, or examining whether an outlier is a true reflection of a rare event or a data error.

- Data Transformation: Depending on the cause and nature of the outlier, I may transform the data (e.g., log transformation) to reduce the influence of outliers.

- Removal or Winsorization: In some cases, I might remove outliers if they’re clearly due to errors. Alternatively, I might use winsorization (replacing values beyond a chosen percentile, such as the 5th or 95th, with the value at that percentile) to reduce the impact of outliers without discarding observations.

- Robust Statistical Methods: I also consider robust statistical methods that are less sensitive to outliers, such as reporting the median instead of the mean.

It’s important to document all the steps taken in handling outliers and to justify the chosen approach. Transparency is key in ensuring the reproducibility and validity of the findings.
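The detection and winsorization steps above might look like this in a minimal standard-library sketch. The readings are hypothetical, and the thresholds (3 for the classic z-score, 3.5 for the modified z-score with its 0.6745 constant) are common conventions rather than fixed rules. The example also shows why the robust version matters: in a sample of n values, no |z| can exceed (n - 1)/sqrt(n), so with eight readings the classic rule cannot flag even an obvious error.

```python
from statistics import mean, median, quantiles, stdev


def z_score_outliers(data, threshold=3.0):
    """Flag values more than `threshold` sample SDs from the mean."""
    m, s = mean(data), stdev(data)
    return [x for x in data if abs(x - m) / s > threshold]


def modified_z_outliers(data, threshold=3.5):
    """Flag values using the median and MAD (modified z-score)."""
    med = median(data)
    mad = median([abs(x - med) for x in data])
    if mad == 0:
        raise ValueError("MAD is zero; modified z-scores are undefined")
    return [x for x in data if 0.6745 * abs(x - med) / mad > threshold]


def winsorize(data, lower_pct=5, upper_pct=95):
    """Clip values to the given percentiles instead of deleting them."""
    cuts = quantiles(data, n=100, method="inclusive")  # 99 percentile cut points
    low, high = cuts[lower_pct - 1], cuts[upper_pct - 1]
    return [min(max(x, low), high) for x in data]


# Hypothetical readings with one suspicious value
readings = [10, 11, 9, 10, 12, 11, 10, 95]
print(z_score_outliers(readings))     # classic rule
print(modified_z_outliers(readings))  # robust rule
print(winsorize(readings))
```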

Q 8. Explain your experience with data visualization tools and techniques.

My experience with data visualization spans several tools and techniques, focusing on creating clear and insightful representations of complex datasets. I’m proficient in using tools like Tableau, Power BI, and Python libraries such as Matplotlib, Seaborn, and Plotly. My approach emphasizes choosing the right visualization for the data and the intended audience. For example, if I’m showcasing trends over time, a line chart is ideal. If I need to compare different categories, a bar chart or a pie chart might be more suitable. Beyond basic charts, I’m adept at creating more sophisticated visualizations like heatmaps, scatter plots with trend lines, and interactive dashboards to allow users to explore data dynamically. In a recent project analyzing customer churn, I used a combination of a geographic heatmap showing churn rates by region and a bar chart highlighting the top reasons for churn, giving stakeholders a comprehensive overview. This allowed for quick identification of high-risk areas and key factors driving customer attrition.

Q 9. How would you present complex data findings to both technical and non-technical stakeholders?

Presenting complex data findings to diverse audiences requires a tailored approach. For technical stakeholders, I would use precise language, detail statistical methods employed, and delve into the nuances of the data analysis. I might include detailed tables, statistical summaries, and even raw data extracts upon request. For non-technical stakeholders, my approach would be more narrative-driven. I would use clear and concise language, focusing on the key findings and their implications. Visualizations, such as charts and graphs, play a crucial role here, making the information accessible and easy to understand. For instance, instead of presenting a complex regression model, I would highlight the key relationships discovered, perhaps with a well-labeled scatter plot showing the correlation. Using storytelling techniques, I connect the data analysis to the business problem, explaining its implications in a way that is relevant and meaningful to the audience. In a recent presentation, I successfully conveyed complex A/B testing results to both data scientists and business executives by using a simple bar chart showcasing the percentage lift in conversions, followed by a concise explanation of the statistical significance.
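The A/B example could be backed by a calculation like the following: a sketch of relative lift plus a two-proportion z-test using a pooled standard error. The conversion counts are hypothetical, and a real analysis would also fix the sample size in advance rather than stopping once the result looks significant.

```python
from math import sqrt
from statistics import NormalDist


def ab_test(conversions_a, visitors_a, conversions_b, visitors_b):
    """Relative lift of B over A and a two-sided two-proportion z-test."""
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    lift = (rate_b - rate_a) / rate_a
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    se = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return lift, z, p_value


# Hypothetical test: 200/5000 conversions for A, 250/5000 for B
lift, z, p = ab_test(200, 5000, 250, 5000)
print(f"Lift: {lift:.1%}, z = {z:.2f}, p = {p:.3f}")
```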

Q 10. What are your preferred methods for summarizing large datasets?

Summarizing large datasets effectively hinges on understanding the research question and the audience. My preferred methods combine several techniques. First, I compute descriptive statistics to understand the distribution of variables: mean, median, standard deviation, and percentiles. For categorical variables, I use frequency tables and percentages. Then, I identify key patterns using visualizations such as histograms, box plots, and scatter plots. I also rely on summary tables to present key statistics for subgroups within the data. Furthermore, if the dataset has many variables, I might use dimensionality reduction techniques like Principal Component Analysis (PCA) to reduce the number of variables while retaining most of the variance, which makes the data easier to manage and visualize. Finally, depending on the type of data and the research question, I might create aggregate measures or calculate key performance indicators (KPIs) to condense the information into meaningful insights. In a recent study, I used PCA to reduce over 50 variables to 5 principal components, making it feasible to perform regression analysis and, by examining the component loadings, identify key predictive factors.
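As a rough sketch of the descriptive summary, frequency table, and subgroup table steps, here is a standard-library version; in practice pandas (`describe`, `groupby`) would do the same with less code. The records and field names are hypothetical, and PCA is not shown because it needs a numerical library such as scikit-learn.

```python
from collections import Counter, defaultdict
from statistics import mean, median, quantiles, stdev


def summarize(values):
    """Basic descriptive statistics for one numeric variable."""
    q1, _, q3 = quantiles(values, n=4)
    return {"n": len(values), "mean": mean(values), "median": median(values),
            "sd": stdev(values), "q1": q1, "q3": q3}


def summarize_by_group(records, group_key, value_key):
    """Summary table: one set of statistics per subgroup."""
    groups = defaultdict(list)
    for record in records:
        groups[record[group_key]].append(record[value_key])
    return {group: summarize(values) for group, values in groups.items()}


def frequency_table(records, key):
    """Counts and percentages for a categorical variable."""
    counts = Counter(record[key] for record in records)
    total = sum(counts.values())
    return {level: (count, 100 * count / total) for level, count in counts.items()}


# Hypothetical records
records = [
    {"region": "north", "sales": 120},
    {"region": "north", "sales": 100},
    {"region": "north", "sales": 110},
    {"region": "south", "sales": 90},
    {"region": "south", "sales": 95},
    {"region": "south", "sales": 85},
]
by_region = summarize_by_group(records, "region", "sales")
print(by_region)
print(frequency_table(records, "region"))
```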

Q 11. How do you ensure data integrity and reliability?

Data integrity and reliability are paramount. My approach to ensuring this involves several steps. Firstly, I implement rigorous data validation procedures during the data collection and entry phase, including checks for consistency and plausibility. This might involve using data validation rules in spreadsheets or programming checks in scripts. Secondly, I meticulously document all data cleaning and preprocessing steps, creating a clear audit trail. This allows for reproducibility and makes it easier to identify and correct potential errors. Thirdly, I use version control systems like Git to manage data files and analysis scripts, ensuring that all changes are tracked and recoverable. Finally, I regularly back up data to prevent loss due to system failure or accidental deletion. In a previous project, rigorous data validation caught several inconsistencies in survey data, preventing inaccurate conclusions and significantly improving the quality of the final report.
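The validation checks described above can be illustrated with a small rule-based function. The survey fields (`age`, `smoker`, `years_smoking`) and the plausible age range are hypothetical; the point is the three kinds of checks: completeness, plausibility, and cross-field consistency.

```python
def validate_record(record):
    """Return a list of problems found in one survey record (empty list = passes)."""
    problems = []
    age = record.get("age")
    smoker = record.get("smoker")
    years = record.get("years_smoking")

    # Completeness: required fields are present
    for field in ("age", "smoker", "years_smoking"):
        if record.get(field) is None:
            problems.append(f"{field}: missing")

    # Plausibility: values fall inside a believable range or code list
    if age is not None and not 18 <= age <= 110:
        problems.append(f"age: implausible value {age}")
    if smoker is not None and smoker not in ("yes", "no"):
        problems.append(f"smoker: unexpected code {smoker!r}")

    # Consistency: answers agree with each other
    if smoker == "no" and years not in (None, 0):
        problems.append("years_smoking > 0 for a non-smoker")
    if age is not None and years is not None and years > age:
        problems.append("years_smoking exceeds age")
    return problems


# Hypothetical survey rows
survey = [
    {"age": 34, "smoker": "no", "years_smoking": 0},
    {"age": 250, "smoker": "yes", "years_smoking": 10},
    {"age": 40, "smoker": "no", "years_smoking": 5},
]
for i, rec in enumerate(survey):
    print(i, validate_record(rec) or "ok")
```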

Q 12. Describe your experience with data cleaning and preprocessing techniques.

Data cleaning and preprocessing is a crucial step that significantly influences the outcome of any analysis. My experience encompasses a range of techniques. It starts with handling missing values, which can be addressed through imputation (replacing missing values with estimated ones) or exclusion, depending on the extent and pattern of missingness and the research objective. Next, I deal with outliers, extreme values that can skew results. I analyze outliers to determine whether they are genuine data points or errors: errors are corrected, while the influence of genuine values is carefully considered. I resolve inconsistencies in data formats and units by standardizing them. I also apply transformations, such as log transformations for right-skewed data, so that the data better satisfy the normality assumptions of statistical models. Finally, I often use feature engineering, such as creating new variables from existing ones, to enhance model performance. For instance, in a sales prediction project, I created a variable indicating the season of the year to account for seasonal sales patterns.
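A minimal sketch of three of these steps: median imputation, a log transformation, and a derived season variable like the one in the sales example. The data are hypothetical and the season boundaries assume Northern-Hemisphere meteorological seasons. Median imputation is the simplest option and understates variability, so multiple imputation is often preferable when much data is missing.

```python
import math
from datetime import date
from statistics import median


def impute_median(values):
    """Replace None with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]


def log_transform(values):
    """log(1 + x) to reduce right skew; assumes values >= 0."""
    return [math.log1p(v) for v in values]


def season(day):
    """Meteorological season, assuming the Northern Hemisphere."""
    if day.month in (12, 1, 2):
        return "winter"
    if day.month in (3, 4, 5):
        return "spring"
    if day.month in (6, 7, 8):
        return "summer"
    return "autumn"


# Hypothetical sales dates and values
sales_dates = [date(2023, 1, 15), date(2023, 4, 2), date(2023, 7, 30), date(2023, 10, 9)]
print([season(d) for d in sales_dates])
print(impute_median([120, None, 95, 130]))
print(log_transform([0, 9, 99]))
```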

Q 13. Explain the difference between descriptive and inferential statistics.

Descriptive and inferential statistics serve different purposes. Descriptive statistics summarize and describe the main features of a dataset; think of them as a snapshot of the data. They include measures such as the mean, median, mode, and standard deviation, along with visualizations such as histograms and box plots, and they describe the data at hand without generalizing beyond it. Inferential statistics go a step further: they use sample data to draw conclusions about a larger population, through hypothesis testing, confidence intervals, and regression analysis. In essence, inferential statistics let us generalize from the sample to the population with a quantified degree of uncertainty. A simple example: calculating the average height of the students in one class is descriptive statistics, while using that average to estimate the average height of all students at the university is inferential statistics, and that estimate is only trustworthy if the class is a representative (ideally random) sample of the university.
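The height example can be expressed in a few lines: the mean and standard deviation describe the class, while the confidence interval is an inference about the university. The heights are hypothetical, and the interval uses a normal approximation; with only ten students, a t-based interval (for example via `scipy.stats.t.ppf(0.975, df=9)`) would be somewhat wider.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev

# Hypothetical heights (cm) of the students in one class
heights = [162, 170, 168, 175, 180, 158, 172, 165, 177, 169]

# Descriptive: statements about these 10 students only
class_mean = mean(heights)
class_sd = stdev(heights)

# Inferential: a 95% CI for the university-wide mean, valid only if the
# class behaves like a random sample of the university's students
z = NormalDist().inv_cdf(0.975)  # about 1.96 (normal approximation)
margin = z * class_sd / sqrt(len(heights))
ci = (class_mean - margin, class_mean + margin)

print(f"Class mean = {class_mean:.1f} cm (SD {class_sd:.1f})")
print(f"Estimated university mean: 95% CI {ci[0]:.1f} to {ci[1]:.1f} cm")
```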

Q 14. How would you choose the appropriate statistical test for a given research question?

Choosing the appropriate statistical test depends heavily on the research question, the type of data (categorical or continuous), and the nature of the comparison. The first step is to define the research question and hypotheses clearly. Are you comparing means or proportions, or exploring associations between variables? Which variables are independent (predictors) and which are dependent (outcomes), and are the observations independent or paired (e.g., repeated measurements on the same subjects)? For example, if you’re comparing the average income of two groups, a t-test might be suitable if the data are approximately normally distributed within each group (or the samples are reasonably large). If you’re comparing proportions, a chi-square test or a z-test might be appropriate. If you’re exploring the relationship between two continuous variables, correlation or regression analysis may be more suitable. I often use flowcharts or decision trees to guide the selection process, working systematically through the data characteristics and the research objective. It’s also important to check the assumptions underlying each test (e.g., normality, independence, equal variances) before applying it, because violating them can produce inaccurate results. Software packages like R or SPSS offer a wide range of tests, but careful consideration of the data and the research question is essential to choose the right one.
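One way to encode such a flowchart is a small function. This is a deliberately simplified sketch: the function name and its arguments are hypothetical, and it does not replace checking assumptions such as normality, equal variances, or expected cell counts.

```python
def suggest_test(outcome, n_groups=None, paired=False, normal=True):
    """Simplified test-selection flowchart (hypothetical helper)."""
    if outcome == "continuous":
        if n_groups == 2:
            if paired:
                return "paired t-test" if normal else "Wilcoxon signed-rank test"
            return "independent-samples t-test" if normal else "Mann-Whitney U test"
        if n_groups is not None and n_groups >= 3:
            if paired:
                return "repeated-measures ANOVA" if normal else "Friedman test"
            return "one-way ANOVA" if normal else "Kruskal-Wallis test"
        # No grouping variable: association with another continuous variable
        return "Pearson correlation / linear regression" if normal else "Spearman correlation"
    if outcome == "categorical":
        return "chi-square test of independence (Fisher's exact test if expected counts are small)"
    raise ValueError(f"unknown outcome type: {outcome!r}")


print(suggest_test("continuous", n_groups=2))
print(suggest_test("continuous", n_groups=3, normal=False))
print(suggest_test("categorical"))
```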

Q 15. How do you interpret p-values and confidence intervals?

P-values and confidence intervals are crucial tools for interpreting statistical results. A p-value is the probability of observing results as extreme as, or more extreme than, the ones obtained, assuming the null hypothesis is true. The null hypothesis typically states that there is no effect or difference. A small p-value (typically below 0.05) suggests that the observed results are unlikely under the null hypothesis, leading us to reject it and conclude that there is evidence for an effect. However, a p-value doesn’t measure the size of an effect, nor is it the probability that the null hypothesis is true; it only indicates how surprising the data would be if there were no effect.

A confidence interval, on the other hand, provides a range of plausible values for a population parameter (e.g., the mean difference between two groups). A 95% confidence interval means that if we were to repeat the study many times, 95% of the calculated intervals would contain the true population parameter. Confidence intervals offer a more complete picture than p-values, as they convey both the precision of the estimate and the magnitude of the effect. For example, a narrow confidence interval indicates high precision, while a wide interval suggests more uncertainty.

Example: Let’s say we’re comparing the effectiveness of two drugs. A p-value of 0.03 suggests the difference between the drugs is statistically significant. A 95% confidence interval of (0.1, 1.9) adds that the true difference in effectiveness plausibly lies anywhere between 0.1 and 1.9 units: the interval excludes zero, consistent with p < 0.05, but its width shows that the effect could be small or substantial. This tells us far more than the p-value alone.
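The link between the two can be sketched with a z-based test: from the same estimate and standard error, a two-sided p below 0.05 corresponds to a 95% interval that excludes zero. The estimate (1.0 units) and standard error (0.46) below are hypothetical, chosen so that p is about 0.03, and the normal approximation assumes a reasonably large sample.

```python
from statistics import NormalDist


def z_test_and_ci(estimate, std_error, level=0.95):
    """Two-sided p-value for H0: effect = 0, plus the matching confidence interval."""
    norm = NormalDist()
    z = estimate / std_error
    p_value = 2 * (1 - norm.cdf(abs(z)))
    z_crit = norm.inv_cdf(1 - (1 - level) / 2)
    ci = (estimate - z_crit * std_error, estimate + z_crit * std_error)
    return p_value, ci


# Hypothetical difference in effectiveness between two drugs (units) and its SE
p, (low, high) = z_test_and_ci(estimate=1.0, std_error=0.46)
print(f"p = {p:.3f}, 95% CI ({low:.2f}, {high:.2f})")
```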

Q 16. How would you address limitations in a dataset or analysis?

Addressing limitations in a dataset or analysis is crucial for maintaining the integrity and credibility of scientific findings. It involves honestly acknowledging any shortcomings that might affect the interpretation or generalizability of the results. This strengthens the overall study by demonstrating a critical and thoughtful approach.

- Data limitations: These might include small sample size, missing data, selection bias (e.g., non-random sampling), or limitations in the measurement techniques used. For example, a small sample size can lead to low statistical power, making it harder to detect true effects. Missing data might introduce bias if it’s not handled appropriately (e.g., using imputation methods).

- Analytical limitations: These could involve the choice of statistical methods, assumptions made during the analysis, or the inability to control for all confounding variables. For example, using a parametric test on non-normal data can lead to inaccurate results. Uncontrolled confounding variables can obscure the true relationship between variables of interest.

Addressing these limitations: In a research report, limitations should be explicitly discussed in a dedicated section. This includes explaining how each limitation might impact the findings and suggesting potential directions for future research to address these issues. For example, if a small sample size limited the power of the study, this should be noted, and future studies with larger sample sizes should be proposed. Further, stating what types of biases may be present in the data and outlining how they may have influenced the findings is critical. Using sensitivity analyses (e.g., exploring different statistical methods) to check for robustness of results can also be helpful.
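To illustrate why a small sample limits power, here is a normal-approximation sketch of power for a two-sided, two-sample comparison with standardized effect size d. It is an approximation rather than an exact t-test calculation: for d = 0.5 it suggests about 63 participants per group for 80% power, whereas exact calculations give about 64. The effect size and sample sizes are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(d, n_per_group, alpha=0.05):
    """Normal-approximation power of a two-sided two-sample test for effect size d."""
    norm = NormalDist()
    z_crit = norm.inv_cdf(1 - alpha / 2)
    shift = d * sqrt(n_per_group / 2)  # expected test statistic under the alternative
    return norm.cdf(shift - z_crit) + norm.cdf(-shift - z_crit)


def n_for_power(d, target=0.8, alpha=0.05):
    """Smallest per-group n whose approximate power reaches the target."""
    n = 2
    while approx_power(d, n, alpha) < target:
        n += 1
    return n


for n in (20, 64):
    print(f"d = 0.5, n = {n} per group: power ~ {approx_power(0.5, n):.2f}")
print("n per group for 80% power:", n_for_power(0.5))
```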

Q 17. Describe your experience with scientific literature databases (e.g., PubMed, Web of Science).

I have extensive experience using scientific literature databases such as PubMed, Web of Science, Scopus, and Google Scholar. My proficiency extends beyond simply searching for articles; I’m adept at crafting effective search strategies using Boolean operators (AND, OR, NOT), MeSH terms (Medical Subject Headings), and keywords to retrieve highly relevant literature. I’m also skilled at refining searches based on publication date, study design, and other relevant criteria.

Beyond searching, I can critically appraise the identified studies, focusing on methodological rigor, the validity of conclusions, and the relevance to the research question. I’m capable of extracting key findings, synthesizing information across multiple studies, and identifying potential gaps in the existing literature. This is vital for conducting thorough literature reviews, supporting research proposals, and interpreting my own research findings in the context of existing knowledge. For instance, I recently used PubMed to conduct a comprehensive review of the literature on a specific type of cancer treatment, which required me to systematically search, screen, and evaluate hundreds of articles.
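A systematic search like this can be scripted. The sketch below combines MeSH terms and free-text keywords with Boolean operators using PubMed’s `[MeSH Terms]`, `[Title/Abstract]`, and `[Publication Type]` field tags, and builds an NCBI E-utilities `esearch` URL with a publication-date filter. The topic terms are hypothetical placeholders, and the code only constructs the URL; actually fetching results should follow NCBI’s usage guidelines (rate limits, API key).

```python
from urllib.parse import urlencode

ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def build_pubmed_query(mesh_terms, keywords, exclude_types=()):
    """Combine MeSH terms and keywords with Boolean operators."""
    mesh_part = " OR ".join(f'"{term}"[MeSH Terms]' for term in mesh_terms)
    keyword_part = " OR ".join(f'"{kw}"[Title/Abstract]' for kw in keywords)
    query = f"({mesh_part}) AND ({keyword_part})"
    for pub_type in exclude_types:
        query += f' NOT "{pub_type}"[Publication Type]'
    return query


def esearch_url(query, mindate=None, maxdate=None, retmax=100):
    """Build (but do not send) an esearch request restricted by publication date."""
    params = {"db": "pubmed", "term": query, "retmax": retmax}
    if mindate and maxdate:
        params.update(datetype="pdat", mindate=mindate, maxdate=maxdate)
    return f"{ESEARCH_URL}?{urlencode(params)}"


# Hypothetical search topic
query = build_pubmed_query(
    mesh_terms=["Breast Neoplasms"],
    keywords=["immunotherapy", "checkpoint inhibitor"],
    exclude_types=["Case Reports"],
)
print(query)
print(esearch_url(query, mindate="2019", maxdate="2024"))
```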

Q 18. How do you stay updated on best practices in scientific writing and data interpretation?

Staying abreast of best practices in scientific writing and data interpretation is a continuous process. I employ a multi-pronged approach:

- Reading reputable journals and publications: I regularly read journals like the ‘Journal of the American Medical Association’ (JAMA), ‘The Lancet’, and ‘Nature’, paying close attention to the style, structure, and clarity of their publications. These journals represent gold standards in scientific communication.

- Attending workshops and conferences: Participating in conferences and workshops focused on scientific writing and data analysis provides opportunities for learning from experts, networking, and discovering new tools and techniques. Recent workshops have covered advanced statistical methods and data visualization.

- Following influential researchers and organizations: Following leading scientists and organizations on social media and through their publications helps me stay informed about the latest developments and best practices in the field. I frequently follow prominent research groups in my specific area of interest.

- Engaging with online resources and courses: Numerous online resources, including courses on platforms such as Coursera and edX, offer valuable insights into scientific writing, data analysis, and related topics.

Q 19. What software/tools are you proficient in for data analysis and scientific writing?

I am proficient in several software tools crucial for data analysis and scientific writing. For data analysis, my expertise includes:

- R: A powerful statistical computing language with extensive packages for data manipulation, visualization, and statistical modeling. I’m comfortable using packages such as ggplot2 for visualization and dplyr for data wrangling. For example, a basic scatter plot with ggplot2:

```r
library(ggplot2)
# Small illustrative data frame (placeholder values)
data <- data.frame(variable1 = c(1, 2, 3, 4, 5), variable2 = c(2.1, 3.9, 6.2, 7.8, 10.1))
ggplot(data, aes(x = variable1, y = variable2)) + geom_point()
```

- Python (with libraries like Pandas, NumPy, Scikit-learn): Python provides functionality similar to R, offering flexibility and a vast ecosystem of libraries for various data analysis tasks. I use Pandas for data manipulation, NumPy for numerical computation, and Scikit-learn for machine learning techniques.

- SPSS and SAS: I have experience with these commercially available statistical packages, which are widely used in various fields.

For scientific writing, I utilize:

- Microsoft Word and LaTeX: I am proficient in both environments, choosing the most appropriate based on the project’s requirements and the need for complex formatting. LaTeX is especially useful for generating reports with a high degree of mathematical notation.

- Zotero and Mendeley: These reference management tools help maintain a consistent citation style and streamline the literature review process.

Q 20. Explain your experience with creating scientific presentations (e.g., posters, slides).

I possess substantial experience creating scientific presentations, including posters and slide presentations for conferences and internal meetings. My approach emphasizes clarity, conciseness, and visual appeal to effectively communicate complex information to diverse audiences.

Poster presentations: I focus on creating visually engaging posters using clear headings, concise text, and high-quality figures and tables. I prioritize presenting the key findings prominently and using a logical flow to guide the viewer. I often use software like Adobe Illustrator or PowerPoint to design posters.

Slide presentations: I strive to keep slides clean and uncluttered, using bullet points, visuals, and minimal text to enhance audience engagement. I tailor the content and style to the specific audience and context, ensuring the presentation is both informative and engaging. I usually use PowerPoint or Google Slides for slide presentations, focusing on a narrative flow that conveys the study’s context, methods, results, and conclusions in a clear and logical manner.

Example: At a recent conference, I presented a poster summarizing our findings on a new diagnostic tool. I used a clear visual hierarchy to highlight the key results, and interactive elements to enhance audience engagement. The feedback I received was very positive, indicating that the poster effectively communicated our research findings.

Q 21. How would you handle conflicting data from different sources?

Handling conflicting data from different sources requires a systematic and critical approach. It’s not about simply picking one source over another, but rather understanding the reasons behind the discrepancies and determining the most reliable source.

- Identify and document the discrepancies: Carefully compare the conflicting data points, noting the specific differences and the sources of the data.

- Evaluate the quality of each data source: Assess the methodological rigor, sample size, potential biases, and the reputation of the sources. Consider factors like the publication venue, the authors’ expertise, and the funding of the research. Where enough studies exist, a meta-analysis (for example, with a funnel plot) can also help reveal publication bias.

- Investigate potential explanations for the discrepancies: Differences may arise from variations in study design, measurement methods, or the populations studied. For example, an effect measured in a young, healthy cohort may not appear in an older population with other health conditions.

- Analyze the strengths and weaknesses of each dataset: Consider the potential limitations of each dataset and how they might have contributed to the observed discrepancies. For example, one dataset may have a small sample size, leading to low precision.

- Seek additional data or evidence if needed: If the conflicts remain unresolved, consider conducting additional analyses or seeking more information to clarify the situation. A replication study could be useful.

- Report the conflicts transparently and discuss the implications: In any scientific report, explicitly acknowledge the conflicting data, explain the reasons behind the discrepancies, and discuss the implications for the interpretation of the findings. Clearly explain how the discrepancies were addressed and the rationale behind the chosen approach for resolving the conflict.

By following this systematic approach, you can arrive at a well-reasoned interpretation of the data, even when faced with conflicting information. This transparency and thorough investigation enhance the credibility and trustworthiness of your findings.
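When several sources estimate the same quantity, one way to quantify how much they conflict is an inverse-variance (fixed-effect) meta-analysis with Cochran’s Q and the I² statistic. This is a minimal sketch with hypothetical estimates and standard errors; a high I² signals that the sources disagree more than sampling error would explain, which argues for investigating the discrepancy (or using a random-effects model) rather than simply pooling.

```python
from math import sqrt


def fixed_effect_meta(estimates, std_errors):
    """Inverse-variance pooled estimate with Cochran's Q and I^2 heterogeneity."""
    weights = [1 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * est for w, est in zip(weights, estimates)) / total_weight
    pooled_se = sqrt(1 / total_weight)
    # Cochran's Q: weighted squared deviations from the pooled estimate
    q = sum(w * (est - pooled) ** 2 for w, est in zip(weights, estimates))
    df = len(estimates) - 1
    # I^2: share of total variation attributed to between-source differences
    i_squared = max(0.0, (q - df) / q) if q > 0 else 0.0
    return pooled, pooled_se, q, i_squared


# Hypothetical: two sources report the same effect with equal precision
pooled, pooled_se, q, i2 = fixed_effect_meta([0.2, 0.8], [0.1, 0.1])
print(f"pooled = {pooled:.2f} (SE {pooled_se:.3f}), Q = {q:.1f}, I^2 = {i2:.0%}")
```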

Q 22. Describe a time you had to explain a complex scientific concept in a simplified way.

Simplifying complex scientific concepts requires a deep understanding of the subject matter and the ability to tailor the explanation to the audience’s background. My approach involves breaking down the concept into smaller, manageable parts, using analogies and real-world examples to illustrate key ideas, and avoiding technical jargon whenever possible.

For instance, I once had to explain the concept of ‘epigenetics’ – the study of heritable changes in gene expression that don’t involve alterations to the underlying DNA sequence – to a group of high school students. Instead of diving into complex molecular mechanisms, I used the analogy of a light switch. The DNA sequence is like the wiring of the house; it’s the underlying structure. Epigenetics is like the switch; it can turn genes ‘on’ or ‘off’ without changing the wiring itself. This simple analogy allowed them to grasp the core concept quickly and sparked further interest in the topic. I further reinforced this with examples of how environmental factors, like diet or stress, could act as ‘switch flippers’, leading to changes in gene expression.

Q 23. How do you address the ethical implications of data analysis and reporting?

Ethical considerations are paramount in data analysis and reporting. I ensure ethical conduct throughout the entire research process, starting with informed consent from participants (where applicable), ensuring data privacy and anonymity through appropriate anonymization techniques, and meticulously documenting all data manipulation steps to maintain transparency and reproducibility.

For example, when working with sensitive health data, I use techniques like differential privacy, which adds calibrated random noise to released statistics so that the results reveal very little about any single participant while aggregate results remain useful. Furthermore, I always adhere to relevant guidelines and regulations, such as HIPAA in the US, and I strive to publish my findings in an honest and unbiased manner, acknowledging any limitations of the study. The core principles I follow are:

- Data Integrity: Maintaining the accuracy and completeness of data.

- Data Security: Protecting data from unauthorized access and breaches.

- Transparency and Reproducibility: Documenting methods clearly for others to replicate the work.

- Bias Mitigation: Identifying and addressing potential biases in data collection and analysis.

- Conflict of Interest Disclosure: Openly declaring any potential conflicts of interest.
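The differential-privacy approach described above can be sketched with the Laplace mechanism applied to a simple counting query. This is a minimal illustration, not a production implementation. The function names `laplace_noise` and `dp_count` are hypothetical, and the example assumes a query with sensitivity 1: adding or removing one participant changes the count by at most 1.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponential draws, each with mean `scale`,
    # follows a Laplace(0, scale) distribution.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(records, predicate, epsilon: float, rng=None) -> float:
    """Differentially private count of records that satisfy `predicate`.

    A counting query has sensitivity 1, so Laplace noise with scale
    1/epsilon gives epsilon-differential privacy for this single query.
    Smaller epsilon means stronger privacy but noisier answers.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical usage: how many (made-up) patients are over 65?
patients = [{"age": a} for a in (34, 71, 68, 45, 80, 59, 66)]
noisy = dp_count(patients, lambda p: p["age"] > 65, epsilon=0.5)
```

Each released answer consumes privacy budget. Repeating the same query many times and averaging the results would gradually erode the guarantee, which is why real deployments track cumulative epsilon.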

Q 24. How do you approach writing for different publication types (e.g., journals, reports)?

Adapting my writing style to different publication types is crucial for effective communication. Journals usually require a highly structured format, emphasizing rigor and conciseness, with a focus on novel contributions to the field. Reports, on the other hand, may require a more narrative approach, prioritizing clarity and accessibility for a broader audience and often focusing on practical implications.

For journal articles, I adhere to the specific guidelines provided by the journal, focusing on a clear introduction, detailed methodology, concise results, thorough discussion, and a strong conclusion. I always strive to provide sufficient context for the work and clearly define all terminology. For reports, I adopt a more conversational tone, use visuals like charts and graphs to enhance understanding, and summarize complex data in an easily digestible format. I also emphasize the practical applications and implications of the findings.

Q 25. How do you manage multiple writing projects simultaneously?

Managing multiple writing projects simultaneously requires a well-organized approach. I use project management tools to create timelines, set deadlines, and track progress. I prioritize tasks by urgency and importance using techniques such as the Eisenhower Matrix, which sorts tasks into four quadrants (urgent and important, important but not urgent, urgent but not important, neither). I also dedicate specific blocks of time to each project to maintain focus and minimize context switching.

For instance, I might dedicate Monday mornings to working on a journal manuscript, Tuesdays to a report, and Wednesdays to grant proposals. This structured approach prevents feeling overwhelmed and ensures each project receives adequate attention. Regular review of my schedule allows me to adjust priorities as needed. Breaking down large tasks into smaller, more manageable sub-tasks is also crucial for maintaining momentum and preventing burnout.
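The Eisenhower Matrix mentioned above is essentially a two-question classification. Here is a minimal sketch, assuming each task is tagged with two yes/no flags. The `Task` class and `triage` function are hypothetical, and the quadrant actions ("Do first", "Schedule", "Delegate", "Drop") follow the common convention rather than any particular tool.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    urgent: bool
    important: bool


# Common labels for the four Eisenhower quadrants, keyed by (urgent, important).
QUADRANT_ACTIONS = {
    (True, True): "Do first",
    (False, True): "Schedule",
    (True, False): "Delegate",
    (False, False): "Drop",
}


def triage(tasks):
    """Group task names by quadrant action, most pressing quadrant first."""
    order = ["Do first", "Schedule", "Delegate", "Drop"]
    groups = {action: [] for action in order}
    for task in tasks:
        groups[QUADRANT_ACTIONS[(task.urgent, task.important)]].append(task.name)
    return groups


# Hypothetical usage with made-up writing tasks.
plan = triage([
    Task("Revise manuscript for resubmission", urgent=True, important=True),
    Task("Draft grant aims", urgent=False, important=True),
    Task("Reformat reference list", urgent=True, important=False),
    Task("Tweak slide colours", urgent=False, important=False),
])
```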

Q 26. Describe your experience with peer review processes.

Peer review is an integral part of the scientific process, and I have extensive experience with it, both as a reviewer and as an author. As a reviewer, I evaluate manuscripts rigorously, assessing their scientific merit, methodology, clarity, and originality. I provide constructive feedback aimed at improving the quality of the work.

As an author, I value constructive criticism from peer reviewers as it helps improve the quality and clarity of my own work. I carefully address all comments and concerns raised, providing detailed explanations of any changes made or reasons for not accepting a suggestion. I see the peer review process as an essential step in ensuring the validity and reliability of scientific findings. It’s a collaborative process that enhances the quality of scientific literature.

Q 27. What strategies do you employ to effectively communicate scientific findings?

Effective communication of scientific findings requires a multi-faceted approach. I use a combination of clear and concise writing, visual aids such as graphs and charts, and oral presentations tailored to the audience. I always strive to explain complex concepts in an accessible way, using analogies and real-world examples to illustrate key points. I also tailor my communication style to the audience; a presentation to a scientific conference will differ significantly from a report for a non-scientific stakeholder.

For instance, when presenting research findings to a funding agency, I emphasize the practical applications and potential impact of the work, highlighting its relevance to their mission. When presenting at a scientific conference, I focus on the methodological rigor and novelty of the research. In all cases, I aim for clear and concise communication that maximizes understanding and engagement.

Q 28. How do you deal with criticism of your work?

Criticism, when constructive, is an invaluable opportunity for growth and improvement. I approach criticism with a professional and open mind, carefully considering the points raised. Rather than taking criticism personally, I evaluate its validity and relevance. If the criticism is well-founded, I acknowledge the shortcomings and revise my work accordingly. If the criticism seems unfounded or biased, I carefully document my response and reasoning, providing evidence to support my position.

For example, if a reviewer criticizes a methodology, I will thoroughly review the methodology section of my manuscript, checking for potential errors or weaknesses. If errors are found, I will revise the methodology and clearly explain the revisions in a response letter to the editor. If I believe the criticism is unfounded, I will provide a detailed explanation as to why, backed by evidence from relevant literature or data. This process helps me refine my research and improve my ability to communicate it effectively.

Key Topics to Learn for Scientific Writing and Data Interpretation Interview

- Scientific Writing Principles: Understanding different scientific writing styles (e.g., IMRaD, narrative reviews), effective communication of complex information, and proper citation and referencing techniques.

- Data Visualization and Presentation: Creating clear and impactful visualizations (charts, graphs) to effectively communicate data trends and findings to diverse audiences. Practical application: Choosing the appropriate visualization type for different data sets and presenting data in a compelling and understandable manner.

- Statistical Analysis and Interpretation: Understanding basic statistical concepts (mean, median, standard deviation, p-values), interpreting statistical outputs, and effectively communicating statistical significance in written reports.

- Report Writing and Editing: Mastering the process of writing clear, concise, and grammatically correct scientific reports. Practical application: Identifying and correcting common grammatical and stylistic errors in scientific writing.

- Data Integrity and Quality Control: Understanding data quality issues, appropriate data cleaning and handling techniques, and the importance of maintaining data integrity throughout the research process.

- Ethical Considerations in Scientific Writing and Data Handling: Understanding principles of research integrity, plagiarism avoidance, and responsible data management.

- Technical Writing Skills: Ability to clearly and concisely explain complex technical concepts to a non-technical audience. Practical application: Creating effective summaries and explanations for diverse audiences.
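To make the statistical concepts listed above concrete, the sketch below computes descriptive statistics, a standardized effect size (Cohen's d with a pooled SD), and a two-sided permutation-test p-value for a difference in means. It uses only the Python standard library. The permutation test is one choice among several; a t-test is the more common textbook option. The function names and the sample data are hypothetical.

```python
import random
import statistics


def describe(values):
    """Basic descriptive statistics for one group."""
    return {
        "n": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "sd": statistics.stdev(values),  # sample SD (n - 1 denominator)
    }


def cohens_d(a, b):
    """Standardized mean difference (a minus b) using the pooled sample SD."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * statistics.variance(a)
                  + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    return (statistics.mean(a) - statistics.mean(b)) / pooled_var ** 0.5


def permutation_p_value(a, b, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in group means."""
    rng = random.Random(seed)
    observed = abs(statistics.mean(a) - statistics.mean(b))
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)  # randomly reassign observations to the two groups
        diff = abs(statistics.mean(pooled[:len(a)])
                   - statistics.mean(pooled[len(a):]))
        if diff >= observed:
            extreme += 1
    # The +1 correction avoids reporting an impossible p-value of exactly 0.
    return (extreme + 1) / (n_resamples + 1)


# Hypothetical measurements for two groups.
control = [5.1, 4.9, 5.3, 5.0, 5.2]
treatment = [6.0, 6.2, 5.8, 6.1, 5.9]
summary = describe(treatment)
d = cohens_d(treatment, control)
p = permutation_p_value(treatment, control)
```

When writing up results, reporting the effect size alongside the p-value (as in "p < 0.05, d = ...") tells readers both whether a difference is unlikely under chance and how large it is.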

Next Steps

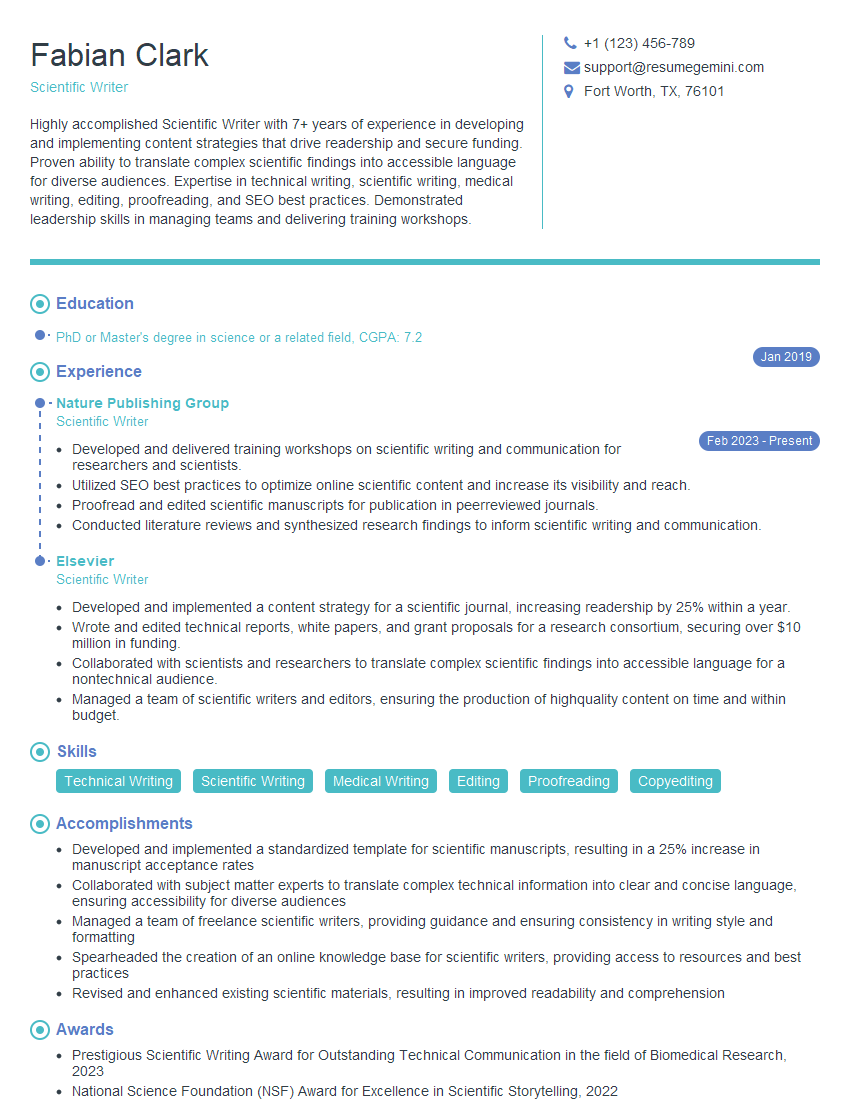

Mastering scientific writing and data interpretation is crucial for career advancement in research, academia, and industry. These skills are highly sought after, opening doors to diverse and rewarding opportunities. To maximize your job prospects, it’s essential to have a compelling and ATS-friendly resume that effectively highlights your abilities. ResumeGemini is a trusted resource for creating professional and impactful resumes. We provide examples of resumes tailored specifically to Scientific Writing and Data Interpretation positions, helping you showcase your skills and experience in the best possible light. Take the next step towards your dream career – build a winning resume with ResumeGemini!
