Are you ready to stand out in your next interview? Understanding and preparing for Cloud Microservices Architecture interview questions is a game-changer. In this blog, we’ve compiled key questions and expert advice to help you showcase your skills with confidence and precision. Let’s get started on your journey to acing the interview.

Questions Asked in Cloud Microservices Architecture Interview

Q 1. Explain the principles of microservices architecture.

Microservices architecture is a design approach where a large application is structured as a collection of small, autonomous services, each running in its own process and communicating with the others over a network. Think of it like a well-organized city, where each service is a specialized business (e.g., a bakery, a library, a post office), operating independently but contributing to the overall functionality of the city. The key principles are:

- Independent Deployability: Each service can be deployed, updated, and scaled independently without affecting others. This allows for faster release cycles and improved resilience.

- Decentralized Governance: Teams own and manage their services, fostering autonomy and agility.

- Organized around Business Capabilities: Services are designed around specific business functions, aligning technology with business goals.

- Smart Endpoints and Dumb Pipes: Services contain their own business logic and communicate via simple protocols, promoting loose coupling.

- Technology Diversity: Different services can be built using different technologies best suited for their specific needs, providing flexibility and choice.

- Design for Failure: Services are designed to detect failures quickly (fail fast) and degrade gracefully, minimizing the impact on other parts of the system.

Q 2. What are the advantages and disadvantages of using a microservices architecture?

Microservices offer several advantages, but also come with challenges:

Advantages:

- Increased Agility and Faster Deployment: Smaller, independent services are easier to develop, test, and deploy. Changes can be rolled out quickly without affecting the entire application.

- Improved Scalability and Resilience: Individual services can be scaled independently based on demand, optimizing resource utilization and improving fault tolerance. A failure in one service doesn’t necessarily bring down the entire system.

- Technology Diversity: The ability to use different technologies for different services enhances flexibility and allows teams to use the best tool for the job.

- Improved Team Autonomy: Smaller, focused teams can own and manage their services, leading to higher productivity and ownership.

Disadvantages:

- Increased Complexity: Managing a large number of services can be significantly more complex than a monolithic application, requiring robust monitoring, logging, and deployment tools.

- Data Consistency Challenges: Maintaining data consistency across multiple services requires careful planning and coordination. Distributed transactions can be difficult to manage.

- Inter-service Communication Overhead: Communication between services adds latency and complexity.

- Operational Overhead: Monitoring, logging, and debugging become more complex with more services.

Q 3. Describe different approaches to inter-service communication in a microservices architecture (e.g., REST, gRPC, message queues).

Several approaches exist for inter-service communication:

- REST (Representational State Transfer): A widely used architectural style that uses HTTP methods (GET, POST, PUT, DELETE) to interact with services. It’s relatively simple to implement and understand. Example using a hypothetical /users/{id} endpoint: GET /users/123

- gRPC: A high-performance, open-source RPC framework, originally developed at Google, that uses Protocol Buffers for efficient data serialization. It’s typically faster and more compact on the wire than JSON over REST, but requires more upfront setup. A service might be declared like this in a .proto definition: service UserService { rpc GetUser (UserRequest) returns (UserResponse); }

- Message Queues (e.g., Kafka, RabbitMQ): An asynchronous approach where services send messages to a queue, and other services consume messages from the queue. This decouples services and enhances resilience. Imagine ordering a product – the order service sends a message to the inventory service; the inventory service processes the message independently.

The choice depends on factors like performance requirements, data volume, and complexity of communication.
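To make the asynchronous option concrete, here is a minimal sketch of the order/inventory example. It assumes an in-process `queue.Queue` as a stand-in for a real broker such as RabbitMQ or Kafka; the names `order_events`, `place_order`, and `inventory_worker` are hypothetical.

```python
import queue
import threading

# Hypothetical in-process stand-in for a broker such as RabbitMQ or Kafka.
order_events = queue.Queue()
inventory = {"sku-1": 5}


def place_order(sku, quantity):
    """Order service: publish an event and return without waiting."""
    order_events.put({"sku": sku, "quantity": quantity})


def inventory_worker():
    """Inventory service: consume events at its own pace."""
    while True:
        event = order_events.get()
        if event is None:  # sentinel used in this sketch to stop the worker
            break
        inventory[event["sku"]] -= event["quantity"]


worker = threading.Thread(target=inventory_worker)
worker.start()
place_order("sku-1", 2)
place_order("sku-1", 1)
order_events.put(None)  # stop the consumer once the queued events are handled
worker.join()
print(inventory)
```

The producer never calls the consumer directly, so either side can be slow or restarted without the other noticing, which is the decoupling the message-queue approach is chosen for.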

Q 4. How do you handle data consistency across multiple microservices?

Data consistency across microservices is a crucial challenge. There isn’t a single solution, but several strategies are commonly employed:

- Saga Pattern: This pattern orchestrates a series of local transactions across multiple services. If any transaction fails, compensating transactions are executed to revert the changes. It’s like a multi-step process where each step needs to be confirmed, and if one fails, the others are rolled back.

- Event Sourcing: Instead of storing the current state of the data, events that modify the data are stored. This provides a complete audit trail and facilitates eventual consistency. It’s like tracking every change, allowing you to recreate the current state at any point.

- CQRS (Command Query Responsibility Segregation): Separates read and write operations. The read model can be optimized for fast queries, even if the write model involves eventual consistency. Think of separating the process of updating customer details from retrieving customer details, allowing you to optimize each independently.

- Database per Service: Each service owns its data, simplifying development and deployment, but requires careful planning for data sharing and consistency.

The best approach depends on the specific requirements and constraints of the application. Often, a combination of these patterns is used.
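As an illustration of event sourcing, the sketch below rebuilds an account balance by replaying stored events instead of reading a stored total. The event names (`deposited`, `withdrawn`) and the `replay` helper are assumptions made for the example, not part of any particular framework.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Event:
    kind: str    # "deposited" or "withdrawn" (hypothetical event names)
    amount: int


def apply(balance: int, event: Event) -> int:
    """Apply a single event to the current state."""
    if event.kind == "deposited":
        return balance + event.amount
    if event.kind == "withdrawn":
        return balance - event.amount
    raise ValueError(f"unknown event kind: {event.kind}")


def replay(events: List[Event], upto: Optional[int] = None) -> int:
    """Rebuild state by folding over the log; `upto` replays only a prefix."""
    balance = 0
    for event in events[:upto]:
        balance = apply(balance, event)
    return balance


log = [Event("deposited", 100), Event("withdrawn", 30), Event("deposited", 5)]
print(replay(log))          # current state
print(replay(log, upto=2))  # state as it was after the first two events
```

Because the log is append-only, the same events can also feed a separate read model, which is how event sourcing is commonly paired with CQRS.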

Q 5. Explain different strategies for service discovery in a microservices environment.

Service discovery allows services to locate and communicate with each other dynamically. Key strategies include:

- Service Registry (e.g., Consul, etcd, Eureka): Services register themselves with a central registry, and other services can query the registry to find the location of a particular service. It’s like a phone book for services.

- DNS-based Service Discovery: Uses DNS to resolve service names to IP addresses and ports. This approach integrates well with existing infrastructure. Imagine a DNS entry that automatically points to the IP address of the currently active service instance.

- Peer-to-Peer Discovery: Services discover each other directly without a central registry. This approach is more decentralized but can be more challenging to manage.

The choice depends on factors like scalability requirements, complexity, and existing infrastructure.
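The registry approach can be sketched in a few lines. This is a hypothetical in-memory `ServiceRegistry`, assuming instances send periodic heartbeats and are dropped from lookups once a time-to-live expires; real registries such as Consul, etcd, or Eureka are networked and replicated.

```python
import time


class ServiceRegistry:
    """Hypothetical in-memory registry used only to illustrate the idea."""

    def __init__(self, ttl_seconds=30.0, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._instances = {}  # service name -> {address: last heartbeat time}

    def register(self, service, address):
        """Register an instance, or refresh its heartbeat if already known."""
        self._instances.setdefault(service, {})[address] = self._clock()

    def lookup(self, service):
        """Return addresses whose last heartbeat is still within the TTL."""
        now = self._clock()
        entries = self._instances.get(service, {})
        return sorted(a for a, seen in entries.items() if now - seen <= self._ttl)


registry = ServiceRegistry(ttl_seconds=30.0)
registry.register("users", "10.0.0.1:8080")
registry.register("users", "10.0.0.2:8080")
print(registry.lookup("users"))
```

The injectable `clock` parameter is a design choice for testability: expiry can be exercised without sleeping.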

Q 6. How do you ensure fault tolerance and resilience in a microservices architecture?

Ensuring fault tolerance and resilience is critical in microservices architectures. Several strategies are essential:

- Circuit Breakers: Prevents cascading failures by stopping requests to a failing service after a certain number of failures. It’s like a safety valve, preventing further requests to a service until it recovers.

- Bulkhead Pattern: Isolates services from each other, limiting the impact of a failure to a specific subset of services. This is like compartmentalizing a ship to prevent a single breach from sinking the whole vessel.

- Retry Mechanisms: Automatically retries failed requests after a certain delay. This helps handle temporary network issues or service disruptions. It’s like automatically re-sending an email if the initial attempt fails.

- Health Checks: Regularly monitors the health of services and alerts when failures are detected. This allows for proactive identification and resolution of issues.

- Load Balancing: Distributes traffic across multiple instances of a service to prevent overload and ensure high availability.

Implementing these patterns often involves using tools and frameworks like service meshes (Istio, Linkerd).
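For teams not using a mesh, the circuit breaker idea can be approximated in application code. The following is a simplified sketch, not the behavior of any specific library: the thresholds, the `CircuitBreaker` class, and the `CircuitOpenError` exception are all assumptions made for illustration.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without attempting it."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; call skipped")
            # Cool-down elapsed: let one trial call through (half-open state).
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._failures >= self.max_failures:
                self._opened_at = self._clock()  # open (or re-open) the circuit
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

A caller would wrap each outbound request, for example `breaker.call(fetch_profile, user_id)` with a hypothetical `fetch_profile` function, and treat `CircuitOpenError` as a signal to return a fallback response.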

Q 7. Describe your experience with containerization technologies like Docker and Kubernetes.

I have extensive experience with Docker and Kubernetes. Docker provides containerization, packaging an application and its dependencies into an isolated unit. This ensures consistent execution across different environments. I’ve used Docker to build and deploy numerous microservices, streamlining the development and deployment process. I’ve leveraged Docker’s image building capabilities extensively, optimizing images for size and efficiency.

Kubernetes is an orchestration platform that automates the deployment, scaling, and management of containerized applications. I’ve used Kubernetes to manage clusters of nodes, scheduling pods (groups of one or more containers) based on resource requirements and availability. Features like rolling updates, self-healing, and service discovery have been crucial in building resilient and scalable microservices architectures. I’m proficient in using Kubernetes objects like Deployments, StatefulSets, and Services to manage the application lifecycle effectively. I have also worked with different network plugins, resource quotas, and persistent volumes.

Q 8. How do you monitor and log microservices in a production environment?

Monitoring and logging microservices in production is crucial for ensuring reliability and performance. It involves a multi-faceted approach, combining centralized logging, distributed tracing, and real-time monitoring dashboards. Think of it like having a comprehensive health check for each individual organ (microservice) and the entire body (system).

Centralized Logging: We typically use a centralized logging system such as the EFK stack (Elasticsearch, Fluentd, and Kibana), the ELK stack (which uses Logstash instead of Fluentd), or OpenSearch, an open-source fork of Elasticsearch. Each microservice sends its logs to this system, allowing us to aggregate and analyze them from a single point. This makes troubleshooting issues across multiple services far easier. For example, if a payment processing microservice fails, we can quickly pinpoint the root cause by examining its logs alongside logs from related services.

Distributed Tracing: To understand the flow of requests across multiple microservices, we leverage distributed tracing tools such as Jaeger or Zipkin. These tools inject unique identifiers into requests, tracking their journey through the entire system. This allows us to visualize the request path, identify bottlenecks, and pinpoint the source of latency issues. Imagine tracing a package as it moves through different shipping hubs – that’s essentially what distributed tracing does for requests in a microservice architecture.

Real-time Monitoring: We utilize monitoring tools like Prometheus and Grafana to monitor key metrics like CPU usage, memory consumption, and request latency for each microservice. Dashboards provide real-time visibility into the health and performance of the entire system. This gives us immediate alerts if any service deviates from expected performance levels.

Alerting: We configure alerts based on predefined thresholds for critical metrics. For example, if a service’s error rate exceeds a certain percentage, or its response time surpasses a specific limit, we receive immediate notifications, allowing for swift intervention and issue resolution.
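Centralized logs are far easier to correlate when every line carries a request identifier. The sketch below shows one possible way to do that with the standard `logging` module: a JSON formatter that stamps each record with a correlation ID held in a context variable. The field names and the `handle_request` function are hypothetical.

```python
import contextvars
import io
import json
import logging
import uuid

# Hypothetical context variable holding the ID of the request being handled.
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class JsonFormatter(logging.Formatter):
    """Emit one JSON object per log line so a log pipeline can index fields."""

    def format(self, record):
        return json.dumps({
            "level": record.levelname,
            "service": record.name,
            "correlation_id": correlation_id.get(),
            "message": record.getMessage(),
        })


def build_logger(service, stream):
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(service)
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


def handle_request(logger, incoming_id=None):
    """Reuse the caller's ID if one was passed along, otherwise start a new one."""
    request_id = incoming_id or uuid.uuid4().hex
    correlation_id.set(request_id)
    logger.info("payment authorized")
    return request_id


buffer = io.StringIO()
payments_logger = build_logger("payments", buffer)
handle_request(payments_logger, incoming_id="abc123")
print(buffer.getvalue().strip())
```

If each service forwards the same ID on outbound calls, searching the log store for that ID returns the whole request path, which complements what a tracing tool shows.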

Q 9. Explain your experience with API gateways and their role in microservices.

API gateways are essential components in a microservices architecture. They act as a reverse proxy, providing a single entry point for clients to access multiple backend microservices. Think of it as a concierge service for your microservices, handling authentication, routing, and security checks before forwarding requests to the appropriate service.

Key Roles:

- Request Routing: The API gateway routes incoming requests to the correct microservice based on the request path, headers, or other criteria.

- Authentication and Authorization: It handles authentication and authorization, ensuring that only authorized clients can access specific services. This centralizes security management, improving overall system security.

- Rate Limiting and Throttling: The gateway can enforce rate limits and throttle requests to prevent abuse or overload of individual microservices.

- Transformation and Protocol Translation: It can transform requests (e.g., JSON to XML) and translate between different communication protocols.

- Monitoring and Logging: The API gateway can log and monitor requests, providing valuable insights into system usage and performance.

Experience: In past projects, I’ve extensively used Kong and Apigee as API gateways. Kong, being open-source and highly customizable, allowed us to tailor its functionality to our specific needs. Apigee offered a more managed solution with robust features for enterprise-level deployments, including advanced security and analytics.
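Two of the gateway roles listed above, request routing and rate limiting, can be sketched without any gateway product. This is an illustrative approximation only: the route table, upstream addresses, and `TokenBucket` class are hypothetical and do not reflect how Kong or Apigee are configured.

```python
import time

# Hypothetical route table: path prefix -> upstream service address.
ROUTES = {
    "/users": "http://user-service:8080",
    "/orders": "http://order-service:8080",
    "/orders/reports": "http://reporting-service:8080",
}


def resolve_upstream(path):
    """Pick the upstream whose prefix is the longest match for the path."""
    matches = [p for p in ROUTES if path == p or path.startswith(p + "/")]
    if not matches:
        return None
    return ROUTES[max(matches, key=len)]


class TokenBucket:
    """Allows bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, capacity, rate, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self._clock = clock
        self._tokens = float(capacity)
        self._last = clock()

    def allow(self):
        now = self._clock()
        elapsed = now - self._last
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        self._last = now
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False
```

A gateway would typically keep one bucket per client key and reject requests with HTTP 429 when `allow()` returns False.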

Q 10. Describe your experience with CI/CD pipelines for microservices.

CI/CD (Continuous Integration/Continuous Delivery) pipelines are vital for efficiently deploying and managing microservices. A well-structured pipeline automates the build, test, and deployment process, ensuring rapid and reliable releases. It’s like an assembly line for software, streamlining the development cycle.

Components:

- Source Code Management: We use Git for version control, providing a central repository for all microservice code.

- Build Automation: Tools like Maven or Gradle automate the build process, compiling code, running tests, and packaging the application.

- Automated Testing: Unit tests, integration tests, and end-to-end tests ensure code quality and prevent regressions.

- Deployment Automation: Tools like Kubernetes or Docker Swarm automate the deployment process, managing container orchestration and scaling. This ensures seamless deployments to various environments (development, staging, production).

- Monitoring and Feedback: Integration with monitoring tools allows for real-time feedback on the deployment’s success and performance.

Example: In a recent project, we implemented a CI/CD pipeline using Jenkins, Docker, and Kubernetes. Each code commit triggered an automated build, test, and deployment process. This significantly reduced deployment time and improved the frequency of releases.

Q 11. How do you handle security concerns in a microservices architecture?

Security in a microservices architecture is paramount. Because of the distributed nature of microservices, it’s crucial to adopt a multi-layered security approach. Think of it as building a castle with multiple layers of defense.

Key Security Considerations:

- Authentication and Authorization: Implement robust authentication mechanisms (e.g., OAuth 2.0, JWT) to verify the identity of clients and authorize access to services. This can be handled by the API gateway or individually by each microservice.

- Data Encryption: Encrypt sensitive data both in transit and at rest using appropriate encryption techniques.

- Input Validation and Sanitization: Validate and sanitize all inputs to prevent injection attacks (e.g., SQL injection, cross-site scripting).

- Secure Communication: Use TLS (HTTPS) to encrypt communication between clients and microservices, and consider mutual TLS for traffic between the microservices themselves.

- Network Segmentation: Isolate microservices into separate networks or security zones to limit the impact of potential breaches.

- Regular Security Audits and Penetration Testing: Conduct regular security audits and penetration testing to identify vulnerabilities and address them proactively.

- Secrets Management: Securely store and manage sensitive information like API keys and database credentials using a secrets management solution.

Example: We used HashiCorp Vault in a previous project to manage sensitive information securely, preventing accidental exposure of credentials.
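To show what token-based authentication involves under the hood, here is a sketch of signing and verifying an HS256 JSON Web Token with only the standard library. It is meant for understanding the mechanism; in a real service a maintained JWT library is the safer choice, and the secret would come from a secrets manager rather than code. The function names are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url(data):
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text):
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_token(claims, secret):
    """Build header.payload.signature, signed with HMAC-SHA256."""
    header = _b64url(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url(json.dumps(claims).encode())
    signing_input = f"{header}.{payload}".encode("ascii")
    signature = hmac.new(secret, signing_input, hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url(signature)}"


def verify_token(token, secret, now=None):
    """Return the claims if the signature and expiry check out, else None."""
    try:
        header, payload, signature = token.split(".")
        signing_input = f"{header}.{payload}".encode("ascii")
        expected = hmac.new(secret, signing_input, hashlib.sha256).digest()
        # Constant-time comparison avoids leaking how many bytes matched.
        if not hmac.compare_digest(expected, _b64url_decode(signature)):
            return None
        if json.loads(_b64url_decode(header)).get("alg") != "HS256":
            return None
        claims = json.loads(_b64url_decode(payload))
    except (ValueError, TypeError):
        return None
    current = time.time() if now is None else now
    if claims.get("exp", 0) <= current:
        return None  # a missing or past "exp" claim is treated as expired here
    return claims
```

A gateway or service would call `verify_token` on the bearer token of each request and reject the request when it returns None.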

Q 12. Explain different approaches to database management in a microservices architecture (e.g., database per microservice, shared database).

Database management in a microservices architecture requires careful consideration. There are several approaches, each with its own trade-offs.

Database per Microservice: This approach assigns a dedicated database to each microservice. This offers strong data isolation, improving scalability and resilience. However, data that spans services can become inconsistent unless it is coordinated deliberately, for example with sagas or events. Think of each service having its own private filing cabinet. This is ideal when data needs are independent, but requires more management overhead.

Shared Database: In this approach, multiple microservices share a single database. This simplifies data management, but can lead to performance bottlenecks and increased coupling between services. Think of all services sharing the same filing cabinet; easier access, but with the risk of clutter and conflicts.

Hybrid Approach: Many architectures adopt a hybrid approach, combining aspects of both strategies. For example, closely related microservices might share a database, while others have their dedicated databases. This allows for flexibility and optimization based on specific needs.

Considerations: The choice depends on factors such as data consistency requirements, scalability needs, and the complexity of inter-service relationships. It is essential to carefully plan the database strategy to ensure data integrity and system performance.

Q 13. Describe your experience with different message brokers (e.g., Kafka, RabbitMQ).

Message brokers are essential for asynchronous communication between microservices. They provide a decoupled and reliable mechanism for exchanging messages, enhancing scalability and resilience. They are like a post office for your microservices, ensuring messages are delivered reliably.

Kafka: A high-throughput, distributed streaming platform. Ideal for handling a large volume of real-time data streams. Think of it as a high-speed railway for data.

RabbitMQ: A robust, feature-rich message broker supporting various messaging protocols (AMQP, MQTT, STOMP). Offers reliable message delivery and various queueing mechanisms. Think of it as a more versatile postal service, handling different types of packages.

Choosing a Broker: The choice depends on factors such as the volume of messages, the required message delivery guarantees, and the desired level of features. Kafka excels in high-throughput scenarios, while RabbitMQ offers greater flexibility and feature richness.

Experience: I’ve worked extensively with both Kafka and RabbitMQ in various projects. Kafka was preferred when dealing with large streams of real-time data, like event streams in a user activity tracking system. RabbitMQ was a better fit for scenarios where message ordering and guaranteed delivery were critical, such as in financial transaction processing.

Q 14. How do you design for scalability in a microservices architecture?

Designing for scalability in a microservices architecture is crucial for handling increasing traffic and data volumes. It involves several key strategies.

Horizontal Scaling: This involves adding more instances of a microservice to handle increased load. It’s like adding more checkout counters at a supermarket during peak hours. This is easily achievable with container orchestration platforms like Kubernetes.

Vertical Scaling: This involves upgrading the resources (CPU, memory) of existing microservice instances. It’s like upgrading your computer’s RAM to improve its performance. However, it’s less flexible than horizontal scaling and is capped by the largest machine available.

Database Scaling: Ensure your databases can scale to handle the increased data load. This can involve techniques like sharding or using cloud-based database solutions.

Asynchronous Communication: Use message brokers for asynchronous communication between microservices to reduce coupling and improve scalability. This prevents a single slow service from impacting the entire system.

Load Balancing: Distribute incoming traffic across multiple instances of a microservice using a load balancer. This ensures even distribution of load and prevents overload on individual instances.

Caching: Implement caching mechanisms (e.g., Redis, Memcached) to reduce database load and improve response times. This stores frequently accessed data in memory for faster retrieval.

Microservice Decomposition: Design microservices with clear boundaries and independent functionalities to allow for independent scaling of individual components.
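The caching strategy above is often implemented as the cache-aside pattern. The sketch below uses a plain dictionary with a time-to-live as a stand-in for Redis or Memcached; the `TTLCache` class and the `loader` callback are hypothetical names chosen for the example.

```python
import time


class TTLCache:
    """In-memory stand-in for an external cache such as Redis or Memcached."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        """Cache-aside: return a fresh cached value, else load and store it."""
        entry = self._entries.get(key)
        now = self._clock()
        if entry is not None and entry[0] > now:
            return entry[1]
        value = loader(key)  # cache miss: fall through to the slow source
        self._entries[key] = (now + self._ttl, value)
        return value
```

A service would pass its database query as the loader, for example `cache.get_or_load(product_id, load_product_from_db)` with a hypothetical `load_product_from_db` function, so repeated reads within the TTL never reach the database.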

Q 15. What are some common challenges encountered when implementing microservices?

Implementing microservices offers many benefits, but it also introduces unique challenges. Think of it like building with LEGOs – individual bricks are simple, but constructing a complex castle requires careful planning and execution.

- Increased Complexity: Managing a large number of independent services increases operational overhead. You have to monitor, deploy, and scale each service individually, leading to a more complex deployment pipeline.

- Distributed Tracing and Debugging: Tracing a request across multiple services can be difficult. A simple bug might span several services, making debugging time-consuming.

- Data Consistency: Maintaining data consistency across multiple services that might use different databases is crucial and often tricky. Transactions spanning multiple services need careful coordination.

- Inter-service Communication: Choosing the right communication pattern (synchronous vs. asynchronous) is vital for performance and resilience. Incorrect choices can lead to bottlenecks or cascading failures.

- Testing: Thoroughly testing a microservices architecture is complex, requiring unit, integration, and end-to-end tests. Each service needs its own tests, and integration tests require simulating interactions between services.

For example, imagine an e-commerce platform. A single monolithic application would handle everything – user accounts, product catalog, shopping cart, and payment processing. In a microservices architecture, these would be separate services. While this allows for independent scaling and deployment, it adds complexity in managing inter-service communication and ensuring data consistency between user accounts and order details.

Q 16. How do you handle versioning of microservices?

Versioning microservices is critical for maintaining backward compatibility and allowing for iterative updates. We typically employ several strategies:

- Semantic Versioning (SemVer): Using SemVer (MAJOR.MINOR.PATCH) clearly communicates changes in the service’s API. A major version bump signals breaking changes, while minor updates introduce new features, and patch releases address bug fixes. This allows consumers to understand the impact of an update and manage their dependencies effectively.

- API Gateway: An API gateway acts as a reverse proxy, handling routing and versioning. It can route requests to different versions of the service based on the request headers or path. This decouples consumers from the underlying service versions.

- Canary Deployments: Gradually rolling out a new version of a service to a small subset of users helps identify potential issues before a full deployment. This minimizes the risk of widespread disruption.

- Blue/Green Deployments: Deploying the new version alongside the older version allows for a quick rollback if issues arise. Once the new version is stable, traffic can be switched completely.

For instance, imagine a payment service. Version 1.0.0 might support only credit cards. A later release adds PayPal support without changing the existing API, so SemVer marks it as a MINOR change (1.1.0); a 2.0.0 release would be reserved for breaking changes. An API gateway could route requests to either version based on client configuration.
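The SemVer rule can be expressed as a small check that a consumer or deployment script might run before upgrading a dependency. This sketch handles only plain MAJOR.MINOR.PATCH strings and ignores pre-release and build metadata; the function names are hypothetical.

```python
def parse_version(text):
    """Split 'MAJOR.MINOR.PATCH' into a tuple of three integers."""
    major, minor, patch = (int(part) for part in text.split("."))
    return major, minor, patch


def classify_bump(old, new):
    """Return 'major', 'minor', 'patch', or 'none' for an upgrade old -> new."""
    o, n = parse_version(old), parse_version(new)
    if n < o:
        raise ValueError("new version is older than old version")
    if n[0] != o[0]:
        return "major"
    if n[1] != o[1]:
        return "minor"
    if n[2] != o[2]:
        return "patch"
    return "none"


def is_safe_upgrade(old, new):
    """Under SemVer, only a major bump is allowed to break existing consumers."""
    return classify_bump(old, new) != "major"


print(classify_bump("1.0.0", "1.1.0"))
print(is_safe_upgrade("1.1.0", "2.0.0"))
```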

Q 17. Explain your understanding of domain-driven design (DDD) and its application to microservices.

Domain-Driven Design (DDD) is an approach to software development that focuses on deeply understanding the business domain. It’s particularly valuable in microservices architectures because it helps define clear boundaries between services. Instead of starting with technical concerns, DDD emphasizes modeling the business domain’s concepts and relationships.

In microservices, each service should align with a specific bounded context within the domain. A bounded context is a clearly defined area of the domain with its own vocabulary and logic. For example, in an e-commerce system, ‘Order Management’ and ‘Inventory Management’ might be separate bounded contexts, each represented by a microservice.

DDD employs several key concepts like:

- Ubiquitous Language: A common vocabulary used by developers and domain experts to ensure clear communication and understanding.

- Entities: Objects with identity and lifecycle, like a ‘Customer’ or ‘Product’.

- Aggregates: Clusters of related entities treated as a single unit. Example: an ‘Order’ aggregate might include ‘Order Items’ and ‘Shipping Address’.

- Repositories: Abstractions for persistent storage of entities.

By applying DDD, we ensure that our microservices are well-defined, cohesive, and aligned with the business needs. It improves communication, reduces complexity, and promotes maintainability.
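The aggregate and repository concepts are easier to see in code. Below is a minimal sketch of the ‘Order’ aggregate from the list above, assuming two simple business rules (no changes after submission, no empty orders) that are invented for the example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class OrderItem:
    sku: str
    quantity: int
    unit_price_cents: int


@dataclass
class Order:
    """Aggregate root: every change to the items goes through this class."""
    order_id: str
    shipping_address: str
    items: List[OrderItem] = field(default_factory=list)
    submitted: bool = False

    def add_item(self, item: OrderItem) -> None:
        if self.submitted:
            raise ValueError("cannot modify a submitted order")
        if item.quantity <= 0:
            raise ValueError("quantity must be positive")
        self.items.append(item)

    def total_cents(self) -> int:
        return sum(i.quantity * i.unit_price_cents for i in self.items)

    def submit(self) -> None:
        if not self.items:
            raise ValueError("cannot submit an empty order")
        self.submitted = True


class InMemoryOrderRepository:
    """Repository: hides how aggregates are stored (a dict in this sketch)."""

    def __init__(self):
        self._orders = {}

    def save(self, order: Order) -> None:
        self._orders[order.order_id] = order

    def get(self, order_id: str) -> Order:
        return self._orders[order_id]
```

Keeping the rules inside the aggregate means other services cannot put an order into an invalid state by touching its items directly, which is what makes it a natural unit for a microservice boundary.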

Q 18. How do you perform testing in a microservices environment (unit, integration, end-to-end)?

Testing in a microservices environment is crucial and involves multiple levels:

- Unit Tests: These tests focus on individual components within a microservice. They are fast, isolated, and ensure the internal logic of each service functions correctly. We might use mocking frameworks to simulate dependencies.

- Integration Tests: These verify the interactions between different microservices. They require setting up a simplified environment, often using test containers or in-memory databases, to simulate the interactions between services. They ensure that services can communicate and exchange data correctly.

- End-to-End (E2E) Tests: These tests simulate real-world scenarios by exercising the entire system, spanning multiple microservices. They ensure the system works as intended from a user’s perspective. They are typically slower and more complex to set up, but essential for verifying the overall system functionality.

Example: In an e-commerce system, unit tests would verify individual functions of the ‘Order Service’ (e.g., creating an order). Integration tests would check the interaction between the ‘Order Service’ and the ‘Payment Service’. E2E tests would simulate a complete purchase flow, including user login, adding items to the cart, processing payment, and order confirmation.
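A unit test for the ‘Order Service’ might look like the sketch below, which replaces the payment dependency with a mock from the standard library. The `OrderService` class and its `charge` collaborator are hypothetical and exist only to give the tests something to exercise.

```python
import unittest
from unittest.mock import Mock


class OrderService:
    """Hypothetical service that depends on a payment client."""

    def __init__(self, payment_client):
        self._payments = payment_client

    def create_order(self, customer_id, amount_cents):
        if amount_cents <= 0:
            raise ValueError("amount must be positive")
        charge_id = self._payments.charge(customer_id, amount_cents)
        return {"customer_id": customer_id, "charge_id": charge_id, "status": "confirmed"}


class OrderServiceTest(unittest.TestCase):
    def test_create_order_charges_customer(self):
        payments = Mock()
        payments.charge.return_value = "ch_1"
        order = OrderService(payments).create_order("cust-7", 1500)
        self.assertEqual(order["status"], "confirmed")
        self.assertEqual(order["charge_id"], "ch_1")
        payments.charge.assert_called_once_with("cust-7", 1500)

    def test_rejects_non_positive_amount(self):
        payments = Mock()
        with self.assertRaises(ValueError):
            OrderService(payments).create_order("cust-7", 0)
        # The payment service must not be contacted for an invalid order.
        payments.charge.assert_not_called()
```

Saved to a file, these tests run with `python -m unittest`. An integration test would swap the mock for a real or containerized payment service.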

Q 19. What are some common patterns for handling transactions across microservices?

Handling transactions across microservices is a significant challenge. The traditional ACID properties (Atomicity, Consistency, Isolation, Durability) are difficult to maintain in a distributed environment. We typically employ these patterns:

- Saga Pattern: A saga is a sequence of local transactions, where each transaction updates a single service. If a step fails, compensating transactions undo the steps that already completed. This makes the system more resilient to failures.

- Two-Phase Commit (2PC): Provides strong consistency, but is less common in microservices because it is a blocking protocol: participants hold locks while waiting for the coordinator, which hurts performance and makes the coordinator a single point of failure.

- Message Queues (e.g., Kafka, RabbitMQ): Using message queues allows for asynchronous communication between services. This improves resilience and avoids blocking calls during failures.

- Eventual Consistency: This approach accepts that data consistency might not be immediate. Services update their data independently, and consistency is reached eventually through asynchronous communication. It is suitable for scenarios where immediate consistency is not crucial.

For example, an order placement involves updating the order database, inventory, and payment services. A saga would coordinate these updates, ensuring that a rollback mechanism exists if any part fails.
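The order placement scenario can be sketched as an orchestrated saga. This is a simplified, in-process illustration: each step is a pair of plain functions, whereas a real saga would call remote services and persist its progress. All names here are hypothetical.

```python
class SagaFailed(Exception):
    """Raised after a failed saga has run its compensations."""


def run_saga(steps):
    """Run (action, compensation) pairs in order.

    If an action fails, the compensations of the steps that already
    completed are executed in reverse order.
    """
    completed = []
    for action, compensation in steps:
        try:
            action()
        except Exception as exc:
            for undo in reversed(completed):
                undo()
            raise SagaFailed(str(exc)) from exc
        completed.append(compensation)


state = {"order": None, "stock": 5, "charged": 0}


def create_order():
    state["order"] = "pending"


def cancel_order():
    state["order"] = "cancelled"


def reserve_stock():
    state["stock"] -= 1


def release_stock():
    state["stock"] += 1


def charge_card():
    raise RuntimeError("card declined")  # simulated payment failure


def refund_card():
    state["charged"] = 0


try:
    run_saga([
        (create_order, cancel_order),
        (reserve_stock, release_stock),
        (charge_card, refund_card),
    ])
except SagaFailed as err:
    print("saga rolled back:", err)
print(state)
```

Note that the order ends up cancelled rather than deleted: compensations are new transactions that semantically undo earlier ones, not database rollbacks.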

Q 20. Explain the concept of eventual consistency and its implications for microservices.

Eventual consistency means that data consistency is achieved asynchronously over time, rather than immediately. Think of it like sending a letter – you don’t get an immediate response confirming its arrival, but you eventually expect the recipient to receive it. This is different from strong consistency where data is immediately consistent after an operation.

In microservices, eventual consistency is often preferred due to its resilience and scalability. It reduces the risk of blocking calls and improves system availability. However, it requires careful design to manage the implications:

- Data Synchronization Challenges: It’s essential to ensure that data eventually becomes consistent, even if there are delays or failures.

- Conflict Resolution: Mechanisms are needed to handle potential conflicts that might arise when multiple services update data asynchronously.

- UI Considerations: The user interface should clearly communicate the fact that data might not be immediately consistent.

Example: In a social media application, if you post a message, it might not immediately appear on all users’ feeds due to eventual consistency. It will eventually propagate to all users, potentially with a slight delay.
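One simple conflict resolution mechanism is last-writer-wins, sketched below. It assumes each replica stores values as `(timestamp, data)` pairs and that timestamps are comparable across services, which is itself a strong assumption in a distributed system; the `merge` function and sample data are hypothetical.

```python
def merge(replica_a, replica_b):
    """Last-writer-wins merge of two replicas.

    Each value is a (timestamp, data) tuple. Ties on timestamp are broken
    by comparing the data, so the result does not depend on argument order.
    """
    merged = dict(replica_a)
    for key, candidate in replica_b.items():
        if key not in merged or candidate > merged[key]:
            merged[key] = candidate
    return merged


profile_service = {"name": (1, "Ann"), "city": (5, "Oslo")}
billing_service = {"name": (3, "Anna"), "email": (2, "a@example.com")}

print(merge(profile_service, billing_service))
```

Because the merge gives the same result in either direction and is safe to repeat, replicas that exchange state in any order converge on the same data. The trade-off is that the older of two concurrent writes is silently discarded, so this approach suits data where that loss is acceptable.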

Q 21. Describe your experience with service mesh technologies (e.g., Istio, Linkerd).

Service mesh technologies like Istio and Linkerd provide a dedicated infrastructure layer for managing and securing communication between microservices. They typically run a ‘sidecar’ proxy alongside each service, handling tasks such as:

- Traffic Management: Routing, load balancing, and fault tolerance.

- Security: Authentication, authorization, and encryption of inter-service communication.

- Observability: Monitoring, tracing, and logging of service interactions.

- Resilience: Circuit breakers, retries, and timeouts to enhance service reliability.

My experience with Istio involves configuring traffic policies, setting up authentication using mutual TLS, and leveraging its tracing capabilities for debugging. Istio’s control plane provides centralized management of the entire service mesh, which simplifies operations. Linkerd, on the other hand, is known for its simplicity and performance. I have used Linkerd to establish a robust and efficient communication layer for a high-throughput microservices system, prioritizing minimal overhead and rapid deployment.

Using a service mesh significantly reduces the burden on individual microservices by offloading the concerns of communication management and security to the dedicated infrastructure. This allows development teams to focus on building business logic instead of tackling low-level infrastructure details.

Q 22. How do you choose the right technology stack for your microservices?

Choosing the right technology stack for microservices is crucial for long-term success. It’s not a one-size-fits-all solution; the ideal stack depends heavily on your specific application needs, team expertise, and business constraints. Think of it like choosing the right tools for a construction project – you wouldn’t use a sledgehammer to drive in a finishing nail.

My approach involves considering several key factors:

- Programming Language: The language should align with your team’s skills and the nature of the service. For example, Go is excellent for high-performance, concurrent services, while Node.js might be preferred for event-driven architectures. Java remains a strong choice for enterprise-grade applications due to its maturity and robust ecosystem.

- Framework: Frameworks provide structure and simplify development. Spring Boot (Java), .NET Core, and Micronaut are popular choices, offering features like dependency injection, configuration management, and built-in support for various protocols.

- Database: The database choice depends on the data’s nature and the service’s requirements. Relational databases (e.g., PostgreSQL, MySQL) are suitable for structured data, while NoSQL databases (e.g., MongoDB, Cassandra) are better for unstructured or semi-structured data. Consider factors like scalability, consistency, and data access patterns.

- Message Broker: For asynchronous inter-service communication, a message broker like Kafka or RabbitMQ is a common choice. It decouples producers from consumers, enabling better scalability and resilience.

- API Gateway: An API gateway acts as a single entry point for clients, managing routing, authentication, and security. Popular choices include Kong, Apigee, and AWS API Gateway.

For example, in a project involving high-volume real-time data processing, I might opt for a stack including Go, a lightweight framework like Fiber, a NoSQL database like Cassandra, and Kafka for inter-service communication. In contrast, a project focused on transactional data and requiring strong ACID properties might utilize Java, Spring Boot, a relational database like PostgreSQL, and a more traditional RESTful approach.

Q 23. Explain your experience with serverless computing and its relevance to microservices.

Serverless computing is a paradigm that fits well with microservices architecture. It lets you run individual microservices, or individual operations within them, as independent functions without managing the underlying servers. This simplifies operations, can reduce infrastructure costs, and improves scalability. Instead of provisioning and managing servers, you simply deploy your code, and the cloud provider handles the rest.

My experience with serverless involves using AWS Lambda, Azure Functions, and Google Cloud Functions. These platforms offer several advantages when applied to microservices:

- Reduced Operational Overhead: No server management is required; the platform handles scaling, patching, and maintenance.

- Cost Efficiency: You only pay for the compute time your functions consume, making it highly cost-effective for applications with fluctuating workloads.

- Improved Scalability: Serverless platforms automatically scale your functions based on demand, ensuring high availability and responsiveness.

- Faster Deployment: Deploying and updating functions is significantly faster than managing traditional servers.

For instance, in a recent project, we used AWS Lambda to process image uploads. Each image upload triggered a Lambda function, which performed image resizing and storage in S3. This serverless approach allowed us to handle peak loads without provisioning additional servers, leading to significant cost savings and improved performance.
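A minimal sketch of what such a function's entry point might look like, assuming the function is triggered by S3 event notifications. The `resize_and_store` callable is a hypothetical placeholder for the actual resizing and S3 write (not shown here), and `make_handler` is an illustrative helper, not part of any AWS SDK.

```python
from typing import Any, Callable
from urllib.parse import unquote_plus


def extract_uploads(event: dict[str, Any]) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs from an S3 event notification payload."""
    uploads = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        uploads.append((bucket, key))
    return uploads


def make_handler(resize_and_store: Callable[[str, str], None]):
    """Build a Lambda-style handler around a hypothetical resize step."""

    def handler(event: dict[str, Any], context: Any) -> dict[str, int]:
        uploads = extract_uploads(event)
        for bucket, key in uploads:
            resize_and_store(bucket, key)
        return {"processed": len(uploads)}

    return handler
```

Keeping the event parsing separate from the resize step means the handler can be tested locally with a fake event and a stub, without any cloud resources.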

Q 24. How do you manage dependencies between microservices?

Managing dependencies between microservices is critical to maintaining a healthy and manageable system. Tight coupling between services can lead to cascading failures and hinder independent deployment. My approach focuses on minimizing dependencies and utilizing asynchronous communication where possible.

Strategies I employ include:

- Asynchronous Communication: Using message queues (like Kafka or RabbitMQ) or event-driven architectures to decouple services. This allows services to communicate without direct dependencies, improving resilience and enabling independent scaling.

- API Design: Carefully designing well-defined APIs with clear contracts between services. Using versioning in APIs is also crucial for managing changes and preventing breaking changes to dependent services.

- Circuit Breakers: Implementing circuit breakers to prevent cascading failures. If a dependent service is unavailable, the circuit breaker prevents repeated requests, allowing the calling service to continue functioning.

- API Gateways: Using an API gateway for routing requests and managing dependencies. It can act as a single point of entry and also handle tasks such as authentication and rate limiting.

- Service Discovery: Employing service discovery mechanisms (like Consul or etcd) allows services to dynamically discover and communicate with each other without hardcoded addresses. This promotes flexibility and resilience.
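The circuit breaker strategy from the list above can be sketched as follows. This is a simplified illustration under stated assumptions (a consecutive-failure threshold and a fixed reset timeout), not the behavior of any particular library; `CircuitBreaker` and `CircuitOpenError` are hypothetical names.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(
        self,
        failure_threshold: int = 3,
        reset_timeout_s: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout_s = reset_timeout_s
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, operation: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout_s:
                # Open: fail fast instead of hitting the struggling dependency.
                raise CircuitOpenError("dependency marked unavailable")
            # Timeout elapsed: half-open, so let one trial call through.
        try:
            result = operation()
        except Exception:
            self._failures += 1
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()  # open (or re-open) the circuit
            raise
        # A success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

The calling service would catch `CircuitOpenError` and fall back to a default or cached response, which is what lets it keep functioning while the dependency recovers.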

For example, instead of Service A directly calling Service B synchronously, Service A sends a message to a queue, and Service B consumes the message asynchronously. This eliminates the direct dependency, allowing them to operate independently.
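Here is a small sketch of that Service A / Service B exchange. An in-process `queue.Queue` stands in for a real broker such as Kafka or RabbitMQ, and the function names and event shape are assumptions made for illustration only.

```python
import queue
import threading

STOP = object()  # sentinel telling the consumer to shut down


def service_a_place_order(events: queue.Queue, order_id: str) -> None:
    """Service A publishes an event and returns without waiting for Service B."""
    events.put({"type": "order_placed", "order_id": order_id})


def service_b_consume(events: queue.Queue, processed: list) -> None:
    """Service B handles events at its own pace until it sees the sentinel."""
    while True:
        message = events.get()
        if message is STOP:
            break
        processed.append(message["order_id"])


def run_demo() -> list:
    events: queue.Queue = queue.Queue()
    processed: list = []
    consumer = threading.Thread(target=service_b_consume, args=(events, processed))
    consumer.start()
    for order_id in ("A-1", "A-2"):
        service_a_place_order(events, order_id)
    events.put(STOP)
    consumer.join()
    return processed
```

Service A only knows the queue and the event format, so Service B can be redeployed, scaled, or briefly offline without Service A's code changing.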

Q 25. Describe your experience with different deployment strategies for microservices (e.g., blue/green, canary).

Deployment strategies are essential for minimizing downtime and ensuring smooth updates in a microservices environment. I have extensive experience with blue/green and canary deployments.

Blue/Green Deployment: In this strategy, you have two identical environments: ‘blue’ (currently serving production traffic) and ‘green’ (idle, where the new version is staged). You deploy the new version to the ‘green’ environment, thoroughly test it, and then switch traffic from ‘blue’ to ‘green’. If there are issues, you can quickly switch back to ‘blue’.

Canary Deployment: This is a more gradual approach. You deploy the new version to a small subset of users (the ‘canary’). You monitor its performance and behavior in real-world conditions before rolling it out to the entire user base. This helps identify issues early on and minimizes the impact of potential problems.

Example: In a recent project, we used a canary deployment to update a critical payment processing microservice. We deployed the new version to 1% of users, monitored key metrics (e.g., transaction success rate, latency), and gradually increased the rollout percentage based on the results.
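A minimal sketch of the two decisions behind such a rollout: which users see the canary, and whether to widen it. The thresholds, the step sequence, and the function names are all assumptions for illustration; in practice this logic often lives in a load balancer, service mesh, or deployment tool rather than in application code.

```python
import hashlib
from typing import Sequence


def in_canary(user_id: str, canary_percent: int) -> bool:
    """Deterministically place a user in the canary group by hashing their ID."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable bucket in 0..99
    return bucket < canary_percent


def next_rollout_percent(
    current: int,
    success_rate: float,
    p95_latency_ms: float,
    min_success_rate: float = 0.995,      # assumed threshold
    max_p95_latency_ms: float = 300.0,    # assumed threshold
    steps: Sequence[int] = (1, 5, 25, 50, 100),
) -> int:
    """Advance to the next step if metrics are healthy, otherwise drop to 0."""
    if success_rate < min_success_rate or p95_latency_ms > max_p95_latency_ms:
        return 0  # unhealthy canary: send all traffic back to the stable version
    for step in steps:
        if step > current:
            return step
    return current  # already fully rolled out
```

Hashing the user ID, rather than picking randomly per request, keeps each user on the same version for the duration of the rollout.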

Other strategies I’m familiar with include rolling updates, where instances are replaced a few at a time, and the matching rollback procedures. Each has its own advantages and disadvantages depending on the specific application and risk tolerance.

Q 26. How do you handle rollbacks in a microservices architecture?

Handling rollbacks in a microservices architecture requires a robust and well-planned strategy. It’s about quickly reverting to a known stable state when a deployment fails.

My approach involves:

- Version Control: Thorough version control of all microservices is paramount. Each deployment should have a unique version tag allowing easy rollback to previous versions.

- Automated Rollbacks: Implementing automated rollback mechanisms triggered by monitoring systems detecting issues such as high error rates or significant performance degradation.

- Infrastructure as Code (IaC): Using IaC (e.g., Terraform, CloudFormation) enables the automated creation and destruction of infrastructure, facilitating rapid rollbacks by recreating previous infrastructure states.

- Blue/Green or Canary Deployments: These strategies inherently support quick rollbacks by keeping a known good version readily available (the ‘blue’ environment, or the previous version still serving the majority of users).

- Detailed Logging and Monitoring: Extensive logging and monitoring are crucial to identify the root cause of deployment issues and make informed decisions during a rollback.

For instance, if a new version of a microservice causes a surge in errors, the monitoring system can automatically trigger a rollback, switching traffic back to the previous stable version. The detailed logs would aid in identifying the reason for the failure and resolving the issue for future deployments.
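The automated trigger described above might look like the following sketch. The 5% error-rate threshold, the `Deployment` class, and `check_and_rollback` are hypothetical; a real setup would wire the same decision into the monitoring and deployment tooling.

```python
from dataclasses import dataclass, field


@dataclass
class Deployment:
    """Tracks the version history of one service; the newest version is last."""

    service: str
    history: list[str] = field(default_factory=list)

    @property
    def live(self) -> str:
        return self.history[-1]

    def release(self, version: str) -> None:
        self.history.append(version)

    def rollback(self) -> str:
        if len(self.history) < 2:
            raise RuntimeError("no previous version to roll back to")
        self.history.pop()
        return self.live


def check_and_rollback(
    deployment: Deployment,
    errors: int,
    requests: int,
    max_error_rate: float = 0.05,  # assumed threshold
) -> bool:
    """Roll back when the observed error rate exceeds the threshold."""
    if requests == 0:
        return False  # no traffic yet, nothing to judge
    if errors / requests > max_error_rate:
        deployment.rollback()
        return True
    return False
```

Because every release carries a unique version tag, the rollback target is always unambiguous: it is simply the previous entry in the history.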

Q 27. Explain your understanding of observability in a microservices environment.

Observability in a microservices environment is crucial for understanding the system’s behavior, identifying issues, and ensuring optimal performance. It goes beyond simple monitoring and involves collecting and analyzing data from various sources to gain a comprehensive view of the system’s health.

Key aspects of observability in microservices include:

- Logging: Structured logging with consistent formats and contextual information is essential. Tools like the ELK stack (Elasticsearch, Logstash, Kibana) are commonly used.

- Metrics: Collecting key performance indicators (KPIs) such as request latency, error rates, and resource utilization. Prometheus and Grafana are popular choices for metrics collection and visualization.

- Tracing: Distributed tracing is vital for understanding request flow across multiple microservices. Tools like Jaeger and Zipkin provide end-to-end visibility into requests.

- Alerting: Setting up alerts based on critical metrics and events to enable proactive issue detection.

By combining logs, metrics, and traces, you can gain a holistic understanding of your system’s behavior, identify bottlenecks, and troubleshoot complex issues effectively. Imagine a car’s dashboard – it provides various metrics (speed, fuel level, engine temperature) for the driver to monitor its status. Observability in microservices is analogous to having a comprehensive dashboard for your entire system.
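As one small piece of that picture, here is a sketch of structured logging with a trace ID, using only Python's standard `logging` module. The field names (`service`, `trace_id`) and the helper `build_logger` are assumptions for illustration; a real system would usually take the trace ID from its tracing library rather than generate one by hand.

```python
import json
import logging
import uuid


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            # `service` and `trace_id` are supplied via the `extra` argument.
            "service": getattr(record, "service", "unknown"),
            "trace_id": getattr(record, "trace_id", None),
            "message": record.getMessage(),
        }
        return json.dumps(payload)


def new_trace_id() -> str:
    """Generate an ID to attach to every log line of a single request."""
    return uuid.uuid4().hex


def build_logger(name: str, stream=None) -> logging.Logger:
    """Create a logger that writes JSON lines to `stream` (stderr by default)."""
    logger = logging.getLogger(name)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger
```

A service would then log with `logger.info("order placed", extra={"service": "orders", "trace_id": trace_id})`, so that log lines from different services can be joined on the same `trace_id`.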

Q 28. Describe a time you had to debug a complex issue in a microservices architecture.

In a previous project involving an e-commerce platform, we encountered a performance bottleneck affecting the product catalog service. Initially, monitoring showed high latency, but the root cause wasn’t immediately apparent. This is where the power of observability came into play. By combining distributed tracing with metrics and logs, we discovered that a specific database query within the product catalog service was unexpectedly slow during peak hours.

The problem was that this query wasn’t optimized for the increased data volume during peak hours. It caused a ripple effect, impacting the overall performance of the entire platform. To solve this, we took several steps:

- Database Query Optimization: We added indexes to the database tables used by the query, significantly reducing the query execution time.

- Caching: We implemented caching to store frequently accessed product data, reducing the load on the database.

- Load Balancing: We adjusted the load balancer to better distribute traffic among multiple instances of the product catalog service.

- Capacity Planning: We increased the capacity of the database to handle future growth.

Through systematic investigation using distributed tracing and careful examination of logs and metrics, we successfully identified and resolved the issue, improving the performance and stability of the e-commerce platform. This highlighted the importance of observability in effectively troubleshooting complex problems in a microservices architecture.
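To illustrate the caching step, here is a minimal cache-aside sketch with a time-to-live. It is not the code from that project; `TTLCache`, the 60-second TTL in the usage note, and the `load` callback are hypothetical, and a production system would more likely use a shared cache than an in-process dictionary.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Cache-aside helper: serve fresh entries from memory, otherwise reload."""

    def __init__(self, ttl_s: float, clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl_s = ttl_s
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get(self, key: str, load: Callable[[str], Any]) -> Any:
        now = self._clock()
        cached = self._entries.get(key)
        if cached is not None and cached[0] > now:
            return cached[1]  # still fresh: skip the database entirely
        value = load(key)  # miss or expired: fall through to the slow query
        self._entries[key] = (now + self._ttl_s, value)
        return value
```

With `TTLCache(ttl_s=60.0)`, repeated requests for the same product within a minute hit the database once, at the cost of serving data that may be up to a minute stale.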

Key Topics to Learn for Cloud Microservices Architecture Interview

- Microservices Principles: Understand the core tenets of microservices architecture, including independent deployability, bounded contexts, and fault isolation. Explore the benefits and trade-offs compared to monolithic architectures.

- Design Patterns: Familiarize yourself with common design patterns used in microservices, such as API gateways, service discovery, and circuit breakers. Be prepared to discuss their practical application and implications.

- Containerization and Orchestration: Gain a strong understanding of Docker and Kubernetes. Be ready to discuss containerization strategies, orchestration techniques, and deployment pipelines.

- API Design and Communication: Master RESTful APIs and other communication protocols used between microservices. Discuss considerations for API versioning, security, and scalability.

- Data Management: Explore different strategies for data management in a microservices environment, including distributed databases, message queues, and event-driven architectures. Understand the challenges and solutions related to data consistency and transactions.

- Monitoring and Logging: Learn about effective monitoring and logging techniques for microservices. Discuss tools and strategies for identifying and resolving issues in a distributed system.

- Security Considerations: Understand the unique security challenges posed by microservices and how to address them, including authentication, authorization, and data encryption.

- Cloud Platforms: Gain practical experience with at least one major cloud platform (AWS, Azure, GCP) and their services relevant to microservices deployment and management.

- Testing and Deployment Strategies: Explore different testing methodologies (unit, integration, end-to-end) and deployment strategies (blue/green, canary) for microservices. Understand the importance of CI/CD pipelines.

- Problem-Solving and Troubleshooting: Be prepared to discuss how you would approach common challenges in a microservices environment, such as debugging distributed systems, handling failures, and ensuring scalability.

Next Steps

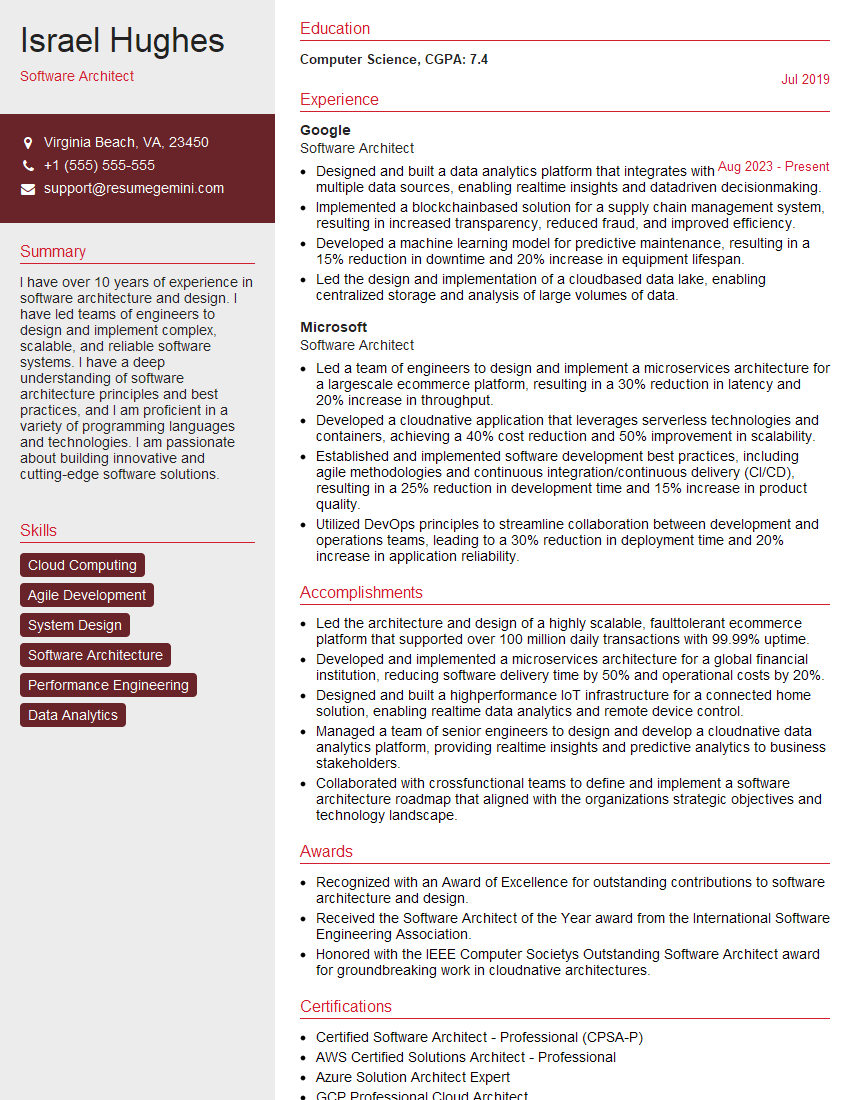

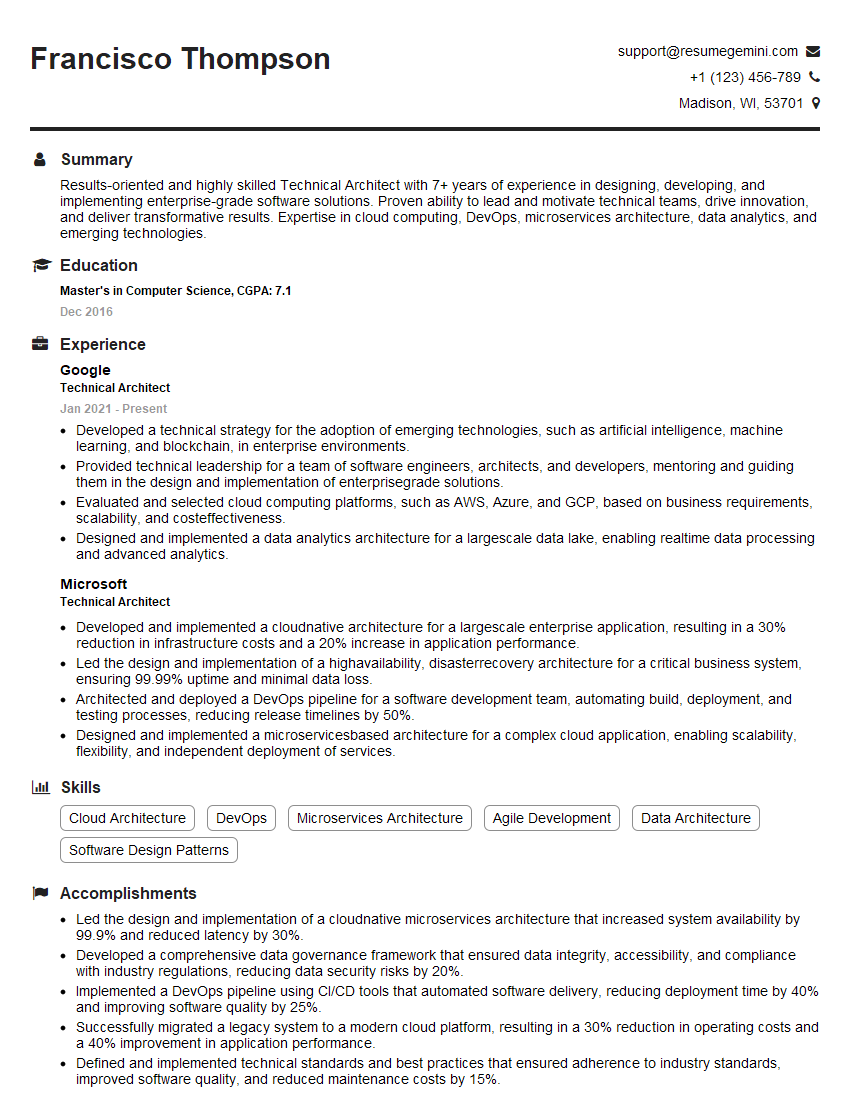

Mastering Cloud Microservices Architecture is crucial for career advancement in the ever-evolving tech landscape. It opens doors to high-demand roles and significantly increases your earning potential. To maximize your job prospects, create a compelling, ATS-friendly resume that highlights your skills and experience effectively. ResumeGemini is a trusted resource to help you build a professional resume that stands out. We provide examples of resumes tailored to Cloud Microservices Architecture to guide you. Take the next step towards your dream career today!
