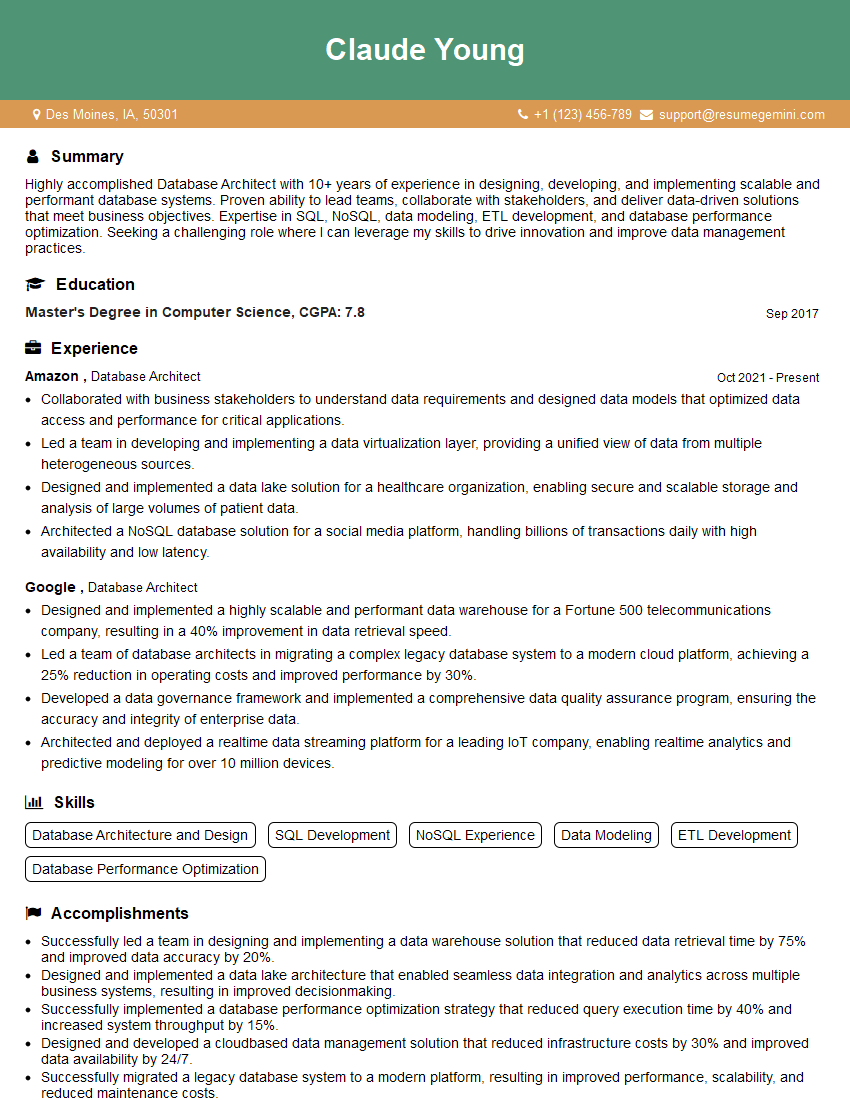

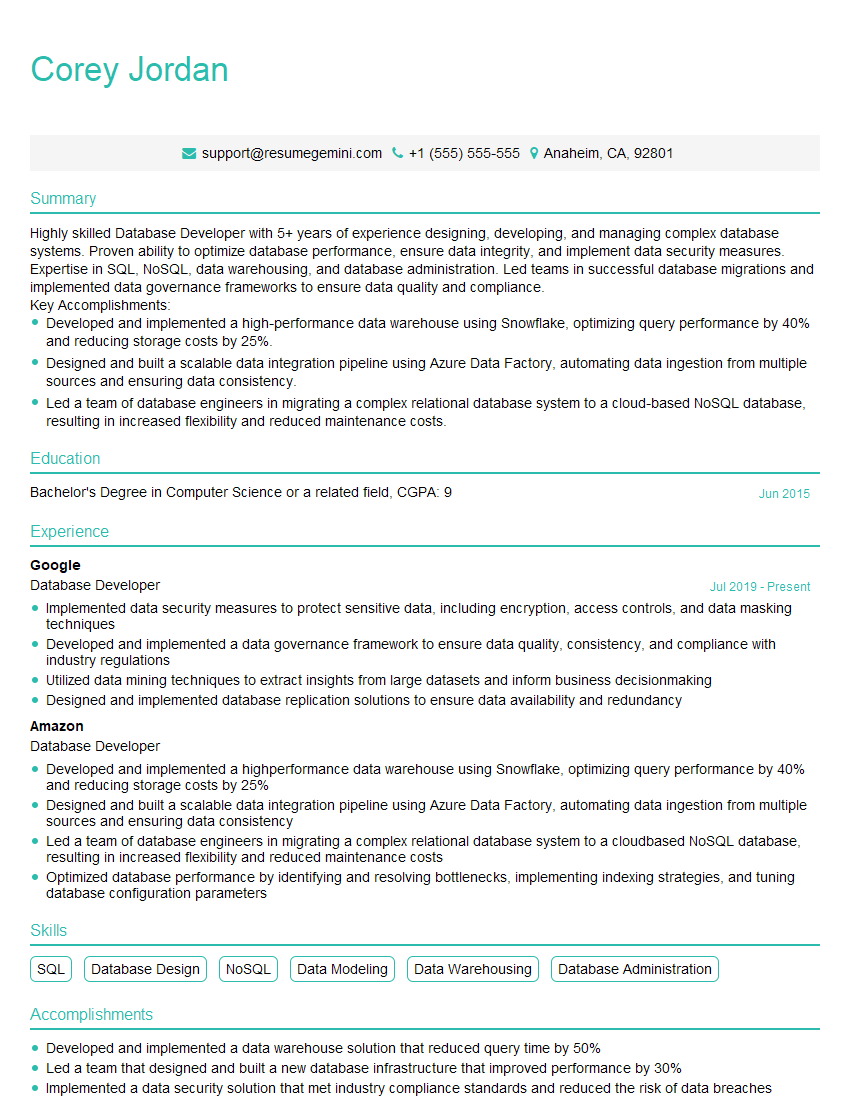

Interviews are opportunities to demonstrate your expertise, and this guide is here to help you shine. Explore the essential engineering database interview questions that employers frequently ask, paired with strategies for crafting responses that set you apart from the competition.

Questions Asked in Engineering Database Interviews

Q 1. Explain the difference between OLTP and OLAP databases.

OLTP (Online Transaction Processing) and OLAP (Online Analytical Processing) databases serve fundamentally different purposes. Think of OLTP as your bank’s daily transaction system – recording deposits and withdrawals in real-time. It prioritizes speed and efficiency for individual transactions. OLAP, on the other hand, is like a financial analyst’s dashboard, summarizing and analyzing vast amounts of historical data to identify trends. It prioritizes complex queries and analytical capabilities over individual transaction speed.

- OLTP: Designed for high-volume, short, simple transactions. Focuses on data integrity and concurrency control. Uses normalized database structures for efficient data storage.

- OLAP: Designed for complex analytical queries across large datasets. Focuses on data aggregation and summarization. Often uses denormalized structures for faster query performance. Commonly uses dimensional modeling techniques (star schemas, snowflake schemas).

Example: An e-commerce website uses an OLTP database to process orders, manage inventory, and update customer accounts. They might use an OLAP database to analyze sales trends, identify popular products, or understand customer purchasing behavior.
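
The contrast can be sketched in a few lines. This is a minimal illustration, assuming Python's built-in sqlite3 module stands in for both systems; the `orders` table and its columns are hypothetical, and a real deployment would usually keep the analytical copy in a separate warehouse.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, product TEXT, qty INTEGER, price REAL)"
)

# OLTP-style work: many short transactions, each touching a single row.
for product, qty, price in [("widget", 2, 5.0), ("gadget", 1, 20.0), ("widget", 3, 5.0)]:
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO orders (product, qty, price) VALUES (?, ?, ?)",
            (product, qty, price),
        )

# OLAP-style work: one query that scans and aggregates the whole history.
sales_by_product = conn.execute(
    "SELECT product, SUM(qty * price) AS revenue "
    "FROM orders GROUP BY product ORDER BY revenue DESC"
).fetchall()
print(sales_by_product)
```

The writes are small and frequent, while the read touches every row, which is why the two workloads are often served by differently organized stores.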

Q 2. Describe different types of database indexing techniques and their trade-offs.

Database indexing is like creating an index for a book – it makes finding specific information much faster. Different indexing techniques offer various trade-offs between speed and storage space.

- B-tree index: A balanced tree structure, excellent for range queries (e.g., finding all customers between ages 25 and 35). Efficient for both searching and updating data. Widely used as a default index type.

- Hash index: Uses a hash function to map keys to locations. Extremely fast for exact-match lookups but not suitable for range queries or sorted output, because hashing does not preserve key order. Inserts are usually cheap, but growing the hash table can trigger costly re-hashing.

- Full-text index: Used for searching within text data (e.g., finding documents containing specific keywords). Allows for complex search operations like wildcard searches and phrase matching.

- Spatial index: Designed to manage and query spatial data (e.g., location coordinates). Useful in applications like GIS and mapping.

Trade-offs: Indexes improve query speed but consume disk space and add overhead to data modification operations (insertions, updates, deletions). Choosing the right index type depends on the type of queries and the frequency of data modifications. Over-indexing can lead to performance degradation, so careful planning is crucial.
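
The effect of an index on a range query can be observed directly in the query plan. The sketch below assumes SQLite (via Python's sqlite3 module), whose ordinary indexes are B-trees; the `customers` table, the `idx_customers_age` name, and the `plan` helper are illustrative, and the exact plan wording differs between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, age INTEGER)")
conn.executemany(
    "INSERT INTO customers (name, age) VALUES (?, ?)",
    [(f"customer-{i}", 18 + i % 60) for i in range(1000)],
)

def plan(sql):
    """Return the human-readable detail column of SQLite's query plan."""
    return " | ".join(row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))

range_query = "SELECT name FROM customers WHERE age BETWEEN 25 AND 35"

plan_before = plan(range_query)  # no index yet: a scan of the whole table
conn.execute("CREATE INDEX idx_customers_age ON customers (age)")
plan_after = plan(range_query)   # the planner can now search via the index

print(plan_before)
print(plan_after)
```

The same index would slow down every insert and update on `age` slightly, which is the write-side cost described above.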

Q 3. What are ACID properties and why are they important?

ACID properties (Atomicity, Consistency, Isolation, Durability) are crucial for maintaining data integrity in database transactions, especially in financial systems or other applications where data accuracy is paramount. Think of them as the four pillars of reliable transactions.

- Atomicity: A transaction is treated as a single, indivisible unit. Either all changes within the transaction are committed, or none are (all-or-nothing). Imagine transferring money between accounts; either both accounts are updated correctly, or neither is.

- Consistency: Every transaction takes the database from one valid state to another. This involves enforcing constraints (e.g., ensuring a balance never goes negative). In the transfer example, the total across both accounts stays the same.

- Isolation: Concurrent transactions are isolated from each other; it appears as if each runs individually. This prevents conflicts or data corruption when multiple users access the same data simultaneously, and it stops one user from seeing another user’s partial updates.

- Durability: Once a transaction is committed, the changes are permanently stored and survive system failures. Even if the system crashes, the data is safe. This usually involves logging and redundancy mechanisms.

Importance: ACID properties ensure reliable and consistent data processing, even in high-concurrency environments, preventing data loss, inconsistency, and corruption. They are essential for maintaining trust and ensuring accuracy in data-driven applications.
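
Atomicity and consistency can be shown with the money-transfer example. This is a sketch under stated assumptions: SQLite through Python's sqlite3 module, a hypothetical `accounts` table, and a CHECK constraint standing in for the "balance never goes negative" rule.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    " name TEXT PRIMARY KEY,"
    " balance INTEGER NOT NULL CHECK (balance >= 0))"  # consistency rule
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
conn.commit()

def transfer(src, dst, amount):
    """Move money atomically; return True if committed, False if rolled back."""
    try:
        with conn:  # one transaction: commit on success, rollback on exception
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?", (amount, dst)
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = ?", (amount, src)
            )
        return True
    except sqlite3.IntegrityError:
        return False

def balances():
    return dict(conn.execute("SELECT name, balance FROM accounts"))

ok = transfer("alice", "bob", 30)         # fits within alice's balance
rejected = transfer("alice", "bob", 500)  # would overdraw alice, so nothing is applied
print(ok, rejected, balances())
```

In the rejected transfer the credit to bob runs first and is then undone by the rollback, so neither account changes.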

Q 4. How do you handle database concurrency issues?

Database concurrency issues arise when multiple users or processes access and modify the same data simultaneously. This can lead to data inconsistency or corruption. Several techniques help handle this:

- Locking: A simple yet effective mechanism. Different types of locks (shared, exclusive) control simultaneous access to data items. However, excessive locking can cause performance bottlenecks through lock contention, and it can lead to deadlocks.

- Optimistic Locking: Assumes conflicts are rare and only checks for conflicts at commit time. If a conflict is detected, the transaction is rolled back. More efficient than pessimistic locking when conflicts are infrequent.

- Pessimistic Locking: Assumes conflicts are frequent and locks data resources immediately. More restrictive but guarantees no conflicts.

- Multi-version concurrency control (MVCC): Maintains multiple versions of data, allowing transactions to read older versions without blocking each other. Effective in high-concurrency environments but more complex to implement.

Strategies: Choosing the right concurrency control mechanism depends on the application’s specific needs. For read-heavy, high-throughput systems, MVCC might be preferred. For applications where conflicting updates to the same rows are frequent, pessimistic locking might be suitable. A robust system may combine different techniques.
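
Optimistic locking is often implemented with a version column. The sketch below assumes SQLite via Python's sqlite3 module; the `items` table, the `version` column, and the helper names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, stock INTEGER, version INTEGER)")
conn.execute("INSERT INTO items VALUES (1, 10, 1)")
conn.commit()

def read_item(item_id):
    return conn.execute(
        "SELECT stock, version FROM items WHERE id = ?", (item_id,)
    ).fetchone()

def save_stock(item_id, new_stock, expected_version):
    """Write only if nobody changed the row since it was read."""
    with conn:
        cur = conn.execute(
            "UPDATE items SET stock = ?, version = version + 1 "
            "WHERE id = ? AND version = ?",
            (new_stock, item_id, expected_version),
        )
    return cur.rowcount == 1  # 0 rows updated means a conflicting write won

# Two writers read the same version, then both try to save.
stock_a, version_a = read_item(1)
stock_b, version_b = read_item(1)
first_write = save_stock(1, stock_a - 1, version_a)   # succeeds
second_write = save_stock(1, stock_b - 2, version_b)  # stale version, rejected
print(first_write, second_write, read_item(1))
```

The rejected writer would normally re-read the row and retry, which is cheap as long as conflicts stay rare.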

Q 5. Explain different database normalization forms.

Database normalization is the process of organizing data to reduce redundancy and improve data integrity. It involves splitting large tables into two or more smaller tables and defining relationships between them.

- 1NF (First Normal Form): Eliminates repeating groups of data within a table. Each column should contain only atomic values (indivisible values).

- 2NF (Second Normal Form): Builds upon 1NF and eliminates redundant data that depends on only part of the primary key (in tables with composite keys).

- 3NF (Third Normal Form): Builds upon 2NF and eliminates transitive dependency; no non-key column should depend on another non-key column.

- BCNF (Boyce-Codd Normal Form): A stricter version of 3NF, addressing certain anomalies not covered by 3NF.

- 4NF (Fourth Normal Form): Addresses multi-valued dependencies, which occur when a single attribute can have multiple values independently.

- 5NF (Fifth Normal Form): Addresses join dependencies, ensuring that data can be correctly retrieved through joins without redundancy.

Example: A contacts table that stores several addresses per contact, either in repeated column groups (street1, city1, state1, street2, ...) or packed into a single field, would violate 1NF. Normalization would split it into two tables: one for contacts and another for addresses, linked by a contact ID.
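
The contacts and addresses split can be sketched as a schema. This assumes SQLite via Python's sqlite3 module; the table and column names are illustrative.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE contacts (
        contact_id INTEGER PRIMARY KEY,
        name       TEXT NOT NULL
    );
    -- One row per address, so a contact can have any number of them.
    CREATE TABLE addresses (
        address_id INTEGER PRIMARY KEY,
        contact_id INTEGER NOT NULL REFERENCES contacts(contact_id),
        street     TEXT NOT NULL,
        city       TEXT NOT NULL,
        state      TEXT NOT NULL
    );
    INSERT INTO contacts VALUES (1, 'Ada'), (2, 'Grace');
    INSERT INTO addresses (contact_id, street, city, state) VALUES
        (1, '1 Main St', 'Springfield', 'IL'),
        (1, '9 Lake Rd', 'Madison', 'WI'),
        (2, '5 Oak Ave', 'Austin', 'TX');
""")

# The original wide view is recovered with a join on the contact ID.
rows = conn.execute(
    "SELECT c.name, a.city FROM contacts c "
    "JOIN addresses a ON a.contact_id = c.contact_id "
    "ORDER BY c.name, a.city"
).fetchall()
print(rows)
```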

Q 6. What is database sharding and when is it necessary?

Database sharding is a form of horizontal partitioning that splits a large database into smaller, more manageable parts called shards. Each shard is typically stored on a separate database server.

Necessity: Sharding becomes necessary when a single database server can no longer handle the volume of data or the transaction load. This is common in large-scale applications with massive user bases and high data growth rates.

Example: A social media platform with billions of users might shard its user database by geographic location. Users in North America are stored on one set of shards, those in Europe on another, and so on. This distributes the load and improves performance for queries targeting specific regions.

Considerations: Sharding introduces complexity in data management and query execution. Careful planning is required to ensure data consistency, handle shard distribution and routing, and avoid hotspots (shards with disproportionately high load). Consistent hashing is a common technique for distributing data across shards.
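
Consistent hashing can be sketched with a sorted ring of hash points. This is a simplified illustration in plain Python, not any particular database's router; the `HashRing` class, the shard names, and the choice of 100 virtual nodes per shard are assumptions.

```python
import bisect
import hashlib

class HashRing:
    """Minimal consistent-hash ring with virtual nodes."""

    def __init__(self, shards, vnodes=100):
        self._ring = []  # sorted list of (hash, shard) points
        for shard in shards:
            for i in range(vnodes):
                self._ring.append((self._hash(f"{shard}#{i}"), shard))
        self._ring.sort()
        self._hashes = [h for h, _ in self._ring]

    @staticmethod
    def _hash(key):
        return int(hashlib.sha256(key.encode()).hexdigest(), 16)

    def shard_for(self, key):
        # First point clockwise from the key's hash; wrap around at the end.
        idx = bisect.bisect(self._hashes, self._hash(key)) % len(self._ring)
        return self._ring[idx][1]

keys = [f"user-{i}" for i in range(1000)]
ring3 = HashRing(["shard-a", "shard-b", "shard-c"])
ring4 = HashRing(["shard-a", "shard-b", "shard-c", "shard-d"])

before = {k: ring3.shard_for(k) for k in keys}
after = {k: ring4.shard_for(k) for k in keys}
moved = [k for k in keys if before[k] != after[k]]
print(f"{len(moved)} of {len(keys)} keys moved after adding shard-d")
```

Adding a shard relocates only the keys that the new shard takes over, whereas a plain `hash(key) % shard_count` scheme would reshuffle most keys.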

Q 7. Describe your experience with SQL query optimization.

SQL query optimization is a crucial skill in database engineering. It involves identifying bottlenecks in queries and rewriting them for improved performance. My experience encompasses various techniques:

- Profiling: Using database profiling tools to identify slow-running queries and pinpoint the exact areas causing delays.

- Index tuning: Creating, modifying, or deleting indexes to improve query speed. Often involves analyzing query patterns and choosing appropriate index types.

- Query rewriting: Rewriting inefficient queries using more optimized SQL syntax. This may involve using joins effectively, replacing correlated subqueries with joins where the results are equivalent, or optimizing filter conditions.

- Database statistics: Understanding and utilizing database statistics to guide query optimization strategies.

- Query plan analysis: Examining the execution plan generated by the database to identify inefficient operations, like full table scans instead of index lookups.

- Caching: Effectively using query caching to reduce the need to execute queries repeatedly.

- Materialized views: Creating pre-computed views for frequently used aggregated data, improving response times for certain queries.

Example: I once worked on optimizing a query that was performing a full table scan on a large table. By analyzing the query plan and creating an appropriate index, I reduced query execution time by over 90%. This significantly improved overall application performance.
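
One common rewrite replaces a correlated subquery with a join and a single aggregation. The sketch below assumes SQLite via Python's sqlite3 module and hypothetical `customers` and `orders` tables; whether the rewrite is actually faster depends on the engine and data, so the execution plans should be compared before adopting it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
    INSERT INTO orders (customer_id, total) VALUES (1, 10.0), (1, 15.0), (2, 7.5);
""")

# Original form: the subquery is evaluated once per customer row.
correlated = """
    SELECT c.name,
           (SELECT COALESCE(SUM(o.total), 0) FROM orders o WHERE o.customer_id = c.id)
    FROM customers c ORDER BY c.name
"""

# Rewritten form: one join plus one aggregation pass.
joined = """
    SELECT c.name, COALESCE(SUM(o.total), 0)
    FROM customers c LEFT JOIN orders o ON o.customer_id = c.id
    GROUP BY c.id, c.name ORDER BY c.name
"""

result_correlated = conn.execute(correlated).fetchall()
result_joined = conn.execute(joined).fetchall()
print(result_joined)
```

Checking that both forms return identical rows, as done here, is a useful safety step before swapping a query in production.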

Q 8. How do you troubleshoot database performance issues?

Troubleshooting database performance issues involves a systematic approach. Think of it like diagnosing a car problem – you wouldn’t just start replacing parts randomly. You need to identify the root cause.

My process typically starts with monitoring. I use tools to track key metrics like query execution time, CPU usage, I/O wait times, and memory consumption. This provides a snapshot of the system’s health and often points to bottlenecks. For example, consistently high I/O wait times might indicate a storage problem, while high CPU usage could suggest inefficient queries.

Next, I analyze slow queries. Database systems usually have query logging and profiling capabilities. I examine these logs to identify queries taking excessive time. This often reveals poorly written queries, missing indexes, or table design issues. Optimizing these queries frequently yields dramatic performance improvements. For instance, I once worked on a project where a single poorly optimized query was causing significant slowdowns. By adding an appropriate index, query time decreased by over 90%.

If the problem isn’t query-related, I investigate the database configuration. Are the allocated resources (memory, CPU, disk space) sufficient? Are there any resource contention issues? Proper configuration is critical. I might need to adjust buffer pool size, increase the number of connections, or optimize the storage configuration. Incorrect configuration can lead to significant performance degradation.

Finally, I consider external factors. Are there any network issues affecting communication between the application and the database? Is the hardware itself a limiting factor? A slow network connection or failing hardware can mimic database performance problems. Always investigate the entire system, not just the database itself.

Q 9. Explain your experience with different NoSQL databases (e.g., MongoDB, Cassandra).

I have extensive experience with both MongoDB and Cassandra, two prominent NoSQL databases. My work with MongoDB focused primarily on document-oriented data modeling. I’ve used it in projects requiring flexible schemas and rapid prototyping. For instance, I leveraged MongoDB’s aggregation framework for building complex analytics dashboards. Its ease of use and scalability made it ideal for these applications.

With Cassandra, I’ve focused on building highly scalable, distributed systems. Its strengths lie in its fault tolerance and horizontal scalability. I used Cassandra in a project where we needed to handle millions of concurrent writes with minimal downtime. Its distributed nature and replication capabilities proved invaluable in ensuring data availability and consistency even during hardware failures. I also have experience with schema management in Cassandra, ensuring consistency across the distributed nodes using CQL.

Beyond these, I’ve also worked with other NoSQL databases like Redis (primarily for caching) and Neo4j (for graph databases) depending on project-specific needs. The choice of the database is always driven by the specific requirements of the application.

Q 10. What are the advantages and disadvantages of using NoSQL databases?

NoSQL databases offer several advantages, but also come with certain trade-offs. Think of it like choosing between a sports car and an SUV – each excels in different areas.

- Advantages:

  - Scalability: NoSQL databases are typically designed for horizontal scalability, meaning you can easily add more servers to handle increasing data volumes and traffic.

  - Flexibility: They often offer flexible schema designs, allowing for easier adaptation to evolving data structures.

  - Performance: For specific types of workloads (like high-volume writes), NoSQL databases can outperform relational databases.

- Disadvantages:

  - Data Consistency: Maintaining strong data consistency across multiple nodes can be challenging in some NoSQL systems.

  - Limited Query Capabilities: Compared to SQL databases, the query capabilities of NoSQL databases are often more limited, making complex data analysis more difficult.

  - Data Modeling: Designing effective data models in NoSQL requires a different approach than with relational databases. This can lead to challenges if not handled carefully.

The optimal choice depends heavily on the application’s requirements. If scalability and flexibility are paramount, a NoSQL database might be preferable. However, if strong data consistency and complex querying are crucial, a relational database might be a better fit.

Q 11. How do you choose the right database for a specific application?

Selecting the right database involves careful consideration of several factors. There’s no one-size-fits-all answer; the best database depends entirely on your application’s needs.

I typically start by understanding the application’s requirements: What type of data will be stored? What kind of queries will be performed? What are the scalability and performance requirements? What are the consistency requirements? How important is ACID compliance (Atomicity, Consistency, Isolation, Durability)? Then I consider the following:

- Data Model: Is the data relational (with well-defined relationships between entities) or non-relational (more flexible, schema-less)? This often dictates whether a relational (SQL) or NoSQL database is more appropriate.

- Scalability Needs: Will the application need to handle large volumes of data and high traffic? If so, a horizontally scalable database (like Cassandra or MongoDB) might be necessary.

- Transaction Requirements: Does the application require strict ACID compliance for transactional integrity? If so, a relational database is typically a better choice.

- Query Complexity: How complex are the queries likely to be? Relational databases often provide more powerful querying capabilities.

- Cost and Maintenance: Consider the cost of licensing, infrastructure, and ongoing maintenance.

By carefully weighing these factors, I can make an informed decision about the most appropriate database technology for the given application. In some cases, a hybrid approach might be the most effective, utilizing different database systems for various aspects of the application.

Q 12. Describe your experience with database replication and high availability.

Database replication and high availability are critical for ensuring data durability and application uptime. Imagine a bank’s database – downtime would be catastrophic. Replication creates multiple copies of the database on different servers, while high availability ensures the application remains accessible even if one or more servers fail.

My experience includes implementing various replication strategies, including synchronous and asynchronous replication. Synchronous replication provides stronger consistency but adds latency to every write; asynchronous replication prioritizes performance, but a replica can lag behind, so the most recent commits may be lost if the primary fails. The choice depends on the application’s requirements. I’ve worked with both primary-replica (master-slave) and multi-master configurations, selecting the best approach based on the specific needs of the application.

High availability is achieved through techniques like clustering and load balancing. I have experience using tools and technologies that automatically failover to a backup server in case of a primary server failure. This ensures minimal downtime and maintains application availability. Monitoring is a crucial part of this process; I implemented systems to constantly monitor the health of the database servers and trigger failovers automatically when needed.

For example, in one project we used a clustered setup with asynchronous replication to handle a large volume of transactions with high availability. The automatic failover mechanism ensured that even when a server failed, application downtime was measured in seconds, not minutes or hours.
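
The trade-off between synchronous and asynchronous replication can be illustrated with a toy model. This is plain Python with hypothetical `Primary` and `Replica` classes, not a real replication protocol; it only shows which acknowledged writes survive when the primary is lost.

```python
class Replica:
    def __init__(self):
        self.data = {}

    def apply(self, key, value):
        self.data[key] = value

class Primary:
    """Toy primary that ships each write to one replica."""

    def __init__(self, replica, synchronous):
        self.data = {}
        self.replica = replica
        self.synchronous = synchronous
        self.pending = []  # writes acknowledged but not yet replicated

    def write(self, key, value):
        self.data[key] = value
        if self.synchronous:
            self.replica.apply(key, value)     # wait for the replica, then acknowledge
        else:
            self.pending.append((key, value))  # acknowledge now, ship later

    def ship_pending(self):
        for key, value in self.pending:
            self.replica.apply(key, value)
        self.pending.clear()

sync_replica, async_replica = Replica(), Replica()
sync_primary = Primary(sync_replica, synchronous=True)
async_primary = Primary(async_replica, synchronous=False)

for primary in (sync_primary, async_primary):
    primary.write("order-1", "paid")

# Suppose both primaries crash here, before the async one ships its backlog.
sync_survives = sync_replica.data.get("order-1")
async_survives = async_replica.data.get("order-1")
print(sync_survives, async_survives)
```

After failover, the synchronous replica still has the write while the asynchronous one does not, which is the window of potential loss that an RPO target has to account for.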

Q 13. Explain your experience with database backup and recovery procedures.

Database backup and recovery procedures are essential for protecting data from loss or corruption. Regular backups are like insurance for your valuable data. My experience covers a wide range of techniques, including full, incremental, and differential backups. The best strategy depends on factors like the size of the database, recovery time objectives (RTO), and recovery point objectives (RPO).

Full backups create a complete copy of the database, while incremental backups only capture changes since the last full or incremental backup. Differential backups capture changes since the last full backup. A combination of these strategies is frequently used to optimize both storage space and recovery time. I’ve used both physical and logical backups, selecting the appropriate method based on the database system and application requirements.

Recovery procedures are equally crucial. I’ve developed and tested recovery plans for various scenarios, including hardware failures, software crashes, and accidental data deletion. This involves creating and regularly testing recovery procedures, ensuring that they can restore the database to a consistent state in a timely manner. This testing is critical – a plan is useless if it doesn’t work in practice. Regular drills ensure the team is familiar with the process and can respond effectively during an actual emergency.
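
A backup-and-restore drill can be sketched with SQLite's online backup API, exposed in Python as `sqlite3.Connection.backup`. In-memory databases and the `invoices` table are stand-ins here; a production drill would target the real database system's own backup tooling.

```python
import sqlite3

source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE invoices (id INTEGER PRIMARY KEY, amount REAL)")
source.executemany("INSERT INTO invoices (amount) VALUES (?)", [(10.0,), (20.5,), (3.25,)])
source.commit()

# Full backup: copy every page of the live database into another database.
backup = sqlite3.connect(":memory:")
source.backup(backup)

# Simulate accidental data loss after the backup was taken.
source.execute("DELETE FROM invoices")
source.commit()

# Recovery drill: restore from the backup, then verify the result.
backup.backup(source)
restored_count, restored_total = source.execute(
    "SELECT COUNT(*), SUM(amount) FROM invoices"
).fetchone()
integrity = source.execute("PRAGMA integrity_check").fetchone()[0]
print(restored_count, restored_total, integrity)
```

The verification step matters as much as the copy: a drill passes only when row counts, totals, and integrity checks match expectations.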

Q 14. How do you ensure data integrity in a database?

Ensuring data integrity is paramount – it’s about guaranteeing the accuracy, consistency, and validity of the data. This involves several layers of defense.

Firstly, I use constraints and validation rules within the database itself. This includes things like data type constraints (e.g., ensuring a field is a number, not text), check constraints (e.g., ensuring a value is within a certain range), and foreign key constraints (e.g., ensuring relationships between tables are valid). These constraints help prevent incorrect data from being entered into the database in the first place.

Secondly, I employ stored procedures and triggers. Stored procedures help encapsulate data access logic and ensure consistent data modification. Triggers automatically execute before or after data modification events, allowing for additional validation or auditing. For example, a trigger could prevent deleting a customer if they have outstanding orders.

Thirdly, robust testing and quality assurance (QA) are essential. Thorough testing of the application and database interactions helps uncover potential data integrity issues before they impact production systems. This includes unit tests, integration tests, and user acceptance testing.

Finally, regular auditing and monitoring are crucial. Monitoring the database for anomalies and performing regular audits help detect and correct integrity problems early on. This proactive approach helps maintain the long-term integrity of the database.
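
The constraint and trigger layers can be sketched together. This assumes SQLite via Python's sqlite3 module; the tables, the trigger name, and the `rejected` helper are hypothetical, and the error message wording varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE orders (
        id          INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        quantity    INTEGER NOT NULL CHECK (quantity BETWEEN 1 AND 100),
        status      TEXT NOT NULL DEFAULT 'open'
    );
    CREATE TRIGGER keep_customers_with_open_orders
    BEFORE DELETE ON customers
    WHEN EXISTS (SELECT 1 FROM orders WHERE customer_id = OLD.id AND status = 'open')
    BEGIN
        SELECT RAISE(ABORT, 'customer has outstanding orders');
    END;
    INSERT INTO customers VALUES (1, 'Ada');
    INSERT INTO orders (customer_id, quantity) VALUES (1, 2);
""")

def rejected(sql):
    """Return the error message if the statement is refused, else None."""
    try:
        with conn:
            conn.execute(sql)
        return None
    except sqlite3.DatabaseError as exc:
        return str(exc)

bad_range = rejected("INSERT INTO orders (customer_id, quantity) VALUES (1, 0)")
bad_reference = rejected("INSERT INTO orders (customer_id, quantity) VALUES (99, 1)")
bad_delete = rejected("DELETE FROM customers WHERE id = 1")
print(bad_range, bad_reference, bad_delete, sep="\n")
```

Each bad statement is refused by the database itself, so the rules hold even if an application forgets to validate its input.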

Q 15. Describe your experience with database security best practices.

Database security is paramount. My experience encompasses a multi-layered approach, prioritizing prevention and detection. This includes implementing robust access controls, using strong passwords and multi-factor authentication, regularly patching the database system and underlying operating system, and adhering to the principle of least privilege – granting users only the necessary permissions.

I’ve worked extensively with encryption, both at rest and in transit, using technologies like TLS/SSL for secure communication and Transparent Data Encryption (TDE) for protecting data stored on disk. Regular security audits, including vulnerability scans and penetration testing, are crucial. I’ve actively participated in these exercises, using tools like Nessus and Burp Suite, identifying weaknesses, and remediating vulnerabilities before they can be exploited. Furthermore, I’m experienced in monitoring database activity for suspicious behavior using security information and event management (SIEM) systems, proactively detecting and responding to potential threats.

For example, in a previous role, we migrated a legacy system to a cloud-based database. A key component of this migration was implementing encryption at rest and in transit, coupled with robust network security measures, to ensure compliance with industry regulations and protect sensitive customer data. We also implemented a robust logging system to track database activity and facilitate security auditing.

Q 16. What is a database transaction and how does it work?

A database transaction is a sequence of database operations performed as a single logical unit of work. Think of it like a bank transaction: either all the operations succeed, or none do. This ensures data consistency and integrity, even in the event of failures.

Transactions are governed by the ACID properties:

- Atomicity: The entire transaction is treated as a single, indivisible unit. Either all changes are committed, or none are.

- Consistency: The transaction maintains the integrity of the database. It starts in a valid state and ends in a valid state.

- Isolation: Concurrent transactions appear to be executed sequentially, preventing interference between them. This is crucial for preventing race conditions and data corruption.

- Durability: Once a transaction is committed, the changes are permanent and survive even system failures.

Transactions are managed using SQL commands like BEGIN TRANSACTION, COMMIT, and ROLLBACK; the exact spelling varies by system (for example, BEGIN or START TRANSACTION). BEGIN TRANSACTION starts the transaction, COMMIT saves the changes permanently, and ROLLBACK undoes all changes made within the transaction if any errors occur.

For example, transferring money from one account to another requires a transaction. If the debit from one account succeeds but the credit to the other fails, the transaction would be rolled back, ensuring the system remains consistent.
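
The commands can be issued explicitly to see their effect. This sketch assumes SQLite via Python's sqlite3 module, where the statement is spelled `BEGIN`; the `accounts` table and the simulated failure are illustrative.

```python
import sqlite3

# isolation_level=None stops the sqlite3 module from opening transactions
# implicitly, so the BEGIN / COMMIT / ROLLBACK statements below are explicit.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE accounts (name TEXT PRIMARY KEY, balance INTEGER)")
conn.execute("INSERT INTO accounts VALUES ('alice', 100), ('bob', 50)")

def balance(name):
    return conn.execute(
        "SELECT balance FROM accounts WHERE name = ?", (name,)
    ).fetchone()[0]

# Transaction 1: debit and credit both succeed, so the work is committed.
conn.execute("BEGIN")
conn.execute("UPDATE accounts SET balance = balance - 40 WHERE name = 'alice'")
conn.execute("UPDATE accounts SET balance = balance + 40 WHERE name = 'bob'")
conn.execute("COMMIT")

# Transaction 2: the debit runs, then the credit step "fails" (simulated),
# so the whole unit of work is undone.
conn.execute("BEGIN")
conn.execute("UPDATE accounts SET balance = balance - 40 WHERE name = 'alice'")
balance_inside_txn = balance("alice")  # the uncommitted debit is visible to this connection
conn.execute("ROLLBACK")

print(balance_inside_txn, balance("alice"), balance("bob"))
```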

Q 17. Explain your experience with database migration.

Database migration involves moving data and schemas from one database system to another. This can be a complex process, requiring careful planning and execution. My experience involves various strategies, from simple data exports/imports to sophisticated schema transformation and data synchronization techniques.

I’ve worked with different tools and techniques, including:

- Data Export/Import: Using native database tools for simple migrations.

- Schema Transformation: Using scripting languages (e.g., Python) or specialized tools to convert schemas between different database systems.

- Data Synchronization: Using change data capture (CDC) mechanisms to maintain consistency between source and target databases after the initial migration.

- Third-party Migration Tools: Utilizing specialized tools designed for database migrations, often providing features for schema comparison, data transformation, and testing.

A key aspect is thorough testing to validate data integrity and application functionality post-migration. I typically employ a phased approach, starting with a pilot migration to a staging environment before deploying to production. Rollback strategies are essential to mitigate potential problems during the migration process. For example, during one project, we migrated a large Oracle database to a cloud-based PostgreSQL instance using a combination of scripting and a third-party migration tool, successfully completing the migration with minimal downtime.
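
Post-migration validation often starts with comparing row counts and content fingerprints between source and target. The sketch below uses two in-memory SQLite databases as stand-ins; `table_fingerprint` and `make_db` are hypothetical helpers, and a migration between different engines would first need values normalized (dates, decimals, encodings) so that equal data hashes equally.

```python
import hashlib
import sqlite3

def table_fingerprint(conn, table, key_column):
    """Return (row_count, digest) for a table, reading rows in key order."""
    digest = hashlib.sha256()
    count = 0
    # Table and column names cannot be bound as parameters; they are
    # trusted constants in this sketch.
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key_column}"):
        digest.update(repr(row).encode())
        count += 1
    return count, digest.hexdigest()

def make_db(rows):
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)", rows)
    conn.commit()
    return conn

rows = [(1, "a@example.com"), (2, "b@example.com"), (3, "c@example.com")]
source = make_db(rows)
good_target = make_db(rows)
bad_target = make_db(rows[:2] + [(3, "C@example.com")])  # one value altered

source_fp = table_fingerprint(source, "users", "id")
print(source_fp == table_fingerprint(good_target, "users", "id"))
print(source_fp == table_fingerprint(bad_target, "users", "id"))
```

A matching count with a mismatching digest, as in the second comparison, points to altered values rather than missing rows.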

Q 18. How do you handle large datasets in a database?

Handling large datasets efficiently requires a multi-pronged strategy. It’s not just about the database itself but also about how you query and process the data. Key techniques include:

- Database Tuning: Optimizing database parameters, indexes, and query plans to ensure efficient data retrieval.

- Data Partitioning: Dividing large tables into smaller, more manageable chunks for faster queries and improved scalability.

- Data Warehousing: Using a separate data warehouse for analytical processing, offloading the load from the operational database.

- Indexing: Creating appropriate indexes to speed up data retrieval. The right index can dramatically improve query performance.

- Query Optimization: Writing efficient SQL queries, avoiding full table scans, and using appropriate joins.

- Caching: Implementing caching mechanisms to store frequently accessed data in memory for faster access.

- Distributed Databases: Distributing the data across multiple servers to improve scalability and availability.

For instance, in a project involving millions of customer records, we implemented data partitioning to significantly reduce query times. We also optimized queries by adding indexes and using appropriate join types. Regular monitoring and performance testing allowed us to identify and address any bottlenecks promptly.

Q 19. What is the difference between a clustered and non-clustered index?

Both clustered and non-clustered indexes are used to speed up data retrieval, but they differ significantly in how they organize data.

A clustered index physically reorders the data rows in the table based on the index key. Think of it like a phone book sorted alphabetically by last name – the physical order of the entries matches the alphabetical order. A table can have only one clustered index because the data can only be physically ordered in one way. This makes lookups based on the clustered index key extremely fast.

A non-clustered index, on the other hand, creates a separate structure that points to the data rows. It’s like having an index in a book – the index entries point to the pages where the relevant information is located, but the pages themselves aren’t rearranged. A table can have multiple non-clustered indexes. They speed up queries that filter or sort on columns other than the clustered index key.

Choosing between them depends on the query patterns. If a particular column is frequently used in WHERE clauses, it might be beneficial to make it part of a clustered index. However, frequent updates might be slower with clustered indexes due to data reorganization. Non-clustered indexes can be more flexible but require an extra step to retrieve the actual data rows.

Q 20. Explain your experience with data warehousing and ETL processes.

Data warehousing is the process of creating a central repository for analytical processing. ETL (Extract, Transform, Load) processes are crucial for populating the data warehouse. My experience involves designing, implementing, and maintaining data warehouses using various technologies.

The Extract phase involves pulling data from various source systems (databases, files, etc.). The Transform phase cleanses, transforms, and aggregates data to prepare it for the warehouse. The Load phase involves loading the processed data into the data warehouse.

I’ve used ETL tools such as Informatica PowerCenter, along with Apache Kafka for streaming data from source systems into the pipeline. I’ve also worked with scripting languages like Python for custom data transformations. Data quality is a major concern; I typically implement data validation and cleansing steps to ensure data accuracy and consistency. Designing a scalable and maintainable data warehouse requires careful consideration of data modeling techniques, such as dimensional modeling (star schema and snowflake schema), to support efficient querying and reporting.

In one project, we built a data warehouse to support business intelligence and analytics. We used dimensional modeling and ETL processes to consolidate data from various operational systems. This enabled the business to gain valuable insights into customer behavior and sales trends.
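
The three ETL phases can be sketched end to end in a few lines. This is a minimal illustration using only the Python standard library; the CSV content, the `fact_sales` table, and the validation rule are hypothetical, and an in-memory SQLite database stands in for the warehouse.

```python
import csv
import io
import sqlite3

# Extract: read raw rows from a source (an in-memory CSV stands in for a file or database).
raw_csv = """order_id,customer,amount,order_date
1, alice ,10.50,2024-01-05
2,BOB,not-a-number,2024-01-06
3,alice,4.50,2024-02-01
"""
extracted = list(csv.DictReader(io.StringIO(raw_csv)))

# Transform: clean names, convert types, and set aside rows that fail validation.
clean, rejected = [], []
for row in extracted:
    try:
        clean.append((
            int(row["order_id"]),
            row["customer"].strip().lower(),
            float(row["amount"]),
            row["order_date"][:7],  # derive a YYYY-MM month key
        ))
    except ValueError:
        rejected.append(row)

# Load: write the cleaned rows into a warehouse fact table.
warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE fact_sales ("
    " order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, month TEXT)"
)
with warehouse:
    warehouse.executemany("INSERT INTO fact_sales VALUES (?, ?, ?, ?)", clean)

monthly = warehouse.execute(
    "SELECT month, SUM(amount) FROM fact_sales GROUP BY month ORDER BY month"
).fetchall()
print(monthly, len(rejected))
```

Keeping rejected rows instead of silently dropping them makes data quality problems visible and auditable.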

Q 21. How do you monitor database performance and identify bottlenecks?

Monitoring database performance and identifying bottlenecks is crucial for ensuring application responsiveness and data availability. My approach involves a combination of tools and techniques:

- Database Monitoring Tools: Using tools like SQL Server Profiler, Oracle Enterprise Manager, or third-party monitoring tools to track key performance indicators (KPIs) such as CPU usage, disk I/O, memory usage, and query execution times.

- Query Analysis: Identifying slow-running queries using execution plans and performance statistics. This helps pinpoint areas needing optimization.

- Log Analysis: Examining database logs to identify errors, warnings, and performance-related issues.

- Performance Testing: Conducting load tests and stress tests to simulate real-world scenarios and identify performance bottlenecks under various conditions.

- Indexing Strategies: Regularly reviewing indexing strategies to ensure they’re aligned with query patterns.

For example, in one scenario, we used database monitoring tools to identify a slow-running query that was causing significant performance degradation. Analysis of the query execution plan revealed a missing index. Adding the index dramatically improved query performance and resolved the bottleneck. Regular performance testing and monitoring are vital for proactively identifying and addressing potential issues.

Q 22. Describe your experience with database tuning and performance optimization.

Database tuning and performance optimization are crucial for ensuring applications run smoothly and efficiently. It involves identifying bottlenecks, analyzing query performance, and implementing strategies to improve response times and resource utilization. My experience encompasses a wide range of techniques, from simple index optimization to complex query rewriting and schema redesign.

For example, I once worked on an e-commerce platform where slow query performance was impacting sales. By capturing slow statements with SQL Server Profiler and analyzing their execution plans, I identified a poorly performing join operation. By creating a composite index on the relevant columns, we reduced query execution time by over 80%, significantly improving the user experience. Another instance involved optimizing a large reporting query by breaking it down into smaller, more manageable queries and staging intermediate results in temporary tables to reduce I/O operations. I regularly use tools like EXPLAIN PLAN (Oracle) or the execution plan viewer in SQL Server Management Studio (the successor to Query Analyzer) to understand query performance and make informed decisions.

- Index Optimization: Creating and maintaining appropriate indexes on frequently queried columns is fundamental.

- Query Optimization: Rewriting inefficient queries, using set-based operations, and avoiding full table scans are key strategies.

- Schema Design: A well-designed database schema is crucial for performance. Normalization helps avoid data redundancy, while appropriate data types ensure efficient storage and retrieval.

- Hardware Optimization: In some cases, performance improvements can be achieved by upgrading server hardware, such as adding more RAM or faster storage.
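
To illustrate the "set-based operations" point from the list above, here is a small sketch that contrasts a row-by-row update with a single set-based statement. It assumes SQLite via Python's `sqlite3` module as a stand-in engine; the `products` table and both function names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE products (id INTEGER PRIMARY KEY, category TEXT, price REAL)"
)
conn.executemany(
    "INSERT INTO products (category, price) VALUES (?, ?)",
    [("books", 10.0), ("books", 20.0), ("games", 50.0)],
)

def reprice_row_by_row(conn, category, factor):
    """Anti-pattern: fetch the ids, then issue one UPDATE per row."""
    ids = [row[0] for row in conn.execute(
        "SELECT id FROM products WHERE category = ?", (category,)
    )]
    for product_id in ids:
        conn.execute(
            "UPDATE products SET price = price * ? WHERE id = ?",
            (factor, product_id),
        )
    return len(ids)

def reprice_set_based(conn, category, factor):
    """Preferred: a single statement lets the engine handle the whole set."""
    cursor = conn.execute(
        "UPDATE products SET price = price * ? WHERE category = ?",
        (factor, category),
    )
    return cursor.rowcount

halved = reprice_row_by_row(conn, "books", 0.5)   # two statements for two rows
restored = reprice_set_based(conn, "books", 2.0)  # one statement for both rows
prices = conn.execute("SELECT price FROM products ORDER BY id").fetchall()
```

Both functions produce the same data changes. The set-based version issues one statement regardless of how many rows match, which is typically where the savings come from on a networked database server.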

Q 23. What is your experience with database administration tools?

My experience with database administration tools is extensive, and I choose the tool to suit the database system. For example, I routinely use SQL Server Management Studio (SSMS) for SQL Server, covering tasks like creating and managing databases, users, and roles, monitoring performance, and troubleshooting issues. Similarly, I’m comfortable using Oracle Enterprise Manager for Oracle databases and pgAdmin for PostgreSQL. These tools cover everything from routine maintenance to complex troubleshooting. I also use command-line tools like SQL*Plus (Oracle) and psql (PostgreSQL) for scripting and automation.

Beyond individual database management systems, I’m also experienced in using monitoring tools like Nagios or Zabbix to track database health, resource utilization, and potential issues proactively. This allows for early detection of potential problems and proactive maintenance.

Q 24. How do you ensure data consistency across multiple databases?

Ensuring data consistency across multiple databases requires a strategic approach and often involves a combination of techniques. The most common approach is to use database replication, where data changes are automatically propagated to other databases. There are various types of replication, including synchronous and asynchronous, each with its own trade-offs in terms of performance and data consistency.

Another crucial aspect is transactional consistency. Using distributed transactions or two-phase commit protocols ensures that data modifications across multiple databases are atomic – either all changes succeed, or none do. This prevents inconsistencies resulting from partial updates. However, distributed transactions can impact performance and are not always feasible in large-scale distributed systems. In such cases, techniques like eventual consistency, where data consistency is achieved over time, might be more appropriate, requiring careful design and consideration of data conflicts.
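
The two-phase commit idea can be sketched in a few lines. This is an in-memory illustration of the protocol's control flow only, assuming hypothetical `Participant` objects in place of real databases; a production implementation also needs durable prepare records, timeouts, and coordinator recovery, none of which are shown here.

```python
class Participant:
    """Hypothetical stand-in for one database taking part in the transaction."""

    def __init__(self, name, can_commit=True):
        self.name = name
        self.can_commit = can_commit  # simulates a participant that must vote "no"
        self.data = {}                # committed state
        self.staged = None            # changes held between prepare and commit

    def prepare(self, changes):
        # Phase 1: stage the changes and vote yes (True) or no (False).
        if not self.can_commit:
            return False
        self.staged = dict(changes)
        return True

    def commit(self):
        # Phase 2, success path: make the staged changes visible.
        self.data.update(self.staged or {})
        self.staged = None

    def rollback(self):
        # Phase 2, failure path: discard anything that was staged.
        self.staged = None


def two_phase_commit(participants, changes):
    """Apply the changes on every participant, or on none of them."""
    votes = [p.prepare(changes) for p in participants]
    if all(votes):
        for p in participants:
            p.commit()
        return True
    for p in participants:
        p.rollback()
    return False


orders_db = Participant("orders")
billing_db = Participant("billing")
ok = two_phase_commit([orders_db, billing_db], {"order-1": "paid"})

failing_db = Participant("inventory", can_commit=False)
aborted = two_phase_commit([orders_db, failing_db], {"order-2": "paid"})
```

In the second call one participant votes no, so the coordinator rolls back everywhere and `orders_db` never sees `order-2`. The extra round trip and the blocking while waiting for votes are the performance cost mentioned above.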

Data synchronization tools also play a significant role. These tools facilitate the transfer of data between databases, often involving change data capture (CDC) mechanisms to track only the changes that need to be synchronized. The choice of approach depends on factors such as data volume, the frequency of updates, and the acceptable level of latency.
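
A trigger-based form of change data capture can be sketched as follows. This assumes SQLite via Python's `sqlite3` module for both the source and the target; the `customers` table, the `customers_changes` log table, and the `sync` function are hypothetical names chosen for illustration. Many production CDC tools read the database's transaction log rather than using triggers, so treat this as one simple variant.

```python
import sqlite3

source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")

# Triggers on the source table append every change to a log table.
source.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE customers_changes (
    change_id INTEGER PRIMARY KEY AUTOINCREMENT,
    op TEXT, id INTEGER, name TEXT
);
CREATE TRIGGER customers_ins AFTER INSERT ON customers BEGIN
    INSERT INTO customers_changes (op, id, name) VALUES ('upsert', NEW.id, NEW.name);
END;
CREATE TRIGGER customers_upd AFTER UPDATE ON customers BEGIN
    INSERT INTO customers_changes (op, id, name) VALUES ('upsert', NEW.id, NEW.name);
END;
CREATE TRIGGER customers_del AFTER DELETE ON customers BEGIN
    INSERT INTO customers_changes (op, id, name) VALUES ('delete', OLD.id, NULL);
END;
""")
target.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")

def sync(source, target, last_change_id):
    """Apply changes newer than last_change_id; return the new high-water mark."""
    rows = source.execute(
        "SELECT change_id, op, id, name FROM customers_changes "
        "WHERE change_id > ? ORDER BY change_id",
        (last_change_id,),
    ).fetchall()
    for change_id, op, row_id, name in rows:
        if op == "delete":
            target.execute("DELETE FROM customers WHERE id = ?", (row_id,))
        else:
            target.execute(
                "INSERT OR REPLACE INTO customers (id, name) VALUES (?, ?)",
                (row_id, name),
            )
        last_change_id = change_id
    target.commit()
    return last_change_id

source.execute("INSERT INTO customers VALUES (1, 'Ada')")
source.execute("INSERT INTO customers VALUES (2, 'Grace')")
mark = sync(source, target, 0)

source.execute("UPDATE customers SET name = 'Ada L.' WHERE id = 1")
source.execute("DELETE FROM customers WHERE id = 2")
mark = sync(source, target, mark)

replica_rows = target.execute("SELECT id, name FROM customers ORDER BY id").fetchall()
```

Only rows in the change log after the stored high-water mark are transferred on each run, which is what keeps synchronization cheap when the tables are large and the change rate is low.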

Q 25. Explain your experience with database scripting languages (e.g., PL/SQL, T-SQL).

I have extensive experience with various database scripting languages, including PL/SQL (Oracle), T-SQL (SQL Server), PL/pgSQL (PostgreSQL), and MySQL stored programs. These languages allow me to automate database tasks and to create stored procedures, functions, and triggers that improve efficiency and data integrity.

For instance, I’ve used PL/SQL to create stored procedures that automate complex data transformations and reporting processes, improving efficiency significantly compared to ad-hoc SQL queries. In SQL Server, I’ve leveraged T-SQL to implement triggers that enforce business rules and ensure data consistency across tables. My scripts often involve error handling and logging mechanisms for robustness and maintainability. Below is a simple example of a T-SQL stored procedure:

    CREATE PROCEDURE UpdateCustomerName (@CustomerID INT, @NewName VARCHAR(255))
    AS
    BEGIN
        UPDATE Customers SET CustomerName = @NewName WHERE CustomerID = @CustomerID
    END;

Q 26. Describe a challenging database problem you solved and how you approached it.

In a previous role, we faced a significant performance bottleneck in our data warehouse. The warehouse served various reporting and analytical needs, and query response times had become unacceptably slow. Initial investigations pointed towards the lack of proper indexing and inefficient query patterns. My approach involved a multi-pronged strategy:

- Profiling and Analysis: We used database monitoring tools to identify the slowest queries and analyze their execution plans. This helped pinpoint the specific areas of inefficiency.

- Indexing Optimization: We reviewed the existing indexes and created new composite indexes on frequently joined columns. This significantly reduced the number of rows scanned during query execution.

- Query Rewriting: We optimized poorly performing queries by rewriting them using more efficient SQL constructs, including the use of window functions and set-based operations.

- Materialized Views: For frequently used aggregated reports, we implemented materialized views. These pre-computed views reduced the processing time for repetitive queries.

- Partitioning: We partitioned the largest tables based on time to further improve query performance. This allowed queries to access only the relevant partitions.

By implementing these measures, we reduced average query response times by over 75%, significantly improving the responsiveness of our reporting and analytical applications. This case highlighted the importance of a systematic approach to performance optimization, combining thorough analysis with the appropriate technical solutions.
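
The materialized-view step can be approximated with a pre-aggregated summary table. The sketch below assumes SQLite via Python's `sqlite3` module, which has no native materialized views, so a table rebuilt on demand stands in for one; the `sales` and `monthly_sales` tables and the `refresh_monthly_sales` function are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (sale_date TEXT, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        ("2024-01-05", "east", 100.0),
        ("2024-01-20", "east", 50.0),
        ("2024-01-11", "west", 70.0),
        ("2024-02-02", "east", 30.0),
    ],
)

def refresh_monthly_sales(conn):
    """Rebuild the pre-aggregated table (a full refresh, run on a schedule)."""
    conn.executescript("""
        DROP TABLE IF EXISTS monthly_sales;
        CREATE TABLE monthly_sales AS
            SELECT substr(sale_date, 1, 7) AS month,
                   region,
                   SUM(amount) AS total
            FROM sales
            GROUP BY substr(sale_date, 1, 7), region;
    """)

refresh_monthly_sales(conn)

# Reports now read the small summary table instead of re-aggregating sales.
report = conn.execute(
    "SELECT month, region, total FROM monthly_sales ORDER BY month, region"
).fetchall()
```

The trade-off is staleness: the summary reflects the data as of the last refresh, so the refresh schedule has to match how current the reports need to be. Engines with native support, such as Oracle and PostgreSQL, provide their own refresh commands for this.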

Q 27. How familiar are you with cloud-based database services (e.g., AWS RDS, Azure SQL Database)?

I have considerable experience with cloud-based database services, including AWS RDS (Relational Database Service) and Azure SQL Database. I understand the benefits and trade-offs of using cloud databases, including scalability, cost-effectiveness, and ease of management. I have worked on migrating on-premises databases to these cloud services, configuring high-availability setups, and optimizing performance within the cloud environment.

For example, I’ve leveraged AWS RDS’s features like automated backups, read replicas, and multi-AZ deployments to ensure high availability and disaster recovery capabilities for production databases. With Azure SQL Database, I’ve utilized features like elastic pools to efficiently manage and scale multiple databases within a single environment. This experience includes configuring performance settings such as I/O and compute resources to optimize for specific application needs. I understand the implications of choosing between different deployment models and storage options based on factors like cost, performance, and availability requirements.

Q 28. What are your experiences with database schema design and evolution?

Database schema design and evolution are critical aspects of database management. A well-designed schema is crucial for data integrity, efficiency, and maintainability. My experience covers the full lifecycle, from initial design to ongoing evolution. I utilize various design principles, including normalization to reduce redundancy and ensure data consistency. I use Entity-Relationship Diagrams (ERDs) to visually represent the relationships between database entities, facilitating communication and collaboration among team members.

Schema evolution is an ongoing process, often necessitated by changing business requirements. This can involve adding new tables, columns, or modifying existing data structures. I follow a structured approach to schema changes, using version control and rigorous testing to minimize disruptions. This includes thorough impact analysis before implementing changes and using database migration tools to manage the changes effectively. For instance, I’ve extensively used schema migration tools such as Liquibase or Flyway to track and manage schema changes across different environments, ensuring consistency across development, testing, and production databases. This approach is critical to ensure that the changes are implemented consistently and without errors, minimizing the risk of data loss or inconsistencies.
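
The core idea behind versioned migration tools can be sketched in a few lines. This is not how Liquibase or Flyway are implemented; it is a simplified illustration, assuming SQLite via Python's `sqlite3` module, with a hypothetical `schema_version` tracking table and a hypothetical list of migrations.

```python
import sqlite3

# Hypothetical migrations, ordered by version number.
MIGRATIONS = [
    (1, "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"),
    (2, "ALTER TABLE customers ADD COLUMN email TEXT"),
    (3, "CREATE INDEX idx_customers_email ON customers (email)"),
]

def current_version(conn):
    """Return the highest applied migration number, or 0 for a new database."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS schema_version (version INTEGER NOT NULL)"
    )
    row = conn.execute("SELECT MAX(version) FROM schema_version").fetchone()
    return row[0] or 0

def migrate(conn):
    """Apply each pending migration once and record it in schema_version."""
    applied = []
    version = current_version(conn)
    for number, statement in MIGRATIONS:
        if number > version:
            conn.execute(statement)
            conn.execute(
                "INSERT INTO schema_version (version) VALUES (?)", (number,)
            )
            conn.commit()
            applied.append(number)
    return applied

conn = sqlite3.connect(":memory:")
first_run = migrate(conn)   # applies every migration on a new database
second_run = migrate(conn)  # nothing pending, so nothing is applied
columns = [row[1] for row in conn.execute("PRAGMA table_info(customers)")]
```

Because applied versions are recorded in the database itself, running the same migration set against development, testing, and production brings each environment to the same schema regardless of where it started.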

Key Topics to Learn for Experience with Engineering Databases Interview

- Database Fundamentals: Relational databases (queried with SQL), NoSQL databases (e.g., MongoDB, Cassandra), data modeling techniques, normalization, and ACID properties. Understanding the trade-offs between different database types is crucial.

- SQL Proficiency: Mastering SQL queries (SELECT, INSERT, UPDATE, DELETE), joins, subqueries, aggregate functions, and database optimization techniques. Practice writing efficient and scalable queries for complex data scenarios.

- Data Modeling and Design: Learn to design efficient database schemas, considering data integrity, scalability, and performance. Understanding ER diagrams and their application is essential.

- Database Administration (DBA) Concepts: Familiarize yourself with basic DBA tasks such as user management, access control, backup and recovery, and performance monitoring. This demonstrates a holistic understanding of database management.

- NoSQL Databases: Explore the characteristics and applications of NoSQL databases, understanding when to choose them over relational databases. Focus on practical use cases and potential challenges.

- Data Warehousing and Business Intelligence: Understanding concepts related to data warehousing, ETL processes, and business intelligence tools will showcase your ability to handle large datasets and extract meaningful insights.

- Problem-Solving and Optimization: Prepare to discuss how you approach database performance issues, troubleshoot query inefficiencies, and optimize database designs for scalability and maintainability.

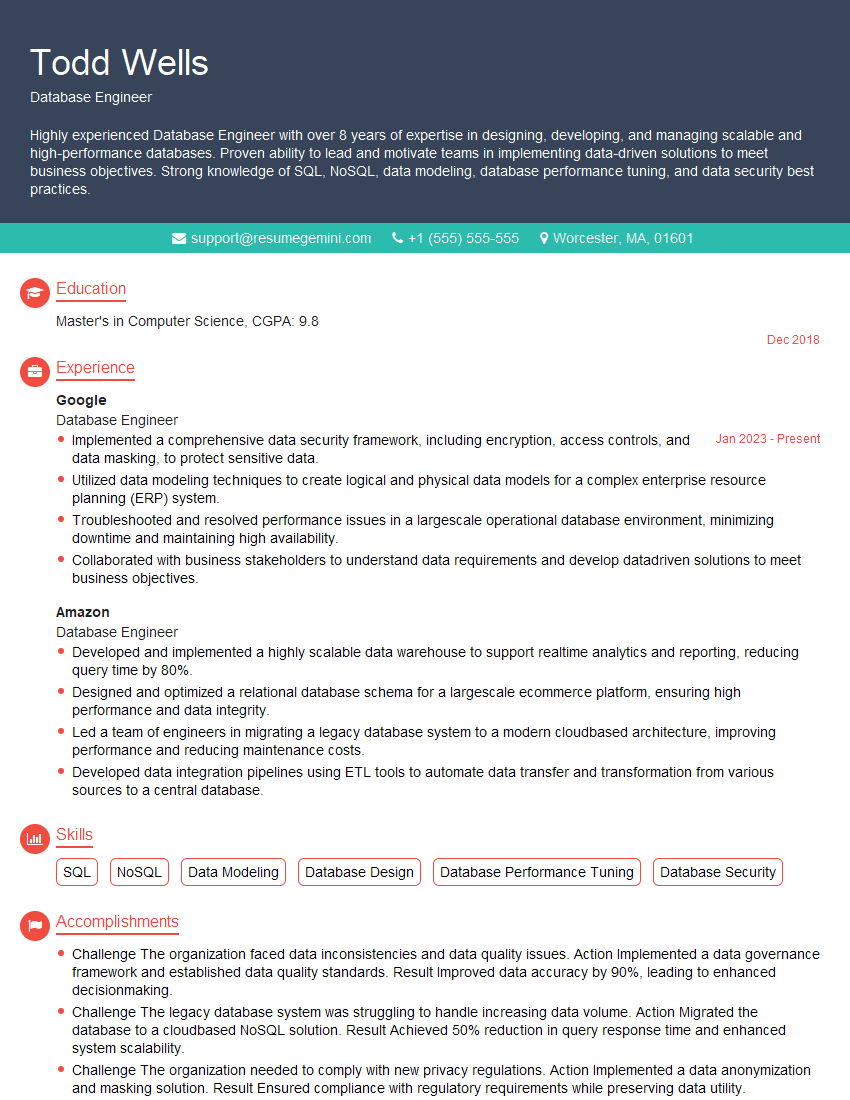

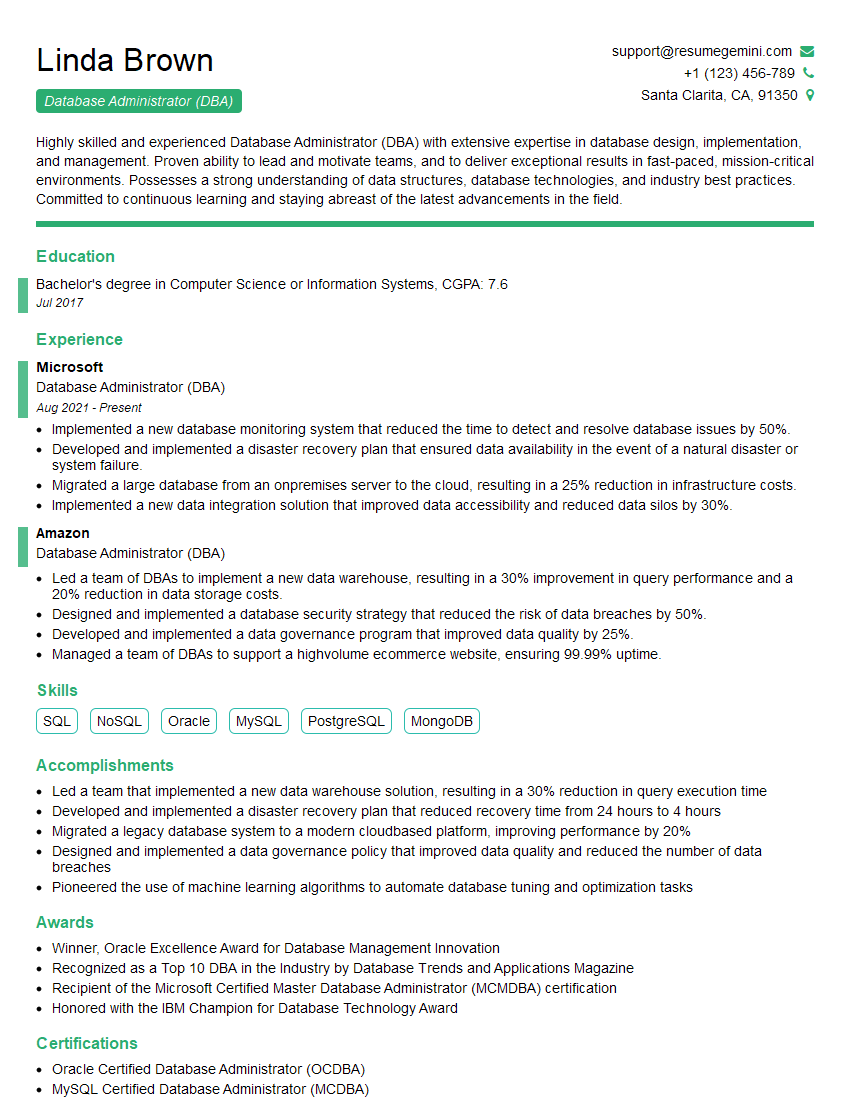

Next Steps

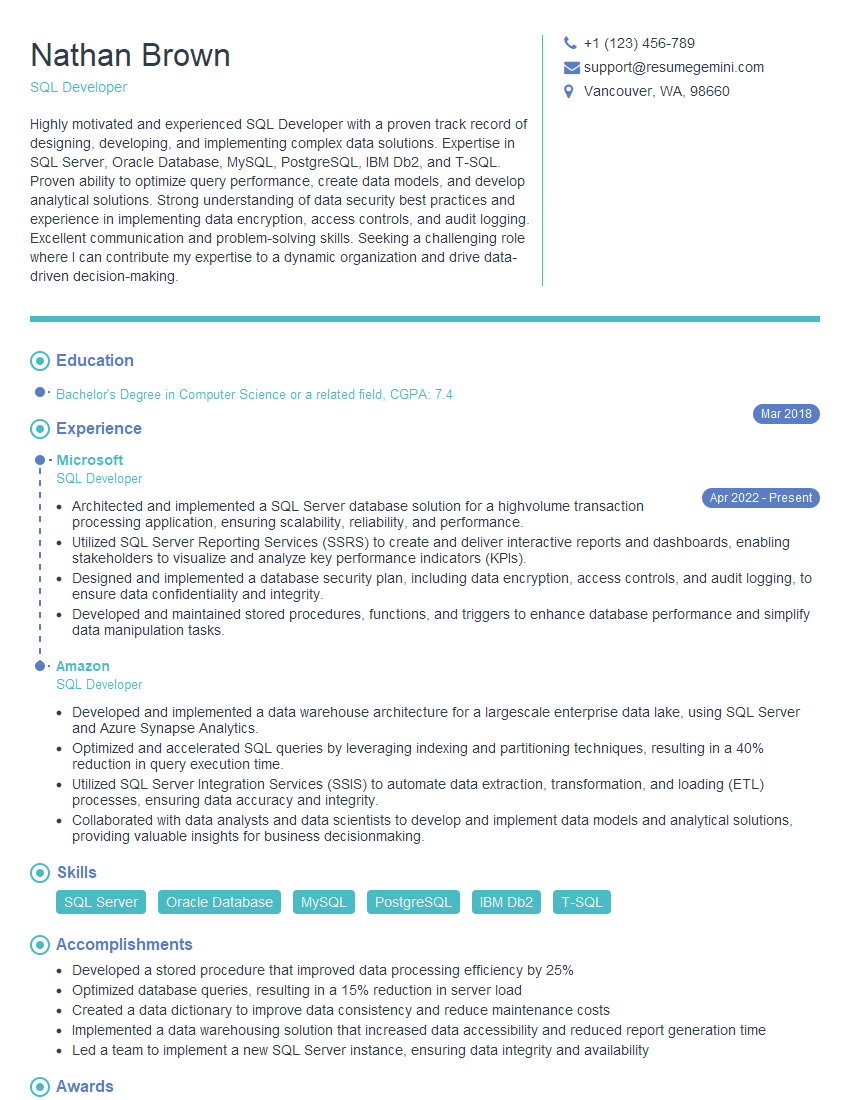

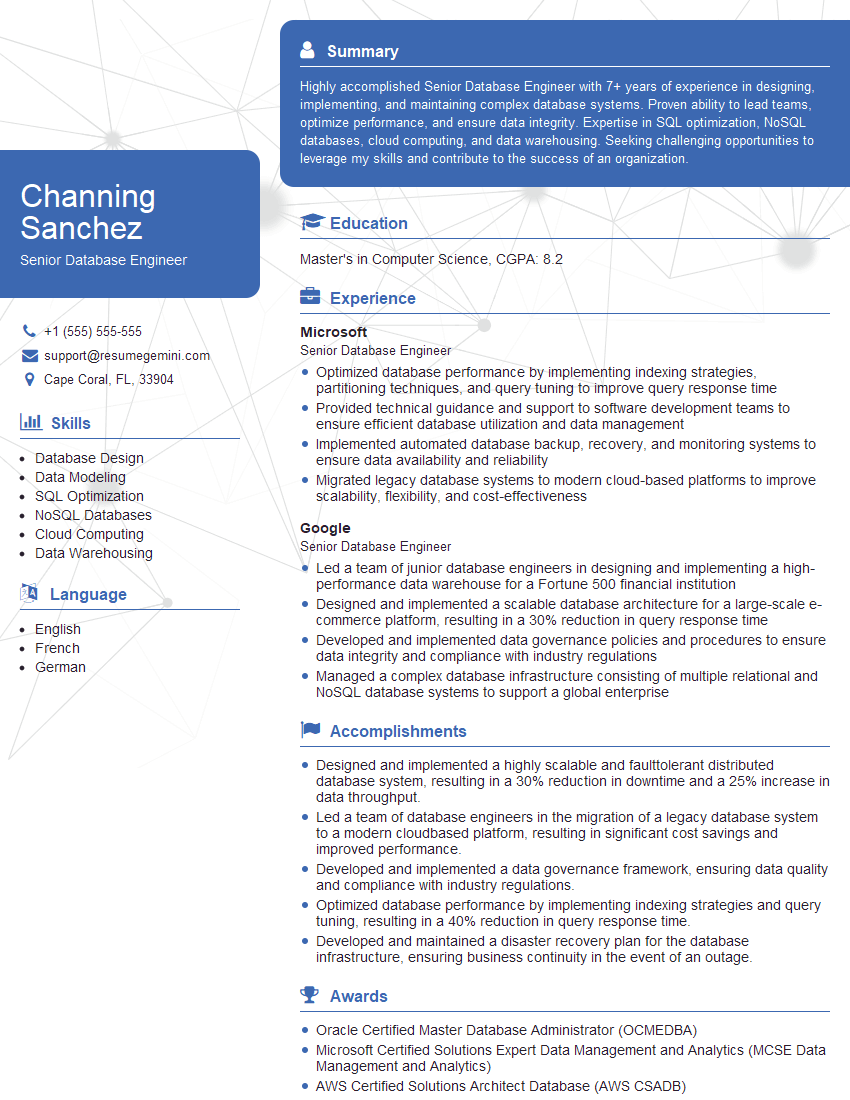

Mastering engineering databases is valuable for career advancement in today’s data-driven world. Strong database skills are sought after across many engineering disciplines, opening doors to new opportunities and higher earning potential. To maximize your job prospects, it’s crucial to present your skills effectively. Building an ATS-friendly resume is key to getting your application noticed. We recommend using ResumeGemini to craft a professional resume that highlights your expertise. ResumeGemini provides examples of resumes tailored to experience with engineering databases, helping you showcase your qualifications effectively. Take the next step towards your dream career and build a compelling resume with ResumeGemini today!
