Interviews are more than just a Q&A session—they’re a chance to prove your worth. This blog dives into essential Language Modeling interview questions and expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in Language Modeling Interviews

Q 1. Explain the difference between an n-gram model and a neural language model.

N-gram models and neural language models represent fundamentally different approaches to predicting the probability of a sequence of words. Think of it like this: an n-gram model is like building with LEGOs, where you only consider a fixed, small number of bricks (words) at a time (the ‘n’ in n-gram). A neural language model is more like sculpting with clay: it can draw on a much longer context and learn smooth relationships between words, even those far apart.

N-gram models are statistical models that estimate the probability of a word given the preceding n-1 words. For example, a trigram (n=3) model would predict the next word based on the previous two. They’re simple and computationally efficient, but limited in their ability to capture long-range dependencies. They rely on counting word frequencies from a large corpus of text.
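As a minimal sketch of the counting approach, the Python below builds a bigram (n = 2) model from a made-up three-sentence corpus using unsmoothed maximum-likelihood estimates. The `<s>`/`</s>` sentence-boundary tokens are a common convention assumed here; practical n-gram models also add smoothing (for example, Kneser-Ney) so that unseen n-grams do not get zero probability.

```python
from collections import Counter

def train_bigram(sentences):
    """Count contexts and bigrams, padding each sentence with <s> and </s>."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        unigrams.update(tokens[:-1])                  # every token that has a successor
        bigrams.update(zip(tokens[:-1], tokens[1:]))
    return unigrams, bigrams

def bigram_prob(unigrams, bigrams, prev, word):
    """Maximum-likelihood estimate P(word | prev) = count(prev, word) / count(prev)."""
    if unigrams[prev] == 0:
        return 0.0
    return bigrams[(prev, word)] / unigrams[prev]

corpus = ["the cat sat", "the cat ran", "the dog sat"]
uni, bi = train_bigram(corpus)
print(bigram_prob(uni, bi, "the", "cat"))  # 2 of the 3 "the" contexts are followed by "cat": 2/3
```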

Neural language models, on the other hand, use artificial neural networks to learn complex patterns and relationships in language. They can capture much longer-range dependencies and handle nuanced contexts. Instead of just counting word frequencies, they learn representations of words (word embeddings) and use these representations to predict the probability of the next word. Examples include models based on RNNs and Transformers.

In essence, n-gram models are simpler, faster, and require less data, but neural language models are significantly more powerful and accurate, especially for complex language tasks.

Q 2. Describe different types of language models (e.g., autoregressive, causal, masked).

Language models come in various flavors, each with its own strengths and weaknesses. Let’s explore some key types:

- Autoregressive Language Models: These models predict the probability of a word based on the preceding words in a sequence. Think of it as writing a story word by word, each word influenced by the ones before. GPT-3 is a prime example.

- Causal Language Models: In practice, “causal” and “autoregressive” are usually used interchangeably. The term “causal” emphasizes the attention mask that stops each position from looking ahead at future tokens during training, so the model only ever conditions on what came before, just as it must when generating text one token at a time (for example, in a chatbot). GPT-style decoder-only Transformers are trained this way.

- Masked Language Models: These models predict masked (hidden) words in a sentence. They learn by filling in the blanks. Think of it as a fill-in-the-blank exercise. BERT is the quintessential example. It’s excellent for understanding the context of words within a sentence.
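The difference between the two training setups can be sketched in a few lines of NumPy. `causal_mask` builds the lower-triangular mask a causal (autoregressive) Transformer uses so that position i only attends to positions up to i, and `mask_tokens` is a simplified version of masked-LM corruption. Assumption: real BERT pre-training selects about 15% of tokens and replaces only some of them with `[MASK]` (others become random tokens or stay unchanged); this sketch always uses `[MASK]`.

```python
import numpy as np

def causal_mask(seq_len):
    """True at [i, j] when position i may attend to position j, i.e. j <= i."""
    return np.tril(np.ones((seq_len, seq_len), dtype=bool))

def mask_tokens(tokens, mask_prob, rng, mask_token="[MASK]"):
    """Hide a random subset of tokens; the model is trained to predict the originals."""
    masked, targets = list(tokens), []
    for i, token in enumerate(tokens):
        if rng.random() < mask_prob:
            masked[i] = mask_token
            targets.append((i, token))   # (position, original token) the model must recover
    return masked, targets

print(causal_mask(4).astype(int))
rng = np.random.default_rng(0)
print(mask_tokens("the cat sat on the mat".split(), mask_prob=0.15, rng=rng))
```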

The choice of language model depends heavily on the specific application. If you need to generate text, autoregressive or causal models are suitable. If you need to understand the context of words within a sentence, a masked language model might be better.

Q 3. What are the advantages and disadvantages of using recurrent neural networks (RNNs) for language modeling?

Recurrent Neural Networks (RNNs), especially gated variants such as LSTMs and GRUs, were widely used for language modeling before Transformers because they handle sequential data naturally. However, they have limitations.

- Advantages:

  - Sequential Processing: RNNs naturally process sequential data like text by maintaining a hidden state that captures information from previous time steps.

  - Variable Length Sequences: They can handle input sequences of varying lengths.

- Disadvantages:

  - Vanishing/Exploding Gradients: Training vanilla RNNs is hampered by vanishing or exploding gradients, which makes long-range dependencies difficult to learn. LSTMs and GRUs mitigate the vanishing-gradient problem through gating, but very long contexts remain hard.

  - Sequential Computation: Each hidden state depends on the previous one, so the positions within a sequence cannot be processed in parallel. This makes RNNs slow on long sequences and hard to scale on parallel hardware such as GPUs.

While RNNs provided a significant advancement over n-gram models, their limitations paved the way for more efficient architectures like Transformers.
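The sequential bottleneck is visible in a bare-bones vanilla (Elman) RNN forward pass. This NumPy sketch uses randomly initialized weights purely for illustration: each hidden state needs the previous one, so the loop over time steps cannot be parallelized, and the state at step t never sees inputs after t.

```python
import numpy as np

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Vanilla RNN: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h). inputs: (seq_len, input_dim)."""
    h = np.zeros(W_hh.shape[0])
    states = []
    for x_t in inputs:               # strictly in order: h_t depends on h_{t-1}
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)          # (seq_len, hidden_dim)

rng = np.random.default_rng(0)
seq = rng.normal(size=(5, 3))                    # 5 time steps, 3 features each
W_xh = rng.normal(scale=0.5, size=(4, 3))        # hidden size 4 (arbitrary choice)
W_hh = rng.normal(scale=0.5, size=(4, 4))
b_h = np.zeros(4)
states = rnn_forward(seq, W_xh, W_hh, b_h)
```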

Q 4. How do transformers improve upon RNNs for language modeling?

Transformers revolutionized language modeling by addressing the shortcomings of RNNs. They achieve this primarily through two key innovations: self-attention and parallelization.

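- Parallel Processing: Unlike RNNs, which must process tokens one at a time, Transformers process all positions of a sequence simultaneously using self-attention. This dramatically speeds up training and the processing of input prompts, although generating new text is still done one token at a time.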

- Self-Attention: Self-attention allows the model to weigh the importance of different words in the input sequence when predicting the next word. This enables the model to capture long-range dependencies much more effectively than RNNs, which struggle with long sequences due to vanishing gradients.

- Improved Handling of Long-Range Dependencies: Self-attention connects every pair of positions in a single step, so information does not have to pass through many intermediate hidden states as it does in an RNN. This lets Transformers capture relationships between words far apart in a sentence, significantly improving performance on tasks that require long-range context.

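In short, Transformers train faster, make better use of long contexts, and capture long-range dependencies far better than RNNs, leading to significant improvements in language modeling performance. The trade-off is that the compute and memory cost of self-attention grows quadratically with sequence length.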

Q 5. Explain the concept of attention mechanisms in transformers.

The attention mechanism is a core component of Transformers that allows the model to focus on different parts of the input sequence when generating output. Think of it as a reader scanning a text; their attention shifts depending on the relevance of each word to the overall understanding. Instead of processing each word in isolation, attention allows the model to consider the relationships between all words.

For each word in the input sequence, the attention mechanism computes a weighted sum over the representations of all words in the sequence, including the word itself. The weights come from a softmax over similarity scores between the current word’s query vector and every word’s key vector, so they are positive and sum to one. Words that are semantically related or contextually important receive higher weights.

This weighted sum is then used as input to the next layer of the network, allowing the model to effectively incorporate information from across the entire sequence, regardless of the distance between words.
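The weighted sum described above is usually written as scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, the form used in the original Transformer. In this NumPy sketch, queries, keys, and values are simply the input embeddings themselves (a simplification: real models first apply learned linear projections), and an optional boolean mask can enforce causal attention.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)      # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V, mask=None):
    """Q: (n, d_k), K: (m, d_k), V: (m, d_v). Returns outputs (n, d_v) and weights (n, m)."""
    scores = Q @ K.T / np.sqrt(K.shape[-1])      # similarity of every query with every key
    if mask is not None:
        scores = np.where(mask, scores, -1e9)    # disallowed positions get ~zero weight
    weights = softmax(scores, axis=-1)           # each row is positive and sums to 1
    return weights @ V, weights                  # each output is a weighted sum of values

rng = np.random.default_rng(0)
X = rng.normal(size=(4, 8))                      # 4 tokens with random 8-dim embeddings
out, weights = scaled_dot_product_attention(X, X, X)
causal = np.tril(np.ones((4, 4), dtype=bool))
causal_out, causal_weights = scaled_dot_product_attention(X, X, X, mask=causal)
```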

Q 6. What are the different types of attention mechanisms (e.g., self-attention, multi-head attention)?

There are several types of attention mechanisms, with self-attention being the most prominent in Transformers:

- Self-Attention: This mechanism allows the model to attend to all other words in the input sequence when processing a particular word. It computes relationships between all words within the same sequence, capturing long-range dependencies.

- Multi-Head Attention: This extends self-attention by using multiple ‘heads’, each attending to different aspects of the input sequence. It’s like having multiple readers, each focusing on a different aspect of the text. This allows the model to capture richer and more nuanced relationships between words.

- Cross-Attention: This mechanism attends to different sequences, for example, in machine translation, attending to words in the source language when generating words in the target language.

The specific type of attention used often depends on the task. Self-attention and multi-head attention are central to the success of modern Transformer-based language models.
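Multi-head attention runs several attention computations in parallel on lower-dimensional projections and then recombines them. The NumPy sketch below follows the standard recipe (project, split into heads, attend, concatenate, project again) with random matrices standing in for learned weights; masking and batching are omitted for brevity.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def multi_head_self_attention(X, W_q, W_k, W_v, W_o, num_heads):
    """X: (seq_len, d_model); each W_*: (d_model, d_model). Returns (seq_len, d_model)."""
    seq_len, d_model = X.shape
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    d_head = d_model // num_heads

    def split_heads(M):                          # (seq_len, d_model) -> (heads, seq_len, d_head)
        return M.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    Q, K, V = split_heads(X @ W_q), split_heads(X @ W_k), split_heads(X @ W_v)
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_head)   # (heads, seq_len, seq_len)
    heads = softmax(scores) @ V                            # each head attends independently
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ W_o                                    # output projection mixes the heads

rng = np.random.default_rng(0)
d_model, num_heads = 8, 2
X = rng.normal(size=(5, d_model))
W_q, W_k, W_v, W_o = (rng.normal(scale=d_model ** -0.5, size=(d_model, d_model)) for _ in range(4))
out = multi_head_self_attention(X, W_q, W_k, W_v, W_o, num_heads)
```

With `num_heads=1` this reduces to ordinary single-head self-attention with learned projections.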

Q 7. Describe the role of word embeddings in language modeling.

Word embeddings are crucial to language modeling because they represent words as dense, low-dimensional vectors. This allows the model to understand semantic relationships between words.

Instead of treating words as discrete symbols, word embeddings capture the meaning and context of words. Words with similar meanings end up close together in the vector space, so what the model learns about one word generalizes to words with similar embeddings. Such embeddings can be learned with techniques like Word2Vec, GloVe, and FastText.

For example, the embeddings for ‘king’ and ‘queen’ will be closer together than the embeddings for ‘king’ and ‘table’. This enables the model to capture syntactic and semantic relationships more effectively, leading to improved performance in language tasks.

In essence, word embeddings are a way to provide the model with a richer representation of words, moving beyond simple one-hot encodings and enabling it to understand the nuances of language.
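Closeness in embedding space is usually measured with cosine similarity. The three-dimensional vectors below are made up by hand to mimic the ‘king’/‘queen’/‘table’ example (trained embeddings typically have a few hundred dimensions); the last lines show why one-hot encodings carry no similarity information, since any two distinct one-hot vectors are orthogonal.

```python
import numpy as np

def cosine_similarity(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# Hand-made toy vectors, not output from a trained model.
embeddings = {
    "king":  np.array([0.80, 0.60, 0.10]),
    "queen": np.array([0.75, 0.65, 0.15]),
    "table": np.array([0.10, 0.05, 0.90]),
}
print(cosine_similarity(embeddings["king"], embeddings["queen"]))   # close to 1
print(cosine_similarity(embeddings["king"], embeddings["table"]))   # much smaller

one_hot_king, one_hot_queen = np.eye(3)[0], np.eye(3)[1]
print(cosine_similarity(one_hot_king, one_hot_queen))               # 0.0: no notion of similarity
```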

Q 8. Compare and contrast Word2Vec, GloVe, and FastText.

Word2Vec, GloVe, and FastText are all popular word embedding techniques used in natural language processing to represent words as dense vectors, capturing semantic relationships between them. However, they differ in their approaches:

- Word2Vec: This uses either the Continuous Bag-of-Words (CBOW) or Skip-gram model. CBOW predicts a target word from its context words, while Skip-gram predicts context words from a target word. Word2Vec learns embeddings by optimizing an objective function that maximizes the likelihood of observing the target word given its context (or vice versa). It’s relatively simple to implement and computationally efficient, largely thanks to training approximations such as negative sampling and hierarchical softmax.

- GloVe (Global Vectors): GloVe leverages global word-word co-occurrence counts across the entire corpus. Unlike Word2Vec, which learns from one local context window at a time, GloVe fits word vectors so that their dot products approximate the logarithm of co-occurrence counts, which captures the ratios of co-occurrence probabilities that encode semantic relationships. Depending on the task, it performs comparably to Word2Vec or better.

- FastText: An extension of Word2Vec, FastText considers not only individual words but also their character n-grams. This allows it to handle out-of-vocabulary (OOV) words more effectively by representing them as combinations of their constituent character n-grams. This is particularly beneficial for morphologically rich languages.
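FastText’s subword idea is easy to see by listing the character n-grams of a word. Following the FastText paper, the word is wrapped in `<` and `>` boundary markers and n ranges from 3 to 6; the model then represents a word as the sum of its n-gram vectors (plus a vector for the whole word when it is in the vocabulary). The vector lookup itself is omitted in this sketch.

```python
def char_ngrams(word, min_n=3, max_n=6):
    """FastText-style character n-grams of `word`, including boundary markers."""
    wrapped = f"<{word}>"
    grams = []
    for n in range(min_n, max_n + 1):
        for i in range(len(wrapped) - n + 1):
            grams.append(wrapped[i:i + n])
    return grams

print(char_ngrams("where", 3, 3))   # ['<wh', 'whe', 'her', 'ere', 're>']

# A rarely seen or unseen word still shares many n-grams (and hence learned vectors) with known words.
shared = set(char_ngrams("where")) & set(char_ngrams("somewhere"))
```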

In summary: Word2Vec is simple and fast but might struggle with rare words. GloVe exploits global co-occurrence statistics and performs comparably or better on many benchmarks. FastText handles OOV words well and suits languages with rich morphology. The choice of method often depends on the specific application and dataset characteristics.

Q 9. Explain how positional encodings work in transformers.

Positional encodings in transformers are crucial for providing information about the order of words in a sequence. Unlike recurrent neural networks (RNNs) which process sequences sequentially, transformers process the entire sequence in parallel. Without positional information, the model wouldn’t know which word comes before or after another, leading to inaccurate representations.

Positional encodings are added to the word embeddings before being fed into the transformer’s encoder or decoder layers. There are two main approaches:

- Learned Embeddings: A separate embedding vector is learned for each position in the sequence. This allows the model to learn the most effective positional representations from data.

- Fixed Functions: These use mathematical functions (like sine and cosine waves with different frequencies) to generate positional encodings. They provide a smooth representation of position and can be computed for positions beyond the longest sequence seen during training, although models do not always generalize well to such lengths. The original Transformer uses sine and cosine functions with geometrically increasing wavelengths:

$$PE_{(pos,\,2i)} = \sin\left(\frac{pos}{10000^{2i/d_{\text{model}}}}\right), \qquad PE_{(pos,\,2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{\text{model}}}}\right)$$

where $pos$ is the position, $i$ is the dimension index, and $d_{\text{model}}$ is the embedding dimension.

These encodings are then added to the word embeddings, providing the model with the necessary positional context for processing the sequence.
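The sinusoidal formulas translate directly into a few lines of NumPy. This sketch assumes an even `d_model` and returns a `(max_len, d_model)` table whose rows are added to the token embeddings at the matching positions; the token embeddings here are random stand-ins.

```python
import numpy as np

def sinusoidal_positional_encoding(max_len, d_model):
    """Sine on even dimensions (2i), cosine on odd dimensions (2i+1)."""
    assert d_model % 2 == 0, "this sketch assumes an even d_model"
    positions = np.arange(max_len)[:, None]                  # (max_len, 1)
    two_i = np.arange(0, d_model, 2)[None, :]                # (1, d_model / 2): values of 2i
    angles = positions / np.power(10000.0, two_i / d_model)  # pos / 10000^(2i / d_model)
    pe = np.zeros((max_len, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe

rng = np.random.default_rng(0)
token_embeddings = rng.normal(size=(10, 16))                 # stand-in for 10 embedded tokens
model_input = token_embeddings + sinusoidal_positional_encoding(10, 16)
```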

Q 10. What are some common evaluation metrics for language models?

Evaluating language models depends on the specific task. Common metrics include:

- Perplexity: Measures how well the model predicts a sample. Lower perplexity indicates better performance (explained in more detail in Q 12).

- BLEU (Bilingual Evaluation Understudy): Used for machine translation, comparing the generated translation to one or more reference translations.

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation): Used for text summarization, measuring overlap between generated summaries and reference summaries.

- METEOR (Metric for Evaluation of Translation with Explicit ORdering): Similar to BLEU but considers synonyms and paraphrases.

- Accuracy: For tasks like text classification or part-of-speech tagging.

- F1-score: The harmonic mean of precision and recall, often used in information retrieval and classification tasks.

The choice of metric depends heavily on the application. For example, perplexity is suitable for general language modeling, while BLEU is specific to machine translation.
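BLEU’s core ingredient is clipped (modified) n-gram precision: a candidate n-gram is credited at most as many times as it appears in a single reference, so repeating a common word is not rewarded. This Python sketch computes only that component; full BLEU combines the precisions for n = 1 to 4 with a geometric mean and multiplies by a brevity penalty. The example is adapted from the classic degenerate candidate in the original BLEU paper.

```python
from collections import Counter

def ngrams(tokens, n):
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def modified_precision(candidate, references, n):
    """Clipped n-gram precision of a candidate against one or more references."""
    cand_counts = Counter(ngrams(candidate, n))
    if not cand_counts:
        return 0.0
    max_ref_counts = Counter()
    for ref in references:
        for gram, count in Counter(ngrams(ref, n)).items():
            max_ref_counts[gram] = max(max_ref_counts[gram], count)
    # Each candidate n-gram counts at most as often as it appears in any one reference.
    clipped = sum(min(count, max_ref_counts[gram]) for gram, count in cand_counts.items())
    return clipped / sum(cand_counts.values())

candidate = "the the the the the the the".split()
references = ["the cat is on the mat".split()]
print(modified_precision(candidate, references, 1))   # "the" is clipped to 2 of 7 tokens
```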

Q 11. How do you handle out-of-vocabulary (OOV) words in language modeling?

Out-of-vocabulary (OOV) words are words not present in the model’s vocabulary during training. Several techniques handle OOV words:

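- Unknown Token (UNK): Map rare words in the training data (for example, those below a frequency threshold) to a special UNK token so the model learns a representation for it, then map any OOV word to UNK at inference time. The model can still process the input, but information specific to the OOV words is lost.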

- Character-level Modeling: Represent words as sequences of characters. This allows the model to generate or process words it has never seen before by composing them from known characters.

- Subword Tokenization: Break words into subword units using algorithms such as Byte Pair Encoding (BPE) or WordPiece. This lets the model represent OOV words by combining known subword units, striking a balance between vocabulary size and OOV coverage.

- Word Embeddings with Out-of-Vocabulary Handling: Some embedding techniques, like FastText, inherently address OOV words (as discussed previously).

The best approach depends on factors like the size of the vocabulary, the type of task, and the characteristics of the language.
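Subword tokenization is easiest to understand through BPE’s training loop: start from characters and repeatedly merge the most frequent adjacent pair of symbols. The Python sketch below learns merges from a toy word-frequency dictionary and replays them to segment an unseen word. It simplifies real tokenizers: there is no end-of-word marker or byte-level handling, and ties go to the pair counted first.

```python
from collections import Counter

def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` in `symbols` with the merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return merged

def learn_bpe(word_counts, num_merges):
    """Learn up to num_merges merge rules from a {word: frequency} dictionary."""
    vocab = {tuple(word): freq for word, freq in word_counts.items()}
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break                                   # every word is already a single symbol
        best = max(pairs, key=pairs.get)            # most frequent adjacent pair
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[tuple(merge_pair(symbols, best))] += freq
        vocab = new_vocab
    return merges

def apply_bpe(word, merges):
    """Segment a (possibly unseen) word by replaying the learned merges in order."""
    symbols = list(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return symbols

merges = learn_bpe({"low": 5, "lower": 2, "newest": 6, "widest": 3}, num_merges=10)
print(apply_bpe("lowest", merges))   # an unseen word built from learned subword units
```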

Q 12. Explain the concept of perplexity in language modeling.

Perplexity is a metric used to evaluate language models. Intuitively, it measures how surprised the model is by the test data. A lower perplexity indicates that the model is less surprised, implying better performance.

Formally, perplexity is the inverse probability of the test set, normalized by the number of words. Imagine the model assigning probabilities to each word in a sentence. A low perplexity means the model assigned high probabilities to the words it observed, while a high perplexity suggests the model was unsure about what words would follow. It’s calculated as:

$$\text{Perplexity} = \exp\left(-\frac{1}{N}\sum_{i=1}^{N} \log P(w_i \mid w_1, \ldots, w_{i-1})\right)$$

where $N$ is the total number of words, $w_i$ is the $i$-th word, and $P(w_i \mid w_1, \ldots, w_{i-1})$ is the probability of the $i$-th word given its preceding words. Lower perplexity means the model is better at predicting the sequence.
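Plugging numbers into the formula shows why perplexity is often read as an effective branching factor: a model that assigns probability 1/4 to every observed word has perplexity 4, as if it were choosing uniformly among four options at each step. The per-token probabilities below are made up; in practice they come from the model’s predictions on held-out text.

```python
import math

def perplexity(token_probs):
    """Perplexity from P(w_i | w_1, ..., w_{i-1}) for each token of a held-out sequence."""
    n = len(token_probs)
    avg_neg_log_likelihood = -sum(math.log(p) for p in token_probs) / n
    return math.exp(avg_neg_log_likelihood)

print(perplexity([0.25, 0.25, 0.25, 0.25]))   # uniform over 4 choices: about 4
print(perplexity([0.9, 0.6, 0.8, 0.7]))        # more confident model: lower perplexity
```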

Q 13. Describe different techniques for training large language models efficiently.

Training large language models efficiently is a significant challenge due to their massive size and computational demands. Techniques include:

- Distributed Training: Spreading the work across multiple GPUs or machines by splitting the data, the model, or both (see data and model parallelism below). This significantly reduces training time.

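- Mixed Precision Training: Performing most computation in lower precision (FP16 or BF16) while keeping a full-precision (FP32) copy of the weights, plus loss scaling when using FP16. This reduces memory usage and speeds up training with little or no loss in accuracy.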

- Gradient Accumulation: Accumulating gradients over multiple mini-batches before updating the model’s weights. This allows using larger effective batch sizes without requiring excessive memory.

- Model Parallelism: Distributing different parts of the model across different devices.

- Data Parallelism: Distributing the data across different devices.

- Optimizer Techniques: Utilizing efficient optimizers like AdamW which are well suited for training large models.

- Learning Rate Scheduling: Strategically adjusting the learning rate during training, for example a warmup phase followed by gradual decay, to stabilize early training and improve convergence.

These techniques allow researchers to train significantly larger and more complex models that were previously infeasible.
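Gradient accumulation can be checked numerically. In this NumPy sketch (a toy linear-regression loss, not an LLM), averaging the gradients of four equal-sized micro-batches reproduces the gradient of the full 32-example batch, which is why accumulation gives a larger effective batch without holding it in memory at once. The exact equivalence assumes equal-sized micro-batches and a loss averaged over examples; layers such as batch normalization can make results differ slightly.

```python
import numpy as np

def mse_grad(w, X, y):
    """Gradient of the mean-squared-error loss 0.5 * mean((X @ w - y) ** 2) w.r.t. w."""
    return X.T @ (X @ w - y) / len(y)

rng = np.random.default_rng(0)
X, y = rng.normal(size=(32, 3)), rng.normal(size=32)
w = np.zeros(3)

full_batch_grad = mse_grad(w, X, y)        # what a 32-example batch would give in one pass

accum_steps = 4                            # 4 equally sized micro-batches of 8 examples
accumulated = np.zeros_like(w)
for X_mb, y_mb in zip(np.array_split(X, accum_steps), np.array_split(y, accum_steps)):
    accumulated += mse_grad(w, X_mb, y_mb) / accum_steps   # average the micro-batch gradients

learning_rate = 0.1
w -= learning_rate * accumulated           # a single optimizer step after accumulation
```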

Q 14. What are some common challenges in training and deploying language models?

Training and deploying large language models present several challenges:

- Computational Cost: Training requires significant computational resources (GPUs, memory, and time).

- Data Requirements: Large models demand massive amounts of high-quality training data, often requiring significant effort to collect and clean.

- Overfitting: Large models are prone to overfitting, especially with limited data. Regularization techniques are crucial.

- Bias and Fairness: Models trained on biased data can perpetuate and amplify existing societal biases. Careful consideration of data quality and fairness is essential.

- Energy Consumption: Training and deploying large models can consume significant energy, raising environmental concerns.

- Deployment and Inference: Deploying large models for inference can be resource-intensive, requiring optimized techniques for efficient processing.

- Explainability and Interpretability: Understanding why a model makes a specific prediction can be difficult, especially for large, complex models.

Addressing these challenges requires careful planning, resource management, and a focus on responsible AI development.

Q 15. Explain the difference between generative and discriminative language models.

Generative and discriminative language models differ fundamentally in their approach to language modeling. Think of it like this: a generative model learns the underlying probability distribution of the data – it tries to understand the ‘recipe’ for creating text. A discriminative model, on the other hand, focuses on distinguishing between different classes or categories of text; it learns the ‘differences’ between text types.

A generative model, like GPT-3, aims to generate new text samples that resemble the training data. In classification terms, a generative model learns the joint probability P(x, y), where x is the input and y is the label; a language model like GPT-3 models the probability of the text itself, P(x), usually factored word by word. It’s like a chef learning to cook different dishes: it knows the ingredients and how to combine them to create a delicious meal.

A discriminative model, like many sentiment analysis models, focuses on assigning labels (positive, negative, neutral) to input text. It learns the conditional probability P(y|x). This is more like a food critic – it knows what characteristics define a good or bad dish but doesn’t necessarily know how to cook it.

In short, generative models create, while discriminative models classify.

Q 16. Discuss different techniques for text generation using language models.

Several techniques enable text generation using language models. The most common approaches include:

- Autoregressive models: These models predict the next word in a sequence given the preceding words. Think of it like writing a sentence word by word, where each word is influenced by what came before. GPT-3 and other decoder-only Transformer models work this way. The process often involves sampling from the predicted probability distribution to introduce randomness and creativity.

- Non-autoregressive models: These models generate the entire text sequence in parallel, making them faster than autoregressive models but potentially sacrificing the coherence and quality of the output. They’re like writing the entire sentence at once, potentially missing nuances.

- Beam search: This decoding technique keeps the k highest-scoring partial sequences (k is the beam size) at each step and extends each of them. It tends to find higher-probability text than greedy decoding, which often (though not always) means better quality, but it is computationally more expensive.

- Nucleus sampling (top-p sampling): This approach samples from the smallest set of words whose cumulative probability exceeds a threshold p. It’s a more controlled approach than simple sampling, avoiding unlikely words while still allowing for some randomness.

- Temperature scaling: This adjusts the sharpness of the probability distribution – higher temperature increases randomness, while lower temperature makes the model more deterministic. It’s like adjusting the ‘spice’ of the generated text.

The choice of technique depends on the desired trade-off between speed, quality, and creativity.
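Temperature scaling and nucleus sampling compose naturally: scale the logits, convert them to probabilities, keep the smallest set of top tokens whose cumulative probability reaches p, renormalize, and sample. This NumPy sketch works on a single made-up logit vector; a real decoder would apply the same steps to the model’s output at every generation step, often alongside other filters such as top-k.

```python
import numpy as np

def sample_next_token(logits, rng, temperature=1.0, top_p=1.0):
    """Sample a token id using temperature scaling and nucleus (top-p) filtering."""
    scaled = np.asarray(logits, dtype=float) / temperature   # T < 1 sharpens, T > 1 flattens
    probs = np.exp(scaled - scaled.max())
    probs /= probs.sum()
    order = np.argsort(probs)[::-1]                 # token ids, most probable first
    cumulative = np.cumsum(probs[order])
    # Smallest prefix of the sorted tokens whose cumulative probability reaches top_p.
    cutoff = np.searchsorted(cumulative, top_p) + 1
    nucleus = order[:cutoff]
    nucleus_probs = probs[nucleus] / probs[nucleus].sum()
    return int(rng.choice(nucleus, p=nucleus_probs))

rng = np.random.default_rng(0)
logits = [2.0, 1.5, 0.3, -1.0]                      # made-up scores for a 4-token vocabulary
print([sample_next_token(logits, rng, temperature=0.7, top_p=0.9) for _ in range(10)])
```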

Q 17. How do you address bias in language models?

Addressing bias in language models is crucial for building ethical and fair AI systems. Bias arises because models learn from data, and if that data reflects societal biases, the model will perpetuate them. Here’s a multi-pronged approach:

- Data curation and preprocessing: Carefully examining and cleaning the training data is the first step. This involves identifying and mitigating biased or offensive content. Techniques include data augmentation to increase representation of underrepresented groups and re-weighting samples to balance the dataset.

- Algorithmic mitigation techniques: These methods aim to counteract bias during model training. Examples include adversarial debiasing (training the model alongside an adversary that tries to predict a protected attribute, such as gender, from the model’s representations or outputs, and penalizing the model when the adversary succeeds) and fairness-aware training (incorporating fairness constraints into the loss function).

- Post-processing methods: These techniques modify the model’s output to reduce bias after training. This might involve recalibrating probabilities or applying filters to remove biased expressions.

- Regular evaluation and monitoring: Continuously evaluating the model’s performance on various bias benchmarks is crucial to identify and address emerging bias over time. Tools and metrics are vital for this process.

Addressing bias is an ongoing process; it requires a combination of technical solutions and a critical examination of societal biases.
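The re-weighting idea from the data-curation step can be sketched as inverse-frequency sample weights, so that every group contributes the same total weight to the training loss. The group labels here are hypothetical annotations (for example, which dialect or demographic a text refers to); balancing representation this way is only one mitigation step and does not by itself guarantee a fair model.

```python
from collections import Counter

def inverse_frequency_weights(group_labels):
    """Weight = n / (num_groups * count(group)), so each group's weights sum to n / num_groups."""
    counts = Counter(group_labels)
    n, num_groups = len(group_labels), len(counts)
    return [n / (num_groups * counts[g]) for g in group_labels]

groups = ["group_a", "group_a", "group_a", "group_b"]   # hypothetical labels: 3 vs 1 examples
weights = inverse_frequency_weights(groups)
# A weighted loss would then be sum(w_i * loss_i) / sum(w_i) over the training examples.
```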

Q 18. Explain the concept of transfer learning in NLP.

Transfer learning in NLP leverages knowledge gained from a large pre-trained language model (like BERT or RoBERTa) and applies it to a new, often smaller, downstream task. Imagine you’ve learned to ride a bicycle; now learning to ride a motorcycle is much easier because you already possess the fundamental skills of balance and steering. Similarly, pre-trained models already know a lot about language – vocabulary, grammar, and even some semantic relationships – which can be ‘transferred’ to your specific task.

Instead of training a model from scratch on a small dataset, which can lead to overfitting and poor performance, transfer learning allows you to fine-tune a pre-trained model with a smaller dataset, resulting in faster training and improved performance. This is particularly useful when dealing with limited data for a specific task.

Q 19. How do you fine-tune a pre-trained language model for a specific task?

Fine-tuning a pre-trained language model involves adapting the pre-trained model’s weights to a specific task using a new dataset. Here’s a typical process:

- Choose a pre-trained model: Select a model appropriate for your task (e.g., BERT for classification, GPT for generation).

- Prepare your data: Format your data to match the model’s input requirements. This often involves tokenization and creating input features.

- Add a task-specific layer: Add a new layer or modify existing layers to suit your specific task. For example, add a classification layer for a sentiment analysis task.

- Fine-tune the model: Train the model on your new dataset, typically with a much lower learning rate than was used for pre-training. This reduces the risk of overwriting (catastrophically forgetting) the knowledge learned during pre-training.

- Evaluate and iterate: Evaluate the model’s performance on a held-out test set and adjust hyperparameters (learning rate, batch size, etc.) as needed.

The specific implementation depends on the chosen framework (e.g., Hugging Face Transformers) and the task. Code snippets would vary depending on the framework and the task but generally involve using libraries to load pre-trained weights, modify the model architecture, and train the model using appropriate optimizers and loss functions.
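The steps above can be sketched end to end without any framework. In this NumPy toy, a fixed random embedding table with mean pooling is a hypothetical stand-in for a pre-trained encoder (in practice, a checkpoint such as BERT loaded through a library like Hugging Face Transformers), and a logistic-regression layer is the task-specific head. For brevity only the head is trained while the encoder stays frozen, and evaluation is omitted; full fine-tuning would also update the encoder’s weights with a small learning rate.

```python
import numpy as np

rng = np.random.default_rng(0)

# Step 1 (hypothetical stand-in for a pre-trained encoder): frozen embeddings + mean pooling.
vocab = {"good": 0, "great": 1, "fun": 2, "bad": 3, "awful": 4, "boring": 5}
pretrained_embeddings = rng.normal(size=(len(vocab), 16))

def encode(text):
    ids = [vocab[w] for w in text.split() if w in vocab]
    return pretrained_embeddings[ids].mean(axis=0)

# Step 2: prepare a tiny labelled dataset (1 = positive sentiment) as encoder features.
texts = ["good fun", "great", "good great", "bad", "awful boring", "boring bad"]
labels = np.array([1, 1, 1, 0, 0, 0])
features = np.stack([encode(t) for t in texts])

# Step 3: task-specific head (logistic regression) on top of the encoder.
w, b = np.zeros(features.shape[1]), 0.0

def loss_and_grads(w, b):
    p = 1.0 / (1.0 + np.exp(-(features @ w + b)))          # predicted P(positive)
    eps = 1e-12                                            # avoid log(0)
    loss = -np.mean(labels * np.log(p + eps) + (1 - labels) * np.log(1 - p + eps))
    return loss, features.T @ (p - labels) / len(labels), np.mean(p - labels)

# Step 4: train with a small learning rate (only the head's parameters change here).
learning_rate = 0.1
initial_loss = loss_and_grads(w, b)[0]
for _ in range(200):
    _, grad_w, grad_b = loss_and_grads(w, b)
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b
final_loss = loss_and_grads(w, b)[0]
```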

Q 20. Describe your experience with different NLP libraries (e.g., spaCy, NLTK, Transformers).

I have extensive experience with several NLP libraries, each with its strengths and weaknesses:

- spaCy: I’ve used spaCy extensively for its speed and efficiency in tasks like named entity recognition (NER) and part-of-speech tagging (POS). Its streamlined API makes it easy to integrate into production systems. For example, I used spaCy to build a real-time news summarizer, leveraging its fast NER capabilities.

- NLTK: NLTK provides a comprehensive collection of tools for various NLP tasks. It’s excellent for research and prototyping due to its extensive documentation and community support. I used NLTK to build a sentiment analyzer for social media data during a research project.

- Transformers (Hugging Face): This library simplifies working with transformer-based models like BERT, GPT, and others. Its ease of use for loading, fine-tuning, and deploying these powerful models is unparalleled. I’ve utilized Transformers for numerous projects, ranging from question answering systems to text generation tasks. Recently, I used it to build a chatbot fine-tuned on a specific domain’s technical documentation.

My experience allows me to select the most appropriate library based on the task’s specific needs and constraints – prioritizing speed for production systems and flexibility for research or exploratory tasks.

Q 21. How do you handle noisy or incomplete data in language modeling?

Handling noisy or incomplete data is a critical aspect of real-world language modeling. Several strategies can be employed:

- Data cleaning: This involves removing irrelevant characters, correcting spelling errors, and handling inconsistencies in the data. Techniques include using regular expressions, spell-checking tools, and manual review for particularly complex cases.

- Data imputation: For missing values, various imputation techniques can be used to fill in the gaps. This might involve filling missing words with placeholders, using statistical methods to estimate missing values, or employing more sophisticated techniques based on contextual information.

- Robust model training: Some training techniques make models less sensitive to noise. For instance, dropout discourages the model from relying too heavily on any individual feature or data point.

- Pre-training on noisy data: Pre-training a language model on a large dataset that contains some noise can make it more resistant to noise in the downstream task.

- Using appropriate evaluation metrics: Choose metrics that still reflect real performance despite the data’s quirks. For example, F1-score is often more informative than accuracy when noise or cleaning leaves the classes imbalanced.

The best approach often involves a combination of these techniques, tailored to the specific nature of the noise and the characteristics of the data and task.
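The data-cleaning step often starts with a handful of regular expressions. This Python sketch shows a few common ones; which rules are appropriate is task-dependent (repeated punctuation, for instance, may carry sentiment), so treat the patterns as illustrative rather than a standard pipeline.

```python
import re

def clean_text(text):
    """Basic cleanup for scraped text: drop HTML tags and URLs, tame repeats, fix spacing."""
    text = re.sub(r"<[^>]+>", " ", text)           # strip HTML tags such as <p> or <br/>
    text = re.sub(r"https?://\S+", " ", text)      # remove URLs
    # Cap runs of 4+ identical non-digit characters at three ("!!!!!!" -> "!!!");
    # digits are excluded so numbers like 1000000 are left alone.
    text = re.sub(r"(\D)\1{3,}", r"\1\1\1", text)
    text = re.sub(r"\s+", " ", text)               # collapse runs of whitespace
    return text.strip()

print(clean_text("<p>Great   product!!!!!!</p> see https://example.com"))
```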

Q 22. Explain different methods for data augmentation in NLP.

Data augmentation in NLP involves artificially expanding your training dataset to improve model robustness and generalization. Think of it like showing a child more examples of a cat – pictures, descriptions, even sounds – to better solidify their understanding. This is crucial because large language models are data-hungry beasts; more data generally means better performance. Here are several methods:

- Synonym Replacement: Replacing words with their synonyms. For example, changing “The quick brown fox jumps” to “The rapid brown fox leaps.” This introduces slight variations while usually preserving the meaning.

- Random Insertion: Inserting extra words into the sentence (in EDA, a synonym of another word in the sentence is inserted at a random position). While seemingly disruptive, this can help the model become more resilient to noisy input.

- Random Deletion: Randomly removing words. Like insertion, this stops the model from relying on any single word and pushes it to use the surrounding context.

- Back Translation: Translating a sentence into another language and then back to the original. This can generate slightly different phrasing, adding variety to the training data. For example, translating an English sentence to French, then back to English might produce a grammatically correct but slightly different sentence.

- EDA (Easy Data Augmentation): A package of four simple operations (synonym replacement, random insertion, random swap, and random deletion) applied systematically to create a broader range of augmented data.

The choice of method depends on the specific task and the nature of the data. For instance, synonym replacement is generally safer than random insertion or deletion, as it’s less likely to introduce nonsensical sentences. Careful monitoring of the impact of augmentation on model performance is essential; poorly implemented augmentation can hurt performance rather than help it.
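Three of the EDA operations fit in a few lines of Python. The synonym table is a hypothetical toy stand-in (EDA itself draws synonyms from WordNet and skips stop words), and this sketch replaces every word it has a synonym for, whereas EDA replaces a chosen number of words; a seeded `random.Random` keeps the output reproducible.

```python
import random

# Hypothetical toy synonym table; EDA proper looks synonyms up in WordNet.
SYNONYMS = {"quick": ["rapid", "fast"], "jumps": ["leaps", "hops"]}

def synonym_replacement(words, rng):
    return [rng.choice(SYNONYMS[w]) if w in SYNONYMS else w for w in words]

def random_deletion(words, p, rng):
    """Drop each word with probability p, but never return an empty sentence."""
    kept = [w for w in words if rng.random() > p]
    return kept if kept else [rng.choice(words)]

def random_swap(words, rng):
    """Swap the positions of two randomly chosen words."""
    words = list(words)
    if len(words) >= 2:
        i, j = rng.sample(range(len(words)), 2)
        words[i], words[j] = words[j], words[i]
    return words

rng = random.Random(42)
sentence = "the quick brown fox jumps".split()
for augment in (synonym_replacement, random_swap):
    print(" ".join(augment(sentence, rng)))
print(" ".join(random_deletion(sentence, 0.2, rng)))
```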

Q 23. What are some common regularization techniques used in language modeling?

Regularization techniques prevent overfitting in language models – that is, preventing the model from memorizing the training data rather than learning general patterns. Overfitting leads to poor performance on unseen data. Common methods include:

- Dropout: Randomly zeroing a fraction of neuron activations during training. This forces the network to learn more robust features and prevents reliance on any single neuron.

- Weight Decay (L2 Regularization): Adding a penalty to the loss function based on the squared magnitude of the model’s weights (L1 regularization, which penalizes absolute values and encourages sparsity, is a less common alternative). This encourages smaller weights, which reduces overfitting.

- Early Stopping: Monitoring the model’s performance on a validation set during training and stopping once it stops improving. This prevents the model from continuing to fit the training data after it has stopped generalizing.

- Gradient Clipping: Limiting the magnitude (usually the norm) of the gradients during training. This prevents extremely large updates to the model’s weights, which can destabilize training, especially with exploding gradients. It is primarily a stabilization technique rather than a direct guard against overfitting, but it is commonly used alongside the methods above.

The choice of regularization technique often involves experimentation and depends heavily on the specific architecture of the language model and the characteristics of the dataset. Often, a combination of techniques provides the best results. For instance, using dropout in conjunction with weight decay is a common practice.
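Early stopping usually comes with a “patience” setting: training stops after that many epochs without improvement, and the checkpoint from the best epoch is kept. The Python sketch below applies that rule to a list of made-up validation losses; with patience 3 it stops before the late improvement in the final epoch, which illustrates the trade-off patience controls.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return the best epoch seen before `patience` epochs pass without an improvement
    larger than `min_delta` (training stops there and that checkpoint is restored)."""
    best_loss, best_epoch, epochs_without_improvement = float("inf"), -1, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, epochs_without_improvement = loss, epoch, 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break
    return best_epoch

val_losses = [1.00, 0.80, 0.70, 0.72, 0.75, 0.71, 0.60]   # made-up validation curve
print(early_stopping_epoch(val_losses, patience=3))   # stops after epoch 5, keeps epoch 2
print(early_stopping_epoch(val_losses, patience=5))   # waits long enough to reach epoch 6
```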

Q 24. How do you deploy a language model for production use?

Deploying a language model for production involves several crucial steps. It’s like launching a rocket – careful planning and execution are paramount. First, you need to optimize the model for inference: this often involves techniques like quantization (reducing the precision of model weights) and pruning (removing less important connections) to reduce the model size and improve inference speed. Then, you choose a deployment platform – this could be a cloud service (AWS SageMaker, Google Cloud AI Platform, Azure Machine Learning), a serverless function, or even an embedded system depending on the application. The next step is designing an API to allow access to your model. Think of this as creating a user-friendly interface for external systems to interact with your model. Finally, you need comprehensive monitoring and logging – this ensures you can track performance, detect issues, and react to problems quickly. This whole process needs robust error handling and recovery mechanisms – things are rarely perfect, and you need a plan for when issues arise.
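The quantization step can be illustrated with the simplest scheme, symmetric per-tensor int8 post-training quantization: each weight is stored as an 8-bit integer plus one shared floating-point scale, cutting storage to a quarter of FP32. This NumPy sketch uses random weights as a stand-in; production toolkits typically add refinements such as per-channel scales and calibration data, and the accuracy impact should be measured on the real model.

```python
import numpy as np

def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = np.abs(weights).max()
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    return q.astype(np.float32) * scale

rng = np.random.default_rng(0)
w = rng.normal(size=(256, 256)).astype(np.float32)   # stand-in for one weight matrix
q, scale = quantize_int8(w)
max_error = np.abs(dequantize(q, scale) - w).max()    # rounding error is at most about scale / 2
print(w.nbytes, q.nbytes, max_error)
```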

Q 25. Discuss your experience with model monitoring and maintenance.

Model monitoring and maintenance are crucial for the long-term success of any deployed language model. Think of a car: regular maintenance keeps it running smoothly. For models, this means continuously monitoring metrics like accuracy, latency, and resource consumption. We use dashboards and alerting systems to detect anomalies promptly. For example, a sudden drop in accuracy might indicate data drift (a change over time in the distribution of input data) or a problem with the underlying infrastructure. In such cases, we investigate the root cause and take corrective action, which could mean retraining the model on new data, adjusting hyperparameters, or rolling back to a previous version. Another key aspect is data versioning, which lets us trace which data a model was trained on and recover from unexpected issues. The process also includes tracking fairness metrics to catch biases that might emerge over time.
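One common way to quantify data drift on a single input feature is the population stability index (PSI), which compares the binned distribution of a feature in recent traffic against a reference sample. The sketch below is a hypothetical illustration: the monitored feature (request length in tokens), the bin edges, the sample data, and the 0.25 alert threshold (a widely used rule of thumb, not a standard) are all assumptions. A real system would compute this over sliding windows and feed the result into its alerting.

```python
import bisect
import math


def histogram_proportions(values, edges, eps=1e-6):
    """Share of values in each bin defined by sorted interior edges (len(edges) + 1 bins).

    Empty bins are clamped to eps so PSI never takes log(0) or divides by zero.
    """
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    total = len(values)
    return [max(c / total, eps) for c in counts]


def population_stability_index(reference, current, edges):
    """PSI = sum over bins of (cur - ref) * ln(cur / ref); 0 means identical histograms."""
    ref = histogram_proportions(reference, edges)
    cur = histogram_proportions(current, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Hypothetical monitored feature: input length (in tokens) of requests to the model.
edges = [20, 50, 100, 200]
reference_lengths = [15, 30, 45, 60, 80, 120, 150, 30, 40, 70]  # e.g., a training-time sample
same_lengths = list(reference_lengths)
shifted_lengths = [250, 300, 220, 180, 260, 400, 210, 240, 199, 500]  # much longer inputs

psi_same = population_stability_index(reference_lengths, same_lengths, edges)
psi_shifted = population_stability_index(reference_lengths, shifted_lengths, edges)

# Rule-of-thumb threshold (an assumption): PSI above 0.25 is treated as major drift.
DRIFT_THRESHOLD = 0.25
drift_detected = psi_shifted > DRIFT_THRESHOLD
```

Every PSI term is non-negative (the difference and the log share a sign), so the index only grows as the two histograms diverge. PSI flags that inputs have changed, not that accuracy has dropped, so it complements rather than replaces the accuracy monitoring described above.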

Q 26. Explain the concept of prompt engineering.

Prompt engineering is the art and science of crafting effective prompts for large language models. It’s about understanding how to guide the model to generate the desired output. Think of it like asking a question – a poorly phrased question leads to a poor answer. Similarly, a poorly designed prompt will elicit an unsatisfactory response from the model. Effective prompt engineering involves understanding the model’s strengths and weaknesses, and tailoring the prompt accordingly. Techniques include providing context, specifying the desired format, providing examples, and iteratively refining the prompt based on the model’s output. For instance, instead of simply asking “Write a story,” a better prompt might be: “Write a short science fiction story about a robot exploring a deserted planet, in the style of Isaac Asimov.” This adds specificity and guidance, leading to more focused and relevant results.
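The techniques listed above (context, a format specification, and examples) can be combined mechanically into a few-shot prompt template. This is a minimal, model-agnostic sketch: `build_prompt` is a hypothetical helper, and the sentiment task and its wording are illustrative assumptions. The resulting string would be sent to whatever model API you use, and the template refined iteratively based on the outputs.

```python
def build_prompt(instruction, examples, query, output_format=None):
    """Assemble a few-shot prompt: task context, optional format spec, worked examples, then the query."""
    parts = [instruction.strip()]
    if output_format:
        parts.append(f"Respond in this format: {output_format}")
    for i, (example_input, example_output) in enumerate(examples, start=1):
        parts.append(f"Example {i}:\nInput: {example_input}\nOutput: {example_output}")
    # End with the real input and an open "Output:" slot for the model to complete.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


prompt = build_prompt(
    instruction="Classify the sentiment of each product review as positive or negative.",
    examples=[
        ("The battery lasts all day and the screen is gorgeous.", "positive"),
        ("It stopped working after a week.", "negative"),
    ],
    query="Setup was painless and it runs quietly.",
    output_format="a single lowercase word",
)
print(prompt)
```

Keeping the examples in the same Input/Output shape as the final query is the point of the design: the model sees the pattern twice and is nudged to continue it with a single label.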

Q 27. How do you evaluate the ethical implications of a language model?

Evaluating the ethical implications of a language model requires a multi-faceted approach. We must consider potential biases embedded in the training data – this could lead to discriminatory or unfair outputs. For instance, a model trained on biased data might perpetuate stereotypes about certain groups. We also assess the potential for misuse – could the model be used to generate harmful content, spread misinformation, or be exploited for malicious purposes? We analyze the model’s transparency and explainability: understanding how the model arrives at its conclusions is crucial for identifying and mitigating biases. Furthermore, we consider the environmental impact of training and deploying such resource-intensive models. Addressing these concerns requires careful consideration of the data used, the model’s architecture, and its deployment strategy. We often involve ethicists and other stakeholders in this process to obtain diverse perspectives and ensure responsible development and deployment.

Q 28. Describe your experience with different cloud platforms for NLP (e.g., AWS, Google Cloud, Azure).

I have extensive experience with various cloud platforms for NLP, including AWS, Google Cloud, and Azure. Each platform offers a unique set of strengths. AWS offers a comprehensive suite of services for NLP, including SageMaker for model training and deployment, Comprehend for natural language understanding tasks, and Transcribe for speech-to-text. Google Cloud provides similar capabilities with Vertex AI, Natural Language API, and Speech-to-Text. Azure offers Azure Machine Learning and Azure AI services (formerly Cognitive Services), including the Language and Speech services. My experience involves selecting the optimal platform based on specific project requirements, considering factors such as cost, scalability, existing infrastructure, and the specific features offered by each platform. For example, if we require a specific pre-trained model readily available on a particular platform, that would heavily influence our decision. The choice often involves a careful cost-benefit analysis and also depends on our team's familiarity and comfort level with a given platform's tools and ecosystem.

Key Topics to Learn for Language Modeling Interview

- Fundamentals: N-grams, Markov models, smoothing techniques, and evaluation metrics (perplexity for language models themselves; BLEU for generation tasks such as machine translation).

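- Statistical Language Models: Understanding language modeling as estimating the probability of a word sequence, factored by the chain rule into next-word probabilities, and the statistical methods used to train and improve such models (e.g., maximum-likelihood estimation from counts, backoff, and interpolation).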

- Neural Language Models: Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, Gated Recurrent Units (GRUs), Transformers (Attention mechanisms), and their applications in various NLP tasks.

- Practical Applications: Machine Translation, Text Summarization, Chatbots, Speech Recognition, Text Generation, and Sentiment Analysis. Be prepared to discuss your experience with specific applications and how language models contribute to their success.

- Model Training and Optimization: Data preprocessing, feature engineering, model selection, hyperparameter tuning, and evaluation strategies. Understanding concepts like backpropagation and gradient descent is crucial.

- Advanced Topics: Transfer learning, fine-tuning pre-trained models, handling imbalanced datasets, and addressing ethical considerations in language model deployment.

- Problem-Solving: Practice designing and implementing solutions to language modeling challenges. Be ready to discuss your approach to problem-solving and your ability to debug and optimize models.
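As a concrete anchor for the fundamentals above (n-grams, smoothing, and perplexity), here is a toy bigram model with add-one (Laplace) smoothing, evaluated by perplexity. It is a minimal sketch under simplifying assumptions: pre-tokenized sentences, a closed vocabulary with no handling of unknown words, and Laplace smoothing chosen for simplicity over better-performing methods such as Kneser-Ney. The class name `BigramLM` and the tiny corpus are hypothetical.

```python
import math
from collections import Counter


class BigramLM:
    """Bigram language model with add-one (Laplace) smoothing."""

    def __init__(self, sentences):
        self.unigrams = Counter()  # how often each token appears as a context
        self.bigrams = Counter()
        for tokens in sentences:
            padded = ["<s>"] + tokens + ["</s>"]
            self.unigrams.update(padded[:-1])
            self.bigrams.update(zip(padded[:-1], padded[1:]))
        # Tokens the model can predict: every training word plus the end marker.
        self.vocab = {w for s in sentences for w in s} | {"</s>"}

    def prob(self, prev, word):
        """P(word | prev) = (count(prev, word) + 1) / (count(prev) + V)."""
        return (self.bigrams[(prev, word)] + 1) / (self.unigrams[prev] + len(self.vocab))

    def perplexity(self, sentences):
        """exp of the average negative log-probability per predicted token."""
        log_prob, n_tokens = 0.0, 0
        for tokens in sentences:
            padded = ["<s>"] + tokens + ["</s>"]
            for prev, word in zip(padded[:-1], padded[1:]):
                log_prob += math.log(self.prob(prev, word))
                n_tokens += 1
        return math.exp(-log_prob / n_tokens)


train = [["the", "cat", "sat"], ["the", "dog", "sat"], ["the", "cat", "ran"]]
lm = BigramLM(train)
seen_ppl = lm.perplexity([["the", "cat", "sat"]])
odd_ppl = lm.perplexity([["sat", "the", "dog"]])
```

Lower perplexity means the model finds the text less surprising: the sentence seen in training scores lower than the scrambled one. Adding one to every count guarantees that unseen bigrams such as ("sat", "the") still get nonzero probability, so perplexity stays finite.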
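For the attention mechanism listed under Neural Language Models, here is a single-head, unbatched sketch of scaled dot-product attention, softmax(QK^T / sqrt(d_k))V, written in plain Python for readability. It assumes the query, key, and value vectors are already given, omitting the learned projections, causal masking, and multiple heads a real Transformer layer uses; the function names and sample vectors are illustrative.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """softmax(Q K^T / sqrt(d_k)) V for lists of vectors (one row per token)."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output row is the attention-weighted average of the value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
out = scaled_dot_product_attention(Q, K, V)
```

Because each output row is a weighted average of all value vectors, every token can draw on information from any position in one step, which is what lets Transformers capture the long-range dependencies that n-gram models miss. The sqrt(d_k) scaling keeps dot products from growing with dimension and saturating the softmax.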

Next Steps

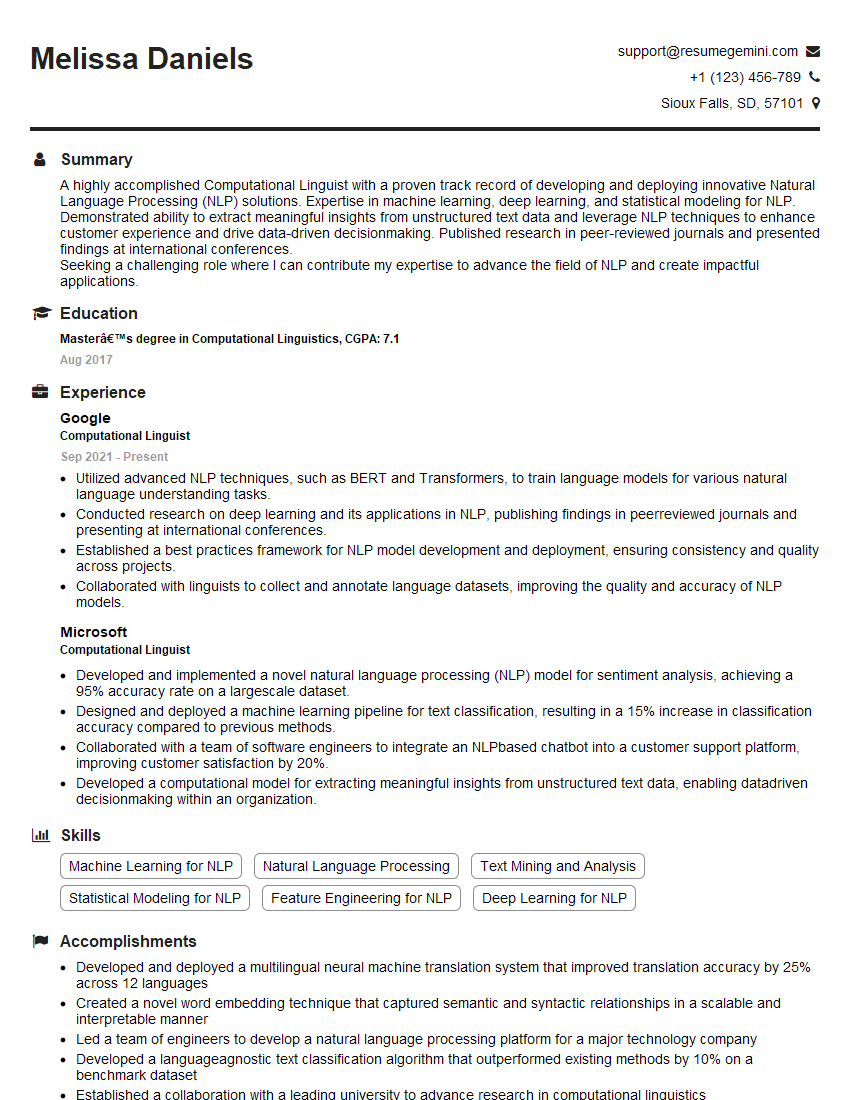

Mastering Language Modeling opens doors to exciting and impactful careers in cutting-edge fields like Artificial Intelligence and Natural Language Processing. To maximize your job prospects, creating a strong, ATS-friendly resume is essential. ResumeGemini is a trusted resource that can help you build a professional resume tailored to showcase your skills and experience in Language Modeling. Examples of resumes specifically designed for Language Modeling roles are available to help guide you. Invest the time to create a compelling resume – it’s your first impression on potential employers.
