The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to 3D Graphics interview questions is your ultimate resource, providing key insights and tips to help you ace your responses and stand out as a top candidate.

Questions Asked in 3D Graphics Interview

Q 1. Explain the difference between rasterization and ray tracing.

Rasterization and ray tracing are two fundamentally different approaches to rendering 3D scenes. Think of it like this: rasterization is like painting a picture, while ray tracing is like meticulously tracing every light ray.

Rasterization works by projecting 3D polygons onto a 2D screen, filling in each pixel based on the polygon’s color and properties. It’s highly efficient for real-time rendering, such as in video games, because it can leverage specialized hardware (GPUs) designed for parallel processing. However, it struggles with accurate reflections, refractions, and global illumination effects.

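Ray tracing, on the other hand, simulates light transport by tracing rays from the camera into the scene, the reverse of the direction light actually travels. At each intersection it can spawn further rays toward light sources (for shadows) or in reflected and refracted directions, and it combines the results to determine the pixel’s color. This allows for incredibly realistic reflections, refractions, and shadows, but it’s computationally expensive, so it has traditionally been reserved for offline work such as film and architectural visualization, where photorealism matters more than speed. Modern GPUs with dedicated ray-tracing hardware now make hybrid real-time use possible, typically combining rasterization with ray-traced reflections or shadows.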

In essence, rasterization prioritizes speed and efficiency, while ray tracing prioritizes visual accuracy and realism.
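The heart of a ray tracer is the ray-object intersection test, run at least once per pixel and again for every shadow, reflection, or refraction ray. A minimal Python sketch for a sphere, assuming a normalized ray direction; `intersect_sphere` is a hypothetical helper, not any renderer’s API:

```python
import math


def intersect_sphere(origin, direction, center, radius):
    """Return the nearest positive hit distance t along the ray, or None on a miss.

    Solves |origin + t*direction - center|^2 = radius^2 for t, assuming
    `direction` is normalized so the quadratic's leading coefficient is 1.
    """
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))      # half the linear coefficient
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None                                     # the ray misses the sphere
    sqrt_disc = math.sqrt(disc)
    for t in (-b - sqrt_disc, -b + sqrt_disc):          # nearer root first
        if t > 1e-6:
            return t
    return None                                         # sphere is behind the ray origin


# A primary ray from a camera at the origin, looking down +z.
print(intersect_sphere((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.0, 0.0, 5.0), 1.0))  # 4.0
```

A rasterizer never asks this question: it projects each triangle onto the screen and fills the pixels it covers, which is why it is so much cheaper per frame.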

Q 2. Describe your experience with different 3D modeling software (e.g., Maya, Blender, 3ds Max).

I have extensive experience with several industry-standard 3D modeling software packages. My expertise in Maya spans a decade, where I’ve utilized its powerful animation and rigging tools for character animation projects, including creating realistic facial expressions and intricate body mechanics. I’ve also worked extensively in Blender, leveraging its open-source nature and versatility for tasks ranging from hard-surface modeling of vehicles to creating stylized environments. This includes experience with its node-based material system for creating complex shaders. Finally, I’ve used 3ds Max for architectural visualization projects, utilizing its modeling and rendering capabilities to produce high-quality presentations for clients. In each software, I’ve developed a strong understanding of workflow optimization, focusing on efficient modeling techniques to ensure smooth and fast rendering.

Q 3. What are the advantages and disadvantages of using different polygon meshes (e.g., triangles, quads)?

The choice between triangle and quad polygons significantly impacts the efficiency and quality of your 3D model.

- Triangles: Triangles are the most fundamental polygon type and the primitive GPUs actually rasterize. A triangle is always planar and can never self-intersect, so it renders unambiguously even after deformation moves its vertices. However, meshes authored directly in triangles are harder to edit and subdivide cleanly, because triangles break up the continuous edge loops that modeling and rigging rely on.

- Quads: Quads (four-sided polygons) are generally preferred for modeling because they form continuous edge loops, subdivide cleanly into smooth surfaces, and lend themselves well to UV mapping, creating less distortion in textures. However, a quad can become non-planar, and since every quad is ultimately split into two triangles, a non-planar quad renders differently depending on which diagonal is chosen. Careful modeling techniques are necessary to ensure quad meshes remain clean.

The optimal choice depends on the stage of the pipeline. For authoring, especially characters that will be animated, clean quad topology is usually preferred because edge loops deform and subdivide predictably. For the final real-time mesh, everything is triangulated anyway, and triangulating deliberately (rather than leaving it to the exporter) avoids surprises from non-planar quads.
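Because GPUs rasterize triangles, every quad is eventually split along one of its two diagonals. This Python sketch (helper names are illustrative) checks whether a quad is planar and shows both possible splits; for a non-planar quad the two splits produce different surfaces:

```python
def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def is_planar(quad, tol=1e-6):
    """True if the fourth corner lies on the plane through the first three."""
    p0, p1, p2, p3 = quad
    normal = cross(sub(p1, p0), sub(p2, p0))
    return abs(dot(normal, sub(p3, p0))) <= tol


def triangulate(quad, diagonal=0):
    """Split corners (a, b, c, d) along a-c (diagonal=0) or b-d (diagonal=1),
    keeping the original winding order."""
    a, b, c, d = quad
    if diagonal == 0:
        return [(a, b, c), (a, c, d)]
    return [(a, b, d), (b, c, d)]


flat = ((0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0))
bent = ((0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0.5))   # one corner lifted
print(is_planar(flat), is_planar(bent))  # True False
```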

Q 4. Explain the process of creating a realistic skin shader.

Creating a realistic skin shader requires a multi-layered approach, combining several techniques to mimic the complex properties of human skin.

The process typically involves:

- Subsurface Scattering (SSS): Skin’s translucency is key. SSS simulates light scattering beneath the surface, providing a lifelike appearance. This is often achieved with specialized shaders or by using techniques like diffusion profiles.

- Normal Maps and Displacement Maps: These add surface detail without increasing polygon count. Normal maps simulate fine details like pores and wrinkles, while displacement maps subtly deform the mesh surface to give it more depth.

- Diffuse Color Map: This provides the base skin tone and variations. It can include details like freckles and other skin imperfections.

- Specular Maps: These control the reflective properties of the skin. Skin isn’t perfectly reflective, so specular maps need to be carefully adjusted to mimic its subtle shine.

- Multiple Layers and Blending: Advanced skin shaders often combine multiple layers—for example, a base layer, a subsurface scattering layer, and a layer for imperfections. These layers are often blended to create the most realistic effect.

Finally, it is crucial to incorporate realistic variations and imperfections to avoid a plasticky appearance. This includes things like skin redness, blemishes, and overall unevenness. The final shader requires precise parameter tuning to achieve photorealism.
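A cheap real-time stand-in for subsurface scattering is wrap lighting, which lets diffuse light reach slightly past the point where N·L drops to zero. This Python sketch shows that approximation, not a diffusion-profile SSS implementation; the per-channel wrap values are hypothetical and only illustrate red light scattering furthest, as it does in real skin:

```python
def wrap_diffuse(n_dot_l, wrap=0.5):
    """Wrap lighting: (N.L + wrap) / (1 + wrap), clamped at zero.

    wrap = 0 gives plain Lambertian diffuse, max(0, N.L). Larger values let
    light reach past the terminator (N.L < 0), softening the light-to-shadow
    transition the way scattering beneath the skin does.
    """
    return max(0.0, (n_dot_l + wrap) / (1.0 + wrap))


def skin_diffuse(n_dot_l, albedo=(0.8, 0.6, 0.5), wrap_rgb=(0.6, 0.3, 0.2)):
    """Apply a separate (hypothetical) wrap amount per color channel.

    Red gets the widest wrap, so the shadow edge picks up a reddish tint.
    """
    return tuple(a * wrap_diffuse(n_dot_l, w) for a, w in zip(albedo, wrap_rgb))


print(skin_diffuse(0.0))  # at the terminator, the red channel survives the most
```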

Q 5. How do you optimize a 3D model for real-time rendering?

Optimizing a 3D model for real-time rendering focuses on reducing the computational load without noticeably sacrificing visual fidelity. Key strategies include:

- Polygon Reduction: Reducing the number of polygons is crucial. This can involve decimation, automatic simplification, or authoring level of detail (LOD) meshes so that distant objects use simpler versions. The goal is to maintain visual quality at every viewing distance.

- Texture Optimization: Use appropriately sized, compressed textures. Larger textures take up more memory and bandwidth. Consider tiling detail textures (including detail normal maps) to add fine surface detail without raising the resolution of the base textures.

- Draw Call Optimization: Minimize the number of draw calls (individual rendering commands) by combining meshes with similar materials or using techniques like instancing.

- Material Optimization: Use shaders suited to your rendering pipeline. Avoid overly complex shaders: reduce texture samples and expensive per-pixel math, and move calculations to the vertex shader or precompute them where the visual difference is acceptable.

- Mesh Simplification: Techniques like edge collapsing and vertex clustering can reduce polygon count while preserving the model’s shape.

The specific optimization strategies will depend on the target platform (PC, mobile, console) and the rendering engine.
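Vertex clustering is the simplest of these simplification methods to sketch: snap vertices to a uniform grid, merge everything in a cell into one averaged vertex, and drop triangles that collapse. A Python sketch with an illustrative function name; edge-collapse simplifiers usually produce better results by ranking collapses with quadric error metrics:

```python
def cluster_simplify(vertices, triangles, cell_size):
    """Vertex clustering on a uniform grid.

    vertices: list of (x, y, z); triangles: list of (i, j, k) index triples.
    Returns (new_vertices, new_triangles). Each grid cell's vertices are merged
    into their average; triangles with two or more corners in one cell are dropped.
    """
    cell_to_new = {}   # grid cell -> index of the merged vertex
    sums = []          # running [x, y, z, count] per merged vertex
    remap = []         # old vertex index -> merged vertex index
    for x, y, z in vertices:
        key = (int(x // cell_size), int(y // cell_size), int(z // cell_size))
        if key not in cell_to_new:
            cell_to_new[key] = len(sums)
            sums.append([0.0, 0.0, 0.0, 0])
        idx = cell_to_new[key]
        s = sums[idx]
        s[0] += x
        s[1] += y
        s[2] += z
        s[3] += 1
        remap.append(idx)

    new_vertices = [(s[0] / s[3], s[1] / s[3], s[2] / s[3]) for s in sums]
    new_triangles = []
    for i, j, k in triangles:
        a, b, c = remap[i], remap[j], remap[k]
        if a != b and b != c and a != c:      # skip degenerate (collapsed) triangles
            new_triangles.append((a, b, c))
    return new_vertices, new_triangles


verts = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (1.5, 0.0, 0.0), (0.0, 1.5, 0.0)]
tris = [(0, 1, 2), (0, 2, 3)]
print(cluster_simplify(verts, tris, cell_size=1.0))
```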

Q 6. Describe your experience with normal mapping and other texture mapping techniques.

I have substantial experience with various texture mapping techniques, particularly normal mapping. Normal mapping is a powerful method for adding surface detail without increasing polygon count. It stores per-pixel surface normal vectors, usually in tangent space, in a texture’s RGB channels; the renderer uses them in place of the mesh’s interpolated normals when computing lighting, simulating bumps and irregularities. This is far cheaper than rendering the equivalent high-polygon geometry.

Beyond normal mapping, I’m proficient in other techniques such as:

- Diffuse Mapping: This is the most basic technique, where a color texture is applied directly to the surface of the model.

- Specular Mapping: This affects the reflective properties of the surface, controlling the highlights and shine.

- Ambient Occlusion Mapping (AO): This texture simulates shadows in the crevices of the model, adding depth and realism.

- Displacement Mapping: This technique actually moves the vertices of the mesh based on the texture data. It provides much more pronounced surface detail than normal mapping, including correct silhouettes, but it is computationally more expensive and needs a sufficiently dense (often tessellated) mesh.

- Parallax Mapping: A more advanced technique than normal mapping that samples a height map and offsets the texture coordinates based on the view direction, simulating surface depth.

The choice of technique depends on the desired level of realism and the performance constraints of the project.
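To make the normal-mapping step concrete: a renderer decodes each texel from the [0, 255] range to a unit vector, rotates it from tangent space into world space with the tangent, bitangent, and normal (TBN) basis, and uses it for lighting. A Python sketch assuming an 8-bit, OpenGL-style map (DirectX-style maps flip the green channel); function names are illustrative:

```python
import math


def decode_normal(rgb):
    """Map an 8-bit tangent-space texel from [0, 255] to a unit vector in [-1, 1]^3."""
    n = [c / 255.0 * 2.0 - 1.0 for c in rgb]
    length = math.sqrt(sum(v * v for v in n))
    return tuple(v / length for v in n)


def tangent_to_world(n, tangent, bitangent, normal):
    """world = n.x * T + n.y * B + n.z * N (the TBN basis vectors are in world space)."""
    return tuple(n[0] * t + n[1] * b + n[2] * nn
                 for t, b, nn in zip(tangent, bitangent, normal))


def lambert(n, light_dir):
    return max(0.0, sum(a * b for a, b in zip(n, light_dir)))


flat = decode_normal((128, 128, 255))     # the typical "flat" normal-map color
tilted = decode_normal((255, 128, 128))   # a texel tilted fully toward the tangent
light = (0.0, 0.0, 1.0)
print(lambert(flat, light), lambert(tilted, light))
```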

Q 7. What are the different types of animation techniques, and when would you use each?

There’s a wide range of animation techniques, each with its strengths and applications. Here are a few:

- Keyframe Animation: This is the most fundamental method, where animators manually set key poses at various points in time. The computer interpolates the poses between the keyframes. It’s versatile and widely used but requires significant artist time and skill.

- Motion Capture (MoCap): This involves capturing the movement of actors or objects using specialized cameras and sensors. The data is then used to animate virtual characters or objects, providing realistic movements. It’s excellent for realistic human and animal animation but can be expensive and require post-processing.

- Procedural Animation: This involves using algorithms and code to generate animations. This is beneficial for repetitive tasks, simulations, or creating complex animations that would be difficult or impossible to create manually. Examples include simulating cloth, hair, or particle effects.

- Skeletal Animation: This method uses a rig (a skeleton) to deform a 3D model, allowing for realistic character movement. It’s extensively used in character animation.

- Inverse Kinematics (IK): A technique where you specify where the end of a chain (such as a hand or foot) should be, and a solver computes the joint rotations needed to reach it. This can make animation easier and more intuitive.

The choice depends on the project’s needs: keyframing for stylized control, MoCap for realism, procedural for simulations, and skeletal/IK for character animation.
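The interpolation the computer performs between keyframes can be sketched in Python. This uses linear interpolation for clarity; animation packages typically interpolate with spline curves whose tangents animators can edit, and the function name is illustrative:

```python
from bisect import bisect_right


def sample_keyframes(keys, t):
    """Linearly interpolate a time-sorted list of (time, value) keyframes at time t.

    Before the first key or after the last key, the end values are held.
    """
    times = [k[0] for k in keys]
    if t <= times[0]:
        return keys[0][1]
    if t >= times[-1]:
        return keys[-1][1]
    i = bisect_right(times, t)                 # keys[i-1].time <= t < keys[i].time
    (t0, v0), (t1, v1) = keys[i - 1], keys[i]
    u = (t - t0) / (t1 - t0)                   # 0..1 progress between the two keys
    return v0 + (v1 - v0) * u


keys = [(0, 0.0), (10, 100.0), (20, 50.0)]     # e.g. a joint rotation in degrees
print([sample_keyframes(keys, t) for t in (5, 15)])  # [50.0, 75.0]
```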

Q 8. Explain the concept of skeletal animation and inverse kinematics.

Skeletal animation and inverse kinematics (IK) are fundamental techniques for animating 3D characters realistically. Skeletal animation uses a hierarchical structure of bones (a skeleton) connected by joints to represent the character’s rig. Each vertex of the mesh (the character’s surface) is bound to one or more bones with skinning weights, so when a bone moves or rotates, the mesh deforms with it. Think of it like a puppet: the bones are the armature, and the mesh is the puppet’s cloth.

Inverse kinematics takes this a step further. Instead of directly controlling bone rotations, you specify the position of an end effector (e.g., a hand or foot) and the IK solver calculates the necessary bone rotations to achieve that position. This is particularly useful for creating natural-looking poses and interactions, for example, having a character’s hand naturally grasp an object without manually animating each joint.

Example: In a game, a character needs to reach for a doorknob. With forward kinematics (the standard skeletal animation approach), you’d manually adjust each bone’s rotation in the arm. With IK, you’d just specify the target position (the doorknob), and the IK solver would handle the complex calculations to position the arm correctly.
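For a two-bone chain such as an arm, the doorknob problem has a closed-form solution from the law of cosines. A 2D Python sketch with illustrative function names: it returns one of the two mirror-image elbow solutions, whereas engines typically work in 3D and use a pole vector to choose the elbow direction:

```python
import math


def two_bone_ik(l1, l2, target):
    """Analytic 2D two-bone IK with the shoulder at the origin.

    Returns (shoulder_angle, elbow_angle) in radians; the elbow angle is measured
    relative to the upper bone (0 = straight arm). Unreachable targets are clamped
    so the arm points at the target, fully stretched or fully folded.
    """
    x, y = target
    d = min(max(math.hypot(x, y), abs(l1 - l2)), l1 + l2)
    # Law of cosines: d^2 = l1^2 + l2^2 + 2*l1*l2*cos(elbow).
    cos_elbow = (d * d - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    elbow = math.acos(max(-1.0, min(1.0, cos_elbow)))
    shoulder = math.atan2(y, x) - math.atan2(l2 * math.sin(elbow),
                                             l1 + l2 * math.cos(elbow))
    return shoulder, elbow


def forward_kinematics(l1, l2, shoulder, elbow):
    """Joint positions produced by the given angles (used to check the IK result)."""
    elbow_pos = (l1 * math.cos(shoulder), l1 * math.sin(shoulder))
    hand = (elbow_pos[0] + l2 * math.cos(shoulder + elbow),
            elbow_pos[1] + l2 * math.sin(shoulder + elbow))
    return elbow_pos, hand


angles = two_bone_ik(1.0, 1.0, (1.0, 1.0))         # reach for a "doorknob" at (1, 1)
print(forward_kinematics(1.0, 1.0, *angles)[1])    # hand lands (numerically) on (1, 1)
```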

Q 9. How do you handle UV unwrapping for efficient texture mapping?

UV unwrapping is the process of projecting a 3D model’s surface onto a 2D plane to prepare it for texture mapping. Efficient UV unwrapping aims to minimize distortion, seams, and texture stretching while keeping UV islands (groups of connected polygons) compact and organized. This prevents artifacts and makes the texture appear more natural on the 3D model.

My preferred methods involve using a combination of automated unwrapping tools (found in most 3D modeling packages) and manual adjustments. I typically start with an automatic unwrap, then analyze the result, identifying areas of significant distortion. I then manually adjust those regions to achieve a more even distribution of texture space. Techniques like planar, cylindrical, and spherical mapping are used as initial steps, depending on the model’s shape.

Practical Example: For a character model, I would typically unwrap the body parts separately to minimize distortion. The face, for instance, might require a more careful, manual approach to preserve fine details like wrinkles and pores.
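One common way to check an unwrap is to compare each triangle’s UV area with its 3D area, giving a texel density; islands whose density differs sharply from the rest of the model will look blurrier or sharper. A Python sketch assuming a square texture (helper names are illustrative). It catches area stretching but not shearing, which needs the full UV-to-surface mapping:

```python
import math


def triangle_area_3d(p0, p1, p2):
    u = [b - a for a, b in zip(p0, p1)]
    v = [b - a for a, b in zip(p0, p2)]
    cx = u[1] * v[2] - u[2] * v[1]
    cy = u[2] * v[0] - u[0] * v[2]
    cz = u[0] * v[1] - u[1] * v[0]
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)


def triangle_area_uv(t0, t1, t2):
    return 0.5 * abs((t1[0] - t0[0]) * (t2[1] - t0[1]) - (t2[0] - t0[0]) * (t1[1] - t0[1]))


def texel_density(positions, uvs, texture_size):
    """Texels per world unit (along one axis) for a triangle on a square texture."""
    area_3d = triangle_area_3d(*positions)
    area_texels = triangle_area_uv(*uvs) * texture_size * texture_size
    return math.sqrt(area_texels / area_3d)


tri = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
uv = ((0.0, 0.0), (0.5, 0.0), (0.0, 0.5))
print(texel_density(tri, uv, 1024))  # 512.0
```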

Q 10. What are your preferred methods for lighting a 3D scene?

Lighting a 3D scene is crucial for realism and mood. My approach is usually a layered system combining different lighting techniques. This often starts with ambient lighting to provide a base level of illumination, followed by directional lighting (imitating the sun) for overall scene brightness and shadows, and then adding point lights or spotlights to highlight specific areas and create focal points.

I often employ image-based lighting (IBL) to enhance realism, particularly for environment reflections and subtle ambient lighting. IBL lights the scene with a high-dynamic-range (HDR) environment map, often captured from a real-world location, so that the brightness and color of the surroundings contribute to the lighting.

Example: A nighttime scene might use a dark ambient light, a subtle moonlight directional light, and strategically placed point lights for street lamps and building windows.
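The layered setup above amounts to summing each light’s contribution at a surface point. A scalar Python sketch with hypothetical names, assuming Lambertian surfaces and inverse-square falloff for point lights, and ignoring shadows:

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def shade(position, normal, ambient, directional, points):
    """Sum ambient, directional, and point-light contributions (scalar intensity).

    directional: list of (direction_to_light, intensity), e.g. sun or moonlight.
    points: list of (light_position, intensity) with inverse-square falloff,
    e.g. street lamps.
    """
    total = ambient
    for to_light, intensity in directional:
        total += intensity * max(0.0, dot(normal, normalize(to_light)))
    for light_pos, intensity in points:
        offset = tuple(l - p for l, p in zip(light_pos, position))
        dist_sq = dot(offset, offset)
        total += intensity * max(0.0, dot(normal, normalize(offset))) / dist_sq
    return total


# Hypothetical night scene: dim ambient, faint moonlight, one nearby street lamp.
print(shade((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), 0.02,
            [((0.3, 0.0, 1.0), 0.1)], [((1.0, 0.0, 3.0), 5.0)]))
```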

Q 11. Explain your understanding of global illumination techniques.

Global illumination (GI) simulates the way light bounces around a scene, affecting the overall lighting and appearance. Unlike local lighting, which only considers direct light sources, GI accounts for indirect lighting, creating more realistic shadows, reflections, and color bleeding.

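Several techniques achieve GI. Path tracing is computationally expensive but highly accurate: it uses Monte Carlo sampling to follow many random light paths per pixel through multiple bounces. Photon mapping is a two-pass approach that first traces photons from the light sources and stores where they land in a photon map, then uses that map during rendering to estimate indirect lighting efficiently. Radiosity is an older technique, suited to diffuse lighting in static scenes, that solves for the exchange of light between surface patches.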

Real-world application: GI is essential for creating photorealistic renders, especially in architectural visualization, where accurate lighting is crucial to showcasing a building’s design. A scene rendered without GI might appear flat and unrealistic, lacking the subtle lighting nuances created by light bouncing off surfaces.
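Path tracing rests on Monte Carlo integration: the light arriving at a point is estimated by averaging randomly sampled directions over the hemisphere above it. This Python sketch estimates irradiance at a single point, assuming uniform hemisphere sampling and a caller-supplied radiance function (both illustrative); a path tracer repeats this recursively at every bounce and usually importance-samples to reduce noise:

```python
import math
import random


def estimate_irradiance(radiance, samples, seed=0):
    """Monte Carlo estimate of E = integral over the hemisphere of L(w) * cos(theta) dw.

    Directions are drawn uniformly over the hemisphere around +z (pdf = 1 / (2*pi)),
    so each sample contributes L * cos(theta) / pdf. `radiance` takes a unit
    direction (x, y, z) and returns incoming radiance from that direction.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        cos_theta = rng.random()          # for uniform hemisphere sampling, z is uniform
        sin_theta = math.sqrt(1.0 - cos_theta * cos_theta)
        phi = 2.0 * math.pi * rng.random()
        direction = (sin_theta * math.cos(phi), sin_theta * math.sin(phi), cos_theta)
        total += radiance(direction) * cos_theta * 2.0 * math.pi
    return total / samples


print(estimate_irradiance(lambda d: 1.0, 100000))   # close to pi for a uniform sky
```

For a uniform sky of radiance 1 the exact answer is π, and for radiance equal to cos θ it is 2π/3, so the estimate should approach those values as the sample count grows.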

Q 12. Describe your experience with different rendering pipelines.

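I have experience with both deferred and forward rendering pipelines. Forward rendering is simpler to implement: each object is shaded as it is drawn, evaluating every light that affects it in the same pass. Because the cost grows with the number of objects times the number of lights, it is efficient for scenes with a low number of light sources.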

Deferred rendering, on the other hand, first renders geometry and material properties (depth, normals, albedo, roughness, and so on) into a set of screen-sized buffers called the G-buffer. Lighting is then computed in a separate pass using only those buffers, so each light’s cost depends on the pixels it covers rather than on scene complexity, which makes it highly scalable for scenes with many lights. The trade-offs are higher memory bandwidth and more difficult handling of transparency and MSAA. I’ve also worked with tiled deferred rendering, which divides the screen into tiles and evaluates only the lights that overlap each tile.

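Practical Choice: The choice between forward and deferred rendering depends on the project’s needs. For mobile games with limited resources, forward rendering is often preferred because it avoids the bandwidth cost of a large G-buffer and works naturally with MSAA and transparent objects. For high-end AAA titles with many dynamic lights, deferred rendering is often the better choice.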
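Tiled light culling, the step that makes tiled deferred rendering scale, can be sketched on the CPU: split the screen into tiles and record which lights’ screen-space bounds overlap each tile, so the lighting pass evaluates only those. This Python version assumes each light has already been projected to a screen-space circle (the function name is illustrative); a GPU implementation would run per tile in a compute shader and also cull against each tile’s depth range:

```python
def cull_lights_per_tile(width, height, tile, lights):
    """Assign screen-space light circles to the tiles they overlap.

    lights: list of (x, y, radius) in pixels. Returns {(tx, ty): [light indices]}.
    """
    tiles = {}
    for ty in range((height + tile - 1) // tile):
        for tx in range((width + tile - 1) // tile):
            x0, y0 = tx * tile, ty * tile
            x1, y1 = min(x0 + tile, width), min(y0 + tile, height)
            hits = []
            for index, (lx, ly, radius) in enumerate(lights):
                # Closest point of the tile rectangle to the light's center.
                cx = min(max(lx, x0), x1)
                cy = min(max(ly, y0), y1)
                if (lx - cx) ** 2 + (ly - cy) ** 2 <= radius * radius:
                    hits.append(index)
            tiles[(tx, ty)] = hits
    return tiles


# A 64x64 screen in 32x32 tiles: one small light in a corner, one at the center.
print(cull_lights_per_tile(64, 64, 32, [(10, 10, 5), (32, 32, 4)]))
```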

Q 13. What are the different types of cameras and their uses in 3D graphics?

3D graphics utilize various camera types, each with specific properties and applications:

- Perspective Camera: Simulates the human eye, with objects appearing smaller in the distance. This is the most commonly used camera type for realistic scenes.

- Orthographic Camera: Objects keep the same size regardless of distance. It’s commonly used for technical drawings and isometric views, where maintaining proportions is vital.

- Fisheye Camera: A highly distorted lens providing a wide field of view, often used for special effects or to capture a panoramic view.

Example: A first-person shooter game uses a perspective camera to provide an immersive experience. A CAD program would likely employ an orthographic camera for precise measurements and representations.
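The difference between the two main camera types comes down to whether depth divides the screen position. A Python sketch assuming a camera at the origin looking down +z (OpenGL’s convention looks down -z) and omitting the near/far planes and 4x4 matrices a real engine uses; function names are illustrative:

```python
def project_perspective(point, focal_length=1.0):
    """Pinhole camera: screen position is divided by depth, so far objects shrink."""
    x, y, z = point
    return (focal_length * x / z, focal_length * y / z)


def project_orthographic(point, scale=1.0):
    """Orthographic camera: depth is simply dropped, so size ignores distance."""
    x, y, _ = point
    return (scale * x, scale * y)


near, far = (1.0, 1.0, 2.0), (1.0, 1.0, 4.0)
print(project_perspective(near), project_perspective(far))    # (0.5, 0.5) (0.25, 0.25)
print(project_orthographic(near), project_orthographic(far))  # (1.0, 1.0) (1.0, 1.0)
```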

Q 14. How do you troubleshoot rendering issues and performance bottlenecks?

Troubleshooting rendering issues and performance bottlenecks requires a systematic approach. I start by profiling the application using tools like RenderDoc or NVIDIA Nsight to identify performance hotspots. This helps pinpoint areas consuming excessive resources such as CPU or GPU time.

Common Issues and Solutions:

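- Overdraw: Multiple polygons rendering over the same pixels. Solution: Sort opaque geometry front to back so early-z testing can reject hidden fragments before they are shaded, use occlusion culling, and limit large overlapping transparent surfaces such as particles.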

- Shader Complexity: Complex shaders can reduce performance. Solution: Profile shaders, simplify code, or use optimized shader variants.

- Draw Calls: Excessive draw calls can limit performance. Solution: Batch rendering or use instancing techniques to reduce the number of draw calls.

- Texture Memory Issues: Large or unoptimized textures can cause performance problems. Solution: Use appropriately sized textures, compress textures, or employ mipmapping.

Beyond profiling, I often systematically investigate lighting, materials, and mesh complexity. It’s often a process of elimination, meticulously checking each component to isolate the source of the problem.
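The overdraw and early-z advice can be illustrated with a simplified CPU model: quads are rasterized with a depth test, and a fragment counts as shaded only when it passes. This Python sketch (names are illustrative) ignores real GPU details such as hierarchical-Z and the cases where early-z is disabled, but it shows why sorting opaque geometry front to back pays off:

```python
def count_shaded_fragments(width, height, quads):
    """Rasterize axis-aligned quads with a depth test and count shaded fragments.

    quads: list of (x0, y0, x1, y1, depth) drawn in list order; smaller depth is
    closer. With early-z, a fragment is shaded only if it passes the depth test.
    """
    depth_buffer = [[float("inf")] * width for _ in range(height)]
    shaded = 0
    for x0, y0, x1, y1, depth in quads:
        for y in range(max(0, y0), min(height, y1)):
            for x in range(max(0, x0), min(width, x1)):
                if depth < depth_buffer[y][x]:   # depth test passes
                    depth_buffer[y][x] = depth
                    shaded += 1                  # the pixel shader would run here
    return shaded


layers = [(0, 0, 4, 4, d) for d in (1.0, 2.0, 3.0)]   # three full-screen layers
back_to_front = sorted(layers, key=lambda q: -q[4])
front_to_back = sorted(layers, key=lambda q: q[4])
print(count_shaded_fragments(4, 4, back_to_front))   # 48: every layer gets shaded
print(count_shaded_fragments(4, 4, front_to_back))   # 16: hidden layers rejected
```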

Q 15. Explain your experience with version control systems (e.g., Git) in a 3D pipeline.

Version control is absolutely crucial in any collaborative 3D project. I’ve extensively used Git for managing assets, code, and scene files throughout my career. Think of Git as a time machine for your project – it allows you to track changes, revert to previous versions if needed, and collaborate seamlessly with other artists and developers. In a 3D pipeline, this is especially important because you often have multiple artists working on different parts of a model, textures, animations, etc.

In practice, I use branching strategies like Gitflow to keep features and bug fixes separate from the main development branch. For example, I might create a branch to model a specific character, then merge it into the main branch once it’s complete and reviewed. This isolates work in progress and keeps the main project stable. I also commit regularly with clear, descriptive commit messages, making the project history easy to follow. Git LFS (Large File Storage) is essential for managing large 3D files efficiently, and because binary files such as textures and scene files cannot be merged, its file-locking feature helps prevent two artists from editing the same asset at once.

Q 16. Describe your workflow for creating a 3D character model from concept to final render.

My 3D character modeling workflow is an iterative process that starts with a concept and ends with a final render. It generally follows these steps:

- Concept & Ideation: This phase involves sketching initial ideas, gathering references, and defining the character’s personality, pose, and overall style.

- Blocking: I start by creating a rough base mesh, focusing on the character’s overall proportions and silhouette. This stage isn’t about detail; it’s about establishing the fundamental form. I often use simple primitives like boxes and spheres to begin with.

- Modeling: Once the block-out is approved, I refine the model by adding details, such as muscle definition, clothing folds, and facial features. I use various techniques like edge loops and subdivisions for smooth surfaces. I usually work in ZBrush or Blender depending on the project requirements.

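- Texturing: Once the model has clean topology and UVs (retopologizing a high-resolution sculpt first where needed), this stage involves creating or importing textures to add color, detail, and realism, often including baking high-poly detail into normal maps. I’m proficient in Substance Painter and Mari for creating high-quality textures. I consider the lighting and material properties while texturing.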

- Rigging (if needed): If the character is intended for animation, I create a rig – a skeletal structure that allows for flexible posing and animation.

- Animation (if needed): If required, this stage involves animating the rigged character.

- Lighting & Rendering: Finally, I set up the lighting and render the character using a suitable renderer like Arnold, V-Ray, or Cycles, making sure to optimize settings for performance.

Q 17. What is your experience with game engines (e.g., Unity, Unreal Engine)?

I have significant experience with both Unity and Unreal Engine. My proficiency extends beyond simply importing assets; I understand how to optimize scenes for performance, implement custom shaders, and leverage the engines’ built-in features to create interactive and visually appealing experiences. In Unity, I have worked extensively with its scripting API using C#, creating custom tools and gameplay mechanics. Unreal Engine’s Blueprint visual scripting system has also been very useful for rapid prototyping and complex interactive features. I’ve used both engines for developing both small-scale personal projects and larger, more complex commercial projects.

For instance, in a recent Unity project, I optimized a large environment by implementing level-of-detail (LOD) techniques to reduce the polygon count for distant objects, significantly improving the frame rate.

Q 18. Explain your understanding of shaders and their role in rendering.

Shaders are small programs that run on the GPU and determine how geometry is transformed and how surfaces look in a 3D scene. Vertex shaders transform each vertex into screen space, while fragment (pixel) shaders calculate the color of each pixel based on factors like light sources, surface materials, and camera position. They are fundamental to rendering and allow for creating a wide variety of visual effects.

A simple example is a diffuse (Lambertian) shader, which calculates the color of a surface from the cosine of the angle between the surface normal and the direction to the light. More complex shaders can simulate realistic materials like metals, glass, or skin, incorporating reflections, refractions, subsurface scattering, and more. I’m experienced with writing shaders in HLSL (High-Level Shading Language) and GLSL (OpenGL Shading Language). For example, I’ve written custom shaders to create realistic water effects, stylized cel-shading, and physically based rendering (PBR) materials.
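To show what running once per pixel means, this Python sketch evaluates a Lambertian diffuse shader for every pixel of a small image of a sphere. It is a CPU stand-in for a fragment shader, not HLSL or GLSL, and assumes a normalized light direction; names are illustrative:

```python
import math


def fragment_shader(normal, light_dir, albedo):
    """Lambertian diffuse: color = albedo * max(0, N . L)."""
    n_dot_l = max(0.0, sum(n * l for n, l in zip(normal, light_dir)))
    return tuple(a * n_dot_l for a in albedo)


def render_sphere(size, light_dir, albedo=(1.0, 0.5, 0.2)):
    """Run the 'shader' once per pixel of a size x size image of a unit sphere."""
    image = []
    for j in range(size):
        row = []
        for i in range(size):
            # Map the pixel center to [-1, 1] on the image plane (y up).
            x = (i + 0.5) / size * 2.0 - 1.0
            y = 1.0 - (j + 0.5) / size * 2.0
            r2 = x * x + y * y
            if r2 > 1.0:
                row.append((0.0, 0.0, 0.0))           # background pixel
                continue
            normal = (x, y, math.sqrt(1.0 - r2))      # sphere normal facing the viewer (+z)
            row.append(fragment_shader(normal, light_dir, albedo))
        image.append(row)
    return image


img = render_sphere(9, (0.0, 0.0, 1.0))
print(img[4][4], img[0][0])   # brightest at the center, black background in the corner
```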

Q 19. How do you optimize textures for memory usage and performance?

Texture optimization is critical for performance, especially in real-time applications. There are several strategies I employ:

- Appropriate Texture Resolution: Using the lowest resolution that still provides acceptable visual quality. Higher resolutions mean more VRAM usage and slower rendering.

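- Texture Compression: Using GPU block-compression formats like DXT/BCn (desktop and console), ETC2, or ASTC (mobile) to reduce memory footprint and bandwidth without significantly impacting visual quality. Unlike PNG or JPEG, these formats stay compressed in video memory and are decoded by the GPU during sampling. The choice of format depends on the target platform and hardware capabilities.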

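- Mipmapping: Generating mipmaps (progressively half-resolution versions of the texture) to reduce aliasing and improve texture cache efficiency when rendering objects from a distance. A full mip chain costs about one third more memory than the base level alone.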

- Texture Atlases: Combining multiple smaller textures into a single larger texture to reduce the number of draw calls, which boosts performance.

- Normal Maps & Other Detail Maps: Using normal maps, height maps, and other detail maps to add surface detail without increasing the polygon count of the model.

For example, in a game, a high-resolution texture for a close-up character might be paired with much lower resolution textures for the same character viewed from a distance. Using a texture atlas for UI elements avoids multiple texture binds, optimizing draw calls.
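The memory arithmetic behind the compression and mipmapping advice can be sketched in Python. This assumes a full mip chain down to 1x1 and standard 4x4 block sizes: BC1 (DXT1) stores 8 bytes per block, and BC3 (DXT5) or BC7 store 16. The function name is illustrative:

```python
def mip_chain_bytes(width, height, bytes_per_texel=4, block=None):
    """Total memory for a texture plus its full mip chain.

    Uncompressed: pass bytes_per_texel (RGBA8 = 4).
    Block-compressed: pass block=(block_width, block_height, bytes_per_block),
    e.g. BC1/DXT1 = (4, 4, 8), BC3/DXT5 or BC7 = (4, 4, 16).
    """
    total = 0
    w, h = width, height
    while True:
        if block is None:
            total += w * h * bytes_per_texel
        else:
            bw, bh, bb = block
            # Small mips still occupy at least one whole block.
            total += max(1, (w + bw - 1) // bw) * max(1, (h + bh - 1) // bh) * bb
        if w == 1 and h == 1:
            break
        w, h = max(1, w // 2), max(1, h // 2)
    return total


print(mip_chain_bytes(2048, 2048))                  # 22369620 (RGBA8 with mips)
print(mip_chain_bytes(2048, 2048, block=(4, 4, 8)))  # 2796216 (BC1 with mips)
```

For a 2048x2048 texture, the uncompressed mip chain is about one third larger than the 16 MiB base level, and BC1 brings the total down to roughly an eighth of that.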

Q 20. What is your experience with different shading models (e.g., Phong, Blinn-Phong, Cook-Torrance)?

Shading models define how light interacts with surfaces. I’m familiar with Phong, Blinn-Phong, and Cook-Torrance, each offering a different level of realism and computational cost.

- Phong Shading: A simple model that combines diffuse and specular reflections. It’s computationally inexpensive but can look somewhat unrealistic, especially for highly reflective surfaces.

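- Blinn-Phong Shading: A variant of Phong that compares the surface normal with the half vector between the light and view directions, instead of comparing the view direction with the reflected light vector. It’s still relatively efficient, avoids Phong’s abrupt highlight cutoff when the angle between the view and reflection vectors exceeds 90 degrees, and gives more plausible highlights at grazing angles.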

- Cook-Torrance Shading: A physically based model grounded in microfacet theory, combining a microfacet normal distribution, a shadowing-masking term, and Fresnel reflectance. It’s more computationally expensive but produces highly realistic results, especially for metals and other reflective materials.

The choice of shading model depends on the project’s requirements and performance constraints. Blinn-Phong remains a good compromise for low-end hardware or stylized work, while most modern real-time engines and offline renderers build their PBR materials on Cook-Torrance-style microfacet models, commonly with the GGX distribution.
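The difference between the Phong and Blinn-Phong specular terms is easiest to see side by side. A Python sketch assuming unit vectors that all point away from the surface (function names are illustrative):

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def phong_specular(n, l, v, shininess):
    """Phong: compare the view direction with the mirror reflection of the light."""
    n_dot_l = dot(n, l)
    r = tuple(2.0 * n_dot_l * nc - lc for nc, lc in zip(n, l))   # reflect L about N
    return max(0.0, dot(r, v)) ** shininess


def blinn_phong_specular(n, l, v, shininess):
    """Blinn-Phong: compare the normal with the half vector between L and V."""
    h = normalize(tuple(lc + vc for lc, vc in zip(l, v)))
    return max(0.0, dot(n, h)) ** shininess


n = (0.0, 0.0, 1.0)
grazing = normalize((1.0, 0.0, 0.2))   # light and viewer both near the horizon
print(phong_specular(n, grazing, grazing, 1), blinn_phong_specular(n, grazing, grazing, 1))
```

In that grazing setup Phong’s reflection vector points away from the viewer, so its highlight drops to zero, while Blinn-Phong still returns a small highlight. For a similar highlight size, Blinn-Phong typically needs a noticeably higher exponent than Phong.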

Q 21. Describe your experience with particle systems and their applications.

Particle systems are used to simulate a large number of small elements, such as fire, smoke, rain, snow, or explosions. They are a powerful tool for creating dynamic and visually engaging effects. I have extensive experience implementing and customizing particle systems in various game engines and rendering software.

The key aspects I consider when designing particle systems include particle emission rate, lifetime, velocity, size, color, and various forces. I often use these to create realistic or stylized effects. For instance, I might use a particle system to simulate realistic fire with flickering embers and rising smoke, or a stylized magical effect with glowing particles and trails. I can also use particle systems to create more abstract and artistic effects, pushing the boundaries of what is realistically achievable.
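The parameters listed above (emission rate, lifetime, velocity, forces) map directly onto a minimal CPU emitter. A Python sketch with hypothetical class names and value ranges; engines such as Unity and Unreal provide far more elaborate, often GPU-accelerated versions:

```python
import random
from dataclasses import dataclass


@dataclass
class Particle:
    position: list
    velocity: list
    age: float
    lifetime: float


class Emitter:
    """Minimal particle emitter: spawn at a fixed rate, integrate, expire."""

    def __init__(self, rate, lifetime, gravity=(0.0, -9.8, 0.0), seed=0):
        self.rate = rate            # particles per second
        self.lifetime = lifetime    # average seconds each particle lives
        self.gravity = gravity      # constant acceleration applied to every particle
        self.particles = []
        self._spawn_accumulator = 0.0
        self._rng = random.Random(seed)

    def update(self, dt):
        # Emit: accumulate fractional particles so the rate is frame-rate independent.
        self._spawn_accumulator += self.rate * dt
        while self._spawn_accumulator >= 1.0:
            self._spawn_accumulator -= 1.0
            velocity = [self._rng.uniform(-1.0, 1.0), self._rng.uniform(4.0, 6.0),
                        self._rng.uniform(-1.0, 1.0)]
            lifetime = self.lifetime * self._rng.uniform(0.8, 1.2)   # vary lifespans
            self.particles.append(Particle([0.0, 0.0, 0.0], velocity, 0.0, lifetime))
        # Simulate: explicit Euler integration under gravity.
        for p in self.particles:
            for axis in range(3):
                p.velocity[axis] += self.gravity[axis] * dt
                p.position[axis] += p.velocity[axis] * dt
            p.age += dt
        # Expire: drop particles that have outlived their lifetime.
        self.particles = [p for p in self.particles if p.age < p.lifetime]

    def alpha(self, p):
        """Fade each particle out linearly over its life (e.g. for smoke)."""
        return 1.0 - p.age / p.lifetime


emitter = Emitter(rate=100.0, lifetime=1.0)
for _ in range(120):                # two seconds at 60 updates per second
    emitter.update(1.0 / 60.0)
print(len(emitter.particles))
```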

Q 22. Explain your understanding of different file formats used in 3D graphics (e.g., FBX, OBJ, Alembic).

3D graphics rely on various file formats, each with strengths and weaknesses. Choosing the right one depends on the project’s needs and the software used.

- FBX (Filmbox): A versatile, widely supported format capable of handling animation, materials, and textures. It’s a great choice for interoperability between different 3D packages, like transferring models between Maya and Unreal Engine. Think of it as a universal translator for 3D data.

- OBJ (Wavefront OBJ): A simpler, text-based format that stores geometry (vertex positions, texture coordinates, normals, and faces). It’s easy to read and widely compatible, but it has no support for animation or complex data like shaders, and materials are limited to basic properties stored in a companion .mtl file. Good for basic model exchange where only the shape is essential.

- Alembic (.abc): Specialized for animation and effects caching. It handles high-resolution geometry and complex simulations efficiently. It’s commonly used for exchanging complex character animations or fluid simulations between software packages, often in visual effects pipelines. Imagine it as a time-lapse record of a 3D scene’s movement.

The decision of which format to use involves weighing the need for detailed information against file size and software compatibility. For example, a simple static prop might be exchanged as OBJ, while a VFX shot might rely on Alembic to hand off baked, per-frame geometry such as a cloth or fluid simulation.
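Because OBJ is plain text, a minimal reader fits in a few lines. This Python sketch (the function name is illustrative) handles only positions and faces and is not a complete OBJ parser:

```python
def parse_obj(text):
    """Parse vertex positions and faces from Wavefront OBJ text.

    Handles 'v x y z' lines and 'f' lines in the forms 'f 1 2 3', 'f 1/1 2/2 3/3',
    and 'f 1//1 2//1 3//1'. OBJ indices are 1-based; negative (relative) indices
    and the companion .mtl material file are not handled in this sketch.
    Returns (vertices, faces) with 0-based face indices.
    """
    vertices, faces = [], []
    for line in text.splitlines():
        parts = line.split()
        if not parts or parts[0].startswith("#"):
            continue                               # blank line or comment
        if parts[0] == "v":
            vertices.append(tuple(float(c) for c in parts[1:4]))
        elif parts[0] == "f":
            # Keep only the position index before the first '/'.
            faces.append(tuple(int(p.split("/")[0]) - 1 for p in parts[1:]))
    return vertices, faces


sample = """# a single quad
v 0 0 0
v 1 0 0
v 1 1 0
v 0 1 0
vn 0 0 1
f 1//1 2//1 3//1 4//1
"""
vertices, faces = parse_obj(sample)
print(faces)  # [(0, 1, 2, 3)]
```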

Q 23. How do you approach creating realistic water or fire effects?

Creating realistic water and fire effects requires understanding fluid dynamics and leveraging simulation techniques. It’s not just about visual appearance but also physically plausible behavior.

For water, methods include:

- Particle systems: Simulating water as individual particles interacting with each other and the environment, allowing for realistic splashes, waves, and foam.

- Fluid simulation: Using specialized software or engine features to solve Navier-Stokes equations, capturing intricate water movement. This offers higher fidelity but is computationally expensive.

- Mesh-based techniques: Deforming a water surface mesh based on wave calculations, offering good visual results at a reasonable performance cost.

For fire, similar approaches apply:

- Particle systems: Simulating fire as glowing, semi-transparent particles with varied life spans and movement patterns.

- Volume rendering: Rendering a 3D volume of data representing temperature and density, creating realistic flames with volumetric lighting and shadows.

- GPU-accelerated simulations: Utilizing the power of graphics cards to perform complex calculations in real-time, enhancing realism and performance.

Often, a hybrid approach combining these techniques yields the best results, optimizing for visual fidelity and performance based on the target platform and hardware capabilities.
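The mesh-based water technique can be as simple as displacing a grid’s vertices by a sum of sine waves. A Python sketch with hypothetical wave parameters and function name; production water often uses Gerstner waves (which also move vertices horizontally to sharpen crests) or FFT-based ocean spectra:

```python
import math

GRAVITY = 9.81


def wave_height(x, z, t, waves):
    """Water surface height at (x, z) and time t as a sum of sine waves.

    waves: list of (amplitude, wavelength, direction_angle_radians, phase).
    Angular frequency follows the deep-water dispersion relation w = sqrt(g * k),
    so longer waves travel faster, as on open water.
    """
    height = 0.0
    for amplitude, wavelength, angle, phase in waves:
        k = 2.0 * math.pi / wavelength                       # wavenumber
        omega = math.sqrt(GRAVITY * k)
        along = x * math.cos(angle) + z * math.sin(angle)    # distance along the wave
        height += amplitude * math.sin(k * along - omega * t + phase)
    return height


waves = [(0.5, 10.0, 0.0, 0.0), (0.2, 3.0, 1.0, 0.0)]
# Displace a small grid of vertices for one frame at t = 1.3 seconds.
grid = [[wave_height(i * 0.5, j * 0.5, 1.3, waves) for i in range(8)] for j in range(8)]
print(grid[0][:3])
```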

Q 24. What are some common challenges in 3D graphics and how do you overcome them?

3D graphics present numerous challenges. Some common ones include:

- Performance optimization: Balancing visual fidelity with frame rates and resource usage, especially crucial in real-time applications like games. Solutions include level of detail (LOD) systems, occlusion culling, and efficient shader programming.

- Realism: Achieving photorealistic visuals requires meticulous attention to lighting, materials, and texturing. Physically Based Rendering (PBR) is crucial here.

- Pipeline management: Coordinating workflows between modeling, texturing, rigging, animation, and rendering stages. Using version control and clear communication is vital.

- Debugging: Troubleshooting graphical glitches, performance bottlenecks, or unexpected behavior can be time-consuming. Debuggers, profiling tools, and a methodical approach are essential.

Overcoming these requires a combination of technical skills, problem-solving abilities, and efficient workflow management. Tools like profilers help identify performance bottlenecks, while version control systems ensure collaboration runs smoothly.

Q 25. Describe your experience with creating and optimizing level geometry for games.

Creating and optimizing level geometry for games is crucial for performance and visual appeal. It’s about finding the sweet spot between detail and efficiency.

My approach involves:

- Planning and design: Starting with a clear understanding of the game’s level design and visual style. This guides decisions regarding polygon count, texture resolution, and level layout.

- Modeling efficient geometry: Using optimized primitives and avoiding unnecessary polygons. Techniques like edge loops and quad modeling are key for creating clean and efficient meshes.

- Level of Detail (LOD): Creating multiple versions of the same geometry with varying levels of detail. Faraway objects use low-poly versions, while close-up objects use high-poly versions, improving performance without sacrificing visual quality.

- Texture optimization: Using appropriate texture resolutions and compression formats. This minimizes memory usage and improves loading times.

- Occlusion culling: Skipping geometry hidden behind other objects (alongside frustum culling, which skips geometry outside the camera’s view), significantly reducing rendering load.

For example, in a racing game, distant mountains might use very low-poly models with low-resolution textures, while the track itself would be highly detailed, but optimized for smooth rendering.
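Choosing which LOD to draw is often a simple distance test. A Python sketch with hypothetical thresholds and function name; engines commonly select by projected screen size instead, and add hysteresis so objects don’t flicker between levels at a boundary:

```python
import math


def select_lod(camera_pos, object_pos, thresholds):
    """Pick a level of detail by camera distance.

    thresholds: ascending maximum distances for LOD0, LOD1, ...; anything beyond
    the last threshold uses the lowest-detail level, len(thresholds).
    """
    distance = math.dist(camera_pos, object_pos)
    for level, max_distance in enumerate(thresholds):
        if distance <= max_distance:
            return level
    return len(thresholds)


print(select_lod((0, 0, 0), (50, 0, 0), (20, 100, 500)))  # 1
```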

Q 26. How do you approach collaborating with other artists and developers in a team environment?

Effective teamwork is essential in 3D graphics. My approach emphasizes clear communication, collaboration tools, and a respect for diverse skill sets.

I ensure:

- Regular communication: Frequent meetings, updates, and feedback sessions keep everyone informed and aligned.

- Version control: Using systems like Git to track changes and manage revisions so that multiple artists can work in parallel. Because binary assets can’t be merged, file locking (for example, Git LFS locks) prevents two people from editing the same asset at once.

- Shared file systems and cloud storage: Providing easy access to project files for all team members.

- Clear roles and responsibilities: Defining tasks and responsibilities clearly to avoid duplication of effort and confusion.

- Constructive feedback: Providing and receiving criticism in a positive and helpful manner, fostering a collaborative environment.

I also strive to understand different roles and appreciate the unique contributions of each team member, whether they are modelers, animators, riggers, or programmers. This ensures smooth project delivery and reduces conflicts.

Q 27. Explain your understanding of physically based rendering (PBR).

Physically Based Rendering (PBR) is a rendering approach that simulates how light interacts with materials in the real world. Instead of the arbitrary parameters of older shading models, it uses physically based models to define material properties.

Key aspects of PBR include:

- Energy conservation: A surface can never reflect more light (diffuse plus specular) than it receives; whatever isn't reflected is absorbed or transmitted.

- Microfacet theory: Modeling the surface roughness at a microscopic level, impacting how light is scattered and reflected.

- Specular and diffuse reflections: Accurately simulating the different ways light reflects from a surface, based on its roughness and material properties.

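- Metallic and dielectric materials: Differentiating between metallic surfaces (like gold or silver) and non-metallic surfaces (like wood or plastic). Metals have essentially no diffuse reflection and tint their specular reflections with their color, while dielectrics reflect only a small, untinted fraction of light specularly (typically a few percent at normal incidence).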

- Normal maps and roughness maps: Using texture maps to provide detailed surface information, enhancing realism.
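The energy-conservation rule can be checked numerically. The sketch below integrates a BRDF times the cosine term over the hemisphere of outgoing directions (for a fixed incoming direction); an energy-conserving BRDF must give a value no greater than 1. It shows why the Lambertian diffuse term is albedo divided by pi: without that factor the surface would send out about pi times more light than it receives. The helper names are illustrative, and `unnormalized` is a hypothetical ad hoc lobe used only for comparison.

```python
import math


def directional_albedo(brdf, n_theta: int = 256, n_phi: int = 64) -> float:
    """Integrate brdf(theta, phi) * cos(theta) over the hemisphere of outgoing directions.

    Midpoint rule in spherical coordinates; sin(theta) is the solid-angle Jacobian.
    For a fixed incoming direction, an energy-conserving BRDF must give a value <= 1.
    """
    d_theta = (math.pi / 2) / n_theta
    d_phi = (2 * math.pi) / n_phi
    total = 0.0
    for i in range(n_theta):
        theta = (i + 0.5) * d_theta
        for j in range(n_phi):
            phi = (j + 0.5) * d_phi
            total += brdf(theta, phi) * math.cos(theta) * math.sin(theta)
    return total * d_theta * d_phi


def lambert(albedo: float):
    """Lambertian diffuse BRDF: the constant albedo / pi in every direction."""
    return lambda theta, phi: albedo / math.pi


def unnormalized(albedo: float):
    """Hypothetical ad hoc lobe that forgets the 1/pi factor."""
    return lambda theta, phi: albedo


print(directional_albedo(lambert(0.8)))       # close to 0.8: reflects 80% of the incoming light
print(directional_albedo(unnormalized(0.8)))  # close to 0.8 * pi (about 2.51): more energy out than in
```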

PBR leads to more realistic lighting and materials, making 3D scenes significantly more visually believable. A wooden table, for instance, will reflect and scatter light according to its roughness and wood grain, unlike older methods, which relied on arbitrary parameters for shine and reflectivity.
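To make the microfacet and metallic/dielectric points concrete, here is a grayscale sketch of one widely used real-time PBR model: a Cook-Torrance specular term built from the GGX (Trowbridge-Reitz) distribution, Schlick's Fresnel approximation, and a Smith-style geometry term, plus a Lambertian diffuse term. The conventions (alpha = roughness squared, k = alpha / 2, a 4% base reflectance for dielectrics, and blending toward the base color by `metallic`) are common engine choices assumed here rather than a single standard, and the function names are illustrative.

```python
import math

Vec3 = tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2])
    return (v[0] / length, v[1] / length, v[2] / length)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ggx_distribution(n_dot_h: float, alpha: float) -> float:
    """GGX / Trowbridge-Reitz normal distribution function D."""
    a2 = alpha * alpha
    denom = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * denom * denom)


def fresnel_schlick(cos_theta: float, f0: float) -> float:
    """Schlick's approximation of Fresnel reflectance."""
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


def smith_schlick_ggx(n_dot_v: float, n_dot_l: float, alpha: float) -> float:
    """Smith shadowing-masking term using the Schlick-GGX form with k = alpha / 2 (assumed)."""
    k = alpha / 2.0
    g_v = n_dot_v / (n_dot_v * (1.0 - k) + k)
    g_l = n_dot_l / (n_dot_l * (1.0 - k) + k)
    return g_v * g_l


def shade(n: Vec3, v: Vec3, l: Vec3, base_color: float, metallic: float, roughness: float) -> float:
    """BRDF times cos(theta_l): reflected radiance per unit incoming radiance (grayscale)."""
    n, v, l = normalize(n), normalize(v), normalize(l)
    n_dot_l = dot(n, l)
    n_dot_v = dot(n, v)
    if n_dot_l <= 0.0 or n_dot_v <= 0.0:
        return 0.0  # light or viewer below the surface
    h = normalize((v[0] + l[0], v[1] + l[1], v[2] + l[2]))  # half vector
    n_dot_h = max(dot(n, h), 0.0)
    v_dot_h = max(dot(v, h), 0.0)

    alpha = roughness * roughness                          # perceptual roughness remap
    f0 = 0.04 * (1.0 - metallic) + base_color * metallic  # dielectrics ~4%, metals use base color
    f = fresnel_schlick(v_dot_h, f0)
    d = ggx_distribution(n_dot_h, alpha)
    g = smith_schlick_ggx(n_dot_v, n_dot_l, alpha)
    specular = d * g * f / (4.0 * n_dot_v * n_dot_l)

    # Energy split: light reflected specularly (F) is not available for diffuse,
    # and metals get no diffuse term at all.
    k_d = (1.0 - f) * (1.0 - metallic)
    diffuse = k_d * base_color / math.pi
    return (diffuse + specular) * n_dot_l


n = (0.0, 0.0, 1.0)
l = (0.0, 0.5, 1.0)   # direction toward the light
v = (0.0, -0.5, 1.0)  # viewer in the mirror direction
for r in (0.2, 0.8):
    print(r, shade(n, v, l, base_color=0.5, metallic=0.0, roughness=r))
```

Viewed in the mirror direction, the smoother surface (roughness 0.2) returns a much brighter highlight than the rough one (0.8), because the GGX distribution concentrates the microfacet normals around the surface normal as alpha shrinks.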

Q 28. What are your future goals and aspirations in the field of 3D graphics?

My future goals involve pushing the boundaries of real-time rendering and exploring the intersection of 3D graphics with other fields like AI and virtual reality.

Specifically, I aim to:

- Master advanced rendering techniques: Deepen my understanding of path tracing, ray tracing, and global illumination to create even more realistic scenes.

- Develop expertise in AI-assisted tools: Explore how AI can automate tasks, improve workflow, and enhance the creative process in 3D graphics.

- Contribute to the development of innovative VR/AR experiences: Leverage my skills to create immersive and engaging virtual and augmented reality applications.

- Stay current with industry trends: Continuously learn and adapt to the ever-evolving landscape of 3D graphics technology.

Ultimately, I want to contribute to the creation of visually stunning and technically impressive 3D experiences that captivate audiences and push the boundaries of what’s possible in the field.

Key Topics to Learn for Your 3D Graphics Interview

- Mathematics for 3D Graphics: Understand linear algebra (vectors, matrices, transformations), trigonometry, and calculus as they relate to 3D space manipulation. Practical application includes modeling object movement, camera control, and lighting calculations.

- 3D Modeling and Pipelines: Familiarize yourself with common 3D modeling techniques, the rendering pipeline (vertex and fragment shader stages), and different rendering techniques (rasterization, ray tracing). Practical application includes creating and optimizing game assets or visual effects.

- Shader Programming (GLSL, HLSL): Master the fundamentals of shader programming, including writing efficient and optimized shaders for various effects (lighting, texturing, shadows). Practical application includes creating visually stunning and performant graphics.

- Real-time Rendering Techniques: Explore techniques like deferred shading, shadow mapping, and screen-space effects. Practical application includes creating high-fidelity visuals in real-time applications like games and simulations.

- Game Engines and Frameworks (Unity, Unreal Engine): Gain hands-on experience with popular game engines, understanding their architecture and how to leverage their features for efficient development. Practical application includes building prototypes and demonstrating your ability to work within industry-standard tools.

- Data Structures and Algorithms: Brush up on relevant data structures (like octrees and kd-trees for spatial partitioning) and algorithms for efficient scene traversal and collision detection. Practical application includes optimizing performance in complex 3D scenes.

- Optimization Techniques: Learn about techniques to optimize performance in 3D graphics applications, including level of detail (LOD), culling, and texture compression. This is critical for interview success and real-world development.
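As a small illustration of the linear-algebra topic (transformations for object movement and camera control), the sketch below builds 4x4 matrices in homogeneous coordinates and shows that the order of composition matters. It assumes the column-vector convention, where the rightmost matrix is applied first; some APIs and engines use row vectors, which reverses the order. The helper names are illustrative.

```python
import math

Matrix = list[list[float]]


def mat_mul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 4x4 matrices (column-vector convention: a is applied after b)."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def translation(tx: float, ty: float, tz: float) -> Matrix:
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]


def rotation_y(degrees: float) -> Matrix:
    """Right-handed rotation about the +Y axis."""
    c, s = math.cos(math.radians(degrees)), math.sin(math.radians(degrees))
    return [[c, 0, s, 0], [0, 1, 0, 0], [-s, 0, c, 0], [0, 0, 0, 1]]


def transform_point(m: Matrix, p: tuple[float, float, float]) -> tuple[float, float, float]:
    """Apply m to a point using homogeneous coordinates (w = 1)."""
    v = (p[0], p[1], p[2], 1.0)
    out = [sum(m[i][k] * v[k] for k in range(4)) for i in range(4)]
    # Affine transforms keep w == 1; dividing anyway also handles projections.
    return (out[0] / out[3], out[1] / out[3], out[2] / out[3])


# With column vectors, the rightmost matrix is applied first.
rotate_then_move = mat_mul(translation(5, 0, 0), rotation_y(90))
move_then_rotate = mat_mul(rotation_y(90), translation(5, 0, 0))

p = (1.0, 0.0, 0.0)
print([round(c, 6) for c in transform_point(rotate_then_move, p)])  # [5.0, 0.0, -1.0]
print([round(c, 6) for c in transform_point(move_then_rotate, p)])  # [0.0, 0.0, -6.0]
```

The same point ends up in two different places depending on the order, which is exactly the kind of detail interviewers probe when they ask about model, view, and projection matrices.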

Next Steps

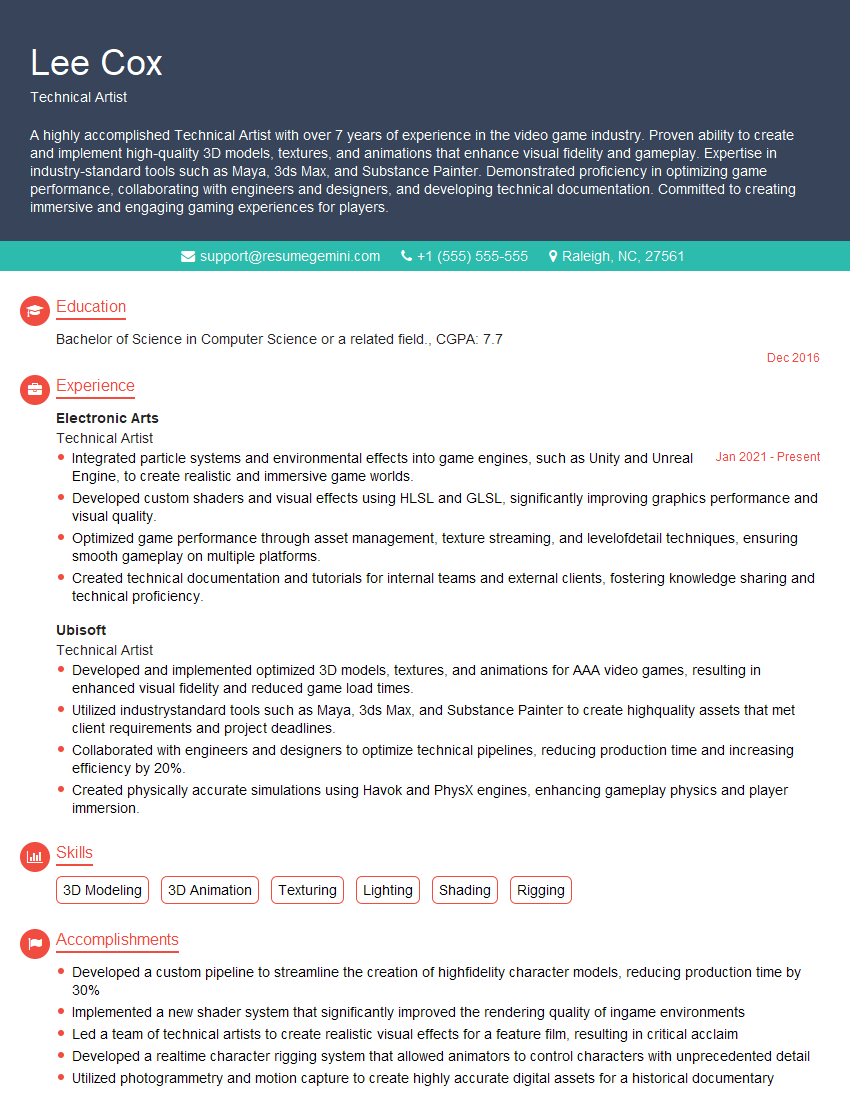

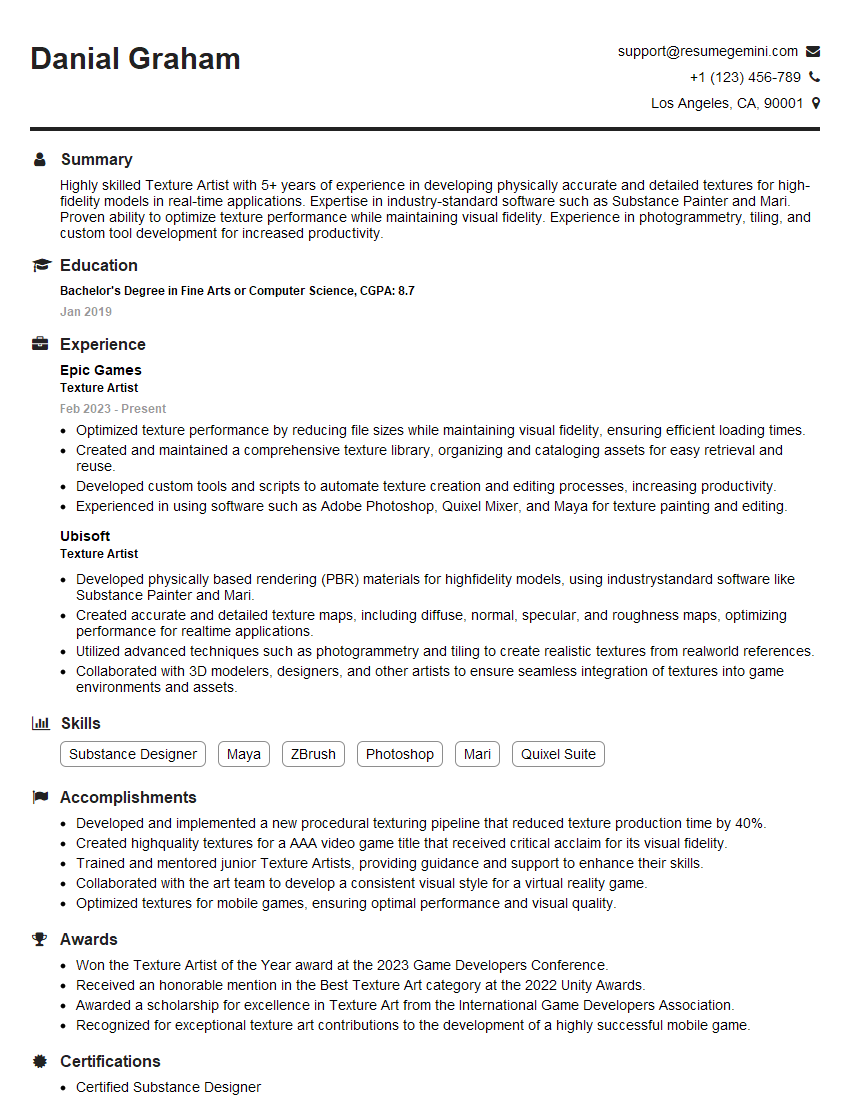

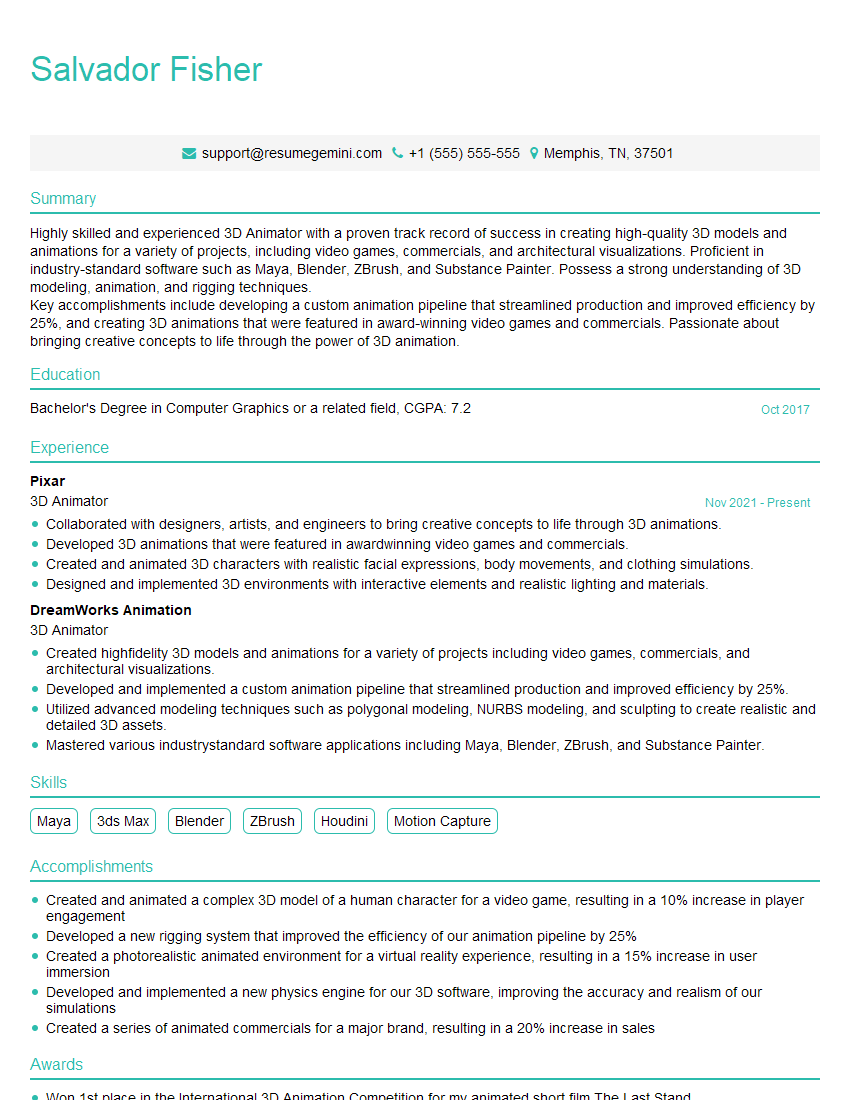

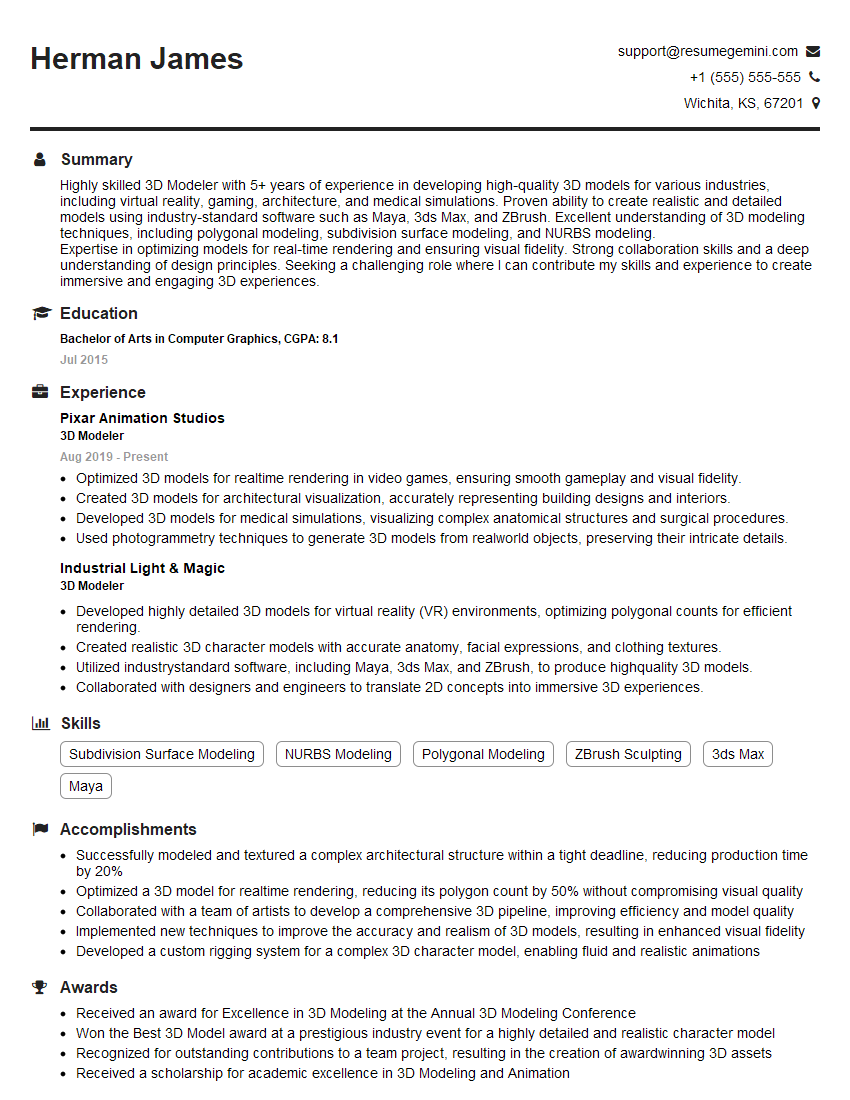

Mastering 3D graphics opens doors to exciting careers in game development, visual effects, virtual reality, and more. To maximize your job prospects, invest time in crafting a strong, ATS-friendly resume that highlights your skills and experience. ResumeGemini is a trusted resource for building professional resumes that get noticed. They offer examples of resumes tailored to the 3D Graphics field to help you create a compelling application. Take the next step towards your dream job – build a resume that showcases your expertise!
