Every successful interview starts with knowing what to expect. In this blog, we’ll take you through the top Sound Particles interview questions, breaking them down with expert tips to help you deliver impactful answers. Step into your next interview fully prepared and ready to succeed.

Questions Asked in Sound Particles Interview

Q 1. Explain the core functionality of Sound Particles.

Sound Particles is a revolutionary 3D audio engine that allows for the creation of incredibly realistic and immersive soundscapes. At its core, it simulates sound propagation in a 3D environment using a particle-based system. Instead of traditional methods that rely on pre-calculated reverb or ray tracing, Sound Particles uses millions of tiny sound particles that are emitted from sources, bounce off surfaces, and eventually reach the listener. This approach allows for highly dynamic and accurate spatial audio, responding realistically to changes in the environment and listener position.

Think of it like this: Imagine throwing pebbles into a pond. Each pebble represents a sound particle, and the ripples it creates represent the sound wave spreading. Sound Particles simulates this process, calculating the interactions of these particles with objects in the virtual environment, to generate the final sound.

Q 2. Describe the difference between emitter and listener objects in Sound Particles.

In Sound Particles, emitters and listeners are fundamental objects defining the audio’s source and reception points. An emitter is the origin of a sound; it’s the source of the sound particles. This could be anything from a single point source like a speaker to a complex object with multiple emission points, representing, for example, a car with engine, tire, and horn sounds each emanating from a different location on the model.

The listener, on the other hand, represents the point of sound reception – essentially, where the ‘ears’ are placed in the virtual environment. The listener’s position relative to the emitters greatly influences the final perceived sound, impacting aspects like direct sound, reflections, and overall spatial perception. By moving the listener or the emitter, the entire soundscape dynamically changes, creating an interactive and immersive experience.

Imagine creating a virtual concert: each instrument would be an emitter, and the audience members would be represented by listeners, each with a slightly different perspective on the sound.

Q 3. How do you manage large-scale sound design projects within Sound Particles?

Managing large-scale projects in Sound Particles relies heavily on organization and the effective use of its features. Breaking down the project into smaller, manageable sections is key. For example, I might create separate scenes for different areas of a game level or different parts of a virtual environment. This approach simplifies the scene graph, preventing overwhelming complexity.

Utilizing Sound Particles’ scene management tools is vital. Grouping emitters logically (e.g., ‘ambience sounds,’ ‘character sounds’) aids in selection and modification. The ability to load and unload scenes allows for efficient management of resources, especially when dealing with high polygon counts or large numbers of emitters. I would also recommend leveraging the scene’s ability to be exported and imported as individual files, enabling collaboration amongst a team.

Finally, efficient naming conventions and a clear project structure are crucial for maintainability and collaborative work. Using a consistent system for naming emitters and objects makes it easier to locate and manage specific elements within a complex scene.

Q 4. What are the advantages of using Sound Particles compared to other sound design software?

Sound Particles distinguishes itself through its unique particle-based approach to 3D audio. Unlike traditional methods that often rely on pre-computed reverb or simplified ray-tracing, Sound Particles offers unparalleled realism and dynamic control. This means sounds react realistically to changes in the environment in real-time, leading to a more immersive and believable experience. This is particularly advantageous in applications such as video games, virtual reality, and architectural acoustics where the environment plays a critical role in shaping the sound.

Other advantages include its intuitive interface, making complex spatial audio design accessible to a wider range of users. Its flexibility also allows for integration with various game engines and other software through its robust API and export options. Finally, the ability to create highly detailed soundscapes, with accurate reflections and occlusion, adds layers of realism often missing in other solutions.

Q 5. How do you optimize sound design for different platforms using Sound Particles?

Optimizing sound design for different platforms using Sound Particles involves considering platform limitations and capabilities. For example, lower-end devices might struggle with the processing demands of a large number of particles. To optimize, you can reduce the particle count by using fewer emitters or lowering the particle density. Furthermore, careful consideration must be given to the audio file formats used – opting for compressed formats like OGG Vorbis for mobile devices to reduce file sizes and loading times.

Additionally, Sound Particles allows you to adjust various settings, such as the quality of the reflections and the accuracy of the sound propagation calculations. Lowering the quality settings can significantly reduce processing overhead on less powerful platforms without drastically affecting the overall sound quality. A/B testing on different target platforms is crucial to finding the best balance between quality and performance.

Finally, pre-rendering or pre-baking audio for specific situations can offload processing from the real-time engine. This might involve generating pre-rendered reverb or other effects that can be played back directly on the target device.

Q 6. Explain the concept of occlusion in Sound Particles and its importance.

Occlusion in Sound Particles simulates the way sounds are blocked or attenuated by objects in the environment. This is crucial for realism because it accurately reflects how sound behaves in the real world. For instance, if a sound source is behind a wall, the listener wouldn’t hear it as clearly as if it were in an open space. Sound Particles simulates this by calculating the path of sound particles and determining if they are blocked by objects along the way. The level of attenuation depends on the material properties and thickness of the object causing the occlusion.
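To make the idea of material- and thickness-dependent attenuation concrete, here is a minimal sketch of a toy occlusion model. It is not Sound Particles' actual algorithm: the function name `occluded_gain` and the dB-per-metre figures are hypothetical, chosen only for illustration.

```python
# Hypothetical attenuation figures in dB per metre of material (illustrative only).
ATTENUATION_DB_PER_M = {"glass": 30.0, "wood": 60.0, "concrete": 200.0}


def occluded_gain(occluders):
    """Return the linear gain left after sound crosses each (material, thickness_m) occluder."""
    # Losses expressed in decibels add up along the path.
    total_db = sum(ATTENUATION_DB_PER_M[material] * thickness
                   for material, thickness in occluders)
    # Convert the total loss in dB to a linear amplitude factor.
    return 10 ** (-total_db / 20)


# A 10 cm wooden door costs about 6 dB, i.e. roughly half the amplitude.
door_gain = occluded_gain([("wood", 0.1)])
```

With no occluders the gain stays at 1.0, and stacking several obstacles simply adds their losses in decibels.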

The importance of occlusion cannot be overstated. It significantly contributes to the sense of immersion and spatial accuracy. Without accurate occlusion, a soundscape would feel flat and unrealistic. Imagine a game where you can’t hear the enemy behind a wall – it would severely impact gameplay and believability. Sound Particles’ accurate occlusion modeling greatly enhances the fidelity of the 3D audio experience.

Q 7. Describe your experience working with different sound file formats within Sound Particles.

Sound Particles supports a wide array of common sound file formats, including WAV, AIFF, and MP3. My experience working with these formats has been generally straightforward, with the software seamlessly importing and handling them. However, the choice of format often depends on the specific needs of the project. For example, WAV offers uncompressed high-fidelity audio, ideal for high-quality renders, but results in larger file sizes. MP3, on the other hand, offers a compressed format suitable for reducing file sizes, especially beneficial in projects with many sound sources. AIFF, like WAV, typically stores uncompressed PCM audio, so it offers comparable quality at a comparable file size, and is often preferred in professional audio workflows.

In practice, I select the format based on factors such as the required audio quality, file size constraints, and the platform targeted. For instance, I might use WAV for initial design and prototyping, then switch to a compressed format like OGG Vorbis for deployment on a game or mobile application to optimize for storage space and processing power.

Q 8. How do you use Sound Particles to create realistic environmental soundscapes?

Creating realistic environmental soundscapes in Sound Particles involves leveraging its powerful features for spatial audio and sound propagation. Think of it like building a 3D audio model of your environment. Instead of just placing a single sound source, you’re crafting a complex interplay of sounds that interact realistically with the virtual space.

My process typically starts with defining the environment’s acoustic properties. This includes identifying key reflective surfaces (walls, floors, etc.) and sound-absorbing materials. In Sound Particles, I use these properties to define the environment’s reverberation and its impact on the sounds. For instance, a large cathedral will have a much longer, more complex reverberation than a small, sparsely furnished room.
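The link between room size, absorbing materials, and reverberation time can be estimated with Sabine's formula, RT60 = 0.161 · V / A, where V is the room volume in cubic metres and A is the total absorption (surface area times absorption coefficient). The sketch below is a rough estimate, not a description of how Sound Particles computes reverb; the function name and the room dimensions and coefficients are assumptions for illustration.

```python
def sabine_rt60(volume_m3, surfaces):
    """Estimate reverberation time in seconds from (area_m2, absorption_coefficient) pairs."""
    # Total absorption in metric sabins: sum of area times absorption coefficient.
    absorption = sum(area * alpha for area, alpha in surfaces)
    return 0.161 * volume_m3 / absorption


# Illustrative values only: a small furnished room versus a large stone cathedral.
small_room = sabine_rt60(50.0, [(85.0, 0.3)])
cathedral = sabine_rt60(20000.0, [(6000.0, 0.05)])
```

Under these assumed figures the small room comes out at roughly a third of a second, while the cathedral rings for around ten seconds.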

Next, I strategically place sound sources. I might use multiple instances of a single sound – like bird chirps – at varying distances and heights to create a sense of depth and realism. I then utilize Sound Particles’ advanced features like occlusion (sounds being blocked by objects) and diffraction (sounds bending around objects) to further enhance the realism. For example, a sound source hidden behind a wall will be significantly quieter than one in open space.

Finally, I use Sound Particles’ built-in effects, like early reflections and late reverberation, to finely tune the overall soundscape. By carefully adjusting parameters, I can create highly believable audio environments. It’s a bit like being a sound architect, meticulously crafting a soundscape that will feel both natural and engaging for the listener.

Q 9. Explain your process for designing and implementing interactive sound within a game engine using Sound Particles.

Designing interactive sound within a game engine using Sound Particles involves a streamlined workflow focusing on real-time performance and integration. I typically start by creating my soundscapes within Sound Particles, leveraging its intuitive interface for sound placement and environmental effects.

Once I have a realistic soundscape, I export it in a format suitable for my chosen game engine (e.g., Unreal Engine, Unity). Sound Particles offers various export options, allowing me to choose the optimal balance between fidelity and performance. The key here is to avoid overwhelming the game engine with excessive audio data.

Within the game engine, I’ll use Sound Particles’ output as a foundation. Then I’ll leverage the engine’s scripting capabilities to add interactivity. For example, I might trigger changes to the soundscape based on player actions, such as approaching a particular location or triggering an event. This could involve modifying the volume, panning, or even adding new sound sources dynamically.

Consider a scenario where a player walks into a forest. Using Sound Particles’ spatial audio capabilities, I can create a rich soundscape filled with rustling leaves and distant bird calls. As the player approaches a specific area, I use the game engine’s scripting to trigger new sound events, like a nearby animal’s vocalization, further enhancing immersion.

Q 10. How do you troubleshoot and debug sound issues within Sound Particles projects?

Troubleshooting in Sound Particles often involves a systematic approach, combining careful listening with a detailed examination of the software’s settings and visualizations. It’s much like detective work, carefully examining each aspect of the audio chain to locate the source of the problem.

My first step is to isolate the issue. Is the problem with a specific sound source, an effect, or the overall mix? I’ll frequently use Sound Particles’ visualization tools – such as its 3D sound map – to visually inspect the positions and properties of sound sources and ensure they are placed and configured correctly. This often helps identify subtle placement errors.

Next, I’ll check for conflicts between different sound sources or effects. Are any settings causing unexpected audio artifacts, such as clipping or phasing? It helps to temporarily disable effects or sound sources one by one to pinpoint the culprit. Remember, Sound Particles’ comprehensive visual feedback is incredibly useful during this stage.

If the issue persists, I’ll meticulously review the entire signal flow within Sound Particles. Are there any unexpected gain staging problems or signal routing errors? Sound Particles’ intuitive interface makes this relatively straightforward. Finally, if I’m still struggling, I’ll consult the Sound Particles documentation and community forums – there’s a wealth of knowledge available there.

Q 11. Describe your experience with spatial audio techniques using Sound Particles.

My experience with spatial audio techniques in Sound Particles is extensive. I regularly use its capabilities to create immersive and realistic soundscapes that place the listener directly within the audio environment. It’s far beyond simple stereo or surround sound; it’s about true 3D audio representation.

Sound Particles provides several crucial tools for spatial audio. Its 3D sound engine enables precise placement of sound sources in a three-dimensional space. This enables accurate sound propagation, reflections, and occlusion based on the environment’s geometry. For instance, a sound source behind a wall will be attenuated (reduced in volume) and possibly delayed, accurately mimicking real-world acoustics.
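The attenuation and delay mentioned above follow from basic free-field acoustics: sound takes distance divided by the speed of sound to arrive, and a point source loses about 6 dB per doubling of distance. A minimal sketch, with hypothetical function names and ignoring walls, air absorption, and anything Sound Particles does internally:

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at about 20 °C


def propagation_delay_s(distance_m):
    """Time in seconds for sound to travel the given distance."""
    return distance_m / SPEED_OF_SOUND_M_S


def distance_attenuation_db(distance_m, reference_m=1.0):
    """Level drop in dB relative to the reference distance (inverse distance law)."""
    return 20.0 * math.log10(distance_m / reference_m)


delay = propagation_delay_s(34.3)      # about a tenth of a second
drop = distance_attenuation_db(2.0)    # about 6 dB quieter than at 1 m
```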

The software also lets me design virtual microphones. This is crucial for controlling how the sound is ‘picked up’ by a listener. I might use multiple virtual microphones to create binaural recordings, which provide a very convincing sense of space and direction for headphone listeners. I can also adjust the microphone’s directivity (how sensitive it is to sound coming from different directions) to tailor the sound capture to the desired result.
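Microphone directivity is commonly described with the first-order polar pattern equation, gain = a + (1 − a) · cos θ, where a = 1 gives an omnidirectional pattern, a = 0.5 a cardioid, and a = 0 a figure-eight. The following sketch illustrates that textbook formula; the function name `mic_gain` is an assumption and is not taken from Sound Particles.

```python
import math


def mic_gain(angle_deg, pattern=0.5):
    """Gain of a first-order microphone for sound arriving at angle_deg off-axis.

    pattern = 1.0 is omnidirectional, 0.5 is cardioid, 0.0 is figure-eight.
    """
    return pattern + (1.0 - pattern) * math.cos(math.radians(angle_deg))


front = mic_gain(0.0)     # full sensitivity on-axis
rear = mic_gain(180.0)    # a cardioid rejects sound from directly behind
```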

I have utilized these techniques in projects ranging from virtual reality experiences to interactive installations, consistently achieving highly immersive and spatially accurate soundscapes. It’s about providing the listener not just with sounds, but with the realistic sense of where those sounds are coming from in three dimensions.

Q 12. How do you use the different effects processors within Sound Particles?

Sound Particles offers a diverse range of effects processors, each designed to sculpt and refine the audio signal. My approach to using these processors depends largely on the specific project and desired sound, but I always approach them methodically.

For example, I frequently use reverb to create a sense of space and atmosphere. Sound Particles’ reverb effects are particularly powerful, allowing me to simulate different environments through careful adjustment of decay time, pre-delay, and diffusion parameters. A short decay time would suit a small room, while a longer decay is perfect for a cathedral.

Delay effects are another staple in my workflow. They’re useful for creating rhythmic patterns or adding a sense of depth. I might use a simple delay for subtle thickening or a more complex delay network for pronounced rhythmic effects.

EQ (equalization) is crucial for shaping the tonal balance of the sound. Sound Particles’ parametric EQ provides precise control, allowing me to boost or cut frequencies to fix issues like muddiness or harshness. It’s very versatile for cleaning up or enhancing the sound signature.

Finally, I often use compression to control the dynamics of sounds and prevent them from being too quiet or too loud. The aim is to even out the loudness of different sounds and make them sit better within the mix. Using these tools creatively and judiciously allows me to create rich and impactful sounds.
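For readers less familiar with compression, the core of it is a simple level mapping: below a threshold the signal passes unchanged, and above it the excess is divided by the ratio. The sketch below shows that static curve only (no attack or release); the function name and default settings are hypothetical and do not describe any specific Sound Particles processor.

```python
def compress_level_db(input_db, threshold_db=-20.0, ratio=4.0):
    """Map an input level in dB to the compressed output level in dB."""
    if input_db <= threshold_db:
        # Below the threshold the signal is left untouched.
        return input_db
    # Above the threshold, only 1/ratio of the excess level is kept.
    return threshold_db + (input_db - threshold_db) / ratio


loud = compress_level_db(-10.0)   # 10 dB over the threshold becomes 2.5 dB over
quiet = compress_level_db(-30.0)  # unchanged
```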

Q 13. Explain how you handle different sound propagation models within Sound Particles.

Sound Particles offers different sound propagation models to simulate how sound behaves in different environments. Understanding these models and selecting the appropriate one is crucial for realistic audio design. It’s like choosing the right physics engine for a game—you need the one that best matches the needs of your scenario.

The simplest model is often ray tracing. This is computationally efficient and useful for basic sound propagation. However, it doesn’t capture some more complex acoustic phenomena as accurately as other models. Imagine a simple scenario where you want to model sound bouncing off flat walls – ray tracing would be suitable.

More sophisticated models, like image-source methods and more advanced ray-tracing methods with reflections and refractions, are better suited to situations where the environment is more complex and requires more accurate modeling of reflections, diffractions, and other acoustic phenomena. If you’re modeling a concert hall with complex geometry and multiple reflective surfaces, you’d choose a more sophisticated method to capture the rich ambience accurately.

My choice of model is always dictated by the complexity of the environment and the level of realism required. For highly realistic simulations, I’ll often opt for the more computationally intensive models. For simpler scenarios or to balance realism with performance, the simpler models work well.

Q 14. How do you integrate Sound Particles into a larger audio pipeline?

Integrating Sound Particles into a larger audio pipeline is a process that involves careful planning and consideration of the overall workflow. It often involves a combination of signal flow management, file format conversion, and potentially custom scripting or automation.

In many cases, Sound Particles acts as a crucial pre-processing stage, creating highly realistic and detailed soundscapes that are then integrated into the final mix. I might use Sound Particles to create environmental sounds, such as ambient sounds or realistic reflections, and then import the output as individual audio files or stems into a Digital Audio Workstation (DAW).

For interactive applications, such as video games, the integration becomes more complex, as it involves real-time processing and communication between Sound Particles (or its export) and the game engine. This might necessitate custom scripting to handle triggers, responses, and dynamic adjustments to the soundscape based on in-game events.

Regardless of the specific application, consistent file management and well-defined signal paths are critical. Using a robust naming convention and a clear understanding of the signal flow from Sound Particles to the next stage (DAW or game engine) is paramount. It helps minimize errors and makes the overall workflow much smoother and more efficient.

Q 15. What are your preferred workflows when using Sound Particles?

My Sound Particles workflow centers around a modular approach. I begin by clearly defining the desired sonic outcome – be it a realistic environment or an abstract soundscape. Then, I break down the project into manageable components, each handled by a separate Particle. This allows for independent tweaking and iterative refinement. For instance, in designing a bustling city street, I might use one Particle for ambient traffic noise, another for distant sirens, a third for pedestrian chatter, and so on. This modularity significantly simplifies complex projects and makes troubleshooting much easier. I extensively use the ‘Send’ and ‘Receive’ functionality between Particles to create intricate routing and control signal flow, enabling dynamic and reactive audio elements. My final step involves meticulous mixing and mastering within Sound Particles, leveraging its built-in tools before exporting.

Career Expert Tips:

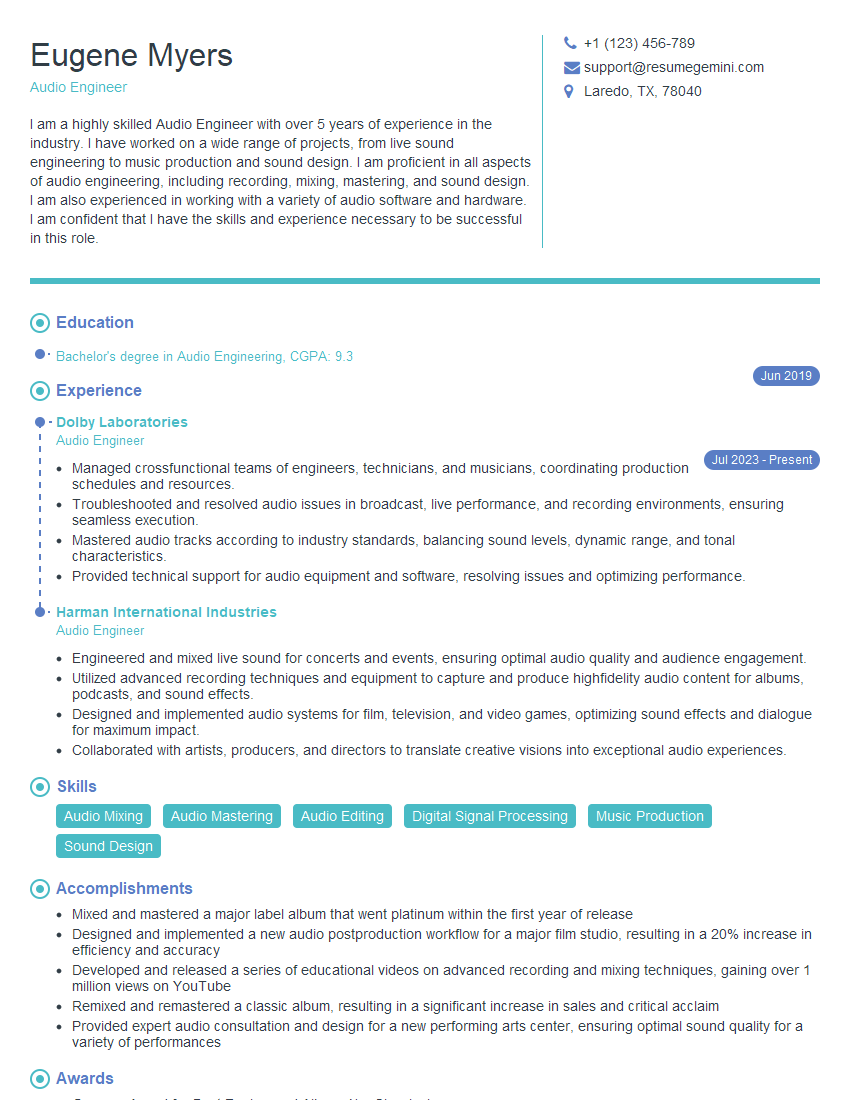

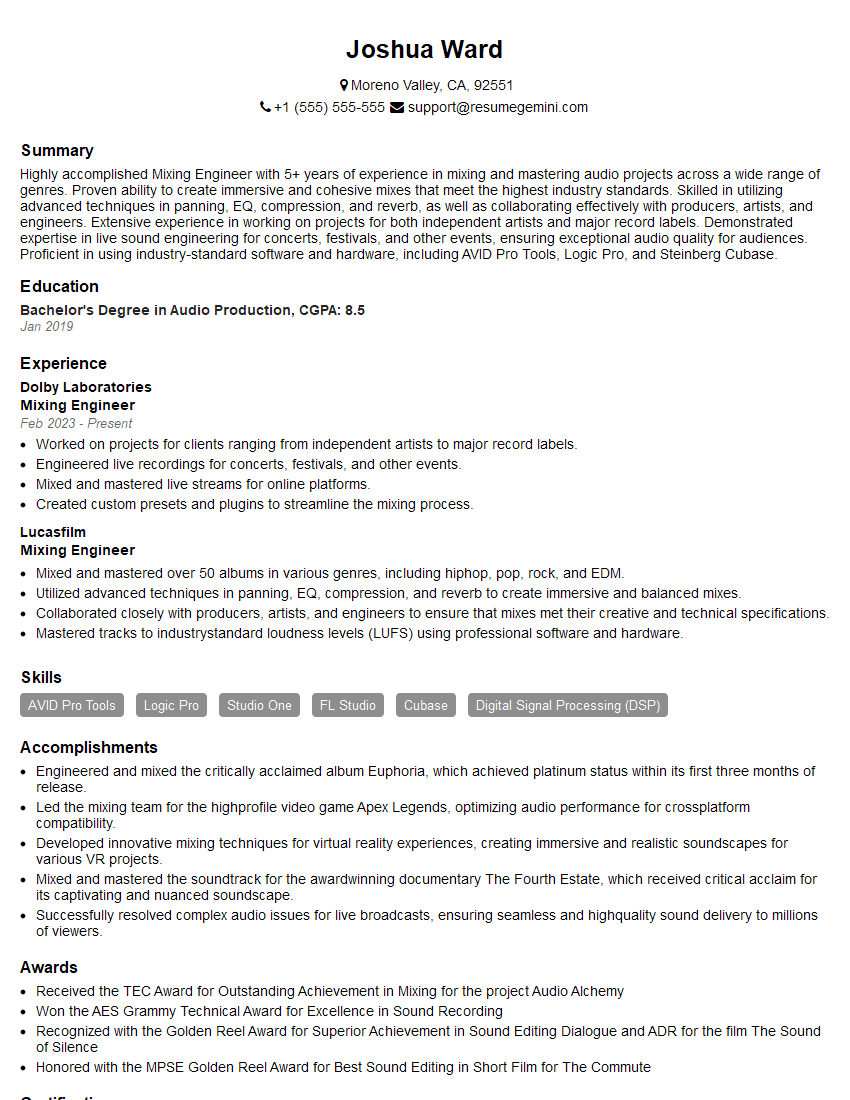

- Ace those interviews! Prepare effectively by reviewing the Top 50 Most Common Interview Questions on ResumeGemini.

- Navigate your job search with confidence! Explore a wide range of Career Tips on ResumeGemini. Learn about common challenges and recommendations to overcome them.

- Craft the perfect resume! Master the Art of Resume Writing with ResumeGemini’s guide. Showcase your unique qualifications and achievements effectively.

- Don’t miss out on holiday savings! Build your dream resume with ResumeGemini’s ATS optimized templates.

Q 16. Describe your experience working with real-time audio processing in Sound Particles.

Real-time audio processing in Sound Particles is a game-changer. The ability to hear immediate results while tweaking parameters is invaluable for iterative design. Imagine designing the sound of a spaceship flying past: with real-time processing, I can adjust the Doppler effect, the engine rumble, and the environmental reflections instantly and refine the sound without rendering delays. This is particularly beneficial during collaborations, enabling quick feedback and adjustments based on creative input. While Sound Particles handles large projects impressively, understanding processing limitations is crucial. I often break down exceptionally complex scenes into smaller, independently processed parts that are then combined, ensuring smooth real-time performance. It’s like building with LEGO bricks: each block is manageable on its own, yet the overall creation can be massive and complex and still function as a whole.
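The Doppler effect in the spaceship example can be reasoned about with the standard formula for a moving source and a stationary listener: f' = f · c / (c − v), where v is the speed at which the source approaches. This is a minimal sketch of that formula with a hypothetical function name; it assumes speeds below the speed of sound and says nothing about how Sound Particles implements the effect.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at about 20 °C


def doppler_frequency_hz(source_hz, approach_speed_m_s):
    """Frequency heard by a stationary listener.

    approach_speed_m_s is positive when the source moves toward the listener
    and negative when it moves away.
    """
    return source_hz * SPEED_OF_SOUND_M_S / (SPEED_OF_SOUND_M_S - approach_speed_m_s)


approaching = doppler_frequency_hz(440.0, 34.3)   # pitch rises
receding = doppler_frequency_hz(440.0, -34.3)     # pitch falls
```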

Q 17. Explain the significance of using Sound Particles for creating immersive experiences.

Sound Particles excels at creating immersive experiences because of its spatial audio capabilities. It allows for precise control over the position and movement of sound sources in 3D space, going beyond simple stereo panning. This is achieved through the use of binaural rendering and Ambisonics, techniques that create a realistic sense of depth and envelopment. For instance, I recently created an immersive soundscape for a virtual reality experience. Using Sound Particles, I could position sounds precisely within the virtual environment, making the user feel truly present in that space. The ability to easily implement features such as reverberation, occlusion, and diffraction further enhance the realism and immersion, enriching the user’s experience far beyond what traditional stereo mixing can achieve.
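To give a feel for what Ambisonics encoding involves, the sketch below encodes a mono sample into first-order Ambisonics using the widely used AmbiX convention (ACN channel order W, Y, Z, X with SN3D normalization). Whether Sound Particles uses this exact convention internally is not stated here, and the function name is an assumption.

```python
import math


def encode_first_order_ambix(sample, azimuth_deg, elevation_deg):
    """Encode a mono sample into first-order AmbiX channels (W, Y, Z, X).

    Azimuth is measured counter-clockwise from straight ahead,
    elevation upward from the horizontal plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample                                  # omnidirectional component
    y = sample * math.sin(az) * math.cos(el)    # left-right
    z = sample * math.sin(el)                   # up-down
    x = sample * math.cos(az) * math.cos(el)    # front-back
    return (w, y, z, x)


front = encode_first_order_ambix(1.0, 0.0, 0.0)    # energy in W and X
left = encode_first_order_ambix(1.0, 90.0, 0.0)    # energy in W and Y
```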

Q 18. How do you ensure consistent quality control within Sound Particles projects?

Consistent quality control in Sound Particles projects relies on a multi-stage approach. First, I establish clear reference tracks and sonic goals from the outset. This ensures that my work stays aligned with the overall artistic vision. Regular listening tests throughout the process using calibrated monitors are crucial, as are periodic checks against the reference tracks. I leverage Sound Particles’ built-in metering tools to monitor levels, ensuring there are no clipping issues or unwanted noise. Finally, I perform detailed A/B comparisons between different versions of my work, meticulously examining each adjustment for its impact on the overall quality. This thorough approach reduces the chances of overlooking subtle problems, ensuring a high-quality end product every time.
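The level checks described above boil down to two simple measurements: the peak level in dBFS and whether any sample reaches full scale. A minimal sketch on normalized floating-point samples (range −1.0 to 1.0), with hypothetical helper names rather than Sound Particles' own meters:

```python
import math


def peak_dbfs(samples):
    """Peak level of normalized samples in dB relative to full scale."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        # Silence (or an empty buffer) has no finite level.
        return float("-inf")
    return 20.0 * math.log10(peak)


def is_clipping(samples, ceiling=1.0):
    """True if any sample reaches or exceeds the ceiling."""
    return any(abs(s) >= ceiling for s in samples)


level = peak_dbfs([0.5, -0.25, 0.1])   # about -6 dBFS
clipped = is_clipping([0.2, -1.0])     # True: one sample hits full scale
```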

Q 19. How do you utilize Sound Particles to create believable and impactful sound effects?

Creating believable and impactful sound effects in Sound Particles often involves combining multiple techniques. I start by layering different sound sources; for instance, a realistic footstep might be built from the base recording, combined with additional layers representing surface texture and impact. Sound Particles’ particle-based system is key here, as it allows for subtle manipulation of individual audio elements, creating a nuanced and organic effect. Then, I use spatial audio to place and manipulate sounds realistically within the listener’s environment. For example, I can simulate the sounds’ reflection, diffraction, and distance using Sound Particles’ tools to add depth and believability. Finally, I use reverb and other effects to refine the overall sound, ensuring it fits seamlessly into the project’s context. The key is to think about sound’s physical properties and simulate them as accurately as possible.

Q 20. Describe your experience with scripting or extending Sound Particles’ functionality.

While I haven’t explored the advanced scripting capabilities of Sound Particles in depth, I have made extensive use of its built-in automation and modulation features. For instance, I’ve used LFOs and envelopes to modulate parameters like volume and pan in order to create dynamic and evolving soundscapes. A typical example is using an LFO to modulate the volume of a particle over time, which allows for subtle shifts and transitions within a scene and creates a feeling of dynamism and depth. For more complex interactions, I often use external MIDI controllers to control various parameters within Sound Particles in real time. This allows for a more hands-on and intuitive approach during the creative process, providing greater control and fluidity in the audio landscape.
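The idea of an LFO modulating volume over time can be shown outside the application as a plain tremolo. The sketch below is a generic illustration with hypothetical function names (`lfo_gain`, `apply_tremolo`); it is not Sound Particles' scripting or automation interface.

```python
import math


def lfo_gain(time_s, rate_hz=1.0, depth=0.5):
    """Gain between (1 - depth) and 1.0, driven by a sine LFO."""
    lfo = math.sin(2.0 * math.pi * rate_hz * time_s)  # swings between -1 and 1
    return 1.0 - depth * 0.5 * (1.0 - lfo)


def apply_tremolo(samples, sample_rate_hz, rate_hz=1.0, depth=0.5):
    """Scale each sample by the LFO gain at that sample's time."""
    return [s * lfo_gain(i / sample_rate_hz, rate_hz, depth)
            for i, s in enumerate(samples)]


# One second of a constant signal at a toy sample rate of 4 Hz.
shaped = apply_tremolo([1.0, 1.0, 1.0, 1.0], sample_rate_hz=4)
```

A slow rate and a small depth give the subtle movement described above; higher values produce an obvious pulsing.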

Q 21. How do you optimize the performance of your Sound Particles projects?

Optimizing Sound Particles projects requires careful consideration of several factors. One key aspect is efficient particle management; using a larger number of particles comes at the cost of processing power. So, I tend to minimize particle count by merging similar sounds where possible and optimizing audio file sizes. Another important optimization strategy is to use lower sample rates and bit depths whenever possible without sacrificing the desired audio quality, as this considerably reduces the processing load. Finally, intelligent use of Sound Particles’ processing features can dramatically improve performance. By strategically applying processing only to specific audio segments or using efficient algorithms, it’s possible to maintain a smooth workflow even when dealing with complex projects. It’s always a balancing act between sonic quality and system resources.

Q 22. Explain your understanding of different audio formats (e.g., WAV, MP3, OGG) and their suitability for Sound Particles projects.

Understanding different audio formats is crucial for efficient workflow in Sound Particles. Each format offers a trade-off between file size, audio quality, and compatibility.

- WAV (Waveform Audio File Format): A container that usually holds uncompressed PCM audio, so no audio data is discarded during encoding. This results in high fidelity but significantly larger file sizes. Ideal for Sound Particles projects where pristine audio quality is paramount, especially during the initial stages of design and sound creation before final export. For example, I’d use WAV for creating intricate sound effects that need to be manipulated without quality degradation.

- MP3 (MPEG Audio Layer III): A lossy format; data is compressed by discarding audio information that is less perceptible to the listener. This results in smaller file sizes but some audio quality loss. Suitable for final export, distribution, or when storage space is a concern. It is a common format for final delivery and will work well within Sound Particles, but it is best avoided during the main production, because each re-encoding of lossy audio degrades quality further.

- OGG (Ogg Vorbis): Another lossy format, offering a good balance between file size and audio quality. Often considered a superior alternative to MP3 in terms of audio quality at similar bitrates. A viable option for projects requiring smaller file sizes without significant quality compromise, particularly for web-based applications. In Sound Particles, OGG performs similarly to MP3, but with generally a better audio result.

In practice, I usually begin a Sound Particles project with high-quality WAV files for maximum flexibility and then export to a more compressed format like MP3 or OGG for distribution.
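The size trade-off between uncompressed and compressed formats is easy to estimate. Uncompressed PCM takes sample rate × bit depth × channels × duration bits, while a constant-bitrate lossy file takes roughly bitrate × duration. The helper names below are hypothetical, and the figures ignore file headers and metadata.

```python
def pcm_size_bytes(sample_rate_hz, bit_depth, channels, seconds):
    """Approximate size of uncompressed PCM audio (as in WAV or AIFF)."""
    return sample_rate_hz * bit_depth * channels * seconds // 8


def compressed_size_bytes(bitrate_kbps, seconds):
    """Approximate size of a constant-bitrate lossy file (such as MP3 or OGG)."""
    return bitrate_kbps * 1000 * seconds // 8


# One minute of stereo audio at 48 kHz / 24-bit versus a 192 kbps encode.
wav_bytes = pcm_size_bytes(48000, 24, 2, 60)     # 17,280,000 bytes
lossy_bytes = compressed_size_bytes(192, 60)     # 1,440,000 bytes
```

In this example the compressed file is about one twelfth the size of the uncompressed one.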

Q 23. Discuss your experience using Sound Particles to create interactive audio for VR/AR applications.

Creating interactive audio for VR/AR applications using Sound Particles is a fascinating area. The software’s ability to handle spatial audio and react to user input makes it an excellent choice.

In one project, we created an interactive soundscape for a VR museum tour. We used Sound Particles to design the spatial audio environment, placing sounds realistically within the virtual space. The user’s position and actions triggered dynamic sound changes; for example, the narrating voice grew louder as the user moved closer to an exhibit, which made the experience more immersive. We utilized Sound Particles’ particle systems to dynamically adjust sound parameters based on the user’s head tracking data. For example, approaching a specific area may trigger a particle system that plays ambient sounds, increasing their volume as the user gets closer.

Another example was an AR game where the player’s movements triggered distinct sounds. We implemented the game logic in Unity and used Sound Particles as a plugin to generate and manipulate the game audio in real time based on the player’s position, adding realism and immersion.

Q 24. How do you collaborate with other team members using Sound Particles in a shared project environment?

Collaboration in Sound Particles projects relies heavily on effective file sharing and version control. While Sound Particles doesn’t have built-in collaborative editing, we establish workflows around external tools like cloud storage services.

We typically use a combination of approaches: One team member acts as the lead, working on a master project file. This person exports their progress regularly. Others download the latest version, perform their tasks on their own copies, and then submit their changes as individual sound files or updated sections. We frequently use cloud storage (like Google Drive or Dropbox) to facilitate the sharing and versioning. The lead integrator then imports these changes into the main project file, ensuring everyone’s contributions are integrated. We communicate extensively through project management software and regular meetings to coordinate our efforts and resolve any conflicts.

Q 25. Describe your experience with version control for Sound Particles projects.

Version control for Sound Particles projects is crucial, and it’s often handled outside the software itself. Because Sound Particles doesn’t have a native version control system, we employ external methods. We use a combination of techniques including regular project backups and cloud storage.

Our team generally follows this structure: We version our projects using cloud storage’s version history. We often name our projects with a date and version number (e.g., ‘ProjectX_v1.0_20241027’). This allows us to easily revert to previous versions if needed. We also maintain detailed change logs in our project management software. This is especially important when multiple people are working on the same project, allowing us to track who made which changes and easily resolve any conflicts. While not ideal, this approach effectively manages the versioning of the project files until a more integrated system becomes available.
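A naming scheme like the one above is easier to keep consistent when it is generated rather than typed by hand. The following sketch builds names in the `ProjectX_v1.0_20241027` pattern; the function name is hypothetical and the script is independent of Sound Particles.

```python
from datetime import date


def versioned_project_name(project, major, minor, on_date):
    """Build a name such as 'ProjectX_v1.0_20241027'."""
    return f"{project}_v{major}.{minor}_{on_date.strftime('%Y%m%d')}"


name = versioned_project_name("ProjectX", 1, 0, date(2024, 10, 27))
```

Putting the date in year-month-day order means the files also sort chronologically in a folder listing.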

Q 26. What are some common challenges you’ve faced using Sound Particles and how did you overcome them?

One common challenge is managing the complexity of large projects. Sound Particles can handle many particles and effects, but very complex scenes can still lead to performance issues. To overcome this, we optimize our projects by simplifying particle systems where possible, using lower-resolution audio files for pre-processing steps, and leveraging Sound Particles’ rendering options efficiently. For instance, breaking down a large soundscape into smaller, manageable modules processed individually significantly improves performance.

Another challenge was integrating Sound Particles with other audio tools. While Sound Particles is excellent for spatial audio and particle-based effects, it doesn’t replace other tools entirely. We resolved this by establishing a clear workflow that integrates it with digital audio workstations (DAWs) like Ableton Live or Logic Pro X for tasks such as audio editing and mixing. We use Sound Particles for initial spatial sound design, then import the processed audio into a DAW for more detailed mixing and mastering.

Q 27. How do you approach the design of a complex sound system using Sound Particles?

Designing a complex sound system in Sound Particles requires a structured approach. I begin by breaking down the system into smaller, manageable modules. This approach is similar to modular synthesis where each module is designed and tested independently.

For example, creating a bustling city soundscape might involve distinct modules for traffic, ambient sounds, crowds, and distant sirens. Each module uses its own particle systems to manage the spatial distribution and characteristics of its sound sources. Once each module is refined, I integrate them into a unified system, ensuring that the individual elements blend seamlessly. Careful attention to the spatialization of sounds and to parameter management is key to maintaining control and ensuring proper interaction between modules. The project is often divided into distinct stages: initial design, individual module development, integration testing, and final refinement. This structured approach makes development more manageable and improves overall performance.

Q 28. Describe your experience using the Sound Particles API or plugins.

My experience with the Sound Particles API and plugins has been largely positive, particularly for integrating the software into larger projects or creating custom tools. The API allows for deep control over the software’s functionalities, enabling integration with game engines or custom applications. I’ve used it to create custom plugins for controlling particle parameters based on external data sources. For example, I created a plugin that links particle parameters to game engine variables, enabling interactive sound design within a game. This allows us to dynamically create sound effects that change as the game progresses, based on events such as collisions, enemy spawn points, or the distance to the player.

Similarly, Sound Particles’ plugin architecture allows third-party tools to extend its functionality. We used a custom plugin to feed audio analysis data directly into particle parameters, which gave us a more dynamic and expressive sound design experience. The ability to extend Sound Particles in these ways makes the software considerably more useful and flexible.
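Linking a particle parameter to a game variable, as described above, usually comes down to a clamped, scaled mapping function. Below is a minimal sketch in Python, assuming a linear falloff from player distance to a normalized gain value. The function `distance_to_gain` and its `near` and `far` parameters are hypothetical, and the code deliberately calls no Sound Particles function, since the actual interface is not shown here.

```python
def distance_to_gain(distance: float, near: float = 1.0, far: float = 50.0) -> float:
    """Map a player distance to a gain between 0.0 and 1.0.

    Hypothetical mapping: full gain at or inside `near`,
    silence at or beyond `far`, linear in between.
    """
    if far <= near:
        raise ValueError("far must be greater than near")
    # Clamp the distance into the [near, far] range.
    clamped = min(max(distance, near), far)
    # Linear falloff: 1.0 at `near`, 0.0 at `far`.
    return 1.0 - (clamped - near) / (far - near)
```

The same shape works for other event-driven inputs, such as collision strength or enemy count; only the input range and the direction of the mapping change.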

Key Topics to Learn for Your Sound Particles Interview

- Sound Design Principles within Sound Particles: Understand the core concepts of sound design, including signal processing, synthesis, and effects processing, as they apply specifically within the Sound Particles environment.

- Particle System Workflow: Master the creation, manipulation, and animation of particle systems for realistic and creative sound design. Focus on practical application, such as designing complex soundscapes or interactive audio.

- Spatial Audio and 3D Sound Design: Explore the implementation and manipulation of spatial audio within Sound Particles, including binaural rendering and Ambisonics. Understand how to create immersive and engaging soundscapes.

- Integration with DAWs and Other Software: Learn how Sound Particles interacts with other audio software like Ableton Live, Logic Pro X, or Pro Tools. Understand the import/export workflow and common integration challenges.

- Advanced Techniques and Workflows: Explore more advanced features such as scripting, custom particle behaviors, and efficient workflow optimization within Sound Particles.

- Troubleshooting and Problem-Solving: Be prepared to discuss common issues encountered while using Sound Particles and how you approach troubleshooting and finding solutions. This includes debugging unexpected behavior and optimizing performance.

- Sound Particles’ Unique Features and Advantages: Demonstrate your understanding of what sets Sound Particles apart from other audio software and its specific strengths in different applications.

Next Steps

Mastering Sound Particles opens doors to exciting opportunities in audio engineering, game development, and interactive media. A strong understanding of this software significantly boosts your employability and showcases your advanced skills. To maximize your job prospects, create an ATS-friendly resume that highlights your Sound Particles expertise. ResumeGemini is a trusted resource to help you build a professional and impactful resume that gets noticed. We provide examples of resumes tailored to Sound Particles to guide you. Take the next step in your career journey today!
