Feeling uncertain about what to expect in your upcoming interview? We’ve got you covered! This blog highlights the most important Bioinformatics Databases and Tools interview questions and provides actionable advice to help you stand out as the ideal candidate. Let’s pave the way for your success.

Questions Asked in Bioinformatics Databases and Tools Interview

Q 1. Explain the difference between relational and NoSQL databases in the context of bioinformatics.

Relational databases, like MySQL or PostgreSQL, organize data into tables with rows and columns, enforcing relationships between them through keys and constraints. Think of it like a highly structured spreadsheet. This is great for managing structured data where relationships are clearly defined, like gene annotations where each gene has a defined set of associated transcripts, exons, and functions. NoSQL databases, on the other hand, are more flexible and don’t adhere to the rigid table structure. They’re better suited for handling unstructured or semi-structured data, such as genomic sequences, which can vary greatly in length and format, or complex experimental metadata. Examples of NoSQL databases used in bioinformatics include MongoDB and Cassandra.

In bioinformatics, the choice depends on the data type and the type of queries you’ll perform. If you need to join tables based on defined relationships (e.g., finding all genes associated with a specific pathway), a relational database is preferable. If you are storing large volumes of genomic sequence data or other loosely structured data and need fast reads and writes at scale, a NoSQL database is often the better fit.

Q 2. Describe your experience with SQL and its application in querying biological databases.

I have extensive experience with SQL, using it daily to query biological databases that expose a SQL interface, such as the public MySQL servers of Ensembl and the UCSC Genome Browser, as well as local relational loads of UniProt and NCBI data. For example, I’ve used it to retrieve protein sequences based on GO terms, identify genes located within specific genomic regions, and analyze gene expression data across different conditions. My skills include writing complex queries involving joins, subqueries, aggregations, and window functions to extract meaningful insights from large datasets.

SELECT gene_id, gene_name, sequence
FROM gene_table
WHERE chromosome = 'chr1'
  AND start_position BETWEEN 10000 AND 20000;

This is a simple example that retrieves genes whose start position falls within a specific chromosomal region. Genes that merely overlap the region, and more intricate biological questions in general, call for more complex queries.
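As a minimal sketch of the same idea, the Python snippet below runs a region query against an in-memory SQLite database. The table contents are toy rows invented for illustration, and the end_position column is an assumption added so that the query can test for overlap rather than only the start coordinate.

```python
import sqlite3

# In-memory SQLite database with a hypothetical gene_table (toy rows, not real annotations).
conn = sqlite3.connect(":memory:")
conn.execute(
    """CREATE TABLE gene_table (
           gene_id INTEGER PRIMARY KEY,
           gene_name TEXT NOT NULL,
           chromosome TEXT NOT NULL,
           start_position INTEGER NOT NULL,
           end_position INTEGER NOT NULL
       )"""
)
conn.executemany(
    "INSERT INTO gene_table VALUES (?, ?, ?, ?, ?)",
    [
        (1, "geneA", "chr1", 9000, 11000),   # overlaps the window but starts before it
        (2, "geneB", "chr1", 12000, 15000),  # lies fully inside the window
        (3, "geneC", "chr1", 25000, 30000),  # lies outside the window
        (4, "geneD", "chr2", 12000, 15000),  # right coordinates, wrong chromosome
    ],
)


def genes_overlapping(conn, chromosome, start, end):
    """Return names of genes that overlap [start, end] on the given chromosome."""
    rows = conn.execute(
        """SELECT gene_name FROM gene_table
           WHERE chromosome = ? AND start_position <= ? AND end_position >= ?
           ORDER BY start_position""",
        (chromosome, end, start),  # bound parameters instead of string formatting
    ).fetchall()
    return [name for (name,) in rows]


hits = genes_overlapping(conn, "chr1", 10000, 20000)
print(hits)
```

A start-only filter such as start_position BETWEEN 10000 AND 20000 would miss geneA here, which is why the sketch compares both ends of each gene against the window.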

I am also proficient in optimizing SQL queries for performance, a critical aspect when working with large biological databases. This includes using appropriate indexing techniques, avoiding unnecessary joins, and leveraging database-specific optimization features.

Q 3. How would you design a database to store and manage genomic sequence data?

Designing a database for genomic sequence data requires careful consideration of scalability, data integrity, and query efficiency. I would likely use a combination of relational and NoSQL databases. The relational database would store metadata such as sample information (patient ID, experimental conditions), gene annotations, and variant information. The NoSQL database would store the actual genomic sequences themselves, due to their size and less structured nature. This hybrid approach allows efficient querying of metadata and fast retrieval of specific sequences.

For instance, the relational part might contain tables for samples, genes, and variants, with foreign keys linking them. The NoSQL database might use a document-oriented approach where each document represents a genomic sequence associated with a sample ID stored in the relational database. This setup allows for flexibility in handling various sequencing technologies (e.g., Illumina, PacBio) and variations in sequence length.

Data integrity would be ensured through appropriate constraints and validation checks within the relational database, and version control in the NoSQL database to track changes and ensure data consistency across different analyses.

Q 4. What are the advantages and disadvantages of using cloud-based solutions for bioinformatics data storage and analysis?

Cloud-based solutions offer several advantages for bioinformatics data storage and analysis, including scalability (easily adjust storage and computing resources), cost-effectiveness (pay-as-you-go model), and accessibility (collaborate remotely). Cloud platforms like AWS, Google Cloud, and Azure provide pre-configured environments with bioinformatics tools, facilitating faster deployment and analysis. They also provide robust security features and data backup options.

However, there are downsides. Data transfer can be slow and expensive, especially with large datasets. Security concerns remain, although cloud providers have robust security infrastructure, and data privacy regulations need careful consideration. Another challenge is vendor lock-in, meaning migrating data to a different platform can be costly and complex.

The decision to use cloud-based solutions depends on the scale of the project, budget constraints, security requirements, and the availability of in-house infrastructure.

Q 5. Explain your experience with various bioinformatics tools like BLAST, SAMtools, or GATK.

I have extensive experience with several bioinformatics tools. BLAST is routinely used for sequence similarity searches, identifying homologous sequences in vast databases. I’ve used it to identify potential protein functions, classify new sequences, and investigate evolutionary relationships. SAMtools is essential for processing next-generation sequencing data – I’ve used it for alignment file manipulation, variant calling (together with BCFtools), and generating various statistical summaries. GATK is my go-to tool for variant discovery and genotyping, and I’ve employed it extensively in genome-wide association studies.

For instance, I recently used GATK’s Best Practices workflow to call germline SNPs and indels from whole-genome sequencing data of cancer patients. This involved aligning reads using BWA, performing variant calling with HaplotypeCaller, and then filtering and annotating the variants using various GATK modules. I regularly use SAMtools to manipulate the alignment files generated by BWA.

Q 6. How would you handle missing data in a bioinformatics dataset?

Handling missing data is crucial in bioinformatics as it can significantly bias downstream analysis. The best approach depends on the nature and extent of missing data, as well as the type of analysis. Simple methods include imputation, where missing values are replaced with estimated values. This could be done using the mean, median, or more sophisticated techniques based on the data’s distribution and correlation with other variables. Another option is to remove rows or columns with excessive missing data; however, this leads to information loss.

For example, if we have missing gene expression values, we could impute them using k-nearest neighbors, which finds the most similar samples and uses their expression values to estimate the missing ones. Alternatively, we might remove genes with a high percentage of missing values, particularly when those genes matter only for a small part of the analysis. Careful consideration of the implications of each approach is essential to maintain data integrity.
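The k-nearest-neighbors idea can be sketched in a few lines of plain Python. This is an illustrative toy, not a production imputer: the function name knn_impute, the distance measure (root-mean-square difference over genes observed in both samples), and the expression values are all assumptions made for the example.

```python
import math


def knn_impute(matrix, k=2):
    """Fill None entries with the mean of the k most similar rows.

    Rows are samples and columns are genes. Similarity is the root-mean-square
    difference over the columns observed in both rows.
    """
    imputed = [row[:] for row in matrix]  # leave the input untouched
    for i, row in enumerate(matrix):
        for j, value in enumerate(row):
            if value is not None:
                continue
            candidates = []
            for m, other in enumerate(matrix):
                if m == i or other[j] is None:
                    continue  # a neighbour must have a value for this gene
                shared = [(a, b) for a, b in zip(row, other)
                          if a is not None and b is not None]
                if not shared:
                    continue
                dist = math.sqrt(sum((a - b) ** 2 for a, b in shared) / len(shared))
                candidates.append((dist, other[j]))
            candidates.sort(key=lambda pair: pair[0])
            neighbours = [v for _, v in candidates[:k]]
            if neighbours:
                imputed[i][j] = sum(neighbours) / len(neighbours)
    return imputed


# Toy expression matrix: the second sample is missing its third gene.
expression = [
    [1.0, 2.0, 3.0],
    [1.1, 2.1, None],
    [0.9, 1.9, 2.8],
    [9.0, 9.0, 9.0],
]
filled = knn_impute(expression, k=2)
print(filled[1])
```

The outlying fourth sample is ignored because it is far from the incomplete one, which is the main advantage over a plain column mean.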

Q 7. What are different file formats used in bioinformatics and how are they processed?

Bioinformatics utilizes a variety of file formats, each designed for specific data types. FASTA stores nucleotide or amino acid sequences, often used in BLAST searches. FASTQ files contain sequencing reads and their quality scores, crucial for next-generation sequencing analysis. SAM/BAM files store sequence alignments, used in variant calling. VCF files store variant calls in a standardized format, enabling sharing and analysis across studies. GFF/GTF files annotate genomic features, providing information about genes, transcripts, and other features.

These files are processed using dedicated tools. FASTA files are parsed using bioinformatics libraries to extract sequences, FASTQ files are processed using tools for quality control and read alignment, SAM/BAM files are manipulated using SAMtools, and VCF files are used for variant annotation and filtering. Specific software and scripting languages, such as Python with Biopython, are vital to manage and process these diverse formats effectively. The choice of tools depends on the specific task and desired output.
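To make the FASTA case concrete, here is a small hand-rolled parser using only the standard library. It is a sketch for illustration (the function names read_fasta and gc_content and the two records are made up); in practice a library such as Biopython's SeqIO.parse would normally do this job.

```python
import io


def read_fasta(handle):
    """Yield (header, sequence) pairs from a FASTA-formatted text stream."""
    header, chunks = None, []
    for line in handle:
        line = line.strip()
        if not line:
            continue
        if line.startswith(">"):
            if header is not None:
                yield header, "".join(chunks)
            header, chunks = line[1:], []
        else:
            chunks.append(line)  # sequences may be wrapped over several lines
    if header is not None:
        yield header, "".join(chunks)


def gc_content(sequence):
    """Fraction of G and C bases in a nucleotide sequence."""
    if not sequence:
        return 0.0
    upper = sequence.upper()
    return (upper.count("G") + upper.count("C")) / len(upper)


# Toy input standing in for a file opened with open("example.fasta").
fasta_text = ">seq1 toy record\nACGT\nGGCC\n>seq2\nATAT\n"
records = list(read_fasta(io.StringIO(fasta_text)))
for header, sequence in records:
    print(header, len(sequence), gc_content(sequence))
```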

Q 8. Describe your experience with data normalization and cleaning in the context of bioinformatics.

Data normalization and cleaning are crucial preprocessing steps in bioinformatics. Think of it like preparing ingredients before cooking – you wouldn’t start a complex recipe with dirty, inconsistent ingredients. In bioinformatics, this means dealing with missing values, inconsistencies in data formats, and errors in sequencing data, gene annotations, or clinical metadata.

Normalization typically involves transforming data onto a common scale. For example, normalizing gene expression data might involve a log transformation to stabilize variance, or z-score normalization to rescale each feature to a mean of 0 and a standard deviation of 1. This makes data more comparable across different experiments or platforms.
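Both transformations are short enough to sketch with the standard library. The pseudocount of 1 and the use of the population standard deviation are assumptions chosen for the example, and the counts are toy values.

```python
import math
import statistics


def log2_transform(values, pseudocount=1.0):
    """Apply log2(x + pseudocount); the pseudocount keeps zero counts defined."""
    return [math.log2(v + pseudocount) for v in values]


def z_scores(values):
    """Rescale values to mean 0 and (population) standard deviation 1."""
    mean = statistics.mean(values)
    sd = statistics.pstdev(values)
    if sd == 0:
        return [0.0 for _ in values]  # constant input carries no variation
    return [(v - mean) / sd for v in values]


counts = [0, 1, 3, 7, 15]          # toy expression counts for one gene
logged = log2_transform(counts)    # log scale compresses the large values
scaled = z_scores(logged)          # centred and scaled
print(logged)
print(scaled)
```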

Cleaning involves identifying and correcting or removing errors and inconsistencies. This could involve removing duplicate entries, handling missing values using imputation techniques (e.g., replacing missing values with the mean or median), or correcting data entry errors. In a genomic context, this might involve identifying and removing low-quality reads from next-generation sequencing data, or correcting sequencing errors.

For instance, I once worked on a project analyzing microbiome data where different sequencing centers used varying protocols, leading to inconsistent data formats and missing values. We standardized the formats, imputed missing taxonomic abundances using k-nearest neighbors, and removed low-quality sequences, significantly improving the reliability of downstream analyses.

Q 9. Explain the concept of data warehousing in bioinformatics.

Data warehousing in bioinformatics is the process of consolidating and organizing massive, heterogeneous bioinformatics data from disparate sources into a central repository for efficient querying and analysis. Imagine it as a well-organized library for biological data. It allows researchers to easily access and integrate data from various sources, such as genomics, proteomics, metabolomics, and clinical trials, leading to more comprehensive insights.

A typical bioinformatics data warehouse might include data from genomic databases (like GenBank or Ensembl), proteomic databases (like UniProt), metabolomic databases, and clinical records. These are often integrated using ETL (Extract, Transform, Load) processes. The warehouse uses a schema optimized for querying complex relationships between different data types, leveraging technologies like relational databases (e.g., PostgreSQL) or cloud-based data warehousing solutions (e.g., Snowflake, Google BigQuery).

The benefits include improved data accessibility, reduced redundancy, enhanced data quality, and facilitation of large-scale integrative analyses that would be otherwise impossible.

Q 10. How would you optimize a slow-running bioinformatics query?

Optimizing a slow-running bioinformatics query requires a systematic approach. First, you need to identify the bottleneck. This often involves profiling the query to determine which parts are consuming the most time. Tools like database profilers or query execution plans can be invaluable.

- Indexing: Ensure appropriate indexes are created on frequently queried columns. For example, if you frequently query by gene ID, a B-tree index on the gene ID column would significantly speed up searches.

- Query Optimization: Rewriting the query itself can often drastically improve performance. This might involve using joins more efficiently, avoiding full table scans, and using appropriate aggregate functions.

- Database Tuning: Optimizing database parameters like memory allocation, buffer pool size, and connection pooling can improve overall performance. This often requires in-depth knowledge of the database system.

- Data Partitioning: For extremely large datasets, partitioning the data into smaller, more manageable chunks can significantly reduce query times.

- Hardware Upgrades: In some cases, upgrading server hardware (more RAM, faster processors, SSDs) might be necessary.

For example, if a query joining two large genomic datasets is slow, we might create indexes on the common keys used in the join, optimize the join type (e.g., using an inner join instead of a full outer join if appropriate), or consider partitioning the datasets based on chromosome or genomic region.
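The effect of an index on the execution plan can be checked directly. The sketch below uses SQLite's EXPLAIN QUERY PLAN on a hypothetical genes table filled with synthetic rows; other database systems expose the same information through their own EXPLAIN variants, and the exact wording of the plan differs between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE genes (gene_id INTEGER PRIMARY KEY, gene_name TEXT, chromosome TEXT)"
)
# Synthetic rows, purely for illustration.
conn.executemany(
    "INSERT INTO genes (gene_name, chromosome) VALUES (?, ?)",
    [(f"gene{i}", f"chr{i % 5 + 1}") for i in range(1000)],
)


def query_plan(conn, sql, params=()):
    """Return the human-readable plan steps SQLite reports for a query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)  # last column holds the description


lookup = "SELECT gene_id, chromosome FROM genes WHERE gene_name = ?"

plan_before = query_plan(conn, lookup, ("gene42",))   # expected: a full table scan
conn.execute("CREATE INDEX idx_genes_name ON genes (gene_name)")
plan_after = query_plan(conn, lookup, ("gene42",))    # expected: a search via the index

print(plan_before)
print(plan_after)
```

Comparing the plan before and after a change is usually a quicker way to confirm an optimization than timing the query alone.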

Q 11. What are some common challenges in managing large-scale bioinformatics datasets?

Managing large-scale bioinformatics datasets presents several unique challenges:

- Storage Costs: Bioinformatics data is often massive, requiring substantial storage capacity, which can be expensive.

- Data Integration: Integrating data from diverse sources (genomics, proteomics, clinical data) with different formats and ontologies is complex and requires sophisticated data management techniques.

- Data Security and Privacy: Bioinformatics data often contains sensitive information about individuals, necessitating robust security measures to protect patient privacy and comply with regulations (like HIPAA).

- Computational Resources: Analyzing large datasets requires significant computational power, including high-performance computing clusters or cloud computing resources.

- Data Versioning and Reproducibility: Tracking changes in datasets and analysis pipelines is crucial for reproducibility but can be challenging with large, evolving datasets. Tools like Git can help manage code versioning, but specialized tools are needed for data versioning.

- Data Quality: Ensuring data quality and accuracy in large datasets is challenging due to the possibility of errors, inconsistencies, and artifacts introduced during data generation or processing.

For example, the enormous size of whole-genome sequencing data makes storage a major concern. Efficient storage solutions (like cloud storage) and data compression techniques become crucial. The diversity of data types within a study (e.g., genomic sequence data, gene expression profiles, clinical annotations) requires a well-defined data model and careful consideration of data integration strategies.

Q 12. How familiar are you with different database indexing techniques?

I’m familiar with several database indexing techniques, each with its strengths and weaknesses. The choice of index depends heavily on the type of query being performed and the characteristics of the data.

- B-tree indexes: These are widely used for range queries (e.g., finding all genes within a specific genomic region) and equality searches (e.g., finding a specific gene by its ID). They’re efficient for ordered data.

- Hash indexes: These are optimized for equality searches (e.g., finding a specific record based on a unique identifier). They are generally not suitable for range queries.

- R-tree indexes: These are specifically designed for spatial data, such as geographic coordinates or shapes. They are useful in bioinformatics for analyzing spatial data, e.g., cell locations within a tissue sample.

- Full-text indexes: These enable efficient searching within textual data, which can be useful for searching gene descriptions, publications, or clinical notes.

In practice, I’ve utilized B-tree indexes extensively in relational databases to accelerate queries involving genomic coordinates, gene IDs, and other numerical identifiers. I’ve also worked with full-text indexes for searching annotation data within genomic databases.
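The difference between hash-style and ordered indexes can be illustrated in memory. In this sketch a Python dict stands in for a hash index and a sorted list with binary search stands in for the ordered lookups a B-tree provides; the gene names and coordinates are invented, and a real database index is of course an on-disk structure.

```python
import bisect

# Toy (name, start) pairs, invented for illustration.
genes = [("geneA", 1200), ("geneB", 5400), ("geneC", 9800),
         ("geneD", 15000), ("geneE", 22000)]

# Hash-style index: constant-time exact match, but no notion of order.
by_name = {name: start for name, start in genes}

# Ordered index: keys kept sorted so a range maps to one contiguous slice.
sorted_starts = sorted((start, name) for name, start in genes)
start_keys = [start for start, _ in sorted_starts]


def genes_starting_between(low, high):
    """Range query via binary search over the sorted start positions."""
    lo = bisect.bisect_left(start_keys, low)
    hi = bisect.bisect_right(start_keys, high)
    return [name for _, name in sorted_starts[lo:hi]]


in_window = genes_starting_between(5000, 15000)
print(by_name["geneC"])
print(in_window)
```

Answering the range query from the dict alone would require checking every entry, which mirrors why hash indexes are a poor fit for genomic-coordinate ranges.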

Q 13. Describe your experience with data visualization tools used in bioinformatics.

I have extensive experience with various data visualization tools used in bioinformatics. The choice of tool depends heavily on the type of data and the insights we want to extract.

- Circos: Excellent for visualizing genomic data, particularly for showing relationships between different genomic regions, chromosomal rearrangements, or comparative genomics.

- ggplot2 (R): A very versatile and powerful package for creating publication-quality static graphics. It’s highly customizable and used for a wide range of bioinformatics visualizations, from scatter plots and box plots to more complex visualizations.

- Interactive Web-based tools: Tools like Shiny (R), Plotly, and D3.js allow the creation of dynamic and interactive visualizations that can provide a more engaging and insightful exploration of data. They are particularly well-suited for exploratory data analysis and sharing results with collaborators.

- Specialized bioinformatics software: Many bioinformatics software packages include their own visualization capabilities; for example, programs that handle NGS data often have integrated visualization tools for viewing read alignments, coverage, and variant calls.

In my past projects, I’ve used ggplot2 extensively for generating publication-ready figures to illustrate gene expression patterns, comparative genomics analyses, and statistical results. For exploratory analysis and interactive dashboards, I’ve used Shiny to create web applications that allow users to explore large datasets and visualize relationships interactively.

Q 14. Explain your understanding of data security and privacy in bioinformatics databases.

Data security and privacy in bioinformatics databases are paramount. Bioinformatics data often contains sensitive personal information, such as genetic data, medical history, and lifestyle information. Breaches can have serious ethical and legal consequences.

My approach to data security involves several key strategies:

- Data Access Control: Implementing strict access control mechanisms to limit access to sensitive data based on roles and responsibilities. This often involves using role-based access control (RBAC) systems.

- Data Encryption: Encrypting data both at rest (while stored) and in transit (during transmission) to protect against unauthorized access. Encryption algorithms should be strong and regularly updated.

- Data Anonymization and De-identification: Removing or altering identifying information from datasets to minimize the risk of re-identification. Techniques include data masking, generalization, and suppression.

- Regular Security Audits: Performing regular security audits and penetration testing to identify vulnerabilities and ensure systems are secure.

- Compliance with Regulations: Adhering to relevant regulations and guidelines, such as HIPAA (in the US), GDPR (in Europe), and other regional data protection laws.

For example, in a recent project involving human genomic data, we implemented strict access control protocols, encrypted all data at rest and in transit using strong encryption, and employed data anonymization techniques to remove or alter directly identifying information before data analysis began. We also followed HIPAA guidelines for protecting patient health information.
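Two of the de-identification techniques above, masking identifiers and generalizing quasi-identifiers, can be sketched as follows. The record layout, field names, and key are hypothetical, and keyed pseudonymization alone does not make genomic data anonymous, so this shows one step rather than a complete privacy solution.

```python
import hashlib
import hmac


def pseudonymize(patient_id, secret_key):
    """Replace an identifier with a keyed hash (HMAC-SHA256), truncated for readability."""
    digest = hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


def generalize_age(age, width=10):
    """Generalization: report an age band instead of the exact age."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def deidentify(record, secret_key):
    """Keep analysis fields, mask the ID, generalize age, and suppress the name."""
    return {
        "subject": pseudonymize(record["patient_id"], secret_key),
        "age_band": generalize_age(record["age"]),
        "genotype": record["genotype"],
    }


# Hypothetical record and key; a real key would come from a secrets manager.
key = b"demo-key-not-for-production"
record = {"patient_id": "P-0001", "name": "Jane Doe", "age": 47, "genotype": "A/G"}
safe = deidentify(record, key)
print(safe)
```

Using a keyed hash rather than a plain hash means the mapping cannot be rebuilt by someone who only knows the list of possible patient IDs.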

Q 15. What is your experience with scripting languages like Python or R in bioinformatics?

Python and R are indispensable tools in my bioinformatics toolkit. I’ve used Python extensively for tasks ranging from parsing complex sequence files (like FASTA and FASTQ) and manipulating biological data using libraries like Biopython and Pandas, to developing custom scripts for automating analyses and data visualization with Matplotlib and Seaborn. For instance, I wrote a Python script to process Next Generation Sequencing (NGS) data, automatically trimming adapters, aligning reads to a reference genome using tools like Bowtie2, and then calling variants with GATK. In R, I’m proficient in statistical analysis of biological data, using edgeR or DESeq2 for differential gene expression analysis and ggplot2 for visualization. I’ve applied R to analyze microarray and RNA-Seq data, identifying genes differentially expressed between experimental conditions. My experience includes building interactive dashboards using Shiny to explore and present complex biological datasets.

Q 16. Explain your experience with version control systems like Git.

Git is an integral part of my workflow. I’m highly proficient in using Git for version control, allowing me to track changes to code, data, and analysis pipelines. This ensures reproducibility, facilitates collaboration, and provides a safety net for recovering from mistakes. I routinely use branching strategies, such as Gitflow, for managing concurrent development and feature integration. I understand the importance of commit messages that are clear, concise, and informative, enabling others to easily understand the changes made. I’m comfortable using platforms like GitHub and GitLab for collaborative projects. For example, during a recent collaborative project analyzing microbiome data, we used Git to manage the code for preprocessing the sequencing data, performing taxonomic classification, and creating visualizations; it greatly improved our collaboration and code management.

Q 17. Describe a time you had to troubleshoot a complex bioinformatics database issue.

During a project involving a large genomic database, I encountered an issue where queries were inexplicably slow. The database was running on a clustered system, and initial investigation showed no obvious bottlenecks. My troubleshooting involved several steps: First, I analyzed the database logs for error messages or performance indicators. This highlighted unusually high disk I/O. Then, I investigated the database schema and query plans, discovering a poorly indexed join between two large tables, which was causing the slowdowns. After I created appropriate indexes on the relevant columns, query execution time decreased dramatically. Further, using database monitoring tools, I identified other areas for optimization, including regular database maintenance such as running VACUUM and ANALYZE (in PostgreSQL terms) to reclaim space and refresh planner statistics.

Q 18. How would you approach the design of a bioinformatics pipeline?

Designing a bioinformatics pipeline involves a structured approach. I usually start by clearly defining the goals and objectives of the pipeline. This involves specifying the input data, the desired output, and the intermediate steps required to transform the input data into the desired output. Next, I would select appropriate tools and software based on their capabilities and compatibility. I then focus on designing the data flow, ensuring the output of one step is compatible with the input of the next. Error handling and logging are crucial aspects of my pipeline design to ensure robustness and facilitate debugging. Finally, thorough testing and validation are key to ensuring the pipeline’s accuracy and reproducibility. Consider an RNA-Seq analysis pipeline: it would begin with raw sequencing reads, then perform quality control, alignment to a reference genome, read counting, normalization, and finally differential gene expression analysis. Each step would have checks and error handling implemented.
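The data-flow, logging, and error-handling ideas can be sketched as a tiny step runner. The two steps here are placeholders standing in for real quality control and read counting (a real pipeline would call external tools or use a workflow manager), and all names and reads are invented for the example.

```python
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("pipeline")


def quality_filter(reads, min_length=4):
    """Placeholder QC step: drop short reads and reads with non-ACGT characters."""
    return [r for r in reads if len(r) >= min_length and set(r) <= set("ACGT")]


def count_reads(reads):
    """Placeholder counting step: tally identical reads."""
    counts = {}
    for read in reads:
        counts[read] = counts.get(read, 0) + 1
    return counts


def run_pipeline(data, steps):
    """Run steps in order, logging each one and stopping on failure or empty output."""
    for name, step in steps:
        log.info("starting step %s", name)
        try:
            data = step(data)
        except Exception:
            log.exception("step %s failed", name)
            raise
        if not data:
            raise ValueError(f"step {name!r} produced no output")
    return data


steps = [("quality_filter", quality_filter), ("count_reads", count_reads)]
result = run_pipeline(["ACGT", "ACGT", "AC", "ACGN", "GGCC"], steps)
print(result)
```

Checking each step's output before handing it on is what keeps a failure early in the pipeline from silently producing an empty result at the end.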

Q 19. Explain your familiarity with different types of biological ontologies.

Biological ontologies provide a standardized vocabulary for describing biological entities and their relationships. I’m familiar with several key ontologies, including the Gene Ontology (GO), which describes gene product function in terms of molecular function, biological process, and cellular component; the Protein Ontology (PRO), which classifies proteins; and the Ontology for Biomedical Investigations (OBI), which standardizes descriptions of research investigations. These ontologies are crucial for integrating and analyzing diverse biological datasets, allowing for more meaningful comparisons and inferences. For instance, in analyzing gene expression data, GO annotations can help us understand the functional enrichment of differentially expressed genes. Understanding ontology structure (hierarchical relationships between terms, where a term can have more than one parent) allows for effective querying and data analysis.
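Querying along the hierarchy amounts to walking from a term up to all of its ancestors. The sketch below uses a small made-up graph with descriptive labels; it is not the actual GO structure, and the gene annotations are invented.

```python
# Toy "is_a" graph: each term maps to its parents (a term may have several).
PARENTS = {
    "root": [],
    "metabolic_process": ["root"],
    "cellular_process": ["root"],
    "cellular_metabolic_process": ["metabolic_process", "cellular_process"],
    "dna_repair": ["cellular_metabolic_process"],
}


def ancestors(term, parents=PARENTS):
    """Return every term reachable by following parent links upward."""
    seen = set()
    stack = list(parents.get(term, []))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(parents.get(current, []))
    return seen


def annotated_under(term, gene_annotations):
    """Genes annotated with the term itself or with any of its descendants."""
    return sorted(
        gene for gene, terms in gene_annotations.items()
        if any(t == term or term in ancestors(t) for t in terms)
    )


# Hypothetical annotations.
annotations = {
    "geneA": ["dna_repair"],
    "geneB": ["cellular_process"],
    "geneC": ["metabolic_process"],
}
print(annotated_under("metabolic_process", annotations))
```

This upward propagation is why a gene annotated with a specific term also counts toward its broader parent terms in enrichment analyses.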

Q 20. What is your understanding of data integration in bioinformatics?

Data integration in bioinformatics involves combining data from different sources to gain a more comprehensive understanding of biological systems. This is often challenging because different databases may use different formats, terminologies, or even represent the same information differently. Successful integration requires a strategic approach, including data standardization (e.g., using common identifiers), data transformation (e.g., converting data formats), and data merging (e.g., joining tables based on common keys). For example, integrating genomic data from a genome browser with proteomic data from a mass spectrometry experiment requires careful mapping of identifiers (e.g., gene symbols to protein accession numbers) and ensuring consistent units of measurement.

Q 21. How would you handle data inconsistencies across multiple databases?

Handling data inconsistencies across multiple databases requires a careful and systematic approach. First, I’d identify the nature and extent of the inconsistencies. This could involve comparing data across databases looking for discrepancies in values, units, or terminology. Then, I’d develop a strategy to address the inconsistencies, which could involve data cleaning (correcting obvious errors), data transformation (mapping values to a consistent standard), or data reconciliation (using external information to resolve conflicts). Sometimes, it may be necessary to create a reconciliation table to map values between databases. For example, if one database uses a different gene nomenclature system than another, I would create a mapping table to resolve differences in naming conventions before integrating the data. Ultimately, careful documentation of these decisions is critical for transparency and reproducibility.
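A mapping table of this kind can be sketched as a simple lookup applied before merging. The alias table below is a tiny illustrative subset, the source names db_a and db_b and the values are invented, and unresolved symbols are reported rather than guessed so that the decision stays documented.

```python
# Illustrative subset of a symbol mapping table (alias -> canonical symbol).
CANONICAL = {"TP53", "ERBB2"}
ALIASES = {"P53": "TP53", "HER2": "ERBB2"}


def canonical_symbol(symbol):
    """Map a symbol or alias to its canonical form, or None if it is unknown."""
    key = symbol.strip().upper()
    if key in CANONICAL:
        return key
    return ALIASES.get(key)


def merge_sources(*sources):
    """Merge per-source values under canonical symbols and list what could not be mapped."""
    merged, unresolved = {}, []
    for source_name, records in sources:
        for symbol, value in records.items():
            canon = canonical_symbol(symbol)
            if canon is None:
                unresolved.append((source_name, symbol))
                continue
            merged.setdefault(canon, {})[source_name] = value
    return merged, unresolved


# Two hypothetical databases that name the same genes differently.
db_a = {"TP53": 5.2, "HER2": 1.1}
db_b = {"p53": 5.0, "ERBB2": 1.3, "XYZ1": 0.4}
merged, unresolved = merge_sources(("db_a", db_a), ("db_b", db_b))
print(merged)
print(unresolved)
```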

Q 22. Describe your experience with database replication and failover mechanisms.

Database replication and failover mechanisms are crucial for ensuring high availability and data redundancy in bioinformatics databases, which often store massive and irreplaceable datasets. Replication involves creating copies of the database on different servers. Failover mechanisms automatically switch to a replicated database if the primary one becomes unavailable.

In my experience, I’ve worked with both synchronous and asynchronous replication. Synchronous replication guarantees data consistency across all copies, but it can impact performance. Asynchronous replication prioritizes speed, accepting a small window of inconsistency. I’ve used tools like MySQL’s replication feature and PostgreSQL’s streaming replication to implement these strategies.

For failover, I’ve implemented solutions using techniques like heartbeat monitoring and load balancers. Heartbeat monitoring regularly checks the status of the primary database server. If it fails, the load balancer automatically redirects traffic to a standby server, minimizing downtime. I’ve used technologies like HAProxy and Keepalived for this purpose. In a real-world scenario, imagine a genome sequencing project processing terabytes of data. With a robust replication and failover setup, if the primary database server crashes, the project loses little or no data (none with synchronous replication) and can continue analysis with minimal disruption.

Q 23. Explain the concept of schema design in relational databases.

Schema design in relational databases involves defining the structure of the database, including tables, columns, data types, relationships, and constraints. A well-designed schema is fundamental for data integrity, efficient querying, and scalability. It’s like creating a blueprint for a house; a poorly designed blueprint leads to structural problems later.

Key aspects include normalization to reduce data redundancy and improve data consistency. I often use the Boyce-Codd Normal Form (BCNF) as a target, aiming for efficient data storage. Defining primary and foreign keys is critical for establishing relationships between tables. Proper data types, such as integers for counts and VARCHAR for strings, ensure data integrity. For example, in a bioinformatics database storing genomic data, I would create a table for genes with columns like gene_id (INT, PRIMARY KEY), gene_name (VARCHAR), chromosome (VARCHAR), start_position (INT), and end_position (INT). Another table could link these genes to associated proteins, using gene_id as a foreign key. Constraints, such as NOT NULL and UNIQUE, enforce data quality. A well-thought-out schema ensures the database can efficiently handle large volumes of genomic data while maintaining accuracy.
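The gene and protein tables described above can be sketched in SQLite to show the constraints at work. Column sizes, the accession column, and the CHECK constraint are assumptions added for the example, and SQLite enforces foreign keys only after the PRAGMA shown here.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves FK enforcement off by default
conn.executescript(
    """
    CREATE TABLE genes (
        gene_id        INTEGER PRIMARY KEY,
        gene_name      VARCHAR(64) NOT NULL UNIQUE,
        chromosome     VARCHAR(16) NOT NULL,
        start_position INTEGER NOT NULL,
        end_position   INTEGER NOT NULL,
        CHECK (start_position <= end_position)
    );
    CREATE TABLE proteins (
        protein_id INTEGER PRIMARY KEY,
        accession  VARCHAR(32) NOT NULL UNIQUE,
        gene_id    INTEGER NOT NULL REFERENCES genes (gene_id)
    );
    """
)
# Toy rows with made-up names and accessions.
conn.execute("INSERT INTO genes VALUES (1, 'geneA', 'chr1', 100, 900)")
conn.execute("INSERT INTO proteins VALUES (1, 'ACC0001', 1)")


def try_insert(sql):
    """Return True if the row is accepted, False if a constraint rejects it."""
    try:
        conn.execute(sql)
        return True
    except sqlite3.IntegrityError:
        return False


orphan_ok = try_insert("INSERT INTO proteins VALUES (2, 'ACC0002', 999)")      # no such gene
duplicate_ok = try_insert("INSERT INTO genes VALUES (2, 'geneA', 'chr2', 1, 2)")  # name reused
inverted_ok = try_insert("INSERT INTO genes VALUES (3, 'geneB', 'chr1', 500, 100)")  # start > end
print(orphan_ok, duplicate_ok, inverted_ok)
```

Letting the database reject bad rows means every loader script gets the same checks without having to reimplement them.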

Q 24. What are some common performance bottlenecks in bioinformatics databases?

Performance bottlenecks in bioinformatics databases are often caused by several factors. One common issue is slow queries, especially those involving complex joins across large tables. Poorly indexed tables are a major culprit, as are inefficient queries with many subqueries.

Another bottleneck comes from the sheer volume of data. Analyzing whole genomes or large transcriptomes requires handling massive datasets, exceeding available memory or processing power. Inappropriate data types or lack of partitioning can further exacerbate this.

For example, searching for specific DNA sequences within millions of genomic reads can be extremely time-consuming without proper indexing. Similarly, analyzing large datasets of next-generation sequencing (NGS) data can overwhelm resources if not appropriately handled. Poorly chosen database technology can also contribute to bottlenecks; a database system not designed for handling large volumes of data may be insufficient. To address these bottlenecks, one needs to optimize queries, use appropriate indexing strategies, consider partitioning, and choose a suitable database management system.

Q 25. How would you assess the quality of bioinformatics data?

Assessing the quality of bioinformatics data is a multi-faceted process, involving several key aspects. It’s not simply about the number of samples or reads; it’s about their reliability and fitness for the intended purpose.

Firstly, data completeness and accuracy are paramount. Missing values or erroneous entries can seriously compromise analyses. Methods like quality control (QC) checks for NGS data, evaluating read quality scores, and assessing base call accuracy are critical. Secondly, consistency across the dataset and adherence to established standards (e.g., FASTA for sequence data) are vital. Inconsistent data formats lead to errors and hinder analysis.

Thirdly, metadata quality plays a significant role. Comprehensive and accurate metadata (e.g., sample origin, sequencing platform, experimental conditions) ensures reproducibility and reliable interpretation of results. Finally, biases in the data need to be carefully considered and documented. This may involve technical biases (e.g., PCR amplification bias) or selection biases (e.g., sample selection). Thorough quality assessment is a critical step in all bioinformatics projects.
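The read-quality check mentioned above can be sketched by decoding FASTQ quality strings. The snippet assumes the common Phred+33 encoding and a mean-quality threshold of 20, both stated as assumptions, and the reads are toy records; dedicated QC tools do far more than this.

```python
def phred_scores(quality_string, offset=33):
    """Decode a FASTQ quality string into Phred scores (Phred+33 assumed)."""
    return [ord(ch) - offset for ch in quality_string]


def mean_quality(quality_string):
    """Average Phred score across the read."""
    scores = phred_scores(quality_string)
    return sum(scores) / len(scores)


def passes_qc(record, min_mean_quality=20):
    """Accept a (name, sequence, quality) record if it is consistent and good enough."""
    _, sequence, quality = record
    if not quality or len(sequence) != len(quality):
        return False  # malformed record: lengths must match
    return mean_quality(quality) >= min_mean_quality


# Toy reads: a high-quality read, a low-quality read, and a malformed one.
reads = [
    ("read1", "ACGT", "IIII"),
    ("read2", "ACGT", "####"),
    ("read3", "ACGT", "III"),
]
kept = [name for name, seq, qual in reads if passes_qc((name, seq, qual))]
print(kept)
```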

Q 26. What are some ethical considerations related to managing bioinformatics data?

Ethical considerations in managing bioinformatics data are central to responsible research practices. The core principles revolve around privacy, security, and consent.

Firstly, data privacy is paramount. Genomic data is highly personal and sensitive, and its unauthorized access or disclosure can have severe consequences. Robust security measures are essential, including access control mechanisms, encryption, and data anonymization techniques. These must comply with regulations such as HIPAA (in the US) and GDPR (in the EU). Secondly, informed consent is crucial. Researchers must obtain explicit consent from individuals before using their genomic data for research purposes. This involves clearly explaining the research objectives, potential risks and benefits, and how the data will be handled.

Thirdly, data security is critical. Bioinformatics databases must be protected against unauthorized access, alteration, or destruction. Regular security audits, robust password policies, and intrusion detection systems are essential. Finally, data sharing needs to balance the benefits of collaboration with the need to protect individual privacy. Appropriate data sharing agreements and mechanisms for data de-identification are essential.
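One common building block for de-identification is replacing sample or patient identifiers with keyed pseudonyms before data is shared. The sketch below uses Python's standard `hmac` and `hashlib` modules. The function name `pseudonymize`, the `S-` prefix, the 16-character truncation, and the example key are all illustrative assumptions. Note that this only pseudonymizes the identifier; the genomic data itself can still be identifying, so it does not replace access controls or data sharing agreements.

```python
import hashlib
import hmac


def pseudonymize(sample_id, secret_key):
    """Derive a stable pseudonym from an identifier using HMAC-SHA256.

    The same ID and key always give the same pseudonym, so records can
    still be linked, but the mapping cannot be recomputed without the key.
    """
    digest = hmac.new(secret_key, sample_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return "S-" + digest[:16]  # truncation length is an arbitrary choice


# Hypothetical key; in practice it would come from a secrets manager,
# never from source code.
key = b"replace-with-a-securely-stored-key"
alias_a = pseudonymize("PATIENT-0001", key)
alias_b = pseudonymize("PATIENT-0002", key)
print(alias_a, alias_b)
```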

Q 27. Describe your experience with using containerization technologies (e.g., Docker) for bioinformatics workflows.

Containerization technologies like Docker provide a highly beneficial approach to managing bioinformatics workflows. Docker containers package software and their dependencies into isolated units, ensuring consistent execution across different environments.

In my experience, using Docker has significantly improved reproducibility and simplified deployment of bioinformatics pipelines. Instead of struggling with complex software installations and dependencies on different systems, I can create a Docker image containing all necessary tools and libraries for a specific analysis. This image can then be run consistently on any machine with Docker installed, minimizing compatibility issues.

For instance, I’ve used Docker to package a complex RNA-Seq pipeline, including tools like FastQC, HISAT2, StringTie, and DESeq2. Each step of the pipeline runs within a separate container, ensuring that the dependencies for each tool are isolated. This modular approach simplifies maintenance and updates. Docker Compose further enhances this workflow by orchestrating multiple containers to work together in a coordinated manner. Containerization has become an indispensable part of my bioinformatics workflow, boosting reproducibility and reducing deployment headaches.
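As a rough sketch of the one-container-per-step idea, the Python snippet below assembles `docker run` commands for two pipeline steps. The image names (`example/fastqc:latest`, `example/hisat2:latest`), file names, and host path are hypothetical placeholders. Only the standard `docker run` options `--rm`, `-v`, and `-w` are used, and the commands are printed rather than executed.

```python
import shlex


def docker_run_command(image, tool_args, host_dir, container_dir="/data"):
    """Build a `docker run` command that mounts host_dir and runs one tool."""
    return [
        "docker", "run", "--rm",              # remove the container when it exits
        "-v", f"{host_dir}:{container_dir}",  # share input/output files with the host
        "-w", container_dir,                  # run the tool inside the mounted directory
        image, *tool_args,
    ]


# Hypothetical images and files: one container per pipeline step.
steps = [
    ("example/fastqc:latest", ["fastqc", "sample.fastq.gz"]),
    ("example/hisat2:latest",
     ["hisat2", "-x", "genome_index", "-U", "sample.fastq.gz", "-S", "sample.sam"]),
]

commands = [docker_run_command(image, args, "/path/to/project") for image, args in steps]
for cmd in commands:
    print(shlex.join(cmd))
```

To run the steps for real, each command list could be passed to `subprocess.run(cmd, check=True)`. A workflow manager or Docker Compose would normally take over this orchestration in a production pipeline.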

Q 28. How would you implement a scalable solution for analyzing next-generation sequencing data?

Analyzing next-generation sequencing (NGS) data requires a scalable solution to handle the massive datasets generated. A single machine is often insufficient. A cloud-based setup built on distributed computing frameworks like Apache Spark or Hadoop is a highly effective approach.

The solution would involve breaking down the analysis into parallel tasks. This could be done by splitting the NGS reads into smaller chunks and processing them concurrently across multiple machines. Cloud storage solutions such as Amazon S3 or Google Cloud Storage can efficiently store the massive amounts of data, providing scalability and durability.

For example, alignment of reads to a reference genome could be parallelized using Spark, with each node handling a subset of reads. Subsequent analyses, such as variant calling, can similarly benefit from distributed processing. Intermediate and final results can be stored in a database suited to large-scale data, such as a graph database or a distributed NoSQL store like Cassandra. This approach leverages distributed computing to analyze NGS data in a scalable and cost-effective manner.
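The split-process-combine pattern described above can be sketched on a single machine with Python's standard library. This is a stand-in for a cluster framework such as Spark, not a Spark example. The helper names, the chunk size, and the choice of GC content as the per-chunk task are illustrative assumptions. Threads are used so the sketch runs anywhere; a real CPU-bound workload would use processes or a distributed framework instead.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import islice


def chunked(iterable, size):
    """Yield successive lists of at most `size` items."""
    it = iter(iterable)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk


def gc_counts(reads):
    """Per-chunk task: return (number of G/C bases, total bases)."""
    gc = sum(seq.count("G") + seq.count("C") for seq in reads)
    total = sum(len(seq) for seq in reads)
    return gc, total


def parallel_gc_fraction(reads, chunk_size=2, workers=4):
    """Process chunks concurrently, then combine the partial results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(gc_counts, chunked(reads, chunk_size)))
    gc = sum(p[0] for p in partials)
    total = sum(p[1] for p in partials)
    return gc / total if total else 0.0


# Toy reads (hypothetical data).
reads = ["GGCC", "ATAT", "GCAT", "AAAA", "CCCC"]
print(parallel_gc_fraction(reads))
```

The key design point is that each chunk produces a small partial result that can be merged independently of processing order. That property is what lets the same logic scale out across many nodes.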

Key Topics to Learn for Bioinformatics Databases and Tools Interview

- Database Structure and Querying: Understanding relational databases (queried with SQL), NoSQL databases, and their application in biological data management. Practical application: Designing efficient queries to retrieve specific genomic information from a large database.

- Sequence Alignment and Analysis: Mastering algorithms like BLAST, Needleman-Wunsch, and Smith-Waterman. Practical application: Identifying homologous sequences and understanding their evolutionary relationships.

- Phylogenetic Analysis: Constructing phylogenetic trees using various methods and interpreting the evolutionary relationships depicted. Practical application: Inferring the evolutionary history of a group of organisms based on their genomic data.

- Genome Browsers and Annotation Tools: Familiarity with popular genome browsers (e.g., UCSC Genome Browser, Ensembl) and annotation tools. Practical application: Analyzing gene structures, identifying regulatory regions, and visualizing genomic variations.

- Data Visualization and Interpretation: Creating meaningful visualizations of biological data using tools like R or Python. Practical application: Presenting complex genomic data in a clear and understandable manner.

- Bioinformatics Tool Selection and Workflow Design: Understanding the strengths and limitations of different bioinformatics tools and designing efficient workflows for analyzing biological data. Practical application: Choosing the appropriate tools and creating a robust pipeline for analyzing Next-Generation Sequencing data.

- Data Mining and Machine Learning in Bioinformatics: Applying machine learning techniques to analyze large biological datasets. Practical application: Predicting protein function or identifying disease biomarkers using machine learning algorithms.

Next Steps

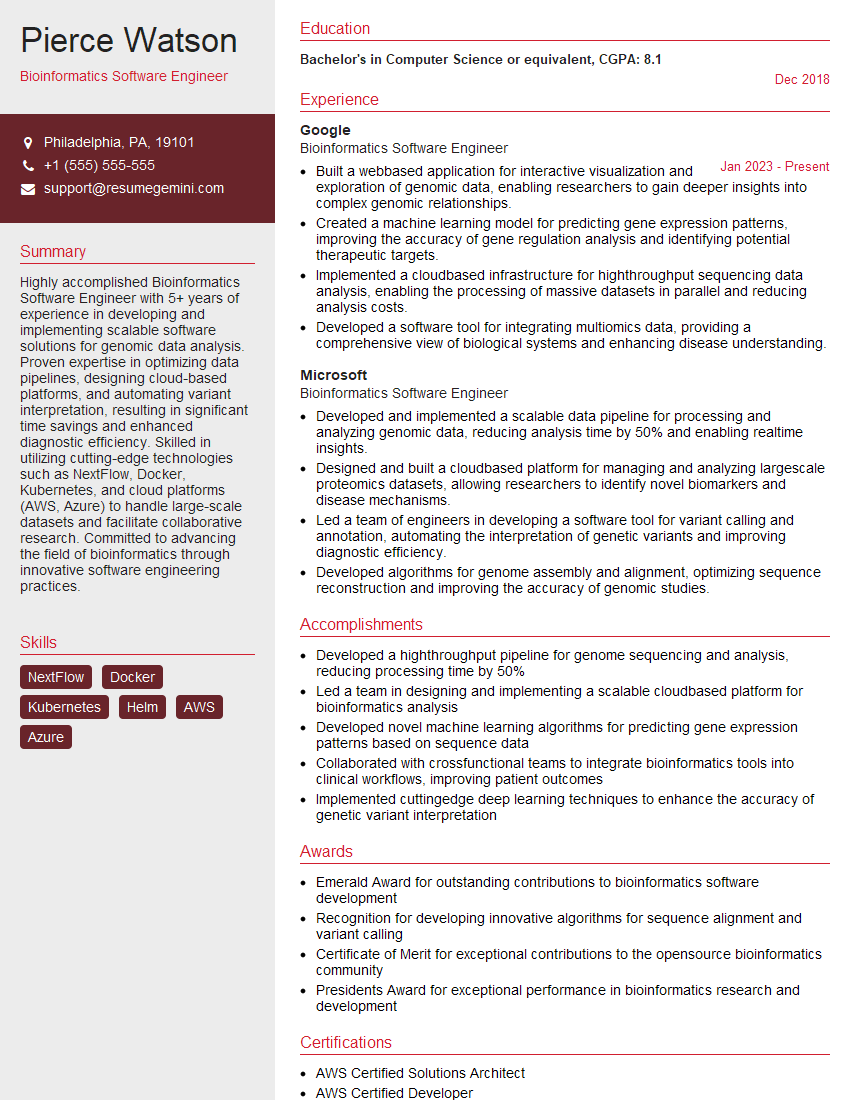

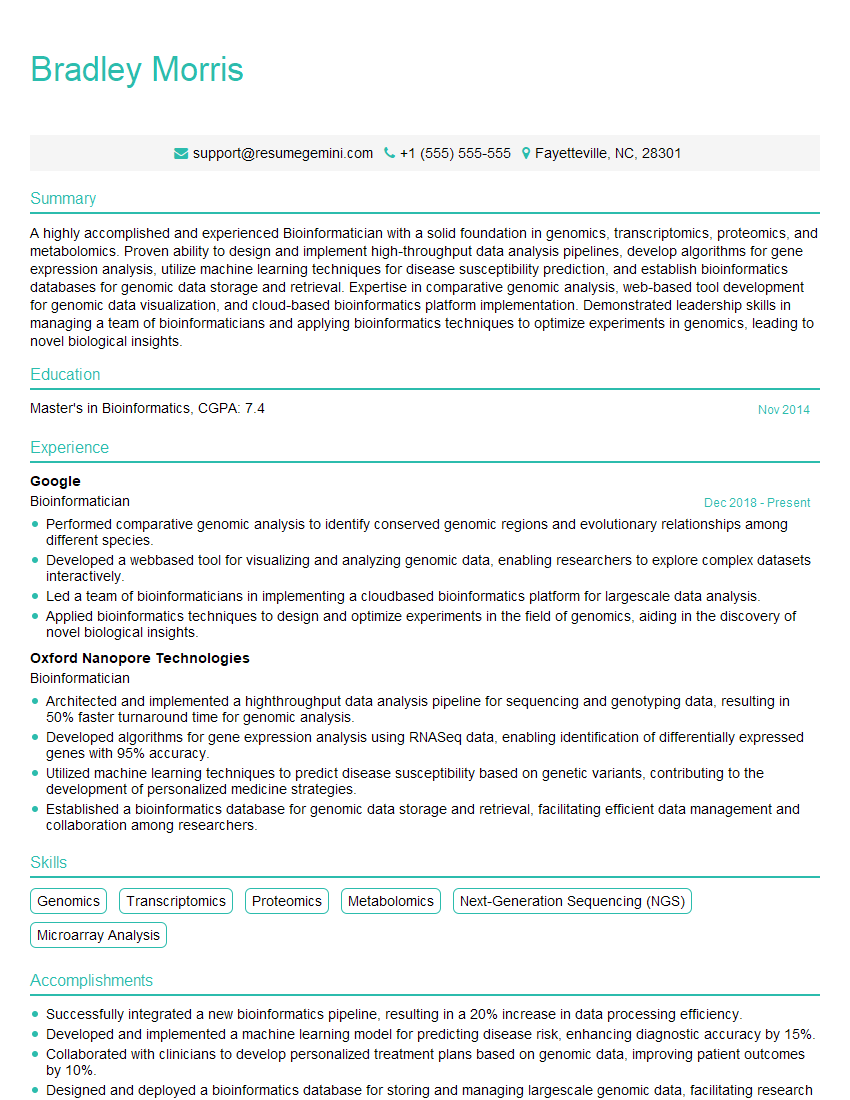

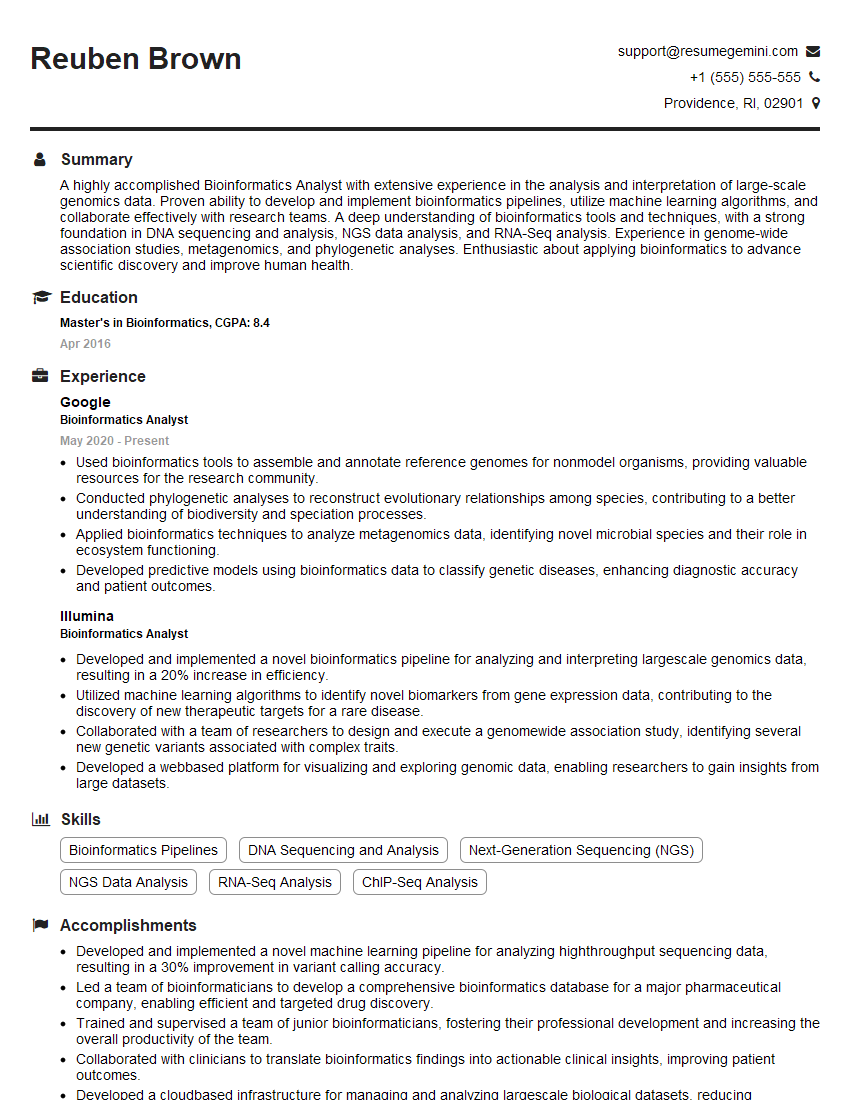

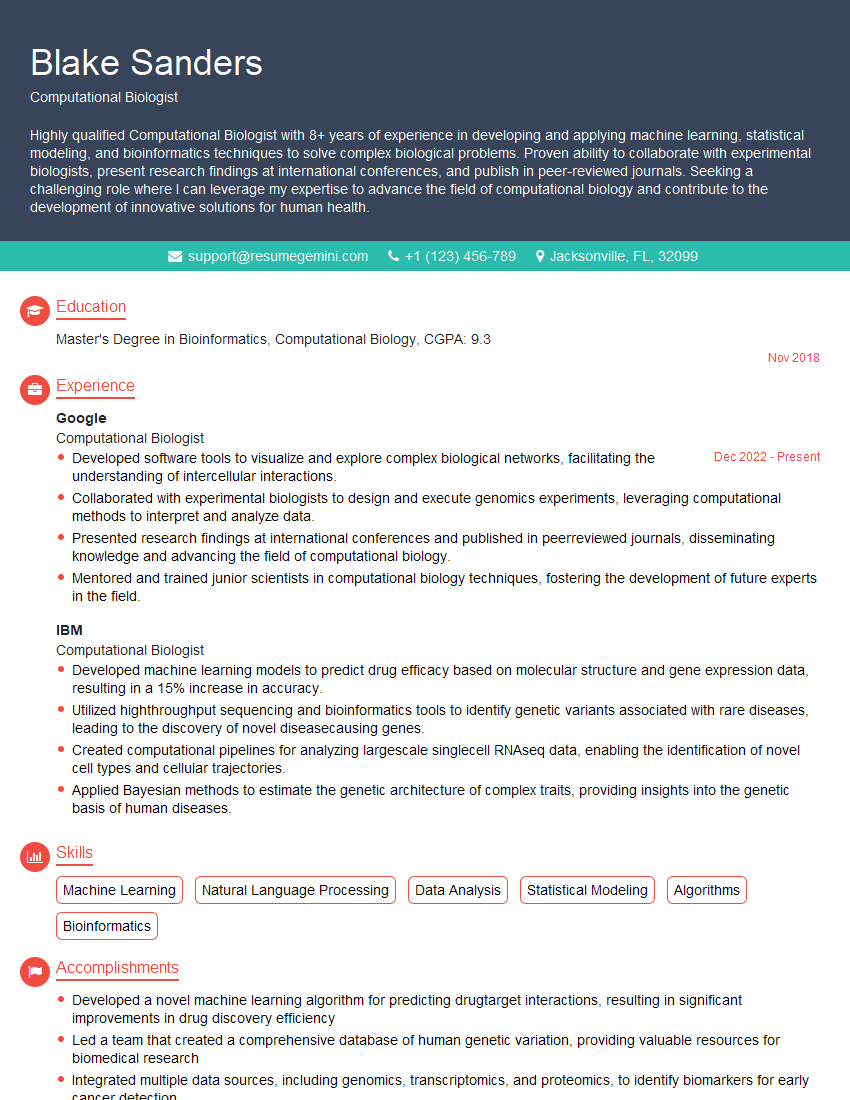

Mastering Bioinformatics Databases and Tools is crucial for a successful and rewarding career in the field. It opens doors to exciting roles in research, industry, and academia. To maximize your job prospects, it’s essential to create a compelling, ATS-friendly resume that showcases your skills and experience effectively. ResumeGemini is a trusted resource to help you build a professional and impactful resume tailored to your specific career goals. Examples of resumes tailored to Bioinformatics Databases and Tools are available to help guide you. Invest the time in crafting a strong resume—it’s your first impression on potential employers.
