Cracking a skill-specific interview, like one for Fraud Data Analysis, requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in Fraud Data Analysis Interview

Q 1. Explain the difference between supervised and unsupervised learning in fraud detection.

In fraud detection, both supervised and unsupervised learning play crucial roles, but they differ significantly in their approach. Supervised learning uses labeled data – that is, data where each transaction is already classified as fraudulent or legitimate. The algorithm learns from this labeled data to build a model that can classify new, unseen transactions. Think of it like teaching a child to identify poisonous berries by showing them pictures of both poisonous and safe berries and labeling them accordingly. The child learns to distinguish between the two based on the labeled examples.

Unsupervised learning, on the other hand, works with unlabeled data. It aims to identify patterns and anomalies within the data without prior knowledge of which transactions are fraudulent. This is more akin to asking the child to sort berries into groups based on their inherent characteristics – size, color, shape – without prior knowledge of which are poisonous. The algorithm identifies unusual groupings, which might indicate fraudulent activity. These anomalies then require human review for confirmation.

For example, a supervised model might use historical data of fraudulent credit card transactions (labeled as fraudulent) and legitimate transactions (labeled as legitimate) to learn the patterns separating the two. An unsupervised model might cluster transactions based on spending patterns, identifying clusters that deviate significantly from the norm, which could warrant further investigation for potential fraud.

Q 2. Describe your experience with anomaly detection techniques.

Anomaly detection is a cornerstone of unsupervised fraud detection. I have extensive experience with several techniques, including:

- Clustering algorithms: Algorithms such as K-means and DBSCAN group similar transactions together. Transactions that sit far from every K-means centroid, or that DBSCAN labels as noise, are potential fraud candidates. For example, I once used DBSCAN to identify unusual patterns in international money transfers, revealing a money-laundering ring.

- One-class SVM: This technique builds a model representing ‘normal’ transactions. Transactions falling outside this model are flagged as anomalies. I’ve effectively employed this for detecting unusual login attempts on a banking system.

- Isolation Forest: This algorithm isolates anomalies by randomly partitioning the data. Anomalies are identified as data points requiring fewer partitions to isolate. This method is particularly efficient for high-dimensional data and proved valuable in identifying unusual insurance claim patterns.

My experience includes selecting the appropriate algorithm based on dataset characteristics (size, dimensionality, noise levels), tuning hyperparameters to optimize performance, and integrating these techniques with other fraud detection methods for a holistic approach. I also understand the limitations of each technique and the importance of carefully interpreting the results, often incorporating domain expertise to validate identified anomalies.
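As a minimal illustration of the idea behind these techniques, the sketch below flags outlying transaction amounts with a modified z-score based on the median and the median absolute deviation (MAD). This is a deliberately simpler stand-in for the algorithms listed above, not an implementation of any of them; the sample amounts and the 3.5 cutoff are assumptions chosen for the example.

```python
from statistics import median

def modified_z_scores(values):
    """Return a median/MAD-based z-score for each value."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # All values (nearly) identical: nothing stands out.
        return [0.0 for _ in values]
    # 0.6745 rescales the MAD so scores are comparable to standard z-scores.
    return [0.6745 * (v - med) / mad for v in values]

def flag_anomalies(values, threshold=3.5):
    """Return the indices of values whose |score| exceeds the threshold.

    The 3.5 cutoff is an assumed rule of thumb; tune it on real data.
    """
    scores = modified_z_scores(values)
    return [i for i, s in enumerate(scores) if abs(s) > threshold]

# Hypothetical transaction amounts for a single customer.
amounts = [42.0, 38.5, 51.0, 47.25, 40.0, 44.9, 39.0, 2500.0, 45.5, 43.0]
anomalies = flag_anomalies(amounts)
```

Because the median and MAD are barely affected by the outlier itself, the 2500.0 transaction stands out clearly. Flagged indices would still go to human review, as with any unsupervised method.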

Q 3. How would you handle imbalanced datasets in fraud detection?

Imbalanced datasets, where fraudulent transactions are significantly fewer than legitimate ones, are a common challenge in fraud detection. Simply relying on accuracy as a metric can be misleading, as a model predicting everything as legitimate will achieve high accuracy but fail to detect fraud. To address this, I employ several strategies:

- Resampling techniques: Oversampling the minority class (fraudulent transactions) using techniques like SMOTE (Synthetic Minority Over-sampling Technique) creates synthetic data points to balance the classes. Alternatively, undersampling the majority class (legitimate transactions) reduces the number of majority class instances, although this can lead to loss of information.

- Cost-sensitive learning: Assigning different misclassification costs. Misclassifying a fraudulent transaction as legitimate (a false negative) is far more costly than misclassifying a legitimate transaction as fraudulent (a false positive). This cost matrix is integrated into the model’s training process, penalizing false negatives more heavily.

- Ensemble methods: Combining multiple models trained on different subsets or resampled versions of the data can improve performance and robustness. This helps mitigate the impact of an imbalanced dataset and improves the generalizability of the model.

The choice of technique depends on the specific dataset and the cost associated with different types of errors. Experimentation and careful evaluation are crucial to determine the most effective strategy.
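The resampling and cost-sensitive ideas above can be sketched with the standard library alone. The example uses plain random oversampling (duplicating minority rows), which is simpler than SMOTE and does not synthesize new points, and it also derives inverse-frequency class weights as one way to express misclassification costs. The `is_fraud` field name and the 95/5 split are assumptions made for the illustration.

```python
import random

def random_oversample(rows, label_key="is_fraud", seed=0):
    """Duplicate minority-class rows at random until both classes are equal in size."""
    rng = random.Random(seed)
    minority = [r for r in rows if r[label_key] == 1]
    majority = [r for r in rows if r[label_key] == 0]
    if not minority or len(minority) >= len(majority):
        return list(rows)
    extra = [dict(rng.choice(minority)) for _ in range(len(majority) - len(minority))]
    balanced = majority + minority + extra
    rng.shuffle(balanced)
    return balanced

def class_weights(rows, label_key="is_fraud"):
    """Inverse-frequency weights: rarer classes get proportionally larger weights."""
    counts = {}
    for r in rows:
        counts[r[label_key]] = counts.get(r[label_key], 0) + 1
    n = len(rows)
    return {label: n / (len(counts) * c) for label, c in counts.items()}

# Hypothetical training split: 95 legitimate and 5 fraudulent transactions.
rows = [{"amount": float(i), "is_fraud": 0} for i in range(95)]
rows += [{"amount": 1000.0 + i, "is_fraud": 1} for i in range(5)]

balanced = random_oversample(rows)
weights = class_weights(rows)
```

Resampling should be applied to the training split only; the validation and test sets keep the original class ratio so that the reported metrics reflect production conditions.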

Q 4. What are some common types of fraud you have experience analyzing?

My experience encompasses a wide range of fraud types, including:

- Credit card fraud: This involves unauthorized use of credit cards, including card-not-present and card-present fraud. I’ve analyzed transactions to detect anomalies such as unusually high purchase amounts, multiple transactions in quick succession, or transactions from geographically disparate locations.

- Insurance fraud: This includes false claims, inflated claims, and staged accidents. I’ve worked on identifying patterns in claim data, such as unusual claim frequencies from specific individuals or unusual injury patterns.

- Financial market fraud: This includes insider trading, market manipulation, and money laundering. My analysis involved detecting unusual trading patterns and transactions that deviate from established market norms.

- Cybersecurity fraud: This comprises various online frauds, including phishing, identity theft, and account takeovers. Analyzing login attempts, user behavior, and network traffic patterns proved vital in detecting fraudulent activities.

This diversity of experience allows me to adapt my analytical approach to different types of fraud, considering the unique characteristics and data sources associated with each.

Q 5. Explain your understanding of various data mining techniques used in fraud detection.

Data mining plays a vital role in fraud detection. I’m proficient in various techniques, including:

- Association rule mining: This helps discover relationships between different transactions or variables. For example, identifying that a specific sequence of transactions is often associated with fraudulent activity. Algorithms like Apriori and FP-Growth are frequently employed.

- Classification algorithms: These models, such as Logistic Regression, Support Vector Machines (SVMs), and Random Forests, predict whether a transaction is fraudulent or legitimate based on the learned patterns from historical data. These are particularly effective in supervised learning settings.

- Regression analysis: This technique helps identify the relationship between different variables and predict the likelihood of fraud. This might be useful in predicting the amount of loss associated with different types of fraud.

- Decision Trees and Random Forests: These create a tree-like model that predicts the fraud probability based on various attributes. They offer good interpretability and are robust to outliers.

The choice of technique depends on the specific problem and the available data. I often combine multiple techniques to achieve a more comprehensive and robust fraud detection system.

Q 6. How do you evaluate the performance of a fraud detection model?

Evaluating the performance of a fraud detection model is crucial for ensuring its effectiveness. The evaluation process usually involves several steps:

- Splitting the data: Dividing the dataset into training, validation, and test sets. The training set is used to train the model, the validation set for hyperparameter tuning, and the test set for final performance evaluation.

- Applying appropriate metrics: Accuracy, precision, recall, F1-score, AUC-ROC curve, and lift charts are used, but the choice depends on the business context. Prioritizing the reduction of false negatives (fraudulent transactions classified as legitimate) is crucial.

- Confusion matrix analysis: This provides a detailed breakdown of the model’s performance, showing true positives, true negatives, false positives, and false negatives. It aids in understanding the types of errors made by the model.

- Backtesting: Applying the model to historical data to simulate its performance in real-world scenarios and assessing its stability over time.

By combining these techniques, I get a reliable picture of the model’s performance and can identify areas for improvement.
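The confusion matrix step can be made concrete with a small sketch. It counts the four outcome types and derives accuracy, precision, recall, and F1 from them, then compares a hypothetical model against a baseline that labels everything as legitimate. The label vectors are invented for the illustration.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels where 1 means fraud."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn

def summarize(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 from the confusion counts."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical test set: 10 fraudulent and 990 legitimate transactions.
y_true = [1] * 10 + [0] * 990
always_legit = [0] * 1000                           # baseline that never flags anything
model_pred = [1] * 8 + [0] * 2 + [1] * 12 + [0] * 978  # catches 8 frauds, 12 false alarms

baseline = summarize(y_true, always_legit)
model = summarize(y_true, model_pred)
```

The baseline reaches 99% accuracy while catching no fraud at all, whereas the model has slightly lower accuracy but a recall of 0.8, which is why accuracy alone is a poor guide on imbalanced data.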

Q 7. What metrics are most important for evaluating a fraud detection model?

The most important metrics for evaluating a fraud detection model are those that reflect the business objectives. While accuracy is important, it can be misleading in imbalanced datasets. Instead, I focus on:

- Precision: The proportion of correctly identified fraudulent transactions out of all transactions flagged as fraudulent. High precision means few false positives (legitimate transactions incorrectly flagged as fraudulent).

- Recall (Sensitivity): The proportion of correctly identified fraudulent transactions out of all actual fraudulent transactions. High recall means few false negatives (fraudulent transactions missed by the model).

- F1-score: The harmonic mean of precision and recall, providing a balanced measure of both. This gives a single number representing the overall effectiveness.

- AUC-ROC (Area Under the Receiver Operating Characteristic curve): This summarizes the model’s ability to distinguish between fraudulent and legitimate transactions across different thresholds. A higher AUC-ROC indicates better performance.

- Lift chart: This shows how much better the model performs compared to a random guess at identifying fraudulent transactions. It is essential for understanding the model’s value in prioritizing investigations.

The relative importance of these metrics depends on the specific business context and the costs associated with false positives and false negatives. For example, in a high-security setting, minimizing false negatives is crucial, even if it results in a higher number of false positives which can be investigated further.
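For the two ranking-based metrics, a short sketch may help. It computes AUC-ROC as the probability that a randomly chosen fraudulent transaction scores higher than a randomly chosen legitimate one (ties count as half), and lift as the fraud rate among the top-scored fraction divided by the overall fraud rate. The scores and labels are made up, and the pairwise AUC loop is quadratic, so it is meant for illustration rather than large datasets.

```python
def roc_auc(y_true, scores):
    """AUC-ROC via pairwise comparison of fraud scores against legitimate scores."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("need at least one example of each class")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

def lift_at(y_true, scores, fraction=0.1):
    """Fraud rate in the top-scored fraction divided by the overall fraud rate."""
    base_rate = sum(y_true) / len(y_true)
    if base_rate == 0:
        raise ValueError("no fraud in the sample, lift is undefined")
    ranked = sorted(zip(scores, y_true), key=lambda pair: pair[0], reverse=True)
    k = max(1, int(len(ranked) * fraction))
    top_rate = sum(t for _, t in ranked[:k]) / k
    return top_rate / base_rate

# Hypothetical labels (1 = fraud) and model scores.
y_true = [1, 0, 1, 0, 0, 0, 0, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.3, 0.2, 0.1, 0.4, 0.35, 0.6, 0.05]

auc = roc_auc(y_true, scores)
lift = lift_at(y_true, scores, fraction=0.2)
```

A lift above 1 at a given fraction means investigators reviewing only the top-scored cases would find fraud more often than by sampling at random.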

Q 8. Describe your experience with different types of fraud datasets (structured, unstructured, etc.).

My experience spans a wide range of fraud datasets. I’ve worked extensively with structured data, typically found in relational databases. This includes transactional data (credit card purchases, insurance claims), customer demographics, and account information. Analyzing this data often involves SQL queries to identify patterns and anomalies. I’m also highly proficient with unstructured data such as text from emails, social media posts, or customer service notes. These require different techniques, including natural language processing (NLP) to extract relevant information and sentiment analysis to detect potentially fraudulent activities. Furthermore, I’ve worked with semi-structured data like JSON or XML files containing transaction details or logs. For example, in one project, analyzing structured transactional data helped identify a ring of fraudulent credit card applications, while sentiment analysis of unstructured customer feedback revealed a pattern of dissatisfaction preceding chargebacks.

The challenges inherent in each data type necessitate diverse analytical approaches. Structured data lends itself well to statistical modeling and rule-based systems, while unstructured data requires more advanced techniques like machine learning to extract meaningful insights.

Q 9. How do you handle missing data in a fraud dataset?

Handling missing data is crucial in fraud detection because incomplete information can skew results and lead to inaccurate conclusions. My approach is multifaceted and depends on the nature and extent of the missing data.

- Understanding the cause of missingness: Is it Missing Completely at Random (MCAR), Missing at Random (MAR), or Missing Not at Random (MNAR)? Understanding this helps choose the right imputation method.

- Imputation techniques: For relatively small amounts of missing data, I often use simple imputation methods such as mean or median imputation for numerical data and mode imputation for categorical data. However, for larger amounts or more complex missingness patterns, I might use more sophisticated techniques like k-Nearest Neighbors (KNN) imputation or multiple imputation.

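- Deletion: If only a small share of records is affected and the values appear to be missing completely at random, deleting those records can be the simplest approach. When a large portion of the dataset is affected, or the pattern suggests systematic bias, deletion risks discarding exactly the records that matter, so imputation or an explicit "missing" indicator is usually safer.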

- Model-based approaches: Some machine learning algorithms, like XGBoost, handle missing values natively by learning a default branch direction at each split, so they don’t require explicit imputation.

The choice of method always involves careful consideration of potential biases and the impact on the overall analysis. For example, in a credit card fraud detection scenario, simply imputing missing transaction amounts with the average might lead to inaccurate risk scores.
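The point about averages can be illustrated with a small sketch that imputes a missing amount with the median instead of the mean and records an indicator flag so a downstream model can still see that the value was absent. The field names and sample records are assumptions made for the example.

```python
from statistics import mean, median

def impute_median_with_flag(rows, field):
    """Fill missing values of `field` with the observed median and add a flag column."""
    observed = [r[field] for r in rows if r.get(field) is not None]
    fill = median(observed)
    out = []
    for r in rows:
        missing = r.get(field) is None
        new_row = dict(r)
        new_row[field] = fill if missing else r[field]
        new_row[f"{field}_was_missing"] = int(missing)
        out.append(new_row)
    return out

# Hypothetical transactions; one amount is missing and one is extreme.
transactions = [
    {"id": 1, "amount": 20.0},
    {"id": 2, "amount": None},
    {"id": 3, "amount": 35.0},
    {"id": 4, "amount": 5000.0},
    {"id": 5, "amount": 25.0},
]

cleaned = impute_median_with_flag(transactions, "amount")
mean_fill = mean(r["amount"] for r in transactions if r["amount"] is not None)
```

Here the median fill is 30.0, while the mean would have been 1270.0 because of the single 5000.0 transaction. In a real pipeline the fill value would be computed on the training split only and reused for later data.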

Q 10. Explain your experience using SQL for fraud data analysis.

SQL is my primary tool for querying and manipulating large-scale fraud datasets. I’m highly proficient in writing complex queries to extract relevant features, aggregate data, and perform exploratory data analysis. I frequently utilize window functions to calculate rolling averages or cumulative sums for detecting trends indicative of fraudulent behavior. I also employ joins to integrate data from multiple tables (e.g., joining transaction data with customer information).

Example: To identify potentially fraudulent transactions based on unusually high transaction values compared to a customer’s historical spending, I might use a query like this:

SELECT t1.transaction_id, t1.customer_id, t1.transaction_amount FROM transactions t1 WHERE t1.transaction_amount > (SELECT AVG(t2.transaction_amount) * 3 FROM transactions t2 WHERE t2.customer_id = t1.customer_id);

This query identifies transactions where the amount exceeds three times the customer’s average transaction amount, flagging them as potential fraud candidates.
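To try this kind of query without a database server, the following sketch runs it against an in-memory SQLite database from Python. The table layout matches the column names used in the query; the sample rows are invented, and the outer table is aliased as `t1` so the correlated subquery can refer to the current customer.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions ("
    "transaction_id INTEGER PRIMARY KEY, "
    "customer_id INTEGER, "
    "transaction_amount REAL)"
)

# Hypothetical data: customer 101 has one unusually large purchase.
rows = [
    (1, 101, 20.0), (2, 101, 25.0), (3, 101, 30.0), (4, 101, 22.0), (5, 101, 900.0),
    (6, 202, 500.0), (7, 202, 520.0), (8, 202, 480.0),
]
conn.executemany("INSERT INTO transactions VALUES (?, ?, ?)", rows)

# Correlated subquery: compare each transaction to 3x that customer's average.
query = """
SELECT t1.transaction_id, t1.customer_id, t1.transaction_amount
FROM transactions AS t1
WHERE t1.transaction_amount > (
    SELECT AVG(t2.transaction_amount) * 3
    FROM transactions AS t2
    WHERE t2.customer_id = t1.customer_id
)
ORDER BY t1.transaction_id
"""
flagged = conn.execute(query).fetchall()
conn.close()
```

Note that the customer average includes the transaction being tested, which dilutes the signal for customers with few transactions. A common refinement is to compare against prior transactions only, for example with a window function.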

Q 11. What programming languages are you proficient in for fraud data analysis?

My proficiency in programming languages for fraud data analysis includes Python and R. Python is my go-to language for its extensive libraries like pandas (for data manipulation), scikit-learn (for machine learning), and TensorFlow/PyTorch (for deep learning). R is excellent for statistical modeling and visualization, particularly when dealing with complex statistical tests or creating compelling visualizations. Alongside SQL for database work, I have working knowledge of Java for specific project requirements. My preference depends on the project’s specifics and the types of models or algorithms employed.

Q 12. How would you approach building a fraud detection system from scratch?

Building a fraud detection system from scratch involves a structured approach. First, I would define the problem and business goals, clarifying what types of fraud need to be detected and the acceptable levels of false positives and false negatives.

- Data Collection and Preprocessing: Gather relevant data from various sources, ensuring data quality, and handling missing values as discussed earlier.

- Feature Engineering: Create features from the raw data that are predictive of fraud. This is often the most crucial step, requiring deep understanding of the business domain and potential fraud patterns.

- Model Selection: Choose appropriate machine learning models based on the data characteristics and business requirements. Common choices include logistic regression, random forests, gradient boosting machines (GBM), or neural networks.

- Model Training and Evaluation: Train the chosen model on a labeled dataset (transactions labeled as fraudulent or legitimate), carefully evaluating its performance using metrics like precision, recall, F1-score, and AUC-ROC.

- Deployment and Monitoring: Deploy the model into a production environment, integrating it into existing systems. Continuously monitor its performance and retrain the model periodically as new data becomes available and fraud patterns evolve.

It’s critical to build in a feedback loop to continuously improve the system’s accuracy and adapt to evolving fraud techniques. For instance, a system detecting credit card fraud might initially use simple rule-based methods, which are then enhanced by incorporating machine learning models that adapt to new fraud schemes.

Q 13. Describe your experience with data visualization tools for fraud analysis.

Data visualization is essential for communicating insights derived from fraud analysis. My experience encompasses various tools, including Tableau, Power BI, and Python libraries like Matplotlib and Seaborn. I utilize these tools to create dashboards and reports that showcase key performance indicators (KPIs), fraud trends, and the effectiveness of fraud detection systems.

For instance, I might use a geographic map to visualize the location of fraudulent transactions, revealing potential hotspots. Line charts can track fraud rates over time, identifying seasonal trends or sudden spikes indicative of new fraud schemes. Bar charts compare different fraud types or the performance of various fraud detection models. The choice of visualization depends heavily on the story we’re trying to tell with the data.

Q 14. How do you identify and address data quality issues in fraud detection?

Data quality is paramount in fraud detection. Poor data quality can lead to inaccurate models, ineffective fraud prevention strategies, and ultimately, significant financial losses. My approach involves a multi-stage process:

- Data Profiling: Thoroughly examine the data to understand its structure, identify missing values, inconsistencies, and outliers. This often involves statistical summaries and visualizations.

- Data Cleansing: Address identified data quality issues. This might include handling missing values (as discussed earlier), correcting inconsistencies, and removing or smoothing outliers.

- Data Validation: Implement checks to prevent future data quality issues. This could involve setting up data validation rules within the data ingestion process or using automated data quality monitoring tools.

- Data Governance: Establish clear data governance policies and procedures to ensure data accuracy and consistency over time. This includes defining data ownership, data quality standards, and processes for managing data changes.

For example, detecting inconsistencies in customer addresses or discrepancies between transaction amounts and authorized limits are crucial for identifying and addressing potential fraud. A rigorous data quality process, encompassing all these stages, is vital for the reliability and effectiveness of any fraud detection system.

Q 15. Explain your understanding of A/B testing in the context of fraud prevention.

A/B testing in fraud prevention involves comparing two different versions of a fraud detection system or a specific component to determine which performs better. Imagine you have two different fraud scoring models – one uses a simple rule-based approach, and the other employs a more sophisticated machine learning algorithm. An A/B test would involve routing a portion of your transactions through each model and comparing their performance metrics, such as the detection rate (percentage of fraudulent transactions correctly identified) and the false positive rate (percentage of legitimate transactions incorrectly flagged as fraudulent).

We might use A/B testing to compare the effectiveness of different threshold settings in a fraud detection model. Perhaps lowering the threshold would catch more fraud but also increase false positives. A/B testing allows us to quantitatively measure this trade-off and make an informed decision. Traffic is assigned to each variant at random, and the test runs until the sample is large enough for the observed difference to be statistically significant, which keeps the results unbiased. The results then guide us toward the most efficient and accurate fraud prevention strategy.

For example, we might A/B test two different sets of rules for detecting suspicious login attempts. One set might focus on login frequency, while the other emphasizes geolocation changes. By tracking the results, we can determine which set yields better fraud detection with a lower rate of legitimate user lockouts.
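One way to check whether a difference between the two variants is more than noise is a two-proportion z-test. The sketch below applies it to false positive rates; the counts are hypothetical, and the test assumes independent, randomly assigned samples that are large enough for the normal approximation to hold.

```python
from math import erf, sqrt

def two_proportion_z_test(events_a, n_a, events_b, n_b):
    """Return (z, two-sided p-value) for the difference between two proportions."""
    p_a = events_a / n_a
    p_b = events_b / n_b
    pooled = (events_a + events_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF expressed through the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - cdf)
    return z, p_value

# Hypothetical results on legitimate logins only:
# rule set A locked out 300 of 10,000 users, rule set B locked out 240 of 10,000.
z, p_value = two_proportion_z_test(300, 10_000, 240, 10_000)
```

With these assumed counts the p-value falls below 0.01, so the lower lockout rate of rule set B would be unlikely to arise by chance. The same test can be repeated on the detection rate to confirm that the gain does not come at the cost of missed fraud.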

Q 16. How do you stay up-to-date with the latest trends in fraud detection?

Staying current in fraud detection requires a multi-pronged approach. I actively participate in industry conferences and webinars such as those hosted by RSA, Gartner, and the AICPA. These events offer valuable insights into the newest techniques and emerging threats. I also subscribe to leading industry publications and research papers on topics like deep learning for anomaly detection, and the evolving tactics of fraudsters. Additionally, I regularly engage with online communities and forums dedicated to fraud detection, exchanging knowledge and insights with other professionals. Finally, I dedicate time to hands-on experimentation with new technologies and algorithms to stay familiar with their practical applications and limitations.

A crucial part of this is continuous learning. I often find myself exploring new research papers from institutions like MIT, Stanford, and Carnegie Mellon on cutting-edge approaches to fraud detection and machine learning. Understanding the underlying mathematical and statistical principles is critical to selecting and adapting algorithms appropriately.

Q 17. Describe your experience with specific fraud detection algorithms (e.g., logistic regression, random forest).

I have extensive experience with various fraud detection algorithms. Logistic regression, a relatively simple yet powerful model, is frequently used as a baseline for fraud detection. It’s particularly useful for binary classification (fraudulent or not) and offers good interpretability, making it easier to understand which factors contribute most to fraud risk. For instance, I’ve utilized it in identifying potentially fraudulent credit card transactions based on factors like transaction amount, location, and merchant category code (MCC).

Random forests, on the other hand, are ensemble methods that combine multiple decision trees to improve accuracy and robustness. Their ability to handle high-dimensional data and non-linear relationships makes them well-suited for more complex fraud detection scenarios. I’ve employed random forests in detecting sophisticated fraud schemes involving account takeover or synthetic identity fraud, where multiple factors interact in complex ways. In one project, a random forest model significantly improved the detection rate of fraudulent insurance claims by incorporating data from diverse sources, such as claimant demographics and medical records.

Beyond these, I’m also proficient with other techniques such as gradient boosting machines (GBM), support vector machines (SVM), and neural networks (especially for tasks such as image recognition for check fraud). The choice of algorithm depends heavily on the nature of the data, the type of fraud being addressed, and the need for model interpretability versus predictive accuracy.

Q 18. How do you handle false positives and false negatives in fraud detection?

Handling false positives and false negatives is a critical aspect of fraud detection, as it directly impacts business operations and customer experience. False positives (flagging legitimate transactions as fraudulent) can lead to customer frustration and disruptions to legitimate business activities. False negatives (failing to detect fraudulent transactions) result in financial losses for the organization. The ideal approach involves finding an optimal balance between these two error types.

Strategies for managing these include: adjusting model thresholds, incorporating human-in-the-loop review processes, and employing techniques like cost-sensitive learning. We might weight the cost of a false positive differently from the cost of a false negative in our model training, reflecting the relative financial and reputational impact of each type of error. We also frequently use rule-based systems to reduce false positives from machine learning models, acting as a secondary layer of validation. Additionally, continuous monitoring and model retraining are essential to keep pace with evolving fraud patterns and maintain optimal performance.

Imagine a scenario where our model flags a large transaction as fraudulent due to an unusual spending pattern. Before blocking the transaction, we might implement a secondary verification step, such as a phone call or a one-time password (OTP), reducing the risk of a false positive. Meanwhile, for confirmed fraudulent transactions, a post-mortem analysis helps us understand the model’s limitations and allows us to iterate and improve its accuracy over time.
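The threshold adjustment described above can be framed as a cost minimization. The sketch below picks, from a list of candidate thresholds, the one with the lowest total cost given assumed per-error costs. The scores, labels, and cost figures are hypothetical.

```python
def total_cost(y_true, scores, threshold, cost_fp, cost_fn):
    """Sum the cost of false positives and false negatives at a given threshold."""
    cost = 0.0
    for label, score in zip(y_true, scores):
        flagged = score >= threshold
        if flagged and label == 0:
            cost += cost_fp   # legitimate transaction blocked or sent to review
        elif not flagged and label == 1:
            cost += cost_fn   # fraud that slipped through
    return cost

def best_threshold(y_true, scores, candidates, cost_fp, cost_fn):
    """Return the candidate threshold with the lowest total cost."""
    return min(candidates, key=lambda th: total_cost(y_true, scores, th, cost_fp, cost_fn))

# Hypothetical validation data (1 = fraud) and model scores.
y_true = [0, 0, 0, 0, 1, 0, 1, 1]
scores = [0.05, 0.10, 0.20, 0.40, 0.45, 0.60, 0.70, 0.90]
candidates = [0.3, 0.5, 0.8]

# Missed fraud is far more expensive than a review: a low threshold wins.
cheap_reviews = best_threshold(y_true, scores, candidates, cost_fp=5, cost_fn=200)
# False alarms are expensive (e.g. lost customers): a high threshold wins.
costly_reviews = best_threshold(y_true, scores, candidates, cost_fp=300, cost_fn=200)
```

The same data yields different thresholds under different cost assumptions, which is why the costs should be agreed with the business before the threshold is tuned.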

Q 19. What is your experience with real-time fraud detection systems?

My experience with real-time fraud detection systems is extensive. I’ve worked with systems designed to analyze transactions and events as they occur, using low-latency technologies like Apache Kafka and in-memory databases. The goal in these systems is to make decisions rapidly, often within milliseconds, to prevent fraud in real time.

This demands specialized techniques including stream processing, feature engineering adapted for real-time scenarios, and lightweight machine learning models that can run efficiently on high-volume data streams. For example, I’ve worked with systems that monitor credit card transactions, analyzing various factors like transaction amount, location, and velocity to flag suspicious activity immediately. Implementing appropriate alerting and response mechanisms is also crucial for efficient intervention. The challenge lies in striking a balance between speed and accuracy; we need to prevent fraud quickly while minimizing false positives.
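Transaction velocity is a typical real-time feature, and a sliding window makes it cheap to maintain. The sketch below keeps recent timestamps per card in a deque and returns the count within the window on each new event. The class name, the 60-second window, and the assumption that events arrive in timestamp order per card are all illustrative choices, not part of any specific streaming framework.

```python
from collections import defaultdict, deque

class VelocityTracker:
    """Count transactions per card within a sliding time window."""

    def __init__(self, window_seconds=60):
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)

    def observe(self, card_id, timestamp):
        """Record one transaction and return the card's count in the current window.

        Assumes timestamps (in seconds) arrive in non-decreasing order per card.
        """
        recent = self._events[card_id]
        recent.append(timestamp)
        # Drop events that have fallen out of the window.
        while recent and recent[0] <= timestamp - self.window_seconds:
            recent.popleft()
        return len(recent)

tracker = VelocityTracker(window_seconds=60)
counts_a = [tracker.observe("card-A", t) for t in (0, 10, 20, 100)]
count_b = tracker.observe("card-B", 15)
```

Each call does a constant amount of work on average, which suits the millisecond budgets mentioned above. A rule or model can then treat a high count as one signal among several.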

One significant project involved building a real-time fraud detection system for an online payment platform. This required the use of technologies like Apache Flink to process a massive volume of transactions in real-time while incorporating various data sources including customer history, geolocation data, and device information. The system generated alerts for suspicious activities that required immediate human review and intervention. The real-time detection significantly reduced financial losses and improved the platform’s overall security.

Q 20. Describe your experience working with large datasets for fraud analysis.

I possess substantial experience working with large datasets for fraud analysis. This commonly involves employing techniques like distributed computing (e.g., Spark, Hadoop) to process terabytes or even petabytes of data efficiently. Effective data handling encompasses not only the technical aspects of processing but also data cleaning, feature engineering, and data transformation crucial for accurate and efficient analysis. I’m comfortable working with relational databases (like PostgreSQL, MySQL), NoSQL databases (like MongoDB, Cassandra), and cloud-based data storage solutions (like AWS S3, Azure Blob Storage).

For instance, in a recent project involving detecting fraudulent insurance claims, we processed a dataset containing millions of claims with hundreds of attributes each. Employing Apache Spark allowed us to distribute the data processing across a cluster, enabling faster model training and evaluation. Techniques like dimensionality reduction and feature selection were crucial for handling the high dimensionality of the data, improving model performance and reducing computational cost.

Data governance and privacy are also paramount. Ensuring data compliance with regulations like GDPR and CCPA is essential, necessitating careful attention to data anonymization and access control throughout the process.

Q 21. How do you communicate complex technical findings to non-technical audiences?

Communicating complex technical findings to non-technical audiences requires a clear, concise, and relatable approach. I avoid using technical jargon and instead focus on using simple language, metaphors, and analogies to convey the key insights. Visual aids like charts, graphs, and dashboards are incredibly effective in simplifying complex data and making it easier to understand.

For example, when explaining the results of a fraud detection model, I might use a simple analogy like a security checkpoint at an airport. The model acts as a screening process, identifying potential threats (fraudulent transactions) while allowing legitimate travelers (legitimate transactions) to pass through. The detection rate can be compared to the percentage of actual threats identified by the checkpoint. The false positive rate describes the percentage of innocent travelers flagged as potential threats.

Storytelling is also a powerful tool. Instead of presenting raw data, I often illustrate findings with specific real-world examples of fraudulent activities detected and the positive impact of the model on the business. I also focus on the business implications of the findings, translating technical metrics into easily understood financial or operational terms (such as reduced losses or improved customer experience).

Q 22. What is your experience with data governance and regulatory compliance in fraud detection?

Data governance and regulatory compliance are paramount in fraud detection. It’s not just about building accurate models; it’s about ensuring the data used is ethically sourced, legally compliant, and properly managed throughout its lifecycle. This involves adhering to regulations like GDPR, CCPA, and PCI DSS, depending on the industry and location. My experience includes establishing data dictionaries to define data elements, implementing data quality checks to ensure accuracy and completeness, and creating access control mechanisms to restrict access to sensitive information based on the principle of least privilege. For instance, in a previous role, I helped implement a robust data governance framework that ensured all personally identifiable information (PII) used in our fraud detection models was anonymized or pseudonymized according to GDPR guidelines, reducing both the impact of any data breach and the risk of fines.

- Data Mapping & Lineage: I’ve meticulously documented data flow, origin, and transformations to ensure traceability and auditability.

- Data Quality Control: I’ve developed and implemented automated data quality checks to identify and address inconsistencies, inaccuracies, and missing values.

- Regulatory Compliance: I’ve actively participated in compliance audits and ensured our processes adhered to relevant regulations.

Q 23. How would you handle a situation where a fraud detection model is underperforming?

Underperforming fraud detection models require a systematic diagnostic approach. It’s like diagnosing a medical problem – you need to run tests and find the root cause. I start by investigating several key areas:

- Data Drift: Check whether the input data’s characteristics have changed significantly from what the model was trained on. This is common because fraud patterns evolve. I’d look at distribution shifts, missing data, and changes in feature correlations. Drift detection algorithms and comparisons of feature importance across time periods are useful here.

- Model Degradation: Examine whether the model’s performance metrics (like precision, recall, and F1-score) have declined over time. I’d compare these metrics across different time periods, potentially using backtesting to evaluate the model on successive historical windows and pinpoint when performance began to slip.

- Feature Engineering: Review whether the features used to train the model are still relevant. New features might need to be added or existing ones re-engineered to capture evolving fraud patterns.

- Model Retraining: Often, simply retraining the model with updated data resolves the issue. I’d use appropriate techniques to address class imbalance and overfitting, ensuring the model generalizes well to new data.

- Algorithm Selection: In cases of persistent underperformance, consider exploring alternative algorithms better suited to the problem. A different model architecture might be more effective in capturing the subtleties of the fraudulent activity.

For example, in one project, we discovered that a sudden increase in mobile transactions had skewed our model’s performance. By adding features related to device location and transaction frequency, we successfully improved its accuracy.
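One common way to quantify the kind of distribution shift described under data drift is the population stability index (PSI). The sketch below is a simplified, standard-library version that assumes a single numeric feature and equal-width bins derived from the training data. The function name, the bin count, and the small floor value are illustrative choices rather than a fixed standard.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Compare the distribution of one numeric feature between two samples.

    expected: values seen at training time
    actual:   values seen recently in production
    Returns 0.0 for identical binned distributions; larger means more drift.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def bucket_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clip out-of-range values
        # A small floor avoids log(0) for empty buckets.
        return [max(c / len(values), 1e-6) for c in counts]

    e_shares = bucket_shares(expected)
    a_shares = bucket_shares(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(e_shares, a_shares))


training_amounts = list(range(100))
recent_amounts = [v + 50 for v in training_amounts]  # simulated upward shift
print(population_stability_index(training_amounts, training_amounts))
print(population_stability_index(training_amounts, recent_amounts))
```

A frequently quoted rule of thumb treats a PSI above roughly 0.2 as a shift worth investigating, although the right threshold depends on the feature and the business context.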

Q 24. Explain your experience with different data sources used in fraud detection.

My experience spans a wide range of data sources crucial for comprehensive fraud detection. Think of it as building a detailed profile of a suspect – you need information from multiple sources to build a compelling case.

- Transaction Data: This is the core – details about purchases, transfers, login attempts, etc. I’ve worked extensively with databases like SQL Server and Oracle to access and process this.

- Customer Data: Information about customer demographics, history, and behavior patterns. This often comes from CRM systems and involves careful handling due to privacy concerns.

- Device Data: Information about the device used for the transaction, IP address, geolocation, and user agent. This helps detect suspicious activity from unusual locations or devices.

- Network Data: Data about the relationships between accounts, transactions, and users. This can reveal patterns of collusion or money laundering.

- External Data: Data from third-party providers, such as credit bureaus, fraud databases, and geolocation services. This enriches the analysis and helps identify potentially fraudulent patterns. For example, cross-referencing a transaction with a known fraud database can flag suspicious activity.

Q 25. Describe your experience with ETL (Extract, Transform, Load) processes for fraud data.

ETL processes are the backbone of any effective fraud detection system. They’re how we transform raw data into a format usable by our models. My experience includes designing and implementing ETL pipelines using tools like Informatica PowerCenter and Apache Spark, with Apache Kafka for streaming ingestion. These pipelines involve:

- Extraction: Retrieving data from diverse sources, handling different formats (CSV, JSON, XML, databases). Error handling and data validation are crucial at this stage.

- Transformation: Cleaning, transforming, and enriching the data. This might involve data type conversions, handling missing values (imputation or removal), feature engineering (creating new variables from existing ones), and data aggregation. For instance, I’ve created features like ‘transaction value per day’ by aggregating daily transaction amounts.

- Loading: Loading the transformed data into a data warehouse or data lake for analysis and model training. This step involves optimizing for performance and ensuring data integrity.

For example, I once improved an existing ETL pipeline by implementing a parallel processing architecture using Apache Spark, reducing processing time by 70% and significantly improving the efficiency of our fraud detection system.
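The ‘transaction value per day’ feature from the transformation step can be sketched in a few lines of plain Python. The record fields (`customer_id`, `timestamp`, `amount`) and the choice to aggregate per customer are assumptions made for illustration. A production pipeline would more likely express the same grouping in SQL or Spark.

```python
from collections import defaultdict
from datetime import datetime


def daily_transaction_value(transactions):
    """Sum transaction amounts per (customer_id, calendar day).

    Each transaction is a dict with hypothetical keys:
    'customer_id', 'timestamp' (ISO 8601 string), and 'amount'.
    """
    totals = defaultdict(float)
    for tx in transactions:
        day = datetime.fromisoformat(tx["timestamp"]).date()
        totals[(tx["customer_id"], day)] += tx["amount"]
    return dict(totals)


sample = [
    {"customer_id": "c1", "timestamp": "2024-03-01T09:15:00", "amount": 20.0},
    {"customer_id": "c1", "timestamp": "2024-03-01T18:40:00", "amount": 35.5},
    {"customer_id": "c1", "timestamp": "2024-03-02T08:05:00", "amount": 10.0},
    {"customer_id": "c2", "timestamp": "2024-03-01T12:00:00", "amount": 99.0},
]
for key, total in sorted(daily_transaction_value(sample).items()):
    print(key, total)
```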

Q 26. What is your experience with cloud-based data platforms for fraud analysis?

Cloud-based data platforms have revolutionized fraud analysis. They offer scalability, flexibility, and cost-effectiveness compared to on-premises solutions. My experience includes working with AWS (S3, Redshift, EMR), Azure (Data Lake Storage, Synapse Analytics), and GCP (BigQuery, Dataflow). These platforms provide:

- Scalability: Easily handle massive datasets and growing data volumes. This is essential because fraud detection can involve analyzing terabytes or even petabytes of data.

- Cost-Effectiveness: Pay-as-you-go pricing models avoid upfront investment in expensive hardware.

- Advanced Analytics: Access to powerful machine learning and analytics tools directly within the platform.

- Data Security: Robust security features to protect sensitive data. These features can exceed what many on-premises setups offer, provided they are configured correctly.

In a previous project, migrating our fraud detection infrastructure to AWS significantly improved our model training speed and reduced operational costs. The scalability of the cloud allowed us to handle a sudden surge in transaction volume during a major sales event without performance degradation.

Q 27. How do you prioritize fraud investigations based on risk?

Prioritizing fraud investigations based on risk is critical for efficient resource allocation. It’s similar to triage in a hospital – focus on the most critical cases first. I use a risk scoring system that considers several factors:

- Monetary Value: Larger transactions carry greater potential loss, so they rank higher.

- Transaction Velocity: A sudden increase in transaction frequency could indicate suspicious activity.

- Geographic Location: Transactions from unusual locations might be flagged.

- Device Information: Transactions from multiple devices in a short period may trigger alerts.

- Model Score: The fraud detection model assigns a risk score to each transaction, which is a key input.

- Historical Data: A customer’s past behavior can indicate their fraud risk profile.

I combine these factors into a comprehensive risk score, prioritizing investigations based on the highest scores. This allows us to focus on the most likely fraudulent activities while minimizing the investigation of low-risk events.
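A simple way to picture this combination is a weighted sum over normalized signals, followed by a sort. The sketch below assumes each factor has already been scaled to the range 0 to 1. The signal names and the weights are hypothetical and would need to be calibrated against real investigation outcomes.

```python
# Hypothetical weights for the six factors listed above; they sum to 1.0.
WEIGHTS = {
    "monetary_value": 0.20,
    "transaction_velocity": 0.15,
    "geographic_anomaly": 0.10,
    "device_anomaly": 0.10,
    "model_score": 0.35,
    "historical_risk": 0.10,
}


def risk_score(signals):
    """Weighted sum of signals, each assumed to be pre-scaled to [0, 1].

    Missing signals count as 0.0.
    """
    return sum(weight * signals.get(name, 0.0) for name, weight in WEIGHTS.items())


def prioritize(cases):
    """Return cases ordered from highest to lowest combined risk score."""
    return sorted(cases, key=lambda case: risk_score(case["signals"]), reverse=True)


queue = [
    {"id": "A", "signals": {"model_score": 0.9}},
    {"id": "B", "signals": {"monetary_value": 1.0, "transaction_velocity": 1.0, "model_score": 0.2}},
    {"id": "C", "signals": {name: 0.1 for name in WEIGHTS}},
]
print([case["id"] for case in prioritize(queue)])  # -> ['B', 'A', 'C']
```

A linear score like this is easy to explain to investigators, which is often worth more than a small gain in ranking quality from a less transparent formula.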

Q 28. Describe a time you identified a novel approach to fraud detection.

In a previous role, we noticed a significant increase in a particular type of fraud – a sophisticated form of account takeover where attackers would leverage stolen credentials to make small, frequent transactions under the radar. Existing models were failing to catch this due to the low value of individual transactions. My novel approach involved combining network analysis with behavioral biometrics. We analyzed the relationships between accounts and transactions (network analysis), creating features capturing connectivity patterns, which identified groups of compromised accounts. We then combined this with behavioral features extracted from user interactions (like login times, device usage patterns, and typing speed) to create a more comprehensive risk assessment. This hybrid approach resulted in a significant increase in the detection rate of this particular fraud type, which had previously gone largely undetected by our existing systems.
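The network analysis half of this approach can be illustrated with a small union-find sketch that groups accounts connected through a shared attribute. Linking accounts by shared device or IP address is an assumption made for this example, since the exact relationships used are not specified above, and the behavioral features are not covered here.

```python
from collections import defaultdict


def linked_account_groups(links):
    """Group accounts that are connected through shared attributes.

    links: iterable of (account_id, attribute) pairs, where the attribute
    is a hypothetical shared identifier such as a device ID or IP address.
    Returns a list of sets, one per group containing more than one account.
    """
    parent = {}

    def find(node):
        parent.setdefault(node, node)
        while parent[node] != node:
            parent[node] = parent[parent[node]]  # path compression
            node = parent[node]
        return node

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    first_account_for = {}
    for account, attribute in links:
        find(account)  # register the account even if it never links to another
        if attribute in first_account_for:
            union(first_account_for[attribute], account)
        else:
            first_account_for[attribute] = account

    groups = defaultdict(set)
    for account in list(parent):
        groups[find(account)].add(account)
    return [members for members in groups.values() if len(members) > 1]


observed = [
    ("acct1", "device-A"),
    ("acct2", "device-A"),
    ("acct2", "ip-9"),
    ("acct3", "ip-9"),
    ("acct4", "device-Z"),
]
print([sorted(group) for group in linked_account_groups(observed)])
```

The size of an account's group can then serve as one connectivity feature alongside the behavioral signals, so that many small transactions from linked accounts are assessed together rather than one at a time.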

Key Topics to Learn for Fraud Data Analysis Interview

- Data Mining Techniques: Understanding and applying various data mining techniques like anomaly detection, clustering, and classification to identify fraudulent patterns in large datasets. Practical application: Identifying suspicious transactions based on unusual spending behavior.

- Statistical Modeling: Developing and interpreting statistical models to assess fraud risk and predict future fraudulent activities. Practical application: Building a model to predict the probability of a credit card application being fraudulent.

- Data Visualization and Storytelling: Effectively communicating insights from data analysis through clear and concise visualizations. Practical application: Presenting findings on fraud trends to stakeholders using compelling charts and graphs.

- Machine Learning Algorithms: Applying relevant machine learning algorithms (e.g., logistic regression, decision trees, random forests) for fraud detection and prevention. Practical application: Training a model to identify fraudulent insurance claims.

- Database Management and SQL: Proficiently querying and manipulating large datasets using SQL to extract relevant information for analysis. Practical application: Extracting transaction data from a database for fraud investigation.

- Regulatory Compliance and Ethical Considerations: Understanding relevant regulations and ethical implications related to fraud detection and data privacy. Practical application: Ensuring compliance with data protection regulations when handling sensitive financial information.

- Problem-Solving and Critical Thinking: Demonstrating the ability to identify, analyze, and solve complex fraud-related problems using a structured approach. Practical application: Developing a strategy to mitigate a specific type of fraud within an organization.

Next Steps

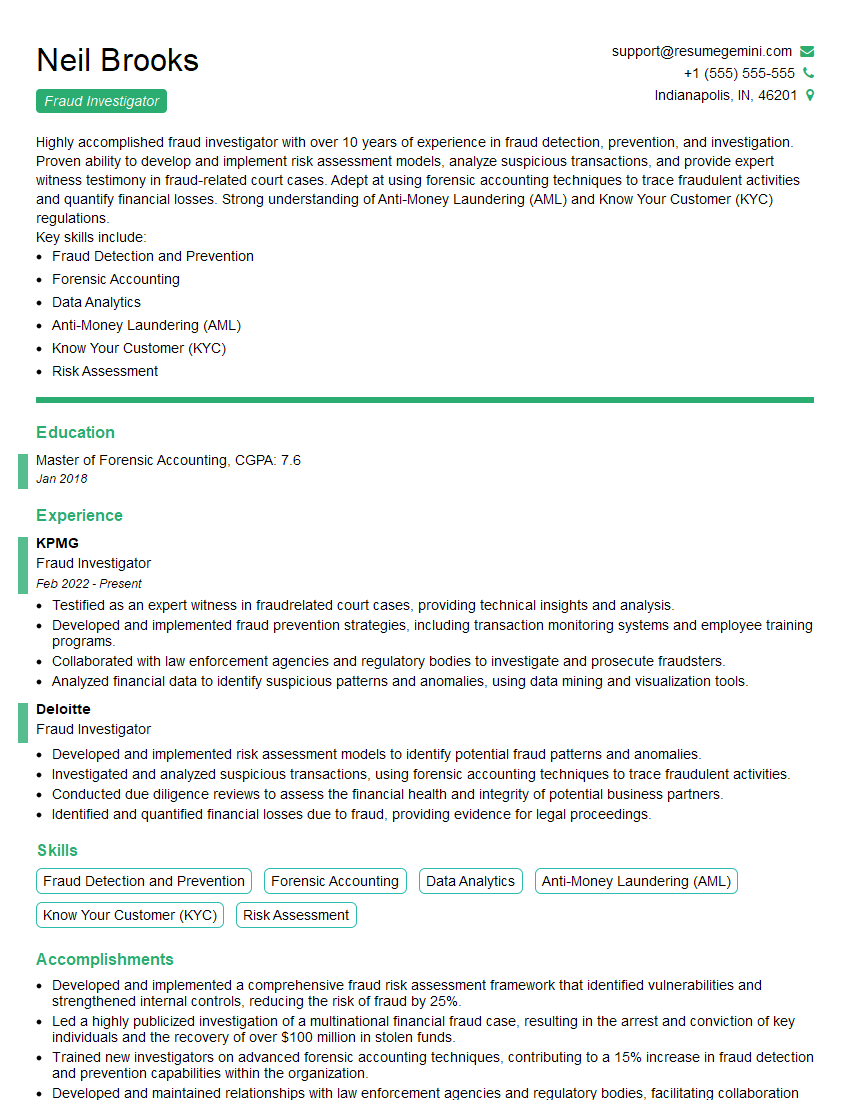

Mastering Fraud Data Analysis opens doors to exciting and impactful career opportunities in a rapidly growing field. To maximize your job prospects, focus on crafting a compelling and ATS-friendly resume that highlights your skills and experience. ResumeGemini is a trusted resource to help you build a professional resume that showcases your abilities effectively. We provide examples of resumes tailored specifically to Fraud Data Analysis to guide you in creating a standout application.
