Cracking a skill-specific interview, like one for Audio and Video Synchronization, requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in Audio and Video Synchronization Interview

Q 1. Explain the process of lip-sync correction in video editing.

Lip-sync correction is the process of aligning audio and video so that a speaker’s mouth movements perfectly match their spoken words. It’s crucial for creating a believable and engaging viewing experience. Think of a movie where the dialogue is noticeably off – it’s jarring and breaks immersion. Lip-sync correction addresses this.

The process typically involves using video editing software to subtly adjust the timing of either the audio or video track. This is often done frame by frame, requiring a keen eye and ear. Some software offers automated tools that analyze the audio waveform and video, suggesting adjustments. However, manual fine-tuning is often necessary for optimal results. For instance, if a character’s mouth opens a fraction of a second before the audio begins, the audio is late, so I’d move it slightly earlier on the timeline until the sound lines up with the mouth movement. The level of precision required can be remarkably fine, sometimes involving adjustments of only a few milliseconds.

Advanced techniques include using software with sophisticated algorithms that detect facial landmarks and analyze mouth movements to assist in automated correction. However, even these advanced tools benefit from a skilled editor’s review and refinement. The goal isn’t just to correct timing, but to maintain the natural flow and rhythm of the dialogue and the scene.
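To make the "few milliseconds" scale concrete, here is a minimal sketch that converts a measured offset into video frames and audio samples. The function name and the example values (25 fps, 48 kHz, 40 ms) are assumptions chosen for illustration, not settings from any particular editor.

```python
def offset_to_units(offset_ms: float, fps: float, sample_rate: int) -> tuple[float, int]:
    """Convert a sync offset in milliseconds to video frames and audio samples."""
    seconds = offset_ms / 1000.0
    frames = seconds * fps                   # may be fractional
    samples = round(seconds * sample_rate)   # whole audio samples
    return frames, samples


# Example: audio arrives 40 ms late in a 25 fps project with 48 kHz audio.
frames, samples = offset_to_units(40.0, fps=25.0, sample_rate=48_000)
print(f"{frames:.2f} frames, {samples} samples")
```

At these example settings a 40 ms error is one whole frame, which is why sub-frame corrections are normally made by slipping the audio by samples rather than by moving video frames.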

Q 2. Describe different methods for synchronizing audio and video.

Synchronizing audio and video involves aligning the timing of both signals to ensure they play in perfect harmony. Several methods achieve this, each with its strengths and weaknesses:

- Timecode Synchronization: This is the most precise method, particularly in professional settings. Timecode is a digital code embedded in both audio and video that acts like a precise clock. Matching the timecodes ensures perfect synchronization. This is commonly used in multi-camera productions where multiple audio and video sources need to be meticulously synced.

- Manual Synchronization: This involves visually aligning audio waveforms with video clips using editing software. It requires a keen ear and eye for detail and is best suited for smaller projects or correcting minor timing discrepancies.

- Automated Synchronization: Some software utilizes algorithms to automatically detect audio cues and align them with corresponding visuals. This approach is faster but may require manual adjustments for optimal results. It’s useful for quickly syncing simpler productions.

- Using a Reference Signal (e.g., clap): A common technique, especially in filming, involves capturing a distinct sound (like clapping) simultaneously with the camera roll. This creates a common reference point for later syncing audio and video in post-production.

The choice of method depends on factors like project scale, budget, and required accuracy. For a feature film, timecode synchronization is crucial; for a short YouTube video, manual synchronization might suffice.
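Automated synchronization and clap-based syncing both come down to finding the time shift at which two recordings of the same sound line up best. The sketch below shows that idea with a brute-force cross-correlation in plain Python. It is an illustration under simplifying assumptions (short, clean, synthetic signals; a hypothetical `best_lag` helper), not how any specific editing package implements its sync feature.

```python
def best_lag(reference: list[float], other: list[float], max_lag: int) -> int:
    """Return the lag (in samples) at which `other` best matches `reference`.

    A positive result means the shared event occurs `lag` samples later in `other`.
    """
    def score(lag: int) -> float:
        total = 0.0
        for i, value in enumerate(reference):
            j = i + lag
            if 0 <= j < len(other):
                total += value * other[j]
        return total

    return max(range(-max_lag, max_lag + 1), key=score)


# Synthetic "clap": the same short burst at sample 5 in one track and sample 8 in the other.
camera_audio = [0.0] * 20
recorder_audio = [0.0] * 20
camera_audio[5:8] = [1.0, -0.8, 0.5]
recorder_audio[8:11] = [1.0, -0.8, 0.5]

lag = best_lag(camera_audio, recorder_audio, max_lag=10)
print(f"recorder audio is {lag} samples late relative to the camera audio")
```

Real recordings are far longer and noisier, so production tools typically use FFT-based correlation for speed, but the principle is the same: pick the shift with the highest similarity score, then move one track by that amount.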

Q 3. What are the common challenges encountered during audio-video synchronization?

Audio-video synchronization can present several challenges:

- Latency: Delays introduced by equipment (like microphones, cameras, or processing units) can throw off synchronization. This is especially true in live streaming situations.

- Jitter: Irregular variations in timing can lead to inconsistent synchronization, resulting in a choppy or stuttering effect. Network issues often contribute to this during live broadcasts.

- Drift: Gradual discrepancies over longer periods, often due to slight inconsistencies in clock speeds across devices, can cause audio and video to become noticeably out of sync. Think of a long recording where the audio gradually falls behind.

- Synchronization Issues across Multiple Sources: Combining audio and video from multiple cameras and microphones increases the complexity and potential for synchronization problems.

- Inaccurate Timecode: If timecode is not properly generated or embedded, it renders this method ineffective.

Overcoming these challenges often involves careful planning, precise equipment, and diligent post-production synchronization efforts. Regular equipment calibration is also vital in mitigating these issues.
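Drift in particular is easy to estimate with simple arithmetic. The sketch below assumes two free-running devices whose clocks differ by a fixed number of parts per million (ppm). The 20 ppm figure is only an illustrative assumption; real devices vary.

```python
def drift_ms(duration_s: float, clock_error_ppm: float) -> float:
    """Accumulated offset in milliseconds after `duration_s` seconds."""
    return duration_s * clock_error_ppm / 1_000_000 * 1000.0


def seconds_until(threshold_ms: float, clock_error_ppm: float) -> float:
    """Recording time after which the drift reaches `threshold_ms`."""
    return (threshold_ms / 1000.0) / (clock_error_ppm / 1_000_000)


# Example: a one-hour recording with an assumed 20 ppm clock mismatch.
print(f"drift after 1 h: {drift_ms(3600, 20):.1f} ms")
# How long until the drift equals one 25 fps frame (40 ms)?
print(f"one frame of drift after {seconds_until(40, 20):.0f} s")
```

With these assumed numbers the offset reaches a full frame after roughly half an hour, which is why long takes benefit from a shared clock or periodic re-syncing.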

Q 4. How do you handle audio delays in live video streaming?

Handling audio delays in live video streaming requires a multi-pronged approach, as the goal is to minimize noticeable latency while maintaining high-quality audio and video. This is a critical issue in interactive applications like live gaming or video conferencing.

- Low-Latency Encoding and Streaming Protocols: Using protocols like SRT (Secure Reliable Transport) or WebRTC, designed for low-latency transmission, is essential. These protocols prioritize speed and reduce buffering delays.

- Optimized Network Infrastructure: A high-bandwidth, low-latency network connection is paramount. Network congestion or instability can significantly increase delay.

- Hardware Acceleration: Employing hardware encoders and decoders speeds up processing, reducing latency. This is particularly important for high-resolution video streaming.

- Audio Buffer Management: Carefully adjusting the audio buffer size in streaming software can help minimize delay without introducing audio glitches. A smaller buffer means less latency but a greater risk of audio dropouts if the network connection is unstable; conversely a larger buffer creates more latency but reduces these risks. It’s a balancing act.

- Careful Monitoring and Adjustment: Continuously monitoring latency and making necessary adjustments to network settings, encoding parameters, and buffer sizes is crucial for maintaining optimal performance.

Sometimes, a small, unavoidable delay might be acceptable to prioritize stability and quality. It’s a trade-off between perfect synchronicity and a flawless, uninterrupted stream.
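The buffer trade-off can be quantified directly: the latency a buffer adds is its length in samples divided by the sample rate. The following is a minimal sketch. The buffer sizes listed are common power-of-two examples chosen as assumptions, not recommendations for any particular streaming tool.

```python
def buffer_latency_ms(buffer_samples: int, sample_rate: int) -> float:
    """Delay added by one audio buffer of the given size."""
    return buffer_samples / sample_rate * 1000.0


# Compare a few example buffer sizes at 48 kHz.
for size in (128, 256, 512, 1024, 2048):
    print(f"{size:>5} samples -> {buffer_latency_ms(size, 48_000):6.2f} ms")
```

Each doubling of the buffer doubles the added delay, so the choice is usually the smallest size that survives the worst network or CPU hiccup observed in testing.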

Q 5. What software and tools are you proficient in for audio-video synchronization?

My proficiency in audio-video synchronization spans a range of software and tools. I’m highly experienced with professional-grade editing software such as Adobe Premiere Pro, Avid Media Composer, and DaVinci Resolve. These platforms offer robust tools for precise synchronization, including timecode-based alignment, waveform visualization, and advanced audio editing features. I also have experience with specialized audio and video analysis tools for troubleshooting and identifying synchronization issues.

Beyond professional software, I’m familiar with various open-source tools and plugins that offer specific functionalities for synchronization. For example, I’ve used command-line tools for batch processing and automation of synchronization tasks, enhancing workflow efficiency.

Q 6. Explain the concept of timecode and its role in synchronization.

Timecode is a standardized system for recording time within audio and video files. Think of it as a very precise, digital stopwatch embedded in the signal. It’s represented as hours:minutes:seconds:frames (HH:MM:SS:FF), and it plays a critical role in synchronization because it provides a shared reference point across all media.

In professional productions, timecode is recorded simultaneously on all cameras and audio recorders. This ensures that the audio and video from multiple sources can be precisely aligned during post-production. Without timecode, syncing multiple camera angles or audio tracks can be a very time-consuming and error-prone process. It’s the backbone of efficient and accurate synchronization in complex projects. It simplifies the workflow enormously by eliminating guesswork and manual trial-and-error attempts to synchronize footage. Simply matching the timecodes from different sources seamlessly aligns them.
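As an illustration of what "matching the timecodes" means numerically, the sketch below converts HH:MM:SS:FF timecodes to absolute frame counts and subtracts them. It assumes non-drop-frame timecode at an integer frame rate. Drop-frame timecode (used with 29.97 fps) needs extra handling that is not shown here, and the function name is hypothetical.

```python
def timecode_to_frames(timecode: str, fps: int) -> int:
    """Convert non-drop-frame HH:MM:SS:FF timecode to an absolute frame count."""
    hours, minutes, seconds, frames = (int(part) for part in timecode.split(":"))
    if frames >= fps:
        raise ValueError(f"frame field {frames} is out of range for {fps} fps")
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


# Example: the camera clip starts at 01:00:00:00, the audio recorder at 00:59:58:12.
fps = 25
camera_start = timecode_to_frames("01:00:00:00", fps)
recorder_start = timecode_to_frames("00:59:58:12", fps)
offset = camera_start - recorder_start
print(f"recorder started {offset} frames ({offset / fps:.2f} s) before the camera")
```

Once the offset is known, the editor places the audio clip that many frames earlier on the timeline and the two sources line up without any waveform matching.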

Q 7. How do you troubleshoot audio-video synchronization issues?

Troubleshooting audio-video synchronization issues requires a systematic approach. I usually begin by identifying the source of the problem.

- Inspect the Timecode: If timecode is used, verify its accuracy and consistency across all devices. Any inconsistencies immediately point towards a source of error.

- Analyze Audio and Video Waveforms: Visually compare the waveforms to identify any noticeable timing discrepancies. This can often reveal the cause of the problem.

- Check Equipment Connections: Ensure that all audio and video cables are correctly connected and functioning properly.

- Monitor Latency: If it’s a live stream, monitor latency levels and investigate the network conditions. Network issues are frequently the culprits in live scenarios.

- Test with Different Equipment: If possible, try substituting hardware components to isolate potential sources of issues.

- Review Recording Settings: Ensure appropriate sample rates, bit depths, and frame rates are consistent across devices. Mismatched settings can cause syncing problems.

- Utilize Specialized Synchronization Tools: Employ tools for detailed audio and video analysis to identify subtle timing discrepancies.

When dealing with complex issues, a systematic elimination approach is key. Start with the simplest possible explanations and work your way through more complex problems. Detailed documentation at every stage of the troubleshooting process is crucial. The experience of troubleshooting reveals valuable patterns that help to identify problems quickly in future projects.

Q 8. Describe your experience with various audio and video formats.

My experience spans a wide range of audio and video formats. On the codec side, this includes H.264, H.265 (HEVC), and VP9 for video, AAC, MP3, and PCM for audio, and intermediate codecs like ProRes and DNxHD used in professional workflows. On the container side, I work with the ubiquitous MP4 and MOV as well as AVI, MKV, and MXF, each with its own strengths and weaknesses regarding compatibility and metadata handling. Understanding the difference between codec and container is crucial because codecs vary in how well editing software and hardware support them, while containers determine how timestamps and timecode are stored, and both affect the efficiency and fidelity of synchronization.

For instance, ProRes encodes every frame independently, so editors can seek and cut on any frame, which simplifies synchronization, especially for intricate lip-sync adjustments. Conversely, long-GOP formats like H.264 store most frames as differences from their neighbors, which makes frame-accurate seeking and trimming slower and less reliable in some tools, so I sometimes transcode to an intermediate format for smoother editing. My expertise lies not only in knowing the formats, but also in selecting the most appropriate one for a given project based on factors such as resolution, frame rate, audio bitrate, and storage constraints.

Q 9. What are your strategies for ensuring accurate lip-sync in a variety of scenarios?

Ensuring accurate lip-sync is paramount. My strategy involves a multi-faceted approach. First, I use professional-grade software with advanced audio and video waveform analysis tools. These allow precise visual alignment of audio events (like the start of a spoken word) with corresponding mouth movements in the video. For example, I’d utilize the visual cues provided by waveform analysis to fine-tune the alignment of the audio to the video.

Second, I pay close attention to the shooting process itself. In pre-production, we carefully coordinate audio recording with the video capture. Using a dedicated audio recorder with timecode synchronization with the video camera is critical to minimize inherent time discrepancies. This eliminates many post-production challenges. If timecode isn’t possible, I use techniques such as clapping slates or other visual and audio cues for alignment reference points. These provide precise points for matching during post.

Third, I consider the different scenarios that can affect lip-sync. For instance, working with multiple cameras requires careful attention to their individual clock accuracy, and a slight drift in one camera’s timecode could affect lip-sync. In such cases, I would carefully analyze all audio/video pairs and align them based on the primary camera or a common reference point, potentially requiring some creative problem solving.

Q 10. Explain how you would approach syncing audio from multiple sources to a video.

Syncing multiple audio sources to a video is a common task, especially in productions with multiple microphones or separate audio tracks (like music and narration). My approach begins with a thorough assessment of each audio source’s quality and timing. This often includes noise reduction and cleanup to maintain clarity and avoid artifacts that interfere with alignment.

I use software designed for advanced audio editing, leveraging its waveform visualization and time-stretching/compression capabilities. I initially align the audio sources visually, using cues common to each track, for example, a clap or the start of dialogue. Then I make finer adjustments with time-stretching algorithms that subtly change tempo without altering pitch. I keep these corrections small, because aggressive stretching audibly degrades audio quality.

Once individually aligned, I mix the audio tracks and check for any phasing or interference issues before finalizing the mix and syncing it with the video. The result is a seamlessly integrated audio track, ensuring a professional finish.

Q 11. How do you deal with drift or jitter in audio-video synchronization?

Drift and jitter are common enemies in audio-video synchronization. Drift is a gradual desynchronization over time, while jitter is a more erratic, short-term variation. Both can be extremely frustrating and damaging to the viewing experience. Drift is best prevented in the first place, by using high-quality equipment and locking all devices to a common timecode or clock reference during acquisition. When drift does occur, it is usually close to linear, so it can often be corrected in post-production by measuring the offset at two known sync points and applying a small, constant time-stretch or compression to one track.

Jitter is usually harder to resolve, and sometimes requires more invasive techniques. These could include frame-rate conversion, which involves converting from one frame rate to another, or more aggressive time stretching/compression in smaller segments to address specific areas of jitter. However, these often carry a risk of introducing undesirable artifacts or impacting audio quality. In severe cases, re-recording audio and/or video might be the only viable solution.

A key preventative measure is using a high-quality video capture system with stable frame rates and accurate timecode, and employing digital audio workstations (DAWs) designed for precise audio manipulation.
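For drift that grows steadily, the correction can be sketched as a two-point linear mapping: find the same event near the start and near the end of both recordings, then derive the stretch factor from the two spans. The function names and the timestamps below are assumptions for illustration. The sketch only computes the numbers, and the actual time-stretch would be applied in an audio editor.

```python
def stretch_ratio(video_start_s: float, video_end_s: float,
                  audio_start_s: float, audio_end_s: float) -> float:
    """Factor by which the audio duration must be multiplied to match the video."""
    return (video_end_s - video_start_s) / (audio_end_s - audio_start_s)


def map_audio_time(t_audio_s: float, audio_start_s: float,
                   video_start_s: float, ratio: float) -> float:
    """Map a time in the audio recording onto the video timeline."""
    return video_start_s + (t_audio_s - audio_start_s) * ratio


# Example: claps seen in the video at 10.0 s and 3610.0 s are heard
# in the separate audio recording at 2.0 s and 3602.072 s.
ratio = stretch_ratio(10.0, 3610.0, 2.0, 3602.072)
print(f"stretch audio by a factor of {ratio:.8f}")
print(f"end clap lands at {map_audio_time(3602.072, 2.0, 10.0, ratio):.3f} s on the video timeline")
```

A ratio this close to 1 is inaudible when applied as a time-stretch, whereas the uncorrected 72 ms offset at the end of the hour would be clearly visible as a lip-sync error.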

Q 12. What are your preferred methods for aligning audio and video during post-production?

My preferred methods for aligning audio and video during post-production depend on the complexity and scale of the project. For simpler projects, I’d utilize the built-in audio and video synchronization tools within video editing software like Adobe Premiere Pro or DaVinci Resolve. These applications offer visual waveform alignment tools, allowing for precise adjustments through scrubbing and visual cues.

More complex projects often require a more refined approach. I might use dedicated audio editing software such as Pro Tools or Audition in conjunction with the video editor. This allows for more granular control over the audio, enabling more sophisticated corrections like time-stretching, pitch correction, and noise reduction, before integrating the final result back into the video editing timeline. This workflow enables seamless integration and precise control of the alignment.

In any case, iterative testing and listening are essential throughout the process, focusing on eliminating visual and auditory inconsistencies that negatively impact the synchronization.

Q 13. Explain the importance of maintaining frame accuracy during synchronization.

Maintaining frame accuracy is crucial for several reasons. First, and most obviously, it directly affects the visual quality of lip-sync. Even a single frame discrepancy can be noticeable, particularly with fast dialogue. Second, frame-accurate alignment avoids the introduction of judder or stutter in the video, which disrupts the smoothness of motion and overall viewing experience.

Moreover, frame accuracy is critical when combining different visual elements or effects that are keyed to specific frames within the video. An inaccurate synchronization can result in visual artifacts or inconsistencies. For instance, when adding subtitles or lower-thirds, if these are not frame-accurate relative to the audio, the user experience suffers considerably.

Essentially, frame accuracy isn’t just about synchronization; it’s a vital aspect of professional video production that contributes to the overall visual and audio fidelity.
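The relationship between frames and audio samples can be worked out exactly with rational arithmetic. The sketch below assumes 48 kHz audio and shows how many samples fall within one frame, and how much error remains when an offset is snapped to the nearest whole frame. The helper names are hypothetical.

```python
from fractions import Fraction


def samples_per_frame(sample_rate: int, fps: Fraction) -> Fraction:
    """Exact number of audio samples that span one video frame."""
    return Fraction(sample_rate) / fps


def snap_to_frame(offset_s: Fraction, fps: Fraction) -> tuple[int, Fraction]:
    """Round an offset to whole frames; also return the leftover error in seconds."""
    exact_frames = offset_s * fps
    whole_frames = round(exact_frames)
    residual_s = (exact_frames - whole_frames) / fps
    return whole_frames, residual_s


print(samples_per_frame(48_000, Fraction(24)))            # whole number of samples
print(samples_per_frame(48_000, Fraction(30_000, 1001)))  # fractional for 29.97 fps

# A 50 ms offset at 25 fps cannot be fixed by moving whole frames alone.
frames, residual = snap_to_frame(Fraction(50, 1000), Fraction(25))
print(frames, float(residual) * 1000, "ms left over")
```

The leftover error is the part that has to be corrected by slipping the audio at sample resolution, since video can only move in whole-frame steps.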

Q 14. How do you manage large media files during the synchronization process?

Managing large media files during synchronization requires a strategic approach to prevent performance bottlenecks and ensure efficiency. The first step is using high-performance storage solutions, such as solid-state drives (SSDs) or high-speed RAID systems to maximize the speed of data access. This greatly improves performance during editing and processing.

Second, I employ proxy workflows. These involve creating smaller, lower-resolution versions of the media files for editing. This allows the software to work much more fluidly during the synchronization process. Once the alignment is finalized, the high-resolution files are then used for rendering the final output. This is a very common practice, especially for projects with 4K or higher resolution footage.

Third, I optimize my editing software settings. For example, I adjust the caching mechanisms to optimize performance and adjust rendering settings to make the most efficient use of hardware resources available. Finally, and importantly, I always back up my project files regularly to prevent data loss due to hardware failure or accidental deletion. Redundant backups are essential to ensure the stability of any project of this scale.

Q 15. What is your experience with automated synchronization tools?

My experience with automated synchronization tools spans several years and various professional settings. I’m proficient in using a range of software, including industry-standard tools like Adobe Premiere Pro, Avid Media Composer, and DaVinci Resolve. I’m also familiar with specialized plugins and scripts that automate aspects of synchronization, such as those employing audio-based timecode detection or sophisticated algorithms for lip-sync correction. For example, in one project involving a multi-camera documentary, I used Premiere Pro’s advanced syncing features combined with a waveform analysis plugin to accurately align audio from multiple sources despite varying recording conditions. This automated approach significantly sped up the workflow compared to manual synchronization.

Beyond the software, I understand the limitations of automated tools. They often require manual refinement, particularly when dealing with complex scenarios, such as significant timecode discrepancies, or when the audio and video sources have undergone post-processing effects that interfere with automated alignment processes. My expertise lies not just in using these tools, but in understanding when and how to effectively leverage them, knowing when to switch to manual adjustments for optimal results.

Q 16. How do you ensure consistency in audio levels across different clips during synchronization?

Consistency in audio levels across clips is crucial for a professional-sounding synchronized product. I use a multi-step approach. First, I analyze the audio levels of each clip individually using a waveform monitor and audio metering tools within my editing software. This helps identify potential peaks and dips in audio volume. Then, I employ tools like audio normalization or compression to bring all clips to a consistent average loudness. I might use a target LUFS (Loudness Units relative to Full Scale) value based on broadcasting standards or client specifications. Finally, I perform a careful listen to ensure a smooth transition between clips, addressing any abrupt volume changes that may still be present. Imagine trying to watch a movie where dialogue suddenly becomes deafeningly loud, or conversely, almost inaudible—that’s what inconsistent audio levels can do to the viewing experience.

Sometimes, creative decisions might necessitate variation in audio levels for artistic effect. However, even in these cases, consistency is important within those variations. For instance, a quiet scene should remain quiet consistently across different takes or angles, rather than fluctuating unexpectedly.
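The normalization step can be sketched as "measure the level, then compute the gain that reaches the target". The example below uses plain RMS level in dBFS as a stand-in. It is not a LUFS measurement, which additionally applies frequency weighting and gating, and the -20 dBFS target is an arbitrary assumption for illustration.

```python
import math


def rms_dbfs(samples: list[float]) -> float:
    """RMS level of samples in the range [-1.0, 1.0], in dB relative to full scale."""
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0.0:
        raise ValueError("clip is silent; RMS level is undefined")
    return 10.0 * math.log10(mean_square)


def gain_for_target(samples: list[float], target_dbfs: float) -> float:
    """Linear gain that brings the clip's RMS level to `target_dbfs`."""
    return 10.0 ** ((target_dbfs - rms_dbfs(samples)) / 20.0)


# Example clip: a square-like signal at half of full scale.
clip = [0.5, -0.5] * 100
gain = gain_for_target(clip, target_dbfs=-20.0)
normalized = [s * gain for s in clip]
print(f"before: {rms_dbfs(clip):.2f} dBFS, gain: {gain:.3f}, after: {rms_dbfs(normalized):.2f} dBFS")
```

Applying the same target to every clip gives a consistent starting point, after which a listening pass catches the transitions that a single average number cannot describe.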

Q 17. Describe your process for quality control of synchronized audio and video.

My quality control process for synchronized audio and video is rigorous and multifaceted. It starts with a thorough visual inspection of the synced timeline, looking for any obvious discrepancies like visible lip-sync errors or audio-video misalignments. I then perform several focused listening tests, paying close attention to timing precision and consistency in audio levels. This involves listening on multiple playback systems (headphones, speakers) to account for variations in audio reproduction. A critical part of my process is objective measurement, using tools that analyze audio and video parameters, such as waveform visualization, spectrogram analysis, and detailed timecode comparisons. These checks help me identify subtle sync issues which might be missed through listening alone.

Finally, and critically, I always include a peer review stage where a colleague examines the synchronized output, providing a fresh perspective and catching any errors I might have overlooked. This multi-layered approach ensures a high standard of quality and minimizes the risk of releasing material with synchronization problems.

Q 18. How would you handle a situation where the audio and video sources are significantly out of sync?

Handling significantly out-of-sync audio and video sources requires a strategic approach, and the severity of the issue determines the best course of action. If the mismatch is a constant offset, I slip the audio or video on the timeline using frame-accurate adjustments. To find the right amount, I look for reference points, meaning cues that are present in both audio and video, such as a clap, a door slam, or a hard consonant with a clear mouth movement. For example, if the audio is notably ahead, I either delay the audio or advance the video until the cue lines up, then verify the result at several other reference points across the clip. If those later points disagree, the offset is not constant, and I turn to specialized alignment software or segment-by-segment correction. In the worst cases, re-recording the affected segments may be the only way to maintain lip-sync.

Ultimately, the aim is to find the most accurate and least disruptive method of correction without compromising the visual or audio quality of the final product. It might involve compromises, like using a slightly less ideal synchronization point to preserve the overall integrity of the material.

Q 19. What techniques do you use to improve the overall quality of the synchronized output?

Improving the overall quality of synchronized output involves more than just alignment. I use several techniques to enhance the final result. Audio processing plays a vital role. Noise reduction, equalization, and dynamic range compression can significantly improve audio clarity and balance. Visual enhancements such as color correction and stabilization can also contribute to a more polished and professional look. Careful consideration of the overall audio mix is important too. Balancing dialogue, sound effects, and music creates a more immersive and engaging experience.

Beyond technical enhancements, artistic choices also contribute. Careful selection of transitions, use of visual and audio effects, and attention to pacing all play a role in producing a high-quality and engaging final product. This might involve creatively manipulating timing to improve the flow of the synchronized sequence, or subtly adjusting audio to better reflect visual content. The end goal is always a harmonious and polished result.

Q 20. How do you deal with different frame rates in audio and video during synchronization?

Audio and video are timed in different units, which necessitates careful handling during synchronization. Audio has a sample rate (e.g., 44.1 kHz or 48 kHz) rather than a frame rate, while video frame rates vary (23.976, 24, 25, 29.97, 30 fps, etc.). The two are independent: 48 kHz audio pairs with 24 fps video without any conversion, because both are ultimately placed on the same time axis. Problems arise when sources are mixed or a clip’s rate is misinterpreted, for example 25 fps footage in a 24 fps timeline, or 23.976 fps footage treated as 24 fps. If the video is conformed by changing its playback speed, its duration changes and the audio must be retimed by exactly the same factor, either by resampling (which shifts pitch) or by time-stretching (which preserves pitch). If the video is instead converted by dropping, repeating, or blending frames, its duration is preserved and the audio can stay as it is. Either way, video frame rate conversion can introduce quality artifacts, requiring a cautious approach.

It’s crucial to be aware of the potential implications of frame rate conversion. Lowering the frame rate by dropping frames can make motion look juddery, while raising it by repeating or blending frames can produce stutter or ghosting. The best approach depends on the context and the specific tools available. The process often demands fine-tuning and careful monitoring to ensure minimal negative impact on the visual quality of the final result.
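When a frame-rate change is done by retiming (playing every original frame at the new rate) rather than by dropping or blending frames, the running time changes and the audio has to follow by the same factor. The sketch below works through that arithmetic for a 25 fps to 24 fps conform. The function names are hypothetical, and the pitch figure applies only if the audio is simply resampled rather than time-stretched.

```python
import math
from fractions import Fraction


def conform_factor(source_fps: Fraction, target_fps: Fraction) -> Fraction:
    """Playback speed factor when every source frame is kept and replayed at target_fps."""
    return target_fps / source_fps


def conformed_duration_s(duration_s: int, factor: Fraction) -> Fraction:
    """New running time after the speed change."""
    return duration_s / factor


def pitch_shift_semitones(factor: Fraction) -> float:
    """Pitch change if the audio is resampled by the same factor without pitch correction."""
    return 12.0 * math.log2(float(factor))


factor = conform_factor(Fraction(25), Fraction(24))
print(f"speed factor: {float(factor):.4f}")
print(f"a 3600 s program becomes {float(conformed_duration_s(3600, factor)):.0f} s")
print(f"uncorrected pitch shift: {pitch_shift_semitones(factor):.2f} semitones")
```

The same function covers the 24 to 23.976 fps case by passing `Fraction(24_000, 1001)` as the target, where the speed change is only about 0.1 percent but still enough to cause visible drift over a long program if the audio is left untouched.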

Q 21. Explain the impact of different audio codecs on synchronization.

The choice of audio codec can affect synchronization, mainly through encoder delay. Lossy codecs (like MP3 or AAC) process audio in overlapping blocks, so encoders add a short run of extra “priming” samples at the start of the stream and padding at the end. If the container or the decoder does not compensate for this, the audio ends up offset by a fixed amount, typically a few tens of milliseconds. This offset is constant and does not grow over the length of the file; gradual drift points instead to a sample-rate or clock mismatch. In high-precision applications, uncompressed PCM (in WAV or AIFF) or a lossless codec is therefore preferred, because it carries no encoder delay and keeps every sample in its original position.

Furthermore, the processing involved in decoding various codecs can introduce latency variations, impacting synchronization. The difference is usually minimal, but with precise synchronization requirements, the choice of codec can be a critical aspect of project planning. In mission-critical work, a consistent codec is paramount to avoid unforeseen issues.
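One concrete, well-defined way a codec affects timing is encoder delay: extra samples that a lossy encoder places at the start of the stream. The sketch below shows how large that offset is and how it would be compensated by trimming. The 2112-sample figure is a commonly cited value for some AAC encoders and is used here purely as an assumption. The real value depends on the encoder and should be read from the file's metadata where available.

```python
def encoder_delay_ms(priming_samples: int, sample_rate: int) -> float:
    """Fixed offset introduced by priming samples if nothing compensates for them."""
    return priming_samples / sample_rate * 1000.0


def trim_priming(samples: list[float], priming_samples: int) -> list[float]:
    """Drop the priming samples so the first real sample sits at time zero again."""
    return samples[priming_samples:]


ASSUMED_PRIMING = 2112  # assumption: varies by encoder, check the actual file
print(f"offset at 48 kHz: {encoder_delay_ms(ASSUMED_PRIMING, 48_000):.1f} ms")

# Tiny demonstration with 2 priming samples in front of the real audio.
decoded = [0.0, 0.0, 0.3, 0.7, -0.2]
print(trim_priming(decoded, 2))
```

Most modern players and editors handle this automatically when the container signals the delay, so the check matters mainly when files pass through tools that ignore or strip that information.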

Q 22. Describe your experience with various video codecs and their effects on sync.

Video codecs, like H.264, H.265 (HEVC), and VP9, can affect audio-video synchronization. Different codecs have varying levels of compression efficiency and processing demands. Bitrate on its own does not move frames in time, because playback is governed by the timestamps stored in the container. Timing problems with highly compressed footage more often come from variable frame rate recording, from frames dropped when an encoder cannot keep up with a demanding scene such as rapid motion, or from tools that mishandle the frame reordering these codecs use. Intra-frame codecs with less aggressive compression tend to behave more predictably but at the cost of larger file sizes.

In my experience, understanding the characteristics of each codec is crucial for anticipating potential sync issues. I frequently analyze the bitrate profiles and frame timestamps of video files to identify potential problem areas before they escalate. This proactive approach significantly reduces the time needed for troubleshooting later in the workflow. For instance, I once worked on a project using a highly compressed codec where minor audio drift occurred during action sequences. By analyzing the bitrate variations, we were able to pinpoint the exact segments requiring adjustments, optimizing our workflow significantly.

Q 23. How would you handle a situation with missing audio or video frames?

Missing audio or video frames present a significant challenge to synchronization. My approach involves a multi-step process starting with identifying the cause. Is it a problem with the source material, the encoding process, or the playback environment? I often use specialized tools to analyze the media files for dropped frames, examining timestamps and frame numbers. Once identified, the solution depends on the severity and nature of the problem. For minor missing frames, interpolation techniques can be applied, intelligently estimating the missing data based on surrounding frames. For more substantial losses, I might have to consider alternative sources or accept the loss, potentially making creative edits to mitigate the issue. Imagine a scenario where a few frames are missing in a crucial dialogue scene. Simple interpolation might be sufficient. But if significant chunks of audio or video are gone, the solution might involve finding a replacement clip or creatively masking the missing part. My experience emphasizes the importance of meticulous backups and version control to recover from such scenarios.
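Examining timestamps for dropped frames can be sketched as a scan for gaps larger than one frame duration. The example below assumes a constant nominal frame rate and a list of presentation timestamps in seconds that has already been extracted from the file by some other tool. The function name and sample data are hypothetical.

```python
def find_gaps(timestamps_s: list[float], fps: float) -> list[tuple[int, int]]:
    """Return (index, missing_count) for each position where frames appear to be missing.

    `index` is the position of the first frame after the gap.
    """
    frame_duration = 1.0 / fps
    gaps = []
    for i in range(1, len(timestamps_s)):
        steps = round((timestamps_s[i] - timestamps_s[i - 1]) / frame_duration)
        if steps > 1:
            gaps.append((i, steps - 1))
    return gaps


# Example: 25 fps footage where two frames are missing between 0.08 s and 0.20 s.
timestamps = [0.00, 0.04, 0.08, 0.20, 0.24]
for index, missing in find_gaps(timestamps, fps=25.0):
    print(f"{missing} frame(s) missing before frame index {index}")
```

Knowing the position and size of each gap makes it possible to decide per gap whether interpolation is enough or whether a replacement clip or creative edit is needed.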

Q 24. What is your experience working with various audio and video file formats?

My experience encompasses a wide range of audio and video file formats, including common formats like MP4, MOV, AVI, MKV, WAV, AIFF, and AAC. I’m familiar with their respective containers, codecs, and metadata structures. This allows me to anticipate potential issues based on the file format itself. For example, some older container formats have limitations that can affect synchronization. Working with various formats requires a deep understanding of their strengths and weaknesses. Recently, I worked on a project involving both legacy AVI files and modern HEVC encoded MP4 files. Understanding the codecs and containers allowed me to efficiently handle the conversions and ensure seamless synchronization between different formats.

Q 25. Describe your experience with metadata and its role in synchronization.

Metadata plays a vital role in synchronization. Timecodes embedded within audio and video files act as critical reference points. Accuracy in metadata is paramount. Inconsistent or missing timecodes can lead to significant synchronization problems. I often check and correct metadata discrepancies using specialized tools, ensuring consistent timecode across all files. Furthermore, metadata can provide essential information about frame rates, sample rates, and other parameters crucial for accurate synchronization. For instance, improper frame rate interpretation can cause significant sync issues. My experience has shown that meticulously verifying and correcting metadata is crucial for a smooth synchronization process, preventing hours of troubleshooting later.

Q 26. How do you optimize your workflow for efficient audio-video synchronization?

Optimizing my workflow for efficient audio-video synchronization involves using a combination of tools and strategies. I rely heavily on automation wherever possible, leveraging scripting languages like Python to automate repetitive tasks, such as batch processing and metadata validation. This minimizes manual intervention and reduces the chance of human error. I also prioritize using efficient software that handles various file formats and codecs effectively. Moreover, I meticulously document my workflow, including file paths and applied modifications. This documentation ensures reproducibility and simplifies troubleshooting. Lastly, I always test my work with different players and devices to anticipate issues arising from variation in hardware or software capabilities. My optimized workflow strives for accuracy and efficiency, utilizing the power of automation and robust documentation.
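As an illustration of the metadata validation step, the sketch below checks a batch of clips against the project's expected settings. It assumes the metadata has already been read into plain dictionaries by whatever probing tool the project uses; the keys `frame_rate` and `sample_rate`, the file names, and the function `validate_batch` are all hypothetical.

```python
from typing import Dict, List


def validate_batch(
    clips: Dict[str, Dict[str, object]],
    expected: Dict[str, object],
) -> Dict[str, List[str]]:
    """Return a mapping of clip name to a list of metadata problems.

    Clips that match every expected value are omitted from the result.
    """
    problems: Dict[str, List[str]] = {}
    for name, meta in clips.items():
        issues = []
        for key, want in expected.items():
            got = meta.get(key)
            if got is None:
                issues.append(f"{key}: missing")
            elif got != want:
                issues.append(f"{key}: expected {want}, found {got}")
        if issues:
            problems[name] = issues
    return problems


# Hypothetical project settings and already-extracted clip metadata.
expected = {"frame_rate": "24000/1001", "sample_rate": 48000}
clips = {
    "interview_a.mov": {"frame_rate": "24000/1001", "sample_rate": 48000},
    "interview_b.mov": {"frame_rate": "25/1", "sample_rate": 48000},
    "boom_mic.wav": {"sample_rate": 44100},
}
for clip, issues in validate_batch(clips, expected).items():
    print(clip, issues)
```

Running a check like this before editing starts catches mismatched frame rates and sample rates while they are still cheap to fix.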

Q 27. What are your strategies for maintaining project organization during synchronization?

Maintaining project organization is crucial for large synchronization projects. I utilize a robust folder structure, organizing files logically based on type, date, and scene. Naming conventions are meticulously maintained, employing clear identifiers that prevent confusion. Furthermore, version control systems, like Git, are used to track changes and facilitate collaboration. I utilize project management software to track progress and manage tasks, ensuring that everything stays well-documented and organized. This system minimizes the risk of misplacing files or creating conflicts, helping me deliver accurate and timely results.
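A naming convention is easiest to maintain when it can be checked automatically. The sketch below assumes a purely hypothetical convention of the form `SC012_TK03_audio.wav` (scene, take, media type, extension); a real project would substitute its own pattern.

```python
import re
from typing import Dict, Optional, Union

# Hypothetical convention: SC<3-digit scene>_TK<2-digit take>_<audio|video>.<ext>
CLIP_NAME = re.compile(
    r"SC(?P<scene>\d{3})_TK(?P<take>\d{2})_(?P<kind>audio|video)\.(?P<ext>wav|mov|mp4)"
)


def parse_clip_name(filename: str) -> Optional[Dict[str, Union[int, str]]]:
    """Return the parsed fields of a conforming file name, or None if it does not conform."""
    match = CLIP_NAME.fullmatch(filename)
    if match is None:
        return None
    return {
        "scene": int(match["scene"]),
        "take": int(match["take"]),
        "kind": match["kind"],
        "ext": match["ext"],
    }


print(parse_clip_name("SC012_TK03_audio.wav"))
print(parse_clip_name("final_v2_REAL.mov"))
```

Because the scene and take numbers are parsed out of the name, the same function can also be used to pair each video clip with its matching audio recording.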

Q 28. Explain your approach to collaboration and communication within a team during synchronization.

Collaboration and communication are essential for effective synchronization. I hold regular team meetings and use communication tools like Slack or Microsoft Teams to keep everyone on the same page. Open and transparent communication about any challenges encountered is crucial. I encourage team members to share their expertise and offer constructive feedback during the synchronization process. I often use visual tools like shared timelines to illustrate synchronization points and give the team something concrete to discuss. In my experience, clear communication and collaboration drastically reduce errors and delays in the synchronization process. A strong team dynamic is key to successfully navigating the complexities of audio-video synchronization.

Key Topics to Learn for Audio and Video Synchronization Interview

- Synchronization Fundamentals: Understand concepts like lip-sync, audio delay, and timecode. Explore different synchronization methods and their applications.

- Practical Applications: Analyze real-world scenarios such as post-production workflows in film, television, and live streaming. Consider the challenges and solutions involved in synchronizing audio from multiple sources.

- Digital Audio Workstations (DAWs): Gain proficiency in using popular DAWs and their synchronization tools. Understand the importance of session setup and workflow optimization for efficient synchronization.

- Video Editing Software: Familiarize yourself with common video editing software and their audio synchronization features. Practice various techniques for aligning audio and video tracks.

- Troubleshooting and Problem-Solving: Develop strategies for identifying and resolving common synchronization issues such as drift, jitter, and out-of-sync audio. Learn to analyze waveforms and identify discrepancies.

- Audio and Video Formats: Understand the various audio and video formats and their impact on synchronization. Explore compatibility issues and solutions.

- Advanced Techniques: Research advanced techniques like automated synchronization tools and plugins, and their advantages and limitations.

Next Steps

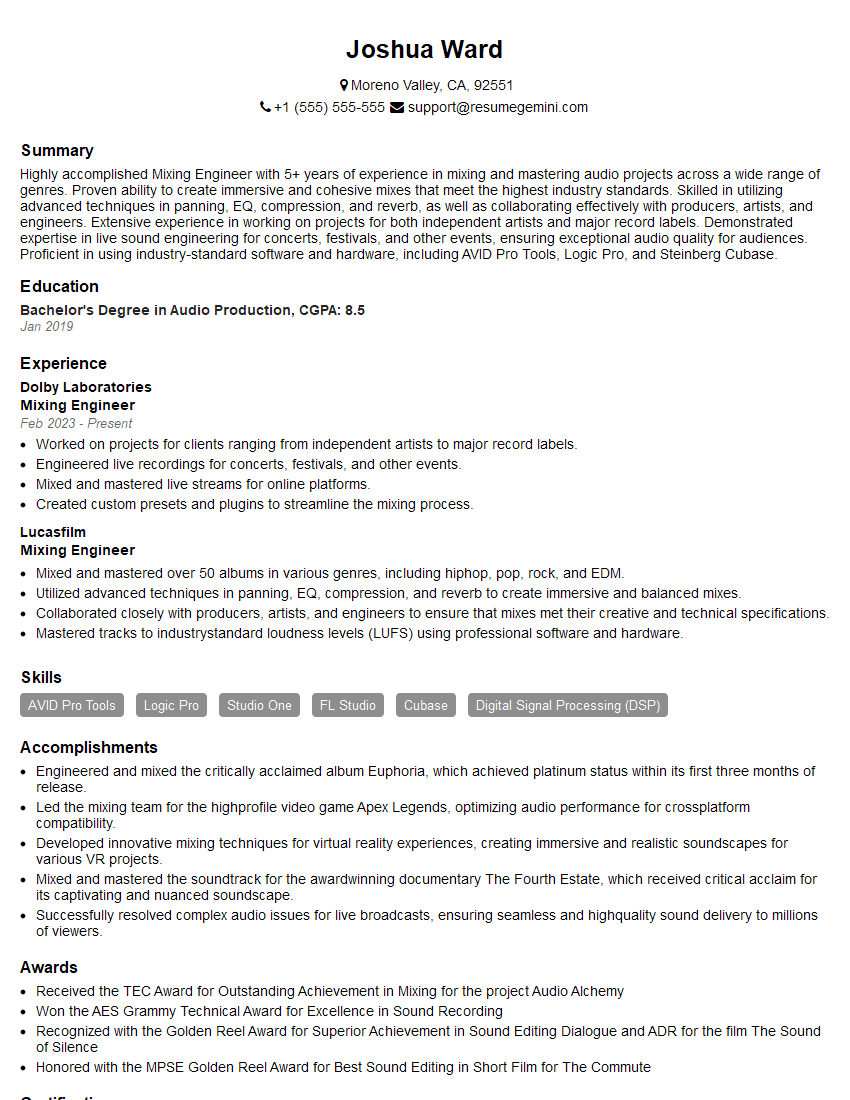

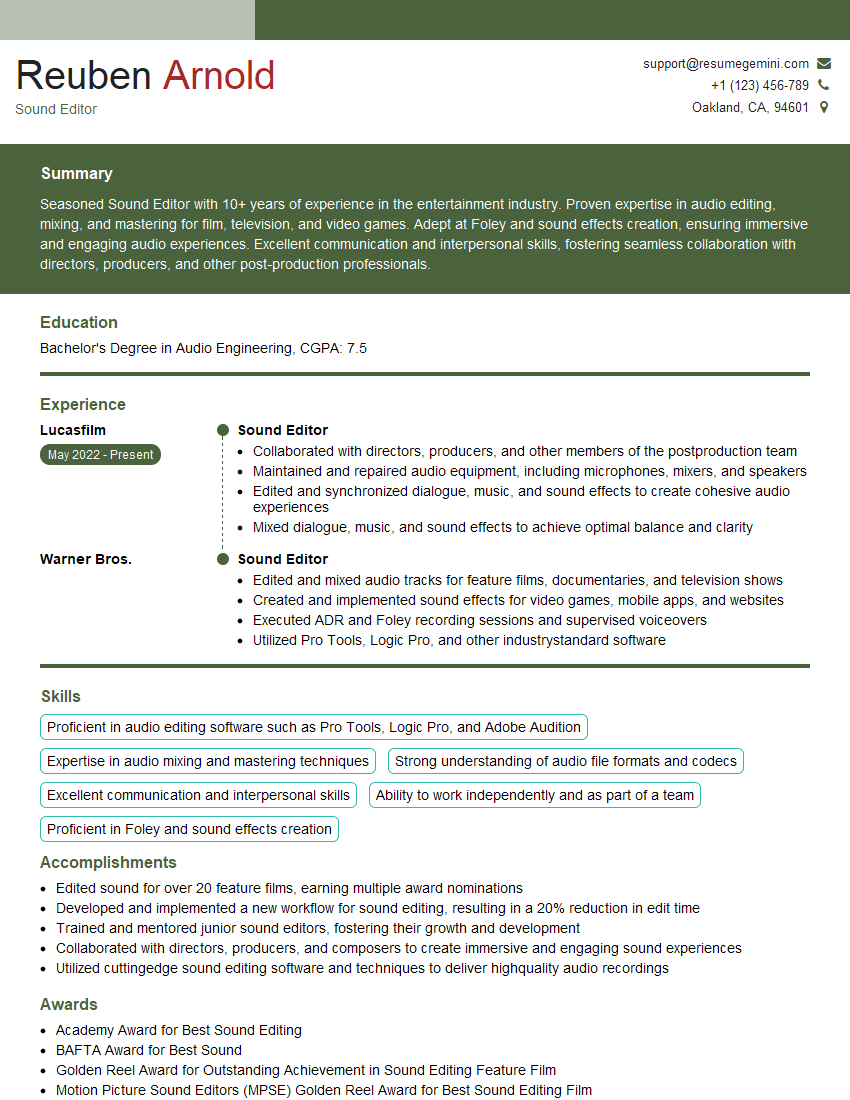

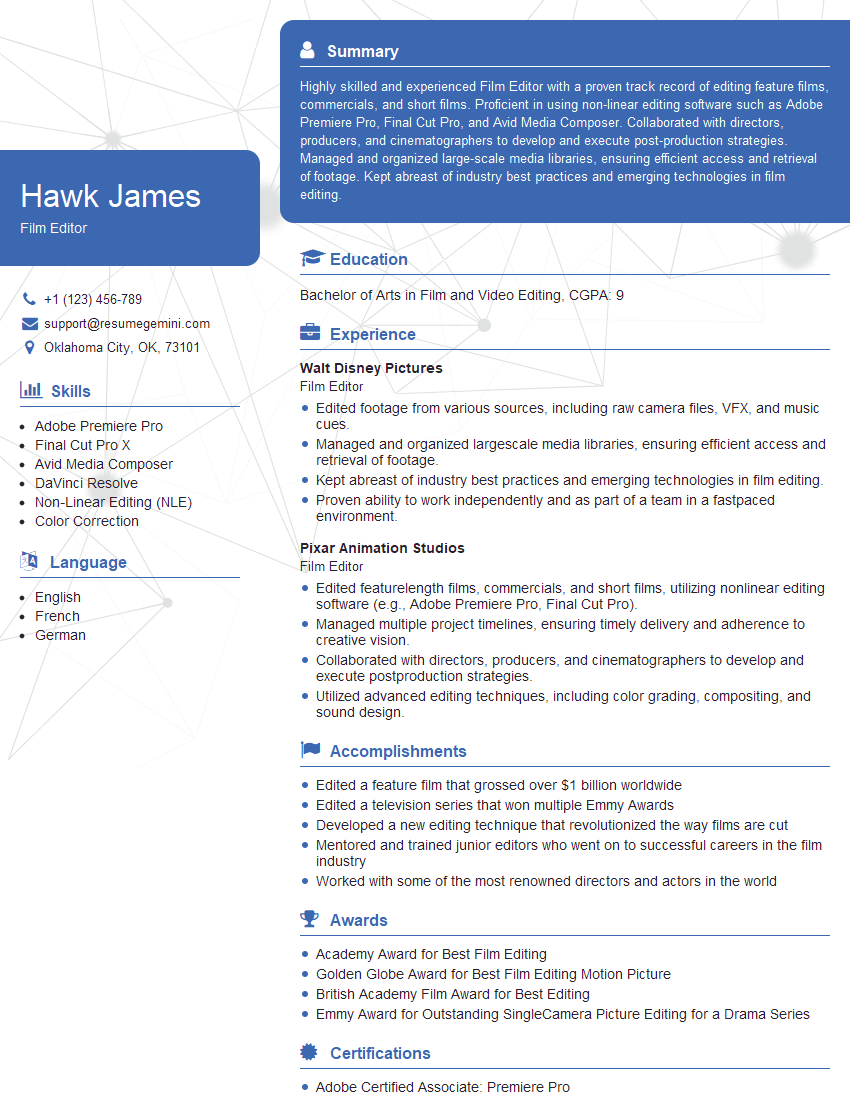

Mastering Audio and Video Synchronization opens doors to exciting career opportunities in the dynamic media and entertainment industry. Proficiency in this area significantly enhances your value to potential employers. To maximize your job prospects, crafting a compelling and ATS-friendly resume is crucial. ResumeGemini is a trusted resource that can help you build a professional and impactful resume tailored to the specific requirements of Audio and Video Synchronization roles. Examples of resumes tailored to this field are available to help you get started. Invest the time to create a resume that showcases your skills and experience effectively – it’s a key step in your journey to success.
