The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to Audio File Formats and Compression interview questions is your ultimate resource, providing key insights and tips to help you ace your responses and stand out as a top candidate.

Questions Asked in Audio File Formats and Compression Interview

Q 1. Explain the difference between lossy and lossless audio compression.

The core difference between lossy and lossless audio compression lies in how they handle data during the compression process. Lossless compression, like a perfectly organized closet, meticulously rearranges data to reduce file size without discarding any information. This means you can decompress the file and get back the exact same audio you started with. Lossy compression, on the other hand, is like throwing out clothes you rarely wear to make space; it permanently removes data deemed less important to the human ear to achieve greater compression. While the resulting file is much smaller, some audio quality is inevitably lost.
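The distinction can be illustrated with a minimal Python sketch. It uses `zlib` as a stand-in for a lossless codec and simple rounding as a stand-in for a lossy one; neither is what FLAC or MP3 actually does internally, and the sample values are made up for illustration.

```python
import zlib

# Hypothetical 16-bit sample values, made up for illustration.
samples = [0, 1203, 2391, 3549, 4663, 5717, 6699, 7595] * 50

raw = b"".join(s.to_bytes(2, "little", signed=True) for s in samples)

# Lossless: compress, decompress, and get back identical bytes.
packed = zlib.compress(raw)
restored = zlib.decompress(packed)
lossless_ok = restored == raw

# Lossy stand-in: round each sample to the nearest multiple of 256,
# which permanently discards fine amplitude detail.
def coarse(sample, step=256):
    return round(sample / step) * step

lossy = [coarse(s) for s in samples]
lossy_ok = lossy == samples

print(len(raw), len(packed), lossless_ok, lossy_ok)
```

The lossless round trip reproduces the input exactly, while the rounded copy cannot be turned back into the original values.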

Q 2. Describe the advantages and disadvantages of MP3, AAC, FLAC, and WAV formats.

Let’s compare four common audio formats:

- MP3: Advantages: Very small file sizes, near-universal compatibility. Disadvantages: Lossy, with artifacts that become audible at low bitrates (high compression ratios); at high bitrates the loss is much harder to hear. Think of it as a heavily compressed photo: smaller, but some detail is lost.

- AAC: Advantages: Better audio quality than MP3 at comparable bitrates, also widely compatible. Disadvantages: Still a lossy format, so some audio quality is lost. It’s like a better-edited photo than MP3, retaining more detail.

- FLAC: Advantages: Lossless compression, preserving all audio data, so the decoded audio is identical to the source. Disadvantages: File sizes are considerably larger than MP3 or AAC. It’s like having the original high-resolution photo.

- WAV: Advantages: Uncompressed, meaning no quality loss. Wide compatibility. Disadvantages: Extremely large file sizes. It’s like having the original, unedited photo – high quality but takes up a lot of space.

The choice depends on your priority – smaller file size versus perfect audio fidelity.

Q 3. What are codecs and how do they work in audio compression?

Codecs are the algorithms and technologies responsible for encoding and decoding audio data. Think of them as the translators between raw audio and compressed files. During compression (encoding), the codec analyzes the audio signal and removes or transforms data according to its compression algorithm. During playback (decoding), the codec reconstructs the audio signal from the compressed data. For example, the MP3 codec uses psychoacoustic models to identify and remove sounds that are less likely to be heard by the human ear. AAC uses more sophisticated models for better efficiency, resulting in higher quality at the same bitrate compared to MP3.

Q 4. How does sampling rate affect audio quality and file size?

Sampling rate, measured in hertz (Hz), is the number of audio samples taken per second. By the Nyquist theorem, a given sampling rate can represent frequencies up to half its value, so 44.1 kHz (the CD standard, 44,100 samples per second) covers content up to 22.05 kHz, just beyond the roughly 20 kHz upper limit of human hearing. 96 kHz (used in high-resolution audio) captures more than twice as many samples per second and extends the representable range to 48 kHz, which gives more headroom for processing, although the audible benefit for playback is debated. File size grows in direct proportion: for uncompressed audio, doubling the sampling rate doubles the size.
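As a rough worked example, the size of uncompressed PCM audio can be computed directly from the sampling rate. The sketch below assumes plain linear PCM and ignores container headers; the function names are illustrative only.

```python
def pcm_size_bytes(sample_rate_hz, bit_depth, channels, seconds):
    """Size of raw PCM audio, ignoring any container header."""
    return sample_rate_hz * (bit_depth // 8) * channels * seconds

def nyquist_hz(sample_rate_hz):
    """Highest frequency a given sampling rate can represent."""
    return sample_rate_hz / 2

cd_minute = pcm_size_bytes(44_100, 16, 2, 60)      # CD-quality stereo
hires_minute = pcm_size_bytes(96_000, 24, 2, 60)   # 96 kHz / 24-bit stereo

print(cd_minute, hires_minute, nyquist_hz(44_100))
```

One minute of CD-quality stereo comes to about 10.6 MB, while one minute at 96 kHz and 24 bits comes to about 34.6 MB.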

Q 5. Explain the concept of bit depth and its impact on audio fidelity.

Bit depth is the number of bits used to store each audio sample. A higher bit depth provides more amplitude levels, which lowers the quantization noise floor and increases dynamic range (the difference between the quietest and loudest sounds that can be represented). Each bit adds roughly 6 dB, so 16-bit audio offers about 96 dB of dynamic range and 24-bit audio about 144 dB. Think of it as the number of colors in a photograph; more bits mean more colors (or amplitude levels) and smoother gradations. For uncompressed audio, file size scales with bit depth, so a 24-bit file is 50% larger than a 16-bit file at the same sampling rate.
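The relationship between bit depth and dynamic range can be checked with a short calculation. This sketch uses the standard theoretical figure for linear PCM, 20 · log10(2^bits); real converters and recordings fall short of it.

```python
import math

def dynamic_range_db(bit_depth):
    """Theoretical dynamic range of linear PCM: 20 * log10(2 ** bits)."""
    return 20 * math.log10(2 ** bit_depth)

for bits in (8, 16, 24):
    print(bits, round(dynamic_range_db(bits), 1))
```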

Q 6. What are the different types of audio metadata and their uses?

Audio metadata is the information about the audio file itself, not the audio data. It’s like the label on a CD. Common types include:

- Title, Artist, Album: Basic identification information.

- Genre, Year: Categorization and date information.

- Track Number, Disc Number: Information for albums with multiple discs or tracks.

- Artwork (album cover): Visual information.

- Copyright Information: Legal information.

- Comment Field: User-added notes.

Metadata enhances organization and playback experience by providing context and allowing for easy searching and sorting of audio files.
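To make this concrete, here is a minimal sketch of reading an ID3v1 tag, the simplest MP3 metadata layout: a fixed 128-byte block at the end of the file that starts with `TAG`. The `parse_id3v1` and `pad` helpers and the demo values are hypothetical; real-world files more often carry ID3v2 tags, which are considerably more complex.

```python
def parse_id3v1(data: bytes):
    """Return ID3v1 fields from the last 128 bytes, or None if absent."""
    tag = data[-128:]
    if len(tag) < 128 or tag[:3] != b"TAG":
        return None

    def text(start, length):
        field = tag[start:start + length].split(b"\x00")[0]
        return field.decode("latin-1").strip()

    return {
        "title": text(3, 30),
        "artist": text(33, 30),
        "album": text(63, 30),
        "year": text(93, 4),
        "comment": text(97, 30),
        "genre_index": tag[127],
    }

def pad(value, length):
    """Encode a string and pad it with NUL bytes to a fixed width."""
    return value.encode("latin-1").ljust(length, b"\x00")

# Placeholder audio bytes followed by a hand-made tag (demo data only).
fake_file = (
    b"\xff\xfb" + b"\x00" * 100
    + b"TAG" + pad("Demo Song", 30) + pad("Demo Artist", 30)
    + pad("Demo Album", 30) + pad("2024", 4) + pad("", 30) + bytes([17])
)

print(parse_id3v1(fake_file))
```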

Q 7. Discuss the trade-offs between audio quality and file size in various compression algorithms.

The trade-off between audio quality and file size is a central challenge in audio compression. Lossless codecs like FLAC prioritize quality, resulting in larger files. Lossy codecs like MP3 and AAC aggressively reduce file size but sacrifice quality. The extent of the trade-off depends on the specific codec and its settings (bitrate, sampling rate, etc.). For example, a high bitrate MP3 will sound better than a low bitrate MP3 but will also have a larger file size. The optimal balance depends on individual needs; a music streaming service might prioritize smaller file sizes for efficient delivery, while a professional audio engineer requires pristine, uncompressed WAV files for mastering.
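The size side of this trade-off is simple arithmetic for constant-bitrate audio. The sketch below assumes a hypothetical four-minute track and ignores metadata and container overhead.

```python
def encoded_size_mb(bitrate_kbps, seconds):
    """Approximate size of a constant-bitrate stream in MB (10**6 bytes)."""
    return bitrate_kbps * 1000 * seconds / 8 / 1_000_000

track_seconds = 4 * 60  # a hypothetical four-minute track

options = [
    ("MP3 128 kbps", 128),
    ("MP3 320 kbps", 320),
    ("CD-quality PCM (1411.2 kbps)", 1411.2),
]
for label, kbps in options:
    print(label, round(encoded_size_mb(kbps, track_seconds), 2), "MB")
```

At 128 kbps the track is under 4 MB, at 320 kbps just under 10 MB, and as uncompressed CD-quality PCM a little over 42 MB.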

Q 8. How does perceptual coding work in audio compression?

Perceptual coding is the cornerstone of efficient lossy audio compression. Instead of discarding data indiscriminately, it exploits the limitations of human hearing to remove or reduce information that’s inaudible or masked by other sounds. Think of it like this: if you’re listening to a loud rock song, you’re unlikely to notice a faint whisper in the background. Perceptual codecs identify these inaudible or masked components and either encode them with fewer bits or remove them entirely.

This process involves psychoacoustic models, which analyze the audio signal to predict which parts are perceptually significant and which are not. These models consider factors like frequency masking (louder sounds masking quieter sounds nearby), temporal masking (sounds masking sounds immediately before or after them), and the absolute threshold of hearing (the quietest sound a person can hear).

For example, MP3 uses perceptual coding extensively. It analyzes the audio and discards information deemed insignificant to the human ear, leading to smaller file sizes compared to uncompressed formats like WAV.

Q 9. Explain the process of audio mastering and its relevance to file formats.

Audio mastering is the final stage of audio production, where the audio is optimized for its intended playback environment. It’s like putting the finishing touches on a painting before it’s displayed in a gallery. The goal is to achieve the best possible sound quality and consistency across various playback systems. This includes tasks like equalization (adjusting the balance of frequencies), compression (reducing the dynamic range), limiting (preventing clipping or distortion), and stereo widening (creating a wider soundstage).

The choice of file format is crucial in mastering. Lossless formats like WAV or AIFF are preferred for the mastering process itself because they preserve all the audio data without any loss of quality. Once mastering is complete, the audio can be encoded into lossy formats like MP3 or AAC for distribution, with the mastering engineer carefully selecting the bitrate to strike a balance between file size and audio quality.

For instance, a mastering engineer might create a high-resolution WAV file for archival purposes, then create various versions in different formats (e.g., MP3 320kbps, AAC 256kbps) for distribution on different platforms.

Q 10. What are some common challenges in audio compression and how are they addressed?

Audio compression, while invaluable for reducing file sizes, presents several challenges. One major challenge is achieving a balance between compression ratio and audio quality. High compression ratios lead to smaller files but can result in noticeable artifacts, such as a loss of clarity or the introduction of distortion. This is particularly noticeable in lossy compression techniques.

Another challenge is maintaining consistency across different codecs and devices. The same compressed audio file might sound different when played back on various devices or using different software players due to variations in decoding algorithms or hardware limitations.

These challenges are addressed through several strategies. Advanced psychoacoustic models in newer codecs are designed to improve the efficiency of compression without sacrificing as much audio quality. Moreover, using higher bitrates in lossy formats mitigates quality degradation. Standardization efforts, such as those for audio codecs and container formats, help to ensure more consistent playback across different platforms.

Q 11. Compare and contrast different audio container formats (e.g., MP4, MOV, etc.).

Audio container formats, like MP4 and MOV, are essentially wrappers that hold the audio data along with other data like video, subtitles, and metadata. They’re not audio codecs themselves but rather define how the audio (and other data) are structured within the file.

MP4 (MPEG-4 Part 14) is a very common container format supporting a wide variety of audio and video codecs, including AAC, MP3, and HE-AAC. Its widespread compatibility makes it a popular choice for web distribution.

MOV (QuickTime File Format) is another container format, largely associated with Apple products. While it can support many of the same codecs as MP4, its adoption is not as universally widespread.

The key difference is largely in compatibility and prevalence. MP4 has become a near-universal standard, while MOV is more commonly found in Apple’s ecosystem. Both can hold the same audio data encoded with the same codec; the difference lies solely in the container which holds that data and other associated metadata.

Q 12. Describe your experience with various audio editing software and their handling of different formats.

My experience includes extensive use of professional audio editing software like Pro Tools, Logic Pro X, and Audacity. Each software handles different formats differently, primarily based on the codecs it supports and its ability to perform lossless editing.

Pro Tools, for instance, excels in handling high-resolution audio formats and offers robust features for mastering and mixing. Logic Pro X provides a comprehensive suite of tools, including advanced effects processing, and handles a wide array of formats. Audacity is free and approachable for beginners; it handles high sample rates and 32-bit float audio, but it lacks many of the advanced mixing and workflow features of the commercial DAWs, and some formats rely on optional libraries such as FFmpeg.

For example, in Pro Tools, I routinely work with WAV and AIFF files for lossless editing, then export to MP3 or AAC for distribution. In Audacity, I’ve successfully edited and exported MP3, WAV, and OGG files for simpler projects.

Q 13. How do you ensure audio file compatibility across different platforms and devices?

Ensuring audio file compatibility across different platforms and devices involves carefully considering the choice of codec and container format. Using widely supported codecs like AAC or MP3 increases compatibility. Sticking to common container formats like MP4 also improves chances of broader compatibility.

Further, accurate header and stream information improves playback consistency. The container or codec header records properties such as the sample rate, bit depth, and number of channels, which the playback device needs in order to interpret and render the audio correctly. Finally, testing the audio across various devices and platforms is crucial to identify any compatibility issues and ensure a consistent listening experience for the end user.
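As an illustration of the stream parameters a player depends on, the following sketch writes a short WAV file to memory with Python's standard `wave` module and then reads its header back. The one second of silence is placeholder data.

```python
import io
import wave

# Write one second of 16-bit stereo silence to an in-memory WAV file.
buffer = io.BytesIO()
with wave.open(buffer, "wb") as writer:
    writer.setnchannels(2)
    writer.setsampwidth(2)        # bytes per sample, so 2 means 16-bit
    writer.setframerate(44_100)
    writer.writeframes(b"\x00" * (44_100 * 2 * 2))

# Read the header back, as a player would before decoding.
buffer.seek(0)
with wave.open(buffer, "rb") as reader:
    info = {
        "channels": reader.getnchannels(),
        "bit_depth": reader.getsampwidth() * 8,
        "sample_rate": reader.getframerate(),
        "duration_s": reader.getnframes() / reader.getframerate(),
    }

print(info)
```

A quick check like this during testing can catch files whose declared parameters differ from what the target device supports.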

Q 14. Explain the concept of psychoacoustic modeling in audio compression.

Psychoacoustic modeling is a crucial component of perceptual coding in audio compression. It’s based on our understanding of how the human auditory system processes sound. These models mathematically represent the characteristics of human hearing, such as frequency masking, temporal masking, and the absolute threshold of hearing.

By analyzing the audio signal, a psychoacoustic model identifies frequencies or portions of the audio that are likely to be masked by other sounds or to fall below the threshold of hearing. The audio codec then uses this information to allocate fewer bits to these perceptually insignificant parts or to remove them altogether, resulting in significant data reduction with little or no noticeable decrease in perceived audio quality. Different codecs use different psychoacoustic models, leading to variations in compression efficiency and perceived audio quality.

For example, the MPEG audio codecs (including MP3 and AAC) use sophisticated psychoacoustic models to achieve high compression ratios while minimizing perceptible artifacts.
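One ingredient of such a model, the absolute threshold of hearing, can be sketched with Terhardt's widely cited approximation. This is only a single component: real codecs combine it with masking calculations, and the mapping from digital levels to dB SPL depends on playback volume, which the sketch simply assumes is known.

```python
import math

def threshold_in_quiet_db_spl(freq_hz):
    """Terhardt's approximation of the absolute threshold of hearing."""
    khz = freq_hz / 1000.0
    return (
        3.64 * khz ** -0.8
        - 6.5 * math.exp(-0.6 * (khz - 3.3) ** 2)
        + 1e-3 * khz ** 4
    )

def is_below_threshold(freq_hz, level_db_spl):
    """True if a tone at this level would be inaudible in quiet."""
    return level_db_spl < threshold_in_quiet_db_spl(freq_hz)

for freq in (100, 1000, 3300, 16000):
    print(freq, round(threshold_in_quiet_db_spl(freq), 1))
```

The curve is lowest around 3 to 4 kHz, where hearing is most sensitive, and rises steeply at very low and very high frequencies, which is why a codec can spend fewer bits there.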

Q 15. Describe your experience working with embedded systems and audio compression.

My experience with embedded systems and audio compression spans several projects. I’ve worked extensively on resource-constrained devices, such as microcontrollers in hearing aids and digital audio players. In these scenarios, efficient audio compression is paramount. I’ve implemented codecs like AAC and Opus, optimizing their configurations for minimal latency and power consumption. For example, in a hearing aid project, we utilized a custom-built AAC encoder that prioritized low-complexity algorithms to minimize CPU usage, enabling longer battery life. In another project involving a portable music player, I optimized the Opus decoder to minimize buffer underruns, which prevented audio dropouts during playback.

This involved careful consideration of memory allocation, data structures, and bit-manipulation techniques. I also have experience profiling code for performance bottlenecks and implementing low-power optimizations. This often involves trade-offs between compression ratio and computational complexity, requiring a deep understanding of the underlying algorithms.

Q 16. What are the key considerations for selecting an appropriate audio format for a given application?

Choosing the right audio format depends heavily on the application’s requirements. Key considerations include:

- File size: Lossless formats like WAV or FLAC offer pristine quality but are large. Lossy formats like MP3, AAC, or Opus are smaller but compromise quality. Consider storage space and bandwidth constraints.

- Audio quality: High-fidelity applications like studio mastering need lossless formats. Streaming services might use highly compressed formats like Opus for efficient delivery.

- Computational resources: Encoding and decoding require processing power. Resource-constrained devices may benefit from codecs or codec profiles with lower computational complexity. For example, AAC-LC is cheaper to decode than HE-AAC, and FLAC decoding is lightweight but needs more storage and bandwidth than a lossy format.

- Compatibility: Consider the target playback devices and software. Wider compatibility is achieved with more common formats like MP3. However, niche applications might favor codecs that offer superior quality or features.

- Latency requirements: Real-time applications like voice chat demand low-latency codecs. Streaming applications might tolerate higher latency for better compression.

For example, a music streaming service might choose AAC or Opus for their balance of quality and compression, while professional audio editing software would prefer WAV or FLAC for lossless editing.

Q 17. How do you handle audio file corruption or damage?

Handling audio file corruption requires a multi-pronged approach. The first step is identifying the type and extent of the damage. This might involve using file repair tools that can detect and fix minor errors in the file structure. Some file formats include built-in error detection; FLAC, for example, stores per-frame checksums and an MD5 signature of the decoded audio, which makes it possible to pinpoint damaged frames.

If repair tools are unsuccessful, more advanced techniques may be needed. If only a small portion of the file is corrupted, we might attempt to salvage the undamaged sections. This involves carefully examining the file’s structure and removing the corrupted parts, potentially resulting in an incomplete but usable audio file.

For severe damage, recovery might be impossible. The best prevention is regular backups and the use of robust storage systems. Data redundancy and error-correcting codes help ensure data integrity.
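A simple way to detect corruption early is to record a checksum when a file is archived and compare it later. The sketch below uses SHA-256 from Python's standard `hashlib`; the byte strings stand in for real file contents.

```python
import hashlib

def sha256_of(data: bytes) -> str:
    """Hex digest used as a fingerprint of the file contents."""
    return hashlib.sha256(data).hexdigest()

original = bytes(range(256)) * 4           # placeholder for real file contents
recorded_checksum = sha256_of(original)    # stored alongside the backup

# Simulate a single flipped bit somewhere in the file.
damaged = bytearray(original)
damaged[500] ^= 0x01

intact_matches = sha256_of(original) == recorded_checksum
damaged_matches = sha256_of(bytes(damaged)) == recorded_checksum
print(intact_matches, damaged_matches)
```

A checksum only detects damage; recovering the data still depends on having a backup or redundancy.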

Q 18. Explain the process of converting between different audio file formats.

Converting between audio file formats involves two main steps. First, the source file is decoded into its raw audio data (PCM samples). Then, this raw data is encoded into the target format. If the target uses a different sample rate or bit depth, the conversion also involves resampling or requantizing the samples. Many tools (like ffmpeg) handle this automatically.

    ffmpeg -i input.wav -c:a libmp3lame -b:a 128k output.mp3

This command uses ffmpeg to convert a WAV file (input.wav) to an MP3 file (output.mp3) with a bitrate of 128 kbps. The process is computationally intensive, especially for high-resolution audio files and complex codecs.

Quality can be affected during conversion, particularly when converting between lossy formats. Converting from a lossless to a lossy format introduces data loss; the reverse conversion does not recover lost information.
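For batch conversions, the same ffmpeg call can be driven from a script. The sketch below is one possible wrapper: `build_ffmpeg_cmd` and `convert` are hypothetical helper names, and `-c:a` and `-b:a` are ffmpeg's options for the audio codec and audio bitrate. It assumes ffmpeg is installed with the `libmp3lame` encoder.

```python
import shutil
import subprocess

def build_ffmpeg_cmd(src, dst, codec="libmp3lame", bitrate="128k"):
    """Build an ffmpeg argument list (hypothetical helper, not part of ffmpeg)."""
    return ["ffmpeg", "-i", src, "-c:a", codec, "-b:a", bitrate, dst]

def convert(src, dst, **options):
    """Run the conversion; raises if ffmpeg is missing or exits with an error."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg not found on PATH")
    subprocess.run(build_ffmpeg_cmd(src, dst, **options), check=True)

cmd = build_ffmpeg_cmd("input.wav", "output.mp3")
print(" ".join(cmd))
```

Passing the arguments as a list rather than a single shell string avoids quoting problems with file names that contain spaces.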

Q 19. Discuss your experience with audio streaming technologies and their compression techniques.

My experience with audio streaming technologies includes working with protocols like RTP (Real-time Transport Protocol) and codecs optimized for streaming, such as Opus, AAC-LC, and HE-AAC. These codecs balance compression efficiency with low latency. Opus, in particular, is designed for low-latency streaming and handles a wide range of bitrates and bandwidth conditions.

Streaming introduces challenges beyond simple file compression. Network conditions (jitter, packet loss) need to be addressed. Techniques like forward error correction (FEC) and adaptive bitrate streaming (ABR) are crucial for reliable audio delivery. ABR dynamically adjusts the bitrate based on network conditions to maintain a smooth listening experience. Proper buffer management is also essential to avoid interruptions.
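The core decision in adaptive bitrate streaming can be sketched in a few lines. The bitrate ladder and the 0.8 safety factor below are illustrative assumptions, not values from any particular streaming service or protocol.

```python
# Hypothetical bitrate ladder in kbps, lowest to highest.
LADDER_KBPS = [32, 64, 96, 128, 256]

def pick_bitrate(measured_kbps, ladder=LADDER_KBPS, safety=0.8):
    """Pick the highest rung that fits within a safety margin of throughput."""
    budget = measured_kbps * safety
    fitting = [rate for rate in ladder if rate <= budget]
    # Fall back to the lowest rung when nothing fits.
    return max(fitting) if fitting else min(ladder)

for throughput in (1000, 150, 90, 20):
    print(throughput, "->", pick_bitrate(throughput))
```

Production players typically also consider buffer level and smooth out throughput estimates to avoid switching too often.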

Q 20. How does compression affect audio latency?

Compression affects audio latency in two ways, and the relationship isn’t always straightforward. The larger contribution is usually algorithmic delay: a codec must collect a full frame of samples (plus any look-ahead) before it can encode, so larger frames mean more delay regardless of processor speed. The second is processing time, since more complex codecs need more computation to encode the signal and to reconstruct it from the compressed data.

Choosing a codec with short frames and a fast decoder is crucial for low-latency applications. Real-time applications such as voice chat need codecs that minimize both algorithmic delay and processing time, and this often involves a trade-off: the techniques that achieve the highest compression efficiency, such as long analysis windows, tend to add latency.

In contrast, applications like offline music playback can tolerate far higher latency in exchange for better compression, because a short delay before playback starts goes unnoticed.
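One contributor to codec latency that is easy to quantify is frame duration, since an encoder has to gather a full frame before it can emit anything. The sketch below uses the usual frame sizes of 1152 samples for MP3, 1024 for AAC-LC, and 960 samples for a 20 ms Opus frame at 48 kHz; total latency in practice also includes look-ahead, buffering, and network delay.

```python
def frame_duration_ms(samples_per_frame, sample_rate_hz):
    """Time spanned by one codec frame; a lower bound on buffering delay."""
    return 1000.0 * samples_per_frame / sample_rate_hz

mp3_ms = frame_duration_ms(1152, 44_100)
aac_ms = frame_duration_ms(1024, 44_100)
opus_ms = frame_duration_ms(960, 48_000)

print(round(mp3_ms, 2), round(aac_ms, 2), round(opus_ms, 2))
```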

Q 21. What are some common issues related to audio file synchronization?

Audio file synchronization issues can stem from various sources. In multi-track recordings, timing discrepancies between tracks (drift) can occur due to slight variations in clock speeds or recording equipment. This manifests as audio tracks getting out of sync over time. Precise synchronization requires careful attention to clock accuracy during recording and professional audio editing software to align the tracks.
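The size of this drift is easy to estimate. The sketch below assumes two recorders whose clocks differ by a fixed number of parts per million (ppm); the 50 ppm figure is a hypothetical example, not a measured value.

```python
def drift_ms(duration_s, clock_error_ppm):
    """Offset accumulated between two clocks that differ by the given ppm.

    seconds * ppm / 1e6 gives seconds of drift; times 1000 gives ms,
    which simplifies to seconds * ppm / 1000.
    """
    return duration_s * clock_error_ppm / 1000

one_hour = 3600
hour_drift = drift_ms(one_hour, 50)
print(hour_drift)
```

After one hour, a 50 ppm mismatch puts the two tracks 180 ms apart, which is clearly audible as an echo or lip-sync error.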

Network-based audio synchronization also faces latency variations due to network jitter and packet loss. Precise synchronization in streaming applications often utilizes techniques like timestamping and buffering to minimize the effects of network variations.

Another common issue is the lack of proper metadata (timing information) in audio files, leading to synchronization problems when embedding them in multimedia applications like videos. Careful handling of metadata during the creation and editing process is important to avoid synchronization errors.

Q 22. Explain your understanding of different audio compression standards (e.g., MPEG, Opus).

Audio compression standards aim to reduce the size of audio files without significantly impacting perceived audio quality. Let’s explore some key examples:

- MPEG (Moving Picture Experts Group): This family of standards includes several audio codecs, most notably MPEG-1 Audio Layer III (MP3), which uses perceptual coding to discard sounds deemed inaudible to the human ear. MP3 offers a good balance between file size and quality but can introduce artifacts at low bitrates. AAC (Advanced Audio Coding), standardized in MPEG-2 and extended in MPEG-4, is its successor and generally offers better quality than MP3 at the same bitrate.

- Opus: A modern, royalty-free codec designed for both music and speech. Opus is highly versatile, adapting well to various bitrates and audio content. It often outperforms both MP3 and AAC in terms of quality at lower bitrates, making it ideal for streaming applications where bandwidth is a concern. It also handles variable bitrate streams very well, and its bitrate can be adjusted on the fly in response to network conditions.

The choice of codec depends on the specific application. For high-fidelity music, AAC or even uncompressed formats might be preferred. For streaming or applications where file size is paramount, Opus excels. Consider factors such as desired quality, file size limitations, and compatibility with target devices when making your decision.

Q 23. How do you optimize audio files for web delivery?

Optimizing audio for web delivery is crucial for a positive user experience. It involves balancing audio quality and file size to ensure fast loading times and minimal buffering. Key strategies include:

- Choosing the right codec: Opus is a strong contender for its efficiency and broad compatibility. AAC is also a popular and well-supported choice.

- Selecting appropriate bitrate: Higher bitrates result in better quality but larger files. Experiment to find the sweet spot where quality meets acceptable loading times. Consider using a variable bitrate (VBR) encoding, which allocates more bits to complex passages and fewer to simpler ones, optimizing file size while maintaining quality.

- Pre-processing: Techniques like noise reduction and equalization can improve the perceived quality and allow for lower bitrates without significant quality loss. However, be careful not to over-process, as this can introduce undesirable artifacts.

- Using appropriate container formats: MP4 is a versatile and widely supported container format for web delivery. It efficiently handles metadata and supports different codecs.

- Compression level: Different codecs allow varying degrees of compression. Higher compression results in smaller files, but potentially lower quality. Experimentation is key to finding the ideal balance.

Remember to test your optimized audio across different browsers and devices to ensure a consistent user experience.

Q 24. Describe your experience with audio quality assessment and measurement tools.

I have extensive experience using various audio quality assessment tools. These range from subjective listening tests, where human listeners rate the quality of different audio files, to objective measurement tools that analyze audio waveforms and spectral properties. Some tools I’ve used include:

- Spectrogram analyzers: These tools visualize the frequency content of audio signals, allowing me to identify artifacts and distortion introduced during compression or processing.

- Signal-to-noise ratio (SNR) meters: These measure the ratio of the desired audio signal to background noise, providing an objective indication of audio clarity.

- Perceptual evaluation of audio quality (PEAQ): This standardized algorithm predicts the perceived quality of audio based on objective measurements, providing a numerical score that correlates well with subjective listening tests.

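- ABX testing software: This allows for blind comparison of two audio versions. The listener hears A, B, and an unknown X and must identify whether X is A or B, which shows whether a difference is reliably audible at all rather than which version sounds better.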

Combining objective measurements with subjective listening tests is critical for a thorough and reliable assessment of audio quality. Objective measurements provide a quantitative basis, while subjective listening ensures the results align with human perception.
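As a small example of an objective measurement, signal-to-noise ratio can be computed by treating the difference between a reference and a processed signal as noise. The test tone and the rounding used to degrade it are made up for illustration; this is a plain SNR, not a perceptual metric like PEAQ.

```python
import math

def snr_db(reference, degraded):
    """SNR in dB, treating (degraded - reference) as the noise."""
    signal_power = sum(r * r for r in reference)
    noise_power = sum((d - r) ** 2 for r, d in zip(reference, degraded))
    if noise_power == 0:
        return math.inf
    return 10 * math.log10(signal_power / noise_power)

# A made-up test tone and a copy rounded to one decimal place.
tone = [math.sin(2 * math.pi * 5 * n / 200) for n in range(200)]
rounded = [round(x, 1) for x in tone]

print(round(snr_db(tone, rounded), 1))
```

A high SNR does not guarantee good perceived quality, which is why it is paired with listening tests.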

Q 25. What is your experience with Dolby Digital or DTS compression?

I’m proficient with both Dolby Digital and DTS compression technologies. Both are widely used in home theater and professional audio applications. The core formats are lossy codecs optimized for multi-channel audio; both families also include lossless extensions (Dolby TrueHD and DTS-HD Master Audio).

- Dolby Digital (AC-3): A widely adopted standard known for its efficiency and compatibility. It’s commonly found in DVDs, Blu-rays, and streaming services. It utilizes psychoacoustic modeling similar to MP3 to reduce file size.

- DTS (Digital Theater Systems): A competing technology with a different approach to compression. It typically runs at higher bitrates than Dolby Digital, which some listeners credit with slightly better clarity. Also prevalent in home cinema systems and on disc formats.

My experience includes working with these formats in encoding, decoding, and troubleshooting. Understanding their intricacies, such as bitstream structures and channel configuration, is crucial for effective implementation and problem-solving.

Q 26. Explain the role of quantization in audio compression.

Quantization is a fundamental step in digital audio and in almost all lossy compression techniques. It maps a continuous range of values onto a discrete set of levels. Imagine a smooth curve representing the sound wave; quantization converts that smooth curve into a series of steps. In digitization it is applied to sample amplitudes, while in lossy codecs it is typically applied to frequency-domain coefficients, with the step size guided by the psychoacoustic model.

The number of steps available determines the resolution. A higher number of quantization levels results in finer resolution and improved fidelity (less loss of information), but larger file sizes. Lower numbers of levels lead to coarser resolution and potentially audible artifacts, but smaller file sizes. This trade-off between file size and audio quality is at the heart of audio compression.

In simpler terms, think of it like rounding a number. If you round to the nearest whole number, you lose some precision and cannot get it back. Audio quantization is irreversible in the same way, which is why it is the step where lossy codecs actually discard information. The sophisticated algorithms in various audio codecs aim to minimize the perceptual impact of this loss.
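The rounding analogy can be made concrete with a minimal uniform quantizer. The sketch below assumes input values in the range -1 to 1 and a step size of 2 / 2^bits; real codecs use non-uniform and adaptive step sizes, so this only shows the basic relationship between resolution and error.

```python
def quantize(x, bits):
    """Round x (expected in [-1, 1]) to the nearest multiple of the step size."""
    step = 2.0 / (2 ** bits)
    return round(x / step) * step

def max_error(bits, points=1001):
    """Largest rounding error over evenly spaced test values in [-1, 1]."""
    xs = [-1 + 2 * i / (points - 1) for i in range(points)]
    return max(abs(x - quantize(x, bits)) for x in xs)

for bits in (4, 8, 16):
    print(bits, max_error(bits))
```

The error is bounded by half a step, so each extra bit roughly halves the worst-case error.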

Q 27. How would you troubleshoot a problem with an audio file that won’t play correctly?

Troubleshooting audio playback issues requires a systematic approach. My strategy involves these steps:

- Identify the specific problem: Is the file completely unplayable, or are there specific issues like distortion or crackling? Note any error messages displayed.

- Check file integrity: Verify the file hasn’t been corrupted. Try playing it on different devices or software to rule out issues with a specific player.

- Examine file format and codec: Ensure the player supports the file’s format and codec. If not, use a converter to change the format or try different software.

- Check audio hardware: Test with different audio devices (headphones, speakers) to rule out hardware issues.

- Review system resources: If you are experiencing issues with streaming audio, check your network connection and system performance. Insufficient RAM or processing power can lead to playback problems.

- Seek expert assistance: If the issue persists after checking these aspects, consult with community forums, the software provider, or an audio expert for further assistance.

Effective troubleshooting often involves careful observation and methodical elimination of potential causes.
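When checking the format, it helps to look at the file's first bytes rather than trusting the extension. The sketch below checks a few well-known signatures; `sniff_audio_format` is a hypothetical helper, and it is far from exhaustive, so a tool such as ffprobe remains the better choice for a definitive answer.

```python
def sniff_audio_format(header: bytes) -> str:
    """Guess a container or format from the first bytes of a file."""
    if header[:4] == b"RIFF" and header[8:12] == b"WAVE":
        return "wav"
    if header[:4] == b"fLaC":
        return "flac"
    if header[:4] == b"OggS":
        return "ogg"
    if header[:3] == b"ID3":
        return "mp3 (ID3v2 tag)"
    if header[4:8] == b"ftyp":
        return "mp4/m4a"
    # An MPEG audio frame starts with 11 set bits (the frame sync).
    if len(header) >= 2 and header[0] == 0xFF and (header[1] & 0xE0) == 0xE0:
        return "mpeg audio frame"
    return "unknown"

print(sniff_audio_format(b"RIFF\x24\x00\x00\x00WAVEfmt "))
print(sniff_audio_format(b"fLaC\x00\x00\x00\x22"))
print(sniff_audio_format(b"not audio"))
```

A file named `song.mp3` that reports `wav` or `unknown` here points to a mislabeled or damaged file rather than a player problem.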

Q 28. Describe your understanding of dynamic range compression and its use in audio.

Dynamic range compression (DRC) reduces the difference between the loudest and quietest parts of an audio signal. Think of it like squeezing the dynamic range to make the quiet parts louder and the loud parts quieter. It’s often used to make audio sound more consistent and louder, particularly in environments with varying background noise levels or for listening on smaller, portable devices.

How it works: DRC uses a compressor that attenuates (reduces) the amplitude of the signal when it exceeds a threshold. A ratio parameter determines how much the signal is reduced. For example, a 4:1 ratio means that for every 4 dB increase above the threshold, the output signal increases by only 1 dB. A gain parameter offsets the overall level. The resulting signal has a reduced dynamic range, making it perceptually louder and more balanced. This is often applied during mastering or for broadcast use.
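The threshold, ratio, and gain parameters can be sketched as a static gain curve. This is a simplified hard-knee model working on levels in dB; it leaves out attack and release times, which real compressors use to smooth gain changes, and the default values are arbitrary.

```python
def compress_db(level_db, threshold_db=-20.0, ratio=4.0, makeup_db=0.0):
    """Static hard-knee compressor curve: input level in dB to output level in dB."""
    if level_db <= threshold_db:
        out = level_db
    else:
        # Above the threshold, every `ratio` dB of input yields 1 dB of output.
        out = threshold_db + (level_db - threshold_db) / ratio
    return out + makeup_db

for level in (-40, -20, -12, 0):
    print(level, "->", compress_db(level))
```

With a -20 dB threshold and a 4:1 ratio, an input 8 dB above the threshold comes out only 2 dB above it, and a full-scale input is reduced to -15 dB before any make-up gain.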

Use in audio: DRC is widely used in various scenarios, including:

- Music mastering: To make music more suitable for various listening environments like radio or portable devices.

- Broadcast audio: To ensure consistent loudness across different programs.

- Live sound reinforcement: To make speech or music more easily audible in noisy environments.

While it can improve the perceived loudness and clarity, overuse of DRC can lead to a less dynamic and less natural-sounding audio with reduced detail. Finding the right balance is key to its successful application.

Key Topics to Learn for Audio File Formats and Compression Interview

- Lossless vs. Lossy Compression: Understand the fundamental differences, trade-offs in quality and file size, and appropriate applications for each (e.g., FLAC vs. MP3).

- Common Audio File Formats: Become proficient in the characteristics, strengths, and weaknesses of formats like WAV, AIFF, MP3, AAC, FLAC, and Ogg Vorbis. Be prepared to discuss their use cases and suitability for different applications (e.g., music mastering, podcasting, streaming).

- Codec Principles: Grasp the underlying principles of audio codecs, including quantization, sampling rates, bit depth, and their impact on audio quality and file size. Consider exploring different encoding and decoding techniques.

- Psychoacoustics: Learn how psychoacoustics informs lossy compression techniques. Understand how our perception of sound allows for efficient data reduction without significant audible degradation.

- Bitrate and its Implications: Be able to explain the relationship between bitrate, file size, and audio quality. Discuss the impact of different bitrates on various applications and the optimal settings for different scenarios.

- Metadata and Audio Files: Understand the role of metadata in audio files, including ID3 tags and other relevant information. Discuss the importance of accurate and consistent metadata for organization and searchability.

- Practical Applications: Prepare examples from your experience (projects, personal work) demonstrating your understanding of audio file formats and compression choices in real-world scenarios (e.g., optimizing audio for a website, preparing audio for a specific playback device).

- Troubleshooting Compression Issues: Be ready to discuss common problems encountered during audio compression and your approaches to resolving them (e.g., artifacts, distortion, compatibility issues).

Next Steps

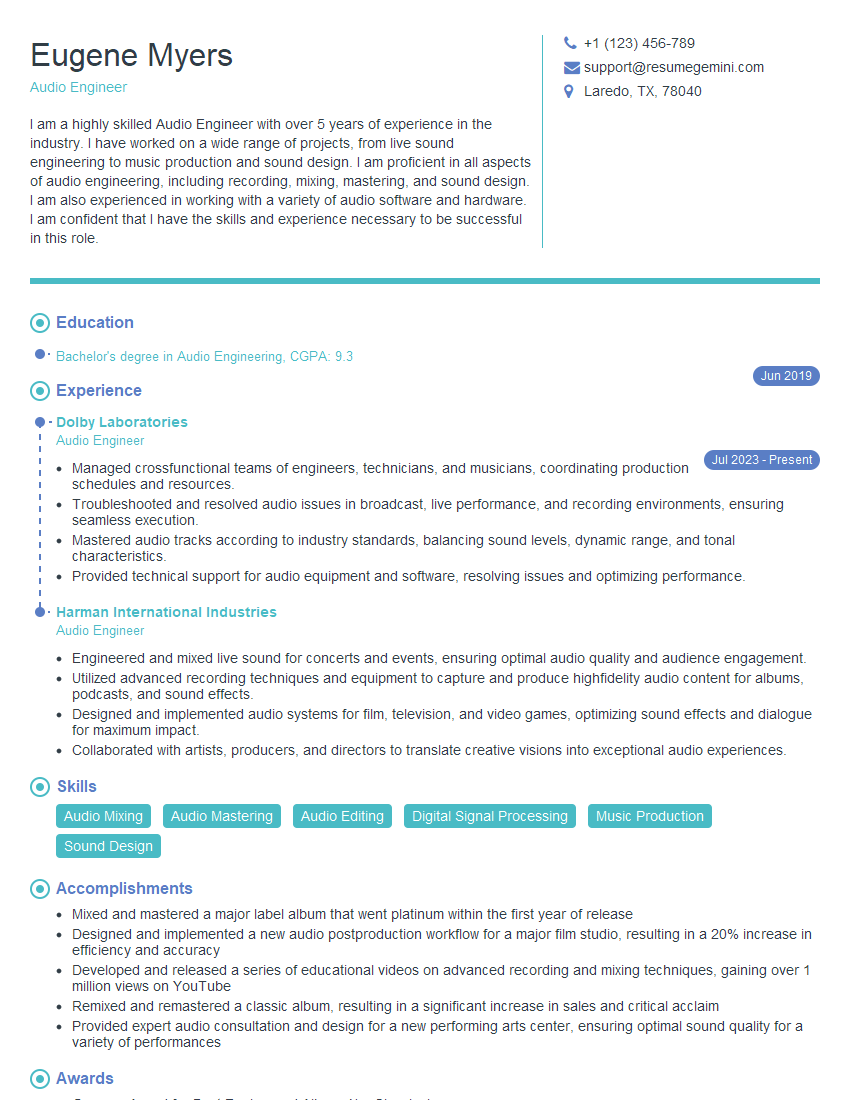

Mastering audio file formats and compression is crucial for career advancement in audio engineering, digital media, and related fields. A strong understanding of these concepts demonstrates technical proficiency and problem-solving skills highly valued by employers. To significantly increase your job prospects, create an ATS-friendly resume that effectively showcases your skills and experience. ResumeGemini is a trusted resource for building professional, impactful resumes. We provide examples of resumes tailored to Audio File Formats and Compression to help you craft a compelling application that stands out from the competition.
