Preparation is the key to success in any interview. In this post, we’ll explore crucial Bioinformatics Tools (e.g., SAMtools, BWA, GATK) interview questions and equip you with strategies to craft impactful answers. Whether you’re a beginner or a pro, these tips will elevate your preparation.

Questions Asked in Bioinformatics Tools (e.g., SAMtools, BWA, GATK) Interview

Q 1. Explain the purpose of SAMtools and its key functionalities.

SAMtools is a suite of utilities for manipulating and analyzing sequence alignment data in the SAM/BAM format. Think of it as a powerful toolbox for managing and interpreting the results of genome sequencing experiments. Its core purpose is to efficiently handle and process massive datasets, allowing researchers to extract meaningful biological insights.

- Viewing alignments: samtools view allows you to inspect alignments in the SAM or BAM format, filter them based on various criteria, and convert between formats.

- Sorting alignments: samtools sort efficiently sorts alignments by genomic coordinates or read names, essential for downstream analysis.

- Indexing alignments: samtools index creates an index file (BAI) for BAM files enabling rapid random access to specific regions, significantly speeding up data retrieval.

- Generating statistics: samtools stats provides summary statistics of an alignment file, such as mapping rates and coverage depth.

- Variant calling: While not its primary function, SAMtools provides basic variant calling capabilities through its mpileup command, which is frequently used as a precursor to more advanced variant callers.

For instance, imagine you have a BAM file containing millions of read alignments. SAMtools allows you to quickly find the alignments in a specific genomic region, calculate the coverage at that region, and identify potential variants. Without SAMtools, handling such data would be incredibly challenging.

Q 2. Describe the difference between SAM and BAM formats.

SAM (Sequence Alignment/Map) and BAM (Binary Alignment/Map) formats both represent sequence alignments, but BAM is a binary version of SAM. Think of it like a compressed file: BAM is a compact, space-saving representation of the same information stored in the human-readable SAM format.

- SAM: Human-readable, text-based format. Easy to inspect and understand but significantly larger in file size and slower to process.

- BAM: Binary format, highly compressed. Much faster to process and requires significantly less storage space. While not human-readable directly, it can be easily converted to SAM with samtools view.

In practice, BAM is the preferred format for storing and analyzing large-scale sequencing data due to its efficiency. Researchers usually work with BAM files but might occasionally convert to SAM for quick visual inspection.
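To make the "human-readable" side concrete, here is a minimal Python sketch that parses one SAM alignment line into its 11 mandatory tab-separated fields. The record itself is made up for illustration, and the `SamRecord` and `parse_sam_line` names are hypothetical helpers, not part of SAMtools.

```python
from dataclasses import dataclass


@dataclass
class SamRecord:
    """The 11 mandatory columns of a SAM alignment line, in file order."""
    qname: str   # read name
    flag: int    # bitwise flags (paired, unmapped, duplicate, ...)
    rname: str   # reference sequence name
    pos: int     # 1-based leftmost mapping position
    mapq: int    # mapping quality
    cigar: str   # alignment description, e.g. "5M"
    rnext: str   # reference name of the mate
    pnext: int   # position of the mate
    tlen: int    # observed template length
    seq: str     # read sequence
    qual: str    # per-base qualities (ASCII-encoded)


def parse_sam_line(line: str) -> SamRecord:
    """Parse the mandatory fields of one alignment line; optional tags are ignored."""
    cols = line.rstrip("\n").split("\t")
    if len(cols) < 11:
        raise ValueError("a SAM alignment line needs at least 11 tab-separated fields")
    q, f, r, p, m, c, rn, pn, t, s, ql = cols[:11]
    return SamRecord(q, int(f), r, int(p), int(m), c, rn, int(pn), int(t), s, ql)


# Hypothetical record, invented for this example.
example = "read1\t99\tchr1\t100\t60\t5M\t=\t150\t55\tACGTA\tIIIII\tNM:i:0"
rec = parse_sam_line(example)
print(rec.rname, rec.pos, rec.mapq)
```

A BAM file carries the same fields in a compressed binary layout, which is why you need a tool such as samtools view (or a library) to read it rather than a plain text split.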

Q 3. How would you use SAMtools to sort a BAM file by coordinate?

Sorting a BAM file by coordinate is a common preprocessing step for many bioinformatics analyses. This ensures that reads aligning to the same genomic location are grouped together, making downstream analyses more efficient and accurate. SAMtools makes this straightforward.

The command is simply:

samtools sort input.bam -o output.bam

Where input.bam is your unsorted BAM file and output.bam is the name of the sorted BAM file you want to create. The -o flag specifies the output file name.

This command will efficiently sort the input BAM file by coordinates and create a new sorted BAM file. This sorted BAM file can then be used for various downstream analyses like variant calling or coverage analysis, where sorted alignments are crucial for efficiency and accuracy.
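If an interviewer asks what "sorted by coordinate" actually means, a small Python sketch can help. It is only a toy model of the ordering, not how SAMtools is implemented: records are ordered by reference sequence in header order (not alphabetically), then by leftmost position, with unmapped reads last. The tuple layout and the function name are assumptions made for this example.

```python
def coordinate_sort(records, reference_order):
    """Order (rname, pos, qname) tuples the way a coordinate sort does.

    reference_order is the list of reference names as they appear in the
    header. Reads with an unknown reference (e.g. '*' for unmapped) go last.
    """
    rank = {name: i for i, name in enumerate(reference_order)}
    unmapped_rank = len(reference_order)
    return sorted(records, key=lambda r: (rank.get(r[0], unmapped_rank), r[1]))


# Hypothetical header order and reads, invented for this example.
header_order = ["chr1", "chr2", "chr10"]
reads = [
    ("chr10", 5, "a"),
    ("chr2", 300, "b"),
    ("*", 0, "c"),
    ("chr1", 50, "d"),
    ("chr2", 20, "e"),
]
sorted_reads = coordinate_sort(reads, header_order)
print([name for _, _, name in sorted_reads])
```

Note that chr2 comes before chr10 here because the header lists it first; a plain alphabetical sort would get that wrong.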

Q 4. Explain the purpose of BWA and its alignment algorithm.

BWA (Burrows-Wheeler Aligner) is a widely used software package for aligning sequencing reads to a reference genome. Imagine you have a puzzle (your genome) and many pieces (your sequencing reads). BWA helps to find the best fit for each piece in the puzzle.

BWA primarily uses the Burrows-Wheeler Transform (BWT), a clever data structure that enables incredibly fast searching for short sequences within a much larger genome. It’s like having a highly optimized index for your genome, allowing BWA to quickly pinpoint potential alignment locations for each read. BWA then uses a scoring scheme to determine the best alignment, considering factors like mismatches and gaps.

BWA offers several alignment algorithms, most notably BWA-MEM and BWA-ALN (also called BWA-backtrack), each with strengths and weaknesses.
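The idea of searching through a Burrows-Wheeler index can be sketched in a few lines of Python. This is a teaching example under strong simplifications: it builds the suffix array naively, stores full occurrence tables, and finds exact matches only. BWA itself uses compressed, sampled structures and tolerates mismatches and gaps, so treat the function names and layout below as hypothetical.

```python
from collections import Counter


def bwt_index(text: str):
    """Build a suffix array and FM-index tables for text (naive construction)."""
    text += "$"  # sentinel, assumed to sort before every other character
    sa = sorted(range(len(text)), key=lambda i: text[i:])
    bwt = "".join(text[i - 1] for i in sa)  # i == 0 wraps around to the sentinel
    counts = Counter(text)
    # first[c]: number of characters in the text strictly smaller than c
    first, total = {}, 0
    for c in sorted(counts):
        first[c] = total
        total += counts[c]
    # occ[c][k]: number of occurrences of c in bwt[:k]
    occ = {c: [0] * (len(bwt) + 1) for c in counts}
    for k, ch in enumerate(bwt):
        for c in counts:
            occ[c][k + 1] = occ[c][k] + (ch == c)
    return sa, first, occ


def find_exact(pattern: str, sa, first, occ):
    """Return sorted 0-based positions of pattern, using backward search."""
    lo, hi = 0, len(sa)  # current suffix-array interval [lo, hi)
    for c in reversed(pattern):
        if c not in first:
            return []
        lo = first[c] + occ[c][lo]
        hi = first[c] + occ[c][hi]
        if lo >= hi:
            return []
    return sorted(sa[lo:hi])


# Toy "genome", invented for this example.
sa, first, occ = bwt_index("GATTACAGATTACA")
print(find_exact("ATTA", sa, first, occ))
```

The point worth making in an interview is that the search cost depends on the read length, not on the genome length, because each pattern character only narrows an interval in the index.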

Q 5. What are the advantages and disadvantages of BWA-MEM compared to BWA-ALN?

BWA-MEM and BWA-ALN are both BWA alignment algorithms, but they cater to different needs.

- BWA-MEM: Designed for reads of roughly 70 bp and longer (e.g., current Illumina paired-end reads). It performs local alignment, can report split alignments, and handles longer indels and repetitive regions better. It is the recommended choice for most applications.

- BWA-ALN (BWA-backtrack): The original algorithm, designed for short Illumina reads up to about 100 bp. It is run in two steps (aln, then samse or sampe) and is less accurate for longer indels and repetitive regions.

Advantages of BWA-MEM: Higher accuracy, especially with longer reads and repetitive regions; better handling of indels; according to the BWA documentation it is also generally faster than BWA-ALN for high-quality reads.

Disadvantages of BWA-MEM: Not designed for very short reads (well below about 70 bp).

Advantages of BWA-ALN: Still a reasonable option for very short reads, where BWA-MEM was not designed to operate.

Disadvantages of BWA-ALN: Lower accuracy for longer reads, indels, and repetitive regions, plus a more cumbersome two-step workflow. In most modern applications, BWA-MEM is the better choice on both speed and accuracy.

Q 6. How would you assess the quality of BWA alignments?

Assessing the quality of BWA alignments involves evaluating several key metrics. This is similar to checking your work after solving a puzzle – did you put the pieces together correctly?

- Mapping quality (MAPQ): A phred-scaled score reflecting the confidence in the alignment. Higher MAPQ scores indicate better alignments.

- Number of mismatches: The number of differences between the read and the reference genome indicates alignment quality. Fewer mismatches usually suggest a better alignment.

- Alignment length: Longer alignments generally indicate higher confidence unless caused by repetitive regions.

- Properly paired reads (for paired-end data): Checks if paired-end reads align to the genome in the expected orientation and distance.

- Visual inspection (optional): Using tools like IGV (Integrative Genomics Viewer) allows visual inspection of alignments to identify potential errors or artifacts.

By examining these factors, you gain insight into the reliability of your alignment data and how that might influence downstream analysis. Poor quality alignments can lead to inaccurate variant calling or gene expression quantification.
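Two of these checks are easy to illustrate in Python: converting a Phred-scaled MAPQ into an error probability, and reading the "properly paired" bit from the SAM FLAG field. The sketch below works on plain (flag, mapq) pairs rather than a real BAM file, and the `summarize` helper and its 20-point MAPQ cutoff are assumptions chosen for illustration.

```python
def mapq_to_error_prob(mapq: int) -> float:
    """Phred scale: MAPQ = -10 * log10(P(the mapping position is wrong))."""
    return 10 ** (-mapq / 10)


# Selected SAM FLAG bits, as defined in the SAM specification.
FLAG_PROPER_PAIR = 0x2
FLAG_UNMAPPED = 0x4


def summarize(alignments, min_mapq=20):
    """alignments: iterable of (flag, mapq) pairs. Returns simple QC fractions."""
    total = mapped = proper = confident = 0
    for flag, mapq in alignments:
        total += 1
        if flag & FLAG_UNMAPPED:
            continue
        mapped += 1
        if flag & FLAG_PROPER_PAIR:
            proper += 1
        if mapq >= min_mapq:
            confident += 1
    if total == 0:
        return {"mapped": 0.0, "properly_paired": 0.0, "confident": 0.0}
    return {
        "mapped": mapped / total,
        "properly_paired": proper / total,
        "confident": confident / total,
    }


# Hypothetical (flag, mapq) values, invented for this example.
qc = summarize([(99, 60), (147, 60), (4, 0), (65, 5)])
print(qc)
```

On real data you would normally get the same kind of summary from samtools flagstat or samtools stats; the sketch only shows what those numbers mean.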

Q 7. Describe the GATK pipeline for variant calling.

The GATK (Genome Analysis Toolkit) pipeline for variant calling is a sophisticated workflow used to identify single nucleotide polymorphisms (SNPs) and insertions/deletions (Indels) from sequencing data. It’s a multi-step process, akin to carefully assembling and verifying the pieces of a very complex puzzle.

- Read alignment: This is done using an aligner like BWA. The output is usually a BAM file.

- Read sorting and indexing: Using SAMtools to create a sorted and indexed BAM file.

- Indel realignment (GATK3 and earlier): Re-aligns reads around indels to improve accuracy, especially around regions prone to indel misalignments. In those versions it ran before BQSR. GATK4 dropped this step because HaplotypeCaller performs its own local reassembly of reads.

- Base quality score recalibration (BQSR): A crucial step to correct systematic errors in base quality scores, improving variant calling accuracy.

- Variant calling (HaplotypeCaller; UnifiedGenotyper in GATK3 and earlier): The core step. These GATK tools call variants based on the aligned reads and quality scores.

- Variant filtration and annotation: Applying filters to remove low-quality variants and annotating remaining variants with additional information (e.g., functional consequences).

The GATK Best Practices workflow is highly recommended, as it combines multiple steps to ensure high quality and reliability of the called variants. The output is a variant call format (VCF) file containing the identified SNPs and indels along with associated quality scores and annotation data. This VCF file can be used for further analysis such as genome-wide association studies (GWAS) or population genomics.
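As a rough sketch of how these steps chain together, the Python function below assembles the command lines for a GATK4-style run without executing anything. All file names, the sample name, and the read-group values are placeholders invented for this example, duplicate marking is included as a typical extra step, and a real run also needs the reference to be indexed (BWA index, .fai, and .dict files). Treat it as a starting point to adapt, not as the official workflow.

```python
import shlex


def build_pipeline(ref, fq1, fq2, known_sites, sample="sample"):
    """Return the commands of a GATK4-style germline workflow as argv lists."""
    sam = f"{sample}.sam"
    sorted_bam = f"{sample}.sorted.bam"
    dedup_bam = f"{sample}.dedup.bam"
    recal_table = f"{sample}.recal.table"
    recal_bam = f"{sample}.recal.bam"
    vcf = f"{sample}.vcf.gz"
    # GATK expects read groups; the platform value here is an assumption.
    read_group = f"@RG\\tID:{sample}\\tSM:{sample}\\tPL:ILLUMINA"
    return [
        ["bwa", "mem", "-R", read_group, "-o", sam, ref, fq1, fq2],
        ["samtools", "sort", "-o", sorted_bam, sam],
        ["gatk", "MarkDuplicates", "-I", sorted_bam, "-O", dedup_bam,
         "-M", f"{sample}.dup_metrics.txt"],
        ["samtools", "index", dedup_bam],
        ["gatk", "BaseRecalibrator", "-I", dedup_bam, "-R", ref,
         "--known-sites", known_sites, "-O", recal_table],
        ["gatk", "ApplyBQSR", "-I", dedup_bam, "-R", ref,
         "--bqsr-recal-file", recal_table, "-O", recal_bam],
        ["gatk", "HaplotypeCaller", "-R", ref, "-I", recal_bam, "-O", vcf],
    ]


# Placeholder inputs, invented for this example.
commands = build_pipeline("ref.fasta", "r1.fastq.gz", "r2.fastq.gz",
                          "known.vcf.gz", sample="s1")
for cmd in commands:
    print(shlex.join(cmd))
```

Each list can be handed to subprocess.run(cmd, check=True) once the paths point at real files. Keeping commands as lists rather than shell strings avoids quoting problems with file names.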

Q 8. Explain the purpose and functionality of BaseRecalibrator in GATK.

BaseRecalibrator in GATK is a crucial tool for improving the accuracy of variant calling by correcting for systematic errors in base quality scores. Think of it like this: your sequencing machine provides a ‘confidence score’ for each base it calls (A, T, C, or G). However, these scores might be inaccurate due to various biases in the sequencing process. BaseRecalibrator analyzes your reads against a known reference genome and a set of known variants (often a high-quality database like dbSNP) to learn these biases. It then builds a recalibration model that adjusts the base quality scores, making them more reliable.

The process involves several steps. First, you provide BaseRecalibrator with your aligned BAM file (containing your sequencing reads aligned to the reference genome) and a known variation database. The tool then analyzes the relationship between base qualities and observed errors, building a statistical model. Finally, you apply this model using ApplyBQSR to recalibrate your base quality scores in your BAM file. This refined BAM file is then used for more accurate variant calling.

For example, if the machine consistently miscalls ‘G’ bases at certain positions, BaseRecalibrator would learn this bias and lower the confidence score for ‘G’ calls in those specific areas. This prevents these inaccurate bases from influencing the downstream variant calling and results in more reliable variant detection. Note that BaseRecalibrator itself only writes a recalibration table; the recalibrated BAM file, ready for variant calling with tools like HaplotypeCaller, is produced by ApplyBQSR.
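The core intuition behind recalibration can be shown with a toy Python model: group bases by their reported quality, count how often they mismatch the reference at sites that are not known variants, and convert that observed error rate back to a Phred score. GATK's real model uses several covariates (such as read group, machine cycle, and sequence context), so this single-covariate version and its function name are simplifying assumptions.

```python
import math
from collections import defaultdict


def empirical_qualities(observations):
    """Map each reported quality to an empirically observed quality.

    observations: iterable of (reported_quality, is_mismatch) for bases at
    positions that are NOT in the known-variants set.
    """
    counts = defaultdict(lambda: [0, 0])  # reported_q -> [n_bases, n_mismatches]
    for q, mismatch in observations:
        counts[q][0] += 1
        counts[q][1] += int(mismatch)
    table = {}
    for q, (n, errors) in counts.items():
        # Add-one smoothing so zero observed errors does not give infinite quality.
        p_err = (errors + 1) / (n + 2)
        table[q] = round(-10 * math.log10(p_err))
    return table


# Made-up data: bases reported as Q40 that mismatch about 1% of the time.
obs = [(40, False)] * 989 + [(40, True)] * 9
print(empirical_qualities(obs))
```

In this invented case the machine claims Q40 (1 error in 10,000) but the data support roughly Q20 (1 in 100), which is exactly the kind of overconfidence BQSR is meant to correct.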

Q 9. How would you handle low-quality reads in GATK variant calling?

Low-quality reads can significantly impact the accuracy of variant calling, leading to false positives (incorrectly identifying a variant) or false negatives (missing a true variant). In GATK, we address low-quality reads in several ways.

- Read Filtering: Before variant calling, we can filter out reads based on mapping quality score (MAPQ), read length, and other quality metrics. Reads with low MAPQ scores (indicating low confidence in their alignment to the reference genome) are often removed. This is done using tools like samtools view or GATK’s filtering capabilities.

- Base Quality Score Recalibration (BQSR): As discussed previously, BaseRecalibrator and ApplyBQSR help correct systematic errors in base quality scores. This ensures that low-quality bases are properly weighted during variant calling.

- Variant Calling Parameters: GATK tools like HaplotypeCaller offer various parameters to control the stringency of variant calling. Adjusting these parameters can help reduce the influence of low-quality reads. For example, increasing the minimum base quality threshold will exclude variants supported by low-quality bases.

- Using appropriate GATK tools: Tools like HaplotypeCaller are designed to handle low quality reads more effectively than older tools.

The optimal approach often involves a combination of these strategies. A good practice is to experiment with different filtering thresholds and parameters to find a balance between removing low-quality data and retaining sufficient information for accurate variant detection. Remember, blindly removing all low-quality reads might also remove true signal.

Q 10. What are the different types of variants detected by GATK?

GATK detects several types of variants, primarily categorized by their size and nature:

- Single Nucleotide Polymorphisms (SNPs): These are the most common type, representing a single base change (A, T, C, or G) at a specific position in the genome.

- Insertions and Deletions (INDELs): These involve the insertion or deletion of one or more bases in the genome sequence. INDELs can range from small (1-2 bases) to large (hundreds or thousands of bases).

- Structural Variants (SVs): These are larger-scale variations, including inversions, translocations, duplications, and copy number variations (CNVs). While GATK can detect some smaller SVs, specialized tools are generally better suited for analyzing larger SVs.

- Multi-nucleotide Polymorphisms (MNPs): Similar to SNPs, but affecting multiple consecutive bases.

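GATK’s HaplotypeCaller is particularly powerful for identifying SNPs, INDELs, and MNPs, using a sophisticated algorithm based on local reassembly of reads that considers the local genomic context and surrounding reads. Larger structural variants and CNVs fall outside its scope and call for separate workflows or dedicated tools.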

Q 11. Explain the concept of read depth in variant calling.

Read depth in variant calling refers to the number of reads that cover a particular genomic position. Think of it as the ‘voting power’ at that position. A higher read depth generally means more evidence to support a variant call, increasing its confidence. Imagine trying to decide whether a single word is misspelled in a book. If you only read one copy, your confidence is low. If you read ten copies, and nine have the same misspelling, your confidence is much higher.

Low read depth can lead to false negatives (missing true variants) because there might not be enough reads to detect a minor allele. Conversely, very high read depth might introduce more noise or artifacts, potentially leading to false positives. The ideal read depth is often a balance between enough coverage to detect real variants and limiting the impact of sequencing errors. A typical range for human genome sequencing projects is 30x – 50x coverage (meaning each base is covered by 30-50 reads on average). Specific applications might require higher or lower depth depending on the task and sensitivity requirements.
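Both senses of "depth" mentioned above, the per-position count and the genome-wide average, can be computed with a short Python sketch. Reads are modeled as simple half-open intervals, which ignores CIGAR details such as deletions and soft clipping; the function names are hypothetical.

```python
def per_base_depth(reads, region_start, region_end):
    """Depth at each position of [region_start, region_end).

    reads: iterable of (start, end) half-open, 0-based intervals.
    Uses a difference array, so the cost is O(reads + region length).
    """
    length = region_end - region_start
    diff = [0] * (length + 1)
    for start, end in reads:
        s = max(start, region_start) - region_start
        e = min(end, region_end) - region_start
        if s < e:
            diff[s] += 1
            diff[e] -= 1
    depth, running = [], 0
    for d in diff[:length]:
        running += d
        depth.append(running)
    return depth


def expected_coverage(n_reads, read_length, genome_size):
    """Average coverage: total sequenced bases divided by genome size."""
    return n_reads * read_length / genome_size


# Made-up reads over an 8-base region.
print(per_base_depth([(0, 5), (3, 8), (4, 6)], 0, 8))
# 600 million 150 bp reads on a 3 Gb genome.
print(expected_coverage(600_000_000, 150, 3_000_000_000))
```

The second call shows where a figure like "30x" comes from: 600 million reads of 150 bp spread over a 3 Gb genome average out to 30 reads per base.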

Q 12. How do you assess the quality of variant calls?

Assessing the quality of variant calls is critical for ensuring the reliability of downstream analyses. We evaluate this through various metrics and visualizations:

- Genotype Quality (GQ): This score reflects the confidence in the assigned genotype (e.g., homozygous reference, heterozygous, homozygous variant). Higher GQ scores indicate greater confidence.

- Read Depth (DP): As discussed before, sufficient read depth is essential for confident variant calls. Low DP might raise concerns.

- Variant Allele Frequency (VAF): In heterozygous variants, the VAF should be around 50%. Significant deviations might point to errors.

- Quality Score of the variant allele: The base quality scores of the reads supporting the variant allele should be high.

- Visual inspection: Using tools like Integrative Genomics Viewer (IGV) allows visual inspection of the reads aligned to the genomic region of the variant call. This helps in identifying potential artifacts or errors.

- Comparison with known databases: Comparing the variant calls with databases like dbSNP can provide clues about their validity. Variants present in multiple databases are generally considered more reliable.

These assessments are often combined to build a holistic view of the quality of the variant calls. A stringent filtering strategy, combining several criteria, helps remove low-confidence calls before any further analysis.
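A combined filter of this kind might look like the following Python sketch. The thresholds (minimum DP of 10, minimum GQ of 20, heterozygous allele fraction between 0.25 and 0.75) are illustrative assumptions only, and the dictionary layout is a made-up stand-in for values you would read from a VCF record.

```python
def allele_fraction(ref_reads: int, alt_reads: int) -> float:
    """Fraction of reads supporting the alternate allele."""
    total = ref_reads + alt_reads
    return alt_reads / total if total else 0.0


def passes_filters(call, min_dp=10, min_gq=20, het_vaf=(0.25, 0.75)):
    """Apply simple hard filters to one genotype call.

    call: dict with 'gt' (e.g. '0/1'), 'dp', 'gq', and 'ad' = (ref, alt).
    All thresholds are illustrative, not recommended defaults.
    """
    if call["dp"] < min_dp or call["gq"] < min_gq:
        return False
    if call["gt"] == "0/1":
        vaf = allele_fraction(*call["ad"])
        return het_vaf[0] <= vaf <= het_vaf[1]
    return True


# Made-up calls for illustration.
good_het = {"gt": "0/1", "dp": 40, "gq": 99, "ad": (21, 19)}
skewed_het = {"gt": "0/1", "dp": 40, "gq": 99, "ad": (37, 3)}
shallow_hom = {"gt": "1/1", "dp": 5, "gq": 15, "ad": (0, 5)}
print(passes_filters(good_het), passes_filters(skewed_het), passes_filters(shallow_hom))
```

Appropriate cutoffs depend heavily on the sequencing depth and the application (germline versus somatic, for example), so it is worth saying in an interview that you would tune them against a truth set rather than hard-code them.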

Q 13. What are some common metrics used to evaluate variant calling accuracy?

Several metrics evaluate variant calling accuracy, often calculated by comparing the calls to a gold standard dataset (e.g., a highly accurate, manually curated set of variants). Key metrics include:

- Sensitivity (Recall): The proportion of true variants correctly identified by the caller. High sensitivity means few false negatives.

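- Precision (Positive Predictive Value, PPV): The proportion of identified variants that are actually true variants, TP / (TP + FP). High precision means few false positives.

- Specificity: The proportion of true non-variant sites that are correctly left uncalled, TN / (TN + FP). Because almost every position in a genome is non-variant, specificity is close to 1 for nearly any caller, so precision is usually the more informative number.

- F1-score: The harmonic mean of precision and recall, providing a balanced measure of accuracy.

- Accuracy: The overall proportion of sites classified correctly, variant or not. Like specificity, it is dominated by the many non-variant sites.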

- False Discovery Rate (FDR): The proportion of positive results that are false positives.

These metrics help quantify the performance of a variant calling pipeline and compare different tools or parameter settings. A high F1-score typically indicates good overall performance.
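These definitions translate directly into code. The Python sketch below compares a call set with a truth set, both represented as sets of (chromosome, position, ref, alt) tuples, and derives the usual metrics. The variants are made up, and real benchmarking tools also normalize variant representation before comparing, which this example skips.

```python
def evaluate_calls(called, truth):
    """Compare called variants with a truth set; both are iterables of hashable keys."""
    called, truth = set(called), set(truth)
    tp = len(called & truth)   # called and true
    fp = len(called - truth)   # called but not true
    fn = len(truth - called)   # true but missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    fdr = fp / (tp + fp) if tp + fp else 0.0
    return {"tp": tp, "fp": fp, "fn": fn, "precision": precision,
            "recall": recall, "f1": f1, "fdr": fdr}


# Made-up variants for illustration.
truth_set = [("chr1", 100, "A", "G"), ("chr1", 200, "C", "T"),
             ("chr2", 50, "G", "GA"), ("chr2", 75, "TAC", "T")]
call_set = [("chr1", 100, "A", "G"), ("chr1", 200, "C", "T"),
            ("chr2", 50, "G", "GA"), ("chr3", 10, "A", "C")]
print(evaluate_calls(call_set, truth_set))
```

Note that true negatives never appear: with a truth set of variants you can count TP, FP, and FN directly, which is another reason precision and recall are preferred over specificity and accuracy here.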

Q 14. Describe the difference between SNP and INDEL variants.

SNPs and INDELs are both types of genetic variations, but they differ in their size and nature:

- SNPs (Single Nucleotide Polymorphisms): These are single-base substitutions in the DNA sequence. For example, a change from A to G at a specific position.

- INDELs (Insertions and Deletions): These involve the insertion or deletion of one or more bases in the DNA sequence. An insertion might add a single ‘T’ base, while a deletion might remove a sequence of three bases (‘ATC’).

The key difference is scale. SNPs are point mutations affecting a single base, while INDELs can vary greatly in size, from a few bases to several kilobases. Both types of variations can have functional consequences depending on their location and the affected gene.
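In a VCF file the distinction shows up in the lengths of the REF and ALT alleles, which a few lines of Python can classify. This sketch assumes a single, explicit ALT allele (no multi-allelic records or symbolic alleles such as <DEL>); the function name is hypothetical.

```python
def classify_variant(ref: str, alt: str) -> str:
    """Classify a simple REF/ALT pair by allele length."""
    if len(ref) == 1 and len(alt) == 1:
        return "SNP"
    if len(ref) == len(alt):
        return "MNP"        # several consecutive bases substituted
    if len(alt) > len(ref):
        return "insertion"
    return "deletion"


# VCF writes indels with one shared anchor base before the change.
print(classify_variant("A", "G"))      # single-base substitution
print(classify_variant("A", "AT"))     # one 'T' inserted after the anchor 'A'
print(classify_variant("GATC", "G"))   # 'ATC' deleted after the anchor 'G'
```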

Q 15. What is the purpose of Picard tools in NGS data analysis?

Picard is a collection of command-line tools for manipulating next-generation sequencing (NGS) data in the SAM/BAM format. Its primary purpose is to ensure data quality and prepare it for downstream analysis. Think of it as the meticulous data janitor, cleaning up and organizing your raw sequencing data before you can extract meaningful insights. It handles tasks crucial for successful analysis, addressing issues that can derail your research if left unattended.

- Data cleaning and validation: Picard checks for inconsistencies, errors, and potential artifacts in your data.

- Data manipulation: It allows you to sort, merge, and filter BAM files efficiently.

- Metrics generation: Picard provides quality metrics that are essential for assessing the quality of your sequencing experiment.

- Preparation for downstream analysis: It prepares your data for variant calling, gene expression analysis, and other advanced analyses.

For instance, Picard can identify and remove duplicate reads, a common problem in NGS, improving the accuracy of downstream analyses like variant calling. Without it, these duplicates can skew your results and lead to false positives.

Q 16. How would you use Picard to mark duplicate reads?

Picard uses the MarkDuplicates tool to identify and mark duplicate reads. Duplicate reads arise from PCR amplification during library preparation and can inflate coverage and bias downstream analyses. This tool compares reads and read pairs based on their alignment start positions and orientation, treating those that coincide as duplicates of one another. Within each duplicate set it keeps one representative and sets the duplicate bit (0x400, decimal 1024) in the FLAG field of the others, so downstream tools can skip them.

java -jar picard.jar MarkDuplicates I=input.bam O=output.bam M=metrics.txt REMOVE_DUPLICATES=true

In this command:

- I=input.bam specifies the input BAM file.

- O=output.bam specifies the output BAM file with duplicates marked.

- M=metrics.txt creates a metrics file summarizing the duplicate marking process.

- REMOVE_DUPLICATES=true (optional) actually removes the marked duplicates from the output file. Often, it’s better to just mark them and analyze the metrics to understand the extent of duplication before making a decision to remove them.

The resulting metrics.txt file provides key statistics such as the total number of reads, number of duplicates, and percentage of duplicates. This information is crucial in assessing the quality of your sequencing library and the impact of duplicate reads on downstream analyses.
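The marking logic can be illustrated with a simplified Python model. It groups single-end reads by chromosome, 5' position, and strand, keeps the read with the highest summed base quality in each group, and sets the duplicate bit on the rest. Picard's actual algorithm also considers mate positions, clipping, and library information, so this is a conceptual sketch with a made-up read layout, not a reimplementation.

```python
from collections import defaultdict

FLAG_DUPLICATE = 0x400  # duplicate bit in the SAM FLAG field


def mark_duplicates(reads):
    """Return copies of reads with the duplicate bit set on all but the best
    read of each (chrom, pos, strand) group.

    reads: list of dicts with 'name', 'chrom', 'pos', 'strand', 'flag',
    and 'qual_sum' (sum of base qualities, used to pick the representative).
    """
    groups = defaultdict(list)
    for i, r in enumerate(reads):
        groups[(r["chrom"], r["pos"], r["strand"])].append(i)
    out = [dict(r) for r in reads]  # leave the input untouched
    for idxs in groups.values():
        best = max(idxs, key=lambda i: reads[i]["qual_sum"])
        for i in idxs:
            if i != best:
                out[i]["flag"] |= FLAG_DUPLICATE
    return out


# Made-up reads: r1 and r2 share a start position and strand.
reads = [
    {"name": "r1", "chrom": "chr1", "pos": 100, "strand": "+", "flag": 0, "qual_sum": 900},
    {"name": "r2", "chrom": "chr1", "pos": 100, "strand": "+", "flag": 0, "qual_sum": 1200},
    {"name": "r3", "chrom": "chr1", "pos": 100, "strand": "-", "flag": 16, "qual_sum": 800},
    {"name": "r4", "chrom": "chr1", "pos": 250, "strand": "+", "flag": 0, "qual_sum": 1000},
]
marked = mark_duplicates(reads)
dup_rate = sum(bool(r["flag"] & FLAG_DUPLICATE) for r in marked) / len(marked)
print([r["name"] for r in marked if r["flag"] & FLAG_DUPLICATE], dup_rate)
```

The duplicate rate computed at the end corresponds to the kind of percentage reported in the metrics file.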

Q 17. Describe the process of RNA-Seq data alignment using tools like STAR or HISAT2.

RNA-Seq data alignment involves mapping RNA sequencing reads to a reference genome or transcriptome to determine which genes were expressed and at what levels. Tools like STAR (Spliced Transcripts Alignment to a Reference) and HISAT2 (Hierarchical Indexing for Spliced Alignment of Transcripts 2) are highly efficient aligners designed to handle the spliced nature of RNA transcripts.

- Index Creation: First, a genome or transcriptome index is created using the aligner’s indexing tools. This index allows for rapid searching and alignment of reads.

- Read Alignment: Next, the RNA-Seq reads are aligned to the index. The aligners cleverly handle potential splicing events that occur in mature RNA molecules. They search for optimal alignments considering possible exon junctions and gaps.

- Alignment Output: Finally, the output is typically a SAM/BAM file containing the aligned reads. This file indicates which genomic location(s) each read originated from, the mapping quality, and other relevant information.

STAR is known for its speed and accuracy, particularly with complex transcriptomes, while HISAT2 offers a good balance between speed and memory efficiency. The choice of aligner depends on the specific needs of the project, the size of the genome, and available computational resources. For very large genomes, HISAT2 might be preferred due to its lower memory footprint.

Q 18. How would you perform quality control on RNA-Seq data?

Quality control (QC) of RNA-Seq data is crucial to ensure the reliability of downstream analyses. It involves assessing several aspects of the data to identify potential biases or artifacts that might affect the results. We assess both the raw reads and the aligned reads.

- Raw read QC: Tools like FastQC provide metrics on read quality, base quality distribution, adapter contamination, and other relevant parameters. Analyzing this data helps identify potential issues such as low quality reads or overrepresentation of adapters.

- Aligned read QC: After alignment, metrics such as the percentage of mapped reads, read distribution across the genome, and the number of uniquely mapped reads are crucial. These metrics indicate the overall success of the alignment and can highlight potential biases such as uneven coverage.

- Transcript abundance and saturation: Assessment of gene expression distribution and read saturation helps ensure adequate sequencing depth.

Identifying and addressing quality issues early in the process can significantly improve the accuracy and reliability of the overall RNA-Seq analysis. If there are significant quality issues, adjustments to experimental parameters, or even rerunning parts of the experiment might be necessary.

Q 19. Explain the concept of gene expression quantification.

Gene expression quantification is the process of determining the abundance of RNA transcripts for each gene in a sample. This tells us how actively a gene is being expressed. It essentially estimates the relative number of copies of each RNA molecule present. This is often measured in reads per kilobase of transcript per million mapped reads (RPKM), fragments per kilobase of transcript per million mapped fragments (FPKM), or transcripts per million (TPM). These different metrics account for variations in gene length and sequencing depth to provide a standardized measure of expression.

Imagine a library with many different books. Gene expression quantification is like counting how many copies of each book are present in the library. A book with many copies is like a highly expressed gene, while a book with few copies is like a lowly expressed gene. The different metrics help account for variations in book length (gene length) and the total number of books in the library (sequencing depth).
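The arithmetic behind RPKM and TPM is short enough to write out in Python. The sketch below uses made-up read counts and gene lengths; in practice the counts come from a quantification tool and the lengths are usually effective transcript lengths.

```python
def rpkm(counts, lengths_bp):
    """Reads per kilobase of transcript per million mapped reads."""
    total = sum(counts.values())
    return {g: counts[g] * 1e9 / (lengths_bp[g] * total) for g in counts}


def tpm(counts, lengths_bp):
    """Transcripts per million: length-normalize first, then scale to 1e6."""
    rate = {g: counts[g] / lengths_bp[g] for g in counts}
    denom = sum(rate.values())
    return {g: rate[g] * 1e6 / denom for g in counts}


# Made-up counts and gene lengths (in base pairs).
counts = {"geneA": 10, "geneB": 20}
lengths = {"geneA": 1000, "geneB": 4000}
print(rpkm(counts, lengths))
print(tpm(counts, lengths))
```

geneB has twice the reads but four times the length, so after length normalization it comes out at half the expression of geneA. TPM values always sum to one million within a sample, which makes proportions easier to compare across samples than RPKM.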

Q 20. How would you identify differentially expressed genes using tools like DESeq2 or edgeR?

Identifying differentially expressed genes (DEGs) involves comparing gene expression levels between different experimental conditions (e.g., treated vs. control samples) to find genes that show significant changes in expression. Tools like DESeq2 and edgeR are popular choices for this task. They use statistical models to account for experimental variability and determine which genes exhibit statistically significant differences in expression.

- Data Import: Import the gene expression counts generated from tools like RSEM or Salmon.

- Data Preprocessing: Perform data normalization to account for variations in sequencing depth and library size.

- Statistical Testing: Apply statistical methods (e.g., Negative Binomial distribution models in DESeq2 and edgeR) to compare the expression levels of genes between the groups being compared.

- Multiple testing correction: Adjust p-values to account for multiple hypothesis testing using methods like Benjamini-Hochberg correction to control the false discovery rate (FDR).

- Result interpretation: Identify genes that show a statistically significant difference in expression between groups, and analyze the results based on the chosen threshold (e.g. adjusted p-value < 0.05 and absolute log2 fold change > 1, so that both up- and down-regulated genes are captured).

These tools provide detailed statistics, including adjusted p-values and log2 fold changes, to help researchers determine the significance and magnitude of expression differences for each gene. The resulting list of DEGs can then be used for further biological interpretation.
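DESeq2 and edgeR are R packages and handle the multiple-testing step internally, but the Benjamini-Hochberg adjustment itself is simple enough to sketch in Python for interview purposes. The p-values, fold changes, and the `select_degs` helper below are made up for illustration; this is not code from either package.

```python
def benjamini_hochberg(pvalues):
    """Return BH-adjusted p-values in the original order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down so adjusted values stay monotonic.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


def select_degs(genes, pvalues, log2fc, alpha=0.05, min_abs_lfc=1.0):
    """Genes with adjusted p-value below alpha and |log2 fold change| above the cutoff."""
    padj = benjamini_hochberg(pvalues)
    return [g for g, p, lfc in zip(genes, padj, log2fc)
            if p < alpha and abs(lfc) > min_abs_lfc]


# Made-up test results for four genes.
genes = ["g1", "g2", "g3", "g4"]
pvals = [0.01, 0.04, 0.03, 0.005]
lfcs = [2.5, 0.3, -1.8, -0.2]
print(benjamini_hochberg(pvals))
print(select_degs(genes, pvals, lfcs))
```

In this invented example all four genes pass the adjusted p-value cutoff, but only g1 and g3 also clear the fold-change threshold, one up-regulated and one down-regulated.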

Q 21. What are some common challenges in NGS data analysis?

NGS data analysis presents numerous challenges, even with powerful tools like those mentioned above.

- High dimensionality and computational costs: NGS datasets are incredibly large, requiring substantial computational resources for storage, processing, and analysis. Memory and processing power limitations can significantly slow down the analysis pipeline.

- Data quality and biases: Errors during sequencing, library preparation, and amplification can introduce various biases into the data, affecting the accuracy and reliability of downstream analyses.

- Data interpretation and biological relevance: Interpreting the results of NGS experiments and linking them to biological processes often requires advanced statistical and biological knowledge. It’s not simply about generating numbers; it’s about understanding their meaning.

- Alignment ambiguity: Reads can map to multiple locations in the genome, creating ambiguities and uncertainties in the alignment process. This necessitates the use of sophisticated alignment algorithms to address these issues.

- Variant calling accuracy: Accurate variant calling remains a significant challenge, especially when dealing with low-frequency variants or complex genomic regions.

Addressing these challenges often requires a multi-faceted approach that combines advanced bioinformatics tools, careful experimental design, and rigorous statistical analysis.

Q 22. Describe your experience with scripting languages (e.g., Python, R) in bioinformatics.

Scripting languages are fundamental to bioinformatics. I’m proficient in both Python and R, leveraging their strengths for different tasks. Python, with its versatility and extensive libraries like Biopython and Scikit-learn, is my go-to for automating workflows, processing large datasets, and developing complex pipelines. For example, I’ve used Python to automate the entire process of aligning reads with BWA, performing variant calling with GATK, and annotating variants using ANNOVAR, all within a single, reproducible script. R, on the other hand, excels in statistical analysis and data visualization. I frequently use R packages like ggplot2 to create publication-quality figures representing complex genomic data, such as genomic coverage plots or Manhattan plots for GWAS studies. My experience includes developing custom R scripts for performing differential gene expression analysis using RNA-Seq data and visualizing the results using interactive plots.

Q 23. How do you handle large datasets in bioinformatics analysis?

Handling large datasets is a daily challenge in bioinformatics. My strategies involve a combination of efficient algorithms, parallel processing, and database management. For example, instead of loading an entire BAM file into memory, I use SAMtools’ indexing and region-based querying to access only the necessary parts of the file. This significantly reduces memory footprint and speeds up processing. For even larger datasets, I leverage parallel computing capabilities, employing libraries like multiprocessing in Python or using tools like GNU parallel to distribute computationally intensive tasks across multiple cores or even a cluster. Furthermore, I utilize database systems like MySQL or PostgreSQL to store and query large genomic datasets efficiently, facilitating complex analyses without loading everything into RAM.

Q 24. Explain your experience with cloud computing platforms (e.g., AWS, Google Cloud) for bioinformatics.

I have extensive experience using cloud computing platforms, primarily AWS and Google Cloud, to manage and analyze large-scale bioinformatics projects. I’m familiar with utilizing cloud-based virtual machines (EC2 on AWS, Compute Engine on Google Cloud) to run computationally intensive pipelines, leveraging the scalability of these platforms to handle datasets that wouldn’t be feasible on a local machine. I also utilize cloud storage services (S3 on AWS, Cloud Storage on Google Cloud) for cost-effective and easily accessible data storage. Furthermore, I’m familiar with managed services like AWS Batch or Google Cloud Dataproc for simplifying the management of parallel computing jobs. In one project, we used AWS to process a whole-genome sequencing dataset of 1000 individuals, leveraging the scalability of the cloud to complete the analysis significantly faster than it would have been possible on our local infrastructure.

Q 25. Describe your experience with version control systems like Git.

Git is an indispensable tool for me. I use it daily for version control, allowing me to track changes, collaborate effectively with others, and easily revert to previous versions of my code and data if needed. I am proficient in branching, merging, and resolving conflicts. I routinely use Git for managing both code (scripts, pipelines) and data (intermediate analysis files) associated with bioinformatics projects, ensuring reproducibility and facilitating collaboration. For example, in a recent project involving RNA-Seq analysis, we used a Git repository to manage the entire analysis pipeline, including the scripts, input data, intermediate results, and final reports. This allowed for easy collaboration, tracking of changes, and seamless reproducibility.

Q 26. How would you troubleshoot a bioinformatics pipeline that’s failing?

Troubleshooting a failing bioinformatics pipeline requires a systematic approach. I start by carefully examining the error messages, looking for clues about the point of failure. Then, I break down the pipeline into smaller, manageable units, testing each component individually. I add logging throughout the pipeline to see which steps succeed and which fail. I use debuggers (e.g., pdb in Python) to step through code and identify the root cause. Additionally, I carefully check input data integrity, ensuring files are properly formatted, complete, and not truncated. If the issue is related to memory or processing power, I consider optimizing the code, re-evaluating the algorithm’s efficiency, and moving to a larger computing resource (e.g., a cloud server).

Q 27. What are your preferred methods for visualizing NGS data?

My preferred methods for visualizing NGS data depend on the specific type of data and the question being asked. For visualizing read alignments and coverage, I utilize tools like IGV (Integrative Genomics Viewer). For exploring genomic variations, I frequently use custom plots generated through R, employing packages like ggplot2 to create visually appealing and informative graphs. For visualizing differential gene expression from RNA-Seq data, heatmaps and volcano plots generated in R are invaluable. Interactive visualizations using tools like Plotly or Bokeh can provide even greater insights, allowing users to explore the data dynamically. The choice of visualization method always depends on providing the clearest communication of the results to the intended audience.
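As a rough illustration of what sits behind a volcano plot, the sketch below converts differential-expression results into plot coordinates (log2 fold change on the x-axis, negative log10 of the adjusted p-value on the y-axis) and labels each gene. The function name `volcano_points`, the cutoff values, and the gene names are assumptions for illustration only; the resulting points could then be passed to whichever plotting library is preferred.

```python
import math


def volcano_points(results, lfc_cutoff=1.0, p_cutoff=0.05):
    """Convert (gene, log2 fold change, adjusted p-value) rows into volcano-plot points."""
    points = []
    for gene, log2_fc, padj in results:
        # Guard against p-values of exactly zero, which have no finite logarithm.
        neg_log10_p = -math.log10(max(padj, 1e-300))
        if padj < p_cutoff and log2_fc >= lfc_cutoff:
            status = "up"
        elif padj < p_cutoff and log2_fc <= -lfc_cutoff:
            status = "down"
        else:
            status = "ns"  # not significant under the chosen cutoffs
        points.append({"gene": gene, "x": log2_fc, "y": neg_log10_p, "status": status})
    return points


if __name__ == "__main__":
    # Hypothetical results; real values would come from a differential-expression tool.
    demo = [
        ("GENE_A", 2.5, 0.001),
        ("GENE_B", -1.8, 0.01),
        ("GENE_C", 0.3, 0.2),
    ]
    for point in volcano_points(demo):
        print(point)
```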

Q 28. Describe a complex bioinformatics project you’ve worked on and your contributions.

I recently completed a complex project involving the identification of novel biomarkers for a specific type of cancer using whole-exome sequencing data from a cohort of patients. My contributions included developing the entire analysis pipeline, from raw data preprocessing (quality control, alignment with BWA-MEM, variant calling with GATK) to downstream analysis (variant annotation, pathway enrichment analysis, survival analysis). I wrote custom Python scripts to automate the process, ensuring reproducibility and facilitating collaboration among the research team. I employed statistical methods and machine learning techniques to identify potential biomarkers and validate their significance. The project resulted in a publication identifying several novel candidate biomarkers for the disease, significantly advancing our understanding of the disease’s genetics.

Key Topics to Learn for Bioinformatics Tools (e.g., SAMtools, BWA, GATK) Interview

- SAMtools: Understanding the SAM/BAM format, filtering and sorting BAM files, indexing for efficient querying, variant calling basics using SAMtools mpileup.

- BWA: Principles of short-read alignment, understanding the different alignment algorithms BWA provides (BWA-backtrack, BWA-SW, and BWA-MEM), evaluating alignment quality metrics (MAPQ), handling paired-end reads.

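- GATK: Variant discovery workflow (from raw reads to variant calls), understanding base quality score recalibration (BQSR) and indel realignment (a separate step in older GATK versions that GATK4 dropped in favor of HaplotypeCaller’s local reassembly), variant annotation and filtering, working with GVCF files.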

- Practical Applications: Describe scenarios where you’ve used these tools for tasks like genome alignment, variant detection, and analysis of next-generation sequencing data. Be ready to discuss your approach and problem-solving strategies.

- Theoretical Concepts: Demonstrate a strong understanding of concepts like read mapping, variant calling accuracy, genome assembly principles, and statistical significance in genomic data analysis.

- Command-line proficiency: Be comfortable explaining your approach to scripting and automating analyses using these tools. Familiarity with common command-line arguments and options is essential.

- Data interpretation: Show your ability to interpret the output from these tools, identify potential issues, and explain your conclusions. Practice explaining complex results in a clear and concise manner.

- Troubleshooting: Discuss how you would approach common errors or unexpected results encountered when using these tools. Highlight your problem-solving skills and ability to identify and resolve technical challenges.
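To make the SAM format, FLAG, and MAPQ topics above more concrete, here is a small pure-Python sketch that parses one SAM alignment line, decodes its FLAG bits, and applies a simple quality filter. The FLAG bit values and the column order follow the SAM specification; the function names, the example read, and the MAPQ threshold of 20 are assumptions chosen for illustration.

```python
# FLAG bits as defined in the SAM specification.
FLAG_BITS = {
    0x1: "paired",
    0x2: "proper_pair",
    0x4: "unmapped",
    0x8: "mate_unmapped",
    0x10: "reverse",
    0x20: "mate_reverse",
    0x40: "read1",
    0x80: "read2",
    0x100: "secondary",
    0x200: "qc_fail",
    0x400: "duplicate",
    0x800: "supplementary",
}


def parse_sam_line(line):
    """Parse the mandatory columns of one SAM alignment line into a dict."""
    fields = line.rstrip("\n").split("\t")
    if len(fields) < 11:
        raise ValueError("a SAM alignment line needs at least 11 tab-separated fields")
    return {
        "qname": fields[0],
        "flag": int(fields[1]),
        "rname": fields[2],
        "pos": int(fields[3]),
        "mapq": int(fields[4]),
        "cigar": fields[5],
    }


def decode_flag(flag):
    """Return the names of all FLAG bits that are set."""
    return [name for bit, name in FLAG_BITS.items() if flag & bit]


def keep_alignment(record, min_mapq=20):
    """Keep mapped, primary, non-duplicate alignments at or above min_mapq."""
    excluded = 0x4 | 0x100 | 0x400 | 0x800
    return record["mapq"] >= min_mapq and not (record["flag"] & excluded)


if __name__ == "__main__":
    # Hypothetical alignment line for demonstration.
    example = "read_001\t99\tchr1\t100\t60\t50M\t=\t300\t250\tACGT\tIIII"
    rec = parse_sam_line(example)
    print(decode_flag(rec["flag"]), keep_alignment(rec))
```

The filter is roughly analogous to running `samtools view -q 20 -F 0xD04`, where `-q` sets the minimum MAPQ and `-F` excludes reads with any of the given FLAG bits set.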

Next Steps

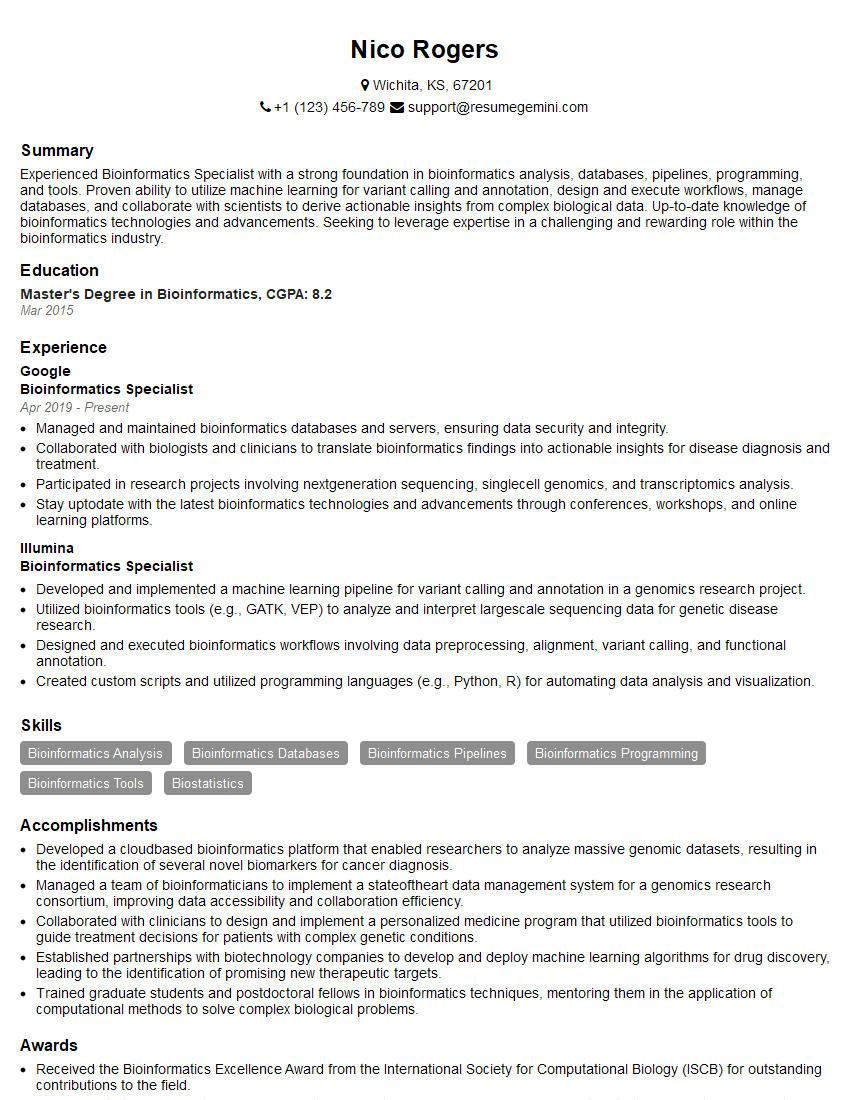

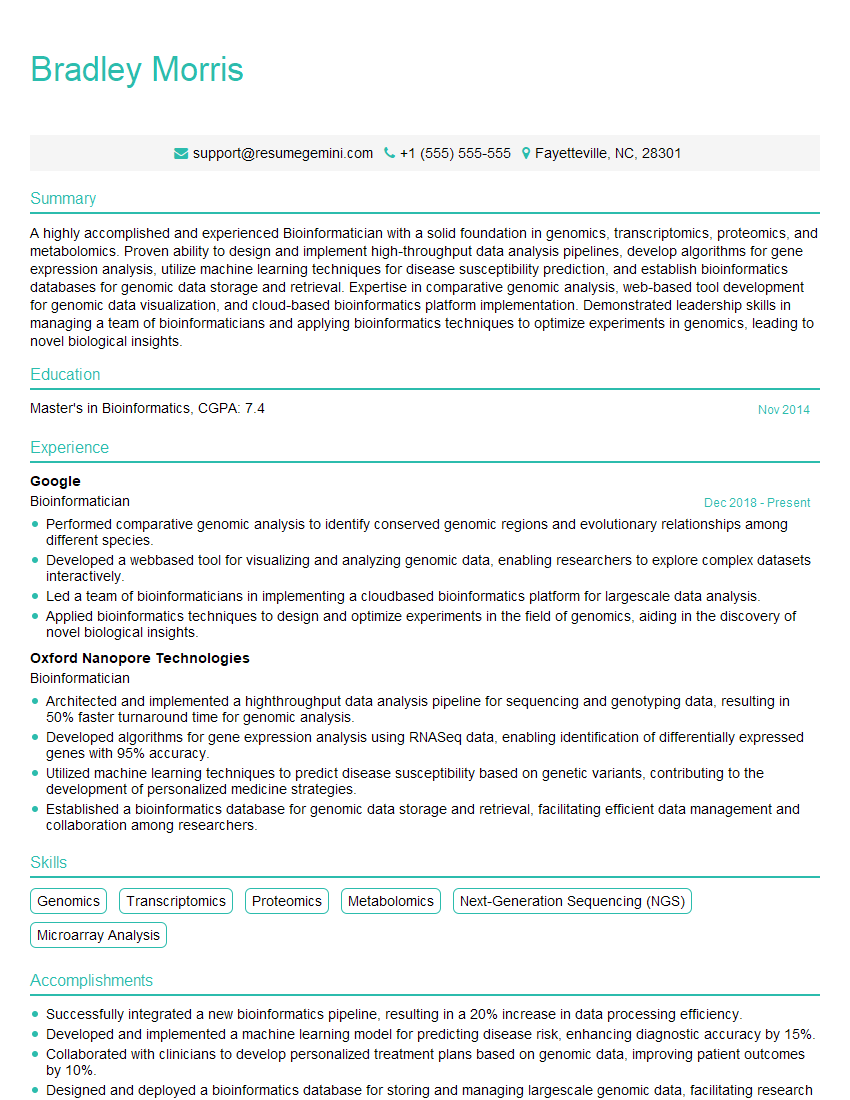

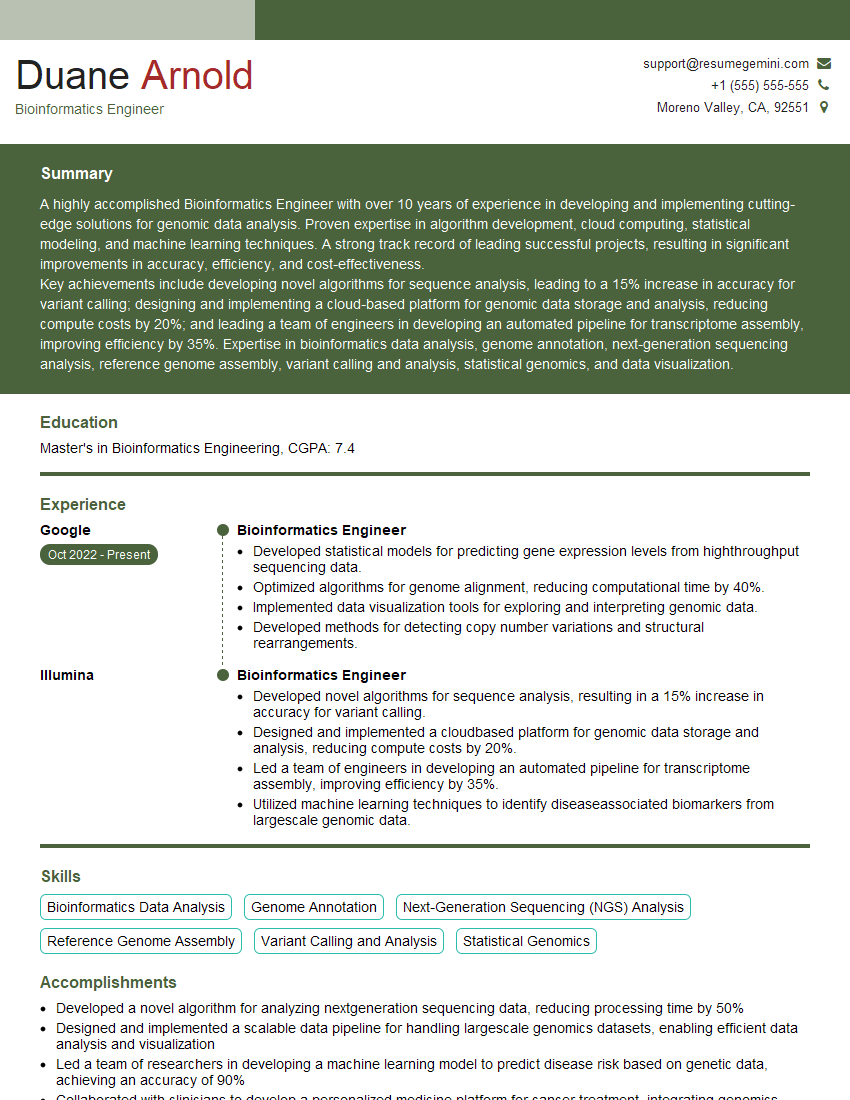

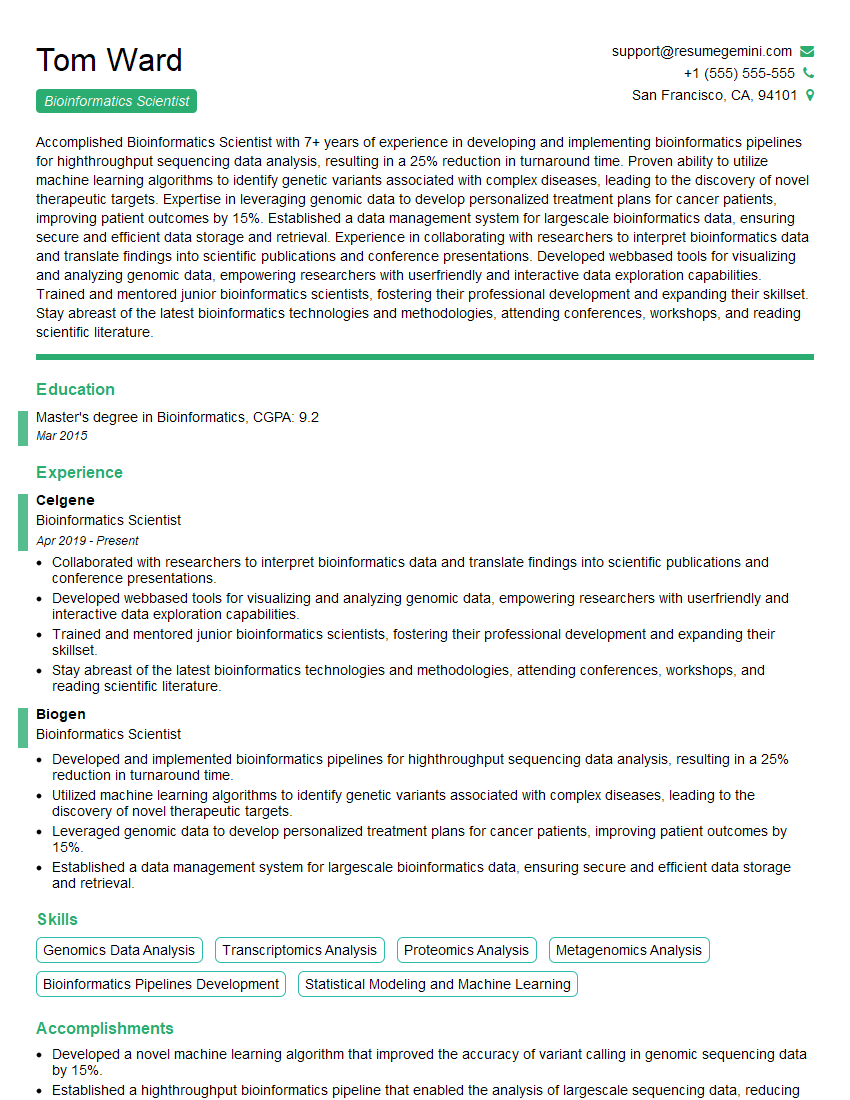

Mastering Bioinformatics tools like SAMtools, BWA, and GATK is crucial for career advancement in genomics and bioinformatics. These tools are fundamental for many roles, and demonstrating proficiency significantly enhances your job prospects. Building an ATS-friendly resume is key to getting your application noticed. To create a powerful resume that highlights your skills effectively, leverage the resources available at ResumeGemini. ResumeGemini offers a streamlined approach to resume building, and you’ll find examples of resumes tailored to Bioinformatics Tools (e.g., SAMtools, BWA, GATK) to help you create a compelling application.
