Are you ready to stand out in your next interview? Understanding and preparing for Camera Testing and Evaluation interview questions is a game-changer. In this blog, we’ve compiled key questions and expert advice to help you showcase your skills with confidence and precision. Let’s get started on your journey to acing the interview.

Questions Asked in Camera Testing and Evaluation Interview

Q 1. Explain the difference between spatial and temporal noise in camera images.

Spatial noise and temporal noise are both unwanted variations in pixel values, but they behave differently over time and stem from different sources. Think of it like this: spatial noise is like a smudge on a window, a pattern that sits in the same place in every frame, while temporal noise is like the snow on an old analog TV, changing randomly from one frame to the next.

Spatial noise, often called fixed-pattern noise (FPN), is the pixel-to-pixel variation that repeats from frame to frame under identical conditions. Its main sources are differences in dark current and offset between pixels (dark signal non-uniformity, DSNU), differences in pixel sensitivity (photo response non-uniformity, PRNU), and row or column patterns introduced by the readout circuitry. Because it is repeatable, averaging frames does not reduce it. Instead it is corrected with dark-frame subtraction and flat-field calibration, or minimized by using better sensors.

Temporal noise, on the other hand, varies randomly from frame to frame even when the scene and settings are unchanged. Its main sources are shot noise (due to the quantized nature of light), read noise (introduced when the signal is read out from the sensor), and the shot noise of thermally generated dark current. In still images it appears as graininess, most visibly in low light; in video it shows up as shimmering in flat areas. It is reduced by collecting more light, by cooling the sensor to lower dark current, and by temporal filtering or frame averaging, which lowers it by roughly the square root of the number of frames averaged. Flicker from fluorescent or LED lighting and rolling-shutter distortion (where the sensor reads the image line by line) also cause frame-to-frame instability in video, but they are better classified as temporal artifacts than as sensor noise.
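A common way to separate the two in the lab, in the spirit of the EMVA 1288 method, is to capture a stack of frames of a uniform, static target with identical settings. You then compare each pixel's variation over time with the pixel-to-pixel variation of the averaged frame. This is a minimal sketch that assumes black-level-subtracted raw data in a NumPy array. `split_noise` and the synthetic data are illustrative, not part of any standard tool.

```python
import numpy as np


def split_noise(frames):
    """Estimate temporal and spatial (fixed-pattern) noise from a frame stack.

    frames: array of shape (N, H, W), N >= 2, of a uniform, static target
    captured with identical settings. Returns (temporal_std, spatial_std)
    in the same units as the input (e.g. digital numbers).
    """
    frames = np.asarray(frames, dtype=np.float64)
    n = frames.shape[0]
    # Temporal noise: each pixel's variance across frames, averaged over pixels.
    temporal_var = frames.var(axis=0, ddof=1).mean()
    # Spatial noise: pixel-to-pixel variance of the time-averaged image...
    mean_image = frames.mean(axis=0)
    # ...minus the residual temporal noise that survives averaging (var / N).
    spatial_var = max(mean_image.var(ddof=1) - temporal_var / n, 0.0)
    return float(np.sqrt(temporal_var)), float(np.sqrt(spatial_var))


# Synthetic check: a fixed pattern (std 2) plus fresh random noise (std 5) per frame.
rng = np.random.default_rng(0)
fixed_pattern = rng.normal(0.0, 2.0, size=(64, 64))
stack = 100.0 + fixed_pattern + rng.normal(0.0, 5.0, size=(50, 64, 64))
temporal, spatial = split_noise(stack)
print(f"temporal ~ {temporal:.2f}, spatial ~ {spatial:.2f}")
```

Averaging N frames shrinks temporal noise by about √N but leaves the fixed pattern untouched. The subtraction step relies on exactly that difference.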

Q 2. Describe your experience with color calibration and profiling techniques.

Color calibration and profiling are crucial for ensuring accurate and consistent color reproduction across different devices and conditions. My experience encompasses both hardware and software aspects. On the hardware side, I’ve used spectrophotometers to measure the reference values of color targets and colorimeters to calibrate the displays used for review, so that the camera’s captured colors can be compared against a known standard. This comparison is used to create color profiles that the camera or its processing pipeline then applies to correct its output.

Software calibration usually involves using specialized software to analyze images and generate color profiles. For example, I’ve used software such as X-Rite’s ColorChecker Camera Calibration to create camera profiles from images of a ColorChecker chart, whose patches have known color values. The resulting profile can be applied in the camera or used as a lookup table in post-processing to correct color errors. The technique depends on the specific need: high-end professional cameras often have integrated calibration features, whereas consumer cameras might rely on software-based profiles.
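A simple, widely used building block behind chart-based profiling is a 3x3 color correction matrix (CCM). It is fitted by least squares between the camera's linear RGB values for each patch and the patch reference values. Commercial profiling tools typically go further, for example with lookup tables or nonlinear terms, so treat this as a minimal sketch. The function names and synthetic data are hypothetical.

```python
import numpy as np


def fit_ccm(measured_rgb, reference_rgb):
    """Fit a 3x3 color correction matrix M so that measured @ M ~ reference.

    Both inputs are (n_patches, 3) arrays of *linear* RGB values
    (black level removed, no gamma), one row per chart patch.
    """
    measured = np.asarray(measured_rgb, dtype=float)
    reference = np.asarray(reference_rgb, dtype=float)
    # Ordinary least squares, solved independently for each output channel.
    M, *_ = np.linalg.lstsq(measured, reference, rcond=None)
    return M


def apply_ccm(rgb, M):
    """Apply the matrix to an (..., 3) array of linear RGB values."""
    return np.asarray(rgb, dtype=float) @ M


# Synthetic check: 24 patches seen through a known channel-mixing "camera".
rng = np.random.default_rng(1)
reference = rng.uniform(0.05, 0.9, size=(24, 3))
true_M = np.array([[1.6, -0.4, -0.1],
                   [-0.3, 1.5, -0.3],
                   [-0.1, -0.5, 1.7]])
measured = reference @ np.linalg.inv(true_M)   # what the camera would record
M = fit_ccm(measured, reference)
print(np.round(M, 3))
```

With real captures the fit will not be exact. The residual per patch, for example as ΔE, is then the figure of merit.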

I’ve also worked extensively with color spaces (like sRGB, Adobe RGB, and ProPhoto RGB), understanding their limitations and choosing the best one for the application. For example, a wider gamut color space like Adobe RGB offers more colors but might be less compatible with standard displays, so the optimal choice depends on the workflow and final output destination.

Q 3. How do you measure dynamic range in a camera system?

Measuring dynamic range is essential for assessing a camera’s ability to capture detail in both the brightest and darkest areas of a scene. We typically use a step chart: a series of grayscale patches whose optical density increases in fixed increments (for example, 0.1 or 0.15 per step; a density step of about 0.3 corresponds to one stop). Transmissive step charts, lit from behind, are common because they can span a wider luminance range than a printed chart. The camera captures an image of the chart under controlled lighting conditions.

The image is then analyzed using specialized software, which measures the mean signal and the noise in each patch. The brightest usable patch is the brightest one that is not clipped. The darkest usable patch is the darkest one whose signal-to-noise ratio (SNR) still meets a chosen threshold, for example SNR = 1, or a stricter value when higher quality is required. The dynamic range is the ratio of the luminances of these two patches, usually expressed in stops (the base-2 logarithm of the ratio). A higher dynamic range indicates a better ability to capture detail across a wider range of brightness levels. This is crucial for images with high contrast, where details in both bright highlights and deep shadows need to be preserved.

It’s important to note that the measurement conditions – particularly the lighting – must be carefully controlled to get accurate results. Variations in lighting can significantly affect the dynamic range measurement.
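The calculation can be sketched as follows. It assumes a transmissive step chart with known patch densities, black-level-subtracted linear raw data, and an SNR threshold of 1. The threshold and the names are assumptions: published methods such as ISO 15739 and commercial measurement tools differ in these details.

```python
import numpy as np

LOG10_2 = np.log10(2.0)  # a density step of ~0.301 equals one stop


def dynamic_range_stops(densities, means, stds, clip_level, snr_threshold=1.0):
    """Dynamic range in stops from step-chart patch statistics.

    densities: optical density of each patch (higher = darker).
    means, stds: mean signal and noise of each patch (linear, black level removed).
    clip_level: signal at or above which a patch counts as saturated.
    snr_threshold: minimum mean/std for the darkest usable patch.
    """
    d = np.asarray(densities, dtype=float)
    m = np.asarray(means, dtype=float)
    s = np.asarray(stds, dtype=float)
    usable = (m < clip_level) & (m / s >= snr_threshold)
    if not usable.any():
        return 0.0
    # Luminance ratio between brightest and darkest usable patch is
    # 10**(d_dark - d_bright); dividing the density span by log10(2) gives stops.
    return float((d[usable].max() - d[usable].min()) / LOG10_2)


densities = np.arange(11) * 0.3              # 0.0 to 3.0 in 0.3 steps
means = 5000.0 * 10.0 ** (-densities)        # synthetic sensor response
stds = np.full_like(means, 10.0)             # synthetic constant noise floor
print(dynamic_range_stops(densities, means, stds, clip_level=4095))
```

In this synthetic case the first patch is clipped and the last two fall below SNR = 1. That leaves a usable density span of 2.1, or about 7 stops.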

Q 4. What are the key performance indicators (KPIs) you would use to evaluate camera image quality?

Evaluating camera image quality requires a comprehensive approach, using multiple KPIs. Here are some key ones I routinely employ:

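- Resolution: The level of fine detail the camera can capture, measured in line pairs per millimeter (lp/mm) or line widths per picture height (LW/PH). The megapixel count only sets an upper limit. The lens, sensor, and processing determine how much of it is actually achieved.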

- Sharpness: Often assessed using modulation transfer function (MTF) curves, which plot the camera’s ability to resolve contrast at different spatial frequencies.

- Dynamic Range: As explained previously, this represents the range of brightness levels the camera can capture without losing detail.

- Signal-to-Noise Ratio (SNR): This indicates the ratio of the signal (image data) to noise (unwanted variations). Higher SNR means less noise in the image.

- Color Accuracy: This measures the fidelity of color reproduction, often assessed using colorimetric measures like Delta E.

- Chromatic Aberration: This measures the extent of color fringing, indicating lens imperfections.

- Distortion: This quantifies the degree of geometric distortion in the image.

- Noise Levels: This considers spatial and temporal noise levels which can degrade image quality.

The weighting of each KPI depends on the application. For example, a surveillance camera might prioritize low-light performance (high SNR and dynamic range), while a professional photography camera might emphasize high resolution and color accuracy.
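To make the MTF bullet concrete, the basic chain runs from the edge spread function (ESF) to the line spread function (LSF, its derivative). The MTF is then the magnitude of the LSF's Fourier transform, normalized to 1 at zero frequency. This sketch is deliberately simplified: it works on a single profile taken perpendicular to the edge. The ISO 12233 slanted-edge method instead tilts the edge slightly to build an oversampled ESF. The function names and the synthetic Gaussian-blurred edge are assumptions for illustration.

```python
from math import erf, sqrt

import numpy as np


def mtf_from_edge(esf):
    """Simplified MTF from a 1-D edge profile (dark-to-bright, edge near center).

    Returns (frequencies in cycles/pixel, MTF normalized to 1 at f = 0).
    """
    lsf = np.diff(np.asarray(esf, dtype=float))   # LSF = derivative of the ESF
    lsf = lsf * np.hanning(lsf.size)              # taper ends to limit noise/truncation
    spectrum = np.abs(np.fft.rfft(lsf))
    return np.fft.rfftfreq(lsf.size), spectrum / spectrum[0]


def mtf50(freqs, mtf):
    """First frequency where the MTF falls to 0.5 (linear interpolation)."""
    below = np.nonzero(mtf < 0.5)[0]
    if below.size == 0:
        return None
    i = below[0]
    f0, f1, m0, m1 = freqs[i - 1], freqs[i], mtf[i - 1], mtf[i]
    return float(f0 + (0.5 - m0) * (f1 - f0) / (m1 - m0))


# Synthetic edge blurred by a Gaussian with sigma = 1.5 pixels.
sigma = 1.5
x = np.arange(128) - 63.5
esf = np.array([0.5 * (1.0 + erf(v / (sigma * sqrt(2.0)))) for v in x])
freqs, mtf = mtf_from_edge(esf)
print(f"MTF50 ~ {mtf50(freqs, mtf):.3f} cycles/pixel")
```

For a pure Gaussian blur the theoretical MTF50 is sqrt(ln 2 / (2π²σ²)), about 0.125 cycles/pixel here. The sampled result lands slightly lower because each pixel integrates light over its own width.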

Q 5. Explain the process of lens distortion correction.

Lens distortion correction is a crucial step in image processing, particularly important in applications requiring precise geometric accuracy. It addresses imperfections in the lens that cause straight lines to appear curved in the image. These distortions can be radial (barrel or pincushion) or tangential.

The process typically involves identifying the distortion pattern using a calibration procedure with a known geometric pattern, such as a chessboard. The camera captures images of this pattern from various angles. Software algorithms then analyze the pattern and measure the deviations from ideal geometry. Based on these measurements, a mathematical model of the lens distortion is created. This model, often represented by polynomial equations, is then used to transform the image pixels, correcting the distortions.

There are several approaches to applying the correction. The most common is inverse mapping. For every pixel in the corrected output image, the distortion model computes where that pixel lies in the distorted input, and the input is sampled (interpolated) at that location. Because this mapping depends only on the lens model, it is usually computed once and stored as a lookup table. Some approaches also use machine learning, for example to estimate distortion from a single image when no calibration target is available.
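Here is a minimal NumPy sketch of inverse mapping. It assumes a purely radial (Brown-Conrady style) model with coefficients `k1` and `k2` in normalized coordinates, and already-known intrinsics `fx`, `fy`, `cx`, `cy`. In practice these come from calibration, for example OpenCV's `cv2.calibrateCamera`. OpenCV's `cv2.initUndistortRectifyMap` and `cv2.remap` build and apply such maps, including tangential terms and bilinear interpolation. The helper names below are hypothetical.

```python
import numpy as np


def undistort_maps(width, height, fx, fy, cx, cy, k1, k2=0.0):
    """Inverse-mapping lookup tables for a radial distortion model.

    For each pixel (u, v) of the corrected output, return the (x, y) position
    in the distorted input to sample from. Assumed model, in normalized
    coordinates: x_d = x_u * (1 + k1*r^2 + k2*r^4), same for y.
    """
    u, v = np.meshgrid(np.arange(width, dtype=float), np.arange(height, dtype=float))
    x = (u - cx) / fx
    y = (v - cy) / fy
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale * fx + cx, y * scale * fy + cy


def remap_nearest(image, map_x, map_y):
    """Sample image at the mapped positions (nearest neighbour; outside -> 0)."""
    h, w = image.shape[:2]
    xi = np.rint(map_x).astype(int)
    yi = np.rint(map_y).astype(int)
    valid = (xi >= 0) & (xi < w) & (yi >= 0) & (yi < h)
    out = np.zeros_like(image)
    out[valid] = image[yi[valid], xi[valid]]
    return out


# Barrel distortion (k1 < 0) on a 101 x 81 test image.
img = np.arange(81 * 101, dtype=float).reshape(81, 101)
map_x, map_y = undistort_maps(101, 81, fx=100, fy=100, cx=50, cy=40, k1=-0.1)
corrected = remap_nearest(img, map_x, map_y)
```

The maps depend only on the lens parameters and image size, so they can be computed once and reused for every frame.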

Q 6. How would you test for chromatic aberration in a camera lens?

Chromatic aberration is a lens defect where different wavelengths of light (colors) are not focused at the same point. This results in color fringing, often visible as purple or green halos around high-contrast edges. Testing for chromatic aberration typically involves capturing images of high-contrast scenes with bright and dark elements against a clear background.

For a more controlled test, use a resolution chart or slanted-edge target with fine, high-contrast lines. Lateral chromatic aberration grows toward the edges of the frame, so examine edges at several field positions, not only at the center. Color fringing along these edges, inspected under magnification, is a clear sign of chromatic aberration. The severity can be judged visually. It can also be quantified by comparing the intensity profiles of the red, green, and blue channels across each edge: the offset between the channels' edge positions gives a numerical measure that can be compared across lenses. Specialized software can automate this measurement. How severe the aberration is depends on the lens design and its quality.
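As an illustration of the quantitative approach, you can estimate lateral chromatic aberration by locating the same edge separately in the red, green, and blue channels, then reporting each shift relative to green. This sketch assumes a linear RGB profile sampled perpendicular to a near-vertical edge. The centroid-of-gradient edge locator and the function names are illustrative choices, not a method prescribed by a standard.

```python
from math import erf, sqrt

import numpy as np


def edge_position(profile):
    """Sub-pixel edge location: centroid of the absolute gradient."""
    g = np.abs(np.diff(np.asarray(profile, dtype=float)))
    centers = np.arange(g.size) + 0.5        # each difference sits between two samples
    return float(centers @ g / g.sum())


def lateral_ca_pixels(rgb_profile):
    """Return (R - G, B - G) edge shifts in pixels for an (n, 3) RGB profile."""
    p = np.asarray(rgb_profile, dtype=float)
    r, g, b = (edge_position(p[:, c]) for c in range(3))
    return r - g, b - g


def blurred_edge(center, sigma=1.5, n=40):
    """Synthetic dark-to-bright edge at a known sub-pixel position."""
    return [0.5 * (1.0 + erf((i - center) / (sigma * sqrt(2.0)))) for i in range(n)]


profile = np.column_stack([blurred_edge(20.3), blurred_edge(20.0), blurred_edge(19.6)])
print(lateral_ca_pixels(profile))   # roughly (0.3, -0.4)
```

Multiplying the shifts by the pixel pitch gives micrometers. Repeating the measurement at several distances from the image center shows how the aberration grows toward the corners.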

Q 7. What are the common types of camera image artifacts, and how do you identify them?

Camera image artifacts are undesirable features that appear in images due to various factors. Identifying these artifacts is a crucial aspect of image quality evaluation. Here are some common types:

- Noise: As previously discussed, both spatial and temporal noise are common artifacts, degrading image quality and clarity.

- Blur: This can be caused by motion blur (subject movement), defocus blur (incorrect focus settings), or lens imperfections.

- Aliasing: This results from insufficient sampling, leading to jagged edges or ‘stair-stepping’ artifacts.

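- Moiré patterns: These are false, low-frequency interference patterns that appear when fine repetitive textures (e.g., fabric weaves) contain detail at or above the sensor’s Nyquist frequency (half its sampling rate). On Bayer sensors they often appear as false color.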

- Lens distortion: Barrel, pincushion, and tangential distortions, which distort straight lines.

- Chromatic aberration: Color fringing along high-contrast edges.

- Vignetting: Darkening of the image corners, caused by the lens design (natural and optical vignetting) or by mechanical obstructions such as an ill-fitting lens hood or filter.

- Ghosting and flare: Internal reflections within the lens, producing unwanted light spots or halos.

Identifying these artifacts requires careful visual inspection of images, often under magnification. Specialized software can also help quantify certain artifacts, such as noise level or distortion.
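For example, vignetting is straightforward to quantify from a flat-field capture: an evenly lit uniform target, such as a diffuser over the lens. This sketch assumes linear, black-level-subtracted data and compares each corner region with the center. The 5% region size and the function name are arbitrary choices for illustration.

```python
import numpy as np


def vignetting_stops(flat_field, roi_fraction=0.05):
    """Corner falloff relative to the image center, in stops (negative = darker).

    flat_field: 2-D linear image of a uniformly lit target.
    Returns [top-left, top-right, bottom-left, bottom-right].
    """
    img = np.asarray(flat_field, dtype=float)
    h, w = img.shape
    rh, rw = max(1, int(h * roi_fraction)), max(1, int(w * roi_fraction))
    cy, cx = h // 2, w // 2
    center = img[cy - rh // 2: cy + rh // 2 + 1, cx - rw // 2: cx + rw // 2 + 1].mean()
    corners = [img[:rh, :rw], img[:rh, -rw:], img[-rh:, :rw], img[-rh:, -rw:]]
    return [float(np.log2(c.mean() / center)) for c in corners]


flat = np.full((100, 100), 200.0)
flat[:5, :5] = 100.0          # synthetic: top-left corner at half brightness
print(vignetting_stops(flat))
```

Reporting falloff in stops makes it easy to compare against exposure-related specifications.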

Q 8. Describe your experience with automated camera testing frameworks.

My experience with automated camera testing frameworks is extensive. I’ve worked with a variety of frameworks, both open-source and proprietary, focusing on streamlining the testing process and improving efficiency. These frameworks typically involve scripting languages like Python or MATLAB to automate tasks such as image capture, parameter adjustment (ISO, shutter speed, aperture), and image quality metric calculation. For example, I’ve used OpenCV extensively to automate image acquisition from various camera models, perform image processing tasks (like noise reduction analysis), and compare results against predefined standards. I’ve also integrated these frameworks with continuous integration/continuous deployment (CI/CD) pipelines for automated regression testing, ensuring consistent quality across software and hardware iterations.

A typical workflow might involve using a framework to capture hundreds of images under varying conditions, then using image processing algorithms to analyze sharpness, color accuracy, and noise levels. The results are then compared to pre-determined thresholds, generating a comprehensive report detailing any discrepancies. This automated approach significantly reduces testing time and ensures consistent, objective results.

Specifically, I’ve leveraged frameworks that support parallel testing across multiple cameras and scenarios, drastically accelerating the overall testing time. This is crucial in today’s fast-paced development cycles. Furthermore, I have experience customizing these frameworks to meet specific testing needs, such as integrating with specialized hardware or implementing unique test cases tailored to specific camera functionalities.
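The pass/fail step at the end of such a workflow can be as simple as comparing each computed metric against limits from the product specification. The metric names and limit values below are hypothetical placeholders. In a CI job, a non-empty failure list would typically mark the run as failed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limit:
    minimum: float = float("-inf")
    maximum: float = float("inf")


# Hypothetical limits; real values come from the product's image-quality spec.
LIMITS = {
    "mtf50_cycles_per_pixel": Limit(minimum=0.25),
    "mean_delta_e": Limit(maximum=3.0),
    "snr_db": Limit(minimum=30.0),
}


def check_metrics(measured, limits=LIMITS):
    """Return human-readable failures; an empty list means the capture passed."""
    failures = []
    for name, limit in limits.items():
        value = measured.get(name)
        if value is None:
            failures.append(f"{name}: not measured")
        elif not limit.minimum <= value <= limit.maximum:
            failures.append(f"{name}={value} outside [{limit.minimum}, {limit.maximum}]")
    return failures


report = check_metrics({"mtf50_cycles_per_pixel": 0.31, "mean_delta_e": 3.4, "snr_db": 36.2})
print(report)
```

Treating a missing metric as a failure keeps a broken analysis step from passing silently.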

Q 9. How do you troubleshoot issues related to camera autofocus performance?

Troubleshooting autofocus issues requires a systematic approach. I typically start by identifying the nature of the problem: is it consistently inaccurate, intermittent, or dependent on lighting conditions? I then investigate several key areas.

First, I check the autofocus calibration, ensuring the autofocus system is properly aligned with the image sensor. This often involves using specialized test charts with known patterns and distances to evaluate the accuracy of focus across the image plane. Second, I examine the camera’s firmware and software. Bugs or outdated firmware can directly impact autofocus performance, so I check for known issues and update the firmware if necessary. Third, I test the lens and focus mechanism for damage or malfunctions, since a faulty lens can easily lead to autofocus problems. Finally, I assess environmental factors. Low light, low-contrast subjects, and strong backlighting can all degrade autofocus performance, particularly with contrast-detection systems, which work by maximizing image contrast. This might require analyzing the contrast transfer function (CTF) of the lens-sensor combination under various lighting conditions. Each step involves detailed logging and testing, using image analysis tools and automated testing methods to pinpoint the root cause efficiently.

For instance, if the autofocus consistently misses focus at a specific distance, this could suggest a calibration problem or a defect in the lens. If the issue is intermittent, this might point toward a software bug or a problem with the autofocus motor itself. This structured debugging approach ensures a comprehensive and efficient diagnosis of the problem.
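When checking contrast-detection behavior or focus repeatability, it helps to compute a focus metric yourself over a focus sweep and compare its peak with where the camera actually settled. This sketch uses a simple gradient-energy metric (mean squared difference between neighboring pixels), which is one of several common choices. The lens-position values and function names are hypothetical.

```python
import numpy as np


def focus_measure(gray):
    """Gradient-energy focus metric: higher means more high-frequency contrast."""
    img = np.asarray(gray, dtype=float)
    gx = np.diff(img, axis=1)
    gy = np.diff(img, axis=0)
    return float((gx ** 2).mean() + (gy ** 2).mean())


def best_focus(positions, frames):
    """Lens position whose frame maximizes the focus metric, plus all scores."""
    scores = [focus_measure(f) for f in frames]
    return positions[int(np.argmax(scores))], scores


def box_blur2(img):
    """2x2 box blur (with wrap-around) to simulate defocus in the example."""
    return (img + np.roll(img, 1, 0) + np.roll(img, 1, 1) + np.roll(img, (1, 1), (0, 1))) / 4.0


# Synthetic sweep: a checkerboard chart that is sharp only at position 120.
chart = ((np.indices((64, 64)) // 8).sum(axis=0) % 2).astype(float)
frames = [box_blur2(box_blur2(chart)), chart, box_blur2(chart)]
best, scores = best_focus([100, 120, 140], frames)
print(best, scores)
```

Running the same sweep repeatedly and looking at the spread of the selected positions is a simple way to tell an intermittent fault from a consistent calibration offset.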

Q 10. How do you assess the low-light performance of a camera?

Assessing low-light performance requires a multifaceted approach that goes beyond simply taking a picture in a dark room. It involves quantifying the camera’s ability to capture detail and maintain acceptable image quality under various low-light conditions. Key metrics include:

- Noise Level: I’d measure the amount of noise (graininess) present in the images at various ISO settings. Higher ISO settings amplify the signal, but also the noise. Image analysis software can quantify the noise level, typically as the standard deviation of pixel values in a uniform patch.

- Dynamic Range: This measures the camera’s ability to capture detail in both the highlights and shadows. In low light, maintaining dynamic range is particularly challenging.

- Signal-to-Noise Ratio (SNR): A higher SNR indicates a cleaner image with less noise relative to the signal strength. This is directly related to the camera’s sensitivity.

- Detail Retention: I’d assess how well the camera preserves fine details in low-light conditions. This might involve using a high-resolution test chart and analyzing the sharpness and clarity of captured images.

The testing would be conducted under controlled low-light scenarios, varying the light intensity and measuring the aforementioned metrics. We’d compare these measurements to the performance of other cameras in the same class and also to pre-defined quality standards.

Q 11. What is the importance of signal-to-noise ratio (SNR) in camera image quality?

The signal-to-noise ratio (SNR) is paramount in camera image quality. Think of it like this: the signal is the actual image information captured by the sensor, while the noise is the unwanted artifacts that degrade the image quality (graininess, color artifacts). A higher SNR signifies a stronger signal relative to the noise – resulting in a cleaner, more detailed image with less grain. A low SNR, conversely, leads to a noisy, grainy image with poor detail.

SNR directly impacts many aspects of image quality, such as:

- Sharpness: Noise can obscure fine details, making the image appear less sharp.

- Color Accuracy: Noise can introduce color artifacts, affecting the overall color fidelity.

- Dynamic Range: High noise can make it difficult to recover detail in both the highlights and shadows.

In practical terms, we often express SNR in decibels (dB). A higher dB value indicates a better SNR. During camera testing, we meticulously measure the SNR at various ISO settings and under different lighting conditions to assess the camera’s performance in different scenarios. This is crucial for determining the camera’s overall image quality and its ability to handle challenging lighting situations.
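For a uniform patch, the calculation behind those dB figures is short. This sketch assumes linear, black-level-subtracted data, since a gamma-encoded JPEG would distort the ratio, and uses the patch's standard deviation as the noise. The function names are illustrative.

```python
import numpy as np


def snr_db(patch):
    """SNR of a uniform patch in decibels: 20*log10(mean / standard deviation)."""
    p = np.asarray(patch, dtype=float)
    return float(20.0 * np.log10(p.mean() / p.std(ddof=1)))


def snr_stops(patch):
    """The same ratio expressed in stops (log2), as some tools report it."""
    p = np.asarray(patch, dtype=float)
    return float(np.log2(p.mean() / p.std(ddof=1)))


rng = np.random.default_rng(2)
patch = rng.normal(100.0, 1.0, size=(300, 300))   # synthetic: mean 100, std 1
print(snr_db(patch), snr_stops(patch))            # close to 40 dB and 6.64 stops
```

Because this amplitude ratio uses 20·log10, each doubling of SNR adds about 6 dB, which is one stop.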

Q 12. Explain your understanding of camera sensor technologies (e.g., CMOS, CCD).

Camera sensor technologies, primarily CMOS (Complementary Metal-Oxide-Semiconductor) and CCD (Charge-Coupled Device), are the heart of any camera. While both capture light and convert it into electrical signals, they differ significantly in their architecture and performance characteristics.

- CCD: CCD sensors have long been valued for their image quality, particularly their low noise and high pixel uniformity. However, they are generally more power-hungry, slower to read out, and more expensive than CMOS sensors, and they are now rarely used in modern consumer cameras.

- CMOS: CMOS sensors have become the dominant technology in most cameras due to their lower power consumption, faster readout speeds, and lower manufacturing cost. Because readout and processing circuitry can be integrated on the sensor chip, they enable advanced features such as on-sensor phase-detection autofocus and the fast readout needed for video and electronic image stabilization. Early CMOS sensors were often noisier than CCDs, especially at higher ISO settings, but modern designs such as backside-illuminated sensors have largely closed that gap.

My understanding extends to the various architectures within CMOS technology (e.g., backside-illuminated sensors, stacked CMOS sensors) and their impact on image quality, sensitivity, and speed. I also have experience working with different pixel sizes and sensor formats, understanding how these factors affect image resolution, depth of field, and low-light performance. For example, larger pixels generally collect more light, improving low-light performance but reducing resolution if the same sensor size is kept.

Q 13. Describe your experience with camera image processing algorithms.

My experience with camera image processing algorithms is extensive, encompassing a wide range of techniques. I’ve worked with algorithms for:

- Noise Reduction: Implementing and optimizing various noise reduction algorithms, such as bilateral filtering and wavelet denoising, to minimize noise while preserving image detail.

- Sharpening: Employing algorithms such as unsharp masking and adaptive sharpening to enhance image detail and clarity while keeping artifacts such as halos and amplified noise under control.

- Color Correction: Using color balancing and white balance algorithms to accurately reproduce colors in images.

- Demosaicing: Implementing algorithms to reconstruct full-color images from the raw Bayer pattern data captured by the sensor.

- HDR (High Dynamic Range) Imaging: Combining multiple exposures to extend the dynamic range of the image, capturing detail in both highlights and shadows.

I’m proficient in using image processing libraries such as OpenCV and MATLAB to implement and evaluate these algorithms. My experience includes optimizing these algorithms for different hardware platforms, balancing computational cost with image quality improvement. For example, I’ve worked on optimizing noise reduction algorithms for embedded systems to minimize power consumption while maintaining acceptable noise reduction performance. I regularly assess the performance of these algorithms through objective quality metrics and subjective evaluations, always aiming for the best balance between computation and image quality.
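As an example from the sharpening family, unsharp masking adds back a scaled copy of the image minus a blurred version of itself. Production implementations normally use a Gaussian blur and operate on luminance. To stay dependency-free, this sketch uses a 3x3 box blur on a single channel. The `amount` and `threshold` parameters mirror the controls photo editors typically expose.

```python
import numpy as np


def box_blur3(img):
    """3x3 mean filter with edge replication."""
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    return sum(padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)) / 9.0


def unsharp_mask(img, amount=1.0, threshold=0.0):
    """Sharpen: img + amount * (img - blur(img)).

    Detail smaller than `threshold` is left alone so flat, noisy areas
    are not amplified. Output is not clipped.
    """
    img = np.asarray(img, dtype=float)
    detail = img - box_blur3(img)
    detail[np.abs(detail) < threshold] = 0.0
    return img + amount * detail


step = np.zeros((5, 6))
step[:, 3:] = 1.0
print(unsharp_mask(step)[2])   # overshoot on both sides of the edge (halos)
```

The overshoot on either side of the edge is exactly the halo artifact that adaptive sharpening tries to limit.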

Q 14. How do you validate the accuracy of a camera’s exposure metering system?

Validating the accuracy of a camera’s exposure metering system is crucial for consistent image quality. It’s about ensuring the camera correctly determines the appropriate exposure settings (aperture, shutter speed, ISO) to capture a properly exposed image. This involves a series of rigorous tests using standardized test charts and controlled lighting conditions.

My approach usually involves:

- Using Test Charts: Employing 18% gray cards or other standardized charts with known reflectance values. This allows for objective measurement of exposure accuracy.

- Controlled Lighting: Performing tests under various lighting conditions (daylight, tungsten, fluorescent) to assess the system’s accuracy across different spectral characteristics of light sources.

- Evaluating Exposure Metrics: Quantifying exposure accuracy using metrics such as average brightness, highlight clipping, and shadow detail. Software tools and image analysis techniques are employed here.

- Statistical Analysis: Analyzing a large dataset of test images to evaluate the statistical distribution of exposure errors, identifying potential systematic biases or inconsistencies.

A critical aspect is the comparison against a reference exposure – ideally obtained using a calibrated light meter or through other known accurate exposure methods. Significant deviations from the reference exposure indicate potential problems that need further investigation. The ultimate goal is to ensure the camera consistently delivers correctly exposed images across a wide range of shooting conditions.
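Here is a minimal sketch of the error calculation against a gray card. It assumes linear raw data with a known black and white level. The target fraction of 0.18 is a placeholder: where a camera places a metered gray card depends on its metering calibration and highlight headroom, so the real target should come from the reference exposure described above. The function names are hypothetical.

```python
import numpy as np


def exposure_error_stops(raw_patch, black_level, white_level, target_fraction=0.18):
    """Exposure error of a gray-card patch in stops (positive = overexposed).

    raw_patch: linear raw values from the gray-card region.
    target_fraction: expected patch level as a fraction of the usable range (assumed).
    """
    normalized = (np.asarray(raw_patch, dtype=float) - black_level) / (white_level - black_level)
    return float(np.log2(normalized.mean() / target_fraction))


def summarize(errors_stops):
    """Systematic bias (mean) and spread (std) of errors across many captures."""
    e = np.asarray(errors_stops, dtype=float)
    return float(e.mean()), float(e.std(ddof=1))


# Synthetic 10-bit example: the patch lands at 36% of range, i.e. one stop over.
patch = np.full((10, 10), 64 + 0.36 * (1023 - 64))
print(exposure_error_stops(patch, black_level=64, white_level=1023))
print(summarize([0.1, -0.2, 0.3, 0.0]))
```

A non-zero mean across many scenes points to a systematic metering bias. A large spread points to inconsistency.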

Q 15. What is your experience with different camera interface standards (e.g., MIPI, USB)?

My experience with camera interface standards is extensive, encompassing both MIPI and USB interfaces. MIPI (Mobile Industry Processor Interface) is prevalent in mobile and embedded systems due to its high bandwidth and low power consumption. I’ve worked extensively with MIPI CSI-2 (Camera Serial Interface 2) for high-resolution image capture and with the MIPI D-PHY physical layer that carries its data. Understanding the intricacies of MIPI, such as lane configuration and per-lane data rate settings, is crucial for optimizing camera performance and minimizing latency. USB interfaces, particularly USB3 Vision, are common in industrial and high-end imaging applications. They offer a simpler, more standardized approach to camera control and data transfer, though often at the cost of higher power consumption compared to MIPI. I’ve successfully integrated cameras using both standards, troubleshooting issues related to signal integrity, power management, and data packet handling.

For example, on a recent project integrating a high-speed camera into a robotic system, the choice of MIPI CSI-2 over USB3 Vision was critical for meeting the real-time image processing requirements. The higher bandwidth and lower latency of MIPI allowed for seamless integration without image dropouts.
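A quick bandwidth check of the kind behind that decision works like this: multiply resolution, frame rate, and bits per pixel, add margin for blanking and packet overhead, and compare the result with the lane count times the per-lane rate. The 20% overhead and the 1.5 Gbit/s per-lane figure are illustrative assumptions, not values from the MIPI specifications. Real budgets depend on the sensor's timing and the PHY in use.

```python
def required_gbps(width, height, fps, bits_per_pixel, overhead=0.20):
    """Approximate link bandwidth needed, in Gbit/s, including an assumed overhead margin."""
    return width * height * fps * bits_per_pixel * (1.0 + overhead) / 1e9


def fits_link(width, height, fps, bits_per_pixel, lanes, gbps_per_lane, overhead=0.20):
    """True if the stream fits within lanes * per-lane rate."""
    return required_gbps(width, height, fps, bits_per_pixel, overhead) <= lanes * gbps_per_lane


# 4K UHD at 60 fps, 10-bit raw (RAW10): about 5.97 Gbit/s with 20% margin.
need = required_gbps(3840, 2160, 60, 10)
print(f"{need:.2f} Gbit/s")
print(fits_link(3840, 2160, 60, 10, lanes=4, gbps_per_lane=1.5))  # True: 6.0 available
print(fits_link(3840, 2160, 60, 10, lanes=2, gbps_per_lane=1.5))  # False: 3.0 available
```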

Q 16. How do you conduct thermal testing for camera modules?

Thermal testing of camera modules is vital to ensure reliable operation under various environmental conditions. My approach involves a multi-stage process. Initially, we conduct thermal characterization to determine the thermal profile of the camera module under different operating conditions (e.g., continuous image capture, high-intensity illumination). This often involves using thermal cameras and thermocouples to measure temperature at critical points on the module.

Next, we perform stress testing, subjecting the camera to extreme temperatures—both high and low—while continuously monitoring its performance (image quality, functionality, etc.). This may involve using climate chambers that can precisely control temperature and humidity. We look for anomalies such as overheating, image degradation, and functional failures.

Finally, data analysis is crucial. We examine the correlation between temperature and performance metrics. This allows us to define safe operating temperatures and identify potential design weaknesses. If necessary, we iterate on the thermal design (e.g., adding heat sinks or modifying the PCB layout) and repeat the testing process.
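The correlation step can be sketched as follows for one metric from a climate-chamber sweep. It computes the Pearson correlation between temperature and the metric, then lists the temperatures at which the metric violates its limit. The data, limit, and function name are hypothetical.

```python
import numpy as np


def thermal_summary(temps_c, metric, limit, higher_is_better=True):
    """Return (Pearson r between temperature and metric, temperatures that fail the limit)."""
    t = np.asarray(temps_c, dtype=float)
    m = np.asarray(metric, dtype=float)
    r = float(np.corrcoef(t, m)[0, 1])
    passes = m >= limit if higher_is_better else m <= limit
    return r, sorted(t[~passes].tolist())


# Hypothetical sweep: SNR (dB) of a gray patch at each chamber set point.
temps = [-20, 0, 25, 45, 65, 85]
snr = [41.0, 40.8, 40.5, 39.6, 37.9, 33.8]
r, failing = thermal_summary(temps, snr, limit=35.0)
print(f"r = {r:.2f}, fails at {failing} °C")
```

Pearson's r only captures a linear trend. Degradation that accelerates at high temperature, as in this example, is better judged from the per-temperature values and the failure list.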

Q 17. Describe your experience with camera system integration and verification.

Camera system integration and verification is a complex process that requires a systematic approach. My experience encompasses the entire workflow, from initial hardware selection and driver development to final system-level testing. I’ve worked on numerous projects involving the integration of cameras into various platforms, including mobile devices, industrial automation systems, and autonomous vehicles.

The process typically begins with defining the system requirements, including image resolution, frame rate, field of view, and other performance parameters. Next, I select appropriate hardware components, including cameras, lenses, image processors, and interfaces. Then, I develop or adapt the necessary drivers and software to control the camera and process the image data. Verification involves rigorous testing at each stage. This includes functional testing (e.g., verifying that the camera is correctly capturing and transmitting images) and performance testing (e.g., measuring image quality, latency, and power consumption).

A successful example involved integrating a multi-camera system into an autonomous navigation system. This required careful synchronization of multiple cameras, precise calibration, and robust error handling. I utilized a combination of hardware-in-the-loop simulations and real-world testing to validate the system’s performance and ensure safety.

Q 18. How would you approach testing a camera system for robustness and reliability?

Testing a camera system for robustness and reliability requires a multifaceted approach that goes beyond simple functional testing. We need to simulate real-world stresses and environmental conditions. This includes:

- Environmental Stress Testing: Exposing the camera to extreme temperatures, humidity, vibration, shock, and dust. This helps determine its tolerance to harsh conditions.

- Mechanical Stress Testing: Assessing the camera’s ability to withstand physical impacts and repeated handling. Drop tests and vibration tests are common methods used here.

- Electromagnetic Compatibility (EMC) Testing: Verifying that the camera isn’t susceptible to electromagnetic interference and doesn’t emit excessive electromagnetic radiation.

- Long-Term Stability Testing: Running the camera continuously for extended periods to assess its long-term reliability and detect any potential degradation.

- Failure Analysis: Investigating failures to identify root causes and implement corrective actions.

For example, in a recent project involving a security camera for outdoor use, we performed extensive environmental testing, including exposure to extreme temperatures (-40°C to +85°C) and high humidity. We also subjected the camera to vibration tests simulating installation on a moving vehicle. This rigorous testing helped identify and address design flaws that might have resulted in failures in the field.

Q 19. What are your preferred tools and techniques for analyzing camera image data?

My preferred tools and techniques for analyzing camera image data encompass both hardware and software solutions. On the hardware side, instruments such as colorimeters and densitometers characterize the test setup itself, for example the light source, the review displays, or the densities of a step chart. That characterization is what makes objective measurements of color accuracy and dynamic range possible. Software tools are essential for image processing and analysis.

Common software tools I utilize are image processing libraries such as OpenCV and MATLAB. These allow for tasks like image enhancement, noise reduction, feature extraction, and metric calculation. For detailed analysis, I also leverage specialized software designed for image quality assessment, which provide automated metrics for sharpness, noise, color fidelity, and distortion. I also frequently use custom scripts and tools developed for specific projects to perform focused analyses. For example, we might build custom tools for evaluating the performance of auto-focus or auto-exposure algorithms.

Q 20. Explain your understanding of different image file formats (e.g., RAW, JPEG).

RAW, JPEG, and other image formats have distinct characteristics that influence image quality, storage space, and processing requirements. RAW image files contain minimally processed sensor data at the sensor’s full bit depth (typically 10 to 14 bits), providing the most information and flexibility for post-processing. They preserve the sensor’s full dynamic range and color information and allow extensive adjustments, but require significantly more storage space and processing time. JPEG (Joint Photographic Experts Group) images, on the other hand, are 8-bit and use lossy compression, resulting in smaller file sizes at the cost of some loss of image detail. JPEG is ideal for online sharing and quick viewing but is generally less suitable for professional image editing due to the inherent information loss.

Other formats, such as TIFF (Tagged Image File Format), can store processed images uncompressed or with lossless compression (such as LZW) at up to 16 bits per channel, avoiding JPEG’s losses at the cost of larger files. The choice of image format depends heavily on the application. For professional photography or applications requiring high image quality, RAW is preferred. For applications focused on ease of sharing and rapid image display, JPEG is a more practical choice.

Q 21. How do you perform objective image quality assessment?

Objective image quality assessment relies on quantifiable metrics rather than subjective opinions. Several standards and methodologies exist, such as ISO 12233 for resolution and spatial frequency response (SFR) and ISO 15739 for noise measurement. The specific metrics used depend on the application and the aspects of image quality being assessed.

Common metrics include:

- Sharpness/Resolution: Measured using techniques such as slanted-edge analysis, from which the Modulation Transfer Function (MTF) is derived.

- Noise Level: Quantified by measuring the standard deviation of pixel values in uniform areas of an image.

- Dynamic Range: Calculated as the ratio between the maximum and minimum measurable light intensities.

- Color Accuracy: Assessed by comparing the measured color values to reference values.

- Distortion: Measured as the deviation from geometrical linearity.

These metrics are obtained using specialized software and hardware that analyze the captured images objectively. The results provide valuable data for comparing different camera systems, optimizing image processing algorithms, and identifying potential quality issues.

Q 22. How do you evaluate the performance of a camera’s white balance algorithm?

Evaluating a camera’s white balance (WB) algorithm involves assessing its ability to accurately render colors under various lighting conditions. A perfectly balanced image will appear neutral, with whites appearing white and colors appearing true to life. We typically use a series of controlled tests to measure this.

- Controlled Lighting Environments: We test the camera under different light sources like incandescent, fluorescent, daylight, and mixed lighting. Each source has a distinct color temperature, and the WB algorithm needs to compensate appropriately.

- Color Charts: Using standardized color charts, like the X-Rite ColorChecker, we capture images and then analyze the color values in post-processing using specialized software. We compare the captured values to the known values of the chart. Deviations on the neutral (gray) patches point directly to white balance error, while deviations on colored patches can also reflect the camera’s color correction rather than white balance alone.

- Quantitative Metrics: We use metrics like Delta E (ΔE) to quantitatively measure color differences, paying particular attention to the neutral patches when judging white balance. A lower ΔE indicates better color accuracy. As a rough guide, a ΔE below about 1 is generally considered imperceptible, while values above about 3 are typically noticeable. The exact thresholds depend on the ΔE formula used (e.g., CIE76 or CIEDE2000).

- Subjective Assessment: While quantitative metrics are essential, human visual perception also plays a role. A panel of trained observers can provide qualitative feedback to complement the objective measurements.
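The ΔE comparison can be illustrated with the simplest formula, CIE76, which is the Euclidean distance between two colors in CIELAB space. This is a sketch: the Lab values below are made-up illustrative numbers, not chart reference data, and many test tools prefer the more perceptually uniform CIEDE2000 formula, which is considerably longer. Thresholds such as 1 and 3 should be read as rough guides whose exact meaning depends on the formula used.

```python
import math


def delta_e_76(lab_measured, lab_reference):
    """CIE76 color difference: Euclidean distance in CIELAB (L*, a*, b*)."""
    return math.dist(lab_measured, lab_reference)


def mean_delta_e(measured, reference):
    """Average CIE76 Delta E over paired chart patches."""
    if len(measured) != len(reference) or not measured:
        raise ValueError("need equal, non-empty lists of patches")
    return sum(delta_e_76(m, r) for m, r in zip(measured, reference)) / len(measured)


# Hypothetical neutral patch: the reference is perfect gray, the capture has a slight cast.
print(delta_e_76((50.0, 3.0, -4.0), (50.0, 0.0, 0.0)))  # 5.0
```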

For example, if a camera consistently produces images with a blue tint under incandescent lighting, the algorithm is overcorrecting for the warm light source, which indicates a problem with its white balance in that specific lighting condition. The debugging process would then focus on adjusting the algorithm’s parameters for incandescent lighting.
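A cast like the one in this example can be flagged automatically from a neutral (gray) patch: in a well-balanced image, its red, green, and blue averages should be roughly equal. The sketch below assumes linear RGB averages taken from the gray patch and uses green as the reference channel, a common convention when computing white balance gains. The 5% tolerance and the function names are hypothetical choices for illustration.

```python
def detect_cast(mean_rgb, tolerance=0.05):
    """Classify the cast of a neutral patch from its mean linear RGB.

    Returns "blue", "warm", or "neutral" based on the B/G and R/G ratios.
    """
    r, g, b = mean_rgb
    r_g, b_g = r / g, b / g
    if b_g > 1 + tolerance and b_g > r_g:
        return "blue"
    if r_g > 1 + tolerance and r_g > b_g:
        return "warm"
    return "neutral"


def correction_gains(mean_rgb):
    """Per-channel gains that would map the patch back to neutral (green as reference)."""
    r, g, b = mean_rgb
    return g / r, 1.0, g / b


# Hypothetical gray-patch averages from a capture under incandescent light.
print(detect_cast((100, 110, 130)))     # blue
print(correction_gains((50, 100, 200)))  # (2.0, 1.0, 0.5)
```

A "blue" result under a warm source suggests the blue channel gain is too high, which is the overcorrection described above.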

Q 23. Explain your experience with different camera testing standards and specifications.

My experience encompasses various camera testing standards and specifications, including those from organizations like the ISO (International Organization for Standardization) and JEITA (Japan Electronics and Information Technology Industries Association). I’m familiar with standards relating to image quality metrics, such as resolution, dynamic range, signal-to-noise ratio (SNR), and color accuracy. I’ve also worked extensively with standards for environmental testing, including temperature, humidity, and vibration resistance.

For instance, I’ve used ISO 12233 for resolution measurement and its related methods for assessing sharpness. The JEITA standards are often used for evaluating image sensor performance. In practice, choosing the right standard depends on the camera’s intended application and the client’s specific requirements. For a high-end professional camera, we would likely use more stringent standards than for a simple consumer device. Often, a combination of standards and custom tests is necessary for a complete evaluation.
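The edge-based sharpness measurement can be sketched in one dimension: differentiate the edge profile (edge spread function) to get the line spread function, take the magnitude of its Fourier transform, and normalize so the MTF is 1 at zero frequency. MTF50, the frequency where contrast falls to 50%, is a common single-number summary. This is a heavily simplified illustration, not an implementation of ISO 12233: the standardized slanted-edge method also estimates the edge angle and builds an oversampled edge profile, among other steps. The function names are hypothetical and the profile values are synthetic.

```python
import cmath
import math


def esf_to_lsf(esf):
    """Differentiate an edge spread function (1-D profile across an edge)."""
    return [esf[i + 1] - esf[i] for i in range(len(esf) - 1)]


def mtf_from_lsf(lsf):
    """DFT magnitude of the LSF, normalized so MTF(0) = 1.

    Returns (frequency in cycles/pixel, MTF) pairs from 0 up to Nyquist.
    """
    n = len(lsf)
    mags = []
    for k in range(n // 2 + 1):
        s = sum(lsf[x] * cmath.exp(-2j * math.pi * k * x / n) for x in range(n))
        mags.append(abs(s))
    if mags[0] == 0:
        raise ValueError("flat profile: no edge found")
    return [(k / n, m / mags[0]) for k, m in enumerate(mags)]


def mtf50(curve):
    """Frequency where MTF first drops below 0.5 (linear interpolation), or None."""
    for (f0, m0), (f1, m1) in zip(curve, curve[1:]):
        if m0 >= 0.5 > m1:
            return f0 + (m0 - 0.5) * (f1 - f0) / (m0 - m1)
    return None


# Synthetic, slightly blurred edge profile.
esf = [0, 0, 0, 0, 0.25, 0.75, 1, 1, 1, 1]
curve = mtf_from_lsf(esf_to_lsf(esf))
print(round(mtf50(curve), 3))  # 0.251 cycles/pixel
```

A perfectly sharp step edge gives an MTF of 1 at every frequency (so no MTF50 is found), while blur pulls MTF50 toward lower frequencies.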

Q 24. How do you manage and track defects found during camera testing?

Defect tracking and management are crucial aspects of camera testing. We typically use a defect tracking system, often integrated with our project management software. This system allows us to systematically log, categorize, and track each identified defect throughout its lifecycle.

- Detailed Description: Each defect report includes a detailed description of the issue, steps to reproduce it, the severity level (critical, major, minor), and the affected camera model or firmware version.

- Categorization: We categorize defects based on their nature (e.g., hardware, software, image quality) to streamline analysis and prioritization. This allows us to quickly identify trends or patterns in defects.

- Assignment and Tracking: The system allows for assigning defects to specific engineers or teams, tracking their progress, and recording any actions taken to resolve the issue. Status updates are regularly recorded, and notifications are sent to relevant stakeholders.

- Root Cause Analysis: Once a defect is resolved, a root cause analysis is performed to prevent similar issues in the future. We often hold meetings to discuss recurring defects and improve our testing processes.

Using a well-defined system ensures that no defect is overlooked, and we maintain a clear understanding of the project’s health throughout the testing phase.
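The defect lifecycle described above (log, categorize, assign, resolve, close) can be modeled as a small data structure with explicit status transitions. This is a hypothetical sketch, not the schema of any particular tracking tool: the field names, the four statuses, and the allowed transitions are assumptions chosen to mirror the fields listed in the answer. Counting defects by category is one simple way to surface the trends mentioned under Categorization.

```python
from collections import Counter
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Severity(Enum):
    CRITICAL = "critical"
    MAJOR = "major"
    MINOR = "minor"


class Status(Enum):
    OPEN = "open"
    ASSIGNED = "assigned"
    RESOLVED = "resolved"
    CLOSED = "closed"


# Hypothetical workflow: a resolved defect can be reopened if verification fails.
ALLOWED = {
    Status.OPEN: {Status.ASSIGNED},
    Status.ASSIGNED: {Status.RESOLVED, Status.OPEN},
    Status.RESOLVED: {Status.CLOSED, Status.OPEN},
    Status.CLOSED: set(),
}


@dataclass
class Defect:
    title: str
    steps_to_reproduce: List[str]
    severity: Severity
    category: str  # e.g. "hardware", "software", "image quality"
    camera_model: str
    firmware_version: str
    status: Status = Status.OPEN
    assignee: Optional[str] = None
    history: List[str] = field(default_factory=list)

    def transition(self, new_status: Status) -> None:
        """Move to new_status if the workflow allows it, recording the change."""
        if new_status not in ALLOWED[self.status]:
            raise ValueError(f"{self.status.value} -> {new_status.value} not allowed")
        self.history.append(f"{self.status.value} -> {new_status.value}")
        self.status = new_status

    def assign(self, engineer: str) -> None:
        """Assign the defect to an engineer (moves it to ASSIGNED)."""
        self.transition(Status.ASSIGNED)
        self.assignee = engineer


def count_by_category(defects: List[Defect]) -> Counter:
    """Tally defects per category to spot recurring problem areas."""
    return Counter(d.category for d in defects)
```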

Q 25. How would you debug a camera system that is exhibiting unexpected behavior?

Debugging a camera system with unexpected behavior is a systematic process. It involves a combination of observation, experimentation, and analysis. The first step is to thoroughly document the issue, including specific steps to reproduce the behavior. We follow a structured approach:

- Isolate the Problem: Begin by identifying the component or system responsible for the unexpected behavior. Is it related to the image sensor, the image processing pipeline, the firmware, or the hardware interface? Try to narrow down the source of the problem systematically.

- Utilize Diagnostic Tools: Employ a variety of diagnostic tools, including logic analyzers, oscilloscopes, and specialized camera testing software. These tools provide insights into signal integrity, timing, and data flow within the camera system.

- Analyze Logs and Data: Examine the camera’s internal logs and captured image data for clues. Unusual values, error messages, or patterns in the data can point to the root cause of the issue.

- Reproduce the Behavior: We need repeatable steps to observe and measure the behavior, so that the problem is well understood and can be recreated consistently while debugging. A reproducible test scenario is critical.

- Code Review and Testing: If the problem involves software, a thorough code review is necessary, and unit and integration tests can help pinpoint problematic code segments. A firmware update may also be required.

- Iterative Approach: Debugging is iterative. We may make several attempts at fixing the issue, testing and refining the solution before it is fully resolved.

For instance, if the camera is consistently producing blurry images, we would systematically check the focus mechanism, lens quality, image sensor integrity, and image processing parameters. Using a combination of these techniques helps to effectively find and resolve the root cause.
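For the blurry-image case, one quick check is a focus metric computed on captures of the same static, textured scene: the variance of the Laplacian rises with high-frequency detail, so a sharp frame scores higher than a defocused one. This is a common heuristic rather than a method from the text. The function name is hypothetical, the input is assumed to be a grayscale image given as a list of rows (at least 3x3), and any pass/fail threshold would need to be calibrated for the specific camera and scene.

```python
import statistics


def laplacian_variance(img):
    """Variance of the 4-neighbour Laplacian over interior pixels.

    img: 2-D list of grayscale values (rows of equal length, at least 3x3).
    Higher values mean more high-frequency detail, i.e. a sharper image.
    """
    h, w = len(img), len(img[0])
    if h < 3 or w < 3:
        raise ValueError("image must be at least 3x3")
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            lap = (img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1]
                   - 4 * img[y][x])
            responses.append(lap)
    return statistics.pvariance(responses)


# Synthetic examples: a fine checkerboard (sharp) vs. a smooth gradient (no detail).
sharp = [[255 * ((x + y) % 2) for x in range(5)] for y in range(5)]
smooth = [[10 * x for x in range(5)] for y in range(5)]
print(laplacian_variance(sharp) > laplacian_variance(smooth))  # True
```

Comparing this score across focus positions, or across firmware builds on the same scene, helps separate a focus-mechanism fault from a processing issue such as over-aggressive noise reduction.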

Q 26. Describe your experience working with different types of imaging sensors.

My experience encompasses a wide range of imaging sensors, including:

- CMOS (Complementary Metal-Oxide-Semiconductor): This is the most prevalent type of sensor today. I’ve worked with various CMOS sensors, differing in resolution, size, and features. I’ve worked on projects involving high-resolution sensors for professional cameras and smaller, low-power sensors for mobile devices. Understanding the specific characteristics of CMOS sensors, such as their noise profile, dynamic range, and sensitivity, is crucial for accurate testing.

- CCD (Charge-Coupled Device): Though less common now, I have experience testing CCD sensors. Historically, CCD sensors offered lower noise and better uniformity than CMOS sensors at the expense of higher power consumption, although modern CMOS sensors have largely closed that gap. The evaluation of CCD sensors covers similar aspects to CMOS sensors, with a specific focus on charge transfer efficiency and blooming artifacts.

- Other Sensor Technologies: I’ve also been involved in projects dealing with specialized sensors such as time-of-flight (ToF) sensors used for depth sensing and infrared (IR) sensors used in thermal imaging. Each sensor type requires specialized testing techniques tailored to its unique capabilities.

Understanding the nuances of different sensor types is vital for effective camera testing, allowing for appropriate test planning, result interpretation, and identification of specific sensor-related defects.
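Charge transfer efficiency (CTE) matters for CCDs because each charge packet is shifted many times on its way to the output amplifier, and the losses compound: the fraction retained after N transfers is CTE^N. The sketch below is a worked example under simplifying assumptions (a single output amplifier, one transfer per row and per column, ignoring multi-phase clocking details). The function names are hypothetical, and the sensor dimensions and CTE value are illustrative rather than taken from a specific device.

```python
def retained_fraction(cte, transfers):
    """Fraction of a charge packet left after `transfers` shifts at efficiency `cte`."""
    return cte ** transfers


def worst_case_transfers(rows, cols):
    """Approximate shifts for the pixel farthest from a single output amplifier:
    every row vertically, then every column horizontally."""
    return rows + cols


n = worst_case_transfers(1000, 1000)             # 2000 transfers
print(round(retained_fraction(0.99999, n), 4))  # 0.9802
```

Even a per-transfer loss of only 0.001% removes about 2% of the signal from the farthest pixel in this example, which is why CTE is measured explicitly when testing CCDs.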

Q 27. What are your thoughts on the future of camera technology and testing?

The future of camera technology and testing is incredibly exciting. Several key trends are shaping the field:

- Higher Resolution and Dynamic Range: We can expect to see even higher resolution sensors with significantly improved dynamic range, capturing more detail in both highlights and shadows. This will demand more sophisticated testing techniques and more rigorous image quality assessment.

- Artificial Intelligence (AI) Integration: AI is rapidly transforming camera systems. Features like automatic scene detection, object recognition, and computational photography are becoming increasingly common. Testing will need to evaluate the accuracy and performance of these AI-powered features.

- Computational Photography: Advanced image processing algorithms will play a critical role in enhancing image quality, potentially overcoming the limitations of the sensor itself. Testing will need to focus on evaluating the effectiveness and artifacts of these processing techniques.

- New Sensor Technologies: We are likely to see the emergence of novel sensor technologies, such as event-based cameras and quantum imaging sensors. This will require development of new testing methodologies and standards.

- Automated Testing: Increased automation in testing is crucial given the complexity of modern cameras. This will involve the development and implementation of automated testing frameworks to improve efficiency and scalability.

As a camera testing professional, I am excited about these advancements. It is a time of great innovation, where creativity and technical skills combine to push the boundaries of image capture. Testing will increasingly measure the quality of the overall user experience rather than relying solely on traditional metrics.

Key Topics to Learn for Camera Testing and Evaluation Interview

- Image Quality Assessment: Understanding metrics like resolution, dynamic range, signal-to-noise ratio (SNR), and color accuracy. Practical application: Analyzing test images to identify defects and optimize camera settings.

- Optical Performance Testing: Evaluating lens distortion, sharpness, vignetting, and chromatic aberration. Practical application: Using specialized software and equipment to measure optical characteristics and generate reports.

- Hardware and Software Integration: Understanding the interaction between camera hardware (sensor, lens, processor) and software (firmware, image processing algorithms). Practical application: Troubleshooting issues related to image capture, processing, and data transfer.

- Sensor Performance: Analyzing sensor characteristics like sensitivity, linearity, and noise performance under varying lighting conditions. Practical application: Comparing the performance of different image sensors using standardized test procedures.

- Autofocus and Autoexposure Systems: Evaluating the speed, accuracy, and reliability of autofocus and autoexposure mechanisms. Practical application: Developing test methodologies to assess the performance of these systems in different scenarios.

- Video Performance Testing: Assessing video quality parameters like frame rate, bitrate, compression artifacts, and dynamic range. Practical application: Analyzing video recordings to identify issues and optimize video settings.

- Low-Light Performance: Evaluating image quality and noise levels in low-light conditions. Practical application: Developing strategies to enhance low-light image quality through software and hardware adjustments.

- Image Processing Algorithms: Understanding the principles and implementation of image processing algorithms used for noise reduction, sharpening, and color correction. Practical application: Evaluating the effectiveness of different algorithms on image quality.

- Testing Methodologies and Standards: Familiarity with industry standards and best practices for camera testing and evaluation. Practical application: Designing and executing comprehensive test plans to ensure accurate and reliable results.

Next Steps

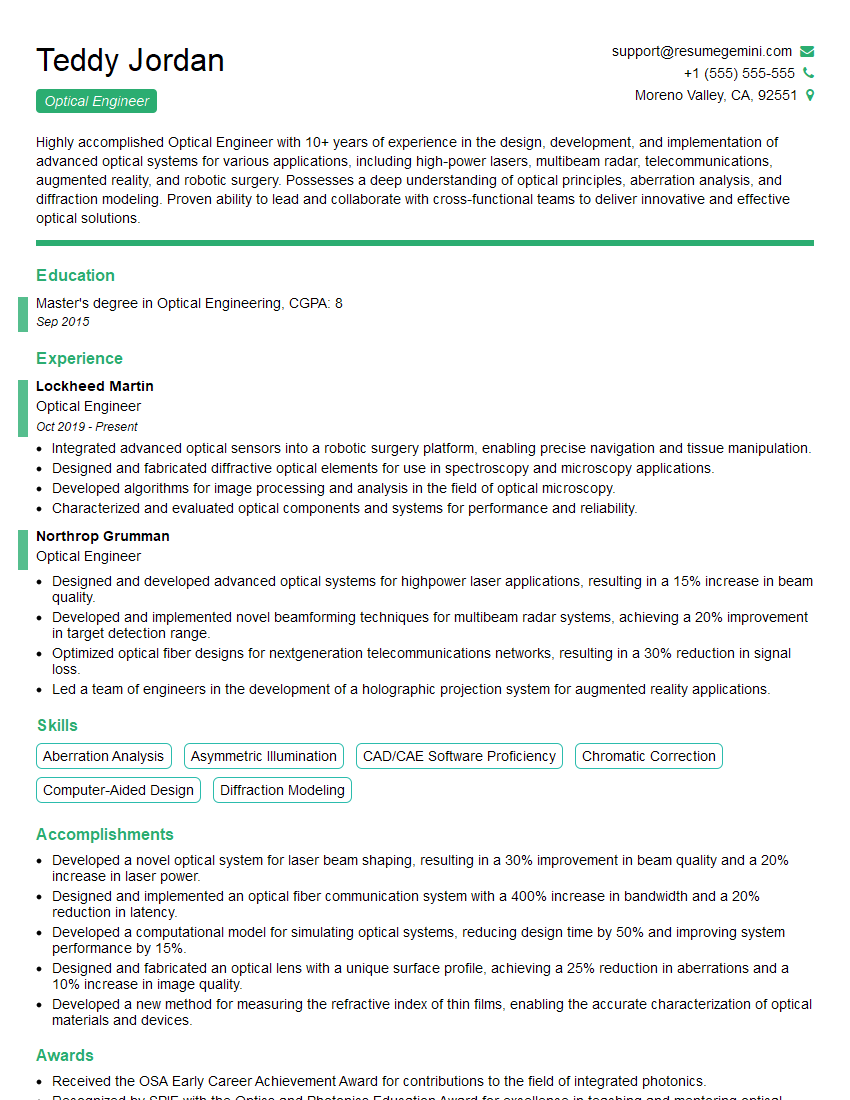

Mastering Camera Testing and Evaluation opens doors to exciting career opportunities in imaging technology, offering diverse roles and continuous learning. To significantly increase your job prospects, creating a strong, ATS-friendly resume is crucial. ResumeGemini is a trusted resource to help you build a professional resume that highlights your skills and experience effectively. Examples of resumes tailored to Camera Testing and Evaluation are available to guide you through the process.
