The thought of an interview can be nerve-wracking, but the right preparation can make all the difference. Explore this comprehensive guide to cuDNN interview questions and gain the confidence you need to showcase your abilities and secure the role.

Questions Asked in cuDNN Interview

Q 1. Explain the architecture of cuDNN.

cuDNN (CUDA Deep Neural Network library) isn't a monolithic architecture. It is a collection of highly optimized routines for deep learning primitives. Think of it as a toolbox filled with pre-built, highly efficient functions that run on NVIDIA GPUs. The classic API is organized around individual operations (convolution, pooling, activation, normalization, softmax, RNNs, and so on). You describe each operation with descriptor objects (tensor, filter, and convolution descriptors) and execute it through a library handle. A framework such as PyTorch or TensorFlow then composes these calls into a complete network. Since version 8, cuDNN also offers a graph API in which several operations can be described together so the library can fuse them. Underneath, each operation is backed by many tuned CUDA kernels and careful memory handling, and these implementation details are hidden from the user, who can focus on the high-level aspects of the model.

Q 2. What are the key advantages of using cuDNN over writing custom CUDA kernels?

Writing custom CUDA kernels for deep learning operations is extremely challenging, requiring deep expertise in GPU architecture, memory management, and parallel programming. cuDNN provides several significant advantages:

- Performance: cuDNN kernels are meticulously optimized for various GPU architectures, resulting in significantly faster execution compared to hand-written kernels unless you possess exceptional expertise and dedicate significant time to optimization.

- Ease of Use: cuDNN provides a simple and intuitive API, drastically reducing development time and complexity. You can focus on building your neural network, not low-level optimizations.

- Portability: cuDNN abstracts away the underlying hardware specifics, making your code portable across different NVIDIA GPUs without extensive modifications.

- Regular Updates: cuDNN is updated regularly, adding support for new GPU architectures and deep learning operations. Upgrading often brings performance improvements without changes to your code.

- Error Handling and Stability: cuDNN includes robust error handling and rigorous testing, improving the stability and reliability of your application compared to potentially buggy custom kernels.

Imagine building a house: You could hand-craft every brick, but cuDNN provides pre-fabricated, high-quality walls and other components, allowing you to build faster and more reliably.

Q 3. Describe the different layers supported by cuDNN.

cuDNN supports a wide range of layers crucial for building various neural network architectures. Key examples include:

- Convolutional Layers: The backbone of convolutional neural networks (CNNs), performing feature extraction from images or other data.

- Pooling Layers: Reduce the dimensionality of feature maps, improving computational efficiency and robustness to small variations in input.

- Activation Layers: Introduce non-linearity into the network, allowing it to learn complex patterns. Examples include ReLU, sigmoid, and tanh.

- Normalization Layers: Stabilize training and improve generalization performance. Examples include Batch Normalization and Layer Normalization.

- RNN Layers: Support for recurrent neural networks (RNNs) including LSTMs and GRUs.

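- Fully Connected Layers: Perform matrix multiplications to combine features from previous layers. In the classic API there is no dedicated fully connected routine; frameworks usually call cuBLAS GEMM for these layers, while the newer graph API exposes matrix multiplication as an operation.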

- Softmax Layer: Produces a probability distribution over the output classes.

The specific layers and their functionalities are constantly expanding with each cuDNN release, aligning with the advancements in deep learning research.
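To make the activation and softmax entries concrete, here is a minimal host-side C++ sketch of what those primitives compute. It is a reference for checking results, not how cuDNN implements them, and the function names are my own. The max-subtraction step in softmax is, to my understanding, the same idea behind the CUDNN_SOFTMAX_ACCURATE mode of cudnnSoftmaxForward.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Host-side reference versions of a few primitives, useful for spot-checking
// GPU results. These are illustrative, not cuDNN's kernels.
inline float relu(float x) { return x > 0.0f ? x : 0.0f; }
inline float sigmoid(float x) { return 1.0f / (1.0f + std::exp(-x)); }
// tanh is available directly as std::tanh.

// Numerically stable softmax over one vector: subtracting the maximum before
// exponentiating avoids overflow and does not change the result.
std::vector<float> softmax(const std::vector<float>& logits) {
    assert(!logits.empty());
    const float max_logit = *std::max_element(logits.begin(), logits.end());
    std::vector<float> out(logits.size());
    float sum = 0.0f;
    for (std::size_t i = 0; i < logits.size(); ++i) {
        out[i] = std::exp(logits[i] - max_logit);
        sum += out[i];
    }
    for (float& v : out) v /= sum;
    return out;
}
```

For example, softmax({1000.0f, 1000.0f}) returns {0.5f, 0.5f}, whereas evaluating exp(1000) directly would overflow to infinity in single precision.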

Q 4. How does cuDNN handle different data types and precisions?

cuDNN is designed to handle a variety of data types and precisions, offering flexibility to balance performance and accuracy. Commonly supported types include:

- float (single-precision): Offers a good balance between speed and accuracy, widely used in most deep learning applications.

- half (FP16): Offers faster computation and reduced memory bandwidth compared to float, enabling training of larger models, but might compromise precision slightly.

- double (double-precision): Provides higher accuracy than float, but significantly slower; typically used in situations where numerical stability is paramount.

- int8: Used for quantization, trading some accuracy for significant improvements in speed and memory efficiency.

The choice of data type depends on the specific application. For example, a common pattern is mixed-precision training, where most convolutions run in FP16 while weights and accumulations are kept in FP32, followed by inference in FP16 or INT8 once accuracy has been validated against an FP32 baseline.
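INT8 relies on mapping floating-point values onto a small integer range with a scale factor. The sketch below shows symmetric, per-tensor quantization in plain C++. It is an illustration under simple assumptions (one scale per tensor, range set by the maximum absolute value), not the calibration scheme cuDNN or TensorRT uses, and the names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Symmetric per-tensor INT8 quantization: real_value ~= scale * int8_value.
struct Quantized {
    std::vector<std::int8_t> values;
    float scale;
};

Quantized quantize_int8(const std::vector<float>& x) {
    assert(!x.empty());
    float max_abs = 0.0f;
    for (float v : x) max_abs = std::max(max_abs, std::fabs(v));
    // Map [-max_abs, max_abs] onto [-127, 127]; guard against an all-zero tensor.
    const float scale = max_abs > 0.0f ? max_abs / 127.0f : 1.0f;
    Quantized q{{}, scale};
    q.values.reserve(x.size());
    for (float v : x) {
        const float r = std::round(v / scale);
        const float clamped = std::min(std::max(r, -127.0f), 127.0f);
        q.values.push_back(static_cast<std::int8_t>(clamped));
    }
    return q;
}

// Recover an approximate real value from element i.
float dequantize(const Quantized& q, std::size_t i) {
    return q.scale * static_cast<float>(q.values.at(i));
}
```

With the input {-1.0f, 0.25f, 1.0f}, the scale is 1/127 and 0.25 maps to 32, which dequantizes to about 0.252. That rounding error is the accuracy cost that INT8 trades for speed and memory.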

Q 5. Explain the concept of cuDNN workspace and its importance.

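The cuDNN workspace is a region of GPU memory that some algorithms need for intermediate results while computing a layer, for example a lowered (im2col) matrix or transformed FFT or Winograd tiles. cuDNN does not allocate it: you query the required size for the chosen algorithm (for a forward convolution, with cudnnGetConvolutionForwardWorkspaceSize), allocate that much device memory yourself, and pass the pointer and size into the call. Think of it as a scratchpad you lend to the library. It's crucial because:

- Improved Performance: Scratch space lets an algorithm stage intermediate results instead of recomputing them or falling back to a slower path.

- Memory Management: Because you own the buffer, you can allocate one workspace sized for the largest requirement and reuse it across layers instead of allocating on every call.

- Algorithmic Optimization: The workspace enables cuDNN to employ advanced algorithms that might require temporary storage.

The size you pass must be at least what the selected algorithm requires; if it is smaller, the call returns an error. Beyond that, the real trade-off is the workspace limit you are willing to allow: a larger limit makes more (and often faster) algorithms eligible, while a tight limit saves memory but may force slower ones. Profiling tells you where that balance lies for your model.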
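To see why some algorithms need a workspace, consider the GEMM-based approach to convolution, which first copies input patches into a matrix (often called im2col) and then runs one matrix multiply. The sketch below estimates that scratch buffer for one image. The struct and function names are hypothetical, and real cuDNN algorithms report their actual requirements through cudnnGetConvolutionForwardWorkspaceSize, which may differ considerably from this estimate.

```cpp
#include <cassert>
#include <cstddef>

// Back-of-the-envelope scratch size for an explicit im2col + GEMM convolution
// of ONE image. Only meant to show why scratch memory is needed.
struct ConvShape {
    std::size_t c, h, w;      // input channels, height, width
    std::size_t r, s;         // filter height, width
    std::size_t pad, stride;  // symmetric padding and stride (same for H and W)
};

std::size_t out_dim(std::size_t in, std::size_t k, std::size_t pad, std::size_t stride) {
    assert(stride > 0 && in + 2 * pad >= k);
    return (in + 2 * pad - k) / stride + 1;
}

// The im2col buffer has one row per (channel, filter tap) pair and one column
// per output pixel.
std::size_t im2col_workspace_bytes(const ConvShape& shape, std::size_t elem_size) {
    const std::size_t p = out_dim(shape.h, shape.r, shape.pad, shape.stride);
    const std::size_t q = out_dim(shape.w, shape.s, shape.pad, shape.stride);
    return shape.c * shape.r * shape.s * p * q * elem_size;
}
```

For a 64-channel 56x56 input with a 3x3 filter, padding 1 and stride 1, im2col_workspace_bytes({64, 56, 56, 3, 3, 1, 1}, 4) gives 7,225,344 bytes (about 7 MB) per image in FP32, nine times the size of the input itself. Algorithms that avoid materializing this matrix (implicit GEMM, for instance) need far less scratch space, which is one reason the workspace limit shapes algorithm choice.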

Q 6. How do you choose the optimal cuDNN configuration for a given task?

Choosing the optimal cuDNN configuration is critical for maximizing performance. It involves several considerations:

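- Algorithm Selection: cuDNN provides multiple algorithms (e.g., different convolution algorithms) for each layer, and it can help you choose: cudnnGetConvolutionForwardAlgorithm_v7 returns heuristic suggestions, while cudnnFindConvolutionForwardAlgorithm times the candidates on your actual problem. Either way, verify which algorithm performs best for your specific hardware, data, and network architecture.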

- Data Type: Select the appropriate data type (FP32, FP16, INT8) based on the trade-off between accuracy and performance.

- Workspace Size: Decide how much scratch memory you can afford. A larger workspace limit makes more algorithms eligible, some of them faster, at the cost of memory that could otherwise hold activations or a larger batch.

- Tensor Format: Choose the optimal tensor layout (e.g., NCHW, NHWC) to optimize memory access patterns.

- Batch Size: Experimenting with different batch sizes can significantly impact performance. Larger batches might be faster but require more memory.

Systematic experimentation and profiling are essential to finding the best settings for a given task. Start with default settings and then systematically vary parameters to observe the impact on performance.
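cuDNN's Find functions, such as cudnnFindConvolutionForwardAlgorithm, time the candidate algorithms and return one result per algorithm with its status, run time, and workspace requirement. Given such results, picking a configuration under a memory budget is a simple filter. The sketch below uses a hypothetical AlgoResult struct in place of cudnnConvolutionFwdAlgoPerf_t so that it compiles without cuDNN.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for one entry of cuDNN's Find results
// (cudnnConvolutionFwdAlgoPerf_t carries algo, status, time and memory).
struct AlgoResult {
    std::string name;    // illustrative label, e.g. "WINOGRAD"
    bool ok;             // stands in for status == CUDNN_STATUS_SUCCESS
    float time_ms;       // measured run time
    std::size_t memory;  // workspace bytes the algorithm needs
};

// Return the fastest successful algorithm whose workspace fits the limit,
// or nullptr if none does. The returned pointer refers into `results`.
const AlgoResult* pick_algorithm(const std::vector<AlgoResult>& results,
                                 std::size_t workspace_limit) {
    const AlgoResult* best = nullptr;
    for (const AlgoResult& r : results) {
        if (!r.ok || r.memory > workspace_limit) continue;
        if (best == nullptr || r.time_ms < best->time_ms) best = &r;
    }
    return best;
}
```

With results {GEMM: 2.0 ms, 1,000 bytes}, {WINOGRAD: 1.0 ms, 5,000 bytes} and a failed FFT entry, a 10,000-byte limit selects WINOGRAD, a 2,000-byte limit falls back to GEMM, and a 500-byte limit returns nullptr, leaving the caller to raise the limit or handle the failure.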

Q 7. Describe the process of benchmarking and profiling cuDNN performance.

Benchmarking and profiling cuDNN performance are crucial for optimizing your application. The process typically involves:

- Setting up a benchmark: Create a representative workload that reflects the typical operations in your application. This might involve running a forward and backward pass through your network on a subset of your data.

- Measuring execution time: Use high-resolution timers (like CUDA events) to accurately measure the time taken by different parts of your code, focusing on the cuDNN calls.

- Profiling tools: Employ NVIDIA Nsight Systems or similar profiling tools to analyze the execution of your code, identify bottlenecks, and visualize memory usage. This provides detailed insight into kernel execution times, memory transfers, and other performance metrics.

- Iterative optimization: Use the profiling results to guide your optimization efforts. Experiment with different cuDNN configurations (algorithms, data types, workspace size, etc.) to identify the optimal settings.

Remember to measure performance on representative data and hardware configurations relevant to your deployment environment. A well-designed benchmark and systematic profiling are key to unlocking the full potential of cuDNN.
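A timing harness should discard warm-up runs (the first calls may include one-off setup) and report a robust statistic such as the median. The sketch below does this on the host with std::chrono; the function names are illustrative. For GPU work, remember that cuDNN calls return before the kernels finish: either make the timed function synchronize (for example with cudaDeviceSynchronize) or time on the device with cudaEventRecord and cudaEventElapsedTime.

```cpp
#include <algorithm>
#include <cassert>
#include <chrono>
#include <cstddef>
#include <functional>
#include <vector>

// Median is robust to occasional outliers (first-call setup, clock jitter).
double median(std::vector<double> samples) {
    assert(!samples.empty());
    std::sort(samples.begin(), samples.end());
    const std::size_t mid = samples.size() / 2;
    return samples.size() % 2 ? samples[mid] : 0.5 * (samples[mid - 1] + samples[mid]);
}

// Run `work` `warmup` times untimed, then time `iters` runs and return the
// median in milliseconds. For GPU work, `work` must block until the device is
// done, otherwise this only measures the asynchronous launch.
double benchmark_ms(const std::function<void()>& work, int warmup, int iters) {
    assert(iters > 0);
    for (int i = 0; i < warmup; ++i) work();
    std::vector<double> samples;
    samples.reserve(static_cast<std::size_t>(iters));
    for (int i = 0; i < iters; ++i) {
        const auto t0 = std::chrono::steady_clock::now();
        work();
        const auto t1 = std::chrono::steady_clock::now();
        samples.push_back(std::chrono::duration<double, std::milli>(t1 - t0).count());
    }
    return median(samples);
}
```

For example, benchmark_ms(run_layer, 3, 20) would call a (hypothetical) run_layer function 23 times and return the median of the last 20 timings.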

Q 8. How can you optimize cuDNN performance for specific hardware?

Optimizing cuDNN performance for specific hardware involves a multi-faceted approach focusing on matching algorithm choices, data types, and tensor layouts to your GPU’s capabilities. Think of it like choosing the right tool for the job – a sledgehammer isn’t ideal for delicate work.

Algorithm Selection: cuDNN offers various algorithms for each operation (e.g., convolution). Use cuDNN's Find functions (such as cudnnFindConvolutionForwardAlgorithm), which time each candidate, or your own benchmarks to find the fastest one for your specific hardware and problem size. For example, a newer-generation GPU might favor a different algorithm than an older one.

Data Types: Using lower-precision data types like FP16 (half-precision floating point) or INT8 can significantly speed up computation, especially on GPUs with specialized hardware support. However, this comes with potential accuracy trade-offs; careful evaluation is needed.
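On GPUs with Tensor Cores (the specialized hardware mentioned above), lower-precision kernels also tend to impose shape constraints. NVIDIA's performance guidance has, for example, recommended channel counts that are multiples of 8 for FP16 convolutions; exact requirements vary with the GPU and cuDNN version, and newer releases relax some of them. The hypothetical helpers below compute a padded channel count and the extra work that padding introduces.

```cpp
#include <cassert>
#include <cstddef>

// Round a channel count up to the next multiple of `m` (e.g. 8 for FP16).
std::size_t pad_to_multiple(std::size_t n, std::size_t m) {
    assert(m > 0);
    return (n + m - 1) / m * m;
}

// Fraction of extra (zero-filled) channels the padding introduces.
double padding_overhead(std::size_t n, std::size_t m) {
    assert(n > 0);
    return static_cast<double>(pad_to_multiple(n, m) - n) / static_cast<double>(n);
}
```

An RGB input (C = 3) padded to 8 channels has an overhead of about 1.67, so the first layer reads more than twice as much data; whether that pays off is again a question for the benchmark.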

Tensor Formats: cuDNN supports different tensor formats (e.g., NCHW, NHWC), and the right one affects memory access patterns and performance. NCHW has long been cuDNN's default, but on GPUs with Tensor Cores, NHWC is generally the faster choice for FP16 and mixed-precision convolutions; NHWC is also common on CPUs. Benchmarking on your specific hardware is essential to determine the optimal format.

Workspace Size: Some cuDNN algorithms require a workspace for intermediate results. Allowing a larger workspace can make faster algorithms eligible, but memory spent on workspace is unavailable for activations or larger batches. Experimentation is key here.

Batch Size Optimization: The choice of batch size significantly affects the throughput. Finding the sweet spot depends on memory bandwidth and compute capabilities of your hardware.

In a real-world scenario, such as training a large deep learning model, systematically testing algorithm choices, data types, and tensor formats can cut training time substantially; how much depends on how far the starting configuration was from the best one.

Q 9. What are the common performance bottlenecks when using cuDNN?

Common performance bottlenecks in cuDNN often stem from memory limitations, inefficient data transfers, or suboptimal algorithm selection. Let’s illustrate them with examples.

Memory Bandwidth Bottleneck: If a layer moves a lot of data relative to the arithmetic it performs (element-wise activations, normalization, or small convolutions), its speed is limited by GPU memory bandwidth rather than compute. Imagine trying to build a house with only a tiny wheelbarrow; you'll be significantly slowed down.

Compute Bottleneck: The layer is limited by arithmetic throughput. Sometimes that is simply because the work is large, but it can also mean the chosen algorithm is a poor fit for your GPU, for example an FP32 path on hardware whose Tensor Cores would run FP16 much faster.

Insufficient Memory: Running out of GPU memory normally makes allocations fail with an out-of-memory error. Only with unified (managed) memory does oversubscription cause pages to migrate to and from system memory, which is much slower. Either way, you are pushed toward smaller batches or less memory-hungry algorithms. This is like trying to work on a complex project with insufficient desk space; you'll be constantly moving things around.

Data Transfer Overhead: Copying data between different memory locations (e.g., host to device, or between different memory spaces on the GPU) takes time and can affect overall performance.

Profiling tools like NVIDIA Nsight Systems can help pinpoint the exact bottleneck. Addressing them requires strategies like careful memory management, efficient data transfer techniques (e.g., asynchronous data transfers), and judicious algorithm selection as discussed previously.
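A quick way to tell a memory-bandwidth bottleneck from a compute bottleneck is to compare a layer's arithmetic intensity (FLOPs per byte moved) with the GPU's ratio of peak FLOP rate to peak bandwidth, as in a roofline model. The sketch below does this for a forward convolution under an optimistic assumption (each tensor is touched exactly once). The names are hypothetical, and the peak figures you pass in are placeholders to replace with your GPU's specifications.

```cpp
#include <cassert>

// Shapes for a forward convolution (doubles keep the products from overflowing).
struct Conv {
    double n, c, h, w;  // input: batch, channels, height, width
    double k, r, s;     // filters: output channels, height, width
    double p, q;        // output height and width
};

// Two FLOPs (multiply + add) per multiply-accumulate.
double conv_flops(const Conv& v) {
    return 2.0 * v.n * v.k * v.p * v.q * v.c * v.r * v.s;
}

// Optimistic traffic: each tensor read or written exactly once.
double conv_bytes(const Conv& v, double elem_size) {
    assert(elem_size > 0.0);
    const double input = v.n * v.c * v.h * v.w;
    const double filter = v.k * v.c * v.r * v.s;
    const double output = v.n * v.k * v.p * v.q;
    return (input + filter + output) * elem_size;
}

// Below the GPU's balance point (peak FLOP/s divided by peak bytes/s), a
// kernel runs out of bandwidth before it runs out of compute.
bool likely_memory_bound(const Conv& v, double elem_size,
                         double peak_flops, double peak_bytes_per_s) {
    const double intensity = conv_flops(v) / conv_bytes(v, elem_size);
    return intensity < peak_flops / peak_bytes_per_s;
}
```

With placeholder peaks of 10 TFLOP/s and 1 TB/s, a 1x1 convolution over 8 channels on a 64x64 FP32 input comes out at about 2 FLOPs per byte, far below the balance point of 10, so it is memory-bound; a 3x3, 256-channel FP16 convolution at batch 32 is well above it. Real kernels move more data than this estimate, so treat the result as a lower bound on memory pressure.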

Q 10. How do you debug cuDNN related issues?

Debugging cuDNN issues requires a systematic approach combining error checking, profiling, and code inspection. Let’s look at some strategies.

Error Handling: Always check for cuDNN error codes after each cuDNN API call. Don’t just assume everything worked perfectly.

Profiling: Tools like NVIDIA Nsight Compute and Nsight Systems help identify performance bottlenecks and highlight areas for optimization. They show you exactly where your application is spending its time.

Code Review: Scrutinize your code for potential issues. Look for inefficient memory access patterns, improper data type conversions, or incorrect tensor dimension specifications.

Reproducibility: Create minimal, reproducible examples to isolate the problem. A small, self-contained example is often easier to debug than a massive codebase.

Logging: Add detailed logging statements to your code to track variable values, memory usage, and cuDNN API call results. Think of it as leaving bread crumbs to track your progress.

For instance, if you encounter a performance issue, profiling will show you if you have a memory bandwidth bottleneck or a compute bottleneck. This helps you focus your debugging efforts.
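A practical way to combine minimal reproductions with checks for bad data type conversions or wrong tensor dimensions is to copy a cuDNN result back to the host and compare it with a simple CPU reference. The hypothetical helper below reports the first element outside a mixed absolute/relative tolerance and treats NaN as a failure. Tolerances are a judgment call; FP16 data, or algorithms such as FFT and Winograd, typically need looser bounds than FP32 direct convolution.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Compare a result copied back from the GPU against a trusted CPU reference.
// Returns the index of the first element that fails, or -1 if all pass.
// An element passes if |got - want| <= atol + rtol * |want|.
long first_mismatch(const std::vector<float>& got, const std::vector<float>& want,
                    float atol, float rtol) {
    assert(got.size() == want.size());
    for (std::size_t i = 0; i < got.size(); ++i) {
        const float tol = atol + rtol * std::fabs(want[i]);
        // Written as !(diff <= tol) so that a NaN difference counts as a failure.
        if (!(std::fabs(got[i] - want[i]) <= tol)) return static_cast<long>(i);
    }
    return -1;
}
```

Reporting the index rather than a bare pass/fail tells you where to look: errors that appear only at image borders, for instance, point toward padding settings.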

Q 11. Explain the difference between cuDNN v7 and v8.

cuDNN v8 builds upon v7 with significant improvements in performance, features, and support for newer hardware. The differences are substantial.

Performance Enhancements: v8 generally offers faster execution speeds for various operations due to algorithmic improvements and better hardware utilization on newer GPUs. The difference can be significant, especially for large models.

New Algorithms and Operations: v8 introduces algorithms and operations that weren't available in v7, and its graph API lets supported sequences of operations be described together so cuDNN can fuse them, which can improve efficiency for specific patterns.

Hardware Support: v8 adds support for newer NVIDIA GPUs and hardware features, allowing it to take advantage of the latest advancements in GPU architecture.

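API Changes: v8 is not a drop-in replacement in every case. It introduced a new backend (graph) API, split the library into several sub-libraries, and removed some v7 entry points; for example, cudnnGetConvolutionForwardAlgorithm was dropped in favor of cudnnGetConvolutionForwardAlgorithm_v7 and the Find functions. Check the release notes and migration guidance carefully during the upgrade process.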

TensorRT Integration: TensorRT can use cuDNN kernels as one of its tactic sources, so the cuDNN version also matters for inference deployments; check which cuDNN release your TensorRT version expects.

In essence, moving from v7 to v8 is often like upgrading your tools – you get enhanced performance and functionality, but you need to ensure compatibility and potentially adapt your code slightly.
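When upgrading, it also helps to confirm at runtime which cuDNN version was actually loaded, since a mismatched shared library is a common source of trouble. cudnnGetVersion() returns an integer in the same encoding as the CUDNN_VERSION macro. The decoder below assumes the encoding I believe cudn_version.h-style headers use (major*1000 + minor*100 + patch before 9.0, major*10000 + minor*100 + patch from 9.0); verify it against the cudnn_version.h shipped with your release.

```cpp
#include <cassert>
#include <cstddef>

struct CudnnVersion { int major, minor, patch; };

// Decode the integer returned by cudnnGetVersion() (same encoding as the
// CUDNN_VERSION macro). Assumption: releases before 9 used
// major*1000 + minor*100 + patch; 9.x uses major*10000 + minor*100 + patch.
CudnnVersion decode_cudnn_version(std::size_t v) {
    const std::size_t major_scale = v >= 90000 ? 10000 : 1000;
    const int major = static_cast<int>(v / major_scale);
    const int minor = static_cast<int>((v % major_scale) / 100);
    const int patch = static_cast<int>(v % 100);
    assert(major >= 1);
    return {major, minor, patch};
}
```

Under that assumption, 7605 decodes to 7.6.5, 8907 to 8.9.7, and 90100 to 9.1.0. Comparing the decoded major version with the compile-time CUDNN_MAJOR macro catches a header/library mismatch early.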

Q 12. How does cuDNN handle memory management?

cuDNN doesn't allocate the buffers that hold your tensors; it relies on the CUDA runtime for that. (The handle itself does allocate internal resources when cudnnCreate is called.) Keeping buffer ownership with the caller makes it clear who frees what, which helps avoid double frees and memory leaks.

You, as the developer, are responsible for allocating and freeing GPU memory using CUDA functions like cudaMalloc and cudaFree. cuDNN then takes pointers to this memory as input. Its compute functions, such as cudnnConvolutionForward, work with the buffers you provide, including any workspace, rather than allocating memory on each call. Think of it as a skilled carpenter who uses the wood you've already prepared, rather than sourcing their own material.

Efficient memory management is crucial for performance. Techniques such as pinned memory (cudaHostAlloc) or unified memory can minimize data transfer overhead between the host (CPU) and the device (GPU).

For example:

float *dev_input, *dev_output;
cudaMalloc((void**)&dev_input, input_size);    // sizes are in bytes
cudaMalloc((void**)&dev_output, output_size);
// ... use dev_input and dev_output with cuDNN functions ...
cudaFree(dev_input);
cudaFree(dev_output);

This demonstrates the fundamental principle: you handle memory allocation and deallocation using the CUDA API, and cuDNN simply uses the memory locations you provide.
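A common way to honor that ownership rule in C++ is RAII: tie each buffer to an object whose destructor frees it, so cleanup happens even on early returns or exceptions. The sketch below uses std::unique_ptr with a custom deleter. So that it runs without a GPU, host malloc/free stand in for cudaMalloc/cudaFree, and the g_frees counter is a hypothetical addition that only demonstrates each buffer is released exactly once.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <memory>

static int g_frees = 0;  // counts releases, for demonstration only

// Deleter invoked by unique_ptr when the owner goes out of scope.
// With CUDA, this would call cudaFree instead of std::free.
struct FreeDeleter {
    void operator()(float* p) const {
        std::free(p);
        ++g_frees;
    }
};

using Buffer = std::unique_ptr<float, FreeDeleter>;

// Allocate `count` floats and hand ownership to the returned Buffer.
Buffer make_buffer(std::size_t count) {
    void* raw = std::malloc(count * sizeof(float));
    assert(raw != nullptr);
    return Buffer(static_cast<float*>(raw));
}
```

In CUDA code the same shape works with a deleter that calls cudaFree. Since cudaMalloc returns the pointer through an output parameter, the factory would call it, check the returned error code, and then wrap the result.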

Q 13. Describe the different tensor formats supported by cuDNN.

cuDNN supports various tensor formats, each impacting memory access patterns and performance. The choice depends on the specific hardware and algorithm. Common formats include:

NCHW: (Batch size, Channels, Height, Width). Width is the fastest-varying dimension, so neighbouring pixels of the same channel are contiguous in memory. This has long been cuDNN's default layout, and many of its classic convolution kernels are written for it.

NHWC: (Batch size, Height, Width, Channels). Channels are the fastest-varying dimension, so all channel values of one pixel are contiguous. This format is common on CPUs, and it is also the layout NVIDIA recommends for FP16 and mixed-precision convolutions on GPUs with Tensor Cores.

Other formats: cuDNN also supports specialized layouts, such as CUDNN_TENSOR_NCHW_VECT_C, which packs groups of channels into vectors and is used mainly for INT8 data. Some algorithms run significantly faster when the layout matches how their kernels read data.

The choice of tensor format significantly influences performance. Benchmarking different formats on your target hardware is recommended to determine the optimal choice for your specific application and model.

Incorrect format selection can drastically impact performance. For example, if you choose a format poorly suited to your GPU architecture, you could experience significant slowdowns due to inefficient memory access patterns. Always profile your code to ensure the chosen format is truly beneficial.
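The difference between the two layouts comes down to the index formula. The sketch below gives the linear offset of element (n, c, h, w) in each layout and a naive NCHW-to-NHWC conversion. The function names are illustrative; on the GPU, cuDNN's cudnnTransformTensor can perform this kind of layout change between two tensor descriptors.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Linear offset of element (n, c, h, w). The last letter of the layout name is
// the contiguous dimension: W for NCHW, C for NHWC.
std::size_t nchw_offset(std::size_t n, std::size_t c, std::size_t h, std::size_t w,
                        std::size_t C, std::size_t H, std::size_t W) {
    return ((n * C + c) * H + h) * W + w;
}

std::size_t nhwc_offset(std::size_t n, std::size_t c, std::size_t h, std::size_t w,
                        std::size_t C, std::size_t H, std::size_t W) {
    return ((n * H + h) * W + w) * C + c;
}

// Reorder a dense NCHW buffer into NHWC.
std::vector<float> nchw_to_nhwc(const std::vector<float>& src, std::size_t N,
                                std::size_t C, std::size_t H, std::size_t W) {
    assert(src.size() == N * C * H * W);
    std::vector<float> dst(src.size());
    for (std::size_t n = 0; n < N; ++n)
        for (std::size_t c = 0; c < C; ++c)
            for (std::size_t h = 0; h < H; ++h)
                for (std::size_t w = 0; w < W; ++w)
                    dst[nhwc_offset(n, c, h, w, C, H, W)] = src[nchw_offset(n, c, h, w, C, H, W)];
    return dst;
}
```

For a 1x2x1x2 tensor stored as NCHW {0, 1, 2, 3} (channel 0 first), the NHWC copy is {0, 2, 1, 3}: the two channel values of each pixel now sit side by side.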

Q 14. Explain the concept of cuDNN handles and their lifecycle.

A cuDNN handle is an opaque pointer to the library context in which cuDNN operations are executed. It is bound to the GPU that is current when it is created and holds internal resources the library needs. It's created once and passed to every cuDNN library call.

Lifecycle:

Creation: A cuDNN handle is created with cudnnCreate, which initializes the library context on the currently selected CUDA device and allocates the host and device resources it needs. This is your starting point.

Configuration: The handle carries little per-task configuration. Data types, tensor shapes, and layouts belong to the descriptors you pass with each call, not to the handle. The main setting on the handle itself is the CUDA stream, set with cudnnSetStream; by default, work goes to the default stream.

Operation Execution: You then use the handle in all subsequent cuDNN function calls (e.g., convolution, pooling, activation). All computations occur within the context defined by this handle.

Destruction: Once you're finished with cuDNN operations, destroy the handle with cudnnDestroy to release the resources it holds. This cleanup step is essential to avoid resource leaks.

Creating a handle is relatively expensive, so create it once (per device, and typically per host thread in multithreaded code) and reuse it, then destroy it once when you are done. cuDNN functions can be called from several host threads, but threads should not use the same handle at the same time. Using a handle after destroying it, or sharing one across threads without care, leads to unpredictable behavior and crashes.

Example of handle creation and destruction:

cudnnHandle_t handle;
cudnnCreate(&handle);   // create once, e.g. at startup
// ... your cuDNN operations using handle ...
cudnnDestroy(handle);   // destroy once, when done

Q 15. How does cuDNN handle asynchronous operations?

cuDNN routines are asynchronous with respect to the host: a call enqueues work on the GPU and usually returns before that work finishes, so the CPU can keep issuing more work instead of waiting. Imagine a chef preparing a multi-course meal: instead of waiting for one dish to finish completely before starting another, they work on multiple dishes concurrently. Similarly, independent cuDNN operations (for example, separate branches of a network) placed on different streams can overlap on the GPU when resources allow. Operations that depend on each other, such as the forward and backward passes of the same layer, still run in order.

This asynchronous behavior is managed through streams. Each stream is like a separate kitchen counter where the chef (CPU) can prepare tasks (cuDNN operations) independently. The GPU then executes the tasks in each stream as resources become available. This parallelism is crucial for achieving high performance, especially in large deep learning models.

To control this, call cudnnSetStream to attach a CUDA stream to the handle; every subsequent cuDNN call made with that handle is enqueued on that stream. Work within a stream runs in order, while work in different streams may run concurrently. To use several streams, either switch the handle's stream between calls or use one handle per stream. Synchronization points (waiting for a specific operation to complete) are managed with CUDA's stream and event functions, such as cudaStreamSynchronize and cudaStreamWaitEvent.


Q 16. How do you integrate cuDNN with other deep learning frameworks?

Integrating cuDNN with other deep learning frameworks is usually straightforward and involves using the framework’s provided wrappers or APIs. These wrappers abstract away the complexities of direct cuDNN interaction, offering a more user-friendly interface. For example:

- TensorFlow: TensorFlow’s backend often utilizes cuDNN under the hood for its convolutional operations. Developers rarely interact with cuDNN directly; TensorFlow’s optimized kernels automatically leverage cuDNN’s capabilities.

- PyTorch: PyTorch also uses cuDNN extensively, for example in its convolutional and recurrent layers, and this is largely transparent to the user. PyTorch does expose some control, such as torch.backends.cudnn.benchmark (let cuDNN time algorithms and cache the fastest for each input shape) and torch.backends.cudnn.deterministic (restrict cuDNN to deterministic algorithms).

- Caffe: Caffe exposes cuDNN through a build option (USE_CUDNN in its Makefile.config); when enabled, layers such as convolution, pooling, and activations use cuDNN-backed implementations.

The integration process usually involves linking the appropriate cuDNN libraries during compilation or loading the necessary libraries at runtime. The specific steps depend on the framework and the build system used.

Q 17. Compare and contrast cuDNN with other deep learning libraries.

cuDNN is a highly specialized library of deep learning primitives for NVIDIA GPUs. Compared with libraries such as MKL-DNN (now oneDNN, optimized mainly for Intel CPUs) or general-purpose linear algebra libraries such as OpenBLAS and Eigen, cuDNN concentrates on the operations neural networks spend most of their time in, particularly convolutions. Let's compare it to a few:

- MKL-DNN/oneDNN: Provides similar deep learning primitives, but targets CPUs (and, in oneDNN, Intel GPUs). The two are not really competitors: on an NVIDIA GPU, cuDNN is the natural choice, and the speed gap you see in practice reflects GPU versus CPU hardware as well as cuDNN's architecture-specific kernels.

- OpenBLAS: OpenBLAS is a general-purpose BLAS implementation for CPUs. It offers matrix operations rather than deep learning layers, so a convolution must first be lowered to a matrix multiply. On NVIDIA GPUs, the BLAS counterpart is cuBLAS, which cuDNN-based frameworks typically use for fully connected layers.

- Eigen: Eigen is a high-level C++ template library for linear algebra, aimed mainly at CPUs (its Tensor module also has GPU support, which TensorFlow has used). It is far less specialized than cuDNN and therefore usually much slower for convolutions on GPUs.

In short: cuDNN sacrifices generality for exceptional performance on NVIDIA GPUs in the context of deep learning.

Q 18. Explain the role of cuDNN in convolutional neural networks.

cuDNN plays a pivotal role in Convolutional Neural Networks (CNNs) by providing highly optimized implementations of the core convolutional operations. These operations are computationally intensive, making up a significant portion of the training and inference time in CNNs. cuDNN accelerates these operations by:

- Exploiting GPU parallelism: cuDNN’s algorithms leverage the massive parallelism of GPUs, processing multiple data points simultaneously.

- Using optimized kernels: cuDNN provides highly tuned kernels (low-level routines) tailored to specific NVIDIA GPU architectures, maximizing performance.

- Implementing advanced algorithms: cuDNN employs sophisticated algorithms like Winograd convolution for improved performance, especially for smaller kernel sizes.

- Reducing memory accesses: Efficient memory management within cuDNN minimizes data transfers between the GPU memory and processing units, further improving speed.

Without cuDNN or an equally well-tuned alternative, training large CNNs on GPUs would be significantly slower, making many modern applications far more expensive to develop.
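Winograd's advantage for small filters is easiest to see in one dimension. The minimal F(2,3) transform computes two outputs of a 3-tap filter with 4 multiplications instead of 6, once the filter has been transformed in advance. This is the textbook construction, written as a plain C++ sketch with illustrative names rather than cuDNN's implementation.

```cpp
#include <array>
#include <cassert>

// Direct 1D correlation: 2 outputs of a 3-tap filter cost 6 multiplications.
std::array<float, 2> direct_2x3(const std::array<float, 4>& d, const std::array<float, 3>& g) {
    return {d[0] * g[0] + d[1] * g[1] + d[2] * g[2],
            d[1] * g[0] + d[2] * g[1] + d[3] * g[2]};
}

// Winograd F(2,3): the same 2 outputs with 4 multiplications of transformed
// data. g_plus and g_minus depend only on the weights, so a real
// implementation transforms the filter once and reuses it.
std::array<float, 2> winograd_2x3(const std::array<float, 4>& d, const std::array<float, 3>& g) {
    const float g_plus = 0.5f * (g[0] + g[1] + g[2]);
    const float g_minus = 0.5f * (g[0] - g[1] + g[2]);
    const float m1 = (d[0] - d[2]) * g[0];
    const float m2 = (d[1] + d[2]) * g_plus;
    const float m3 = (d[2] - d[1]) * g_minus;
    const float m4 = (d[1] - d[3]) * g[2];
    return {m1 + m2 + m3, m2 - m3 - m4};
}
```

Both functions return {2.5, -3.25} for the input {0.5, -1, 2, 3} and filter {2, -1, 0.25}. Nesting the transform in two dimensions, F(2x2, 3x3) uses 16 multiplications instead of 36 per 2x2 output tile, which is where the savings for 3x3 convolutions come from; the price is extra additions and somewhat larger rounding error.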

Q 19. Describe your experience with cuDNN performance tuning techniques.

My experience with cuDNN performance tuning focuses on a multi-faceted approach. It’s rarely about a single magic bullet; instead, it requires a systematic investigation and adjustment of several factors.

- Algorithm Selection: Choosing the right cuDNN convolution algorithm (e.g., Winograd, Direct) based on kernel size, padding, and data dimensions significantly impacts performance. Profiling different algorithms is key.

- Data Layout: Optimizing the layout of tensors in memory (e.g., NCHW, NHWC) can reduce memory accesses and improve performance. The optimal layout depends on the specific hardware and algorithm used.

- Workspace Size: cuDNN uses workspace memory for intermediate computations. Allowing enough workspace often leads to performance improvements, because a limit that is too low rules out the faster algorithms that need more scratch memory.

- Batch Size: Larger batch sizes generally allow better utilization of GPU resources, but excessive batch sizes can lead to memory limitations. Finding the optimal balance is crucial.

- Tensor Cores (if applicable): For newer NVIDIA GPUs with Tensor Cores, using algorithms and data types that support Tensor Core operations can lead to substantial speed improvements. This requires careful consideration of data types (FP16, etc.).

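I typically use profiling tools (NVIDIA Nsight Systems for the application timeline and Nsight Compute for individual kernels; the older nvprof does not support recent GPU architectures) to identify performance bottlenecks and guide my tuning efforts. Iteration and experimentation are essential steps in this process.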

Q 20. How do you handle errors and exceptions in cuDNN?

Error handling in cuDNN is crucial for robust application development. Every cuDNN function returns a cudnnStatus_t: CUDNN_STATUS_SUCCESS (value 0) means the call succeeded, and any other value identifies an error. Compare the result against CUDNN_STATUS_SUCCESS after each call and never assume that an operation has succeeded. Because GPU work is asynchronous, keep in mind that some execution errors only surface on a later call or synchronization.

Example (C++):

// handle, descriptors, device pointers, algo and workspace are assumed to be set up earlier
cudnnStatus_t status = cudnnConvolutionForward(handle, &alpha, xDesc, x, wDesc, w, convDesc, algo,
                                               workspace, workspaceSize, &beta, yDesc, y);

if (status != CUDNN_STATUS_SUCCESS) {
    fprintf(stderr, "cudnnConvolutionForward failed with error: %s\n", cudnnGetErrorString(status));
    // Handle the error appropriately, e.g., exit, retry, or fall back to a CPU implementation
}

Each cuDNN error code provides a specific indication of the problem. The cudnnGetErrorString function returns a human-readable description of the error, aiding in debugging.

Effective error handling includes comprehensive logging, appropriate error handling strategies (e.g., retrying operations with different parameters or gracefully degrading to alternative methods), and clear error reporting to the user.

Q 21. What is the impact of different data layouts on cuDNN performance?

Data layouts significantly impact cuDNN performance. The most common layouts for tensors in deep learning are NCHW (Batch, Channel, Height, Width) and NHWC (Batch, Height, Width, Channel). The choice influences memory access patterns during convolution operations.

NCHW has long been cuDNN's default layout, and many of its classic kernels are written for it. What matters on a GPU is memory coalescing: when neighbouring threads in a warp access neighbouring addresses, the hardware combines those accesses into a few wide memory transactions. In NCHW, neighbouring addresses hold neighbouring pixels (along W) of the same channel, so kernels that assign adjacent threads to adjacent pixels coalesce well. In NHWC, neighbouring addresses hold the channels of one pixel, which suits kernels that reduce over channels, as the GEMM-like inner loops of Tensor Core convolutions do.

NHWC is also common in CPU code, where reading all channels of a pixel from one cache line fits vectorized inner loops. On recent NVIDIA GPUs, NHWC is the recommended layout for FP16 and mixed-precision convolutions that use Tensor Cores, and with NCHW input cuDNN may need extra transpose steps internally. Which layout wins ultimately depends on the cuDNN algorithm, the data type, and the hardware, so experimentation is usually needed to determine the best approach for a given setup.

Proper attention to data layout optimization is crucial for achieving peak performance with cuDNN, which needs to be considered in the design of your models and algorithms.
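The layout choice also shows up directly in the API. A tensor can be described by explicit strides, for example with cudnnSetTensor4dDescriptorEx, which takes them in the order nStride, cStride, hStride, wStride. The illustrative helpers below compute those strides for NCHW and NHWC; they use std::size_t for simplicity where the real function takes int. The dimension with stride 1 is the contiguous one.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

using Strides = std::array<std::size_t, 4>;  // {nStride, cStride, hStride, wStride}

// Strides in elements for a dense tensor of shape N x C x H x W.
Strides nchw_strides(std::size_t C, std::size_t H, std::size_t W) {
    assert(C > 0 && H > 0 && W > 0);
    return {C * H * W, H * W, W, 1};
}

Strides nhwc_strides(std::size_t C, std::size_t H, std::size_t W) {
    assert(C > 0 && H > 0 && W > 0);
    return {H * W * C, 1, W * C, C};
}

// One formula addresses either layout once the strides are known.
std::size_t element_offset(const Strides& st, std::size_t n, std::size_t c,
                           std::size_t h, std::size_t w) {
    return n * st[0] + c * st[1] + h * st[2] + w * st[3];
}
```

For C = 3, H = 4, W = 5, NCHW gives {60, 20, 5, 1} and NHWC gives {60, 1, 15, 3}: in NHWC the three channels of a pixel are adjacent, while in NCHW adjacent pixels of one channel are.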

Q 22. Explain how cuDNN utilizes GPUs for parallel processing.

cuDNN leverages the massive parallelism of GPUs to dramatically accelerate deep learning computations. Instead of working through an operation largely sequentially, as a single CPU core does, it spreads the work across thousands of GPU cores. Imagine painting a large mural: a single painter (CPU) would take a long time, but a team of painters (GPU cores) working on different sections at once could finish much faster. cuDNN's kernels achieve this by partitioning the data and operations into many independent pieces, assigning them to groups of threads (thread blocks), and letting threads within a group share intermediate data through fast on-chip shared memory. For example, in a convolutional neural network (CNN), a convolution kernel might divide the output into tiles and assign each tile to a different thread block, which computes it from the corresponding region of the input. This results in significant speedups, especially for large inputs and models.
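The tiling idea can be illustrated on the CPU. The sketch below computes a single-channel 2D convolution (a correlation, as deep learning libraries compute it) by splitting the output into square tiles. Each tile reads the shared input but writes a disjoint block of the output, which is what lets a GPU hand tiles to separate thread blocks without coordination. The Image struct and function names are hypothetical, and real kernels also tile across channels and batches.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Row-major single-channel image.
struct Image {
    std::size_t h, w;
    std::vector<float> px;
    float at(std::size_t y, std::size_t x) const { return px[y * w + x]; }
};

// "Valid" correlation restricted to output rows [y0, y1) and columns [x0, x1).
void conv_tile(const Image& in, const Image& k, Image& out,
               std::size_t y0, std::size_t y1, std::size_t x0, std::size_t x1) {
    for (std::size_t y = y0; y < y1; ++y)
        for (std::size_t x = x0; x < x1; ++x) {
            float acc = 0.0f;
            for (std::size_t i = 0; i < k.h; ++i)
                for (std::size_t j = 0; j < k.w; ++j)
                    acc += in.at(y + i, x + j) * k.at(i, j);
            out.px[y * out.w + x] = acc;
        }
}

// Split the output into tile x tile blocks. Each block only reads the input
// and writes its own region, so the blocks could run in parallel.
Image conv_tiled(const Image& in, const Image& k, std::size_t tile) {
    assert(in.h >= k.h && in.w >= k.w && tile > 0);
    Image out{in.h - k.h + 1, in.w - k.w + 1, {}};
    out.px.assign(out.h * out.w, 0.0f);
    for (std::size_t ty = 0; ty < out.h; ty += tile)
        for (std::size_t tx = 0; tx < out.w; tx += tile)
            conv_tile(in, k, out, ty, std::min(ty + tile, out.h), tx, std::min(tx + tile, out.w));
    return out;
}
```

Any tile size produces the same output as a single tile covering the whole image; only the number of independent work items changes, and that number is what a GPU turns into parallelism.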

Q 23. Describe your experience with profiling and optimizing cuDNN applications.

Profiling and optimizing cuDNN applications is crucial for achieving peak performance. I've extensively used NVIDIA's profiling tools like Nsight Compute and Nsight Systems to pinpoint bottlenecks. For example, in one project, profiling revealed that memory transfers were the primary performance limiter. By strategically using pinned memory (cudaMallocHost) and asynchronous data transfers (cudaMemcpyAsync), we reduced transfer overhead by 40%. Another common optimization involves choosing the right cuDNN algorithms. cuDNN offers various algorithms for each operation (e.g., different convolution algorithms), and the optimal choice depends on the hardware and the input tensor dimensions. I frequently experiment with different algorithm selections, using cuDNN's Find functions (such as cudnnFindConvolutionForwardAlgorithm) to time the candidates and determine the fastest configuration for a given task. This iterative process, combining profiling and algorithm selection, is vital for maximizing performance.

Q 24. Discuss the challenges in deploying cuDNN-based applications.

Deploying cuDNN-based applications presents several challenges. Firstly, ensuring compatibility between cuDNN, CUDA, and the target hardware is critical. Different versions might have incompatible features or performance differences. Secondly, managing dependencies can be complex. cuDNN relies on CUDA and a compatible driver; conflicts between versions can lead to crashes or unexpected behavior. Thirdly, optimizing for different hardware architectures requires significant effort. A model that runs optimally on one GPU might perform poorly on another due to variations in architecture, memory bandwidth, and compute capabilities. Finally, debugging cuDNN applications can be tricky. Errors often manifest as silent performance degradation rather than explicit error messages, making identification and resolution challenging. Robust testing strategies and detailed profiling are essential to address these deployment challenges.

Q 25. How do you ensure the numerical stability of cuDNN computations?

Numerical stability in cuDNN computations is crucial to prevent errors from accumulating and producing inaccurate results. Several techniques help. First, choose algorithms with care: cuDNN's algorithms differ in numerical behavior (FFT- and Winograd-based convolutions, for example, can show somewhat larger rounding error than direct or GEMM-based ones), and some are non-deterministic, so compare results against a reference when accuracy matters. Second, choose appropriate data types. Higher-precision types (FP32, or FP64 where supported) improve accuracy at a performance cost, while lower-precision types such as FP16 or bfloat16 are faster but need safeguards such as FP32 accumulation and, for FP16, loss scaling. Finally, careful selection of hyperparameters (like learning rate) is critical, as poorly chosen values can exacerbate numerical instability. Regular monitoring of loss values and gradients during training helps detect potential issues. If instability arises, adjusting hyperparameters or employing techniques like gradient clipping can mitigate the problem.
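Gradient clipping, mentioned above, is simple to state precisely: if the gradient's overall L2 norm exceeds a threshold, rescale it. The host-side sketch below clips one flattened gradient vector by its global norm; the function name is illustrative, and frameworks provide their own versions that operate on GPU tensors.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Clip by global L2 norm: if ||g|| > max_norm, scale every component by
// max_norm / ||g||, which keeps the direction and caps the length.
// Returns the norm before clipping (useful for logging).
double clip_by_global_norm(std::vector<float>& grads, double max_norm) {
    assert(max_norm > 0.0);
    double sum_sq = 0.0;  // accumulate in double to limit rounding error
    for (float g : grads) sum_sq += static_cast<double>(g) * g;
    const double norm = std::sqrt(sum_sq);
    if (norm > max_norm) {
        const double scale = max_norm / norm;
        for (float& g : grads) g = static_cast<float>(g * scale);
    }
    return norm;
}
```

For gradients {3, 4} and max_norm 1, the norm is 5 and the result is {0.6, 0.8}: the same direction, with unit length. Gradients already within the threshold are left untouched.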

Q 26. What are the limitations of cuDNN?

While powerful, cuDNN has limitations. First, it focuses on common deep learning operations such as convolution, pooling, normalization, and activation; custom or highly specialized operations may require custom CUDA kernels. Second, each cuDNN release supports only a specific range of GPU architectures, CUDA versions, and drivers, so compatibility has to be managed carefully. Third, it does not handle communication for distributed training across multiple GPUs or nodes; that is typically done with NCCL or with frameworks such as Horovod. Lastly, it abstracts away much of the low-level GPU management, which is convenient but sacrifices some fine-grained control over performance optimization.

Q 27. How does cuDNN handle different types of convolutions?

cuDNN supports several convolution types, including standard, dilated, transposed (sometimes called deconvolution), grouped, and depthwise convolutions. Dilated convolutions, which enlarge the receptive field without adding parameters, are configured through the dilation parameters of the convolution descriptor. Transposed convolutions, used in upsampling layers, are computed with the same routine that produces the data gradient of a regular convolution (cudnnConvolutionBackwardData). Depthwise convolutions are expressed as grouped convolutions whose group count equals the number of input channels (set with cudnnSetConvolutionGroupCount); followed by a 1x1 pointwise convolution, they form a depthwise separable convolution, which needs far fewer multiply-accumulate operations than a standard convolution of the same shape. For each configuration several algorithms are usually available, and cuDNN provides heuristics (such as cudnnGetConvolutionForwardAlgorithm_v7) and benchmarking functions (such as cudnnFindConvolutionForwardAlgorithm) to help pick the fastest one. Frameworks typically call these on the user's behalf, which simplifies development.
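
The shape and cost arithmetic behind these convolution types is easy to get wrong in an interview. The sketch below uses the forward output-size formula documented for cudnnGetConvolution2dForwardOutputDim, a PyTorch-style formula for transposed convolution output size, and simple multiply-accumulate (MAC) counts. The function names are illustrative, and MAC counts are only a proxy for speed, since depthwise kernels are often limited by memory bandwidth rather than arithmetic.

```python
def conv_out_dim(in_dim: int, filter_dim: int, pad: int, stride: int, dilation: int) -> int:
    """Forward-convolution output size along one spatial axis."""
    effective_filter = (filter_dim - 1) * dilation + 1  # dilation spreads the taps apart
    return 1 + (in_dim + 2 * pad - effective_filter) // stride


def transposed_out_dim(in_dim: int, filter_dim: int, pad: int, stride: int,
                       dilation: int, output_padding: int = 0) -> int:
    """Transposed-convolution output size (PyTorch-style convention); output_padding
    resolves the ambiguity introduced by strides greater than 1."""
    return (in_dim - 1) * stride - 2 * pad + dilation * (filter_dim - 1) + output_padding + 1


def standard_macs(h_out: int, w_out: int, c_in: int, c_out: int, k: int) -> int:
    """Multiply-accumulates for a k x k standard convolution."""
    return h_out * w_out * c_in * c_out * k * k


def depthwise_separable_macs(h_out: int, w_out: int, c_in: int, c_out: int, k: int) -> int:
    """Depthwise k x k conv (one filter per channel, group count = c_in)
    followed by a 1 x 1 pointwise conv that mixes channels."""
    depthwise = h_out * w_out * c_in * k * k
    pointwise = h_out * w_out * c_in * c_out
    return depthwise + pointwise


if __name__ == "__main__":
    same = conv_out_dim(32, 3, pad=2, stride=1, dilation=2)  # dilated, size-preserving
    down = conv_out_dim(32, 3, pad=1, stride=2, dilation=1)
    up = transposed_out_dim(down, 3, pad=1, stride=2, dilation=1, output_padding=1)
    print(same, down, up)
    std = standard_macs(32, 32, 64, 128, 3)
    sep = depthwise_separable_macs(32, 32, 64, 128, 3)
    print(std, sep, round(std / sep, 1))
```

For a 3x3 layer mapping 64 to 128 channels on a 32x32 output, the separable version needs roughly an eighth of the MACs of the standard convolution.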

Q 28. Explain your experience with using cuDNN in production environments.

In production environments, I’ve used cuDNN extensively in building and deploying high-performance deep learning models. One example involved a large-scale image classification system. Using cuDNN allowed us to train the model orders of magnitude faster than CPU-based training, leading to quicker model iteration cycles and reduced development time. Furthermore, its optimized algorithms ensured a balance between speed and accuracy. The production deployment required careful consideration of resource allocation, monitoring, and error handling. We built a robust monitoring system to detect performance anomalies and automatically trigger alerts. We also implemented strategies for graceful handling of failures, ensuring system uptime and minimizing the impact of unexpected issues. The experience emphasized the importance of thorough testing, profiling, and error management in building reliable and scalable deep learning systems.

Key Topics to Learn for cuDNN Interview

- Understanding cuDNN’s Architecture: Explore the underlying design principles and how it interacts with CUDA and the GPU. Consider its layered approach and how different layers contribute to performance.

- Convolutional Neural Networks (CNNs) and cuDNN: Study how cuDNN accelerates CNN operations, particularly the optimization of forward and backward propagation, and analyze the impact of different data types and precision levels.

- Performance Tuning and Optimization: Learn techniques to maximize performance within cuDNN, including choosing appropriate algorithms, managing memory effectively, and understanding the impact of batch size and kernel size.

- cuDNN APIs and Functionalities: Gain hands-on experience with the cuDNN API, understanding how to integrate it into your deep learning workflows. Practice common tasks like tensor manipulation and layer configuration.

- Error Handling and Debugging: Learn how to identify and troubleshoot common errors encountered when using cuDNN. Understand how to interpret error messages and debug performance bottlenecks.

- Comparison with other Deep Learning Libraries: Develop a comparative understanding of cuDNN relative to other libraries, highlighting its strengths and weaknesses in specific contexts. This demonstrates a broader perspective on the deep learning software stack.

- Advanced Topics (Optional): Depending on the seniority of the role, explore topics such as integrating custom CUDA kernels with cuDNN or the internal workings of specific cuDNN algorithms (e.g., how its different convolution algorithms are implemented).
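
For the API topic above, a good first exercise in tensor manipulation is describing a tensor's memory layout. cuDNN tensor descriptors can take explicit per-dimension strides (for example, the nStride, cStride, hStride, and wStride arguments of cudnnSetTensor4dDescriptorEx). The sketch below computes those strides for fully packed NCHW and NHWC tensors in plain Python; the helper names are illustrative, not cuDNN functions.

```python
from typing import Sequence, Tuple


def packed_strides(n: int, c: int, h: int, w: int, layout: str) -> Tuple[int, int, int, int]:
    """Element strides in (N, C, H, W) order for a fully packed 4-D tensor."""
    if layout == "NCHW":
        return (c * h * w, h * w, w, 1)  # W varies fastest in memory
    if layout == "NHWC":
        return (h * w * c, 1, w * c, c)  # C varies fastest in memory
    raise ValueError(f"unsupported layout: {layout}")


def linear_offset(index: Sequence[int], strides: Sequence[int]) -> int:
    """Flat element offset of an (n, c, h, w) index under the given strides."""
    return sum(i * s for i, s in zip(index, strides))


if __name__ == "__main__":
    dims = (2, 3, 4, 5)
    for layout in ("NCHW", "NHWC"):
        strides = packed_strides(*dims, layout)
        print(layout, strides, linear_offset((1, 2, 3, 4), strides))
```

Both layouts store the same 120 elements; only the order differs, which is why the same logical tensor can be described to cuDNN with different strides.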

Next Steps

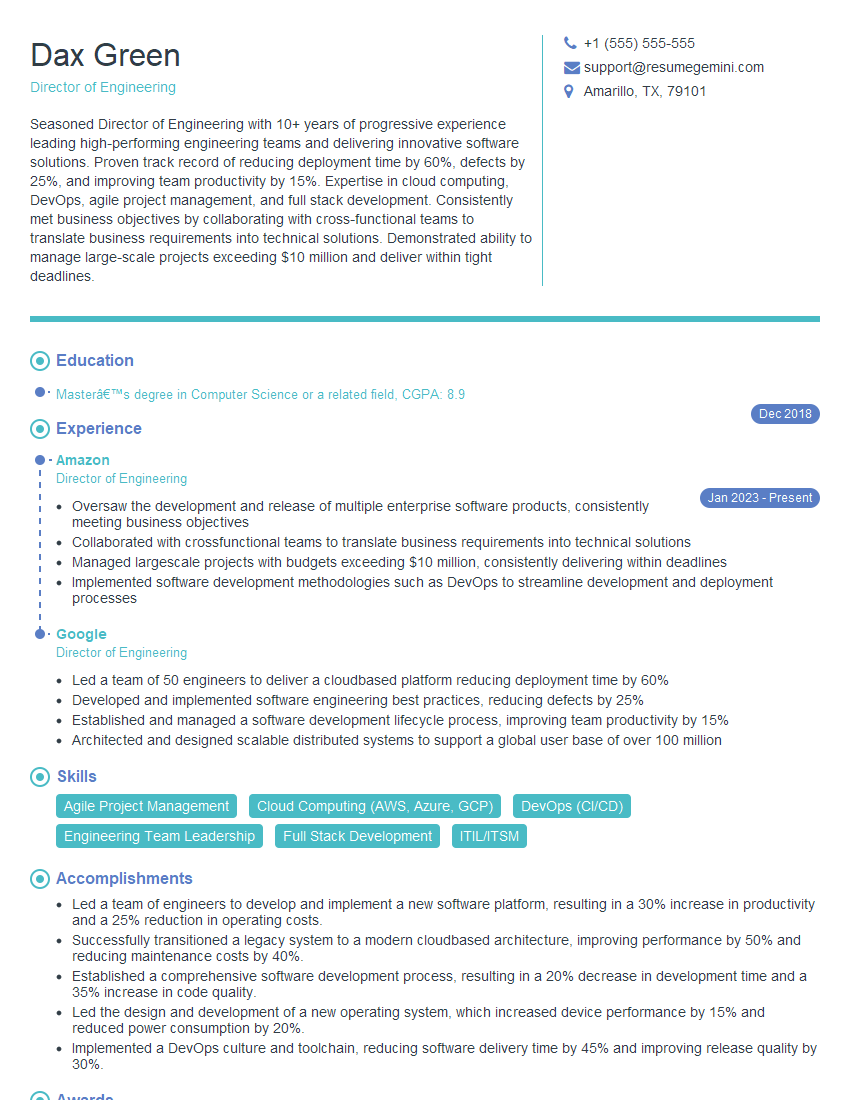

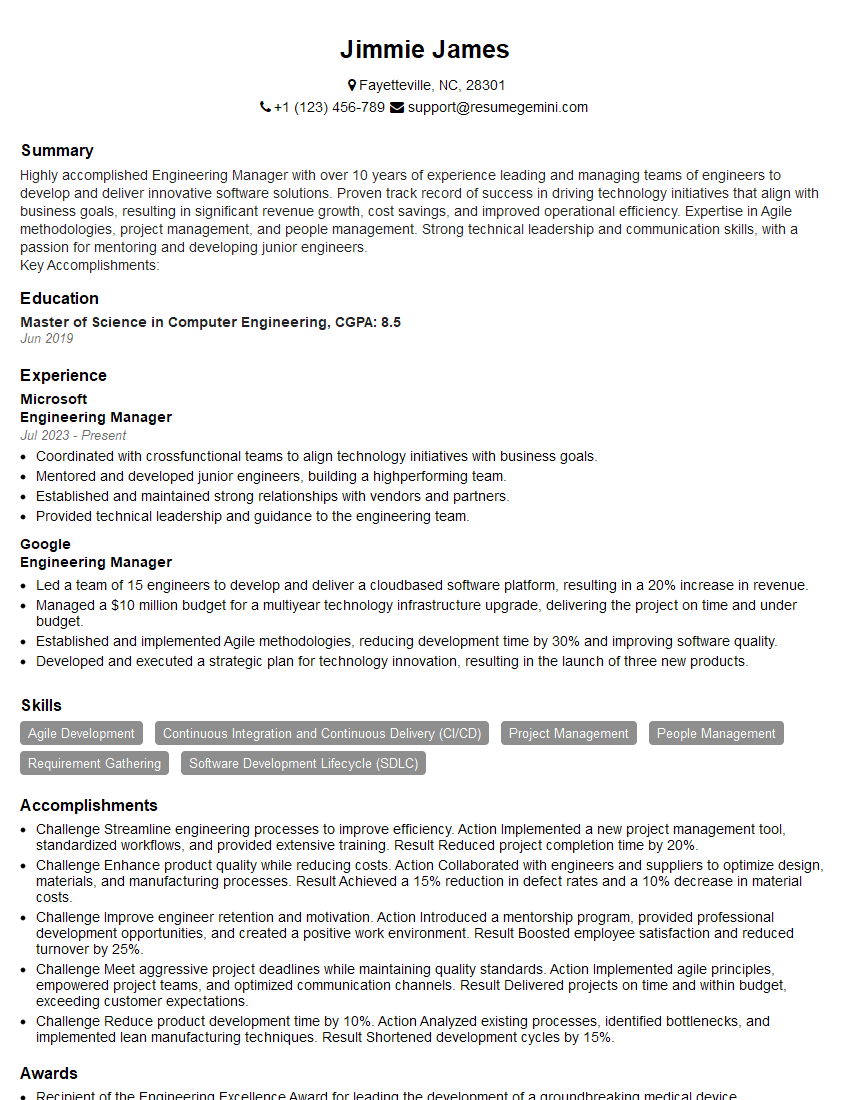

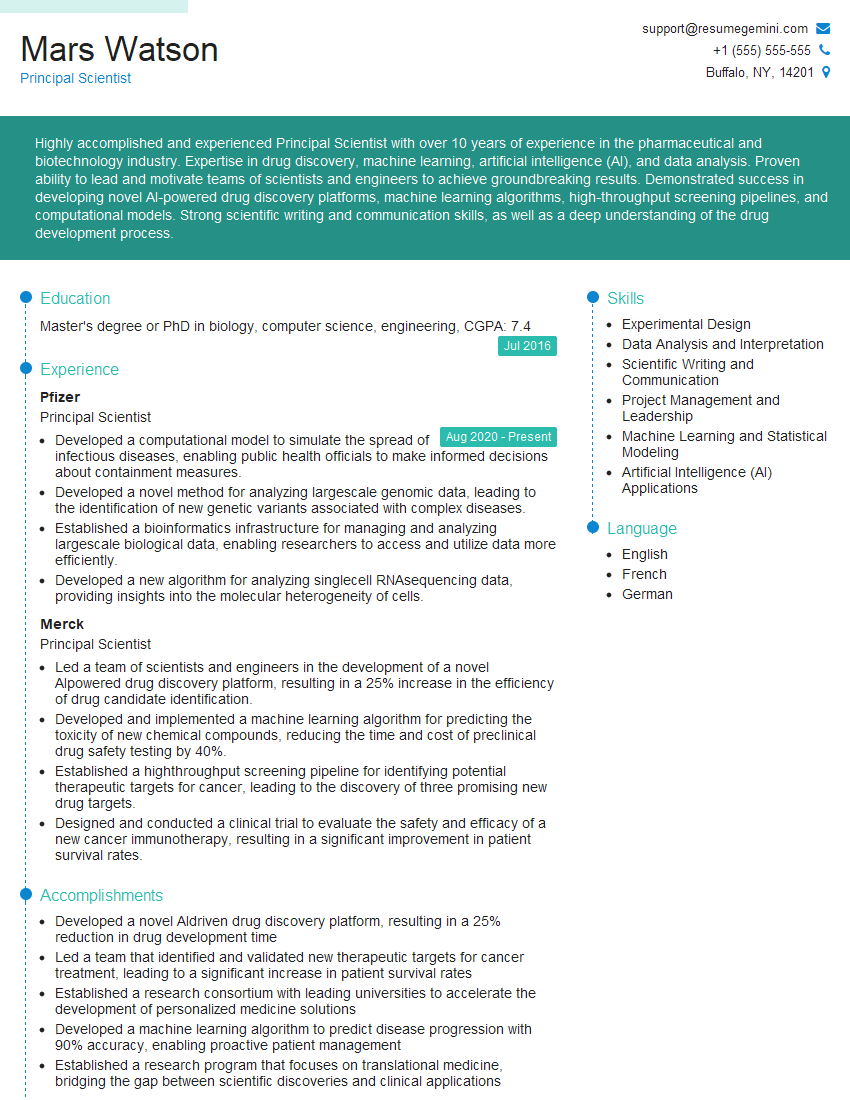

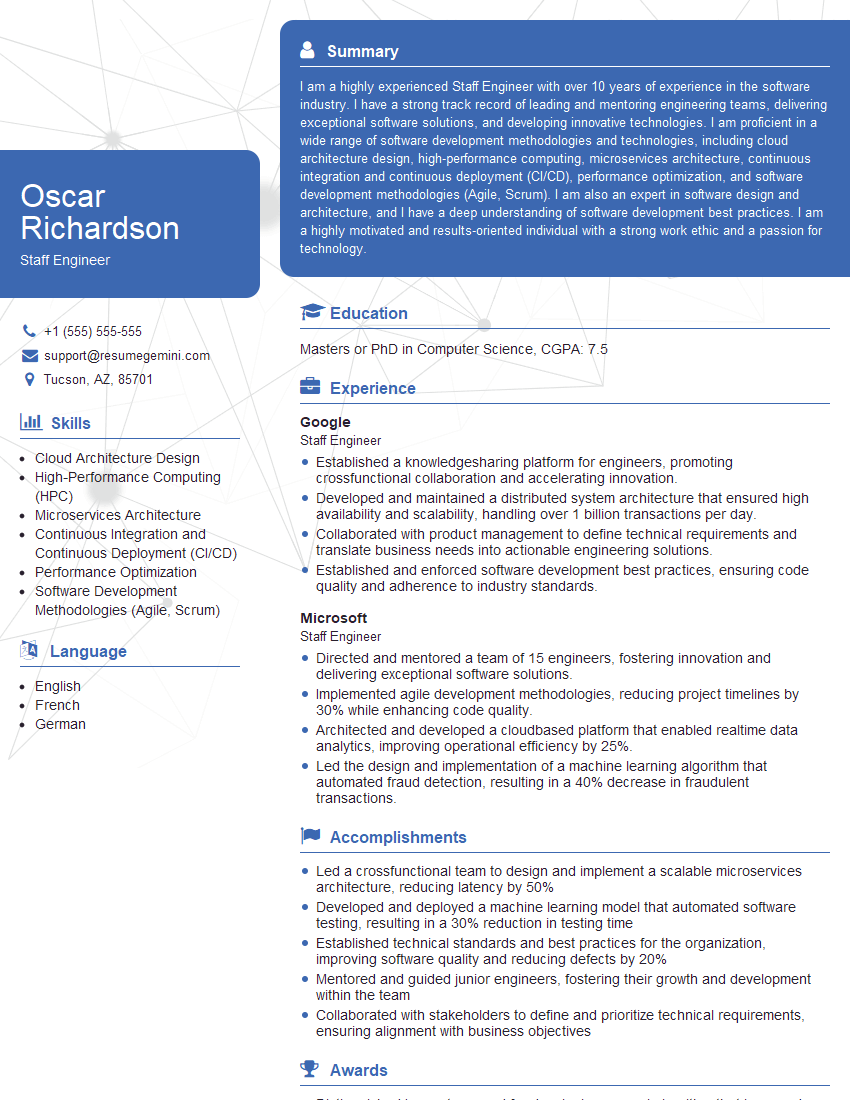

Mastering cuDNN significantly enhances your marketability in the high-demand field of deep learning and GPU computing. Companies actively seek engineers proficient in optimizing deep learning models for maximum performance. To maximize your job prospects, it's crucial to present your skills effectively. Building an ATS-friendly resume is key to getting noticed by recruiters. ResumeGemini can help you craft a compelling resume that highlights your cuDNN expertise. Examples of resumes tailored to cuDNN roles are available through ResumeGemini to help guide you.
