
Interviews are opportunities to demonstrate your expertise, and this guide is here to help you shine. Explore the essential Data Management and Quality Control interview questions that employers frequently ask, paired with strategies for crafting responses that set you apart from the competition.

Questions Asked in Data Management and Quality Control Interviews

Q 1. Explain your experience with ETL processes and data transformations.

ETL (Extract, Transform, Load) is the backbone of most data warehousing and business intelligence projects. It’s a three-stage process: extracting data from various sources, transforming it to meet specific requirements, and loading it into a target data warehouse or data lake. My experience spans numerous ETL projects using tools like Informatica PowerCenter, Apache Kafka (for streaming ingestion), and cloud-based services like Azure Data Factory and AWS Glue.

Data transformations are at the heart of the ETL process. This involves cleaning, converting, and enriching raw data to ensure it’s accurate, consistent, and ready for analysis. For example, I’ve worked on projects where we standardized date formats across different sources (from MM/DD/YYYY to YYYY-MM-DD), handled missing values using imputation techniques, and created new fields by combining existing ones. One particularly challenging project involved transforming unstructured text data from customer feedback surveys into structured data for sentiment analysis, requiring techniques like Natural Language Processing (NLP) and regular expressions.

In another instance, I had to deal with inconsistent data types within a customer database. Some fields representing age were stored as strings, while others were integers. Using SQL and scripting, I developed a transformation process to standardize this field to a numeric type, handling and documenting potential issues during the conversion, resulting in a more reliable and efficient database.
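The two transformations described above (date standardization and coercing a mixed-type age field) can be sketched in a few lines of Python. This is a minimal illustration, not the code from the projects mentioned: the field names `signup` and `age`, the sample rows, and the 0 to 130 age range are all assumptions.

```python
from datetime import datetime

def standardize_date(value: str) -> str:
    """Convert an MM/DD/YYYY string to ISO 8601 (YYYY-MM-DD)."""
    return datetime.strptime(value.strip(), "%m/%d/%Y").strftime("%Y-%m-%d")

def parse_age(value):
    """Coerce an age stored as str or int to int; return None if unusable."""
    try:
        age = int(str(value).strip())
    except ValueError:
        return None
    return age if 0 <= age <= 130 else None  # range bounds are an assumption

# Hypothetical sample rows with mixed age types
rows = [
    {"signup": "03/07/2021", "age": "42"},
    {"signup": "12/25/2020", "age": 37},
    {"signup": "01/15/2022", "age": "unknown"},
]
cleaned = [
    {"signup": standardize_date(r["signup"]), "age": parse_age(r["age"])}
    for r in rows
]
# Keep the original rows that failed conversion so they can be documented
rejected = [r for r, c in zip(rows, cleaned) if c["age"] is None]
```

Returning `None` instead of raising keeps the load running while still leaving a list of rejected rows to review.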

Q 2. Describe different data quality dimensions and how you would measure them.

Data quality is multifaceted and can be assessed across several dimensions. Think of it like judging the quality of a cake – you’d consider its taste, texture, appearance, and ingredients. Similarly, data quality dimensions include:

- Accuracy: How close the data is to the true value. We can measure this by comparing data to known sources or using validation rules.

- Completeness: The extent to which all required data elements are present. We calculate the percentage of non-missing values for each field.

- Consistency: Whether the data is uniform and follows established standards. We measure this by counting discrepancies between different data sources or fields that should agree.

- Uniqueness: Whether each record is distinct, with no duplicates. We measure the duplicate rate on key fields and use deduplication techniques and key constraints to enforce uniqueness.

- Timeliness: How up-to-date the data is. This is measured by the time lag between data creation and its availability for use.

- Validity: Whether the data conforms to predefined rules and constraints. Data validation rules and range checks are employed here.

To measure these dimensions, I utilize a combination of automated checks (using tools like data profiling software) and manual reviews. For instance, I’d use SQL queries to identify missing values, duplicates, and inconsistencies, and then apply statistical methods to assess accuracy and completeness.
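As a rough illustration of how some of these dimensions turn into numbers, the sketch below computes completeness, uniqueness, and validity over a small in-memory dataset. The records, the loose email pattern, and the 0 to 120 age rule are assumptions chosen for the example.

```python
import re
from collections import Counter

# Hypothetical records with a missing email, a duplicate id, and bad values
records = [
    {"id": 1, "email": "ana@example.com", "age": 34},
    {"id": 2, "email": None, "age": 29},
    {"id": 2, "email": "bo@example.com", "age": 210},
    {"id": 4, "email": "not-an-email", "age": 51},
]

def completeness(rows, field):
    """Share of rows where the field is present (not None or empty)."""
    return sum(r[field] not in (None, "") for r in rows) / len(rows)

def duplicate_keys(rows, key):
    """Key values that occur more than once."""
    counts = Counter(r[key] for r in rows)
    return [k for k, n in counts.items() if n > 1]

EMAIL = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately loose pattern

def validity(rows, field, predicate):
    """Share of non-missing values that satisfy the rule."""
    present = [r[field] for r in rows if r[field] is not None]
    return sum(predicate(v) for v in present) / len(present)

report = {
    "email_completeness": completeness(records, "email"),
    "duplicate_ids": duplicate_keys(records, "id"),
    "email_validity": validity(records, "email", lambda v: bool(EMAIL.match(v))),
    "age_validity": validity(records, "age", lambda v: 0 <= v <= 120),
}
```

Accuracy is left out on purpose: it needs a trusted reference to compare against, which a self-contained example cannot supply.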

Q 3. How do you identify and resolve data inconsistencies?

Identifying and resolving data inconsistencies is a crucial part of data quality management. The process generally involves:

- Detection: Using data profiling techniques and automated checks to identify inconsistencies. This might involve comparing data across different sources, checking for inconsistencies in data types or formats, and verifying data against business rules.

- Analysis: Investigating the root cause of the inconsistencies. This often requires collaboration with subject matter experts and business stakeholders to understand the underlying issues.

- Resolution: Implementing strategies to correct or manage the inconsistencies. Options include data cleansing, standardization, reconciliation, or data enrichment. In some cases, the best course of action might be to flag or remove problematic data, while in others, data transformation or reconciliation are best suited.

- Validation: Verifying that the implemented solutions have effectively resolved the inconsistencies and have not introduced new errors.

For example, I once discovered inconsistencies in customer addresses within a CRM system. Some addresses were missing postal codes, others had inconsistent formats, and some contained typos. I utilized address standardization tools, data matching techniques, and manual review to resolve these issues.
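The detection step for an address problem like this one might look as follows. This is a simplified sketch rather than a real address standardization tool: the abbreviation table, the 5-digit postal code pattern, and the sample addresses are all assumptions.

```python
import re

# Assumed abbreviation table; a real standardizer would be far larger
ABBREVIATIONS = {
    "st": "street", "st.": "street",
    "rd": "road", "rd.": "road",
    "ave": "avenue", "ave.": "avenue",
}
POSTAL = re.compile(r"^\d{5}$")  # assumes 5-digit postal codes

def normalize_street(street):
    """Lowercase, collapse whitespace, and expand common abbreviations."""
    words = street.lower().split()
    return " ".join(ABBREVIATIONS.get(w, w) for w in words)

def check_address(addr):
    """Return a list of detected issues for one address."""
    issues = []
    postal = (addr.get("postal_code") or "").strip()
    if not postal:
        issues.append("missing postal code")
    elif not POSTAL.match(postal):
        issues.append("malformed postal code")
    return issues

addresses = [
    {"street": "12 Main St.", "postal_code": "90210"},
    {"street": "12  MAIN Street", "postal_code": ""},
    {"street": "7 Oak Rd", "postal_code": "9021"},
]

flagged = {}
for i, addr in enumerate(addresses):
    issues = check_address(addr)
    if issues:
        flagged[i] = issues

# After normalization the first two streets compare equal
same_street = normalize_street(addresses[0]["street"]) == normalize_street(addresses[1]["street"])
```

Records that normalize to the same street but differ in other fields are good candidates for the manual review step.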

Q 4. What data profiling techniques are you familiar with?

Data profiling is the process of analyzing data to understand its characteristics, identify potential quality issues, and inform data quality improvement strategies. I am proficient in several techniques:

- Data Type Discovery: Identifying the data type of each attribute (e.g., integer, string, date).

- Data Value Distribution Analysis: Examining the frequency of different values within each attribute. This can help identify outliers, unexpected values, and potential data entry errors.

- Data Completeness Analysis: Measuring the percentage of non-missing values in each attribute.

- Data Consistency Analysis: Identifying inconsistencies across different data sources or fields (e.g., inconsistent date formats).

- Data Uniqueness Analysis: Identifying duplicate records or rows.

- Data Range and Boundary Analysis: Determining the minimum and maximum values within each attribute. This can help detect invalid data values.

I typically use both automated tools and SQL queries to perform data profiling. Tools provide a more comprehensive view of the data, while SQL allows for more targeted analysis.
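For a sense of what a profiling pass reports, here is a small single-column profiler covering type discovery, completeness, value distribution, and range. It is only a sketch of the idea; dedicated profiling tools do much more, and the sample `ages` column is made up.

```python
from collections import Counter

def profile_column(values):
    """Summarize one column: inferred types, completeness, cardinality, range."""
    present = [v for v in values if v is not None]
    types = Counter(type(v).__name__ for v in present)
    profile = {
        "types": dict(types),
        "completeness": len(present) / len(values) if values else 0.0,
        "distinct": len(set(present)),
        "top_values": Counter(present).most_common(3),
    }
    # min/max are only meaningful when every value has the same type
    if len(types) == 1 and present:
        profile["min"], profile["max"] = min(present), max(present)
    return profile

# Hypothetical column with a missing value and one age stored as a string
ages = [34, 29, None, 29, "41", 120]
result = profile_column(ages)
```

Here the mixed `int` and `str` types in the result are themselves the finding: the column needs a type fix before range analysis makes sense.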

Q 5. Explain your experience with data validation and data cleansing techniques.

Data validation ensures that data meets predefined quality standards. Data cleansing is the process of correcting or removing inaccurate, incomplete, or inconsistent data. My experience involves applying numerous techniques for both:

- Data Validation: Using constraints, rules, and checks during data entry or ETL processes to prevent invalid data from entering the system. Examples include range checks, data type validation, pattern matching (regular expressions), and cross-field validation.

- Data Cleansing: Applying various methods to address data quality issues. These include handling missing values (imputation or removal), correcting incorrect values, removing duplicates, standardizing data formats (e.g., dates, addresses), and resolving inconsistencies.

One project involved validating customer order data. We implemented checks to ensure that order dates were in the past, quantities were positive, and customer IDs matched existing records. Data cleansing involved correcting invalid order dates and addressing missing customer addresses using fuzzy matching to identify likely matches based on similar address components.
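The three order checks described above can be expressed as a small rule function. This is an illustrative sketch, not the project's implementation; the field names, customer IDs, and the fixed reference date are assumptions.

```python
from datetime import date

def validate_order(order, known_customers, today):
    """Return a list of rule violations for one order (empty means valid)."""
    errors = []
    if order["order_date"] > today:
        errors.append("order_date is in the future")
    if order["quantity"] <= 0:
        errors.append("quantity must be positive")
    if order["customer_id"] not in known_customers:
        errors.append("unknown customer_id")
    return errors

known_customers = {"C001", "C002"}
today = date(2024, 6, 1)  # fixed so the example is reproducible
orders = [
    {"id": 1, "order_date": date(2024, 5, 30), "quantity": 2, "customer_id": "C001"},
    {"id": 2, "order_date": date(2024, 7, 4), "quantity": 0, "customer_id": "C009"},
]
violations = {o["id"]: validate_order(o, known_customers, today) for o in orders}
```

Collecting every violation per record, rather than stopping at the first, makes the resulting report more useful for cleansing.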

Q 6. How do you handle missing data in a dataset?

Missing data is a common challenge in data analysis. The best approach depends on the extent and nature of the missing data and the research question. I consider several strategies:

- Deletion: Removing rows or columns with missing data. This is straightforward but can lead to significant information loss, and to biased results, if the missing data is substantial or non-randomly distributed. Listwise deletion, which drops every row that has any missing value, is the most common form.

- Imputation: Replacing missing values with estimated values. Common techniques include mean/median/mode imputation, regression imputation, and k-nearest neighbors imputation. The choice depends on the type of variable and the structure of missing data.

- Model-based imputation: Building a predictive model to predict missing values. This is more complex but can be more accurate than simpler imputation methods.

- Multiple imputation: Generating multiple plausible imputed datasets and then combining the results. This accounts for the uncertainty introduced by imputation.

- Flagging missing values: Creating a new indicator variable that records whether a value is missing for each row. This lets a model account for the missingness pattern and preserves the original information.

The choice of method matters and depends on the type of data, the size of the dataset, and the risk of introducing bias.
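Two of the strategies above, simple imputation and missing-value flags, combine naturally. The sketch below median-imputes a numeric field while recording which values were filled in, and shows listwise deletion for comparison. The `income` field and its values are made up for illustration.

```python
from statistics import median

def impute_with_flag(rows, field):
    """Median-impute a numeric field and record which values were filled in."""
    observed = [r[field] for r in rows if r[field] is not None]
    fill = median(observed)
    return [
        {
            **r,
            field: fill if r[field] is None else r[field],
            f"{field}_was_missing": r[field] is None,
        }
        for r in rows
    ]

rows = [{"income": 40_000}, {"income": None}, {"income": 55_000}, {"income": 90_000}]
imputed = impute_with_flag(rows, "income")

# Listwise deletion, for comparison: keep only complete rows
complete_cases = [r for r in rows if r["income"] is not None]
```

The median is used here because it is less sensitive to skewed values than the mean; that choice is an assumption about the data, not a general rule.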

Q 7. Describe your experience with data governance frameworks and policies.

Data governance frameworks and policies are essential for ensuring data quality, consistency, and compliance. My experience involves working with and implementing various frameworks like DAMA-DMBOK and COBIT. These frameworks provide a structured approach to managing data across the organization. They define roles, responsibilities, processes, and policies related to data management.

A robust data governance framework typically includes:

- Data Policies: Define standards and guidelines for data quality, security, access, and usage. These policies establish clear expectations for data handling throughout the organization.

- Data Standards: Specify naming conventions, data types, and formats to ensure data consistency across different systems.

- Data Quality Metrics: Define key indicators for monitoring and assessing data quality.

- Roles and Responsibilities: Clearly define the roles and responsibilities of individuals and teams involved in data management. This often involves a data steward for each data asset.

- Data Security and Access Controls: Implement mechanisms to secure data and control access to sensitive information.

In a previous role, I was instrumental in developing a data governance program that involved creating data quality metrics, implementing data validation rules, and training employees on data handling best practices. This resulted in a significant improvement in data quality and reduced compliance risks.

Q 8. What are your preferred methods for data modeling?

My preferred methods for data modeling depend heavily on the context, but generally involve a combination of Entity-Relationship Diagrams (ERDs) and dimensional modeling. ERDs are excellent for representing the relationships between entities in a relational database, allowing me to visualize the structure and ensure data integrity. I use them to define tables, attributes, and keys, identifying primary and foreign keys to establish relationships. For example, in an e-commerce system, an ERD would clearly show the relationship between customers, orders, and products.

Dimensional modeling, on the other hand, is particularly useful for data warehousing and business intelligence. This technique focuses on creating a star schema or snowflake schema, organizing data into fact tables and dimension tables to facilitate efficient querying and analysis. In a retail setting, a fact table might contain sales transactions, while dimension tables would provide context such as product details, customer information, and store locations.
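To make the retail example concrete, here is a tiny star schema built in an in-memory SQLite database from Python. The table names, columns, and rows are hypothetical; a real warehouse would have more dimensions (date, customer) and surrogate key management.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, name TEXT, category TEXT);
CREATE TABLE dim_store   (store_id INTEGER PRIMARY KEY, city TEXT);
-- The fact table holds measures plus foreign keys to each dimension
CREATE TABLE fact_sales (
    sale_id    INTEGER PRIMARY KEY,
    product_id INTEGER REFERENCES dim_product(product_id),
    store_id   INTEGER REFERENCES dim_store(store_id),
    quantity   INTEGER,
    amount     REAL
);
INSERT INTO dim_product VALUES (1, 'Kettle', 'Kitchen'), (2, 'Lamp', 'Lighting');
INSERT INTO dim_store VALUES (1, 'Leeds'), (2, 'York');
INSERT INTO fact_sales VALUES
    (1, 1, 1, 2, 50.0), (2, 2, 1, 1, 30.0), (3, 1, 2, 1, 25.0);
""")

# A typical analytical query: join the fact table to a dimension and aggregate
revenue_by_category = conn.execute("""
    SELECT p.category, SUM(f.amount)
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_id = f.product_id
    GROUP BY p.category
    ORDER BY p.category
""").fetchall()
```

Every analytical query follows the same shape: filter and group by dimension attributes, aggregate fact measures.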

I often employ a combination of top-down and bottom-up approaches. A top-down approach starts with a high-level understanding of the business requirements, breaking them down into smaller, manageable components. A bottom-up approach starts with existing data sources, identifying key entities and attributes. The choice depends on the project’s complexity and available information.

Q 9. Explain your experience with database management systems (DBMS).

I have extensive experience with various database management systems, including relational databases like MySQL, PostgreSQL, and SQL Server, as well as NoSQL databases such as MongoDB and Cassandra. My expertise encompasses database design, implementation, optimization, and administration. In a previous role, I was responsible for designing and implementing a highly scalable, fault-tolerant database system for a large-scale e-commerce platform using PostgreSQL. This involved optimizing database queries, creating indexes, and implementing sharding techniques to handle millions of transactions per day. Another project involved migrating a legacy system from an outdated SQL Server instance to a cloud-based PostgreSQL environment, which improved performance and reduced maintenance costs. My experience extends to database security best practices, including access control, encryption, and regular backups.

I’m proficient in writing SQL queries for data retrieval, manipulation, and analysis. For instance, I frequently utilize JOINs, subqueries, and window functions to extract meaningful insights from complex datasets. I also understand the importance of database normalization to minimize data redundancy and ensure data integrity.

Q 10. How do you ensure data security and privacy?

Data security and privacy are paramount. My approach involves a multi-layered strategy, encompassing technical, administrative, and physical safeguards. Technically, this includes encryption both in transit (using TLS, for example HTTPS) and at rest (using database encryption), access control mechanisms such as role-based access control (RBAC), and regular security audits. Administratively, I ensure compliance with relevant regulations like GDPR and CCPA, implement data governance policies and procedures, and provide regular security awareness training to all personnel. Physically, I advocate for secure data center facilities with robust access controls and environmental monitoring.

Data anonymization and pseudonymization are critical techniques I employ to protect sensitive information while still allowing for data analysis. For example, replacing personally identifiable information (PII) like names and addresses with unique identifiers (pseudonymization) reduces exposure while preserving most of the data’s analytical utility. Because pseudonymized data can still be re-linked to individuals, and regulations such as GDPR continue to treat it as personal data, I keep any mapping or key under strict access control. I also adhere to the principle of least privilege, granting users only the access rights they need to perform their tasks. This reduces the risk of data breaches.
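One common way to replace PII with stable identifiers is a keyed hash. The sketch below uses HMAC-SHA256 from the Python standard library; the key value, the 16-character truncation, and the customer records are assumptions for illustration, and in practice the key would come from a secrets manager.

```python
import hashlib
import hmac

SECRET_KEY = b"replace-with-a-managed-secret"  # placeholder; never hard-code in practice

def pseudonymize(value: str) -> str:
    """Map a PII value to a stable token using a keyed hash (HMAC-SHA256)."""
    normalized = value.strip().lower()
    digest = hmac.new(SECRET_KEY, normalized.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]  # truncated for readability; an assumption

customers = [
    {"name": "Ada Lovelace", "email": "ada@example.com", "total_spend": 120.5},
    {"name": "Ada Lovelace", "email": "ADA@example.com ", "total_spend": 80.0},
]
# Drop direct identifiers and keep only the token plus analytical fields
safe = [
    {"customer_token": pseudonymize(c["email"]), "total_spend": c["total_spend"]}
    for c in customers
]
```

The same input always yields the same token, so joins and per-customer aggregates still work. A keyed hash is used rather than a plain hash so that tokens cannot be reproduced by someone who guesses the input but lacks the key.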

Q 11. Describe your experience with data warehousing and business intelligence.

I have significant experience with data warehousing and business intelligence (BI). I’ve designed and implemented several data warehouses using technologies like Snowflake and Amazon Redshift, leveraging dimensional modeling techniques to create efficient and scalable data structures for analytical processing. I’ve worked with ETL (Extract, Transform, Load) processes to ingest data from various sources, ensuring data consistency and quality. For instance, in a past role, I developed a data warehouse for a telecommunications company, integrating data from various billing systems, customer relationship management (CRM) systems, and network performance monitoring tools. This allowed the company to gain valuable insights into customer churn, network performance, and revenue generation.

My BI experience includes developing dashboards and reports using tools such as Tableau and Power BI to visualize key performance indicators (KPIs) and support data-driven decision-making. I’m adept at creating interactive dashboards that allow users to explore data and gain a comprehensive understanding of business performance.

Q 12. What experience do you have with data visualization tools?

I’m proficient in several data visualization tools, including Tableau, Power BI, and Qlik Sense. I understand that effective data visualization is crucial for communicating insights and making data accessible to a broader audience. My experience goes beyond simply creating charts and graphs; I focus on crafting compelling narratives that effectively convey complex information. For example, I’ve used Tableau to create interactive dashboards that allowed sales teams to track their performance in real time, identify top-performing products, and understand regional sales trends. This improved their efficiency and allowed for more data-driven decision making.

I’m familiar with the principles of effective visualization, such as choosing the right chart type for the data and avoiding misleading representations. I also consider the audience when designing visualizations, ensuring that the information is easily understood and actionable.

Q 13. How do you prioritize data quality issues?

Prioritizing data quality issues requires a structured approach. I typically use a risk-based prioritization framework, considering factors like the impact of the issue on business processes, the frequency of the error, and the cost of remediation. I use a scoring system, assigning weights to each factor. For example, a data quality issue that impacts critical business decisions and occurs frequently would receive a higher priority than a minor issue with low frequency. This approach ensures that the most critical issues are addressed first.
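A weighted scoring system of this kind can be sketched as follows. The weights, the 1 to 5 rating scale, and the example issues are all assumptions; remediation cost is inverted so that cheaper fixes rank higher.

```python
# Assumed weights; in practice these would be agreed with stakeholders
WEIGHTS = {"impact": 0.5, "frequency": 0.3, "remediation_cost": 0.2}

def priority_score(issue):
    """Weighted score on 1-5 ratings; cheaper fixes score higher."""
    return round(
        WEIGHTS["impact"] * issue["impact"]
        + WEIGHTS["frequency"] * issue["frequency"]
        + WEIGHTS["remediation_cost"] * (6 - issue["remediation_cost"]),
        2,
    )

issues = [
    {"name": "stale product descriptions", "impact": 1, "frequency": 1, "remediation_cost": 2},
    {"name": "duplicate customers", "impact": 5, "frequency": 4, "remediation_cost": 3},
    {"name": "inconsistent phone format", "impact": 2, "frequency": 5, "remediation_cost": 1},
]
# Highest score first
ranked = sorted(issues, key=priority_score, reverse=True)
```

The exact numbers matter less than having a documented, repeatable rule that stakeholders can challenge and adjust.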

Furthermore, I collaborate with stakeholders to gain a clear understanding of their priorities and concerns. This ensures that the prioritization process is aligned with business needs. I document the prioritization process and regularly review and update it as needed. This ensures that the prioritization remains relevant and effective over time.

Q 14. Explain your approach to data quality monitoring and reporting.

My approach to data quality monitoring and reporting involves a combination of automated checks and manual reviews. I implement automated checks using data quality tools and scripts to continuously monitor data for errors, inconsistencies, and anomalies. This allows for proactive identification and resolution of data quality problems. For example, I might use SQL queries to identify missing values, duplicate records, and outliers. I also use data profiling tools to analyze the characteristics of data and identify potential quality issues.

Manual reviews are equally important to ensure the accuracy and effectiveness of automated checks. I regularly review data quality reports and dashboards, looking for patterns and trends that might indicate underlying issues. I also conduct periodic data audits to assess the overall health of data and identify areas for improvement. My reports are clearly structured, using both quantitative and qualitative measures to present a comprehensive view of data quality. This includes metrics like data completeness, accuracy, consistency, and timeliness. These reports are shared regularly with stakeholders to ensure transparency and accountability.

Q 15. How do you communicate data quality issues to stakeholders?

Communicating data quality issues effectively requires a multifaceted approach, tailoring the message to the stakeholder’s role and understanding. I start by understanding the impact of the issue. Is it impacting a key business decision? Is it causing financial losses? Then I use a clear and concise communication method that fits the audience.

For technical stakeholders, I’d provide detailed reports with metrics such as completeness, accuracy, and consistency rates, perhaps including SQL queries demonstrating the issue. I might also use visualizations like dashboards or heatmaps to highlight problem areas. For example, I might show a dashboard illustrating the percentage of missing values in a critical field, backed by a query that counts the affected rows, such as: SELECT COUNT(*) FROM table_name WHERE critical_field IS NULL; (with table_name standing in for the actual table).
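The query above returns a raw count, while a dashboard usually needs a percentage. A minimal sketch of that calculation, run against an in-memory SQLite database from Python, is shown here; the `customers` table and `postal_code` column are hypothetical stand-ins for the real table and critical field.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, postal_code TEXT)")
conn.executemany(
    "INSERT INTO customers (postal_code) VALUES (?)",
    [("90210",), (None,), ("10001",), (None,)],
)

# COUNT(column) skips NULLs, so COUNT(*) - COUNT(column) is the number missing
total, missing = conn.execute(
    "SELECT COUNT(*), COUNT(*) - COUNT(postal_code) FROM customers"
).fetchone()
missing_pct = 100.0 * missing / total
```

Computing both counts in a single pass avoids scanning the table twice.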

For non-technical stakeholders, I focus on the business impact. I’d translate technical jargon into plain language, focusing on the consequences of poor data quality. A simple example is, “Due to inconsistencies in our customer address data, we’ve seen a 15% increase in undelivered packages, costing the company X dollars.” I might also present the information visually using charts or graphs showing the business impact clearly.

Finally, I always include actionable recommendations for remediation. This shows initiative and provides a path forward, fostering collaboration and trust.

Q 16. What is your experience with data lineage and traceability?

Data lineage and traceability are critical for ensuring data quality and understanding the history of data transformations. My experience encompasses the entire lifecycle, from data origin to final consumption. I’ve worked extensively with various tools to track data flows, including metadata management systems and data cataloging platforms.

In a previous role, we implemented a data lineage tool that allowed us to track the origin of every field in our data warehouse, identifying transformations along the way. This was crucial in troubleshooting data quality issues, as we could quickly pinpoint the source of errors. For example, we discovered a data quality issue where a date field was incorrectly formatted. By tracing its lineage back to the source system, we identified a flawed ETL (Extract, Transform, Load) process responsible for the error. The ability to trace the data’s path allowed for swift resolution and prevented future issues.

I also have hands-on experience creating and managing metadata repositories. This ensures all data assets are clearly documented and their provenance is understood, providing clear audit trails and facilitating regulatory compliance.

Q 17. Describe your experience with data integration tools and techniques.

My experience with data integration spans various tools and techniques. I am proficient in both ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) processes, adapting my approach based on project requirements and data volume. I’ve utilized numerous tools, including Informatica PowerCenter, Apache Kafka, and cloud-based solutions like AWS Glue and Azure Data Factory.

For example, in one project, we used Informatica PowerCenter to integrate data from multiple legacy systems into a new data warehouse. We employed various transformation techniques such as data cleansing, standardization, and deduplication to ensure data quality. We also leveraged Informatica’s metadata management capabilities to track data lineage and maintain a comprehensive data catalog.

In another project involving streaming data, we utilized Apache Kafka for real-time data integration. This allowed us to process high volumes of data with low latency, which was critical for our real-time analytics applications.

My approach focuses on efficient and scalable solutions, utilizing the best tool for the specific task and always prioritizing data quality throughout the integration process.

Q 18. How do you handle large datasets?

Handling large datasets requires a strategic approach that leverages the power of distributed computing and optimized data structures. My experience involves employing techniques such as distributed computing frameworks like Hadoop and Spark, and database technologies designed for scalability, such as cloud-based data warehouses (Snowflake, BigQuery, Redshift).

For example, when working with petabyte-scale datasets, I’ve utilized Apache Spark for parallel processing, significantly reducing processing time. The ability to distribute computations across multiple nodes allows for faster processing compared to traditional methods. I’ve also used techniques like data sampling and dimensionality reduction to manage complexity and improve processing speeds without sacrificing the overall accuracy or integrity of the analyses performed. Partitioning and indexing were other important considerations for optimized query performance.

Careful planning and optimization are vital, focusing on efficient data storage, retrieval, and processing to handle the volume, velocity, and variety inherent in big data.

Q 19. Explain your experience with data migration projects.

Data migration projects require meticulous planning and execution. My experience encompasses various migration strategies, including big bang migrations, phased migrations, and parallel runs. The choice depends on factors such as data volume, system complexity, and business requirements. Each project starts with a thorough assessment of the source and target systems, including data profiling and validation.

In one project, we migrated a large database from an on-premises system to a cloud-based data warehouse using a phased approach. This reduced risk and disruption by migrating data in stages. We started with a subset of the data, thoroughly testing the process before migrating the remaining data. This ensured minimal downtime and allowed for early detection and resolution of any issues. We also employed robust data validation techniques at every stage, verifying data integrity and consistency. Comprehensive documentation and change management were crucial to success.
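Validation at each stage often comes down to comparing row counts and per-row checksums between source and target. The following is a simplified sketch of that idea using in-memory lists; the `id` key and the sample rows are assumptions, and a real migration would compute checksums inside each database rather than pulling rows into Python.

```python
import hashlib

def table_fingerprint(rows, key):
    """Return the row count and a checksum per primary key."""
    checksums = {}
    for row in rows:
        # Sort column names so the checksum does not depend on column order
        canonical = "|".join(f"{k}={row[k]}" for k in sorted(row))
        checksums[row[key]] = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
    return len(rows), checksums

def compare(source_rows, target_rows, key="id"):
    """Report count mismatches, missing rows, and rows whose content differs."""
    src_count, src = table_fingerprint(source_rows, key)
    tgt_count, tgt = table_fingerprint(target_rows, key)
    return {
        "count_match": src_count == tgt_count,
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "changed": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }

source = [{"id": 1, "name": "Ana"}, {"id": 2, "name": "Bo"}, {"id": 3, "name": "Cy"}]
target = [{"id": 1, "name": "Ana"}, {"id": 2, "name": "Bob"}]
result = compare(source, target)
```

Row counts catch dropped records cheaply; checksums catch silent changes such as truncation or encoding damage that counts alone would miss.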

Data quality is paramount during migration. We implement stringent data cleansing and transformation procedures to ensure data accuracy and consistency in the target system. Post-migration, thorough validation and monitoring are essential to confirm the successful completion of the migration and identify any lingering problems.

Q 20. How do you measure the effectiveness of data quality initiatives?

Measuring the effectiveness of data quality initiatives involves establishing key performance indicators (KPIs) and tracking them over time. These KPIs are tailored to the specific goals of the initiative. Common metrics include data accuracy, completeness, consistency, and timeliness.

For example, we might track the percentage of complete records in a particular table, or the number of data quality errors detected. We can use dashboards to visualize these metrics and monitor trends. We’d also track the number of data quality issues resolved and the time it takes to resolve them. A reduction in the number of errors and a decrease in resolution time indicate a successful initiative.

Beyond quantitative metrics, I also assess the qualitative impact, including improved decision-making, reduced operational costs, and increased customer satisfaction. Regular reviews of the KPIs provide insights into the effectiveness of the program, allowing for adjustments and improvements as needed. Feedback from stakeholders is also vital in evaluating the success of the program.

Q 21. What is your experience with different data formats (e.g., CSV, JSON, XML)?

I’m proficient in working with various data formats, including CSV, JSON, XML, and Parquet. My experience involves parsing, transforming, and validating data in these formats. The choice of format often depends on the source of the data and the intended use. CSV is commonly used for simple data exchange, JSON for structured data within applications, and XML for complex, hierarchical data. Parquet is particularly useful for big data applications due to its columnar storage and efficient compression.

For example, when integrating data from an API, which commonly returns JSON, I’ve used programming languages like Python to parse the JSON data and load it into a database. For large datasets, I might use tools designed for processing Parquet files to improve performance. When dealing with legacy systems, it is not uncommon to encounter XML which requires specialized tools to parse and process correctly.
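The JSON-to-database path mentioned above might look like this in Python. The payload stands in for an API response, and the `customers` table and its columns are hypothetical; nested values are stored as JSON text here, which is one option among several.

```python
import json
import sqlite3

# Stand-in for an API response body
payload = '[{"id": 1, "name": "Ana", "tags": ["vip"]}, {"id": 2, "name": "Bo"}]'
records = json.loads(payload)

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL, tags TEXT)"
)
conn.executemany(
    "INSERT INTO customers (id, name, tags) VALUES (:id, :name, :tags)",
    [
        # Optional fields get an explicit default so every row has the same shape
        {"id": r["id"], "name": r["name"], "tags": json.dumps(r.get("tags", []))}
        for r in records
    ],
)
loaded = conn.execute("SELECT id, name, tags FROM customers ORDER BY id").fetchall()
```

Using parameterized inserts rather than string formatting keeps the load safe against malformed or hostile values in the payload.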

Understanding the strengths and weaknesses of each format is critical for selecting the appropriate approach and ensuring data integrity throughout the entire process.

Q 22. How do you ensure data consistency across different systems?

Ensuring data consistency across different systems is crucial for maintaining data integrity and accuracy. Think of it like keeping all versions of a document in sync – you wouldn’t want conflicting information across different copies. This requires a multi-pronged approach.

- Establish a single source of truth (SSOT): Identify a primary system or database as the authoritative source for critical data elements. All other systems should either pull data from this source or rigorously reconcile data with it.

- Data synchronization mechanisms: Implement robust processes for data replication or synchronization between systems. This could involve ETL (Extract, Transform, Load) processes, change data capture (CDC), or message queues. Real-time synchronization is ideal, but batch processing might be necessary depending on volume and latency requirements.

- Data standardization and validation: Define clear data standards and validation rules to ensure consistency in data formats, units, and naming conventions across all systems. Data cleansing and transformation processes should be incorporated to handle inconsistencies that may arise.

- Data governance and access control: Implement robust governance policies and access controls to manage data changes and prevent accidental or malicious modifications. This includes processes for data approval, version control, and auditing.

For example, imagine a company with separate systems for customer relationship management (CRM), order management, and inventory. A properly implemented SSOT for customer data in the CRM ensures that customer information is consistent across all three systems, preventing discrepancies in order fulfillment and inventory tracking.
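Reconciling a downstream system against the source of truth can be sketched as a field-by-field comparison. The dictionaries below stand in for the CRM and the order system in the example; the customer IDs, fields, and values are made up.

```python
def reconcile(source_of_truth, downstream, fields):
    """List field-level differences between the authoritative and downstream copies."""
    diffs = []
    for key, truth in source_of_truth.items():
        other = downstream.get(key)
        if other is None:
            diffs.append((key, "<record>", "present", "missing"))
            continue
        for field in fields:
            if truth[field] != other[field]:
                diffs.append((key, field, truth[field], other[field]))
    return diffs

crm = {  # treated as the SSOT for customer data
    "C001": {"email": "ana@example.com", "city": "Leeds"},
    "C002": {"email": "bo@example.com", "city": "York"},
}
order_system = {
    "C001": {"email": "ana@example.com", "city": "London"},
}
diffs = reconcile(crm, order_system, ["email", "city"])
```

Each tuple records the key, the field, the authoritative value, and the downstream value, which is enough to drive either an automated correction or a review queue.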

Q 23. Describe your experience with master data management (MDM).

My experience with Master Data Management (MDM) spans several projects where I’ve been instrumental in designing, implementing, and maintaining MDM solutions. MDM is all about creating a single, consistent view of master data – things like customer information, product details, and supplier data – that’s shared across the entire organization.

In a previous role, we implemented an MDM solution using a commercial MDM platform for a large retail organization. This involved:

- Defining the master data domains: Identifying the key entities (e.g., customers, products, suppliers) and their attributes.

- Data modeling and integration: Designing the MDM data model and implementing integrations with various source systems (e.g., CRM, ERP, eCommerce platform).

- Data quality rules and workflows: Defining data quality rules and establishing data governance processes to ensure the accuracy and consistency of master data.

- Data cleansing and standardization: Cleaning and standardizing existing master data before loading it into the MDM system.

- User training and support: Training users on how to use the MDM system and providing ongoing support.

This MDM implementation significantly improved data accuracy, reduced data inconsistencies, and enhanced business processes across the organization by providing a single source of truth for master data. It streamlined reporting, improved decision-making, and fostered better collaboration across departments.

Q 24. What are your preferred methods for data anomaly detection?

My preferred methods for data anomaly detection combine statistical techniques with rule-based approaches. It’s a bit like a detective searching for clues: you need multiple approaches to catch all the anomalies.

- Statistical methods: I often use techniques like z-scores or outlier detection algorithms to identify data points that deviate significantly from the norm. This helps find unexpected spikes or dips in patterns. For example, a sudden surge in sales of a particular product could be an anomaly.

- Rule-based methods: Defining business rules that flag inconsistencies helps catch subtle anomalies that statistical methods might miss. For example, a rule could flag orders with a delivery address that differs significantly from the customer’s billing address.

- Data visualization: Visualizing the data using dashboards and charts is crucial. This helps me quickly spot patterns and potential anomalies that might be missed through statistical or rule-based methods alone.

- Machine learning algorithms: For larger or higher-dimensional datasets, unsupervised models such as Isolation Forest or One-Class SVM can surface complex, multivariate anomalies that simple thresholds and rules would miss.

In practice, I use a combination of these methods, starting with simple rules and visualizations, then moving to more sophisticated techniques only if necessary. This layered approach keeps the analysis comprehensive without adding complexity too early.
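As a minimal sketch of the statistical and rule-based approaches above, the following uses only the Python standard library. The function names, the sample sales figures, the 2.5 threshold, and the country-mismatch rule are illustrative assumptions, not part of any specific project.

```python
from statistics import mean, stdev


def zscore_outliers(values, threshold=3.0):
    """Return (index, value) pairs whose absolute z-score exceeds threshold."""
    mu = mean(values)
    sigma = stdev(values)  # sample standard deviation
    if sigma == 0:
        return []  # all values identical, nothing can be an outlier
    return [(i, v) for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


def rule_violations(orders):
    """Hypothetical business rule: flag orders shipped to a country
    different from the billing country."""
    return [o["id"] for o in orders if o["ship_country"] != o["bill_country"]]


# Illustrative data: ten ordinary days and one sudden surge.
daily_sales = [102, 98, 105, 99, 101, 97, 103, 100, 104, 96, 480]
outliers = zscore_outliers(daily_sales, threshold=2.5)

orders = [
    {"id": 1, "ship_country": "US", "bill_country": "US"},
    {"id": 2, "ship_country": "BR", "bill_country": "US"},
]
flagged = rule_violations(orders)
```

One caveat with z-scores on small samples: a single extreme value inflates the standard deviation, which is why the sketch uses a threshold below 3. Median-based or IQR-based checks are less sensitive to that effect.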

Q 25. How do you collaborate with different teams to improve data quality?

Collaboration is key to improving data quality. It’s not a solo act; it involves bringing together diverse perspectives to build a comprehensive data quality improvement strategy.

- Cross-functional teams: I advocate for establishing cross-functional teams composed of representatives from IT, business units, and data governance to ensure everyone’s voice is heard and to achieve buy-in for data quality initiatives.

- Communication and stakeholder management: Regular communication is essential. I maintain open communication channels, utilize shared dashboards to track progress, and provide frequent updates to keep stakeholders informed and engaged.

- Data quality workshops: Conducting workshops to discuss data quality issues, identify root causes, and develop solutions can be very effective. This helps in creating a shared understanding of the challenges and facilitating collaborative problem-solving.

- Data quality metrics and reporting: Measuring data quality through key metrics (completeness, accuracy, consistency, etc.) provides data-driven insights into the effectiveness of our initiatives and allows us to monitor progress and adjust our approach accordingly.

For instance, in one project, I worked with the sales team to identify and address inconsistencies in their data entry processes, resulting in a significant improvement in the accuracy of sales data. This collaboration helped build trust and ensure the success of our data quality initiatives.
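The completeness and accuracy-style metrics mentioned above can be computed very simply before they go onto a shared dashboard. This is a rough sketch under stated assumptions: the record layout, the helper names, and the deliberately simple email pattern are all hypothetical.

```python
import re

# Deliberately simple pattern for illustration; real email validation is stricter.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def completeness(records, field):
    """Share of records where `field` is present and non-empty."""
    if not records:
        return 0.0
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)


def validity(records, field, predicate):
    """Share of non-empty values of `field` that satisfy `predicate`."""
    values = [r[field] for r in records if r.get(field) not in (None, "")]
    if not values:
        return 0.0
    return sum(1 for v in values if predicate(v)) / len(values)


records = [
    {"id": 1, "email": "ana@example.com"},
    {"id": 2, "email": ""},
    {"id": 3, "email": "not-an-email"},
    {"id": 4, "email": "li@example.org"},
]

email_completeness = completeness(records, "email")  # 3 of 4 records filled
email_validity = validity(records, "email", lambda v: bool(EMAIL_RE.match(v)))  # 2 of 3 valid
```

Tracking these ratios per field over time gives each team a concrete number to discuss, rather than a general impression that the data is "bad".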

Q 26. Explain your experience with data quality rules and constraints.

Data quality rules and constraints are the backbone of a robust data quality management program. They’re like the grammar rules of your data, ensuring consistency and accuracy.

My experience includes defining and implementing a wide range of rules and constraints, using both business rules engines and SQL-based approaches. For example:

- Data type validation: Rules that ensure that data is of the correct type (e.g., an age is stored as an integer rather than free text, an email address follows the correct format).

- Range checks: Rules that verify that numerical data falls within acceptable ranges (e.g., age is between 0 and 120).

- Data format validation: Rules that enforce consistent data formatting (e.g., date format, currency format).

- Referential integrity: Constraints that ensure the relationships between tables are valid (e.g., every foreign key value references an existing primary key in the parent table).

- Data consistency checks: Rules that identify conflicts or inconsistencies in data (e.g., the same customer having conflicting primary addresses in two source systems).

I often use a combination of tools and techniques. For example, a business rule engine might handle complex business logic, while database constraints enforce data integrity at the database level. The choice of method depends on the complexity of the rule and the overall architecture. A well-defined set of rules helps enforce data quality from the point of entry.
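To illustrate the database-level side of this, here is a small sketch using Python's built-in `sqlite3` module. The table and column names, the loose email pattern, and the `try_insert` helper are assumptions made for the example; a production schema would differ.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    email       TEXT NOT NULL CHECK (email LIKE '%_@_%._%'),   -- loose format check
    age         INTEGER CHECK (age BETWEEN 0 AND 120)          -- range check
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),  -- referential integrity
    order_date  TEXT NOT NULL
        CHECK (order_date GLOB '[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]')  -- YYYY-MM-DD shape
);
""")


def try_insert(sql, params):
    """Run an insert; return None on success or the constraint error message."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(sql, params)
        return None
    except sqlite3.IntegrityError as exc:
        return str(exc)


ok = try_insert("INSERT INTO customers VALUES (?, ?, ?)", (1, "ana@example.com", 34))
bad_age = try_insert("INSERT INTO customers VALUES (?, ?, ?)", (2, "bo@example.com", 150))
bad_fk = try_insert("INSERT INTO orders VALUES (?, ?, ?)", (10, 999, "2024-01-15"))
bad_date = try_insert("INSERT INTO orders VALUES (?, ?, ?)", (11, 1, "01/15/2024"))
```

Only the first insert succeeds; the other three are rejected by the range check, the foreign key, and the date-format check respectively. Rules that need cross-record or cross-system logic are usually a better fit for a rules engine or a scheduled validation query than for a `CHECK` constraint.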

Q 27. Describe your experience with using SQL for data management tasks.

SQL is my go-to language for many data management tasks. Its power and versatility make it indispensable for data quality control and manipulation. I’ve used it extensively for:

- Data cleansing: Writing SQL queries to identify and correct inaccurate, incomplete, or inconsistent data. For example, using `UPDATE` statements to standardize inconsistent data formats or `DELETE` statements to remove duplicate records.

- Data transformation: Transforming data into the required format for analysis or reporting. This can involve string manipulation, date/time conversions, and aggregations using functions like `SUBSTRING`, `DATE_FORMAT`, `SUM`, and `AVG`.

- Data validation: Creating SQL queries to check for data quality violations against predefined rules. For instance, checking for null values or values outside acceptable ranges using `WHERE` clauses and aggregate functions.

- Data profiling: Generating data profiles using SQL queries to understand the characteristics of the data, including data types, distributions, and null values. This helps in identifying potential data quality problems.

- Database administration: Performing database maintenance tasks such as creating tables, indexes, views, and stored procedures.

Example: UPDATE Customers SET Email = LOWER(Email) WHERE Email LIKE '%@%'; -- standardize email formats

This simple query lower-cases every email address that contains an @ sign, so that later comparisons and joins on email are not affected by letter case. My expertise lies in crafting efficient and effective SQL queries for various data manipulation and quality control needs.
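The cleansing, de-duplication, and profiling tasks listed above can be combined in a short end-to-end sketch. It runs the SQL through Python's built-in `sqlite3` module so it is self-contained; the sample rows and the choice to treat identical name and email pairs as duplicates are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Customers (Id INTEGER PRIMARY KEY, Name TEXT, Email TEXT);
INSERT INTO Customers (Name, Email) VALUES
    ('Ana', 'Ana@Example.com'),
    ('Ana', 'ana@example.com'),
    ('Bo',  NULL),
    ('Cy',  ' cy@example.org ');
""")

# Cleansing: trim stray whitespace and lower-case the email addresses.
conn.execute(
    "UPDATE Customers SET Email = LOWER(TRIM(Email)) WHERE Email IS NOT NULL"
)

# De-duplication: keep the lowest Id for each (Name, Email) pair.
conn.execute("""
    DELETE FROM Customers
    WHERE Id NOT IN (SELECT MIN(Id) FROM Customers GROUP BY Name, Email)
""")

# Profiling: total rows, rows with a missing email, and distinct emails.
total, null_emails, distinct_emails = conn.execute("""
    SELECT COUNT(*),
           SUM(CASE WHEN Email IS NULL THEN 1 ELSE 0 END),
           COUNT(DISTINCT Email)
    FROM Customers
""").fetchone()
```

Running the cleansing step before de-duplication matters here: the two 'Ana' rows only become exact duplicates once their emails share the same case.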

Q 28. What are your experiences with different data quality tools?

My experience encompasses a range of data quality tools, each suited to different needs and scales. Selecting the right tool depends on factors such as budget, data volume, complexity, and integration requirements.

- Commercial MDM platforms: These provide comprehensive MDM capabilities, including data quality rules, workflows, and data governance features. They’re ideal for large organizations with complex data requirements.

- Data quality software: These tools offer a range of data quality capabilities such as profiling, cleansing, and matching. Examples include Informatica Data Quality and Talend Data Quality.

- Open-source tools: Open-source tools like OpenRefine offer powerful data cleansing and transformation capabilities, and are a cost-effective option for smaller projects.

- Database features: Many database systems have built-in data quality features, such as constraints and triggers, that can be used to enforce data quality rules.

- Custom-built solutions: For very specific requirements, custom-built solutions might be necessary, potentially using scripting languages like Python or R combined with database functionalities.

My approach involves selecting the most appropriate tool for the specific task and integrating it effectively with existing systems. I prioritize tools with good usability, scalability, and integration capabilities, as well as the availability of relevant support and documentation.

Key Topics to Learn for Data Management and Quality Control Interview

- Data Governance and Policies: Understanding data governance frameworks, data quality rules, and compliance regulations (e.g., GDPR, HIPAA).

- Data Modeling and Database Design: Practical experience with relational and NoSQL databases, including schema design, normalization, and data modeling techniques. Consider showcasing your understanding of ER diagrams and data warehousing concepts.

- Data Quality Assurance Techniques: Proficiency in data profiling, cleansing, validation, and standardization. Be prepared to discuss specific tools and techniques you’ve used, and how you’ve addressed data inconsistencies and errors.

- Data Integration and ETL Processes: Experience with Extract, Transform, Load (ETL) processes and tools. Highlight your ability to integrate data from various sources and manage data pipelines effectively.

- Data Validation and Verification: Discuss methods for validating data accuracy and completeness, including checksums, cross-referencing, and reconciliation techniques. Demonstrate your understanding of data quality metrics and how to measure them.

- Data Security and Privacy: Understanding data security best practices, including access control, encryption, and data masking. Discuss your experience with data anonymization and pseudonymization techniques.

- Data Visualization and Reporting: Ability to communicate data insights effectively through visualizations and reports. Showcase examples of your data analysis and reporting skills.

- Problem-solving and Troubleshooting: Be ready to discuss your approach to identifying and resolving data quality issues, and your ability to handle complex data challenges. Use the STAR method (Situation, Task, Action, Result) to illustrate your problem-solving skills.

Next Steps

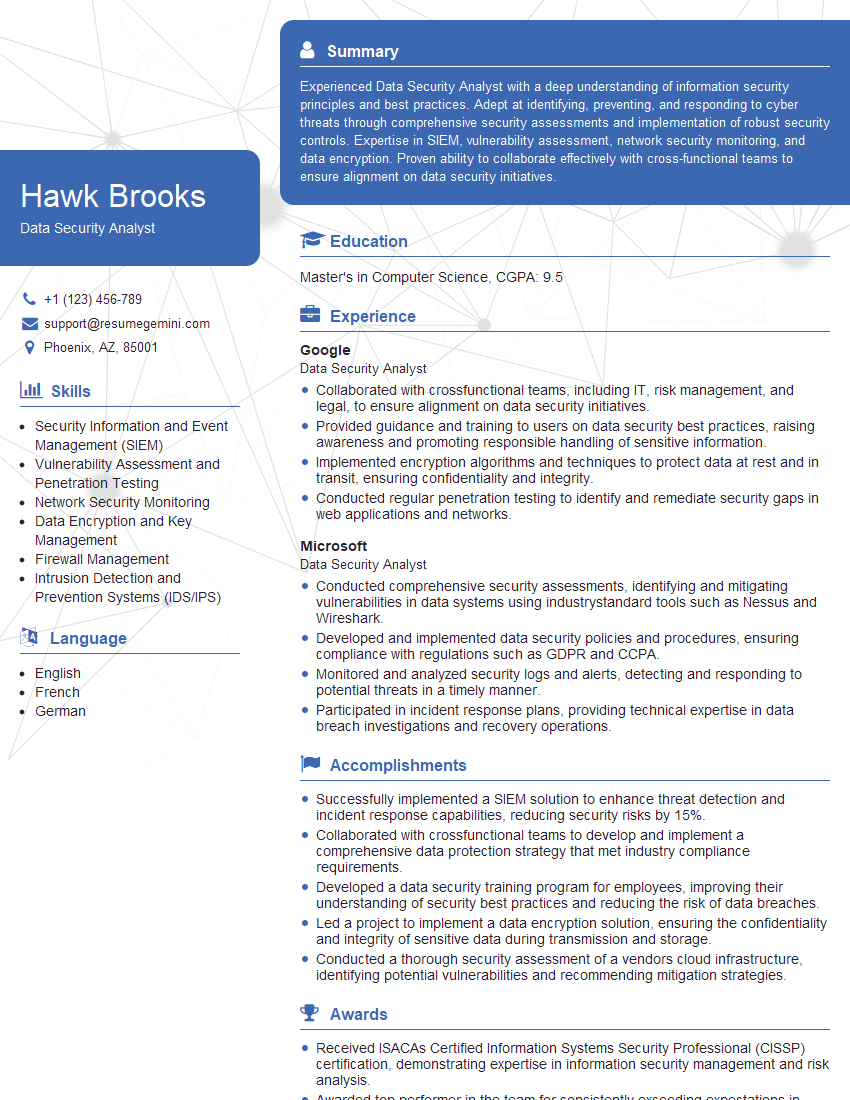

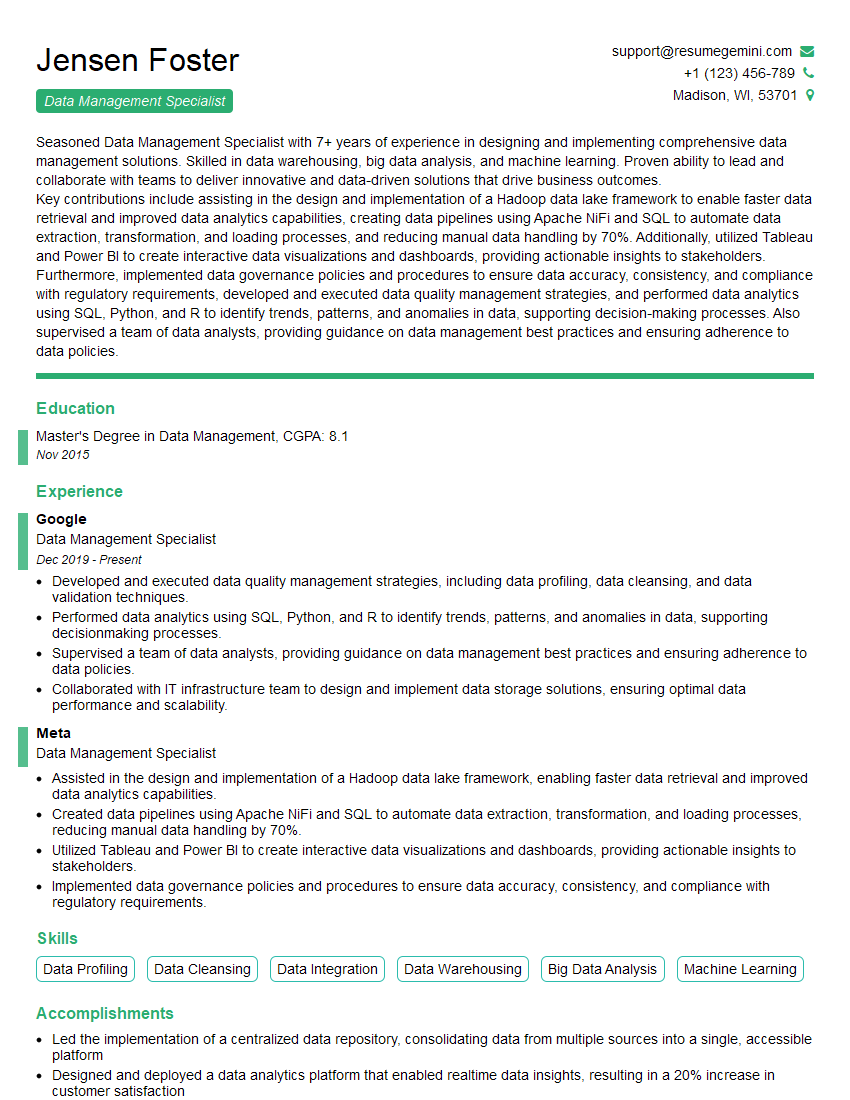

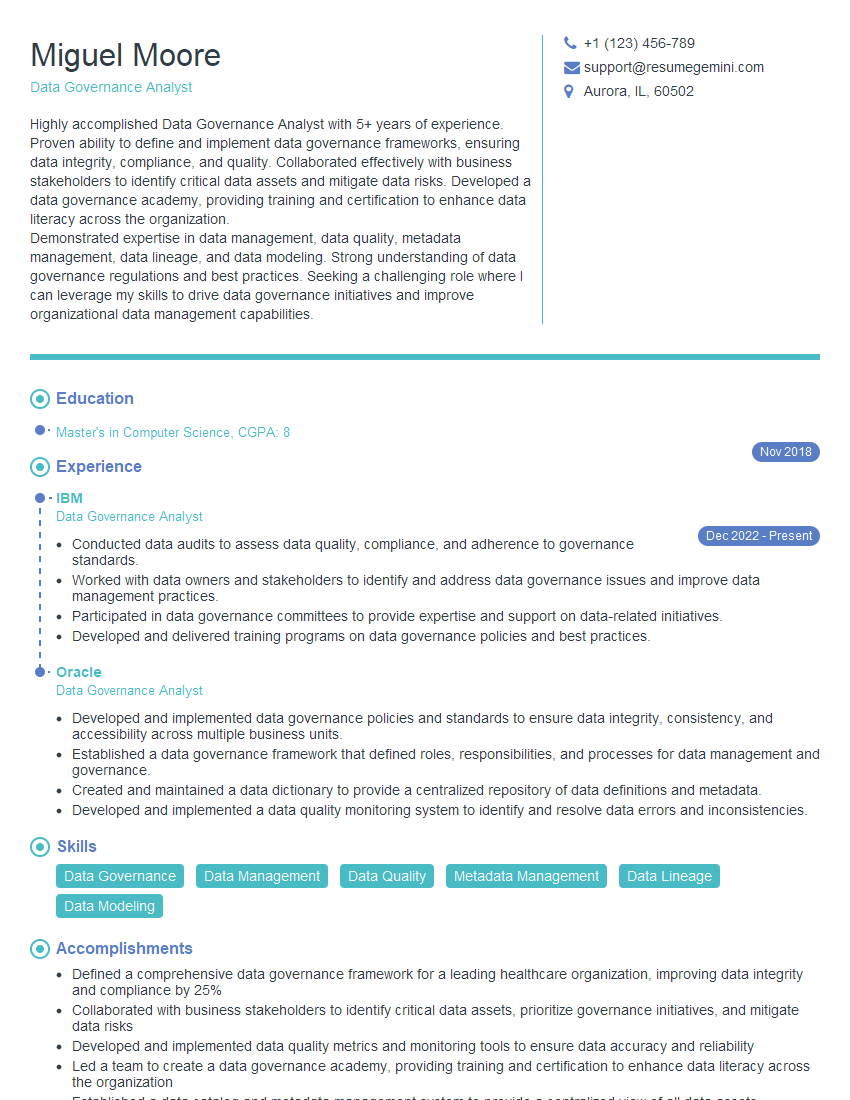

Mastering Data Management and Quality Control is crucial for a successful and rewarding career in today’s data-driven world. These skills are highly sought after, opening doors to numerous opportunities for growth and advancement. To maximize your job prospects, creating a strong, ATS-friendly resume is essential. ResumeGemini is a trusted resource that can help you build a professional and effective resume, showcasing your skills and experience to potential employers. ResumeGemini provides examples of resumes tailored to Data Management and Quality Control roles, giving you a head start in crafting the perfect application.
