Cracking a skill-specific interview, like one for Embedded System Programming, requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in Embedded System Programming Interview

Q 1. Explain the difference between a process and a thread.

In the world of embedded systems, both processes and threads are ways to execute code, but they differ significantly in their resource management and interaction.

A process is an independent, self-contained execution environment. Think of it as a completely separate apartment in a building. Each process has its own memory space, system resources, and security context. If one process crashes, it doesn’t necessarily affect others. Creating a new process is a relatively heavyweight operation, requiring significant system overhead.

A thread, on the other hand, is a lightweight unit of execution within a process. Imagine threads as different roommates sharing the same apartment (the process). They share the same memory space and resources, but have their own execution path. Creating and managing threads is much faster and less resource-intensive than processes. However, since they share memory, careful synchronization mechanisms are needed to avoid data corruption.

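Example: A web browser might run as a single process, but within that process, there might be multiple threads handling different tasks like rendering web pages, downloading images, and handling user input simultaneously. Because the threads share one address space, a fault in one thread (such as an invalid memory access) typically brings down the whole process, whereas a crash in a separate process leaves the other processes running.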

Q 2. What is a real-time operating system (RTOS)?

A Real-Time Operating System (RTOS) is a specialized operating system designed to handle tasks with strict timing constraints. Unlike general-purpose operating systems like Windows or macOS, which prioritize average throughput and user experience, RTOSes prioritize determinism and predictability. They are designed so that tasks can be guaranteed to complete within defined deadlines, which is crucial for applications where timing is critical.

Key Characteristics of RTOS:

- Predictable Timing: Tasks execute within guaranteed time frames.

- Deterministic Behavior: The system’s response is consistent and predictable.

- Real-time Scheduling Algorithms: RTOSes employ sophisticated algorithms to ensure timely task execution (e.g., priority-based scheduling, round-robin scheduling).

- Low Latency: Minimal delay between an event and the system’s response.

- Resource Management: Efficient management of memory, processors, and peripherals.

Real-world examples: RTOSes are commonly used in automotive control systems, industrial automation, medical devices, and aerospace applications where precise timing is crucial for safety and functionality. Think of an airbag deployment system: it must respond within a strict, very short deadline every time.
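
The priority-based scheduling mentioned above can be sketched in a few lines. This is only an illustration, not how any particular RTOS implements it: the `task_t` structure, the `pick_next_task` name, and the convention that a larger number means a more urgent task are all assumptions made for the example.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical task descriptor; a real RTOS keeps far more state. */
typedef struct {
    const char *name;
    int priority;   /* assumption: larger number = more urgent */
    bool ready;     /* true if the task can run right now */
} task_t;

/* Return the index of the highest-priority ready task, or -1 if none is ready. */
int pick_next_task(const task_t *tasks, size_t count) {
    int best = -1;
    for (size_t i = 0; i < count; i++) {
        if (!tasks[i].ready) {
            continue;   /* blocked tasks are never selected */
        }
        if (best < 0 || tasks[i].priority > tasks[best].priority) {
            best = (int)i;
        }
    }
    return best;
}
```

A real scheduler would typically run this decision whenever a task becomes ready or blocks, so the most urgent ready task is always the one executing.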

Q 3. Describe different types of memory in embedded systems.

Embedded systems utilize various types of memory, each with specific characteristics and purposes:

- RAM (Random Access Memory): Volatile memory used for storing data, and sometimes program code, currently in use. It’s fast but loses its contents when power is removed. The two main types are SRAM (Static RAM), which is fast and needs no refresh but costs more per bit and is less dense, and DRAM (Dynamic RAM), which offers higher density at lower cost but is slower and must be refreshed periodically.

- ROM (Read-Only Memory): Non-volatile memory containing firmware, bootloaders, and other permanent data. Data cannot be easily modified after manufacturing or programming. Various types include PROM, EPROM, EEPROM and Flash memory.

- Flash Memory: A type of non-volatile memory that can be electrically erased (in blocks or sectors) and reprogrammed. It is commonly used to store firmware, operating systems, and configuration data. Writes are much slower than RAM writes and each block tolerates only a limited number of erase cycles, but it retains data even when power is off.

- EEPROM (Electrically Erasable Programmable Read-Only Memory): A type of non-volatile memory that can be erased and rewritten one byte at a time, which suits small amounts of occasionally updated data such as calibration values or settings.

- Register: Very fast memory located directly within the CPU used to temporarily store data for immediate processing.

The choice of memory type depends on the application’s needs regarding speed, cost, power consumption, and data retention requirements.

Q 4. Explain the concept of memory mapping.

Memory mapping is a technique that assigns ranges of the processor’s address space to specific memory regions and peripheral devices. Rather than reaching peripherals through a separate I/O mechanism, the software sees RAM, flash, and peripheral registers in one consistent address map. Imagine it as a city map where each address represents a location; a peripheral device might be a building, and RAM would be a neighborhood.

How it works: Address-decoding logic on the bus routes each access to the memory or peripheral that owns that address range. On processors that have a Memory Management Unit (MMU), the MMU additionally translates the logical addresses used by the program into physical addresses; many small microcontrollers have no MMU and work with physical addresses directly. Either way, software interacts with peripherals and memory in a unified manner, using ordinary load and store instructions.

Example: A microcontroller might map a specific memory address range to an ADC (Analog-to-Digital Converter). By writing to that address range, the software can initiate an ADC conversion, and reading from that range will retrieve the converted digital value.
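
The ADC example can be sketched in C roughly as follows. The register layout, bit positions, and names (`adc_regs_t`, `ADC_CTRL_START`, and so on) are hypothetical; on real hardware they come from the device datasheet, and `ADC` would point at a fixed address rather than at an ordinary variable. A variable stands in here so the sketch can run on a PC.

```c
#include <stdint.h>

/* Hypothetical ADC register block; real layouts come from the datasheet. */
typedef struct {
    volatile uint32_t CTRL;    /* bit 0: start conversion */
    volatile uint32_t STATUS;  /* bit 0: conversion done */
    volatile uint32_t DATA;    /* conversion result */
} adc_regs_t;

#define ADC_CTRL_START   (1u << 0)
#define ADC_STATUS_DONE  (1u << 0)

/* Stand-in for the peripheral. On a target, ADC would instead be a cast of
   the peripheral's base address taken from the datasheet. */
adc_regs_t fake_adc;
#define ADC (&fake_adc)

/* Writing to the mapped control register starts a conversion. */
void adc_start(void) {
    ADC->CTRL |= ADC_CTRL_START;
}

/* Reading the mapped status register tells us whether the result is ready. */
int adc_done(void) {
    return (ADC->STATUS & ADC_STATUS_DONE) != 0;
}

/* Reading the mapped data register returns the converted value. */
uint32_t adc_read(void) {
    return ADC->DATA;
}
```

The `volatile` qualifier matters: it tells the compiler that every read and write must actually happen, because the hardware can change these locations behind the program's back.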

Benefits:

- Simplified Programming: Peripheral registers are accessed with ordinary load and store instructions (in C, through pointers) rather than special I/O instructions.

- Efficient Resource Management: Better utilization of memory and other resources.

- Portability: Code can be easily ported between similar platforms.

Q 5. What are interrupts and how do they work?

Interrupts are signals that temporarily suspend the normal execution of a program to handle urgent events. They are like a phone call interrupting your meeting: important enough to pause your current task and deal with the call first. These signals originate from hardware or software and alert the processor to attend to a specific event.

How they work: When an interrupt occurs, the processor:

- Saves the current program’s state (registers, program counter).

- Determines the source of the interrupt.

- Jumps to a specific interrupt service routine (ISR) associated with the interrupt source.

- Executes the ISR to handle the event.

- Restores the saved program state and resumes execution from where it was interrupted.

Examples of interrupt sources: Timer interrupts, external hardware signals (buttons, sensors), serial communication events.
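
The "determine the source, then jump to its ISR" steps can be modeled in software with a table of function pointers. This is a simplified sketch: on most microcontrollers the hardware performs the lookup, and the interrupt numbers and handler names below are made up for illustration.

```c
#include <stddef.h>

typedef void (*isr_t)(void);

/* Hypothetical interrupt numbers. */
enum { IRQ_TIMER, IRQ_BUTTON, IRQ_UART, IRQ_COUNT };

int timer_hits;
int button_hits;

static void timer_handler(void)  { timer_hits++; }
static void button_handler(void) { button_hits++; }

/* The table maps each interrupt source to its service routine. */
static const isr_t vector_table[IRQ_COUNT] = {
    [IRQ_TIMER]  = timer_handler,
    [IRQ_BUTTON] = button_handler,
    /* IRQ_UART has no handler installed and stays NULL */
};

/* Look up and run the ISR; returns 0 if handled, -1 otherwise. */
int dispatch_irq(int irq) {
    if (irq < 0 || irq >= IRQ_COUNT || vector_table[irq] == NULL) {
        return -1;
    }
    vector_table[irq]();
    return 0;
}
```

Saving and restoring the interrupted program's state is left out here because the CPU and compiler-generated ISR entry code normally take care of it.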

Q 6. How do you handle interrupts in an embedded system?

Handling interrupts effectively in embedded systems involves several key steps:

- Interrupt Vector Table: An interrupt vector table maps interrupt sources to their corresponding ISRs. It provides the address where the processor should jump to when a specific interrupt occurs.

- Interrupt Service Routines (ISRs): These are short, efficient functions designed to handle a specific interrupt event. They must be concise to minimize the time the main program is interrupted.

- Interrupt Prioritization: Assigning priorities to interrupts ensures that more critical interrupts (e.g., those related to safety) are handled before less critical ones (e.g., a user button press).

- Interrupt Disabling/Enabling: Disabling interrupts prevents further interrupts from occurring during critical sections of the code (like an ISR), ensuring data consistency. Enabling interrupts allows the system to respond to new events.

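- Interrupt Latency: Keep the delay between an interrupt being raised and its ISR starting short and predictable, and keep the ISR itself brief, to ensure real-time responsiveness. This means limiting how long interrupts stay disabled, optimizing ISR code, and deferring longer work to the main loop or a task.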

Example: In a system controlling a motor, a timer interrupt might be used to periodically check the motor’s speed and adjust its control signals. A higher priority interrupt from a safety sensor might temporarily halt the motor if a dangerous condition is detected. Properly handling both of these interrupts would ensure both the motor’s functional control and safety.
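
A common way to keep ISRs short is to have the ISR only record that something happened and let the main loop do the real work. The sketch below assumes a hypothetical periodic timer interrupt; `motor_timer_isr` and `process_tick` are illustrative names, and on a PC the ISR is simply called like a normal function.

```c
#include <stdbool.h>
#include <stdint.h>

/* Shared between ISR and main loop, so both are volatile. */
static volatile bool tick_pending = false;
static volatile uint32_t tick_count = 0;

/* Hypothetical timer ISR: do the minimum and return quickly. */
void motor_timer_isr(void) {
    tick_count++;
    tick_pending = true;
}

/* Called from the main loop. Runs the deferred work if a tick is pending
   and returns true when it did so. */
bool process_tick(uint32_t *speed_checks) {
    if (!tick_pending) {
        return false;
    }
    tick_pending = false;
    (*speed_checks)++;   /* placeholder for the actual speed check */
    return true;
}
```

On real hardware, clearing the flag and reading related data may need a brief interrupt-disabled section if more than one variable has to stay consistent.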

Q 7. Explain the concept of context switching.

Context switching is the process of saving the state of a currently running task (thread or process) and restoring the state of another task to allow it to run. It’s like switching between different TV channels; you pause one channel, switch to another, and then resume the first when you’re ready.

How it works: The operating system saves the context of the current task, including:

- Registers: The values stored in the CPU’s registers.

- Program Counter: The address of the next instruction to be executed.

- Stack Pointer: The location of the top of the stack.

- Other process-specific information: Memory mappings, open files, etc.

Then, the OS loads the context of the next task, allowing it to resume execution from where it left off. This allows the OS to manage multiple tasks concurrently, even on a single-core processor by rapidly switching between them.
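
As a rough model of the save and restore steps, the sketch below treats the CPU registers as a plain structure. Everything here is hypothetical: a real context switch is written in assembly for a specific architecture, and `cpu_context_t`, `task_ctx_t`, and the register count are assumptions chosen to keep the example small.

```c
#include <stdint.h>

/* Simplified register set: a few general registers, PC, and SP. */
typedef struct {
    uint32_t r[4];
    uint32_t pc;
    uint32_t sp;
} cpu_context_t;

/* Per-task control block holding the saved context. */
typedef struct {
    int id;
    cpu_context_t ctx;
} task_ctx_t;

/* Stand-in for the real CPU registers. */
cpu_context_t cpu;

/* Save the running task's registers, then load the next task's registers. */
void context_switch(task_ctx_t *from, task_ctx_t *to) {
    from->ctx = cpu;   /* save state of the outgoing task */
    cpu = to->ctx;     /* restore state of the incoming task */
}
```

After the switch, execution continues at the restored program counter, which is why the incoming task appears to resume exactly where it left off.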

Importance in RTOS: Efficient context switching is crucial in RTOSes for ensuring real-time responsiveness. The time taken for context switching needs to be predictable and minimal to meet strict timing requirements. Inefficient context switching could lead to missed deadlines and system instability.

Q 8. What are semaphores and mutexes, and when would you use each?

Semaphores and mutexes are synchronization primitives used in concurrent programming to control access to shared resources and prevent race conditions. Think of them as traffic signals for your processes.

A mutex (mutual exclusion) behaves much like a binary semaphore in that it is either locked or unlocked, but it adds ownership: only the task that locked it may unlock it, and many RTOSes apply priority inheritance to mutexes. Only one task can hold the mutex at a time. Imagine a single-lane bridge: only one car can cross at a time. You’d use a mutex when you need to protect a shared resource that only one task should access at any given moment, such as a global variable or a hardware peripheral.

A semaphore, on the other hand, can have a count greater than one. It represents a pool of resources. Tasks can acquire the semaphore (decrementing the count) and release it (incrementing the count). Think of a parking lot with multiple parking spaces: multiple cars can park simultaneously, up to the capacity of the lot. You’d use a semaphore when multiple tasks can access a shared resource concurrently, but with a limited number of simultaneous accesses.

Example: Imagine a printer shared by multiple tasks. A mutex would be appropriate if only one task should print at a time. A semaphore might be used if multiple tasks can send print jobs concurrently, but the printer can only handle a certain number of jobs simultaneously (e.g., a semaphore with a count equal to the number of printer buffers).
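
The counting behavior of a semaphore can be sketched with C11 atomics. This is a minimal, non-blocking illustration rather than an RTOS API: the `sem_sketch_*` names are invented, and a real semaphore would put the caller to sleep instead of returning `false`.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical counting semaphore: count = number of free resources. */
typedef struct {
    atomic_int count;
} sem_sketch_t;

void sem_sketch_init(sem_sketch_t *s, int initial) {
    atomic_init(&s->count, initial);
}

/* Try to take one resource; returns false if none are free. */
bool sem_sketch_try_acquire(sem_sketch_t *s) {
    int c = atomic_load(&s->count);
    while (c > 0) {
        /* On failure, c is reloaded with the current count and we retry. */
        if (atomic_compare_exchange_weak(&s->count, &c, c - 1)) {
            return true;
        }
    }
    return false;
}

/* Give one resource back. */
void sem_sketch_release(sem_sketch_t *s) {
    atomic_fetch_add(&s->count, 1);
}
```

Initialized with the number of printer buffers, this would admit that many concurrent jobs; initialized with 1, it behaves like a binary semaphore, though without the ownership a mutex provides.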

Q 9. Explain different methods of inter-process communication (IPC).

Inter-process communication (IPC) allows different processes or tasks to exchange data and synchronize their actions. Several methods exist, each with its strengths and weaknesses:

- Shared Memory: Processes share a common memory region. This is the fastest method but requires careful synchronization to avoid race conditions. Think of it like a shared whiteboard where multiple people can write at the same time—you need rules to prevent chaos.

- Message Queues: Processes communicate by sending and receiving messages asynchronously. This provides better decoupling than shared memory but introduces some overhead. Imagine sending emails: you can send a message without knowing if the recipient is immediately available.

- Pipes: Unidirectional or bidirectional communication channels. Think of a water pipe; data flows in one direction. Often used for simple communication between parent and child processes.

- Sockets: Used for network communication between processes on the same machine or across a network. Think of it like making a phone call—it’s a connection between two distinct entities.

- Signals: Asynchronous notifications sent from one process to another to signal events. Imagine pressing a button to interrupt a process.

The choice of IPC mechanism depends on factors like the nature of the data being exchanged, the required level of synchronization, and the performance requirements.
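
To make the message-queue idea concrete, here is a small fixed-size ring buffer. It is a single-context sketch with invented names (`msg_queue_t`, `mq_send`, `mq_receive`); an RTOS queue would add locking and let tasks block while the queue is full or empty.

```c
#include <stdbool.h>
#include <stdint.h>

#define MQ_CAPACITY 4u

/* Fixed-size FIFO of one-byte messages. Zero-initialize before use. */
typedef struct {
    uint8_t buf[MQ_CAPACITY];
    unsigned head;    /* index of the oldest message */
    unsigned tail;    /* index where the next message is written */
    unsigned count;   /* number of messages currently stored */
} msg_queue_t;

/* Append a message; returns false if the queue is full. */
bool mq_send(msg_queue_t *q, uint8_t msg) {
    if (q->count == MQ_CAPACITY) {
        return false;
    }
    q->buf[q->tail] = msg;
    q->tail = (q->tail + 1u) % MQ_CAPACITY;
    q->count++;
    return true;
}

/* Remove the oldest message; returns false if the queue is empty. */
bool mq_receive(msg_queue_t *q, uint8_t *out) {
    if (q->count == 0u) {
        return false;
    }
    *out = q->buf[q->head];
    q->head = (q->head + 1u) % MQ_CAPACITY;
    q->count--;
    return true;
}
```

Because the sender only writes and the receiver only reads, the two sides stay decoupled: neither needs to know when the other runs.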

Q 10. What is a critical section?

A critical section is a code segment that accesses shared resources. It’s crucial that only one task executes the critical section at any given time to prevent data corruption or other inconsistencies. Think of it as a single-occupancy restroom: only one person can be inside at a time. If multiple people try to enter simultaneously, you’ll get a mess!

Example: Updating a global variable from multiple threads without proper synchronization can lead to unexpected results. The code segment that updates the variable is the critical section. If multiple threads enter the critical section simultaneously, the final value of the global variable will be unpredictable.

Q 11. How do you prevent race conditions?

Race conditions occur when multiple tasks access and manipulate shared resources concurrently, leading to unpredictable behavior. The outcome depends on the unpredictable order in which the tasks execute. To prevent race conditions, you need to ensure mutual exclusion within critical sections. This can be achieved using synchronization primitives like:

- Mutexes: Ensure only one task can access the shared resource at a time.

- Semaphores: Control access to a shared resource by multiple tasks, limiting concurrent access.

- Disabling Interrupts: (Generally discouraged unless absolutely necessary and only for very short critical sections) Prevents context switching, guaranteeing that the current task won’t be interrupted during the critical section. However, this approach can impact system responsiveness and is generally less preferred.

Proper use of these mechanisms ensures that only one task is operating within the critical section at a given time.
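
For the interrupt-disabling approach, a common pattern is to save the previous interrupt state and restore it afterwards, so that nested critical sections behave correctly. The sketch below simulates the interrupt-enable bit with a variable; `irq_save` and `irq_restore` are hypothetical helpers standing in for whatever the target's toolchain provides.

```c
#include <stdint.h>

/* Stand-in for the CPU's global interrupt-enable bit (1 = enabled). */
uint32_t irq_enabled = 1;

/* Disable interrupts and return the previous state. */
static uint32_t irq_save(void) {
    uint32_t previous = irq_enabled;
    irq_enabled = 0;
    return previous;
}

/* Put the interrupt-enable bit back to what it was. */
static void irq_restore(uint32_t previous) {
    irq_enabled = previous;
}

volatile uint32_t shared_counter;

/* Read-modify-write on shared data, protected by a short critical section. */
void counter_add(uint32_t amount) {
    uint32_t state = irq_save();
    shared_counter += amount;   /* the critical section */
    irq_restore(state);
}
```

Restoring the saved state, rather than unconditionally re-enabling interrupts, means the function is safe to call from code that already had interrupts disabled.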

Q 12. What is deadlock, and how can you avoid it?

Deadlock is a situation where two or more tasks are blocked indefinitely, waiting for each other to release the resources that they need. Imagine two trains approaching each other on a single-track railway—neither can proceed until the other moves, resulting in a standstill. This is a classic deadlock scenario.

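Avoiding Deadlock: Deadlock requires all four of the following conditions to hold at once, so breaking any one of them prevents it: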

- Mutual Exclusion: This is inherent to resource sharing, so you can’t avoid it completely.

- Hold and Wait: Avoid allowing a task to hold one resource while waiting for another. Request all resources at once.

- No Preemption: If a task can’t acquire a needed resource, have it release the resources it already holds and retry later (for example, by using try-lock calls with a timeout) instead of waiting while holding them.

- Circular Wait: The most crucial condition to break. Implement a strict resource ordering to prevent circular dependencies. Assign an order to all resources. Tasks must request resources in that order.

Careful resource management and appropriate resource ordering are essential to prevent deadlocks in embedded systems.
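
Resource ordering can be illustrated with a helper that always locks two resources in ascending ID order, whatever order the caller names them in. The resource type, the IDs, and the acquisition log are assumptions made so the behavior can be observed; real code would call the RTOS mutex API inside `resource_lock`.

```c
#define LOG_SIZE 8

/* Hypothetical lockable resource with a fixed global ordering ID. */
typedef struct {
    int id;
    int locked;
} resource_t;

/* Records the order in which resources were locked. */
int lock_log[LOG_SIZE];
int lock_log_len;

static void resource_lock(resource_t *r) {
    r->locked = 1;   /* a real system would take a mutex here */
    if (lock_log_len < LOG_SIZE) {
        lock_log[lock_log_len++] = r->id;
    }
}

/* Lock both resources, always taking the lower ID first. */
void lock_both(resource_t *a, resource_t *b) {
    if (a->id > b->id) {
        resource_t *tmp = a;
        a = b;
        b = tmp;
    }
    resource_lock(a);
    resource_lock(b);
}
```

Since every task acquires in the same global order, no task can hold a higher-numbered resource while waiting for a lower-numbered one, which rules out a circular wait.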

Q 13. Explain the concept of priority inversion.

Priority inversion occurs when a lower-priority task holds a resource that a higher-priority task needs. The higher-priority task is blocked, and if a medium-priority task then preempts the lower-priority holder, the high-priority task ends up waiting on tasks of lower priority, inverting the expected priority order. Imagine a slower pedestrian holding a gate that a high-speed train wants to cross.

Example: A high-priority task needs to access a sensor, but a low-priority task currently holds a lock on the sensor. The high-priority task will be blocked until the low-priority task releases the sensor. If medium-priority tasks keep the low-priority task from running, the delay is unbounded and the high-priority task can miss its deadline.

Solutions: Priority inheritance or priority ceiling protocols can be used to mitigate priority inversion. Priority inheritance temporarily raises the priority of the lower-priority task holding the resource to the priority of the waiting higher-priority task, ensuring that the resource is released quickly.
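
The bookkeeping behind priority inheritance can be sketched as follows. This is a deliberately simplified model with invented names (`pi_task_t`, `pi_mutex_t`): it handles one waiter and one mutex, assumes larger numbers mean higher priority, and leaves the actual blocking and scheduling to an imagined kernel.

```c
#include <stddef.h>

typedef struct {
    int base_prio;   /* priority assigned at creation */
    int eff_prio;    /* priority the scheduler currently uses */
} pi_task_t;

typedef struct {
    pi_task_t *owner;   /* NULL when the mutex is free */
} pi_mutex_t;

/* Returns 1 if the mutex was acquired, 0 if the caller has to block. */
int pi_mutex_lock(pi_mutex_t *m, pi_task_t *t) {
    if (m->owner == NULL) {
        m->owner = t;
        return 1;
    }
    /* Boost the owner so it cannot be preempted by medium-priority tasks. */
    if (t->eff_prio > m->owner->eff_prio) {
        m->owner->eff_prio = t->eff_prio;
    }
    return 0;
}

/* Release the mutex and drop the owner back to its base priority. */
void pi_mutex_unlock(pi_mutex_t *m) {
    if (m->owner != NULL) {
        m->owner->eff_prio = m->owner->base_prio;
        m->owner = NULL;
    }
}
```

While boosted, the low-priority owner runs ahead of any medium-priority work, so the time the high-priority task waits is bounded by the length of the critical section.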

Q 14. How do you debug an embedded system?

Debugging embedded systems is challenging due to their resource constraints and lack of a full-blown operating system. Strategies include:

- Print Statements (printf debugging): Simple but effective for basic debugging. Insert print statements strategically in your code to monitor variable values and program flow. However, be mindful of the limited output buffer space.

- Hardware Debuggers (JTAG, SWD): Powerful tools that allow you to step through your code, examine registers, set breakpoints, and single-step through instructions. Essential for serious debugging.

- Logic Analyzers: Capture and analyze digital signals on a circuit, revealing timing issues or incorrect data transmission. Useful for identifying hardware-related problems.

- Oscilloscope: Analyze analog signals to detect voltage levels, timing issues, or noise.

- Software Trace Tools: These capture program execution traces, which are very helpful for analyzing timing and resource usage. They can offer deep insights into events and timing relationships.

- Simulation: Before deploying to actual hardware, simulate your system to detect errors earlier in development.

The choice of debugging techniques depends on the complexity of the system, the nature of the bug, and the available resources.

Q 15. What are the advantages and disadvantages of using an RTOS?

Real-Time Operating Systems (RTOS) are crucial for embedded systems requiring precise timing and multitasking. They offer significant advantages, but also come with drawbacks.

Advantages:

- Deterministic behavior: RTOSes provide predictable task execution times, crucial for applications with strict timing constraints, like motor control or real-time data acquisition. This is in contrast to a simple loop-based approach where task execution time can vary depending on the load.

- Multitasking: They allow concurrent execution of multiple tasks, efficiently managing resources and maximizing system utilization. Imagine a system needing to simultaneously read sensor data, control actuators, and communicate over a network – an RTOS handles this elegantly.

- Resource management: RTOSes provide mechanisms for managing memory, peripherals, and interrupts effectively, preventing conflicts and ensuring system stability.

- Modular design: Tasks are typically designed as independent modules, making development, testing, and maintenance easier. This modularity improves code reusability and reduces complexity.

Disadvantages:

- Increased complexity: Implementing and managing an RTOS adds complexity compared to a bare-metal approach. There’s a learning curve associated with understanding scheduling algorithms, task synchronization primitives, and memory management.

- Overhead: RTOSes introduce some runtime overhead due to task switching, context saving, and inter-task communication. This can be significant for resource-constrained systems.

- Memory footprint: RTOS kernels require a certain amount of memory, which can be a limiting factor in small embedded systems with limited RAM.

- Real-time constraints: While designed for real-time applications, the choice of RTOS and its configuration need careful consideration to meet specific timing deadlines. An improperly configured RTOS could miss crucial deadlines.

For example, an automotive engine control unit (ECU) would benefit immensely from an RTOS due to its strict timing requirements, while a simple thermostat might not need the added complexity and overhead.

Q 16. Explain different debugging techniques used in embedded systems.

Debugging embedded systems can be challenging due to limited access and the real-time nature of operations. Several techniques are employed:

- JTAG (Joint Test Action Group): This hardware debugging interface allows direct access to the microcontroller’s internal registers and memory. It facilitates single-stepping through code, setting breakpoints, and inspecting variables. It’s the workhorse of low-level debugging.

- In-circuit emulators (ICE): ICEs offer more advanced debugging capabilities than JTAG, often including real-time tracing, which logs execution details for later analysis. They can replace the target microcontroller entirely, providing a more controlled debugging environment.

- Serial wire debugging (SWD): A two-pin alternative to JTAG, SWD is used by many ARM-based microcontrollers. It’s frequently preferred for its lower pin count.

- Print statements (printf debugging): While simple, this method involves strategically placing printf statements in the code to output diagnostic information through a serial port or other communication channel. It is invaluable for quick checks but is less effective for complex issues.

- Logic analyzers: These tools capture digital signals on multiple pins simultaneously, allowing for detailed analysis of communication protocols, timing issues, and signal integrity problems. They are indispensable when dealing with complex timing or hardware interaction issues.

- Oscilloscope: Useful for visually examining analog signals and detecting issues like noise or signal distortion. Particularly crucial when debugging systems with analog components.

- Debuggers integrated into IDEs: Modern Integrated Development Environments (IDEs) often integrate debugging functionalities. They offer features like stepping through code, setting breakpoints, viewing variables, and evaluating expressions all within a graphical interface.

For instance, when debugging a sensor reading issue, I might start with printf statements to check if the data is being read correctly. If the problem persists, I’d then use JTAG or SWD to step through the code and examine register values to pinpoint the exact source of the error. In cases of complex timing interactions, I might use a logic analyzer for deep analysis.

Q 17. What are the various communication protocols used in embedded systems?

Embedded systems employ a variety of communication protocols depending on the application requirements. Factors like data rate, distance, power consumption, and cost all influence the choice.

Serial communication:

- UART (Universal Asynchronous Receiver/Transmitter): Simple, widely used for low-speed communication.

- SPI (Serial Peripheral Interface): High-speed, synchronous communication for connecting multiple peripherals.

- I2C (Inter-Integrated Circuit): Low-speed, multi-master communication protocol for connecting multiple devices on a bus.

Network communication:

- Ethernet: Used for high-speed, long-distance communication. Essential for systems requiring significant network bandwidth like industrial control or networking appliances.

- CAN (Controller Area Network): Robust, real-time communication protocol widely used in automotive and industrial applications.

- Wi-Fi: Wireless communication offering flexibility and convenience, often used for IoT (Internet of Things) devices.

- Bluetooth: Low-power, short-range wireless communication, often used in consumer electronics and personal area networks.

Other protocols:

- USB (Universal Serial Bus): Widely used for data transfer and power delivery.

- LIN (Local Interconnect Network): Low-cost, single-master communication system used in automotive applications.

Choosing the right protocol is crucial. For example, a low-cost, battery-powered sensor node might use I2C or Bluetooth to conserve power, while a high-performance industrial control system might leverage Ethernet or CAN for its speed and reliability.

Q 18. Explain the differences between SPI, I2C, and UART.

SPI, I2C, and UART are common serial communication protocols, each with distinct characteristics.

| Feature | SPI | I2C | UART |
|---|---|---|---|
| Signal lines | MOSI, MISO, SCK, plus one chip-select (CS) line per slave | SDA, SCL (2) | TXD, RXD (2) |
| Clocking | Synchronous (clock line provided) | Synchronous (clock line provided) | Asynchronous (no dedicated clock line) |
| Master/Slave | Typically one master, multiple slaves | Multiple masters possible | No master/slave roles; point-to-point between two devices |
| Speed | High speed | Medium to low speed | Low to medium speed |
| Complexity | Medium | Low | Low |
| Distance | Short to medium | Short | Short to medium |
| Error detection | No built-in mechanism | ACK/NACK after each byte only | Optional parity bit |

SPI (Serial Peripheral Interface) is best suited for high-speed, full-duplex communication with multiple peripherals, but it can become complex with multiple devices. I2C (Inter-Integrated Circuit) is efficient for connecting multiple devices with a simpler setup, but it’s slower. UART (Universal Asynchronous Receiver/Transmitter) is simple and suitable for low-speed, point-to-point communication. Consider an application like an LCD screen: SPI is good for the high data rate needed to refresh the screen, whereas a low-speed sensor might interface better with I2C. A simple debugging output might utilize UART due to its simplicity and prevalence of support.
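
To show what "asynchronous" framing means for UART, the sketch below builds one frame in the common 8E1 format (8 data bits, even parity, 1 stop bit). The function names are made up, and the convention that bit 0 of the result is the first bit on the wire is an assumption of this example; in practice the UART peripheral generates the frame in hardware.

```c
#include <stdint.h>

/* Even parity: the parity bit makes the total number of 1 bits even. */
static unsigned even_parity_bit(uint8_t data) {
    unsigned ones = 0;
    for (int i = 0; i < 8; i++) {
        ones += (data >> i) & 1u;
    }
    return ones & 1u;
}

/* Build an 11-bit 8E1 frame. Bit 0 of the result is sent first. */
uint16_t uart_frame_8e1(uint8_t data) {
    uint16_t frame = 0;                                /* bit 0: start bit (0) */
    frame |= (uint16_t)((uint16_t)data << 1);          /* bits 1-8: data, LSB first */
    frame |= (uint16_t)(even_parity_bit(data) << 9);   /* bit 9: parity */
    frame |= (uint16_t)(1u << 10);                     /* bit 10: stop bit (1) */
    return frame;
}
```

The start bit is what lets the receiver synchronize without a clock line: it sees the line fall from idle (1) to 0 and then samples the remaining bits at the agreed baud rate.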

Q 19. How would you design a watchdog timer?

A watchdog timer (WDT) is a crucial safety mechanism in embedded systems. It acts as a fail-safe, restarting the system if the main application software malfunctions or hangs. The design involves several key components:

- Timer counter: A counter that decrements at a fixed rate (e.g., using a timer peripheral in the microcontroller).

- Load value: An initial value loaded into the counter. This value determines the timeout period.

- Reset mechanism: A mechanism to periodically reset the timer counter. This is typically done by the main application code.

- Timeout action: The action to take when the counter reaches zero (typically a system reset).

Design steps:

- Choose a timer peripheral: Select an appropriate timer on the microcontroller that suits the desired timeout period.

- Configure the timer: Set the timer’s prescaler, clock source, and interrupt configuration (if used for interrupt-based methods).

- Implement the reset mechanism: In the main application loop, periodically (before the timeout) reset the timer’s counter to the load value. The frequency of this reset should be chosen to avoid unnecessary resets while providing adequate time to detect issues.

- Handle the timeout: Implement the response to the timer reaching zero, typically a system reset. The precise method can vary depending on the microcontroller architecture.

Example pseudo-code (interrupt-based):

    #include <stdint.h>

    void watchdog_init(uint16_t timeout) {
        (void)timeout;  // Configure timer with timeout value
    }

    void watchdog_reset(void) {
        // Reset timer counter
    }

    void watchdog_isr(void) {
        // System reset
    }

    int main(void) {
        watchdog_init(1000);  // Timeout of 1000 timer counts
        while (1) {
            // Application code
            watchdog_reset();  // Reset watchdog timer periodically
        }
    }

A well-designed watchdog timer ensures system reliability, preventing indefinite hangs or malfunctions that might lead to unsafe conditions. The timeouts need careful consideration, balancing the need for prompt response to failures with avoidance of spurious resets.
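
The interplay of load value, countdown, and reset can also be checked on a PC with a small software model. This is not a driver for any real watchdog peripheral: `wdt_model_t` and its functions are hypothetical, and `wdt_model_tick` stands in for the hardware clock decrementing the counter.

```c
#include <stdbool.h>
#include <stdint.h>

/* Software model of a down-counting watchdog. */
typedef struct {
    uint16_t load;      /* value reloaded on every kick */
    uint16_t counter;   /* current countdown value */
    bool expired;       /* set once the counter reaches zero */
} wdt_model_t;

void wdt_model_init(wdt_model_t *w, uint16_t load) {
    w->load = load;
    w->counter = load;
    w->expired = false;
}

/* What the application calls periodically to prove it is still alive. */
void wdt_model_kick(wdt_model_t *w) {
    w->counter = w->load;
}

/* One timer tick; on real hardware, expiry would reset the system. */
void wdt_model_tick(wdt_model_t *w) {
    if (w->expired) {
        return;
    }
    if (w->counter > 0) {
        w->counter--;
    }
    if (w->counter == 0) {
        w->expired = true;
    }
}
```

A model like this makes it easy to experiment with the trade-off described above: a small load value catches hangs quickly but leaves the application less slack between kicks.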

Q 20. How do you manage power consumption in embedded systems?

Power management in embedded systems is crucial, particularly for battery-powered devices. It involves optimizing the design and code to minimize energy consumption without sacrificing performance.

- Hardware choices: Selecting low-power components, such as a microcontroller with low standby current, energy-efficient memory, and power-saving peripherals is paramount.

- Software techniques: Implementing efficient algorithms and code optimizations to reduce CPU usage, such as avoiding unnecessary computations or operations.

- Power-saving modes: Utilizing the microcontroller’s low-power modes (sleep, idle) when the system is idle or not performing computationally intensive tasks. During sleep, many peripherals are shut down, resulting in minimal power draw.

- Clock management: Dynamically adjusting the clock speed of the microcontroller. Running at a lower clock speed reduces power consumption but also slows down processing.

- Peripheral management: Disabling unused peripherals to save power. This is often handled via microcontroller configuration registers.

- Event-driven architecture: Design the application using an event-driven approach to reduce CPU activity. The system only activates when events (interrupts, sensor data) occur, minimizing the time spent in active processing.

In a real-world context, a wearable device needs to optimize power consumption to maximize battery life. Techniques such as entering low-power sleep modes between sensor readings or lowering the microcontroller’s clock speed during periods of inactivity extend battery life considerably. Similarly, IoT devices deployed remotely also rely heavily on these strategies.
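
The benefit of sleeping between sensor readings can be estimated with simple arithmetic: the average current is the time-weighted mean of the active and sleep currents, and battery life is capacity divided by that average. The functions and the figures in the usage note are illustrative assumptions, and the estimate ignores effects such as battery self-discharge and regulator losses.

```c
/* Time-weighted average current over one active/sleep cycle, in microamps. */
double average_current_ua(double active_ua, double active_ms,
                          double sleep_ua, double sleep_ms) {
    double period_ms = active_ms + sleep_ms;
    if (period_ms <= 0.0) {
        return 0.0;
    }
    return (active_ua * active_ms + sleep_ua * sleep_ms) / period_ms;
}

/* Rough battery life in hours for a capacity in mAh and a load in microamps. */
double battery_life_hours(double capacity_mah, double avg_ua) {
    if (avg_ua <= 0.0) {
        return 0.0;
    }
    return capacity_mah * 1000.0 / avg_ua;   /* mAh -> uAh, then divide by uA */
}
```

For example, a device that draws 10 mA for 10 ms and then sleeps at 5 µA for 990 ms averages about 105 µA, so a hypothetical 200 mAh cell would last roughly 1,900 hours, compared with 20 hours if the device stayed active the whole time.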

Q 21. Explain different power management techniques.

Several power management techniques are available to optimize energy use in embedded systems. These often work in concert.

- Sleep modes: Microcontrollers offer various sleep modes, each with different power consumption levels and wake-up times. These range from ‘idle’ modes with low power consumption and fast wake up, to ‘deep sleep’ modes that consume very little power but require longer wake up times.

- Clock gating: Disabling the clock signal to individual peripherals or modules when not in use. This prevents these components from consuming power.

- Power gating: Completely cutting off power to unused blocks of the system, providing more aggressive power savings than clock gating, though it often requires longer wake-up times.

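- Dynamic voltage scaling (DVS): Adjusting the voltage supplied to the microcontroller dynamically to match the computational needs, usually together with the clock frequency. Dynamic power scales roughly with the square of the supply voltage, so lowering the voltage reduces power consumption considerably, but the trade-off is lower processing speed.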

- Power budgeting: Allocating a fixed amount of power to each part of the system. This helps ensure that no single component consumes excessively, and establishes boundaries for design.

- Thermal management: Controlling the temperature of the system helps maintain efficiency and prevent overheating, which increases power consumption. Heat sinks or other cooling mechanisms may be necessary.

The selection of techniques depends on the specific requirements. A battery-operated sensor node might prioritize deep sleep modes and clock gating, while a high-performance system might use DVS to balance power consumption with performance needs. Effective power management requires a holistic approach, considering hardware and software techniques together.

Q 22. What is the difference between polling and interrupt-driven input/output?

Polling and interrupt-driven I/O are two fundamentally different ways an embedded system handles input and output. Imagine you’re waiting for a letter – polling is like constantly checking your mailbox, while interrupt-driven I/O is like having the postman ring your doorbell.

- Polling: The microcontroller repeatedly checks the status of an I/O device (e.g., a sensor) in a loop. This is simple to implement but highly inefficient, wasting processing power when nothing is happening. Think of a continuously running while(1) { checkSensor(); } loop. If the sensor updates infrequently, most of the CPU time is wasted.

- Interrupt-driven I/O: The I/O device triggers an interrupt when it has data ready. This interrupt suspends the current task, allowing the microcontroller to immediately process the data, and then resumes the previous task. This is far more efficient for infrequent events as the CPU is free to perform other tasks until the interrupt occurs. An example would be configuring a sensor to generate an interrupt when its value changes, invoking an interrupt service routine (ISR) to handle the new data.

In summary, polling is simple but wastes CPU time, while interrupt-driven I/O is more complex but efficient, especially when dealing with asynchronous events. The best choice depends on the application’s requirements and the frequency of I/O events. For high-throughput data streams, DMA (Direct Memory Access) is often the better fit, because it moves data between peripherals and memory without the CPU handling each transfer.
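The interrupt-driven pattern can be sketched in C as follows. This is a host-side simulation under stated assumptions: `sensor_isr`, `sensor_take_sample`, and the flag variables are hypothetical names, and the "interrupt" is simulated by calling the ISR directly. On real hardware the vector table would invoke the ISR, and the ISR would read the value from a device register.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Shared between the ISR and the main loop, so both are volatile. */
static volatile bool     sample_ready  = false;
static volatile uint16_t latest_sample = 0;

/* Hypothetical ISR. The caller passes in the value that a real ISR
 * would read from the sensor's data register. */
void sensor_isr(uint16_t raw_value)
{
    latest_sample = raw_value;
    sample_ready  = true;   /* keep the ISR short: record and flag only */
}

/* Called from the main loop. Returns true and stores the sample if the
 * ISR has delivered new data since the last call; otherwise returns at
 * once so the CPU can do other work or sleep. */
bool sensor_take_sample(uint16_t *out)
{
    if (!sample_ready) {
        return false;
    }
    /* On real hardware, briefly mask the interrupt around these two
     * lines so the ISR cannot fire between the read and the clear. */
    *out = latest_sample;
    sample_ready = false;
    return true;
}

void sensor_example(void)
{
    uint16_t value = 0;
    assert(!sensor_take_sample(&value));  /* nothing pending yet */
    sensor_isr(512);                      /* simulate the interrupt firing */
    assert(sensor_take_sample(&value) && value == 512);
}
```

The design choice here is to do as little as possible in the ISR and defer processing to the main loop, which keeps interrupt latency low for other sources.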

Q 23. What are the advantages and disadvantages of using assembly language in embedded systems?

Assembly language offers unparalleled control over the hardware but comes with significant drawbacks. It’s a trade-off between performance and developer productivity.

- Advantages:

- Fine-grained control: Direct manipulation of registers and memory addresses allows for highly optimized code, crucial for resource-constrained systems.

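- Performance: Hand-tuned assembly can outperform compiled code in tight, time-critical routines, because the programmer controls exactly which instructions and registers are used. Modern optimizing compilers often come close, so the gain is usually limited to a few hot paths.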

- Hardware interaction: Access to specialized hardware features might not be possible through high-level languages.

- Disadvantages:

- Complexity: Assembly language is difficult to learn, read, and maintain. It’s highly architecture-specific, meaning code written for one processor won’t work on another.

- Portability: Code isn’t portable across different architectures. Porting to a new microcontroller requires significant rewriting.

- Development time: Writing assembly code takes considerably longer compared to high-level languages like C.

Example: While a C function to toggle a pin might be a few lines, the equivalent assembly could be much longer and more intricate, reflecting the low-level nature of the operations. However, in critical real-time applications requiring the absolute highest performance or very specific hardware control, the advantages can outweigh the disadvantages.
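For reference, the C side of that comparison might look like the sketch below. The register is a plain variable standing in for a memory-mapped GPIO output register, since the real address depends on the part and comes from its datasheet; `gpio_toggle_pin` and `fake_gpio_odr` are illustrative names.

```c
#include <assert.h>
#include <stdint.h>

/* Stand-in for a memory-mapped GPIO output register. On a real part this
 * would be a volatile pointer to a fixed address from the datasheet. */
static volatile uint32_t fake_gpio_odr = 0;

/* Toggle one pin with a read-modify-write XOR on its bit. */
void gpio_toggle_pin(volatile uint32_t *port, unsigned pin)
{
    *port ^= (UINT32_C(1) << pin);
}

void gpio_example(void)
{
    gpio_toggle_pin(&fake_gpio_odr, 5);
    assert(fake_gpio_odr == 0x20u);   /* bit 5 is now set */
    gpio_toggle_pin(&fake_gpio_odr, 5);
    assert(fake_gpio_odr == 0u);      /* bit 5 is cleared again */
}
```

A compiler will typically turn the toggle into a short load, exclusive-or, and store sequence, which is one reason hand-written assembly is rarely needed for simple register access.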

Q 24. How do you deal with memory leaks in embedded systems?

Memory leaks in embedded systems are serious because they lead to system instability or crashes, particularly in systems with limited memory. They occur when dynamically allocated memory isn’t properly freed. The key is careful memory management using techniques like:

- RAII (Resource Acquisition Is Initialization): In C++, using classes with destructors ensures that resources are released when objects go out of scope.

- Explicit deallocation: In C, remember to use `free()` for every call to `malloc()`. Keep a strict record of every memory allocation to ensure proper deallocation. Tools like Valgrind (for Linux-based systems) can help identify memory leaks.

- Static memory allocation: When possible, allocate memory statically to avoid dynamic allocation altogether. This eliminates the possibility of memory leaks associated with forgetting to free memory.

- Memory pool allocation: Instead of using the standard heap allocator, use a custom memory pool allocator. This can be more efficient and prevent fragmentation. This is particularly useful for small, fixed-size allocations.

- Careful use of pointers: Avoid dangling pointers or memory overwrites, as these can lead to memory corruption and leaks.

In practice, careful coding practices, static analysis tools, and thorough testing are essential to prevent memory leaks. Employing robust debugging tools helps pinpoint issues during development and testing. Memory debuggers allow step-by-step monitoring of memory allocation and deallocation, making it much easier to catch errors early in the development lifecycle.
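As an illustration of the memory pool idea, here is a minimal fixed-size block allocator in C. It is a sketch under stated assumptions: the block size, block count, and all `pool_*` names are made up for this example, and the code is not interrupt-safe or thread-safe as written.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define POOL_BLOCK_SIZE  32u   /* bytes per block (illustrative) */
#define POOL_BLOCK_COUNT 8u    /* number of blocks (illustrative) */

/* A free block stores the pointer to the next free block in its own
 * storage, so the free list costs no extra memory. */
typedef union pool_block {
    union pool_block *next;
    uint8_t           data[POOL_BLOCK_SIZE];
} pool_block_t;

static pool_block_t  pool_storage[POOL_BLOCK_COUNT];  /* static, no heap */
static pool_block_t *pool_free_list = NULL;
static size_t        pool_used = 0;

/* Link every block into the free list. Call once at start-up. */
void pool_init(void)
{
    pool_free_list = NULL;
    for (size_t i = 0; i < POOL_BLOCK_COUNT; i++) {
        pool_storage[i].next = pool_free_list;
        pool_free_list = &pool_storage[i];
    }
    pool_used = 0;
}

/* Returns one block, or NULL when the pool is exhausted. */
void *pool_alloc(void)
{
    if (pool_free_list == NULL) {
        return NULL;
    }
    pool_block_t *block = pool_free_list;
    pool_free_list = block->next;
    pool_used++;
    return block->data;
}

/* Returns a block obtained from pool_alloc() to the free list. */
void pool_free(void *ptr)
{
    if (ptr == NULL) {
        return;
    }
    pool_block_t *block = (pool_block_t *)ptr;
    block->next = pool_free_list;
    pool_free_list = block;
    pool_used--;
}

/* A count that keeps growing between checkpoints hints at a leak. */
size_t pool_blocks_in_use(void)
{
    return pool_used;
}

void pool_example(void)
{
    pool_init();
    void *buf = pool_alloc();
    assert(buf != NULL && pool_blocks_in_use() == 1);
    pool_free(buf);
    assert(pool_blocks_in_use() == 0);
}
```

Because every block has the same size, the pool cannot fragment, and allocation and release both run in constant time. The in-use counter gives a cheap way to spot leaks during testing.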

Q 25. How would you test your embedded system code?

Testing embedded systems requires a multi-faceted approach, combining different testing methodologies to achieve high code quality and reliability. It’s crucial to test at multiple levels:

- Unit testing: Each module or function is tested independently to verify its correct functionality. Mocks and stubs can simulate dependencies.

- Integration testing: Modules are combined and tested together to ensure seamless interaction.

- System testing: The entire system is tested as a whole to ensure all components work together correctly. This is where you might use a hardware-in-the-loop simulator.

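- Hardware-in-the-loop (HIL) testing: The real embedded hardware, running its actual firmware, is connected to a simulator that emulates the sensors, actuators, and surrounding environment. This lets you exercise the system's real I/O behavior without needing the full physical setup.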

- Stress testing: The system is subjected to extreme conditions (high temperature, heavy loads, etc.) to verify its robustness and stability.

For unit testing, tools like Unity or CppUTest (for C/C++) are invaluable, providing frameworks for writing test cases, running them, and generating reports. For embedded systems, the JTAG debugger helps in monitoring registers, memory, and variables during testing. Logging facilities, both in debug builds and in a limited way in the final release, are essential for monitoring system behavior and identifying issues. I would use a combination of automated unit and integration tests, supplemented by manual system and stress testing using a real or simulated hardware environment.
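The idea of stubbing a hardware dependency in a unit test can be sketched in plain C, without any framework. Everything here is hypothetical: `is_over_temperature` is an invented unit under test, and the ADC driver is replaced by a stub through a function pointer. A framework such as Unity or CppUTest would supply its own assertion macros and test runner in place of `assert`.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* The hardware dependency is passed in as a function pointer so that a
 * test can replace the real ADC driver with a stub. */
typedef uint16_t (*adc_read_fn)(void);

/* Unit under test: hypothetical over-temperature check. */
bool is_over_temperature(adc_read_fn read_adc, uint16_t threshold)
{
    return read_adc() > threshold;
}

/* Stub that returns whatever value the test has configured. */
static uint16_t stub_adc_value = 0;

static uint16_t stub_adc_read(void)
{
    return stub_adc_value;
}

void test_is_over_temperature(void)
{
    stub_adc_value = 100;
    assert(!is_over_temperature(stub_adc_read, 500));

    stub_adc_value = 500;   /* boundary: equal is not "over" */
    assert(!is_over_temperature(stub_adc_read, 500));

    stub_adc_value = 501;
    assert(is_over_temperature(stub_adc_read, 500));
}
```

Tests written this way run on the development host, so they can be automated in a build pipeline and do not need the target board.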

Q 26. Describe your experience with different microcontrollers.

My experience spans various microcontroller families, including ARM Cortex-M (e.g., STM32, NXP LPC), AVR (e.g., ATmega), and PIC microcontrollers. I’ve worked extensively with STM32F4 and STM32F7 series chips in several projects, leveraging their advanced peripherals like DMA, timers, and communication interfaces (SPI, I2C, UART). This includes projects involving real-time control, data acquisition, and communication protocols.

With AVR microcontrollers, I’ve focused on projects requiring simple and low-power solutions. I’ve found their ease of use and extensive community support particularly beneficial for rapid prototyping. My experience with PIC microcontrollers is largely based on projects involving industrial automation, where their specific peripheral sets proved advantageous. Each family has its strengths and weaknesses, and the choice of microcontroller depends heavily on the specific project requirements. The factors involved include cost, available memory, processing power, and peripheral needs.

Q 27. How do you handle different clock sources in an embedded system?

Handling multiple clock sources in an embedded system requires careful configuration and management to ensure the system operates correctly. Microcontrollers often have multiple clock sources, including an internal RC oscillator, an external crystal oscillator, and possibly a PLL (Phase-Locked Loop) for higher frequencies.

The choice of clock source depends on factors like accuracy, stability, and power consumption. Accurate timing often requires an external crystal oscillator, but it comes with higher power consumption. The internal RC oscillator is less accurate but highly power-efficient. A PLL allows scaling up the clock frequency for faster processing.

The microcontroller’s clock system usually requires setting up the clock source through registers and configuring the PLL (if used). It’s crucial to consider the system’s timing requirements, including the speed of peripherals and the real-time constraints of the application, when choosing and configuring clock sources. Incorrect clock configuration could lead to malfunctioning peripherals, timing errors, and system instability. A well-structured clock configuration is usually set up during the initialization phase of the embedded software.
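A quick way to sanity-check a PLL setup before writing it to registers is to compute the resulting frequency in code. The sketch below assumes a generic PLL with an input divider M, a multiplier N, and an output divider P, so that f_out = (f_in / M) × N / P. Many microcontroller PLLs have this general shape, but field names and legal ranges vary by part, so the structure and numbers here are illustrative only.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical PLL settings: f_out = (input_hz / div_m) * mul_n / div_p. */
typedef struct {
    uint32_t input_hz;  /* crystal or RC oscillator frequency */
    uint32_t div_m;     /* input divider */
    uint32_t mul_n;     /* multiplier */
    uint32_t div_p;     /* output divider */
} pll_config_t;

/* Returns the PLL output frequency in Hz, or 0 if a divider is zero.
 * Integer division is used, so a non-exact input division truncates. */
uint32_t pll_output_hz(const pll_config_t *cfg)
{
    if (cfg->div_m == 0 || cfg->div_p == 0) {
        return 0;
    }
    uint64_t vco_hz = (uint64_t)cfg->input_hz / cfg->div_m * cfg->mul_n;
    return (uint32_t)(vco_hz / cfg->div_p);
}

/* Checks the result against a maximum core clock taken from the datasheet. */
bool pll_config_is_valid(const pll_config_t *cfg, uint32_t max_hz)
{
    uint32_t out_hz = pll_output_hz(cfg);
    return out_hz != 0 && out_hz <= max_hz;
}

void pll_example(void)
{
    /* Illustrative numbers: 8 MHz crystal, /8, x336, /2 gives 168 MHz. */
    pll_config_t cfg = { 8000000u, 8u, 336u, 2u };
    assert(pll_output_hz(&cfg) == 168000000u);
    assert(pll_config_is_valid(&cfg, 168000000u));
}
```

Running a check like this during initialization, or as a compile-time or unit test, catches an out-of-range clock before it shows up as a misbehaving peripheral.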

Q 28. Explain your experience with version control systems (e.g., Git).

I have extensive experience with Git, using it daily for managing code in my projects. My proficiency includes branching, merging, rebasing, and resolving merge conflicts. I utilize Git’s branching capabilities to manage multiple features concurrently, ensuring that individual development efforts don’t interfere with one another.

I regularly use pull requests (or merge requests) to facilitate code review and collaboration within teams. This promotes code quality by allowing peers to review and provide feedback on proposed changes before merging them into the main branch. My experience also includes using Git for collaborative development, utilizing remote repositories on platforms like GitHub and GitLab, working with different workflow strategies such as Gitflow, and resolving merge conflicts using various conflict resolution strategies.

Beyond the basics, I am comfortable with advanced Git commands and workflows, including cherry-picking, interactive rebasing, and using Git hooks. These skills have been crucial in managing the evolution of codebases across numerous projects, enabling easy rollback to previous versions when necessary and supporting collaborative development.

Key Topics to Learn for Embedded System Programming Interview

- Real-Time Operating Systems (RTOS): Understanding concepts like scheduling algorithms (round-robin, priority-based), task management, inter-process communication (IPC) mechanisms, and RTOS selection criteria is crucial. Practical application: Designing a system with real-time constraints like a flight controller or industrial automation system.

- Microcontrollers and Microprocessors: Familiarity with different architectures (ARM, AVR, PIC), memory organization (RAM, ROM, Flash), peripherals (timers, UART, SPI, I2C), and the intricacies of working with register-level programming. Practical application: Developing firmware for a sensor node or a control unit.

- Embedded C Programming: Mastering pointers, memory management, bit manipulation, and working with embedded libraries. Practical application: Implementing efficient algorithms and data structures within resource-constrained environments.

- Hardware-Software Interaction: Understanding the interaction between hardware and software, including interrupt handling, DMA, and device drivers. Practical application: Integrating sensors and actuators with the microcontroller.

- Debugging and Testing: Proficiency in using debugging tools, developing effective testing strategies (unit testing, integration testing), and troubleshooting embedded systems. Practical application: Identifying and resolving issues in real-world embedded systems.

- Low-Level Programming Concepts: A strong understanding of memory management, bitwise operations, and working directly with hardware registers. Practical application: Optimizing code for performance and resource utilization.

- Design Patterns and Best Practices: Familiarity with design patterns relevant to embedded systems and understanding coding best practices for maintainability, readability, and robustness. Practical application: Developing modular, scalable, and easily maintainable embedded software.

Next Steps

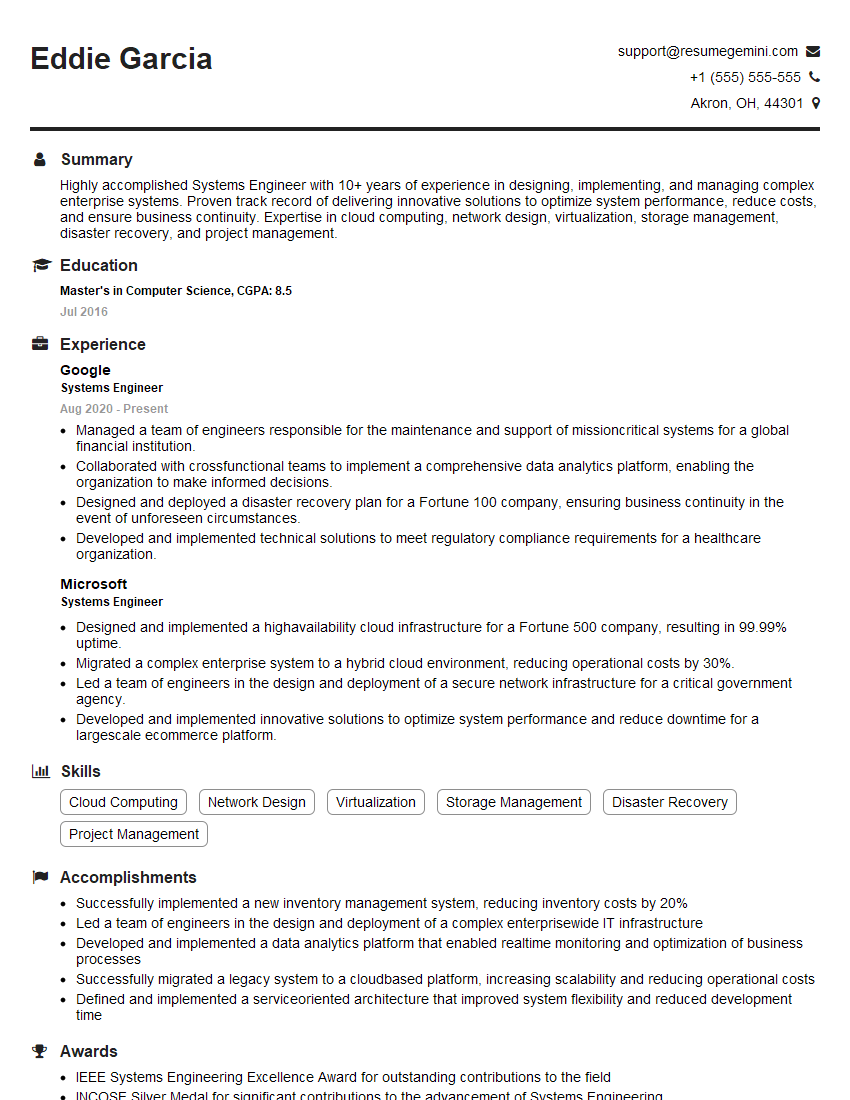

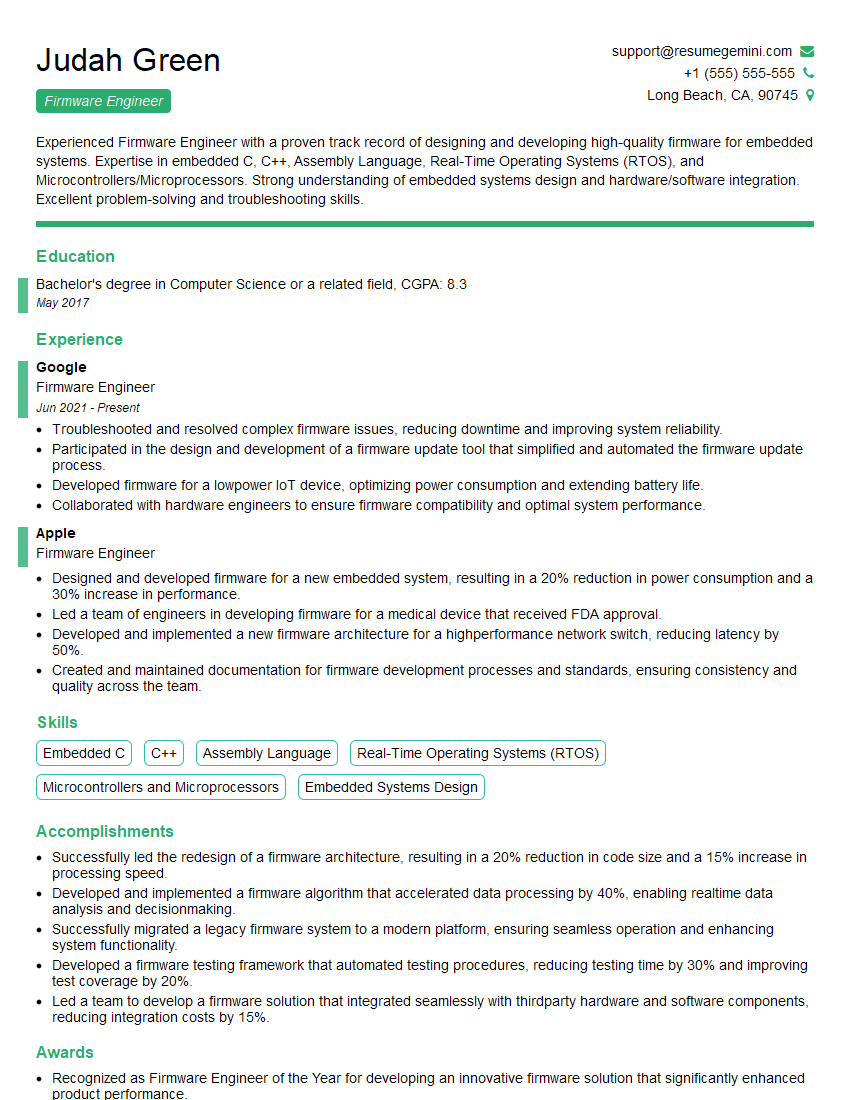

Mastering Embedded System Programming opens doors to exciting and rewarding careers in various industries, from automotive and aerospace to healthcare and consumer electronics. To maximize your job prospects, creating a strong, ATS-friendly resume is essential. ResumeGemini is a trusted resource to help you build a professional and impactful resume that highlights your skills and experience effectively. They offer examples of resumes tailored specifically to Embedded System Programming to give you a head start. Invest the time to craft a compelling resume – it’s your first impression on potential employers.
