Unlock your full potential by mastering the most common Knowledge of Specialized Terminology interview questions. This blog offers a deep dive into the critical topics, ensuring you’re not only prepared to answer but to excel. With these insights, you’ll approach your interview with clarity and confidence.

Questions Asked in Knowledge of Specialized Terminology Interview

Q 1. Define ‘polymorphism’ in the context of object-oriented programming.

Polymorphism, in object-oriented programming, refers to the ability of an object to take on many forms. It’s a powerful concept that allows you to write code that can work with objects of different classes without needing to know their specific type. Think of it like a universal remote: it can control various devices (TV, DVD player) even though they are different.

This is achieved primarily through inheritance and interfaces. Inheritance lets you create subclasses that inherit properties and methods from a superclass, while interfaces define a contract that different classes can implement. Both mechanisms allow you to treat objects of different classes uniformly through a common interface or parent class.

Example: Imagine you have a Shape class with a method draw(). Subclasses like Circle, Square, and Triangle inherit from Shape and each implement their own version of the draw() method. You can then have a list of Shape objects containing instances of all three subclasses. Calling draw() on each object in the list will result in the correct shape being drawn, even though the code doesn’t explicitly know the type of each shape.

class Shape {
    public virtual void draw() { /* Generic drawing logic */ }
}

class Circle : Shape {
    public override void draw() { /* Circle drawing logic */ }
}

class Square : Shape {
    public override void draw() { /* Square drawing logic */ }
}

Q 2. Explain the difference between ‘epidemiology’ and ‘etiology’.

While both epidemiology and etiology are crucial in understanding diseases, they focus on different aspects. Epidemiology studies the distribution and determinants of health-related states or events in specified populations, and the application of this study to the control of health problems. Think of it as investigating ‘who gets sick, where, and when?’ It’s about the patterns of disease in the population.

Etiology, on the other hand, focuses on the causes or origins of a disease. It seeks to identify the specific factors (biological, environmental, behavioral) that lead to the development of a particular illness. This is more about the ‘why’ – the underlying mechanisms causing the disease.

Example: An epidemiological study might reveal that a higher incidence of lung cancer is observed in a particular city. Etiological research would then delve into the potential causes, such as air pollution, smoking habits, or genetic predisposition in that population.

Q 3. What is the significance of ‘due diligence’ in a financial context?

In finance, ‘due diligence’ is a comprehensive process of investigation and verification undertaken before a significant business decision, such as a merger, acquisition, or investment. It aims to minimize risk by uncovering potential problems and ensuring that all relevant information is considered.

This involves a thorough examination of financial records, legal documents, operational procedures, and other relevant data. The goal is to ascertain the accuracy and completeness of information provided, identifying any discrepancies or red flags that might impact the investment or transaction.

Example: Before a company acquires another, it would conduct due diligence to assess the target company’s financial health, legal compliance, and market position. This could involve reviewing financial statements, auditing contracts, and evaluating the target’s intellectual property portfolio. Failure to perform adequate due diligence could lead to significant financial losses or legal issues.

Q 4. Describe the concept of ‘regression analysis’ in statistics.

Regression analysis is a statistical method used to model the relationship between a dependent variable and one or more independent variables. It aims to find the best-fitting line (or curve) that describes this relationship, enabling predictions of the dependent variable based on the independent variables.

There are various types of regression analysis, such as linear regression (for linear relationships), multiple regression (for multiple independent variables), and logistic regression (for binary dependent variables). The process involves estimating parameters (coefficients) that quantify the effect of each independent variable on the dependent variable.

Example: A real estate agent might use regression analysis to predict house prices based on factors like size, location, and age. The independent variables would be size, location, and age, and the dependent variable would be the house price. The analysis would produce an equation that estimates the price based on these factors, allowing the agent to make more informed pricing decisions.
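To make the house-price example concrete, here is a minimal sketch of simple linear regression with a single independent variable (size). The data points and the names `fit_line` and `predict` are made up for illustration; a real analysis would use more data, more variables, and a statistics library.

```python
# Hypothetical data: house size (square metres) and sale price (thousands).
sizes = [50, 70, 90, 110, 130]
prices = [150, 200, 240, 310, 350]


def fit_line(xs, ys):
    """Return (slope, intercept) of the ordinary least-squares line."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the 1/n factors cancel.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


slope, intercept = fit_line(sizes, prices)


def predict(size):
    """Estimate the price for a given size using the fitted line."""
    return intercept + slope * size


print(f"price = {intercept:.1f} + {slope:.2f} * size")
print(f"estimated price for 100 square metres: {predict(100):.1f}")
```

The slope is the coefficient mentioned above: it estimates how much the price changes for each extra square metre in this toy data set.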

Q 5. What are the key differences between ‘Agile’ and ‘Waterfall’ methodologies?

Agile and Waterfall are two contrasting project management methodologies. Waterfall follows a sequential approach, with each phase (requirements, design, implementation, testing, deployment) completed before the next begins. It’s rigid and well-defined, best suited for projects with stable requirements.

Agile, on the other hand, emphasizes iterative development and flexibility. Projects are broken into smaller increments (sprints), with continuous feedback and adaptation throughout the process. It’s more responsive to changing requirements and better suited for complex or uncertain projects.

- Waterfall: Linear, sequential, inflexible, detailed upfront planning.

- Agile: Iterative, incremental, flexible, adaptable to change, collaborative.

Example: Building a bridge (stable requirements) might be better suited to the Waterfall model, whereas developing a software application with evolving user needs is better managed using an Agile approach.

Q 6. Explain the meaning of ‘GDPR’ and its implications for data privacy.

GDPR, or the General Data Protection Regulation, is a European Union regulation on data protection and privacy for all individuals within the EU and the European Economic Area. It aims to give individuals more control over their personal data and holds organizations accountable for how they handle it.

Implications for data privacy: GDPR mandates several key principles, including:

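- Lawful basis and consent: Processing personal data requires a lawful basis. Consent is one of six such bases and, where relied on, must be freely given, specific, informed, and unambiguous (and explicit for special categories of data).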

- Data minimization: Collect only necessary data.

- Data security: Implement appropriate technical and organizational measures to protect data.

- Data subject rights: Individuals have rights to access, rectify, erase, and restrict their data.

Non-compliance can result in significant fines and reputational damage. Essentially, GDPR shifts the responsibility of data protection to the organization handling the data, requiring a proactive and transparent approach to data privacy.

Q 7. Define ‘intellectual property’ and list three key aspects.

Intellectual property (IP) refers to creations of the mind, such as inventions; literary and artistic works; designs; and symbols, names, and images used in commerce.

Three key aspects of intellectual property are:

- Patents: Grant exclusive rights to inventors for their inventions (e.g., a new drug or technology).

- Copyrights: Protect original creative works, such as books, music, and software.

- Trademarks: Protect brand names and logos used in commerce to distinguish goods and services.

Protecting intellectual property is crucial for businesses to safeguard their competitive advantage and prevent unauthorized use of their creations. This protection can be achieved through registration and enforcement of IP rights.

Q 8. What is the difference between ‘synecdoche’ and ‘metonymy’?

Both synecdoche and metonymy are figures of speech where one thing is substituted for another, but they differ in the nature of the substitution.

Synecdoche uses a part to represent the whole, or vice versa. For example, saying “wheels” to refer to a car, or “sails” to refer to a ship. In these cases, a part (wheels, sails) stands in for the whole (car, ship). Another example is using “hands” to represent workers.

Metonymy substitutes a related concept or attribute for the thing itself. The substitution is based on association, rather than part-whole. For instance, saying “the White House announced” instead of “the president announced.” Here, “White House” stands in for the institution or the people representing it. Similarly, using “crown” to represent royalty, or “the pen is mightier than the sword” where “pen” signifies writing and “sword” signifies warfare.

In short, synecdoche is about parts and wholes, while metonymy is about related concepts.

Q 9. Explain the concept of ‘semantic ambiguity’ in linguistics.

Semantic ambiguity arises when a word, phrase, or sentence has more than one possible meaning, due to the multiple senses of the words used. This ambiguity isn’t caused by grammatical structure (like syntactic ambiguity), but by the meaning of the words themselves.

For example, the sentence “I saw the bat” could mean you saw a flying mammal (a bat) or a piece of sporting equipment (a bat). The ambiguity stems from the multiple meanings of the word “bat”.

Another example: “The man went to the bank.” This could refer to a financial institution or a riverbank. The context often helps resolve the ambiguity, but sometimes the meaning remains uncertain without further clarification.

Semantic ambiguity can cause problems in communication, especially in legal documents, technical specifications, and software instructions, where precision is crucial. Careful word choice and precise definitions of terms help prevent it.

Q 10. Describe the process of ‘risk mitigation’ in project management.

Risk mitigation in project management is the process of identifying, analyzing, and reducing the likelihood or impact of potential risks that could jeopardize the project’s success.

The process generally involves these steps:

- Risk Identification: Brainstorming potential problems (e.g., delays, budget overruns, technical issues, resource shortages).

- Risk Analysis: Assessing the likelihood and potential impact of each identified risk. This often involves using a risk matrix that plots likelihood against impact.

- Risk Response Planning: Developing strategies to address each risk. Common responses include avoidance (eliminating the risk), mitigation (reducing its likelihood or impact), transference (shifting the risk to another party, like an insurance company), and acceptance (acknowledging the risk and planning for its potential consequences).

- Risk Monitoring and Control: Continuously tracking identified risks, assessing their status, and adjusting responses as needed throughout the project lifecycle.

For example, if a project is reliant on a specific supplier, a risk mitigation strategy might involve identifying alternative suppliers, negotiating contracts with favorable terms, or holding a buffer stock of materials.
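The risk matrix from the analysis step can be sketched in a few lines. The risks, the 1 to 5 scales, and the rating thresholds below are all assumptions chosen for illustration; organizations define their own scales and cut-offs.

```python
# Hypothetical risks, each scored from 1 (low) to 5 (high).
risks = [
    {"name": "Sole supplier fails", "likelihood": 3, "impact": 5},
    {"name": "Key developer leaves", "likelihood": 2, "impact": 4},
    {"name": "Minor UI rework", "likelihood": 4, "impact": 1},
]


def score(risk):
    """Risk score = likelihood x impact, as plotted on a risk matrix."""
    return risk["likelihood"] * risk["impact"]


def rating(risk):
    """Map a score to a band. The thresholds here are illustrative only."""
    s = score(risk)
    if s >= 15:
        return "high"
    if s >= 6:
        return "medium"
    return "low"


# Highest-scoring risks first, so response planning starts with them.
ranked = sorted(risks, key=score, reverse=True)
for risk in ranked:
    print(f"{risk['name']}: score {score(risk)}, rating {rating(risk)}")
```

Ranking by score is one simple way to decide which risks deserve a response plan first.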

Q 11. What is the significance of ‘HIPAA’ in healthcare?

HIPAA, the Health Insurance Portability and Accountability Act of 1996, is a US law designed to protect sensitive patient data, known as protected health information (PHI). It sets standards for the privacy and security of PHI, including its electronic transmission.

Its significance lies in ensuring patient confidentiality and trust in the healthcare system. HIPAA violations can result in significant penalties for healthcare providers, insurance companies, and other covered entities that fail to comply with its regulations.

Key aspects of HIPAA include:

- Privacy Rule: Governs the use and disclosure of PHI.

- Security Rule: Sets security standards for electronic PHI.

- Breach Notification Rule: Requires notification of individuals and authorities in case of a data breach.

HIPAA’s impact extends beyond just legal compliance; it fosters patient trust and protects individuals from potential harm associated with unauthorized access or disclosure of their medical records.

Q 12. Define ‘SQL injection’ and explain its security implications.

SQL injection is a code injection technique used to attack data-driven applications. It exploits vulnerabilities in how applications handle user-supplied input that contains SQL code.

Attackers craft malicious SQL code that’s embedded within seemingly normal user input (e.g., a username or search query). When the application processes this input without proper sanitization, the malicious SQL code is executed, potentially granting the attacker unauthorized access to the database.

For instance, imagine a login form that doesn’t sanitize user input. An attacker might enter a username like ' OR '1'='1 and a password field left empty. The resulting SQL query might look like: SELECT * FROM users WHERE username = '' OR '1'='1'. The condition '1'='1' is always true, granting the attacker access regardless of the password.

The security implications are severe: attackers can steal sensitive data, modify or delete data, and disrupt application functionality. Prevention involves using parameterized queries, input validation, and escaping special characters to prevent malicious code from being interpreted as SQL commands.
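The difference between a vulnerable query and a parameterized one can be shown with Python's built-in `sqlite3` module. This is a minimal sketch: the `users` table and its contents are invented for the demonstration, and the query checks only the username, as in the example above.

```python
import sqlite3

# In-memory database with one hypothetical user.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (username TEXT, password TEXT)")
conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")

malicious = "' OR '1'='1"

# Vulnerable: the input is pasted into the SQL text, so its quote
# characters change the structure of the query.
unsafe_sql = f"SELECT * FROM users WHERE username = '{malicious}'"
unsafe_rows = conn.execute(unsafe_sql).fetchall()

# Safer: the ? placeholder passes the input as a value, never as SQL.
safe_rows = conn.execute(
    "SELECT * FROM users WHERE username = ?", (malicious,)
).fetchall()

print("rows returned by concatenated query:", len(unsafe_rows))
print("rows returned by parameterized query:", len(safe_rows))
```

The concatenated query matches every row because the injected condition is always true, while the parameterized query looks for a user literally named `' OR '1'='1` and finds none.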

Q 13. Explain the term ‘blockchain technology’ in simple terms.

Imagine a digital ledger that’s shared across many computers and is cryptographically secured. That’s essentially what blockchain technology is. It’s a decentralized, distributed database that records and verifies transactions in “blocks” that are chained together chronologically and cryptographically linked. Public blockchains such as Bitcoin let anyone read the ledger, while private or permissioned ones restrict access.

Each block contains a set of validated transactions, a timestamp, and a hash (a unique digital fingerprint) of the previous block. This chaining makes it extremely difficult to alter past transactions without detection. The decentralized nature means no single entity controls the blockchain, enhancing transparency and security.
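The hash chaining described above can be illustrated with a toy example. This sketch covers only the linking of blocks; it leaves out consensus, digital signatures, and networking, and the block layout and function names are assumptions made for illustration.

```python
import hashlib
import json


def block_hash(block):
    """SHA-256 fingerprint of a block, using a stable JSON encoding."""
    encoded = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def add_block(chain, transactions, timestamp):
    """Append a block that stores the hash of the previous block."""
    prev_hash = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({
        "transactions": transactions,
        "timestamp": timestamp,
        "prev_hash": prev_hash,
    })


def is_valid(chain):
    """Check that every block still points at the block before it."""
    return all(
        chain[i]["prev_hash"] == block_hash(chain[i - 1])
        for i in range(1, len(chain))
    )


chain = []
add_block(chain, ["alice pays bob 5"], timestamp=1)
add_block(chain, ["bob pays carol 2"], timestamp=2)
add_block(chain, ["carol pays dave 1"], timestamp=3)
valid_before = is_valid(chain)

# Tampering with an old block changes its hash, which breaks the link
# stored in the next block.
chain[0]["transactions"] = ["alice pays bob 500"]
valid_after = is_valid(chain)

print("valid before tampering:", valid_before)
print("valid after tampering:", valid_after)
```

This is why altering a past transaction is detectable: the attacker would have to recompute every later block as well.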

Blockchain is used in cryptocurrencies like Bitcoin, but its applications extend beyond finance, including supply chain management, voting systems, and digital identity verification.

Q 14. What is the difference between ‘depreciation’ and ‘amortization’?

Both depreciation and amortization are methods of allocating the cost of an asset over its useful life, but they apply to different types of assets.

Depreciation is used for tangible assets (physical assets you can touch) like buildings, machinery, and vehicles. It accounts for the wear and tear, obsolescence, and decline in value of these assets over time.

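Amortization is used for intangible assets (non-physical assets) with a finite useful life, such as patents, copyrights, and licenses. It spreads the cost of these assets over their useful life, reflecting their gradual consumption or expiration. Intangibles with an indefinite life are treated differently: under IFRS and under US GAAP for public companies, goodwill is tested for impairment rather than amortized, and the same generally applies to trademarks that can be renewed indefinitely.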

The methods used to calculate depreciation (e.g., straight-line, declining balance) and amortization (usually straight-line) can vary, but the core concept of spreading the cost over time remains the same. Both have accounting implications for tax purposes and financial reporting.
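A short calculation shows how the two common methods spread cost over time. The asset costs, salvage value, useful lives, and the 40% rate are made-up figures, and the declining-balance function is simplified (it does not stop at a salvage value).

```python
def straight_line(cost, salvage, years):
    """Equal charge each year: (cost - salvage) / useful life."""
    annual = (cost - salvage) / years
    return [annual] * years


def declining_balance(cost, rate, years):
    """Each year's charge is a fixed rate applied to the remaining book value."""
    charges = []
    book_value = cost
    for _ in range(years):
        charge = book_value * rate
        charges.append(charge)
        book_value -= charge
    return charges


# Depreciation of a tangible asset (a hypothetical machine).
machine = straight_line(cost=10_000, salvage=1_000, years=5)

# Amortization of an intangible asset (a hypothetical patent).
patent = straight_line(cost=20_000, salvage=0, years=10)

# Accelerated depreciation of a hypothetical delivery van.
van = declining_balance(cost=10_000, rate=0.4, years=3)

print("machine, per year:", machine[0])
print("patent, per year:", patent[0])
print("van, first three years:", [round(c, 2) for c in van])
```

Straight-line gives the same charge every year, while declining balance front-loads the expense into the early years.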

Q 15. Define ‘API’ and explain its function in software development.

An API, or Application Programming Interface, is essentially a messenger that allows different software systems to talk to each other. Think of it as a waiter in a restaurant: you (one application) place your order (a request) with the waiter (the API), who then takes your order to the kitchen (another application) and brings back your food (the response).

In software development, APIs define how different parts of an application or different applications interact. They specify the types of requests that can be made, the data formats used, and the responses expected. This allows developers to build modular and reusable components, accelerating development and promoting interoperability. For instance, a weather app might use a weather API to fetch real-time weather data, and a social media platform might use a payment gateway API to process transactions.

A simple example is using a mapping API like Google Maps API. Your application makes a request to the API providing a location, and the API responds with map data which your application then renders for the user.

Q 16. Explain the concept of ‘hypothesis testing’ in scientific research.

Hypothesis testing is a crucial element of the scientific method. It’s a formal process used to determine if there’s enough evidence from a sample of data to support a claim about a population. Imagine you have a hypothesis – a testable statement about the world – such as ‘drinking coffee improves memory.’ Hypothesis testing lets you see if your data supports or refutes this claim.

The process generally involves:

- Formulating a null hypothesis (H₀): This is the statement that you are trying to disprove. In our example, the null hypothesis might be: ‘Drinking coffee has no effect on memory.’

- Formulating an alternative hypothesis (H₁): This is the statement you’re seeking evidence for. For our example: ‘Drinking coffee improves memory.’

- Collecting data: Conducting an experiment where one group drinks coffee and another doesn’t, then measuring their memory scores.

- Performing statistical tests: Using statistical techniques (e.g., t-tests, ANOVA) to compute the p-value, the probability of observing data at least as extreme as the sample if the null hypothesis were true.

- Interpreting results: If the p-value is below a pre-determined significance level (e.g., 0.05), we reject the null hypothesis, suggesting there’s enough evidence to support the alternative hypothesis. Otherwise, we fail to reject the null hypothesis.

It’s important to note that hypothesis testing doesn’t ‘prove’ anything definitively. It only provides evidence to support or refute a claim based on the available data. There’s always a chance of making errors (Type I or Type II errors).
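The steps above can be sketched with an exact permutation test, used here in place of a t-test because it needs only the standard library. The memory scores are invented, and the test is one-sided (it asks whether coffee drinkers score higher).

```python
from itertools import combinations

# Hypothetical memory scores for the two groups.
coffee = [14, 15, 17, 18, 16]
no_coffee = [12, 13, 15, 14, 11]


def mean(values):
    return sum(values) / len(values)


# Observed difference in group means.
observed = mean(coffee) - mean(no_coffee)

# Under the null hypothesis the group labels are interchangeable, so we
# look at every way of splitting the ten scores into two groups of five.
pooled = coffee + no_coffee
n_splits = 0
count_extreme = 0
for chosen in combinations(range(len(pooled)), len(coffee)):
    group_a = [pooled[i] for i in chosen]
    group_b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
    n_splits += 1
    # Small tolerance guards against floating-point rounding.
    if mean(group_a) - mean(group_b) >= observed - 1e-9:
        count_extreme += 1

# p-value: share of splits at least as extreme as the observed one.
p_value = count_extreme / n_splits
reject_null = p_value < 0.05

print(f"observed difference: {observed:.1f}")
print(f"p-value: {p_value:.4f}")
print("reject the null hypothesis:", reject_null)
```

With this toy data the p-value falls below 0.05, so the null hypothesis is rejected at that significance level; with different data the same code could just as easily fail to reject it.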

Q 17. What is the meaning of ‘SWOT analysis’?

A SWOT analysis is a strategic planning tool used to evaluate the Strengths, Weaknesses, Opportunities, and Threats involved in a project or in a business venture. It involves identifying internal factors (strengths and weaknesses) and external factors (opportunities and threats) that can affect the success of an initiative.

For example, a new restaurant might have:

- Strengths: Excellent chef, unique menu, convenient location.

- Weaknesses: Lack of marketing experience, limited seating capacity.

- Opportunities: Growing demand for healthy food options, potential for catering services.

- Threats: Increasing competition, rising food costs, negative online reviews.

By systematically analyzing these factors, businesses can develop strategies to leverage strengths, address weaknesses, capitalize on opportunities, and mitigate threats.

Q 18. Define ‘bioinformatics’ and its applications.

Bioinformatics is an interdisciplinary field that combines biology, computer science, statistics, and mathematics to analyze and interpret biological data. Essentially, it’s using computational tools to understand life’s complex processes. Imagine biology generating massive amounts of data, like genetic sequences or protein structures, and bioinformatics providing the tools to analyze this data effectively.

Applications of bioinformatics include:

- Genomics: Analyzing entire genomes to understand gene function, evolution, and disease susceptibility.

- Proteomics: Studying proteins and their interactions within cells and organisms.

- Drug discovery: Identifying potential drug targets and designing new drugs.

- Evolutionary biology: Reconstructing evolutionary relationships between species.

- Personalized medicine: Tailoring medical treatments to an individual’s genetic makeup.

An example is using sequence alignment algorithms to compare DNA sequences from different organisms, helping to identify evolutionary relationships or regions crucial for functionality.

Q 19. Explain the difference between ‘quantitative’ and ‘qualitative’ research.

Quantitative research and qualitative research represent different approaches to understanding a phenomenon. The key difference lies in the type of data collected and how it’s analyzed.

- Quantitative research focuses on numerical data and statistical analysis. It aims to measure and quantify variables to establish relationships, test hypotheses, and make generalizations about a population. Think surveys with multiple-choice questions, experiments measuring physical quantities, and statistical analysis of large datasets. Examples include clinical trials measuring the effectiveness of a drug or surveys assessing customer satisfaction.

- Qualitative research focuses on non-numerical data, such as text, images, or audio. It aims to explore meanings, interpretations, and experiences to gain a deeper understanding of a phenomenon. This could involve conducting in-depth interviews, analyzing open-ended survey responses, or observing behavior in natural settings. Examples include ethnographies studying cultural practices or focus groups exploring consumer preferences.

In short, quantitative research is about measuring and quantifying, while qualitative research is about exploring and understanding.

Q 20. What is the significance of ‘peer review’ in academic publishing?

Peer review is a critical process in academic publishing that ensures the quality and validity of research before publication. Before a research paper is accepted into a journal, it is sent to other experts (peers) in the same field who critically evaluate the work for its rigor, originality, and significance.

The peer reviewers assess various aspects, including:

- Methodology: The soundness of the research design and methods used.

- Data analysis: The appropriateness and accuracy of the data analysis techniques.

- Results: The clarity and validity of the findings.

- Interpretation: The interpretation and discussion of the results.

- Overall significance: The contribution of the research to the field.

Peer review helps to filter out flawed research, improve the quality of published work, and maintain the integrity of the academic literature. While not perfect, it’s an essential gatekeeping mechanism ensuring that only robust and reliable research gets published.

Q 21. Define ‘machine learning’ and explain its key algorithms.

Machine learning (ML) is a branch of artificial intelligence (AI) that focuses on enabling computers to learn from data without being explicitly programmed. Instead of relying on predefined rules, ML algorithms identify patterns, make predictions, and improve their performance over time based on the data they are exposed to. Think of it as teaching a computer to learn like a human, through experience.

Key machine learning algorithms include:

- Supervised learning: Algorithms that learn from labeled data (data with known inputs and outputs). Examples include linear regression (predicting a continuous value), logistic regression (predicting a categorical value), and support vector machines (SVM) (classification and regression).

- Unsupervised learning: Algorithms that learn from unlabeled data (data without known outputs). Examples include k-means clustering (grouping similar data points), principal component analysis (PCA) (dimensionality reduction), and association rule mining (finding relationships between variables).

- Reinforcement learning: Algorithms that learn through trial and error by interacting with an environment. The algorithm receives rewards for desirable actions and penalties for undesirable actions, learning to maximize its cumulative reward. An example is a game-playing AI that learns to win a game by trying different strategies and receiving feedback on its performance.

These algorithms power many applications, including image recognition, spam filtering, recommendation systems, and self-driving cars.
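As a small taste of unsupervised learning, here is a one-dimensional k-means sketch. The data points, starting centroids, and fixed iteration count are assumptions for illustration; library implementations handle many dimensions, smarter initialization, and convergence checks.

```python
def kmeans_1d(points, centroids, iterations=10):
    """Group numbers around centroids by repeated assign-and-update steps."""
    clusters = []
    for _ in range(iterations):
        # Assignment step: put each point in the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(
                range(len(centroids)), key=lambda i: abs(p - centroids[i])
            )
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its old centroid).
        centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters


# Hypothetical unlabeled data with two obvious groups.
points = [1.0, 1.5, 2.0, 8.0, 8.5, 9.0]
centroids, clusters = kmeans_1d(points, centroids=[0.0, 5.0])

print("centroids:", centroids)
print("clusters:", clusters)
```

No labels are supplied: the algorithm discovers the two groups purely from how close the points are to each other.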

Q 22. Explain the term ‘supply chain management’.

Supply chain management (SCM) encompasses the planning, execution, and control of all activities involved in sourcing raw materials, transforming them into finished goods, and delivering them to the end consumer. It’s essentially managing the entire flow of goods and services, from origin to consumption.

Think of it like a relay race: each stage – sourcing, manufacturing, warehousing, distribution, and retail – is a leg, and SCM ensures a smooth and efficient handover between each team member to reach the finish line (the customer) quickly and effectively.

- Planning: Forecasting demand, sourcing suppliers, scheduling production.

- Sourcing: Selecting and managing suppliers, negotiating contracts, ensuring quality control.

- Manufacturing: Production planning, quality control, inventory management.

- Distribution: Warehousing, transportation, logistics, order fulfillment.

- Retail: Sales, customer service, returns management.

Effective SCM leads to reduced costs, improved efficiency, enhanced customer satisfaction, and increased profitability. For example, a company might use SCM strategies to optimize inventory levels, preventing stockouts and minimizing storage costs, or leverage real-time data to anticipate disruptions and proactively mitigate risks.

Q 23. Describe the difference between ‘encryption’ and ‘decryption’.

Encryption and decryption are two sides of the same coin in cryptography, the art of securing communication and data. Encryption is the process of converting readable data (plaintext) into an unreadable format (ciphertext) using an encryption algorithm and a key. Decryption is the reverse process: converting ciphertext back into plaintext using the matching key. In symmetric schemes such as AES this is the same secret key; in asymmetric schemes such as RSA, data encrypted with the public key is decrypted with the corresponding private key.

Imagine sending a secret message using a code. Encryption is like writing your message using the code, making it unreadable to anyone without the key. Decryption is then using the key to translate the code back into your original message.

Encryption Example (Conceptual):

Plaintext: ‘Hello World’

Encryption (with a key): ‘Jgnnq Yqtnf’

Decryption (with the same key): ‘Hello World’
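The conceptual example above is consistent with a Caesar cipher that shifts each letter two places forward. The sketch below reproduces it while keeping the original capitalization. A Caesar cipher is a classroom toy and offers no real security; the function name `caesar` is just an illustrative choice.

```python
def caesar(text, shift):
    """Shift each ASCII letter by `shift` places, wrapping around the alphabet."""
    out = []
    for ch in text:
        if "a" <= ch <= "z":
            out.append(chr((ord(ch) - ord("a") + shift) % 26 + ord("a")))
        elif "A" <= ch <= "Z":
            out.append(chr((ord(ch) - ord("A") + shift) % 26 + ord("A")))
        else:
            # Spaces, digits, and punctuation are left unchanged.
            out.append(ch)
    return "".join(out)


key = 2
ciphertext = caesar("Hello World", key)   # encrypt: shift forward
plaintext = caesar(ciphertext, -key)      # decrypt: shift back with the same key

print(ciphertext)
print(plaintext)
```

Because the same key both encrypts and decrypts, this is a (very weak) symmetric scheme.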

Different encryption methods, such as AES (Advanced Encryption Standard) and RSA (Rivest-Shamir-Adleman), use different algorithms and varying key lengths, offering different levels of security. The strength of the encryption depends on the algorithm’s complexity and the length of the key.

Q 24. What is the meaning of ‘Bayesian inference’?

Bayesian inference is a statistical method used to update our beliefs about something based on new evidence. It works by combining prior knowledge (what we already believe) with new data (evidence) to obtain a posterior belief – a refined understanding of the probability of an event.

Think of it as a detective investigating a case. The detective initially has some hunches (prior knowledge). As they gather more evidence (new data), they update their suspicions (posterior belief), getting closer to solving the case.

Formula (Simplified):

Posterior Belief = (Prior Belief * Likelihood of Evidence) / Evidence Probability

Bayesian inference is used across various fields, such as machine learning, medical diagnosis, and spam filtering. For example, a spam filter might initially have a prior belief that a certain email is spam based on its sender address. If the email contains certain keywords commonly found in spam emails (evidence), the filter updates its belief, making it more likely to classify the email as spam.
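The spam-filter update can be worked through numerically. All three probabilities below are invented for illustration: a 20% prior that the email is spam, a 60% chance that a spam email contains the keyword, and a 5% chance that a legitimate email does.

```python
def posterior(prior, likelihood, likelihood_if_not):
    """Bayes' rule for a yes/no hypothesis.

    prior:             P(spam) before seeing the evidence
    likelihood:        P(keyword | spam)
    likelihood_if_not: P(keyword | not spam)
    """
    # Total probability of seeing the evidence at all.
    evidence = likelihood * prior + likelihood_if_not * (1 - prior)
    return likelihood * prior / evidence


p_spam = posterior(prior=0.2, likelihood=0.6, likelihood_if_not=0.05)
print(f"P(spam | keyword) = {p_spam:.2f}")
```

Under these assumed numbers, seeing the keyword raises the filter's belief that the email is spam from 0.20 to 0.75.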

Q 25. Explain the concept of ‘digital forensics’.

Digital forensics is the application of scientific methods to recover and analyze data from digital devices, such as computers, smartphones, and servers, to investigate cybercrimes or incidents. It involves identifying, preserving, extracting, analyzing, and presenting digital evidence in a legally sound manner.

Imagine a detective investigating a murder where the killer left behind a digital footprint – emails, browsing history, or deleted files. Digital forensics specialists would carefully collect this evidence, ensuring its integrity, and then analyze it to identify the suspect and reconstruct the crime.

- Data Recovery: Recovering deleted files, restoring corrupted data.

- Network Forensics: Analyzing network traffic to identify intrusions and malicious activity.

- Mobile Forensics: Extracting data from smartphones and other mobile devices.

- Cloud Forensics: Investigating data stored in cloud services.

Digital forensics requires specialized tools and expertise to handle various file systems, data formats, and encryption techniques. The process strictly adheres to legal and ethical guidelines to ensure the admissibility of evidence in court.

Q 26. What is the difference between ‘A/B testing’ and ‘multivariate testing’?

A/B testing and multivariate testing are both experimental methods used to compare different versions of something (e.g., a website, ad, or email) to determine which performs better. The key difference lies in the number of variables tested.

A/B testing compares two versions – version A (the control) and version B (the variant) – by varying only one element at a time (e.g., the headline of an ad). This helps isolate the impact of that single change.

Multivariate testing, on the other hand, simultaneously tests multiple variations of several elements (e.g., headline, image, call-to-action button). This allows for a more comprehensive understanding of how different combinations of elements interact to influence performance.

Analogy: Imagine testing a recipe. A/B testing is like changing only one ingredient (e.g., replacing sugar with honey) and comparing the taste of the two versions. Multivariate testing is like simultaneously changing multiple ingredients (sugar, flour, baking powder) and comparing many versions to find the best combination.

Multivariate testing is more complex and requires more data to achieve statistically significant results, but it provides a deeper understanding of user preferences and can lead to more significant improvements.
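A quick count shows why multivariate testing needs more data. The headlines, images, buttons, and visitor total below are hypothetical.

```python
from itertools import product

# Hypothetical page elements to test.
headlines = ["Save time", "Save money"]
images = ["photo", "illustration", "none"]
buttons = ["Buy now", "Learn more"]

# A/B test: only the headline changes, so there are two versions.
ab_variants = [(h,) for h in headlines]

# Multivariate test: every combination of headline, image, and button.
mv_variants = list(product(headlines, images, buttons))

visitors = 12_000
per_ab_variant = visitors // len(ab_variants)
per_mv_variant = visitors // len(mv_variants)

print("A/B variants:", len(ab_variants), "visitors each:", per_ab_variant)
print("multivariate variants:", len(mv_variants), "visitors each:", per_mv_variant)
```

The same traffic is split across far more versions in the multivariate case, so each version collects fewer observations and reaching statistical significance takes longer.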

Q 27. Define ‘natural language processing (NLP)’ and its applications.

Natural Language Processing (NLP) is a branch of artificial intelligence (AI) that focuses on enabling computers to understand, interpret, and generate human language. It bridges the gap between human communication and computer understanding.

Imagine a computer that can read and understand a news article, summarize it, or even write its own story. That’s the power of NLP.

- Text analysis: Sentiment analysis (determining the emotional tone of text), topic extraction, named entity recognition (identifying people, places, and organizations).

- Machine translation: Translating text from one language to another.

- Chatbots: Creating interactive conversational agents that can understand and respond to user queries.

- Text generation: Automatically generating human-like text, such as summaries or creative writing.

NLP applications are widespread, from virtual assistants like Siri and Alexa to search engines, spam filters, and medical diagnosis tools. For example, NLP algorithms can analyze customer reviews to understand opinions about a product, or translate documents for global business operations.

Q 28. What is the significance of ‘Agile methodologies’ in software development?

Agile methodologies are iterative and incremental approaches to software development that emphasize flexibility, collaboration, and customer satisfaction. Unlike traditional ‘waterfall’ methods, which follow a rigid linear process, Agile methods embrace change and adapt to evolving requirements throughout the project lifecycle.

Think of it as building with LEGOs. Instead of meticulously planning the entire LEGO castle before starting, Agile teams build smaller sections iteratively, test them, and make adjustments based on feedback. This allows for quicker progress, easier adaptation to design changes, and improved quality. The key principles are:

- Iterative development: Breaking down the project into smaller, manageable iterations (sprints) with frequent releases.

- Continuous feedback: Regular collaboration with stakeholders (customers, product owners) to gather feedback and adapt to changing requirements.

- Adaptive planning: Adjusting plans and priorities based on feedback and new insights.

- Self-organizing teams: Empowering development teams to manage their own work and make decisions collaboratively.

Agile methodologies, such as Scrum and Kanban, have become widely adopted due to their ability to deliver software faster, improve quality, and enhance stakeholder satisfaction. They are particularly useful for projects with uncertain or changing requirements.

Key Topics to Learn for Knowledge of Specialized Terminology Interview

- Understanding the Industry Jargon: Familiarize yourself with the common terms, acronyms, and abbreviations specific to your target industry. This demonstrates a foundational understanding and commitment to the field.

- Contextual Application of Terminology: Practice using specialized terms correctly within the context of realistic scenarios. Prepare examples showcasing how you’ve applied this knowledge to solve problems or contribute to projects.

- Defining and Differentiating Key Concepts: Be prepared to clearly explain complex concepts and differentiate between similar-sounding terms. Practice concise and accurate definitions.

- Staying Current with Industry Trends: Demonstrate awareness of evolving terminology and new developments within the field. Show that you are a proactive learner who keeps your knowledge up-to-date.

- Effective Communication: Practice articulating complex ideas clearly and concisely using precise terminology. This demonstrates strong communication skills, vital for any role.

- Problem-Solving with Terminology: Prepare to discuss how your understanding of specialized terminology helps you approach and solve problems efficiently and effectively. Use specific examples from your past experiences.

Next Steps

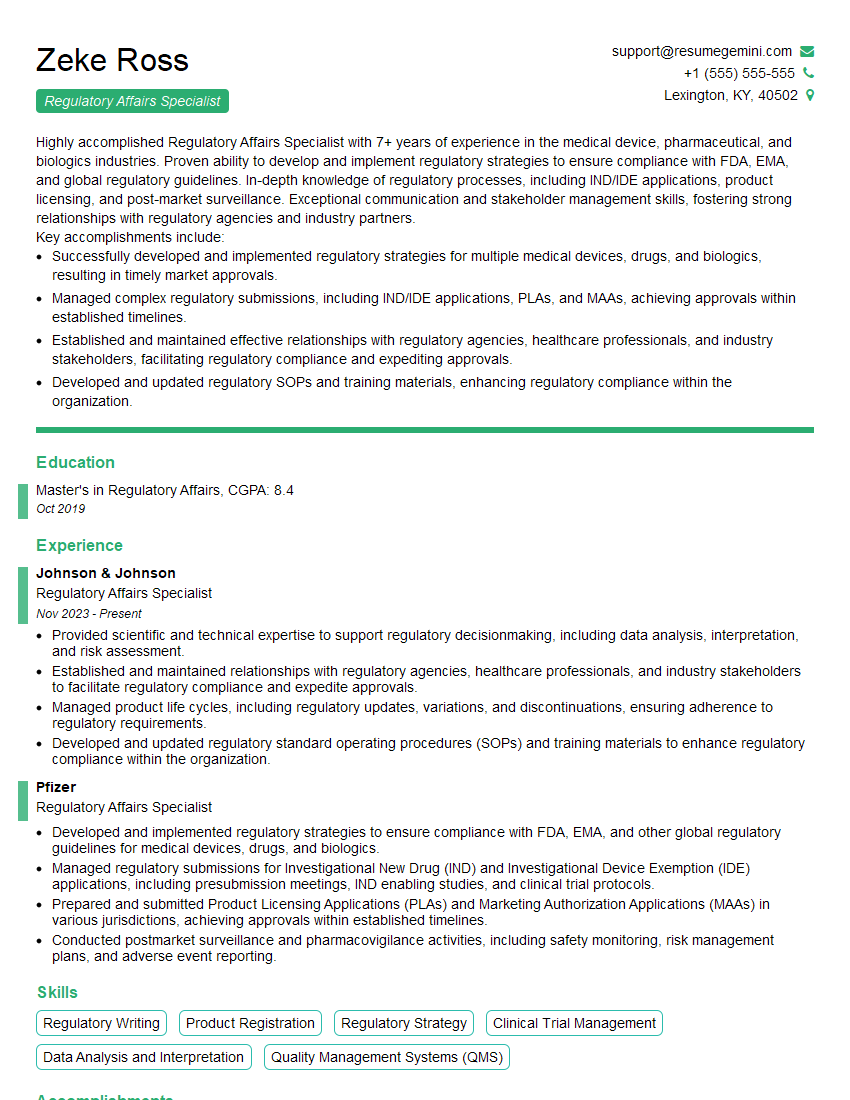

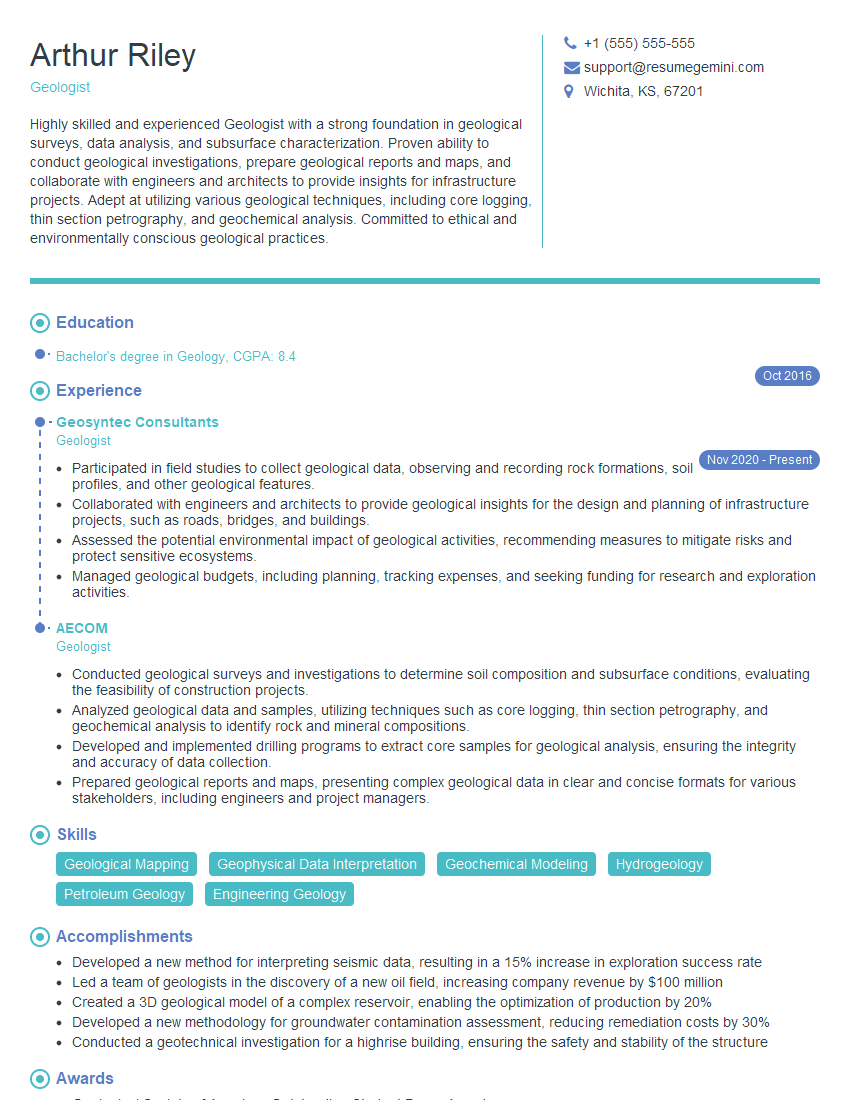

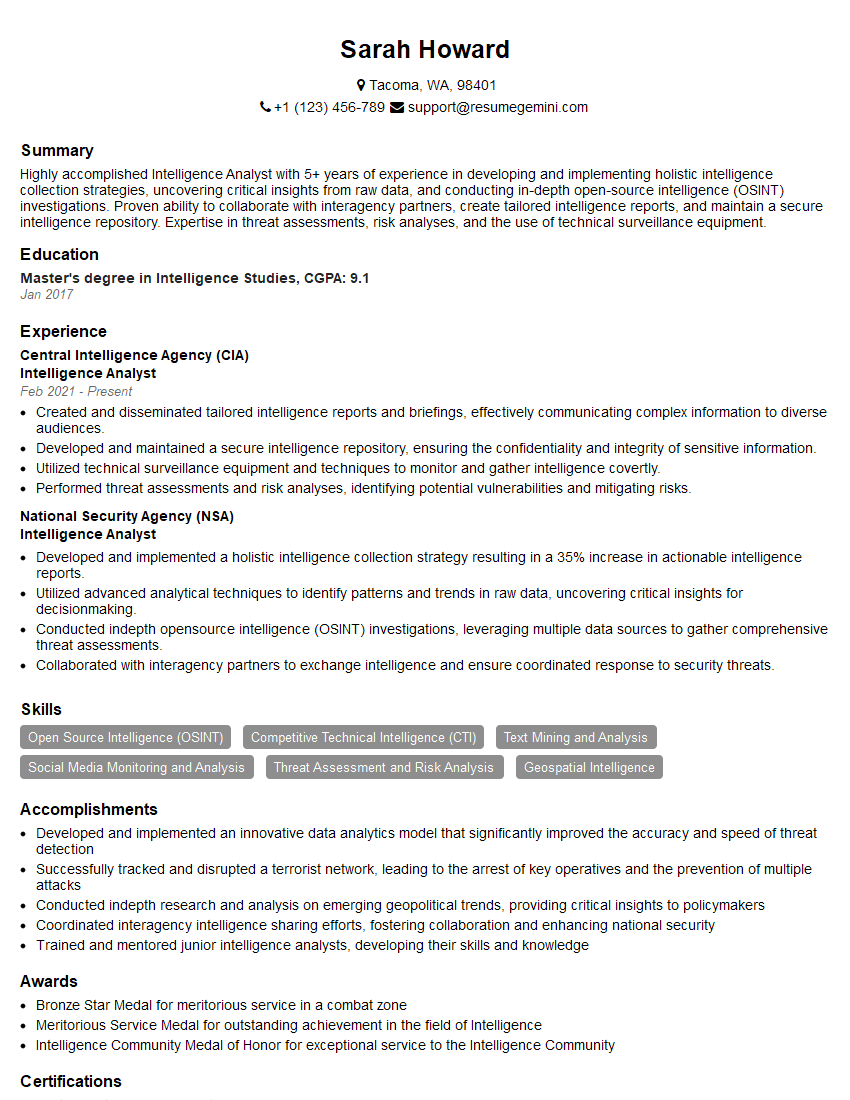

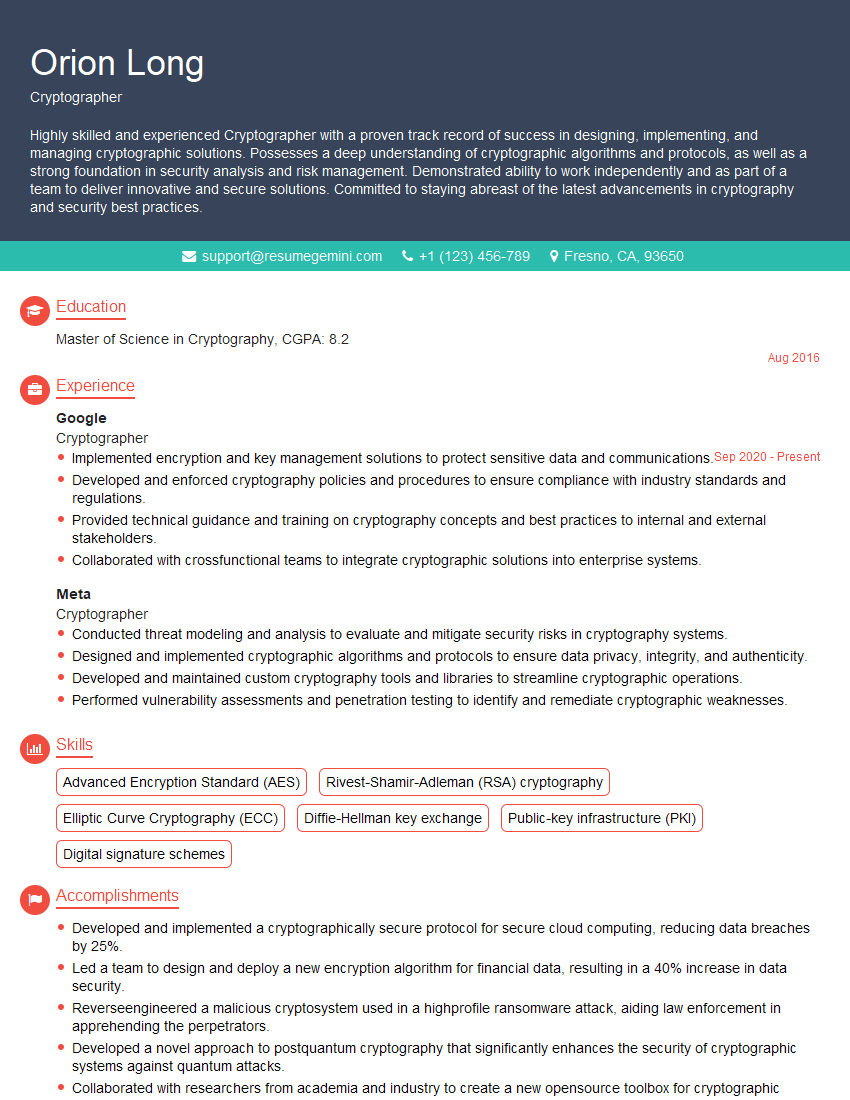

Mastering specialized terminology is crucial for career advancement. It demonstrates expertise, professionalism, and a deep understanding of your chosen field, opening doors to higher-level positions and increased earning potential. To maximize your job prospects, crafting an ATS-friendly resume is essential. A well-structured resume that highlights your knowledge of specialized terminology will significantly improve your chances of getting noticed by recruiters and landing interviews. We strongly encourage you to utilize ResumeGemini, a trusted resource, to build a compelling and effective resume that showcases your skills and experience. Examples of resumes tailored to highlight Knowledge of Specialized Terminology are available to help you get started.
