Are you ready to stand out in your next interview? Understanding and preparing for Named Entity Recognition interview questions is a game-changer. In this blog, we’ve compiled key questions and expert advice to help you showcase your skills with confidence and precision. Let’s get started on your journey to acing the interview.

Questions Asked in Named Entity Recognition Interviews

Q 1. Explain the concept of Named Entity Recognition (NER).

Named Entity Recognition (NER) is a subtask of information extraction that seeks to locate and classify named entities mentioned in unstructured text into pre-defined categories such as person names, organizations, locations, medical codes, time expressions, quantities, monetary values, and percentages. Think of it as teaching a computer to understand who, what, when, and where in a sentence. For example, in the sentence “Barack Obama was born in Honolulu, Hawaii,” NER would identify “Barack Obama” as a PERSON and both “Honolulu” and “Hawaii” as LOCATIONs.

Q 2. Describe different approaches to NER (e.g., rule-based, statistical, deep learning).

There are several approaches to NER, each with its strengths and weaknesses:

- Rule-based NER: This approach relies on handcrafted rules and gazetteers (lists of known entities). It’s relatively simple to implement but requires significant linguistic expertise and struggles with variations and unseen entities. Imagine building a rule like: “If a word is capitalized and followed by a comma and another capitalized word, it might be a location.” This approach is brittle and prone to errors.

- Statistical NER: These methods use machine learning algorithms, like Hidden Markov Models (HMMs) or Conditional Random Fields (CRFs), to learn patterns from labeled data. They are more robust than rule-based methods but still require a substantial amount of labeled training data.

- Deep Learning NER: This approach leverages neural networks, often Recurrent Neural Networks (RNNs) like LSTMs or GRUs, or more recently Transformer-based models like BERT or RoBERTa. These models excel at capturing complex contextual information and often achieve state-of-the-art performance. They require less manual feature engineering than statistical methods, but training them from scratch needs large amounts of labeled data and significant computational resources; fine-tuning a pre-trained model considerably reduces the amount of labeled data required.
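The comma rule from the rule-based bullet can be written as a single regular expression. This is a minimal illustration rather than a production rule set, and the pattern and function name are hypothetical. The second call shows the brittleness described above: the same rule fires on a list of surnames.

```python
import re

# Hypothetical rule: "a capitalized word, a comma, then another capitalized
# word" is treated as a pair of candidate LOCATION mentions ("City, State").
CITY_STATE = re.compile(r"\b([A-Z][a-z]+), ([A-Z][a-z]+)\b")


def rule_based_locations(text: str) -> list[str]:
    """Return every word that the comma rule tags as a candidate LOCATION."""
    locations = []
    for match in CITY_STATE.finditer(text):
        locations.extend([match.group(1), match.group(2)])
    return locations


print(rule_based_locations("Barack Obama was born in Honolulu, Hawaii."))
# ['Honolulu', 'Hawaii']
print(rule_based_locations("The report was signed by Smith, Johnson and Lee."))
# ['Smith', 'Johnson']  <- false positives: these are surnames, not places
```

Every new failure case like this one needs yet another handcrafted exception, which is why statistical and neural approaches largely replaced pure rule systems.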

Q 3. What are the key challenges in NER?

NER faces several challenges:

- Ambiguity: Words can have multiple meanings; “Apple” could refer to the fruit or the technology company. Context is crucial for disambiguation.

- Nested Entities: Entities can be nested within each other. For example, in “University of Washington,” the LOCATION “Washington” is nested inside the ORGANIZATION “University of Washington,” and a flat tagger can assign only one of the two labels to the token “Washington.”

- Name Variations: People and organizations often have multiple names or abbreviations, leading to inconsistencies.

- New Entities: NER models struggle to identify entities that are not present in their training data, like newly formed companies or newly popularized names.

- Data Sparsity: Obtaining sufficient labeled data for training, especially for low-resource languages, is a significant hurdle.

- Cross-lingual NER: Building NER systems for multiple languages requires significant effort and linguistic expertise.

Q 4. Explain the difference between gazetteers and word embeddings in NER.

Gazetteers and word embeddings play distinct roles in NER:

- Gazetteers: These are lists of known entities, such as names of people, places, and organizations. They are often used as external knowledge sources to improve NER accuracy, especially for known entities. For example, a gazetteer might contain a list of all countries in the world. If a word is found in the gazetteer, the NER system has higher confidence in classifying it as a LOCATION.

- Word Embeddings: These are dense vector representations of words that capture semantic relationships learned from how words are distributed in large unlabeled corpora. Static embeddings such as Word2Vec or GloVe assign each word a single vector regardless of its context, and words used in similar contexts end up with similar vectors; for example, “King” and “Queen” have similar embeddings because they are semantically related. Because the embeddings are trained on far more text than the labeled NER data, they help the model generalize to entity words it never saw during NER training, as long as those words are in the embedding vocabulary.

In essence, gazetteers provide explicit knowledge about known entities, while word embeddings provide implicit knowledge about semantic relationships between words, both contributing to improved NER performance.
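To make the contrast concrete, here is a small sketch in which the gazetteer lookup is a yes/no test while embedding similarity is graded. The three-dimensional vectors and the word lists are invented for illustration; real Word2Vec or GloVe vectors are learned from a corpus and usually have a few hundred dimensions.

```python
import math

# Toy 3-dimensional vectors, invented for illustration only.
EMBEDDINGS = {
    "london": [0.9, 0.1, 0.0],
    "paris": [0.85, 0.15, 0.05],
    "lisbon": [0.8, 0.2, 0.1],
    "banana": [0.0, 0.2, 0.95],
}
# A deliberately incomplete gazetteer of cities.
CITY_LIST = {"london", "paris"}


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def city_similarity(word):
    """Highest cosine similarity between `word` and any gazetteer city."""
    return max(cosine(EMBEDDINGS[word], EMBEDDINGS[city]) for city in CITY_LIST)


print("lisbon" in CITY_LIST)  # False: an exact lookup misses it
print(city_similarity("lisbon") > city_similarity("banana"))  # True
```

In a real system the two signals are usually combined: the gazetteer gives high-precision evidence for listed names, and the embeddings carry some of that evidence over to similar, unlisted words.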

Q 5. How do you evaluate the performance of a NER system?

Evaluating NER system performance involves comparing the system’s output to a gold standard (human-annotated data). This typically involves computing precision, recall, and F1-score on a held-out test set. It’s common to use a confusion matrix to visualize the types of errors the system makes.

The process usually involves:

- Preparing a test set: A separate dataset, not used during training, is annotated by human experts with the correct named entity labels.

- Running the NER system: The system processes the test set and predicts the named entities.

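- Comparing predictions to gold standard: The system’s predictions are compared to the human annotations to identify true positives, false positives, and false negatives. This is usually done at the entity level: a predicted entity counts as a true positive only if both its boundaries and its type exactly match a gold entity.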

- Calculating metrics: Metrics like precision, recall, and F1-score are calculated to quantify the system’s performance.
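The comparison and calculation steps can be sketched with plain set operations. This assumes each entity is represented as a (start token, end token exclusive, type) tuple, and the function name is hypothetical. Note that under exact matching a single boundary error costs twice: the truncated prediction is a false positive and the full gold entity is a false negative.

```python
# An entity is (start_token, end_token_exclusive, type).
Entity = tuple[int, int, str]


def entity_prf(gold: set[Entity], pred: set[Entity]) -> tuple[float, float, float]:
    """Exact-match entity-level precision, recall and F1."""
    tp = len(gold & pred)   # predicted and present in the gold standard
    fp = len(pred - gold)   # predicted but not in the gold standard
    fn = len(gold - pred)   # in the gold standard but not predicted
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Tokens: Barack | Obama | was | born | in | Honolulu | , | Hawaii | .
gold = {(0, 2, "PER"), (5, 6, "GPE"), (7, 8, "GPE")}
pred = {(0, 1, "PER"), (5, 6, "GPE"), (7, 8, "GPE")}  # "Barack" alone: boundary error
print(entity_prf(gold, pred))  # precision, recall and F1 are all 2/3
```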

Q 6. What metrics are commonly used to evaluate NER models (e.g., precision, recall, F1-score)?

Common metrics for evaluating NER models include:

- Precision: The proportion of correctly identified entities out of all entities identified by the system. High precision means few false positives.

- Recall: The proportion of correctly identified entities out of all entities present in the gold standard. High recall means few false negatives.

- F1-score: The harmonic mean of precision and recall. It provides a balanced measure of the system’s performance, considering both precision and recall. A high F1-score indicates good overall performance.

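These metrics are often calculated for each entity type (e.g., PERSON, LOCATION, ORGANIZATION) separately and then combined into an overall score, either by averaging the per-type scores (macro-averaging, which weights every type equally) or by pooling the counts across all types (micro-averaging, which weights frequent types more heavily). Looking at each entity type separately helps identify specific weaknesses in the system’s performance.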
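In symbols, with TP, FP, and FN counted per entity type t in the set of types T, the metrics and the two averaging schemes are:

```latex
P = \frac{TP}{TP + FP}, \qquad
R = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2PR}{P + R} = \frac{2\,TP}{2\,TP + FP + FN}

\text{Macro-}F_1 = \frac{1}{|\mathcal{T}|} \sum_{t \in \mathcal{T}} F_{1,t}

\text{Micro-}F_1 = \frac{2 \sum_{t} TP_t}{2 \sum_{t} TP_t + \sum_{t} FP_t + \sum_{t} FN_t}
```

For example, TP = 8, FP = 2, and FN = 4 give P = 0.8, R ≈ 0.667, and F1 = 16/22 ≈ 0.727. Because micro-averaging pools the counts, it is dominated by frequent types, while macro-averaging exposes weak performance on rare ones.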

Q 7. Explain the concept of named entity disambiguation.

Named Entity Disambiguation (NED) is the task of identifying the correct referent for a named entity mention. Simply put, it’s about figuring out which specific entity a mention refers to from a set of possibilities. For instance, the mention “Washington” could refer to George Washington, the state of Washington, or Washington D.C. NED aims to resolve this ambiguity and link the mention to the correct entry in a knowledge base.

NED uses various methods, including knowledge base lookups, contextual information, and machine learning techniques, to disambiguate entity mentions. It is critical for downstream tasks that rely on accurate entity linking, such as knowledge graph construction or question answering.
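A common baseline combines how often a mention refers to each candidate (a prior) with how well the candidate matches the surrounding words. The sketch below assumes a tiny, hand-built knowledge base; the identifiers, priors, keyword sets, and the `weight` parameter are all invented. Real systems link to resources such as Wikidata and use learned context representations rather than keyword overlap.

```python
# A toy knowledge base for the mention "Washington". Identifiers, priors
# (how often the mention refers to each entry) and keywords are invented.
KB = {
    "Washington": [
        {"id": "george_washington", "prior": 0.3,
         "keywords": {"president", "general", "army", "born"}},
        {"id": "washington_state", "prior": 0.3,
         "keywords": {"seattle", "state", "pacific", "northwest"}},
        {"id": "washington_dc", "prior": 0.4,
         "keywords": {"capital", "congress", "city", "monument"}},
    ]
}


def link(mention, context, weight=0.5):
    """Return the KB id whose prior plus context overlap scores highest."""
    words = {w.strip(".,").lower() for w in context.split()}
    best_id, best_score = None, float("-inf")
    for entry in KB.get(mention, []):
        # Fraction of the entry's keywords that appear in the context.
        overlap = len(entry["keywords"] & words) / len(entry["keywords"])
        score = (1 - weight) * entry["prior"] + weight * overlap
        if score > best_score:
            best_id, best_score = entry["id"], score
    return best_id


print(link("Washington", "Washington was the first president and led the army."))
# george_washington
print(link("Washington", "Congress met in the capital city."))
# washington_dc
```

When the context gives no clues, the prior decides, so an uninformative sentence falls back to the most frequent referent.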

Q 8. What are some common NER tagging schemes (e.g., IOB, BIO)?

Named Entity Recognition (NER) tagging schemes structure the output of an NER system by defining how each token is labeled. Two common schemes are IOB (also called IOB1) and BIO (also called IOB2); in practice, “IOB” is often used loosely to mean BIO.

- IOB (IOB1): Tokens inside an entity are tagged I-ENTITY, and tokens outside any entity are tagged O. The B-ENTITY tag is used only when an entity immediately follows another entity of the same type, to mark where the second one begins.

- BIO (IOB2): Every entity starts with a B-ENTITY tag, the remaining tokens of the entity are tagged I-ENTITY, and tokens outside any entity are tagged O. Because each entity has an explicit beginning, recovering entity spans from the tags is simpler.

Example (IOB):

Sentence: “Barack Obama was born in Honolulu, Hawaii.” (tokens: Barack | Obama | was | born | in | Honolulu | , | Hawaii | .)

Tags: I-PER I-PER O O O I-GPE O I-GPE O

Example (BIO):

Sentence: “Barack Obama was born in Honolulu, Hawaii.” (same tokens)

Tags: B-PER I-PER O O O B-GPE O B-GPE O

The choice between IOB and BIO depends on the specific application and the NER model’s capabilities. BIO is generally preferred because it is explicit about where every entity begins. Neither scheme can represent overlapping or nested entities; extended schemes such as BIOES (also written IOBES, adding End and Single tags) give the model more boundary information but still describe a single, flat layer of entities.
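Converting between the two schemes and decoding tags back into entity spans takes only a few lines. A minimal sketch with hypothetical function names; the decoder is lenient, treating a stray I- tag with no open entity as the start of a new one, which is one common convention rather than the only one.

```python
def iob1_to_bio(tags):
    """Convert IOB1 tags to BIO (IOB2), where every entity starts with B-."""
    bio = []
    for i, tag in enumerate(tags):
        if tag.startswith("I-"):
            prev = tags[i - 1] if i > 0 else "O"
            # An I- tag opens a new entity when the previous token is outside
            # any entity or belongs to an entity of a different type.
            if prev == "O" or prev[2:] != tag[2:]:
                tag = "B-" + tag[2:]
        bio.append(tag)
    return bio


def bio_to_spans(tags):
    """Decode BIO tags into (start, end_exclusive, type) spans."""
    spans, start, etype = [], None, None
    for i, tag in enumerate(tags + ["O"]):  # a sentinel "O" closes the last entity
        # An open entity ends at "O", at a new "B-", or at an "I-" of another type.
        if start is not None and (tag == "O" or tag.startswith("B-") or tag[2:] != etype):
            spans.append((start, i, etype))
            start, etype = None, None
        # Lenient decoding: a stray "I-" with no open entity also starts one.
        if tag.startswith("B-") or (tag.startswith("I-") and start is None):
            start, etype = i, tag[2:]
    return spans


iob1 = ["I-PER", "I-PER", "O", "O", "O", "I-GPE", "O", "I-GPE", "O"]
bio = iob1_to_bio(iob1)
print(bio)  # ['B-PER', 'I-PER', 'O', 'O', 'O', 'B-GPE', 'O', 'B-GPE', 'O']
print(bio_to_spans(bio))  # [(0, 2, 'PER'), (5, 6, 'GPE'), (7, 8, 'GPE')]
```

The IOB1 sequence `["I-PER", "B-PER"]` (two adjacent people) converts to `["B-PER", "B-PER"]` and decodes to two separate one-token entities, which is exactly the case the IOB1 B- tag exists for.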

Q 9. How does context influence NER?

Context is paramount in NER. A single word can have different entity types depending on its surroundings. For instance, ‘Washington’ could be a person (George Washington), a location (Washington D.C.), or part of an organization name (Washington State University). The model needs to understand the surrounding words, and potentially the entire sentence, to determine the correct entity type.

Consider these examples:

- “The Washington Monument is impressive.” (Location)

- “Washington was the first president.” (Person)

Contextual understanding can be achieved using various techniques, including:

- n-gram features: considering sequences of words around the target word.

- Part-of-speech (POS) tagging: incorporating grammatical information to understand word roles.

- Word embeddings: leveraging semantic relationships between words.

Essentially, context allows the NER system to resolve ambiguity and achieve higher accuracy by considering the wider linguistic environment.

Q 10. How do you handle out-of-vocabulary (OOV) words in NER?

Out-of-Vocabulary (OOV) words are words not seen during the training phase of the NER model. Handling them is crucial because new words, names, and organizations constantly emerge. Several strategies can mitigate the impact of OOV words:

- Character-level models: These models process text at the character level, making them less sensitive to OOV words. They can learn patterns within words, even if the entire word is unknown.

- Subword tokenization and embeddings (e.g., Byte Pair Encoding or WordPiece tokenizers, fastText embeddings built from character n-grams): These methods decompose words into smaller units (subwords). Even if a full word is OOV, its subwords might be known, allowing the model to make informed predictions.

- Using gazetteers or knowledge bases: Pre-defined lists of entities (e.g., locations, organizations) can be used to identify OOV words that are likely to be named entities.

- Rule-based approaches: Custom rules, leveraging capitalization patterns or other linguistic cues, can help identify potential entities even when the words are OOV.

- Transfer learning: Pretraining on a large corpus and then fine-tuning on a specific NER task can improve performance on unseen words.

The best strategy is often a combination of these approaches. This ensures robustness and reduces the impact of OOV words on NER accuracy.
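The subword idea can be illustrated with a simplified version of the greedy longest-match-first segmentation that WordPiece-style tokenizers apply at inference time. The vocabulary is a hypothetical toy; real vocabularies are learned from a large corpus, and real tokenizers also normalize text and cap word length.

```python
def wordpiece(word, vocab, unk="[UNK]"):
    """Greedy longest-match-first segmentation in the style of WordPiece.

    Continuation pieces carry a "##" prefix. If any part of the word cannot
    be matched, the whole word becomes the unknown token.
    """
    pieces, start = [], 0
    while start < len(word):
        end, match = len(word), None
        # Try the longest remaining substring first, then shrink it.
        while end > start:
            piece = word[start:end] if start == 0 else "##" + word[start:end]
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            return [unk]
        pieces.append(match)
        start = end
    return pieces


# Toy vocabulary, invented for illustration.
VOCAB = {"ob", "##ama", "##am", "##a", "bar", "##ack", "##ac", "##k"}

print(wordpiece("obama", VOCAB))  # ['ob', '##ama']
print(wordpiece("xyz", VOCAB))    # ['[UNK]']
```

Even though “obama” is not in the vocabulary as a whole word, the model still receives two known pieces instead of a single unknown token.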

Q 11. Describe different types of named entities (e.g., person, location, organization).

Named entities represent real-world objects that have a proper name. They can be broadly classified into several categories, although the precise categories might vary based on the dataset or application. Common types include:

- Person (PER): Names of individuals, e.g., “Barack Obama,” “Jane Doe.”

- Location (LOC): Geographic entities such as countries, cities, and regions, e.g., “United States,” “London,” “California.”

- Organization (ORG): Companies, institutions, and groups, e.g., “Google,” “University of Oxford,” “World Health Organization.”

- Date (DATE): Absolute or relative dates and periods, e.g., “July 4th, 1776,” “2024,” “next week.”

- Money (MONEY): Monetary values, e.g., “$100,” “€500,” “ten dollars.”

- Percentage (PERCENT): Percentage values, e.g., “25%,” “fifty percent.”

- Time (TIME): Times of day and periods shorter than a day, e.g., “10:00 AM,” “noon,” “midnight.”

Some NER systems also include more granular categories or application-specific entity types.

Q 12. How can you improve the accuracy of an NER system?

Improving NER accuracy involves a multi-pronged approach. Here’s a breakdown:

- Better Data: High-quality, accurately annotated training data is crucial. More data generally leads to better performance. Addressing data imbalances and noisy data through cleaning and augmentation is also critical.

- Advanced Models: Explore state-of-the-art deep learning models like transformers (BERT, RoBERTa) which are well-suited for capturing complex contextual information. Consider exploring ensemble methods combining multiple models.

- Feature Engineering: Carefully chosen features such as part-of-speech tags, gazetteers, and word embeddings can substantially improve accuracy, especially for feature-based models such as CRFs. Experiment to find which features work best for your specific data and model.

- Hyperparameter Tuning: Optimizing the model’s hyperparameters (e.g., learning rate, batch size, embedding dimensions) through techniques like grid search or Bayesian optimization can significantly improve performance.

- Regularization Techniques: Techniques like dropout or weight decay can prevent overfitting and improve generalization to unseen data.

- Handling Ambiguity: Develop strategies for handling ambiguous cases (e.g., using context or external knowledge bases to resolve conflicts).

Iterative experimentation and evaluation are vital. Continuously monitoring the model’s performance and making necessary adjustments are essential for achieving optimal accuracy.

Q 13. Explain the role of feature engineering in NER.

Feature engineering is the process of designing and selecting informative features that help the NER model learn patterns and make better predictions. It’s a critical step, significantly impacting the system’s performance. Effective feature engineering often involves domain expertise.

Examples of useful features:

- Lexical features: Word itself, its capitalization, prefix/suffix, length.

- Contextual features: Surrounding words (n-grams), part-of-speech tags of surrounding words.

- Gazetteer features: Whether the word is present in a pre-defined list of known entities (e.g., a list of cities).

- Word embeddings: Vector representations of words that capture semantic information.

- Character n-grams: Sequences of characters which can be useful when dealing with OOV words or morphological variations.

The choice of features depends on the model and the dataset. It’s often an iterative process – experimenting and evaluating different feature combinations to determine the best approach for a specific task. Poor feature engineering can lead to suboptimal performance, while thoughtful feature engineering can greatly improve accuracy.
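As an illustration, the lexical, contextual, and character n-gram features listed above can be collected into a per-token feature dictionary of the kind commonly fed to CRF taggers. This is a sketch with hypothetical feature names; gazetteer and embedding features are left out to keep it short.

```python
def token_features(tokens, i, pos_tags=None, window=1):
    """Collect lexical, character and context features for tokens[i]."""
    word = tokens[i]
    feats = {
        "word.lower": word.lower(),       # lexical: the word itself
        "word.is_title": word.istitle(),  # lexical: capitalization pattern
        "word.is_upper": word.isupper(),
        "word.is_digit": word.isdigit(),
        "word.prefix3": word[:3],         # lexical: prefix and suffix
        "word.suffix3": word[-3:],
        "word.length": len(word),
    }
    # Character trigrams over the padded word help with OOV words and
    # morphological variants ("<lo", "lon", ..., "on>").
    padded = f"<{word.lower()}>"
    for j in range(len(padded) - 2):
        feats[f"char3={padded[j:j + 3]}"] = True
    # Context: neighbouring words within the window, plus their POS tags if given.
    for offset in range(-window, window + 1):
        if offset == 0:
            continue
        k = i + offset
        if 0 <= k < len(tokens):
            feats[f"{offset:+d}:word.lower"] = tokens[k].lower()
            if pos_tags is not None:
                feats[f"{offset:+d}:pos"] = pos_tags[k]
        else:
            feats[f"{offset:+d}:boundary"] = True  # before start / after end
    return feats


tokens = ["Obama", "visited", "London", "."]
features = token_features(tokens, 2, pos_tags=["NNP", "VBD", "NNP", "."])
print(features["-1:word.lower"], features["+1:pos"])  # visited .
```

Widening `window` adds more context at the cost of sparser features, which is one of the trade-offs that the iterative experimentation described above has to settle.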

Q 14. What are some popular NER libraries or tools (e.g., spaCy, Stanford NER)?

Several popular NER libraries and tools offer pre-trained models and functionalities to simplify the NER process. Some prominent examples are:

- spaCy: A powerful Python library providing pre-trained models for various languages, fast processing speed, and easy-to-use APIs. It offers a good balance between speed and accuracy.

- Stanford NER: A Java-based tool with a long history and robust performance. It’s known for its accuracy but can be less user-friendly compared to spaCy.

- NLTK: Python’s Natural Language Toolkit also provides NER capabilities through various modules, often requiring more manual configuration.

- Flair: A flexible and extensible framework allowing for customized models and easy integration with other NLP tools. It offers both pre-trained and trainable models.

- Hugging Face Transformers: This library provides access to a vast array of pre-trained transformer-based NER models, offering high accuracy but requiring more technical expertise to utilize effectively.

The best choice depends on your project’s requirements, programming language preference, desired level of customization, and available computational resources. For many users, spaCy provides an excellent starting point due to its ease of use and performance.

Q 15. How do you handle nested named entities?

Handling nested named entities, where one entity is contained within another, requires careful consideration of the entity boundaries. Imagine the sentence: “Barack Obama, the former President of the United States, visited London.”

Here, “Barack Obama” is a PERSON entity, and the larger mention describing his role, “the former President of the United States” (which could be considered a TITLE or a PERSON mention depending on the granularity), itself contains a nested entity: “the United States” (LOCATION). Flat NER models, which assign a single label to each token, cannot represent this nesting.

Several strategies address this:

- Hierarchical models: These models are designed to recognize entities at multiple levels. They might first identify the broader entity (e.g., “the former President of the United States”) and then the nested entity within it (e.g., “the United States”).

- Recursive neural networks: These networks can effectively handle the hierarchical structure of nested entities by processing the text recursively, building up representations from smaller to larger entities.

- IOB tagging with extensions: The standard IOB (Inside, Outside, Beginning) scheme and its IOBES (Inside, Outside, Beginning, End, Single) variant both encode a single, flat layer of entities, so on their own they cannot express nesting. Extensions address this, for instance by assigning one tag sequence per level of nesting or by combining the tags of several levels into one multi-part tag per token.

The choice of approach depends on the complexity of the nesting and the resources available. For shallow, predictable nesting, extending the tagging scheme might suffice; for deeper or more irregular nesting, hierarchical models or recursive networks may be needed.
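One way to realize the per-level tagging idea is to split the entity spans into layers so that no two spans in a layer overlap, then encode each layer as its own BIO sequence, with one tagger (or output head) per layer. The sketch assumes spans are given as (start, end exclusive, type) tuples; the function names, the TITLE label, and the greedy outermost-first assignment are illustrative choices, not a standard.

```python
def spans_to_layers(spans):
    """Greedily assign spans to layers so spans within a layer never overlap.

    Longer (outer) spans are placed first, so layer 0 holds the outermost
    entities and deeper layers hold the nested ones.
    """
    layers = []
    for span in sorted(spans, key=lambda s: (s[0] - s[1], s[0])):
        for layer in layers:
            if all(span[1] <= s[0] or span[0] >= s[1] for s in layer):
                layer.append(span)
                break
        else:  # no existing layer has room: open a new, deeper layer
            layers.append([span])
    return layers


def layer_to_bio(layer, n_tokens):
    """Encode one non-overlapping layer of spans as a BIO tag sequence."""
    tags = ["O"] * n_tokens
    for start, end, etype in layer:
        tags[start] = "B-" + etype
        for k in range(start + 1, end):
            tags[k] = "I-" + etype
    return tags


tokens = "Barack Obama , the former President of the United States , visited London .".split()
spans = [(0, 2, "PER"), (3, 10, "TITLE"), (8, 10, "GPE"), (12, 13, "LOC")]
for depth, layer in enumerate(spans_to_layers(spans)):
    print(depth, layer_to_bio(layer, len(tokens)))
```

Layer 0 carries PER, TITLE, and LOC, while “United States” ends up alone in layer 1, so each layer remains an ordinary flat BIO sequence.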


Q 16. How do you deal with ambiguous named entities?

Ambiguous named entities are a common challenge. Consider the sentence: “Apple released a new product.”

Here, “Apple” could refer to the technology company or the fruit. Resolving this ambiguity requires context and additional information. Several techniques help:

- Contextual information: The surrounding words offer crucial clues. If the sentence is about electronics, “Apple” is more likely the company. Algorithms can leverage this by analyzing n-grams or word embeddings.

- Gazetteers/Knowledge bases: A gazetteer is a list of known entities (like company names, locations, etc.). Matching against a gazetteer can help disambiguate. Knowledge bases provide richer semantic information to resolve ambiguities.

- Machine learning classifiers: Train a classifier to predict the correct entity type based on features extracted from the context and gazetteer information. This could involve features like part-of-speech tags, word embeddings, and entity type probabilities from the gazetteer.

- Word sense disambiguation (WSD): Techniques from WSD can be applied to select the most appropriate meaning of an ambiguous word given the context.

A combination of these methods is often most effective. For example, you could use a gazetteer to identify possible entities, then employ a classifier that considers contextual information to select the most probable sense.

Q 17. Explain the difference between NER and part-of-speech tagging.

While both NER and part-of-speech (POS) tagging are crucial steps in natural language processing (NLP), they serve different purposes.

Part-of-speech tagging assigns grammatical tags to words (e.g., noun, verb, adjective, adverb). It focuses on the grammatical role of each word in a sentence. Example: “The quick brown fox jumps over the lazy dog.” POS tagging might label “The” as a determiner, “quick” as an adjective, “brown” as an adjective, “fox” as a noun, etc.

Named Entity Recognition (NER) identifies and classifies named entities, which are real-world objects like people, organizations, locations, dates, etc. It goes beyond grammatical role to identify meaningful, named objects. In the same sentence, an NER system should find no entities at all, because “fox” and “dog” are common nouns rather than names. In “Barack Obama visited London,” by contrast, NER would tag “Barack Obama” as PERSON and “London” as LOCATION, whereas POS tagging would simply label each of those words as a proper noun.

In essence, POS tagging is about grammar, while NER is about identifying real-world objects mentioned in the text. They often work together – the grammatical information from POS tagging can be a valuable feature for improved NER.

Q 18. How can you incorporate external knowledge sources into an NER system?

Incorporating external knowledge sources can substantially boost NER performance. These sources provide context and information that a model trained only on labeled text may lack.

Here’s how it works:

- Gazetteers: Pre-compiled lists of entities, which can be derived from resources such as GeoNames, DBpedia, or the now-discontinued Freebase. Matching words or phrases against these lists provides strong evidence for entity recognition. For instance, finding “London” in a gazetteer strongly suggests it’s a location.

- Knowledge graphs: Structured repositories of information linking entities and their properties (e.g., Wikidata, Google’s Knowledge Graph). They provide richer context and relationships, helping disambiguate and refine entity recognition. Knowing that “Apple” is linked to “technology” in a knowledge graph can help disambiguate it from the fruit.

- Word embeddings pre-trained on large corpora: Models like Word2Vec and GloVe can capture semantic relationships between words. These embeddings can be used as features in NER models, improving their ability to recognize entities in context.

- Ontologies: These formal representations of knowledge define classes, properties, and relationships between entities. They help organize information and provide a structured way to incorporate knowledge into the NER system.

The integration methods vary; some methods use gazetteer lookups as features, while others incorporate knowledge graph information directly into the model’s architecture. The choice depends on the specific knowledge source and the NER model used.
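As an example of the first integration method, a gazetteer can be matched against the text and the matches turned into an extra per-token feature for the model. This sketch uses longest-match-first lookup over lower-cased token tuples; the gazetteer entries and the function name are hypothetical. Longest match lets “New York Times” win over the shorter “New York.”

```python
def gazetteer_features(tokens, gazetteer, max_len=4):
    """Tag tokens with BIO-style gazetteer match features (longest match wins).

    `gazetteer` maps tuples of lower-cased tokens to an entity type. The
    output is an extra model feature, not the final prediction.
    """
    feats = ["O"] * len(tokens)
    lowered = [t.lower() for t in tokens]
    i = 0
    while i < len(tokens):
        # Try the longest candidate phrase starting at i first.
        for n in range(min(max_len, len(tokens) - i), 0, -1):
            etype = gazetteer.get(tuple(lowered[i:i + n]))
            if etype is not None:
                feats[i] = "B-" + etype
                for k in range(i + 1, i + n):
                    feats[k] = "I-" + etype
                i += n
                break
        else:  # no entry starts at this token
            i += 1
    return feats


GAZETTEER = {
    ("new", "york"): "LOC",
    ("new", "york", "times"): "ORG",
    ("london",): "LOC",
}

tokens = "The New York Times has an office in London .".split()
print(gazetteer_features(tokens, GAZETTEER))
# ['O', 'B-ORG', 'I-ORG', 'I-ORG', 'O', 'O', 'O', 'O', 'B-LOC', 'O']
```

Feeding matches as features, rather than copying them straight into the output, lets the model learn when to trust the gazetteer and when context should override it.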

Q 19. Discuss the application of NER in different domains (e.g., finance, healthcare, social media).

NER finds widespread application across various domains:

- Finance: Identifying company names, financial instruments (stocks, bonds), dates, and amounts in financial news and reports for risk assessment, algorithmic trading, and regulatory compliance.

- Healthcare: Extracting medical entities like diseases, medications, symptoms, and treatments from medical records for patient care, clinical research, and drug discovery. This is crucial for streamlining healthcare operations and improving patient outcomes.

- Social Media: Analyzing social media posts to identify people, locations, organizations, and sentiments. This is useful for market research, brand monitoring, and understanding public opinion. Imagine tracking mentions of a product or company across various social media platforms.

- Legal: Extracting key entities such as people, organizations, locations, dates, and legal terms from legal documents. This aids in legal research and document analysis, particularly in areas such as contract review and due diligence.

- News and Media: Identifying key people, places, organizations and events from news articles which allows for efficient information retrieval, topic modeling, and summarization. Think about building a news aggregator that automatically categorizes news based on the entities it extracts.

The specific NER models and entity types vary depending on the domain, but the core principle remains the same: identifying and classifying named entities to extract meaningful information from text.

Q 20. What are some common errors in NER systems, and how can they be addressed?

NER systems are prone to various errors:

- Boundary errors: Incorrectly identifying the beginning or end of an entity. For example, identifying only “Barack” instead of “Barack Obama”.

- Type errors: Assigning the wrong type to an entity. Misclassifying “Apple” as a fruit when it’s the company.

- Unknown entities: Failing to recognize entities not present in the training data. A novel company name might be missed.

- Nested entities (as discussed previously): Misidentifying or overlooking nested entities.

- Ambiguous entities (as discussed previously): Selecting the wrong interpretation of an ambiguous entity.
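When analysing a system’s output, it helps to sort each mismatch into one of the error types above. A minimal sketch, assuming gold and predicted entities are sets of (start, end exclusive, type) tuples; the category names and the MISC label in the example are illustrative.

```python
def classify_errors(gold, pred):
    """Label every mismatch between gold and predicted entity spans.

    Two spans overlap when they share at least one token.
    """
    def overlaps(a, b):
        return a[0] < b[1] and b[0] < a[1]

    errors = []
    for p in pred - gold:
        hits = [g for g in gold if overlaps(p, g)]
        if any(g[:2] == p[:2] for g in hits):
            errors.append(("type", p))            # right span, wrong type
        elif any(g[2] == p[2] for g in hits):
            errors.append(("boundary", p))        # right type, wrong span
        elif hits:
            errors.append(("boundary+type", p))   # both wrong
        else:
            errors.append(("spurious", p))        # no gold entity here
    for g in gold - pred:
        if not any(overlaps(p, g) for p in pred):
            errors.append(("missed", g))          # e.g. an unseen entity
    return sorted(errors)


# Tokens: Barack | Obama | praised | Apple | in | London
gold = {(0, 2, "PER"), (3, 4, "ORG"), (5, 6, "LOC")}
pred = {(0, 1, "PER"), (3, 4, "MISC"), (4, 5, "LOC")}
for kind, span in classify_errors(gold, pred):
    print(kind, span)
```

Counting these categories over a development set shows whether effort is better spent on boundary handling, type disambiguation, or coverage of unseen entities.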

Addressing these errors requires a multi-pronged approach:

- Improving training data: More data, better annotation, and handling of ambiguous cases.

- Feature engineering: Adding features that capture context (e.g., part-of-speech tags, gazetteer information) and semantic information.

- Model selection: Choosing a model that is suitable for the task and data (e.g., using a deep learning model for better performance).

- Model fine-tuning and hyperparameter optimization: Carefully tuning model parameters to improve performance on the specific task and data.

- Post-processing: Applying rules or algorithms to correct common errors or resolve ambiguities detected.

A combination of these strategies improves accuracy and robustness.

Q 21. Explain how transfer learning can be applied to NER.

Transfer learning is incredibly valuable in NER, especially when data for a specific domain is scarce. The idea is to leverage knowledge gained from a related, data-rich domain to improve performance on a data-poor domain.

Here’s how it applies:

- Pre-trained models: Start with a pre-trained NER model trained on a large, general-purpose corpus (e.g., Wikipedia, news articles). This model has already learned general features of language and entity recognition.

- Fine-tuning: Adapt the pre-trained model to the specific domain by fine-tuning it on a smaller dataset from that domain. This involves adjusting the model’s weights based on the new data. This is significantly more efficient than training a model from scratch.

- Feature extraction: Use a pre-trained model to extract features (embeddings, contextual representations) from the text. These features can then be used as input to a simpler model trained on the specific domain.

- Domain adaptation techniques: Employ techniques like domain adversarial training to minimize the differences between the source (pre-trained) and target (specific domain) domains.

For example, a model pre-trained on general news data can be fine-tuned on a smaller dataset of medical texts to create a specialized medical NER system. This significantly reduces the need for extensive medical text annotation, saving time and resources.

Q 22. Describe your experience with different deep learning architectures for NER (e.g., CNNs, RNNs, Transformers).

My experience with deep learning architectures for NER spans several popular models. Each has its strengths and weaknesses, making the choice dependent on the specific task and dataset.

- Recurrent Neural Networks (RNNs), particularly LSTMs and GRUs: RNNs excel at capturing sequential information crucial for NER. They process words one by one, maintaining a hidden state that reflects the context. However, they can struggle with long-range dependencies, meaning the model might forget information from earlier in the sentence. I’ve used LSTMs extensively in projects with moderate sentence lengths and achieved good results. For example, in a project involving medical text analysis, LSTMs effectively identified named entities like diseases and medications.

- Convolutional Neural Networks (CNNs): CNNs are adept at identifying local patterns within words and their surrounding context. They are often used in conjunction with RNNs to capture both local and sequential information. I’ve integrated CNNs to enhance the feature extraction capabilities of RNN-based NER models, resulting in improved performance on noisy datasets.

- Transformers (e.g., BERT, RoBERTa, XLNet): Transformers have revolutionized NER with their attention mechanisms. They can capture long-range dependencies more effectively than RNNs, leading to significant performance improvements. The pre-trained models offer a considerable advantage, allowing for fine-tuning on smaller datasets. In a recent project analyzing social media data, a fine-tuned BERT model significantly outperformed previous RNN-based approaches in identifying location and person entities, particularly in complex, informal language.

Choosing the right architecture often involves experimentation and benchmarking. Factors like dataset size, sentence length, and the complexity of the entities being recognized all influence the decision.

Q 23. How do you handle noisy or unstructured data in NER?

Handling noisy and unstructured data is a critical aspect of real-world NER. Strategies range from data preprocessing techniques to employing robust model architectures.

- Data Cleaning and Preprocessing: This crucial initial step involves removing irrelevant characters, handling inconsistencies, and standardizing formats. For example, removing HTML tags from web-scraped data or correcting spelling mistakes are standard practice.

- Regular Expressions and Heuristics: These can help identify and correct common errors or extract relevant information from unstructured text before feeding it to the NER model. For example, a regex could be used to identify and standardize date formats.

- Robust Model Architectures: Transformer models with subword tokenization tend to cope better with misspellings and unusual tokens than purely word-level models, which makes them well suited to unstructured data. Data augmentation (creating slightly altered versions of existing examples, for instance by injecting typos) can further improve robustness.

- Active Learning: This iterative approach selects the most informative samples from the unlabeled data, often those the current model is least certain about, for human annotation. Prioritizing these ambiguous cases makes annotation more efficient and can yield better model performance for a given labeling budget.

The best approach is often a combination of these methods. For instance, I’ve successfully used a combination of data cleaning, regular expressions, and a transformer-based model to extract entities from noisy web forum posts.
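For instance, a regular expression can rewrite one common date format into ISO 8601 before the text reaches the NER model. This sketch handles only English “Month D, YYYY” dates (with optional ordinal suffixes) and does not validate day ranges; the pattern and function name are hypothetical.

```python
import re

MONTHS = ["January", "February", "March", "April", "May", "June", "July",
          "August", "September", "October", "November", "December"]

# "Month D, YYYY" with an optional ordinal suffix, e.g. "July 4th, 1998".
DATE_RE = re.compile(
    r"\b(" + "|".join(MONTHS) + r")\s+(\d{1,2})(?:st|nd|rd|th)?,?\s+(\d{4})\b"
)


def normalize_dates(text):
    """Rewrite 'Month D, YYYY' dates as ISO 8601 'YYYY-MM-DD'."""
    def to_iso(match):
        month = MONTHS.index(match.group(1)) + 1
        day = int(match.group(2))
        return f"{match.group(3)}-{month:02d}-{day:02d}"
    return DATE_RE.sub(to_iso, text)


print(normalize_dates("The office opened on July 4th, 1998 and closed March 3, 2005."))
# The office opened on 1998-07-04 and closed 2005-03-03.
```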

Q 24. How do you evaluate the performance of different NER models and choose the best one?

Evaluating NER models involves using standard metrics and comparing performance across different models. The choice of the best model depends on the specific application and priorities.

- Precision, Recall, and F1-score: These are fundamental metrics that assess the accuracy of the NER model. Precision measures the proportion of correctly identified entities among all identified entities, recall measures the proportion of correctly identified entities among all actual entities, and the F1-score balances precision and recall.

- Confusion Matrix: This visual representation helps identify specific errors made by the model, such as confusing one type of entity with another. This detailed analysis guides further model improvements.

- Cross-Validation: This technique splits the dataset into multiple folds, trains on all but one fold, and evaluates on the held-out fold in turn. Averaging across folds gives a more reliable estimate of the model’s generalization performance and helps detect overfitting, although it does not by itself prevent it.

- Human Evaluation: While automatic metrics are essential, human evaluation is crucial, particularly for nuanced tasks. Human judges can assess the model’s output for subjective aspects and identify errors not captured by automatic metrics.

Ultimately, the ‘best’ model is the one that best balances performance on these metrics with considerations like computational cost and deployment feasibility. I often use a combination of these evaluation methods to make informed decisions.
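A token-level confusion matrix takes only a few lines with the standard library. This is a sketch with hypothetical function names: it strips B-/I- prefixes so that only entity types are compared, which means boundary errors are only partly visible; an entity-level matrix would need span matching instead.

```python
from collections import Counter


def confusion_matrix(gold_tags, pred_tags):
    """Count (gold, predicted) type pairs per token, ignoring B-/I- prefixes."""
    def strip(tag):
        return tag[2:] if tag[:2] in ("B-", "I-") else tag
    return Counter((strip(g), strip(p)) for g, p in zip(gold_tags, pred_tags))


def print_matrix(counts):
    """Print rows as gold labels and columns as predicted labels."""
    labels = sorted({g for g, _ in counts} | {p for _, p in counts})
    print("gold\\pred".ljust(10) + "".join(lab.rjust(6) for lab in labels))
    for g in labels:
        row = "".join(str(counts[(g, p)]).rjust(6) for p in labels)
        print(g.ljust(10) + row)


gold = ["B-ORG", "O", "B-LOC", "I-LOC", "O"]
pred = ["B-LOC", "O", "B-LOC", "O", "O"]
print_matrix(confusion_matrix(gold, pred))
```

Off-diagonal cells such as (ORG, LOC) point to type confusions worth investigating, while counts in the O column reveal entities, or parts of entities, that the model missed.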

Q 25. Describe your experience with deploying NER models in a production environment.

Deploying NER models to production requires careful planning and consideration of various factors. I have experience deploying models using both cloud-based platforms and on-premise solutions.

- Model Optimization: Reducing model size and improving inference speed are crucial for efficient deployment. Techniques like quantization and pruning can significantly reduce resource consumption, often without substantial performance loss. In one project, model pruning reduced the size by 50% with minimal impact on accuracy.

- API Development: Creating a RESTful API allows seamless integration with other systems and simplifies access to the NER model’s capabilities. I’ve used frameworks like Flask and FastAPI to create efficient and scalable APIs.

- Monitoring and Maintenance: Continuous monitoring of the model’s performance is critical. This involves tracking key metrics and addressing issues like data drift (changes in the distribution of the input text) and concept drift (changes in the relationship between inputs and the correct labels), both of which can degrade performance over time.

- Scalability: The deployment infrastructure must be capable of handling varying workloads. Cloud-based platforms offer inherent scalability, whereas on-premise solutions require careful capacity planning.
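The arithmetic behind the optimization techniques in the first bullet can be illustrated with plain Python lists standing in for weight tensors. This is a simplified sketch, assuming symmetric per-tensor int8 quantization and unstructured magnitude pruning; production toolchains (for example, PyTorch’s quantization support) work on real tensors and offer more refined schemes.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate float weights."""
    return [scale * v for v in q]


def magnitude_prune(weights, sparsity=0.5):
    """Zero out the `sparsity` fraction of weights with the smallest absolute value."""
    n_prune = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:n_prune]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


weights = [0.5, -1.27, 0.03, 0.8, -0.02, 0.11]
q, scale = quantize_int8(weights)
error = max(abs(w - r) for w, r in zip(weights, dequantize(q, scale)))
print(q, scale, error)           # rounding error is at most about scale / 2
print(magnitude_prune(weights))  # [0.5, -1.27, 0.0, 0.8, 0.0, 0.0]
```

Storing int8 values instead of float32 cuts weight storage roughly fourfold. Zeroed weights from pruning only shrink the model on disk or in memory when they are stored in a sparse or compressed format, or when whole structures such as attention heads are removed.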

Successful deployment demands a holistic approach encompassing model optimization, API development, continuous monitoring, and scalability. Choosing the right infrastructure depends on the specific needs of the project and the available resources.
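For the monitoring point above, one lightweight signal is whether the mix of entity types the model predicts has shifted. This sketch compares a reference window with a recent window using the population stability index (PSI); the window contents and the `ALERT_THRESHOLD` value are assumptions to calibrate on your own traffic.

```python
import math
from collections import Counter


def label_distribution(labels, categories, eps=1e-6):
    """Share of each category, floored at eps so the logarithm below stays finite."""
    counts = Counter(labels)
    total = sum(counts[c] for c in categories)
    return {c: max(counts[c] / total if total else 0.0, eps) for c in categories}


def population_stability_index(reference, current, categories):
    """PSI between two label samples; 0 means identical distributions."""
    ref = label_distribution(reference, categories)
    cur = label_distribution(current, categories)
    return sum((cur[c] - ref[c]) * math.log(cur[c] / ref[c]) for c in categories)


TYPES = ["PER", "ORG", "LOC"]
last_month = ["PER"] * 50 + ["ORG"] * 30 + ["LOC"] * 20  # predicted types, reference window
this_week = ["PER"] * 20 + ["ORG"] * 30 + ["LOC"] * 50   # predicted types, recent window
psi = population_stability_index(last_month, this_week, TYPES)
ALERT_THRESHOLD = 0.25  # assumed cut-off; calibrate on your own traffic
if psi > ALERT_THRESHOLD:
    print(f"Possible drift: PSI={psi:.2f}")
```

A stable label mix does not prove the model is still accurate, since concept drift can change which labels are correct without changing how often each is predicted, so periodic spot checks against freshly annotated samples are still needed.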

Q 26. What are some ethical considerations related to NER?

Ethical considerations in NER are paramount, particularly concerning bias, privacy, and fairness. It’s crucial to be mindful of potential harms and proactively mitigate them.

- Bias in Data and Models: NER models can inherit and amplify biases present in the training data. For example, a model trained on biased news articles might misrepresent certain groups. Addressing this requires careful data curation, bias detection techniques, and fairness-aware model training.

- Privacy Concerns: NER systems often process sensitive personal information. Protecting this data is crucial. This involves anonymization techniques and adherence to relevant privacy regulations like GDPR.

- Misinformation and Manipulation: NER can be used to extract information for malicious purposes, such as manipulating public opinion or spreading misinformation. This underscores the need for responsible development and deployment of NER systems.

- Transparency and Explainability: Understanding how a model arrives at its predictions is vital. Explainable AI (XAI) techniques can increase transparency and build trust.
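For the privacy point, a common anonymization step is to replace detected entities with placeholders before text is stored or shared. This minimal sketch assumes the NER model returns non-overlapping character spans; the function name and placeholder format are illustrative.

```python
def pseudonymize(text, entities, types_to_mask=("PERSON",)):
    """Replace selected entity spans with consistent placeholders.

    `entities` holds non-overlapping (start_char, end_char, label) tuples, such as
    an NER model's output. Returns the masked text and the surface-to-placeholder map.
    """
    mapping, counters, pieces, last = {}, {}, [], 0
    for start, end, label in sorted(entities):
        if label not in types_to_mask:
            continue
        surface = text[start:end]
        if surface not in mapping:  # the same name always gets the same placeholder
            counters[label] = counters.get(label, 0) + 1
            mapping[surface] = f"[{label}_{counters[label]}]"
        pieces.append(text[last:start])
        pieces.append(mapping[surface])
        last = end
    pieces.append(text[last:])
    return "".join(pieces), mapping


text = "Alice Smith emailed Bob in Paris. Alice Smith left."
entities = [(0, 11, "PERSON"), (20, 23, "PERSON"), (27, 32, "LOCATION"), (34, 45, "PERSON")]
masked, mapping = pseudonymize(text, entities)
print(masked)  # [PERSON_1] emailed [PERSON_2] in Paris. [PERSON_1] left.
```

Because `mapping` allows the original names to be restored, this is pseudonymization rather than anonymization, and under GDPR pseudonymised data still counts as personal data. Any name the NER model misses also passes through unmasked, so recall is often the more important metric for this use.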

Ethical NER necessitates a proactive approach, addressing biases, protecting privacy, preventing misuse, and promoting transparency throughout the entire lifecycle of the system.

Q 27. How would you approach building an NER system for a new language?

Building an NER system for a new language is challenging mainly because labeled data is often scarce. A robust approach leverages available resources and employs transfer learning techniques.

- Data Acquisition and Annotation: Gathering and annotating sufficient data is crucial, even if it’s a small dataset initially. Crowdsourcing platforms and linguistic experts can assist in this process.

- Transfer Learning: Pre-trained multilingual models such as multilingual BERT (mBERT) or XLM-RoBERTa can significantly reduce the need for labeled data in the new language. Fine-tuning these models on a smaller dataset in the target language typically yields much better results than training from scratch.

- Cross-lingual Embeddings: These embeddings map words from different languages into a shared vector space, so knowledge learned from high-resource languages can improve performance in low-resource languages. This is especially useful when the target language has little available data.

- Morphological Analysis: Understanding the morphology of the language (how words are formed) can help identify and extract entities more effectively. This is particularly useful in languages with complex morphology.
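As a small illustration of the morphology point, Turkish is agglutinative, and its standard orthography separates inflectional suffixes from proper nouns with an apostrophe (“Ankara’da” means “in Ankara”). This sketch strips such suffixes and applies Turkish-specific lowercasing before a gazetteer lookup. The gazetteer is a hypothetical toy; a full system would use a proper morphological analyzer, since common nouns and most other languages do not mark suffixes this conveniently.

```python
# Hypothetical, tiny gazetteer keyed by lowercased lemma; a real one would be much larger.
GAZETTEER = {"ankara": "LOC", "istanbul": "LOC", "atatürk": "PER"}
APOSTROPHES = ("'", "\u2019")  # straight and typographic apostrophes


def turkish_lower(s: str) -> str:
    """Lowercase with Turkish dotted/dotless i rules.

    Plain str.lower() is not locale-aware: it maps "İ" to "i" plus U+0307 and "I" to "i".
    """
    return s.replace("İ", "i").replace("I", "ı").lower()


def strip_proper_noun_suffix(token: str) -> str:
    """Drop the suffixes Turkish orthography attaches to proper nouns after an apostrophe."""
    for mark in APOSTROPHES:
        token = token.split(mark, 1)[0]
    return token


def gazetteer_tag(tokens):
    """Tag each token by looking up its normalized stem in the gazetteer."""
    return [(tok, GAZETTEER.get(turkish_lower(strip_proper_noun_suffix(tok)), "O"))
            for tok in tokens]


print(gazetteer_tag(["Ankara'da", "ve", "İstanbul\u2019dan", "Atatürk'ün"]))
```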

A successful approach typically involves a combination of these techniques. Starting with a multilingual model and fine-tuning it with limited data while incorporating morphological analysis is a practical strategy.

Q 28. How would you improve the performance of an existing NER system?

Improving an existing NER system often involves a combination of strategies focusing on data, model, and feature engineering.

- Data Augmentation: Generating synthetic training examples, for instance by replacing entity mentions with other entities of the same type, can boost model performance, particularly when dealing with imbalanced classes or limited data.

- Hyperparameter Tuning: Experimenting with different hyperparameters (learning rate, batch size, etc.) can significantly improve results. Techniques like grid search and Bayesian optimization can automate this process.

- Feature Engineering: Adding relevant features to the model can enhance its ability to identify entities. For example, incorporating part-of-speech tags or gazetteers (lists of known entities) can improve accuracy.

- Ensemble Methods: Combining predictions from multiple models can lead to improved overall performance. Techniques like voting or stacking can be used to aggregate the predictions.

- Addressing Class Imbalance: Techniques like oversampling the minority class or using cost-sensitive learning can improve performance when dealing with imbalanced datasets (where some entities are much rarer than others).
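One common form of the data augmentation mentioned above is mention replacement: swapping an annotated entity for another entity of the same type and rebuilding the BIO tags. This sketch assumes BIO-tagged token lists and a hypothetical `gazetteer` dictionary of replacement names per type.

```python
import random


def mention_replacement(tokens, tags, gazetteer, rng):
    """Swap each B-/I- entity mention for a random same-type name from `gazetteer`.

    `gazetteer` maps an entity type to a list of (possibly multi-word) names; types
    missing from it keep their original mention. BIO tags are rebuilt for the new length.
    """
    new_tokens, new_tags, i = [], [], 0
    while i < len(tokens):
        if tags[i].startswith("B-"):
            etype = tags[i][2:]
            j = i + 1
            while j < len(tokens) and tags[j] == f"I-{etype}":
                j += 1  # find the end of this mention
            names = gazetteer.get(etype)
            mention = rng.choice(names).split() if names else tokens[i:j]
            new_tokens += mention
            new_tags += [f"B-{etype}"] + [f"I-{etype}"] * (len(mention) - 1)
            i = j
        else:
            new_tokens.append(tokens[i])
            new_tags.append(tags[i])
            i += 1
    return new_tokens, new_tags


names = {"PER": ["Ada Lovelace", "Grace Hopper"], "LOC": ["Nairobi", "Buenos Aires"]}
tokens = ["Barack", "Obama", "was", "born", "in", "Honolulu"]
tags = ["B-PER", "I-PER", "O", "O", "O", "B-LOC"]
print(mention_replacement(tokens, tags, names, random.Random(13)))
```

Passing a seeded `random.Random` keeps augmented datasets reproducible. Replacement names should be plausible in context, since swapping in entities that never co-occur with the surrounding words can teach the model spurious patterns.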

Improving an existing system requires a systematic approach involving data analysis, model refinement, and careful evaluation to assess the impact of each change.
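The voting approach from the ensemble bullet can be sketched at the token level. This assumes every model emits a BIO tag sequence over the same tokenization; because per-token votes can produce an invalid sequence (an `I-` tag with no matching predecessor), the result is repaired afterwards. Stacking, the other technique named there, would instead train a meta-model on these predictions.

```python
from collections import Counter


def repair_bio(tags):
    """Turn an I-X that does not follow B-X or I-X into B-X so the sequence stays valid BIO."""
    fixed = []
    for tag in tags:
        prev = fixed[-1] if fixed else "O"
        if tag.startswith("I-") and prev[2:] != tag[2:]:
            tag = "B-" + tag[2:]
        fixed.append(tag)
    return fixed


def majority_vote(predictions):
    """Token-level hard voting over equal-length tag sequences from several models.

    Counter.most_common orders equal counts by first occurrence, so the earlier model wins a tie.
    """
    voted = [Counter(column).most_common(1)[0][0] for column in zip(*predictions)]
    return repair_bio(voted)


model_a = ["B-PER", "I-PER", "O", "B-LOC"]
model_b = ["B-PER", "O", "O", "B-LOC"]
model_c = ["O", "I-PER", "O", "B-ORG"]
print(majority_vote([model_a, model_b, model_c]))  # ['B-PER', 'I-PER', 'O', 'B-LOC']
```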

Key Topics to Learn for Named Entity Recognition Interview

- Fundamentals of NER: Understand the core concepts, including the definition of Named Entities, different entity types (person, location, organization, etc.), and the challenges in NER like ambiguity and variation.

- Rule-based NER: Explore the mechanics of rule-based systems, including pattern matching, gazetteers, and their limitations. Consider scenarios where this approach would be suitable.

- Statistical NER: Dive into probabilistic models like Hidden Markov Models (HMMs) and Conditional Random Fields (CRFs). Understand their strengths and weaknesses in handling complex linguistic phenomena.

- Deep Learning for NER: Familiarize yourself with neural network architectures like Recurrent Neural Networks (RNNs), LSTMs, and Transformers (BERT, RoBERTa) used for NER. Be ready to discuss their advantages and architectural differences.

- Evaluation Metrics: Master the common metrics used to evaluate NER models, such as precision, recall, F1-score, and their implications for model selection and improvement. Understand the importance of choosing appropriate metrics based on the task at hand.

- Data Preprocessing and Feature Engineering: Discuss the crucial role of data cleaning, normalization, and feature selection in enhancing NER model performance. Be prepared to describe various feature engineering techniques.

- Practical Applications: Be ready to discuss real-world applications of NER, such as information extraction, question answering, text summarization, and knowledge graph construction. Consider examples from your own experience or research.

- Addressing Challenges: Prepare to discuss common challenges in NER, such as handling out-of-vocabulary words, nested entities, and ambiguous naming conventions. Be ready to suggest potential solutions and mitigation strategies.

- Model Selection and Deployment: Understand the factors to consider when choosing the right NER model for a specific task, including computational resources, data size, and desired accuracy. Be familiar with model deployment considerations.
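To connect the statistical NER topic to something concrete, here is a minimal Viterbi decoder for a hidden Markov model tagger. All probabilities are invented toy values, the input is assumed to be lowercased, and the fixed `unk` probability for unseen words is a deliberately crude smoothing choice. A linear-chain CRF is decoded with the same dynamic-programming idea, using learned feature scores instead of HMM probabilities.

```python
import math


def viterbi(observations, states, start_p, trans_p, emit_p, unk=1e-6):
    """Most probable tag sequence under an HMM, computed in log space.

    `unk` is a crude probability for words a state never emitted in training.
    """
    def log(p):
        return math.log(p) if p > 0 else float("-inf")

    # best[t][s]: log-probability of the best path that ends in state s at step t
    best = [{s: log(start_p.get(s, 0)) + log(emit_p[s].get(observations[0], unk)) for s in states}]
    back = [{}]
    for t in range(1, len(observations)):
        best.append({})
        back.append({})
        for s in states:
            prev = max(states, key=lambda r: best[t - 1][r] + log(trans_p[r].get(s, 0)))
            best[t][s] = (best[t - 1][prev] + log(trans_p[prev].get(s, 0))
                          + log(emit_p[s].get(observations[t], unk)))
            back[t][s] = prev
    # Follow back-pointers from the best final state.
    path = [max(states, key=lambda s: best[-1][s])]
    for t in range(len(observations) - 1, 0, -1):
        path.append(back[t][path[-1]])
    return path[::-1]


STATES = ["O", "B-PER", "I-PER"]
START = {"O": 0.6, "B-PER": 0.4}
TRANS = {
    "O": {"O": 0.7, "B-PER": 0.3},
    "B-PER": {"I-PER": 0.6, "O": 0.4},
    "I-PER": {"O": 0.8, "I-PER": 0.2},
}
EMIT = {
    "O": {"was": 0.4, "born": 0.4, "in": 0.2},
    "B-PER": {"barack": 0.5, "ada": 0.5},
    "I-PER": {"obama": 0.5, "lovelace": 0.5},
}
print(viterbi(["barack", "obama", "was", "born"], STATES, START, TRANS, EMIT))
# ['B-PER', 'I-PER', 'O', 'O']
```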

Next Steps

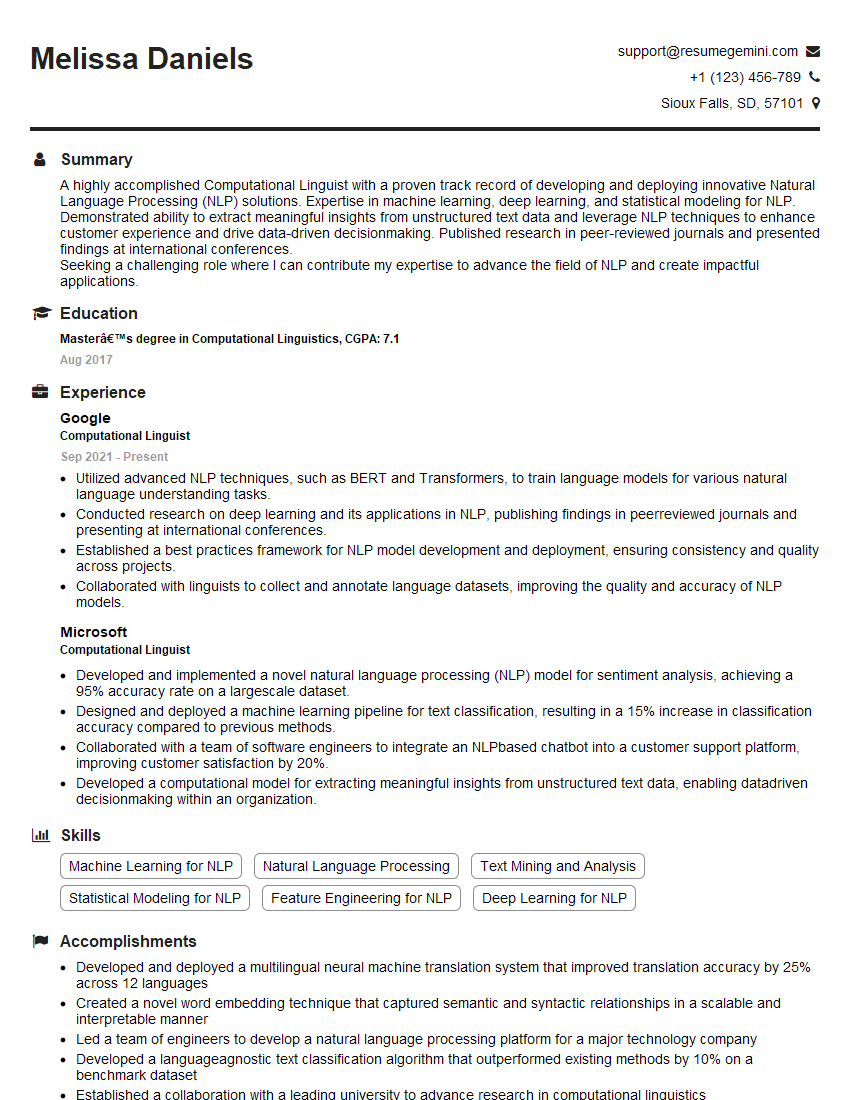

Mastering Named Entity Recognition opens doors to exciting careers in various fields, including natural language processing, data science, and artificial intelligence. A strong understanding of NER significantly enhances your marketability and positions you for success in competitive job markets. To maximize your job prospects, create a compelling and ATS-friendly resume that highlights your skills and experience. ResumeGemini is a trusted resource to help you craft a professional resume that showcases your expertise. We provide examples of resumes tailored to Named Entity Recognition to help you build a winning application.
