Every successful interview starts with knowing what to expect. In this blog, we’ll take you through the top interview questions on proficiency in Microsoft Office Suite, statistical software, and PV (pharmacovigilance) databases, breaking them down with expert tips to help you deliver impactful answers. Step into your next interview fully prepared and ready to succeed.

Questions Asked in Proficiency in Microsoft Office Suite, Statistical Software, and PV Databases Interview

Q 1. Explain your experience with Microsoft Excel’s pivot tables and their applications in data analysis.

Pivot tables in Microsoft Excel are powerful tools for summarizing and analyzing large datasets. Think of them as interactive summaries that allow you to quickly drill down into your data to uncover trends and patterns. They dynamically rearrange and aggregate data based on your selections, providing a concise and insightful view.

For example, imagine you have a sales dataset with information on sales region, product category, and sales figures. Using a pivot table, you can easily summarize total sales by region, compare sales across different product categories within a specific region, or even analyze sales trends over time. You can easily add, remove, or rearrange fields to explore different perspectives of your data. This allows for rapid exploration and identification of key performance indicators (KPIs) without writing complex formulas.

In a professional setting, I’ve used pivot tables extensively for sales performance analysis, identifying top-performing products and regions, and pinpointing areas needing improvement. I’ve also used them for budget analysis, identifying cost overruns and areas for potential savings. The flexibility of pivot tables allows for quick adaptation to changing analytical needs.
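
To make the pivot-table idea concrete outside Excel, here is a minimal Python sketch of the same summarization: total sales grouped by region and product category. The records and the `pivot_sum` helper are hypothetical, invented for illustration; Excel performs this aggregation for you when you drag fields into the Rows, Columns, and Values areas.

```python
from collections import defaultdict

# Hypothetical sales records: (region, product category, sales amount).
sales = [
    ("North", "Hardware", 1200.0),
    ("North", "Software", 800.0),
    ("South", "Hardware", 500.0),
    ("South", "Software", 1500.0),
    ("North", "Hardware", 300.0),
]


def pivot_sum(records):
    """Sum sales with regions as rows and categories as columns."""
    table = defaultdict(lambda: defaultdict(float))
    for region, category, amount in records:
        table[region][category] += amount
    # Convert to plain dicts so the result prints and compares cleanly.
    return {region: dict(columns) for region, columns in table.items()}


pivot = pivot_sum(sales)

# Row totals, comparable to a pivot table's "Grand Total" column.
region_totals = {region: sum(columns.values()) for region, columns in pivot.items()}
```

Swapping the roles of `region` and `category` in the loop corresponds to rearranging fields in a pivot table.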

Q 2. Describe your proficiency in using VBA (Visual Basic for Applications) in Excel.

My VBA proficiency extends beyond basic macro recording. I can write efficient and robust VBA code to automate repetitive tasks, create custom functions, and interact with other applications. This allows me to significantly increase my productivity and handle complex data manipulations that would be tedious or impossible to do manually.

For instance, I’ve developed VBA code to automatically generate reports, cleanse and format large datasets, and integrate data from various sources. I often use loops, conditional statements, and array processing techniques to streamline my code. I also prioritize error handling and robust code design to ensure reliability and maintainability.

```vba
Sub ExampleMacro()
    ' Declare variables
    Dim ws As Worksheet
    Set ws = ThisWorkbook.Sheets("Sheet1")

    ' Perform operations on the worksheet
    ws.Range("A1").Value = "Hello, VBA!"
End Sub
```

This simple example demonstrates a basic VBA macro. In reality, I work with more complex projects involving dynamic data processing and interactive user interfaces.

Q 3. How familiar are you with different statistical software packages (e.g., R, SPSS, SAS, Python)?

I’m proficient in several statistical software packages, including R, SPSS, and Python (with libraries like pandas, NumPy, and Scikit-learn). My experience encompasses data manipulation, statistical modeling, and visualization in each of these platforms. The choice of software often depends on the specific task and dataset. For instance, R excels in statistical modeling and data visualization, while Python offers a broader range of functionalities including machine learning capabilities. SPSS provides a user-friendly interface ideal for users less familiar with coding.

In my previous roles, I utilized R for advanced statistical modeling and creating publication-ready visualizations, Python for data wrangling and machine learning projects, and SPSS for quick data exploration and straightforward statistical analyses. My ability to adapt to different software packages ensures I can tackle any statistical challenge efficiently and effectively.

Q 4. Explain your experience with data cleaning and preprocessing techniques in statistical software.

Data cleaning and preprocessing are crucial steps in any data analysis workflow, ensuring the reliability and accuracy of the results. My experience involves a range of techniques, including handling missing values, outlier detection, data transformation, and feature engineering. In R, for example, I frequently use packages like dplyr for data manipulation and ggplot2 for visualization.

In a recent project, I dealt with a dataset containing numerous missing values. I investigated the reasons behind the missingness (missing completely at random, missing at random, or missing not at random) and applied appropriate imputation techniques, such as mean/median imputation for numerical variables and mode imputation or creating a separate category for categorical variables, depending on the nature of the data and the amount of missing information. I also used data visualization to identify and handle outliers.

Q 5. How do you handle missing data in your datasets?

Handling missing data is a critical aspect of data analysis. The approach depends on the nature of the missing data (missing completely at random, missing at random, or missing not at random) and the amount of missing data. I typically employ a combination of techniques.

- Deletion: Removing rows or columns with missing data is a simple approach, but it discards information and can bias the results when the data is not missing completely at random.

- Imputation: Replacing missing values with estimated values using techniques like mean/median imputation, mode imputation, k-nearest neighbors, or multiple imputation. The choice depends on the data type and the nature of the missing data.

- Model-based approaches: Using statistical models to predict missing values based on available data. This approach is often more accurate but requires more expertise.

The best approach depends on the specific context. For instance, if the amount of missing data is minimal and its pattern is random, deletion might be suitable. However, for larger amounts of missing data or non-random patterns, imputation or model-based methods are usually preferred.
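
As a rough illustration of the deletion and simple imputation options above, the following Python sketch works on a single hypothetical column of ages in which `None` marks a missing value. The data and the helper names `drop_missing` and `impute` are assumptions made for the example; k-nearest neighbors and multiple imputation need considerably more machinery and are not shown.

```python
import statistics

# Hypothetical column of ages; None marks a missing value.
ages = [34, None, 45, 29, None, 52, 41]


def drop_missing(values):
    """Deletion: keep only the observed values."""
    return [v for v in values if v is not None]


def impute(values, strategy="median"):
    """Imputation: replace each missing value with the observed mean or median."""
    observed = drop_missing(values)
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    if strategy == "mean":
        fill = statistics.mean(observed)
    elif strategy == "median":
        fill = statistics.median(observed)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return [fill if v is None else v for v in values]


# Share of missing values, useful when deciding between deletion and imputation.
missing_share = ages.count(None) / len(ages)
complete_cases = drop_missing(ages)
median_imputed = impute(ages, "median")
mean_imputed = impute(ages, "mean")
```

Median imputation is less sensitive to extreme values than mean imputation, which is one reason to compare both before settling on a strategy.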

Q 6. Describe your understanding of various statistical tests (e.g., t-test, ANOVA, chi-square test).

I have a solid understanding of various statistical tests. These tests allow us to draw inferences about populations based on sample data. Here are some examples:

- T-test: Compares the means of two groups to determine if there’s a statistically significant difference. For example, comparing the average height of men and women.

- ANOVA (Analysis of Variance): Compares the means of three or more groups. For example, comparing the yield of a crop under different fertilization treatments.

- Chi-square test: Tests the independence of two categorical variables. For example, determining if there’s an association between smoking and lung cancer.

The choice of test depends on the type of data and the research question. It’s crucial to correctly interpret the p-value and effect size to draw valid conclusions.
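
To show what a t-test computes, here is a small Python sketch of the test statistic for two independent groups. It uses Welch's variant (which does not assume equal variances), a choice made for this example rather than something the answer above prescribes. The height samples are hypothetical, and turning the statistic into a p-value requires the t distribution, which statistical software such as R or SPSS provides.

```python
import math
import statistics


def welch_t(sample_a, sample_b):
    """Return Welch's t statistic and its approximate degrees of freedom."""
    mean_a, mean_b = statistics.mean(sample_a), statistics.mean(sample_b)
    # statistics.variance is the sample variance (divides by n - 1).
    var_a, var_b = statistics.variance(sample_a), statistics.variance(sample_b)
    n_a, n_b = len(sample_a), len(sample_b)

    # Squared standard error contributed by each group.
    se2_a, se2_b = var_a / n_a, var_b / n_b
    t_stat = (mean_a - mean_b) / math.sqrt(se2_a + se2_b)

    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1))
    return t_stat, df


# Hypothetical heights in centimeters for two groups.
group_a = [178, 182, 175, 180, 185]
group_b = [165, 170, 162, 168, 160]

t_stat, df = welch_t(group_a, group_b)
```

A large absolute t statistic relative to the degrees of freedom points to a difference in means that is unlikely under the null hypothesis, but the effect size still needs to be reported alongside it.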

Q 7. How would you interpret the results of a regression analysis?

Interpreting regression analysis involves understanding the relationships between a dependent variable and one or more independent variables. The output typically includes:

- Coefficients: Indicate the change in the dependent variable associated with a one-unit change in an independent variable, holding other variables constant.

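- R-squared: Represents the proportion of variance in the dependent variable explained by the independent variables. A higher R-squared means the model accounts for more of that variance, but it never decreases when predictors are added, so adjusted R-squared is the safer basis for comparing models with different numbers of predictors.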

- P-values: Indicate the statistical significance of the coefficients. A low p-value suggests that the relationship between the independent and dependent variable is statistically significant.

- Confidence intervals: Provide a range of plausible values for the coefficients.

For example, in a regression model predicting house prices (dependent variable) based on size and location (independent variables), a positive coefficient for size indicates that larger houses tend to have higher prices, holding location constant. The R-squared would tell us how well the model explains variations in house prices based on these factors. P-values would indicate the significance of each factor’s contribution to the model.

It is important to consider the context and limitations of the model when interpreting the results: correlation does not imply causation, and the model’s predictive power should be assessed using appropriate metrics such as Mean Absolute Error or Root Mean Squared Error.
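
The quantities discussed above can be computed by hand for the simplest case. The sketch below fits a one-predictor least-squares regression of house price on size and reports the coefficient, R-squared, RMSE, and MAE. The data and function names are hypothetical; a real analysis would use R, Python, or SPSS routines that also report p-values and confidence intervals.

```python
import math


def fit_simple_regression(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def evaluate(x, y, slope, intercept):
    """Return R-squared, RMSE, and MAE for the fitted line."""
    n = len(y)
    mean_y = sum(y) / n
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    ss_res = sum(r ** 2 for r in residuals)
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return {
        "r_squared": 1 - ss_res / ss_tot,
        "rmse": math.sqrt(ss_res / n),
        "mae": sum(abs(r) for r in residuals) / n,
    }


# Hypothetical data: size in square meters, price in thousands.
sizes = [50, 70, 90, 110, 130]
prices = [150, 200, 260, 300, 360]

slope, intercept = fit_simple_regression(sizes, prices)
metrics = evaluate(sizes, prices, slope, intercept)
```

Here the slope reads as the expected change in price, in thousands, for each additional square meter. These metrics are computed on the same data used for fitting, so they describe fit rather than out-of-sample predictive power.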

Q 8. What are your preferred methods for data visualization?

My preferred methods for data visualization depend heavily on the type of data and the insights I’m aiming to communicate. For exploring relationships between variables, I frequently use scatter plots and correlation matrices. When showcasing the distribution of a single variable, histograms and box plots are invaluable. For visualizing trends over time, line charts are essential. Finally, when dealing with categorical data and proportions, bar charts and pie charts are effective, though I’m mindful of avoiding pie charts for too many categories as they become difficult to interpret.

In terms of tools, I’m highly proficient with Microsoft Excel’s charting capabilities, which are excellent for quick visualizations and simpler datasets. For more complex datasets and sophisticated visualizations, I often leverage tools like Tableau or Power BI, which allow for interactive dashboards and the creation of more dynamic representations. For instance, in a recent project analyzing sales data, I used a Tableau dashboard to show regional sales performance over time, incorporating interactive filtering to allow users to drill down into specific product categories and time periods. This allowed for more nuanced analysis and insightful exploration of the data.

Q 9. Explain your experience working with PV databases (e.g., relational databases, NoSQL databases).

My experience with PV databases (Pharmacovigilance databases) spans various types, including relational databases like PostgreSQL and SQL Server, and NoSQL databases such as MongoDB. In relational databases, I’ve worked extensively with structured data, implementing robust schema designs for efficient data storage and retrieval. This includes experience with designing tables, defining relationships between tables (one-to-many, many-to-many), and implementing constraints to ensure data integrity. For example, I designed a relational database schema for tracking adverse events, incorporating fields for patient demographics, medication details, event descriptions, and outcomes.

With NoSQL databases, I’ve handled semi-structured and unstructured data, particularly text-based information from case reports. The flexibility of NoSQL is especially beneficial when data structures evolve rapidly or when large volumes of unstructured data are involved. For instance, I’ve used MongoDB to store and analyze free-text descriptions of adverse events, using techniques like natural language processing to identify patterns and key terms.

Q 10. Describe your experience with SQL queries and database management systems.

I’m highly proficient in SQL, using it regularly to query, manipulate, and manage data in various relational database systems. My SQL skills encompass a wide range of functionalities, from basic SELECT statements to advanced techniques like joins, subqueries, window functions, and stored procedures. I’m comfortable optimizing queries for performance and handling large datasets efficiently.

For example, I’ve frequently used JOIN statements to combine data from multiple tables to generate comprehensive reports. A typical example might be joining a patient demographics table with an adverse event table to analyze the incidence of a specific adverse event among different age groups:

```sql
SELECT p.age_group, COUNT(*) AS event_count
FROM patients p
JOIN adverse_events ae ON p.patient_id = ae.patient_id
WHERE ae.event_name = 'Specific Adverse Event'
GROUP BY p.age_group;
```
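
The JOIN query above can be tried end to end with Python's built-in `sqlite3` module. This is only a sketch: the table definitions and sample rows are hypothetical, SQLite stands in for whichever database system is actually used, and the event name is passed as a bound parameter rather than pasted into the SQL string.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical schema and sample rows, made up for this example.
conn.executescript("""
CREATE TABLE patients (
    patient_id INTEGER PRIMARY KEY,
    age_group  TEXT NOT NULL
);
CREATE TABLE adverse_events (
    event_id   INTEGER PRIMARY KEY,
    patient_id INTEGER NOT NULL REFERENCES patients(patient_id),
    event_name TEXT NOT NULL
);
INSERT INTO patients VALUES (1, '18-40'), (2, '41-65'), (3, '41-65'), (4, '65+');
INSERT INTO adverse_events (patient_id, event_name) VALUES
    (1, 'Headache'), (2, 'Headache'), (3, 'Headache'),
    (3, 'Nausea'), (4, 'Nausea');
""")

query = """
SELECT p.age_group, COUNT(*) AS event_count
FROM patients p
JOIN adverse_events ae ON p.patient_id = ae.patient_id
WHERE ae.event_name = ?
GROUP BY p.age_group
ORDER BY p.age_group;
"""

# The ? placeholder keeps the event name out of the SQL text itself.
rows = conn.execute(query, ("Headache",)).fetchall()
conn.close()
```

With this sample data, `rows` holds one `(age_group, event_count)` tuple per age group that reported the event.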

Beyond querying, I have experience in database administration tasks including user management, schema design, data backup and recovery, and performance tuning. My understanding of database management systems extends to both cloud-based solutions (like AWS RDS or Azure SQL Database) and on-premise systems.

Q 11. How do you ensure data integrity and accuracy in a PV database?

Ensuring data integrity and accuracy in a PV database is paramount. My approach involves a multi-layered strategy. Firstly, I implement strict data validation rules at the point of data entry, ensuring that data conforms to predefined formats and constraints. This often involves using data validation tools within the database management system or implementing custom validation logic within applications that interact with the database. For example, I might enforce constraints to ensure that dates are in a valid format and that numerical values fall within a reasonable range.

Secondly, I use data quality checks and audits regularly to identify and correct inconsistencies or errors. This might involve running SQL queries to identify missing values, duplicates, or outliers. Thirdly, I leverage version control and tracking mechanisms to maintain a history of changes made to the database, allowing for rollback if necessary and providing an audit trail for compliance purposes. Finally, regular data backups are crucial to prevent data loss in case of unforeseen circumstances. I usually implement a robust backup and recovery strategy that ensures data availability and business continuity.
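
The first layer described above, validation at the point of entry, can be sketched with database constraints. The example uses Python's built-in `sqlite3` module with a hypothetical `case_reports` table; the column names, the 0 to 120 age range, and the date pattern are assumptions for illustration. The `GLOB` pattern only checks the `YYYY-MM-DD` shape, not whether the date actually exists.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical table: constraints reject bad rows before they are stored.
conn.execute("""
CREATE TABLE case_reports (
    case_id     TEXT PRIMARY KEY,
    patient_age INTEGER CHECK (patient_age BETWEEN 0 AND 120),
    onset_date  TEXT NOT NULL
        CHECK (onset_date GLOB '[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]')
)
""")


def try_insert(row):
    """Insert one row; return False if a constraint rejects it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute("INSERT INTO case_reports VALUES (?, ?, ?)", row)
        return True
    except sqlite3.IntegrityError:
        return False


results = [
    try_insert(("C-001", 54, "2024-03-15")),   # valid row
    try_insert(("C-002", 430, "2024-03-16")),  # age outside the allowed range
    try_insert(("C-003", 61, "15/03/2024")),   # date in the wrong format
    try_insert(("C-001", 47, "2024-03-17")),   # duplicate case_id
]

stored = conn.execute("SELECT COUNT(*) FROM case_reports").fetchone()[0]
conn.close()
```

Putting the rules in the schema means every application that writes to the table is held to the same checks, which complements rather than replaces periodic audits.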

Q 12. What are the differences between various database types (e.g., relational vs. NoSQL)?

Relational databases (RDBMS) and NoSQL databases represent fundamentally different approaches to data management. RDBMS, such as MySQL or PostgreSQL, organize data into structured tables with predefined schemas. They excel at handling structured data with well-defined relationships between different entities. They enforce data integrity through constraints and provide ACID properties (Atomicity, Consistency, Isolation, Durability) guaranteeing reliable transactions. This is beneficial for data that adheres to a rigid structure, like financial transactions or customer information.

NoSQL databases, on the other hand, offer more flexibility in handling unstructured or semi-structured data. They don’t require a predefined schema, making them adaptable to evolving data structures. Examples include MongoDB (document database) and Cassandra (wide-column store). NoSQL databases are particularly useful for handling large volumes of data with variable structures, such as social media posts, sensor data, or large-scale text analysis. The choice between relational and NoSQL depends heavily on the specific requirements of the application and the nature of the data being managed.

Q 13. Describe your experience with data mining and extracting insights from large datasets.

I have extensive experience in data mining, employing various techniques to extract meaningful insights from large datasets. My approach often involves a combination of statistical methods and machine learning algorithms. This includes exploratory data analysis (EDA) to understand the data’s characteristics, feature engineering to create new variables that improve model performance, and model selection to choose the most appropriate algorithms for the task at hand.

For instance, in a project involving the analysis of adverse event reports, I utilized natural language processing (NLP) techniques to extract key terms and concepts from free-text descriptions. I then applied machine learning algorithms such as topic modeling to identify recurring themes and patterns in adverse events, helping to prioritize investigations and identify potential safety signals. In other projects, I have used clustering algorithms to group similar patients based on their characteristics and adverse event profiles, allowing for more targeted analysis and potentially the identification of subgroups at higher risk. The visualization of these findings is critical for effective communication.

Q 14. How proficient are you in creating reports and dashboards using data visualization tools?

I’m highly proficient in creating reports and dashboards using various data visualization tools. My skills extend from simple Excel reports to interactive dashboards built using Tableau, Power BI, or similar business intelligence tools. I focus on creating clear, concise, and visually appealing visualizations that effectively communicate key insights to different audiences. My approach emphasizes data storytelling, presenting the data in a way that is easily understandable and actionable.

For example, in a recent project, I created a Power BI dashboard that tracked key performance indicators (KPIs) related to the efficiency of the pharmacovigilance process. This dashboard displayed metrics such as case processing time, report completeness, and the number of serious adverse events, allowing stakeholders to monitor the system’s performance and identify areas for improvement. The dashboard incorporated interactive elements, such as drill-down capabilities and customizable filters, to allow users to explore the data at different levels of granularity.

Q 15. Explain your experience in using Microsoft PowerPoint for data presentation.

My experience with Microsoft PowerPoint for data presentation extends beyond simply creating slides; I leverage its features to craft compelling narratives around data. I start by understanding the audience and the key message. Then, I strategically choose chart types (bar charts for comparisons, line charts for trends, scatter plots for correlations) to effectively visualize the data. I avoid chart junk and ensure clear labeling. For instance, in a presentation on sales performance, instead of using a dense table, I’d employ a visually appealing bar chart showing year-over-year growth, highlighting key trends with annotations and callouts. Furthermore, I utilize PowerPoint’s animation features subtly to guide the audience’s attention and improve information retention. Finally, I always ensure the presentation’s design is consistent, professional, and aligns with the overall branding.

For example, in a recent presentation to senior management on marketing campaign effectiveness, I used interactive charts embedded within PowerPoint, allowing for real-time data exploration and drill-down capabilities. This interactive approach significantly improved audience engagement and understanding compared to a static presentation.

Q 16. How do you communicate complex data insights to a non-technical audience?

Communicating complex data insights to a non-technical audience requires translating technical jargon into plain language and focusing on the story the data tells. Instead of using statistical terms like ‘regression analysis,’ I’d explain the findings in a way that everyone can grasp, for example, ‘our analysis shows a strong relationship between advertising spend and sales’. I rely heavily on visual aids such as charts and graphs, keeping them simple and easy to understand. Analogies and real-world examples are incredibly helpful in bridging the gap between technical details and audience comprehension. For example, explaining standard deviation through how much people’s heights in a room vary around the average helps contextualize what the number means.

A powerful technique is to focus on the ‘so what?’ factor – clearly articulating the implications and recommendations based on the data. This ensures the audience understands the relevance and impact of the findings, making the data meaningful and actionable.

Q 17. Describe a challenging data analysis project you have undertaken and how you overcame obstacles.

One challenging project involved analyzing customer churn for a telecommunications company. The initial dataset was incredibly messy, containing inconsistencies, missing values, and duplicate entries. The biggest obstacle was the lack of a clear definition of ‘churn’ across different departments, leading to discrepancies in the data. To overcome this, I first implemented rigorous data cleaning procedures using Python and pandas, handling missing data through imputation techniques and resolving inconsistencies by collaborating with relevant departments to define standardized churn criteria. I then explored various predictive modeling techniques, such as logistic regression and survival analysis, to identify key predictors of churn. Evaluating multiple models with metrics like AUC (area under the ROC curve) helped me select the best-performing model, which was then deployed to improve customer retention strategies. This project highlighted the importance of clear communication and collaboration across teams in data analysis projects.

Q 18. What is your experience with data validation and quality control procedures?

Data validation and quality control are paramount to any data analysis project. My approach involves a multi-step process. First, I perform data profiling to understand the data’s structure and identify potential issues like missing values, outliers, and inconsistencies. Then, I implement data validation rules using tools such as SQL constraints and Python libraries like pandas. These rules verify data integrity, ensuring it conforms to predefined standards. For example, I might check that date formats are consistent, age values are realistic, and numerical data falls within expected ranges. Throughout the process, I document all validation checks and their results, ensuring traceability and accountability. Data quality reporting is also a key component, allowing me to track progress and identify areas for improvement. For large datasets, I leverage automated validation tools to improve efficiency.
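
The rule-based checks described above might look like the following in plain Python. The records, field names, and thresholds are hypothetical, and in practice the same rules would often be expressed with pandas or SQL constraints. Note that `strptime` only confirms the value parses as a year-month-day date; it does not enforce zero padding.

```python
from collections import Counter
from datetime import datetime

# Hypothetical records with a few deliberate problems.
records = [
    {"id": "R1", "age": 34, "report_date": "2024-01-10"},
    {"id": "R2", "age": 150, "report_date": "2024-01-11"},
    {"id": "R3", "age": None, "report_date": "11/01/2024"},
    {"id": "R1", "age": 34, "report_date": "2024-01-10"},
]


def validate_record(record):
    """Return a list of human-readable problems found in one record."""
    problems = []
    age = record.get("age")
    if not isinstance(age, int) or not 0 <= age <= 120:
        problems.append("age missing or outside 0-120")
    try:
        datetime.strptime(record.get("report_date") or "", "%Y-%m-%d")
    except ValueError:
        problems.append("report_date is not YYYY-MM-DD")
    return problems


# Quality report: row index -> problems, listing only rows that failed a rule.
issues = {}
for index, record in enumerate(records):
    problems = validate_record(record)
    if problems:
        issues[index] = problems

# Duplicate detection on the identifier column.
id_counts = Counter(record["id"] for record in records)
duplicate_ids = sorted(rid for rid, count in id_counts.items() if count > 1)
```

Keeping `issues` and `duplicate_ids` as data rather than printing them makes it straightforward to log the results of each validation run for traceability.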

Q 19. How would you approach building a predictive model using statistical software?

Building a predictive model using statistical software involves a structured approach. I begin by clearly defining the problem and identifying the target variable. Then, I perform exploratory data analysis (EDA) to understand the data’s characteristics and identify potential relationships between variables. This involves using techniques like data visualization and summary statistics. Based on the EDA, I select appropriate features (independent variables) and choose a suitable statistical model such as linear regression, logistic regression, or decision trees. The model is then trained on a portion of the data, and I fine-tune it by adjusting parameters against a validation set or with cross-validation, using metrics relevant to the problem (e.g., accuracy, precision, recall for classification; RMSE, MAE for regression). Finally, I evaluate the tuned model once on a separate test dataset that played no part in training or tuning. Software like R or Python with libraries like scikit-learn is invaluable for this process.

For example, if predicting customer purchase behavior, I might use logistic regression to model the probability of a purchase based on variables like demographics, purchase history, and browsing behavior.
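
Two pieces of this workflow are easy to sketch without any modeling library: holding out a test set and computing classification metrics. The code below is an illustration only. The helper names, the customer identifiers, and the actual and predicted labels are hypothetical, and the predictions are written out by hand rather than produced by a fitted logistic regression.

```python
import random


def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of the rows and hold out a fraction for testing."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


def classification_metrics(actual, predicted):
    """Accuracy, precision, and recall for binary labels (1 = purchase)."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == 1 and p == 1)
    fp = sum(1 for a, p in pairs if a == 0 and p == 1)
    fn = sum(1 for a, p in pairs if a == 1 and p == 0)
    tn = sum(1 for a, p in pairs if a == 0 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Hypothetical customer identifiers split into training and test sets.
customers = list(range(8))
train, test = train_test_split(customers)

# Hypothetical labels: what customers did versus what a model predicted.
actual = [1, 0, 1, 1, 0, 0, 1, 0]
predicted = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(actual, predicted)
```

Fixing the `seed` makes the split reproducible, which matters when several models are compared on the same held-out data.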

Q 20. Explain your understanding of different data modeling techniques.

Data modeling techniques are crucial for organizing and representing data effectively. I’m familiar with various techniques, including relational models (using SQL databases), dimensional models (data warehouses), and NoSQL models (for unstructured data). Relational models organize data into tables with rows and columns, linked through relationships. Dimensional models organize data around business dimensions (e.g., time, product, customer) to support analytical queries efficiently. NoSQL databases offer flexibility for handling diverse data types, including JSON or XML. The choice of technique depends on the specific needs of the project. For example, a relational model is well-suited for transactional data, while a dimensional model is preferred for analytical reporting and business intelligence.

Q 21. What are some common issues encountered when working with large datasets?

Working with large datasets presents several challenges. One major issue is storage and processing limitations. Handling terabytes or petabytes of data requires specialized infrastructure like cloud computing platforms or distributed processing frameworks (like Hadoop or Spark). Another challenge is the increased computational time required for data cleaning, transformation, and model training. Optimizing algorithms and utilizing parallel processing become crucial for efficiency. Moreover, large datasets often come with increased complexity in data quality issues. Robust data validation and cleaning procedures are necessary to handle inconsistencies, missing values, and outliers. Data visualization also becomes more complex; specialized techniques and tools are needed to effectively represent insights from large datasets. Finally, managing the memory footprint of analyses is critical to avoid crashes or excessively long run times.

Q 22. How do you prioritize tasks when managing multiple data analysis projects?

Prioritizing tasks in data analysis projects requires a structured approach. I typically use a combination of methods, starting with a clear understanding of project goals and deadlines. I then employ techniques like the Eisenhower Matrix (urgent/important), MoSCoW method (Must have, Should have, Could have, Won’t have), or simply ranking tasks by their impact on the overall project objectives. For example, if I’m working on multiple projects involving A/B testing website designs, market research analysis, and customer segmentation, I might prioritize the A/B testing based on its immediate impact on revenue, followed by customer segmentation as it’s crucial for long-term strategy, and finally, market research which may have a longer timeline for impact.

Using project management tools like Microsoft Project or Asana helps immensely in visualizing dependencies and tracking progress. Regularly reviewing and adjusting priorities based on changing circumstances is key; this often involves close communication with stakeholders to ensure alignment and address any unexpected issues.

Q 23. How do you ensure the security and privacy of sensitive data?

Data security and privacy are paramount. My approach involves a multi-layered strategy. First, I adhere strictly to company policies and any relevant data protection regulations (like GDPR or CCPA). This includes using strong passwords, enabling two-factor authentication, and understanding access control mechanisms. Secondly, I ensure data is encrypted both in transit and at rest, utilizing tools like encryption software and secure cloud storage services. For example, when working with sensitive customer data, I’d make sure all files are encrypted using AES-256 encryption before uploading them to cloud storage. Thirdly, I always follow the principle of least privilege, granting users only the necessary access rights to perform their tasks. Finally, I maintain detailed audit trails to track all data access and modifications, allowing for thorough investigations if necessary.

Q 24. Describe your experience with version control systems (e.g., Git) for data projects.

While I haven’t extensively used Git in past roles (mention specific examples if applicable), I am proficient in the core concepts of version control and understand its crucial role in collaborative data projects. I’m familiar with branching, merging, and resolving conflicts. The benefit of Git in data analysis is clear: it allows for tracking changes in code, datasets, and analysis scripts, making collaboration easier and troubleshooting errors simpler. Imagine a scenario where multiple team members are working on the same data cleaning script. Git allows for each person to work independently, merge their changes later, and resolve any conflicts in a structured manner. This prevents overwriting changes and makes tracing the evolution of the analysis much simpler. I am eager to expand my Git proficiency in a new role.

Q 25. How do you stay up-to-date with the latest advancements in data analysis and technology?

Staying current in the rapidly evolving field of data analysis requires a proactive approach. I regularly follow industry blogs, publications, and online courses (like Coursera or edX) to stay abreast of new techniques and technologies. I actively participate in online communities and attend webinars to learn from other professionals and engage in discussions. For example, I regularly read Towards Data Science articles and follow prominent data scientists on Twitter. Conferences and workshops are also valuable for networking and gaining in-depth knowledge, exposing me to cutting-edge tools and techniques such as the latest advancements in machine learning algorithms or the newest features in PV database management systems. I also dedicate time to experimenting with new tools and techniques on personal projects to solidify my understanding and build practical experience.

Q 26. What are your strengths and weaknesses as a data analyst?

My strengths lie in my analytical skills, attention to detail, and problem-solving abilities. I’m proficient in a wide range of statistical software (mention specific software like R, Python, SAS, etc.) and data visualization tools, enabling me to efficiently process and interpret complex datasets. I’m also a strong communicator; I can effectively convey my findings and recommendations to both technical and non-technical audiences. A weakness I’m actively working on is delegation. While I’m capable of handling multiple projects simultaneously, learning to delegate effectively will allow me to manage even larger workloads more efficiently and empower team members.

Q 27. What are your salary expectations?

My salary expectations are in line with the industry standard for a data analyst with my experience and skillset in this region. Considering my expertise in the Microsoft Office Suite, statistical software, and PV databases, and based on my research of similar roles, I am targeting a salary range of [Insert Salary Range]. I am, however, flexible and open to discussing this further based on the specific responsibilities and benefits package offered.

Q 28. Do you have any questions for me?

Yes, I have a few questions. First, can you describe the team dynamics and collaborative environment within the data analysis department? Second, what are the company’s future plans and priorities for data analysis initiatives? Finally, what opportunities are available for professional development and growth within the company?

Key Topics to Learn for Proficiency in Microsoft Office Suite, Statistical Software, and PV Databases Interview

- Microsoft Office Suite:

  - Advanced Excel functionalities: PivotTables, VLOOKUP/HLOOKUP, Macros, Data Analysis tools, charting techniques.

  - Efficient Word document creation and formatting: Styles, templates, mail merge, advanced formatting options.

  - PowerPoint presentations: Effective slide design, data visualization, presentation delivery techniques.

  - Practical application: Demonstrate your ability to analyze complex datasets in Excel, create professional reports in Word, and deliver compelling presentations in PowerPoint.

- Statistical Software (e.g., R, SPSS, SAS):

  - Data cleaning and preprocessing: Handling missing values, outlier detection, data transformation.

  - Descriptive and inferential statistics: Hypothesis testing, regression analysis, ANOVA.

  - Data visualization: Creating informative and insightful charts and graphs.

  - Practical application: Showcase your ability to perform statistical analysis, interpret results, and communicate findings effectively.

- PV Databases (e.g., relational databases, NoSQL databases):

  - Database design principles: Understanding relational models, normalization, and database schemas.

  - SQL queries: Writing efficient and complex queries for data retrieval and manipulation.

  - Data integrity and security: Implementing measures to ensure data accuracy and protection.

  - Practical application: Demonstrate your proficiency in querying databases, managing data, and ensuring data quality.

- Problem-Solving Approach:

  - Articulate your problem-solving methodology when faced with data analysis challenges.

  - Highlight your ability to break down complex problems into manageable steps.

  - Demonstrate your capacity to adapt your approach based on the specific problem and available tools.

Next Steps

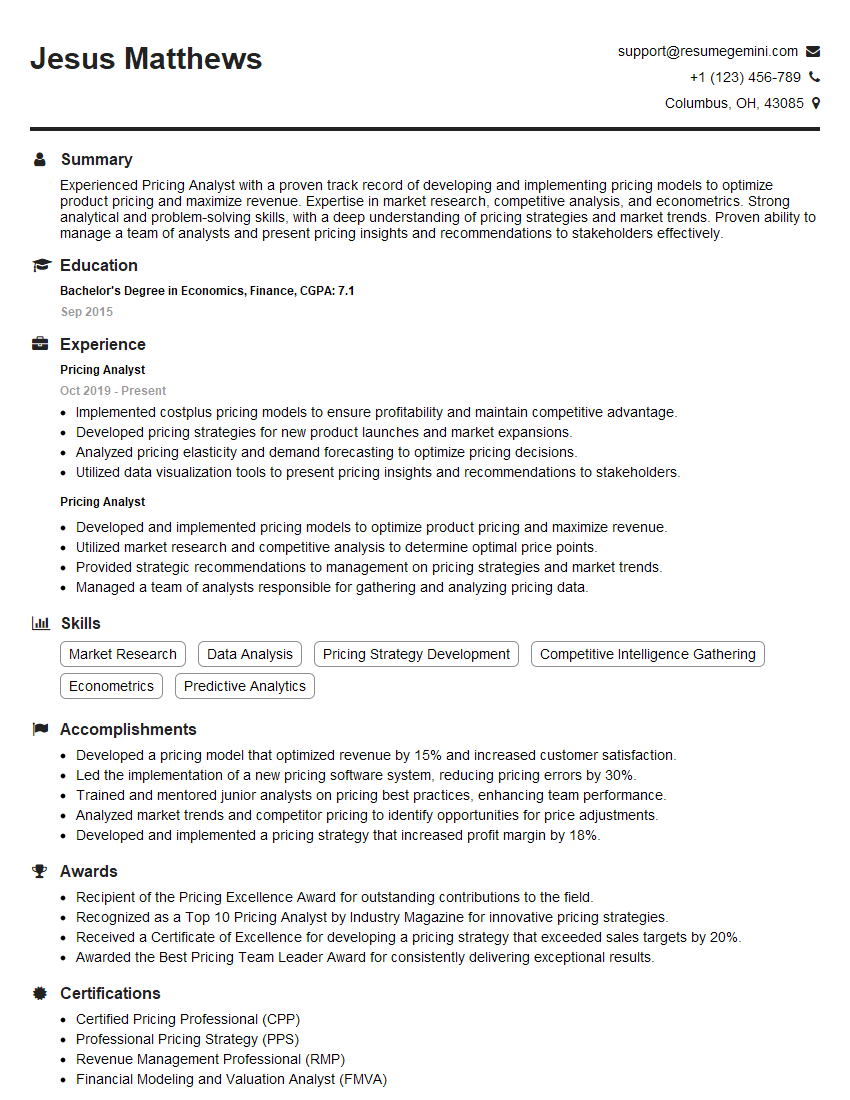

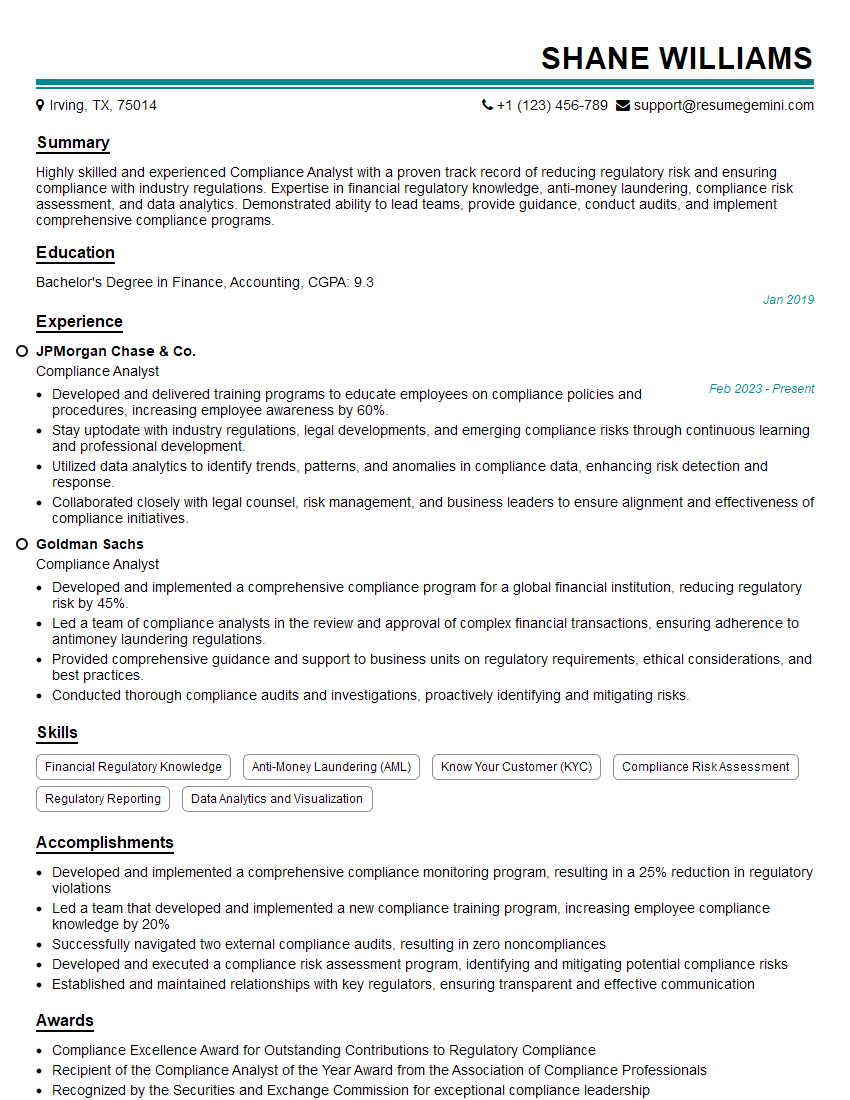

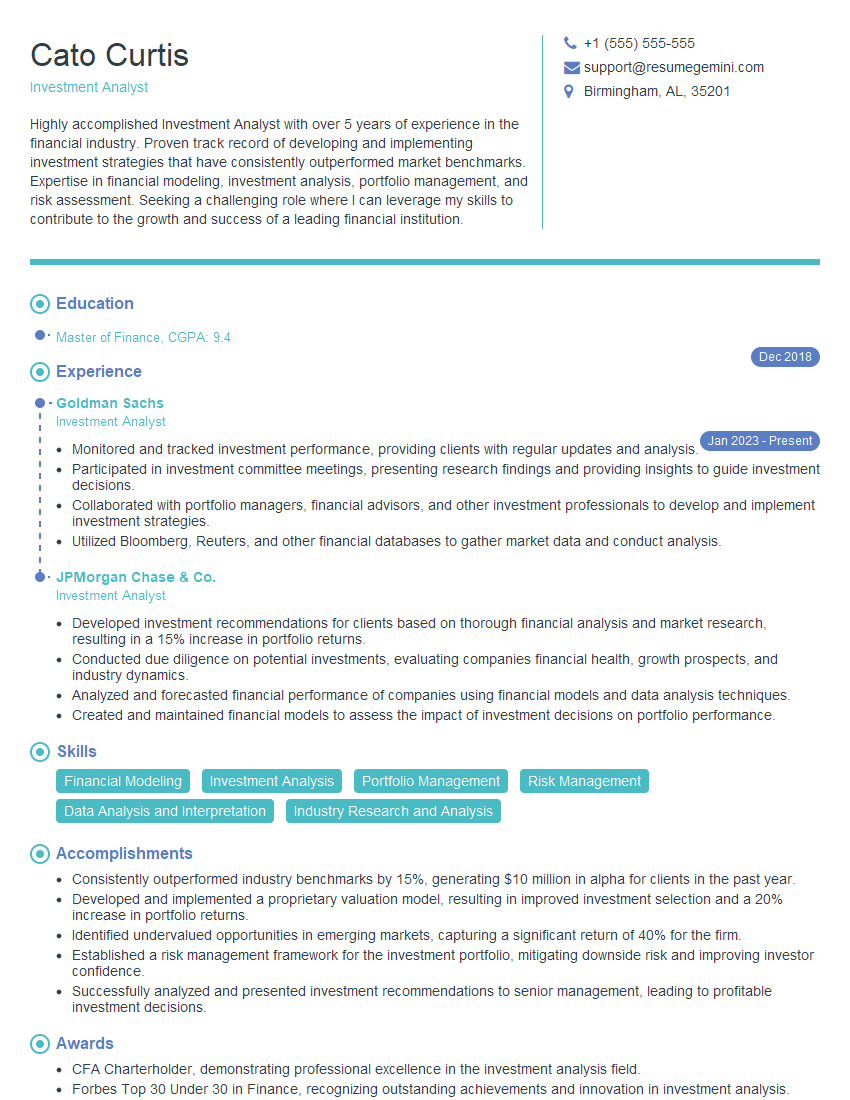

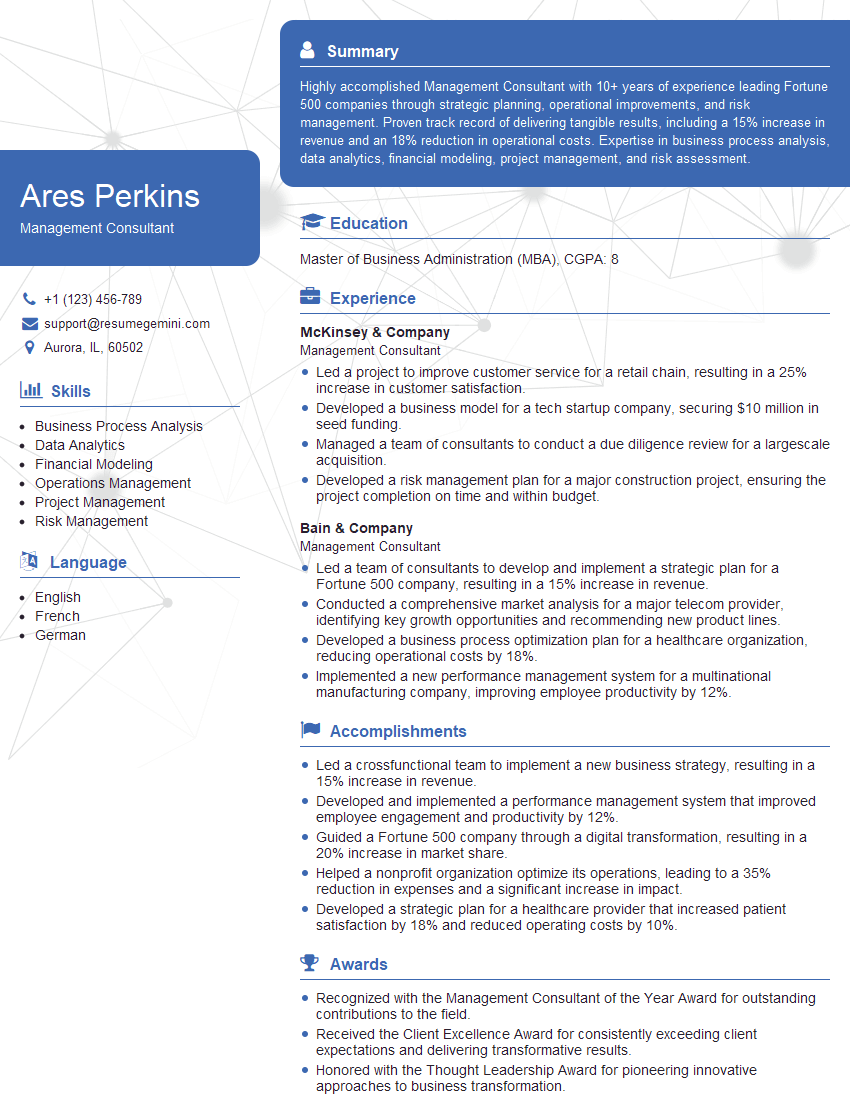

Mastering Proficiency in Microsoft Office Suite, Statistical Software, and PV Databases is crucial for career advancement in many data-driven fields. A strong command of these tools opens doors to exciting opportunities and enhances your value to potential employers. To maximize your job prospects, focus on crafting an ATS-friendly resume that highlights your skills and experience effectively. ResumeGemini is a trusted resource to help you build a professional and impactful resume. Examples of resumes tailored to Proficiency in Microsoft Office Suite, Statistical Software, and PV Databases are available to guide you. Let’s work together to help you secure your dream job!
