Cracking a skill-specific interview, such as one focused on proficiency with video compression formats, requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in a Video Compression Formats Interview

Q 1. Explain the difference between intra-frame and inter-frame compression.

Intra-frame and inter-frame compression are two fundamental approaches in video encoding, differing in how they achieve data reduction. Think of it like painting a picture: intra-frame is like painting each frame independently, while inter-frame is like only painting the parts that change between frames.

Intra-frame compression (also known as I-frames) encodes each frame independently, without referencing any other frames. Each frame is compressed as a still image, using techniques like DCT (Discrete Cosine Transform) and quantization. This results in larger file sizes per frame but allows for random access to any frame within the video, because each frame is complete.

Inter-frame compression (using P-frames and B-frames) leverages the similarities between consecutive frames. It identifies differences between the current frame and a previously encoded reference frame (usually an I-frame or a P-frame). Only motion vectors and the remaining differences (the residual) are encoded, resulting in significantly smaller file sizes. However, this method requires decoding the reference frames first to reconstruct a given frame, making random access slower and more complex.

In short: I-frames are self-contained, large, and offer random access. P- and B-frames are smaller, efficient, but require sequential decoding.
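The contrast above can be illustrated with a toy sketch. It treats a frame as a flat list of pixel values and stores only the pixels that changed; real codecs instead use motion compensation and transform-coded residuals, so the helper names `encode_inter` and `decode_inter` are purely illustrative.

```python
def encode_inter(previous, current):
    """Return only the pixels that changed, as (index, new_value) pairs."""
    return [
        (i, cur)
        for i, (prev, cur) in enumerate(zip(previous, current))
        if prev != cur
    ]


def decode_inter(previous, changes):
    """Rebuild the current frame from the reference frame plus the changes."""
    frame = list(previous)
    for index, value in changes:
        frame[index] = value
    return frame


# Two tiny 8-pixel "frames"; only two pixels differ between them.
frame_1 = [10, 10, 10, 10, 200, 200, 10, 10]
frame_2 = [10, 10, 10, 10, 10, 200, 200, 10]

changes = encode_inter(frame_1, frame_2)
print(changes)                                    # [(4, 10), (6, 200)]
print(decode_inter(frame_1, changes) == frame_2)  # True
```

Note that decoding `frame_2` is impossible without `frame_1`, which is exactly why inter-coded frames cannot serve as random access points.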

Q 2. What are the advantages and disadvantages of H.264, H.265 (HEVC), and VP9?

H.264, H.265 (HEVC), and VP9 are all widely used video codecs, each with its own strengths and weaknesses:

- H.264 (AVC): A mature codec, widely supported across devices. It offers a good balance between compression efficiency and computational complexity. However, it’s less efficient than newer codecs.

- H.265 (HEVC): Significantly more efficient than H.264, offering up to roughly 50% bitrate savings at the same quality level. This means smaller file sizes or higher quality for the same bitrate. However, it demands more processing power for encoding and decoding.

- VP9: An open-source codec developed by Google, offering comparable efficiency to H.265 in many scenarios. It’s a strong contender for streaming applications, particularly with its royalty-free licensing. Like H.265, it requires more processing power than H.264.

Advantages and Disadvantages Summary Table:

| Codec | Advantages | Disadvantages |
|---|---|---|
| H.264 | Wide support, good balance of efficiency and complexity | Less efficient than H.265/VP9 |
| H.265 | High compression efficiency | Higher computational complexity, less widespread support in older devices |
| VP9 | High compression efficiency, royalty-free | Higher computational complexity, support may be less ubiquitous than H.264 |

The best codec depends on the specific application and priorities. For example, H.264 might be preferred for wide compatibility on older devices, while H.265 or VP9 are better choices for high-quality streaming or archiving where smaller file sizes are paramount.

Q 3. Describe the concept of quantization in video compression.

Quantization is a crucial step in video compression that reduces the amount of data needed to represent the video. Imagine you have a detailed photograph. Quantization is like simplifying that photo by reducing the number of colors. You lose some detail, but the file size becomes significantly smaller.

In video compression, the Discrete Cosine Transform (DCT) transforms the pixel data into frequency coefficients. Quantization then divides these coefficients by a step size and rounds the result, effectively reducing their precision. Larger quantization values lead to greater data reduction but also more visible artifacts like blockiness or loss of detail. Smaller values retain more detail but result in larger file sizes. The choice of quantization level is a key parameter affecting the balance between compression and quality.

Think of it like rounding numbers. Instead of storing the precise value of each coefficient, you round it to a nearby value. This process reduces the overall amount of data needed to represent the image or video but introduces some loss of precision.
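The rounding analogy can be made concrete with a minimal sketch. It assumes a single uniform step size for all coefficients (real encoders use per-frequency quantization matrices), and the coefficient values are made up for illustration.

```python
def quantize(coefficients, step):
    """Divide each coefficient by the step size and round to an integer level."""
    return [round(c / step) for c in coefficients]


def dequantize(levels, step):
    """Approximate the original coefficients from the stored levels."""
    return [level * step for level in levels]


# Hypothetical transform coefficients, largest (low frequency) first.
coefficients = [312.0, -47.0, 18.0, 6.0, -3.0, 1.0]

fine = quantize(coefficients, step=10)
coarse = quantize(coefficients, step=40)

print(fine)                  # [31, -5, 2, 1, 0, 0]
print(coarse)                # [8, -1, 0, 0, 0, 0]
print(dequantize(fine, 10))  # [310, -50, 20, 10, 0, 0]
```

The coarser step turns more coefficients into zeros, which compress very well, but the reconstructed values drift further from the originals.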

Q 4. How does motion estimation work in video compression?

Motion estimation is a core component of inter-frame compression, figuring out how image content moves between frames. It dramatically reduces the size of video files by avoiding the need to re-encode unchanging areas. It’s a sophisticated process that involves comparing blocks of pixels in consecutive frames to find the best match.

The process typically involves:

- Block Partitioning: The current frame is divided into blocks of pixels.

- Search Algorithm: For each block, the algorithm searches within a defined search window in a reference frame (typically the previous one) to find the most similar block. The displacement from the current block’s position to the best match is the motion vector.

- Motion Vector Encoding: Instead of the entire block of pixels, the encoder stores the motion vector together with the residual, that is, the remaining differences between the current block and the matched block in the reference frame.

Different search algorithms are used, each with trade-offs between speed and accuracy. More sophisticated algorithms can handle more complex motion but require more processing power. The motion vectors are crucial for efficient compression because they significantly reduce redundancy by only encoding the changes between frames.
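The steps above can be sketched as an exhaustive block-matching search. This is a simplified illustration, not how any particular encoder implements it: frames are plain lists of rows, the cost function is the sum of absolute differences (SAD), and the function names are hypothetical.

```python
def sad(block_a, block_b):
    """Sum of absolute differences between two equally sized blocks."""
    return sum(
        abs(a - b)
        for row_a, row_b in zip(block_a, block_b)
        for a, b in zip(row_a, row_b)
    )


def get_block(frame, top, left, size):
    """Cut a size x size block out of a frame stored as a list of rows."""
    return [row[left:left + size] for row in frame[top:top + size]]


def find_motion_vector(reference, current, top, left, size, search_range):
    """Search the reference frame for the best match of one current block."""
    target = get_block(current, top, left, size)
    height, width = len(reference), len(reference[0])
    best_vector = (0, 0)
    best_cost = sad(target, get_block(reference, top, left, size))
    for dy in range(-search_range, search_range + 1):
        for dx in range(-search_range, search_range + 1):
            y, x = top + dy, left + dx
            # Skip candidates that fall outside the reference frame.
            if y < 0 or x < 0 or y + size > height or x + size > width:
                continue
            cost = sad(target, get_block(reference, y, x, size))
            if cost < best_cost:
                best_vector, best_cost = (dy, dx), cost
    return best_vector, best_cost


def make_frame(size, top, left):
    """Black frame with a bright 2x2 square at the given position."""
    frame = [[0] * size for _ in range(size)]
    for y in range(top, top + 2):
        for x in range(left, left + 2):
            frame[y][x] = 255
    return frame


reference = make_frame(6, top=1, left=1)
current = make_frame(6, top=2, left=3)  # the square moved down 1, right 2

vector, cost = find_motion_vector(
    reference, current, top=2, left=3, size=2, search_range=2
)
print(vector, cost)  # (-1, -2) 0
```

The vector points from the current block back to where its content sits in the reference frame. A cost of zero means the residual is empty, so only the vector needs to be stored. Faster search patterns trade this exhaustive scan for fewer comparisons.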

Q 5. Explain the role of bitrate in video quality and file size.

Bitrate is the amount of data transmitted per unit of time, typically measured in bits per second (bps) or kilobits per second (kbps). It directly impacts both video quality and file size. A higher bitrate means more data is used to represent each second of video.

Impact on Video Quality: Higher bitrates generally lead to better video quality. More data allows for finer details, smoother motion, and fewer compression artifacts. Lower bitrates result in lower quality, with noticeable artifacts such as blockiness, blurring, and loss of detail. Think of it like using a higher resolution for a picture; the higher resolution offers more details.

Impact on File Size: A higher bitrate results in a larger file size because more data is being stored. Lower bitrates result in smaller file sizes, but at the cost of video quality. For a given duration the relationship is directly proportional: doubling the average bitrate doubles the file size, and vice versa.
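The arithmetic behind this relationship is simple enough to sketch. The function below is an estimate only: it ignores audio and container overhead, and it uses 1 MB = 1,000,000 bytes.

```python
def estimate_file_size_mb(bitrate_kbps, duration_seconds):
    """Approximate video size in megabytes from average bitrate and duration."""
    total_bits = bitrate_kbps * 1000 * duration_seconds
    return total_bits / 8 / 1_000_000


# A 10-minute clip at two different average bitrates.
print(estimate_file_size_mb(5000, 600))  # 375.0
print(estimate_file_size_mb(2500, 600))  # 187.5
```

Halving the bitrate halves the size, which is why bitrate is usually the first setting to adjust when a file has to fit a storage or bandwidth budget.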

In professional settings, the bitrate is carefully chosen to balance quality requirements with storage space or bandwidth constraints. For instance, streaming platforms often use adaptive bitrate streaming (ABR), which dynamically adjusts the bitrate based on the viewer’s network conditions, ensuring a smooth viewing experience.

Q 6. What is a GOP (Group of Pictures) and why is it important?

A Group of Pictures (GOP) is a sequence of frames in a video stream that starts with an I-frame (intra-coded frame) and includes subsequent P-frames (predictive-coded frames) and possibly B-frames (bidirectionally-predicted frames). It’s a fundamental structure in video compression.

Importance of GOPs:

- Random Access Points: I-frames act as random access points. You can start playing the video from any I-frame without needing to decode prior frames. This is crucial for features like seeking and chapter selection.

- Error Resilience: If there’s an error during transmission or storage, the damage is usually limited to a single GOP. The next I-frame allows the decoder to resume without significant corruption. This provides robustness in unreliable networks or storage media.

- Compression Efficiency: The structure allows for efficient inter-frame prediction. P-frames predict based on prior frames, and B-frames predict from both past and future frames within the GOP.

The GOP size (length of the sequence) is a design parameter. A smaller GOP allows more frequent random access points, improving error resilience but potentially reducing compression efficiency. A larger GOP improves compression but decreases random access and error resilience.
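To visualize the structure, here is a small sketch that prints the frame types of one GOP in display order and the resulting keyframe interval. It assumes a simple closed GOP with a fixed number of B-frames between reference frames; actual encoders may place frames adaptively, and the function names are illustrative.

```python
def gop_pattern(gop_size, b_frames):
    """Return display-order frame types for one GOP, e.g. 'IBBPBBP...'."""
    frames = ["I"]
    while len(frames) < gop_size:
        # Leave room for the P-frame that closes each run of B-frames.
        run = min(b_frames, gop_size - len(frames) - 1)
        frames.extend("B" * run)
        frames.append("P")
    return "".join(frames)


def keyframe_interval_seconds(gop_size, fps):
    """Time between random access points for a fixed GOP size."""
    return gop_size / fps


print(gop_pattern(12, 2))                 # IBBPBBPBBPBP
print(gop_pattern(4, 0))                  # IPPP
print(keyframe_interval_seconds(48, 24))  # 2.0
```

With a 48-frame GOP at 24 fps, a viewer can seek to a clean entry point every two seconds; doubling the GOP size doubles that interval.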

Q 7. What are some common video container formats and their characteristics?

Video container formats are like wrappers that hold the encoded video and audio data along with metadata. They don’t compress the video itself but organize it for storage and playback.

- MP4 (MPEG-4 Part 14): A widely used, versatile container supporting various codecs like H.264, H.265, and AAC audio. It’s commonly used for online videos, mobile devices, and digital distribution. It offers good compatibility and features.

- MOV (QuickTime File Format): Developed by Apple, commonly used for high-quality video. It supports various codecs, but its compatibility might be less widespread than MP4.

- AVI (Audio Video Interleave): An older format with support for a variety of codecs, but it can be less efficient than modern containers like MP4.

- MKV (Matroska Video): An open-source container known for its flexibility and support for multiple audio and video tracks, subtitles, and chapters. It’s popular for high-definition videos and archiving, but support might not be as universal as MP4.

- WebM: Designed for web-based streaming, it typically uses VP8 or VP9 video codecs and Vorbis or Opus audio. It’s optimized for online playback and is often used with HTML5 video.

The choice of container format depends on factors such as compatibility, desired codec support, and specific application requirements. For web delivery, WebM is often preferred, while MP4 is a versatile choice for most other scenarios.

Q 8. Describe your experience with various video encoding tools (e.g., FFmpeg, x264, HandBrake).

My experience with video encoding tools is extensive, encompassing command-line tools like FFmpeg and dedicated applications such as HandBrake. FFmpeg is my go-to for intricate control over the encoding process, allowing me to fine-tune parameters for optimal results. I frequently use it for batch processing and complex workflows, such as transcoding large archives of video files for various platforms. For simpler tasks or user-friendly interfaces, HandBrake provides a streamlined approach, ideal for quick conversions and preset applications. x264, while often used within FFmpeg or other tools, is a powerful encoder specifically for the H.264 codec. I’ve leveraged its advanced options for achieving exceptional quality at lower bitrates. I’m proficient in utilizing various codecs with these tools, including H.264 (x264), H.265 (x265), VP9, and AV1, selecting the most appropriate codec based on the target platform and desired balance between quality and file size.

For instance, I recently used FFmpeg to create a series of optimized video clips for a social media campaign. The original videos were high-resolution, requiring compression for online streaming. By using FFmpeg’s advanced features, I could ensure consistent video quality across various devices while keeping file sizes small enough to avoid long loading times.

Q 9. Explain how to optimize video compression settings for different platforms and bandwidths.

Optimizing video compression settings for different platforms and bandwidths requires a nuanced understanding of the target audience and their viewing capabilities. Factors to consider include screen resolution, bitrate, and the codec used. For instance, high-resolution displays (e.g., 4K) demand higher bitrates for acceptable quality, while lower-resolution devices may perform better with lower bitrates. Bandwidth restrictions further impact the choices. Mobile users often have limited bandwidth compared to desktop users with high-speed connections.

My approach usually involves testing different settings and analyzing the results. A high bitrate yields better quality but results in larger file sizes and increased bandwidth requirements. Lowering the bitrate reduces file size and bandwidth needs but potentially sacrifices some video quality. I often start with a target bitrate and then fine-tune it, monitoring the output for noticeable quality loss. The choice of codec also plays a significant role, with H.265 (HEVC) generally offering better compression ratios compared to H.264 (AVC) at the cost of increased encoding complexity.

For example, preparing a video for YouTube, where bandwidth is less of a concern, allows for a higher bitrate, maintaining better visual fidelity. In contrast, optimizing for mobile devices might involve selecting a lower bitrate and using a more efficient codec like H.265 to ensure smooth playback.

Q 10. How do you balance video quality and file size during compression?

Balancing video quality and file size is a constant trade-off in video compression. There’s no single perfect answer; it depends entirely on the context. Think of it like baking a cake: you want it to be delicious (high quality), but you also don’t want it to be too big (small file size). Too much emphasis on one aspect compromises the other.

My strategy usually involves iterative adjustments. I begin with a high-quality setting, then gradually reduce the bitrate while visually inspecting the output. Tools like FFmpeg allow for precise control over parameters such as quantization parameters (QP), which directly influence the compression level. Lower QP values result in higher quality but larger file sizes, while higher QP values reduce file size but also introduce more compression artifacts. I also consider the use of two-pass encoding, which allows for more accurate rate control and minimizes quality loss.

For critical applications like high-definition broadcasts, maintaining quality takes priority, even if it results in larger file sizes. For online streaming where bandwidth is limited, smaller file sizes are more important, even if it means accepting slightly lower quality.
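As one way to run the iterative adjustments described above, the sketch below builds an FFmpeg command line using libx264’s CRF mode, a quality-targeted setting related to (but not the same as) a fixed QP. The options `-c:v libx264`, `-crf`, `-preset`, and `-c:a copy` are standard FFmpeg/x264 options; the file names and the helper `build_crf_command` are hypothetical, and the command is only printed, not executed.

```python
import shlex


def build_crf_command(source, output, crf=23, preset="medium"):
    """Build an ffmpeg argument list for a quality-targeted H.264 encode."""
    if not 0 <= crf <= 51:
        raise ValueError("x264 CRF values range from 0 to 51")
    return [
        "ffmpeg", "-i", source,
        "-c:v", "libx264",   # encode video with x264
        "-crf", str(crf),    # lower value = higher quality, larger file
        "-preset", preset,   # slower presets compress more efficiently
        "-c:a", "copy",      # pass the audio stream through untouched
        output,
    ]


# Try a few quality levels and compare the results visually afterwards.
for crf in (18, 23, 28):
    command = build_crf_command("input.mov", f"output_crf{crf}.mp4", crf=crf)
    print(shlex.join(command))
```

Passing the list to `subprocess.run` would perform the encode, assuming FFmpeg is installed and on the PATH.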

Q 11. What are some common video compression artifacts and how can they be minimized?

Common video compression artifacts include blocking, ringing, mosquito noise, and macroblocking. Blocking manifests as visible square blocks, especially in homogeneous areas, indicating excessive compression. Ringing appears as halos or ghost edges around sharp transitions, a result of coarse quantization of high-frequency detail. Mosquito noise looks like a shimmering swarm of small dots, usually around sharp edges and text. Macroblocking is a severe form of blocking, visible as larger, more pronounced square blocks.

Minimizing these artifacts involves careful selection of compression parameters and codec. Choosing a more efficient codec, such as H.265 over H.264, can significantly reduce artifacts at the same bitrate. Adjusting parameters like QP, rate control method, and the use of filters during encoding can help fine-tune the balance between compression and visual quality. Experimentation and analysis are key; observing how different settings impact the final output is crucial.

For example, if I notice excessive blocking in a compressed video, I might lower the QP value or select a different rate control algorithm to distribute the compression more evenly across the video frames. Similarly, if ringing is prominent, I might raise the bitrate or tune the encoder’s deblocking filter settings and compare the results.

Q 12. What is rate control in video encoding and how does it work?

Rate control in video encoding decides how the available bits are distributed so that the output meets a target bitrate while keeping quality as consistent as possible. It’s essentially the process of assigning bits to each frame or group of frames. Imagine you have a certain amount of space to fill with words (bits) to describe an image (video frame). Rate control strategically allocates those words, giving more to complex scenes needing more detail and fewer to simple scenes.

Several methods exist, including Constant Bitrate (CBR) and Variable Bitrate (VBR). CBR allocates a fixed number of bits per second, providing consistent bandwidth consumption but potentially sacrificing quality in complex scenes. VBR dynamically adjusts the bitrate based on the complexity of each scene, resulting in better quality but fluctuating bandwidth usage. Two-pass encoding further refines rate control by analyzing the video in a first pass to determine an optimal bit allocation before encoding in a second pass.

The choice between CBR and VBR depends on the application. CBR is suitable for live streaming, where consistent bandwidth is crucial. VBR is preferred for files meant for storage or on-demand viewing, where quality is prioritized.
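The difference between the two strategies can be sketched with a toy bit allocator. The per-frame "complexity" scores are hypothetical inputs (a real encoder derives them from analysis passes or lookahead and also has to respect buffer constraints), so treat this as an illustration of the idea rather than an actual rate control algorithm.

```python
def allocate_cbr(complexities, total_bits):
    """Give every frame the same share of the bit budget."""
    per_frame = total_bits // len(complexities)
    return [per_frame] * len(complexities)


def allocate_vbr(complexities, total_bits):
    """Give each frame a share proportional to its complexity score."""
    total_complexity = sum(complexities)
    return [total_bits * c // total_complexity for c in complexities]


# Hypothetical complexity scores: the third frame is a busy action shot.
complexities = [1, 1, 4, 2]
budget = 8000

print(allocate_cbr(complexities, budget))  # [2000, 2000, 2000, 2000]
print(allocate_vbr(complexities, budget))  # [1000, 1000, 4000, 2000]
```

Both allocations spend the same total, but the proportional one moves bits from the simple frames to the complex one. This is essentially what the first pass of a two-pass encode makes possible.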

Q 13. Explain the concept of chroma subsampling.

Chroma subsampling is a technique used to reduce the size of video files by reducing the resolution of the color information (chroma) while maintaining the full resolution of the luminance (brightness) information. Our eyes are more sensitive to luminance than chroma, making this a viable compression strategy.

It’s expressed as a ratio, such as 4:2:0, 4:2:2, or 4:4:4. In 4:2:0, the most common format, the chroma resolution is half the luminance resolution both horizontally and vertically. Each chroma plane therefore carries only a quarter of the samples, which halves the total data per frame compared with 4:4:4 without significant perceived quality loss. 4:2:2 halves the chroma resolution horizontally only, so it retains full vertical color detail, and 4:4:4 retains full color resolution.

The choice of chroma subsampling depends on the application and the desired balance between quality and file size. 4:2:0 is commonly used for web videos and streaming applications due to its good compression ratio and minimal perceived quality loss. 4:2:2 and 4:4:4 are favored for professional applications, where color accuracy is critical.
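A quick calculation shows how much raw data each scheme carries. The sketch counts samples per frame for one luma plane and two chroma planes, assuming even frame dimensions; the function name is illustrative.

```python
def samples_per_frame(width, height, scheme):
    """Count luma plus chroma samples in one frame for a subsampling scheme."""
    # (horizontal divisor, vertical divisor) applied to each chroma plane.
    divisors = {"4:4:4": (1, 1), "4:2:2": (2, 1), "4:2:0": (2, 2)}
    h_div, v_div = divisors[scheme]
    luma = width * height
    chroma = 2 * (width // h_div) * (height // v_div)  # Cb and Cr planes
    return luma + chroma


full = samples_per_frame(1920, 1080, "4:4:4")
for scheme in ("4:4:4", "4:2:2", "4:2:0"):
    count = samples_per_frame(1920, 1080, scheme)
    print(scheme, count, f"{count / full:.0%}")
# 4:4:4 6220800 100%
# 4:2:2 4147200 67%
# 4:2:0 3110400 50%
```

So before any codec-level compression is applied, 4:2:0 already cuts the raw sample count in half relative to 4:4:4.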

Q 14. Describe your experience with different video compression profiles and levels.

My experience with video compression profiles and levels covers a wide range of codecs and their associated settings. Profiles define the features and constraints of an encoding, while levels specify the resolution, frame rate, and bitrate limits. For example, H.264 has various profiles like Baseline, Main, High, and High 10, each supporting different features like B-frames, CABAC entropy coding, and higher bit depths. Levels within a profile further define the maximum resolution and bitrate for that encoding.

Understanding these profiles and levels is crucial for selecting the appropriate settings. A high profile might offer better compression, but it could require more processing power and might not be compatible with all devices. Selecting a lower profile ensures compatibility with older devices but might result in slightly larger file sizes. Similarly, selecting a lower level might be necessary for older devices or low-bandwidth conditions.

For instance, I might choose the H.264 High profile with a suitable level for compatibility across a wide range of playback devices while considering the target bandwidth for streaming. If dealing with high-dynamic range (HDR) video, choosing a profile that supports HDR is crucial.

Q 15. How do you troubleshoot video compression issues?

Troubleshooting video compression issues involves a systematic approach. First, I’d identify the symptoms: is the video blurry, pixelated, exhibiting artifacts (visual distortions), or taking excessively long to encode? Then, I’d examine the source material itself. Low-resolution source videos will always result in lower-quality compressed videos, regardless of the chosen codec. Next, I’d analyze the compression settings: the chosen codec (H.264, H.265/HEVC, VP9, AV1), bitrate, frame rate, and resolution all significantly impact the final output. A bitrate that’s too low will lead to significant quality loss, while one that’s too high will result in unnecessarily large file sizes. Similarly, a frame rate that doesn’t match the source can cause judder or dropped frames. I would also check the hardware and software involved: insufficient processing power, RAM, or storage space can hinder the encoding process and lead to errors. Finally, I’d review the encoding software itself, ensuring it’s up to date and configured correctly. For example, a poorly chosen GOP (Group of Pictures) size in H.264 encoding can cause sluggish seeking or visible quality pulsing at keyframes. If the problem persists after checking these points, I’d use video analysis tools to inspect the compressed video for specific artifacts and pinpoint the exact cause.

Example: Let’s say a video is exhibiting significant blockiness. This often indicates a bitrate that’s too low for the chosen resolution. I’d increase the bitrate, re-encode, and check the results. If the problem persists, I’d then look at the GOP size and other encoding parameters.

Career Expert Tips:

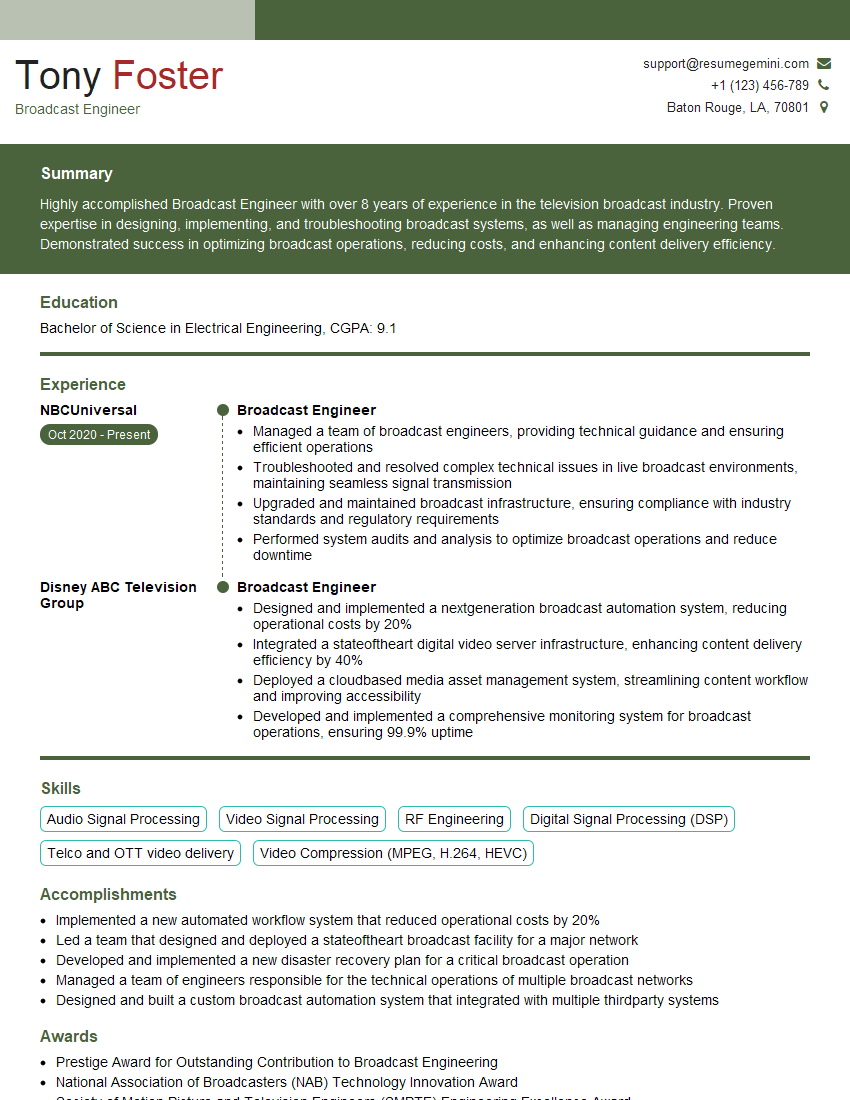

- Ace those interviews! Prepare effectively by reviewing the Top 50 Most Common Interview Questions on ResumeGemini.

- Navigate your job search with confidence! Explore a wide range of Career Tips on ResumeGemini. Learn about common challenges and recommendations to overcome them.

- Craft the perfect resume! Master the Art of Resume Writing with ResumeGemini’s guide. Showcase your unique qualifications and achievements effectively.

- Don’t miss out on holiday savings! Build your dream resume with ResumeGemini’s ATS optimized templates.

Q 16. What are the key performance indicators (KPIs) for video compression?

Key Performance Indicators (KPIs) for video compression focus on balancing quality and efficiency. These include:

- Bitrate (kbps): This measures the amount of data used per second. Lower bitrates mean smaller file sizes but potentially lower quality. The goal is to find the optimal balance.

- File Size (MB or GB): Smaller file sizes are crucial for efficient storage and delivery, particularly for streaming applications.

- Visual Quality (PSNR, SSIM): PSNR (Peak Signal-to-Noise Ratio) and SSIM (Structural Similarity Index) are objective metrics that assess the fidelity of the compressed video compared to the original. Higher scores indicate better quality.

- Encoding Time: Faster encoding times are crucial for efficient workflow, especially in large-scale production environments.

- Compression Ratio: This indicates the level of data reduction achieved. A higher ratio means greater efficiency, but it might come at the cost of quality.

- Client-side Buffering: In streaming, frequent buffering indicates insufficient bandwidth or a bitrate too high for the viewer’s connection.

Example: In a streaming platform, we might aim for a bitrate of 2 Mbps for 720p videos to balance quality and bandwidth efficiency, monitoring file sizes and client buffering rates to fine-tune the bitrate based on user experience.
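Of the metrics listed above, PSNR is the easiest to compute by hand. The sketch below derives it from the mean squared error for 8-bit samples; the pixel values are made up, and production workflows would typically rely on an established tool rather than a hand-rolled function.

```python
import math


def mse(original, compressed):
    """Mean squared error between two equally long pixel sequences."""
    return sum((o - c) ** 2 for o, c in zip(original, compressed)) / len(original)


def psnr(original, compressed, max_value=255):
    """Peak signal-to-noise ratio in decibels; higher means closer to the source."""
    error = mse(original, compressed)
    if error == 0:
        return math.inf  # identical signals
    return 10 * math.log10(max_value ** 2 / error)


# Hypothetical pixel values before and after compression.
original = [100, 120, 140, 160]
compressed = [101, 119, 142, 158]

print(mse(original, compressed))             # 2.5
print(round(psnr(original, compressed), 2))  # 44.15
```

Because PSNR is a direct function of MSE, it tracks numerical error rather than perceived quality, so it is best read alongside SSIM or a perceptual metric.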

Q 17. How familiar are you with different video resolutions (e.g., 4K, 8K) and their impact on compression?

I’m very familiar with various video resolutions and their impact on compression. Resolutions like 4K (3840×2160) and 8K (7680×4320) significantly increase the amount of data needing to be compressed compared to 1080p or 720p. This directly translates to larger file sizes and higher bitrates for maintaining comparable visual quality. The higher the resolution, the more computationally intensive the encoding process becomes. For example, encoding an 8K video requires substantially more processing power than a 720p video. The choice of codec also plays a significant role. H.265/HEVC and VP9, designed for higher resolutions and efficiency, are often preferred over H.264 for 4K and 8K content to manage file sizes effectively. Without efficient codecs, 4K and 8K streaming would require enormous bandwidth, making it impractical for many users.

Example: A 10-minute 1080p video might be around 1 GB, while a similar 4K video might be 4-6 GB. This difference highlights the importance of choosing appropriate codecs and bitrates when working with high-resolution content.
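The scaling with resolution is easy to see from the uncompressed data rate. This sketch assumes 8-bit 4:2:0 video, which averages 12 bits per pixel; the function name is illustrative.

```python
def raw_bitrate_mbps(width, height, fps, bits_per_pixel=12):
    """Uncompressed data rate in megabits per second."""
    return width * height * fps * bits_per_pixel / 1_000_000


resolutions = {
    "1080p": (1920, 1080),
    "4K": (3840, 2160),
    "8K": (7680, 4320),
}

for name, (width, height) in resolutions.items():
    print(name, raw_bitrate_mbps(width, height, fps=30))
```

Each step up quadruples the pixel count, so at the same frame rate 4K carries four times the raw data of 1080p and 8K sixteen times. Compressed sizes do not have to grow by the same factor, but the encoder has that much more data to process.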

Q 18. Describe your experience with video streaming protocols (e.g., RTMP, HLS, DASH).

My experience with video streaming protocols encompasses RTMP (Real-Time Messaging Protocol), HLS (HTTP Live Streaming), and DASH (Dynamic Adaptive Streaming over HTTP). RTMP is a TCP-based protocol originally developed for Flash and traditionally used for live streaming, offering low latency but no built-in adaptation to varying network conditions. HLS and DASH are both HTTP-based, offering better scalability and adaptability. HLS traditionally uses segmented MPEG-TS (Transport Stream) files and also supports fragmented MP4, while DASH typically uses fragmented MP4 files. DASH is an open, codec-agnostic standard and generally provides more flexibility, while both allow seamless switching between different quality levels based on bandwidth availability. I’ve worked with each protocol in different contexts, selecting the optimal one based on the project’s specific requirements, such as latency needs and scalability.

Example: For a live sports broadcast requiring minimal latency, RTMP might be suitable. For on-demand video delivery across various devices and network conditions, DASH would be preferred due to its adaptive nature.
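To show what an HLS delivery looks like on the wire, here is a minimal sketch that generates a master playlist listing several renditions. `#EXTM3U` and `#EXT-X-STREAM-INF` with its `BANDWIDTH` and `RESOLUTION` attributes are standard HLS tags; the rendition values and URIs are hypothetical.

```python
def build_master_playlist(renditions):
    """Build the text of an HLS master playlist from rendition descriptions."""
    lines = ["#EXTM3U"]
    for r in renditions:
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={r['bandwidth']},"
            f"RESOLUTION={r['width']}x{r['height']}"
        )
        lines.append(r["uri"])  # media playlist for this rendition
    return "\n".join(lines) + "\n"


# Hypothetical renditions; BANDWIDTH is given in bits per second.
renditions = [
    {"bandwidth": 800_000, "width": 640, "height": 360, "uri": "360p/index.m3u8"},
    {"bandwidth": 2_500_000, "width": 1280, "height": 720, "uri": "720p/index.m3u8"},
    {"bandwidth": 5_000_000, "width": 1920, "height": 1080, "uri": "1080p/index.m3u8"},
]

print(build_master_playlist(renditions))
```

The player downloads this small text file first and then picks one of the listed media playlists based on its bandwidth estimate.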

Q 19. Explain the concept of adaptive bitrate streaming.

Adaptive bitrate streaming (ABR) is a crucial technology for delivering high-quality video over variable network conditions. Instead of encoding a video at a single bitrate, ABR creates multiple versions of the same video at different bitrates and resolutions (e.g., 240p, 360p, 720p, 1080p). The player on the client device continuously monitors network conditions and dynamically selects the highest-quality version that can be streamed without buffering. This ensures a smooth viewing experience, even if the network connection fluctuates. Protocols like HLS and DASH inherently support ABR, making them ideal choices for streaming video.

Analogy: Imagine a car adjusting its speed to navigate a road with varying traffic conditions. ABR similarly adjusts the video quality based on network bandwidth.
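The player-side decision can be sketched as a simple throughput-based rule. The bitrate ladder and the 0.8 safety factor are hypothetical values chosen for illustration; real players combine throughput estimates with buffer levels and other signals.

```python
# Hypothetical ladder of (bitrate in kbps, label), lowest first.
LADDER = [(400, "240p"), (800, "360p"), (2500, "720p"), (5000, "1080p")]


def select_rendition(ladder, measured_kbps, safety_factor=0.8):
    """Pick the highest rendition that fits within a share of the bandwidth."""
    budget = measured_kbps * safety_factor
    affordable = [rendition for rendition in ladder if rendition[0] <= budget]
    # Fall back to the lowest rendition when nothing fits.
    return max(affordable) if affordable else min(ladder)


print(select_rendition(LADDER, 4000))   # (2500, '720p')
print(select_rendition(LADDER, 10000))  # (5000, '1080p')
print(select_rendition(LADDER, 300))    # (400, '240p')
```

The safety factor keeps some headroom so that a small dip in throughput does not immediately cause rebuffering.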

Q 20. What are your experiences with cloud-based video encoding services (e.g., AWS Elemental MediaConvert, Azure Media Services)?

I have extensive experience with cloud-based video encoding services such as AWS Elemental MediaConvert and Azure Media Services. These services offer scalable and cost-effective solutions for encoding large volumes of video content. I’m proficient in configuring encoding presets, managing workflows, and integrating them with other cloud services. For example, I’ve utilized MediaConvert’s powerful presets to optimize encoding settings for different codecs and resolutions, ensuring both high-quality output and efficient storage. The ability to scale encoding tasks automatically based on demand is a key benefit. I’ve leveraged the monitoring and reporting features of these services to optimize encoding processes and identify potential bottlenecks.

Example: In a recent project, we used AWS Elemental MediaConvert to process thousands of videos, automatically scaling the encoding resources to meet the demand. The service’s comprehensive reporting tools enabled us to track encoding times and costs effectively.

Q 21. How do you ensure video compatibility across different devices and browsers?

Ensuring video compatibility across devices and browsers requires careful consideration of several factors. The first is the choice of video codec. While H.264 is widely supported, newer codecs like H.265/HEVC and VP9 offer better compression efficiency, but browser and device support might vary. I use a combination of techniques, including encoding multiple versions of the video using different codecs (H.264, H.265, VP9), and using container formats like MP4, which is broadly compatible. Adaptive bitrate streaming plays a vital role. By offering multiple resolutions, the player can choose the most compatible option based on the device capabilities. It’s also critical to utilize responsive design principles to ensure the video player adapts appropriately to various screen sizes. I’d thoroughly test the video on a wide range of devices and browsers before deployment, utilizing browser developer tools to ensure compatibility.

Example: For a website, we’d deliver videos using an adaptive bitrate streaming solution supporting H.264 and VP9, ensuring that most devices and browsers can play the video smoothly, while also leveraging responsive design for optimum display on various screens. Testing the video on multiple devices (desktops, tablets, and smartphones) running various browsers is crucial before public launch.

Q 22. What is your experience with video transcoding?

Video transcoding is the process of converting a video file from one format to another. This often involves changing the codec (the method of compression), resolution, frame rate, bitrate, and other parameters. My experience encompasses a wide range of transcoding tasks, from simple format conversions for web delivery to complex workflows involving high-resolution, high-bitrate content for professional broadcasting. I’m proficient in using various command-line tools like FFmpeg and also have experience with cloud-based transcoding services like AWS Elemental MediaConvert and Azure Media Services. For example, I’ve worked on projects where we needed to convert 4K footage shot on RED cameras into various formats optimized for streaming on different platforms like YouTube, Vimeo, and Netflix, each with its own specific requirements for resolution, bitrate, and codec. This often involves careful consideration of the target audience’s bandwidth capabilities and device limitations.

Q 23. Explain the concept of perceptual quality metrics in video compression.

Perceptual quality metrics in video compression aim to assess the quality of a compressed video by measuring how it is perceived by the human eye, not just by comparing it pixel-by-pixel to the original. Traditional metrics like Mean Squared Error (MSE) focus on the numerical difference, ignoring the fact that our visual system is less sensitive to certain types of errors. Perceptual metrics, on the other hand, take into account factors like contrast sensitivity and masking effects. Examples include PSNR-HVS (Peak Signal-to-Noise Ratio considering the Human Visual System), VMAF (Video Multimethod Assessment Fusion), and SSIM (Structural Similarity Index). I frequently use VMAF in my work because it better correlates with subjective human perception, allowing for more accurate quality control during compression. For instance, when optimizing for a specific bitrate, VMAF helps determine the settings that deliver the best perceived quality for the least amount of data, saving bandwidth and storage costs while maintaining a satisfactory viewing experience.

Q 24. How do you handle large-scale video encoding and processing workflows?

Handling large-scale video encoding and processing requires a robust and scalable infrastructure. My approach typically involves distributed processing using tools like FFmpeg with parallel processing capabilities, or leveraging cloud-based services with their inherent scalability. I’m experienced in designing and implementing workflows utilizing message queues (like RabbitMQ or Kafka) for asynchronous processing, ensuring that individual encoding tasks don’t block each other. For monitoring and management, I often rely on tools that provide real-time insights into task progress, resource utilization, and error handling. A real-world example involves processing thousands of short video clips for a social media platform. We used a combination of AWS Lambda functions and S3 storage to process the videos in parallel, automatically scaling based on demand. This allowed us to efficiently handle the high volume of uploads while maintaining a quick turnaround time.
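On a single machine, the same idea of independent, non-blocking encoding tasks can be sketched with a worker pool. The `encode` function below is a placeholder that only renames files; in a real workflow it would launch an encoder process or submit a cloud job. The job format is hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def encode(job):
    """Placeholder for a real encode step; fails on unsupported sources."""
    if not job["source"].endswith(".mov"):
        raise ValueError(f"unsupported source: {job['source']}")
    return job["source"].replace(".mov", ".mp4")


def run_batch(jobs, max_workers=4):
    """Run jobs in parallel and collect results without one failure stopping the rest."""
    completed, failed = [], []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(encode, job): job for job in jobs}
        for future in as_completed(futures):
            job = futures[future]
            try:
                completed.append(future.result())
            except Exception as error:
                failed.append((job["source"], str(error)))
    return sorted(completed), sorted(failed)


jobs = [{"source": "a.mov"}, {"source": "b.mov"}, {"source": "c.avi"}]
completed, failed = run_batch(jobs)
print(completed)  # ['a.mp4', 'b.mp4']
print(failed)     # [('c.avi', 'unsupported source: c.avi')]
```

Collecting failures separately mirrors what a message queue with a dead-letter mechanism provides at larger scale: bad inputs are recorded for follow-up while the rest of the batch keeps moving.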

Q 25. What are your experiences with video compression optimization for mobile devices?

Optimizing video compression for mobile devices requires careful consideration of several factors, primarily limited processing power, battery life, and bandwidth constraints. I typically focus on codecs like H.265 (HEVC) or VP9, which offer better compression efficiency than H.264, provided the target devices can decode them in hardware; software decoding of these codecs can be too demanding for low-end phones, so H.264 remains a useful fallback. Reducing resolution, frame rate, and bitrate are often necessary trade-offs to ensure smooth playback on lower-end devices. Adaptive bitrate streaming (ABR) is crucial; it allows the player to switch seamlessly between quality levels based on network conditions. I’ve worked on projects where we needed to deliver high-quality video to a broad range of devices, from low-end smartphones to high-end tablets. This often involved creating multiple versions of the same video at different resolutions and bitrates, allowing the client to select the most appropriate version based on the device capabilities and network connection. For instance, I successfully implemented ABR for an educational video streaming platform, resulting in a 30% reduction in data consumption while maintaining comparable video quality.
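The client-side selection step can be sketched in a few lines of Python. The ladder below, the 0.8 safety factor, and the function name are hypothetical values chosen for illustration; real players use more elaborate heuristics, such as buffer level and throughput history.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rendition:
    name: str
    height: int
    bitrate_kbps: int

# Hypothetical bitrate ladder; real ladders are tuned per title and codec.
LADDER = [
    Rendition("240p", 240, 400),
    Rendition("480p", 480, 1200),
    Rendition("720p", 720, 2800),
    Rendition("1080p", 1080, 5000),
]

def choose_rendition(throughput_kbps, max_height, ladder=LADDER, safety=0.8):
    """Pick the highest-bitrate rendition that fits the device and the network.

    Only a fraction (safety) of the measured throughput is budgeted, to leave
    headroom for fluctuations. Falls back to the lowest rung if nothing fits.
    """
    budget = throughput_kbps * safety
    candidates = [r for r in ladder
                  if r.height <= max_height and r.bitrate_kbps <= budget]
    if candidates:
        return max(candidates, key=lambda r: r.bitrate_kbps)
    return min(ladder, key=lambda r: r.bitrate_kbps)

print(choose_rendition(4000, 1080).name)
```

With 4000 kbps of measured throughput, the budget is 3200 kbps, so the 1080p rung is skipped in favor of 720p even on a device that could display it.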

Q 26. Describe your experience with video compression for VR/AR content.

Video compression for VR/AR content presents unique challenges due to the high resolution, high frame rates, and often stereoscopic nature of the content. Efficient compression is paramount to keep bandwidth and storage requirements manageable. I’ve worked with codecs and formats suited to VR/AR, such as HEVC applied to equirectangular or cubemap projections and MPEG’s Omnidirectional Media Format (OMAF), and explored the use of depth maps to improve compression efficiency. Furthermore, understanding the specific needs of different VR/AR headsets and their hardware capabilities is crucial. For example, I was involved in a project involving 360° video for a VR application. We experimented with different compression techniques to find the optimal balance between quality and file size, considering factors like the viewing distance and the user’s field of view. Because the viewer sees only part of the sphere at any moment, this often involved spending fewer bits on regions outside the likely field of view and reducing redundancy between the two eye views, minimizing the overall data size without sacrificing the immersive experience.
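A quick back-of-the-envelope calculation shows why compression matters so much here. The sketch below assumes a hypothetical stereoscopic stream at 3840×2160 per eye, 60 frames per second, and 8-bit 4:2:0 sampling (12 bits per pixel on average); the 50 Mbit/s delivery target is likewise just an example figure.

```python
def raw_bitrate_bps(width, height, fps, views=1, bits_per_pixel=12):
    """Uncompressed bitrate in bits per second.

    bits_per_pixel=12 corresponds to 8-bit 4:2:0 video: 8 bits of luma
    plus two chroma planes subsampled to a quarter resolution each.
    """
    return width * height * bits_per_pixel * fps * views

def required_compression_ratio(raw_bps, target_bps):
    """How many times smaller the stream must become to hit the target."""
    return raw_bps / target_bps

raw = raw_bitrate_bps(3840, 2160, 60, views=2)
ratio = required_compression_ratio(raw, 50_000_000)
print(f"raw: {raw / 1e9:.2f} Gbit/s, ratio needed for 50 Mbit/s: {ratio:.0f}:1")
```

Under these assumptions the raw stream is roughly 12 Gbit/s, so the encoder has to remove well over 99% of the data before delivery.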

Q 27. How do you stay up-to-date with the latest advancements in video compression technology?

Staying up-to-date in the rapidly evolving field of video compression requires a multi-faceted approach. I regularly attend industry conferences and webinars, read academic papers and publications, and follow the work of leading researchers and standards bodies such as MPEG, the Joint Video Experts Team (JVET, which developed VVC), and the Alliance for Open Media (AOMedia, which developed AV1). I actively participate in online forums and communities dedicated to video compression technology, exchanging ideas and learning from other experts. Furthermore, I experiment with the latest codecs and tools, testing their performance in real-world scenarios to understand their strengths and limitations. This continuous learning ensures that my knowledge remains current and that I can implement the most effective techniques for each specific project.

Q 28. What are your preferred methods for testing the quality of compressed video?

Testing the quality of compressed video involves a combination of objective and subjective methods. Objective methods rely on quantitative metrics like VMAF, PSNR, and SSIM, which provide numerical scores for comparing different compression settings. However, these metrics don’t always reflect human perception, so subjective testing is essential: the compressed videos are shown to a panel of viewers who rate the quality on a predefined scale, and the ratings are typically averaged into a Mean Opinion Score (MOS). I combine both approaches, using automated tools for objective assessment and carefully designed subjective tests under controlled viewing conditions to minimize bias. This integrated approach ensures that the compressed video meets the required quality standards while optimizing for efficiency.
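For the subjective side, panel ratings are commonly summarized as a mean score with a confidence interval. The sketch below is a simplified illustration: the ratings are invented, the 1-to-5 scale is an assumption, and the interval uses a normal approximation (z = 1.96), which is optimistic for very small panels where a t-distribution would be more appropriate.

```python
import math
import statistics

def mean_opinion_score(ratings, z=1.96):
    """Return (mean rating, half-width of an approximate 95% confidence interval)."""
    if len(ratings) < 2:
        raise ValueError("need at least two ratings to estimate spread")
    mos = statistics.mean(ratings)
    half_width = z * statistics.stdev(ratings) / math.sqrt(len(ratings))
    return mos, half_width

# Hypothetical ratings from eight viewers on a 1 (bad) to 5 (excellent) scale.
ratings = [4, 4, 5, 3, 4, 5, 4, 3]
mos, ci = mean_opinion_score(ratings)
print(f"MOS = {mos:.2f} +/- {ci:.2f}")
```

If the intervals of two encodes overlap heavily, the panel has not really distinguished them, which is a useful signal that the cheaper setting may be good enough.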

Key Topics to Learn for Proficient in using video compression formats Interview

- Understanding Compression Algorithms: Explore different video compression techniques like H.264, H.265 (HEVC), VP9, and AV1. Understand their strengths, weaknesses, and practical applications in different contexts (e.g., streaming, archiving, broadcast).

- Bitrate and Quality Control: Learn how to balance file size with video quality. Understand the relationship between bitrate, resolution, frame rate, and compression level. Practice adjusting these parameters to achieve optimal results for various target platforms and bandwidths.

- Container Formats: Become familiar with common container formats like MP4, MOV, AVI, and MKV. Understand their functionalities, compatibility with different players and devices, and how they impact the overall workflow.

- Hardware and Software Encoding/Decoding: Explore the role of hardware acceleration (e.g., NVENC, QuickSync) in improving encoding speed and efficiency. Gain familiarity with various software encoding/decoding tools and their features.

- Video Compression Optimization: Learn techniques for optimizing video compression for specific use cases, such as reducing file size without significant quality loss, improving streaming performance, or preparing videos for different platforms (e.g., mobile, web).

- Troubleshooting Compression Issues: Develop your problem-solving skills related to common video compression challenges, such as artifacts, banding, and poor playback performance. Be prepared to discuss strategies for diagnosing and resolving these issues.

- Metadata and other settings: Understand how to manipulate metadata within video files, such as adding or editing chapter markers, subtitles or other information that can improve user experience or searchability.
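For the bitrate and quality control topic above, it helps to be able to do the arithmetic quickly in an interview. The sketch below estimates file size from bitrate and duration, and computes bits per pixel as a rough way to compare how hard a given bitrate is being stretched across resolution and frame rate. The function names and example numbers are illustrative assumptions, and container overhead is ignored.

```python
def estimated_size_mb(video_kbps, audio_kbps, duration_s):
    """Approximate file size in megabytes (10^6 bytes), ignoring container overhead."""
    total_bits = (video_kbps + audio_kbps) * 1000 * duration_s
    return total_bits / 8 / 1_000_000

def bits_per_pixel(video_kbps, width, height, fps):
    """Average number of encoded bits available per pixel per frame."""
    return video_kbps * 1000 / (width * height * fps)

# Example: a 10-minute 1080p30 clip at 5000 kbps video and 128 kbps audio.
print(round(estimated_size_mb(5000, 128, 600), 1))
print(round(bits_per_pixel(5000, 1920, 1080, 30), 3))
```

Doubling the frame rate or the pixel count at the same bitrate halves the bits available per pixel, which is why bitrate targets have to be set together with resolution and frame rate rather than in isolation.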

Next Steps

Mastering video compression formats is crucial for career advancement in many multimedia-related fields. It showcases your technical expertise and problem-solving abilities, opening doors to exciting opportunities. To maximize your job prospects, create an ATS-friendly resume that highlights your skills and experience effectively. ResumeGemini is a trusted resource that can help you build a professional and impactful resume. Examples of resumes tailored to showcasing proficiency in video compression formats are available to guide your efforts. Investing time in crafting a strong resume will significantly enhance your chances of landing your dream job.
