The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to Robot Programming and Simulation interview questions is your ultimate resource, providing key insights and tips to help you ace your responses and stand out as a top candidate.

Questions Asked in Robot Programming and Simulation Interviews

Q 1. Explain the difference between forward and inverse kinematics.

Forward and inverse kinematics are fundamental concepts in robotics dealing with the relationship between a robot’s joint angles and its end-effector position and orientation. Think of it like this: forward kinematics is like knowing the recipe (joint angles) and figuring out the cake (end-effector pose), while inverse kinematics is the reverse – knowing you want a specific cake (end-effector pose) and needing to find the right recipe (joint angles).

Forward Kinematics: Given a set of joint angles, forward kinematics calculates the resulting position and orientation of the end-effector. This is relatively straightforward, involving a series of transformations (rotations and translations) along the robot’s kinematic chain. We use homogeneous transformation matrices to represent these transformations, multiplying them together to get the final pose.

Example: Consider a simple two-link robotic arm. Given joint angles θ1 and θ2, we can use trigonometric functions and the lengths of the links to calculate the (x, y) coordinates of the end-effector.
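Below is a minimal Python sketch of this calculation. It assumes a planar arm with link lengths `l1` and `l2`, with θ1 measured from the x-axis and θ2 measured relative to the first link; the function name is hypothetical. For a planar chain, multiplying the homogeneous transforms reduces to exactly this trigonometry.

```python
import math


def forward_kinematics_2link(theta1, theta2, l1, l2):
    """Return the (x, y) position of a planar two-link arm's end-effector.

    theta1 is measured from the x-axis, theta2 relative to link 1 (radians).
    """
    # Elbow position: the end of link 1
    x1 = l1 * math.cos(theta1)
    y1 = l1 * math.sin(theta1)
    # End-effector: add link 2, whose absolute angle is theta1 + theta2
    x = x1 + l2 * math.cos(theta1 + theta2)
    y = y1 + l2 * math.sin(theta1 + theta2)
    return x, y


# Both links 1 m long, shoulder at +90 degrees, elbow at -90 degrees
print(forward_kinematics_2link(math.pi / 2, -math.pi / 2, 1.0, 1.0))
```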

Inverse Kinematics: This is considerably more complex. Given the desired position and orientation of the end-effector, inverse kinematics finds the corresponding joint angles. This means solving a system of nonlinear equations, which can have multiple solutions, infinitely many (for redundant robots), or none at all (for unreachable poses). Closed-form solutions exist for some geometries, such as many six-axis arms with a spherical wrist. Otherwise, numerical methods such as Newton-Raphson iteration on the Jacobian are used.

Example: For the same two-link arm, knowing the desired (x, y) coordinates, we need to solve for θ1 and θ2. This involves solving trigonometric equations, potentially resulting in multiple solutions reflecting different arm configurations that reach the same point.
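For this particular arm the equations have a closed-form solution, so no numerical iteration is needed. The sketch below uses the same planar two-link conventions (θ1 from the x-axis, θ2 relative to link 1), and the function name is hypothetical. It applies the law of cosines to get θ2 and returns both elbow configurations. If the target lies outside the reachable annulus, it returns an empty list.

```python
import math


def inverse_kinematics_2link(x, y, l1, l2):
    """Return the (theta1, theta2) pairs that place a planar two-link arm's
    end-effector at (x, y), or an empty list if the point is unreachable."""
    # Law of cosines gives the cosine of the elbow angle
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if c2 < -1.0 or c2 > 1.0:
        return []  # target outside the reachable annulus
    solutions = []
    for sign in (1.0, -1.0):  # the two elbow configurations
        s2 = sign * math.sqrt(1.0 - c2 * c2)
        theta2 = math.atan2(s2, c2)
        # Shoulder angle: direction to the target minus the offset due to link 2
        theta1 = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
        solutions.append((theta1, theta2))
    return solutions


print(inverse_kinematics_2link(1.0, 1.0, 1.0, 1.0))  # two configurations
print(inverse_kinematics_2link(3.0, 0.0, 1.0, 1.0))  # unreachable: []
```

When the target lies exactly on the boundary of the annulus (c2 = ±1), the two solutions coincide. That is the singular, fully stretched or fully folded arm.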

Q 2. Describe different robot control architectures (e.g., joint space, Cartesian space).

Robot control architectures determine how we control the robot’s movement. The choice depends on the task and robot characteristics. Two common approaches are joint space control and Cartesian space control.

- Joint Space Control (or Joint-Based Control): This focuses on controlling each joint individually. We specify the desired trajectory in terms of joint angles or joint velocities. This is simpler to implement, especially for robots with many degrees of freedom. It’s suitable for tasks where precise joint movements are crucial.

- Cartesian Space Control (or Task-Based Control): This focuses on controlling the end-effector’s position and orientation directly in Cartesian coordinates (x, y, z, roll, pitch, yaw). This is more intuitive for tasks involving manipulating objects in the environment, as it directly addresses the task space. It often requires more complex calculations, including inverse kinematics.

Other architectures include hybrid approaches that combine elements of both. Another is operational space control, which formulates the robot’s dynamics directly in task space so that end-effector motion and contact forces with the environment can be controlled together. The selection depends on task specifics and robot capabilities.
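The sketch below illustrates the practical difference between the two approaches. A hypothetical planar two-link arm (1 m links) moves between the same two tool positions twice. The first run interpolates joint angles; the second interpolates the Cartesian position and solves inverse kinematics at every step. This is a kinematic illustration only, not a controller, and it assumes every intermediate point is reachable.

```python
import math

LINK1, LINK2 = 1.0, 1.0  # hypothetical link lengths (m)


def fk(q1, q2):
    """Planar two-link forward kinematics: joint angles -> tool (x, y)."""
    return (LINK1 * math.cos(q1) + LINK2 * math.cos(q1 + q2),
            LINK1 * math.sin(q1) + LINK2 * math.sin(q1 + q2))


def ik(x, y):
    """Closed-form IK for one elbow branch (sin q2 >= 0); assumes (x, y) is reachable."""
    c2 = (x * x + y * y - LINK1 ** 2 - LINK2 ** 2) / (2 * LINK1 * LINK2)
    q2 = math.atan2(math.sqrt(max(0.0, 1.0 - c2 * c2)), c2)
    q1 = math.atan2(y, x) - math.atan2(LINK2 * math.sin(q2), LINK1 + LINK2 * math.cos(q2))
    return q1, q2


def lerp(a, b, s):
    """Element-wise linear interpolation between two equal-length tuples."""
    return tuple(ai + s * (bi - ai) for ai, bi in zip(a, b))


def joint_space_path(q_start, q_goal, steps):
    """Joint-space move: interpolate joint angles, return the resulting tool positions."""
    return [fk(*lerp(q_start, q_goal, k / steps)) for k in range(steps + 1)]


def cartesian_path(p_start, p_goal, steps):
    """Cartesian move: interpolate the tool position, solve IK for each joint setpoint."""
    return [ik(*lerp(p_start, p_goal, k / steps)) for k in range(steps + 1)]


def max_deviation_from_line(points, a, b):
    """Largest perpendicular distance of the points from the line through a and b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    length = math.hypot(dx, dy)
    return max(abs(dy * (p[0] - a[0]) - dx * (p[1] - a[1])) / length for p in points)


p_a, p_b = (1.5, 0.5), (0.5, 1.5)
joint_pts = joint_space_path(ik(*p_a), ik(*p_b), 20)
cart_pts = [fk(*q) for q in cartesian_path(p_a, p_b, 20)]
print(max_deviation_from_line(joint_pts, p_a, p_b))  # about 0.17 m: the tool path bows outward
print(max_deviation_from_line(cart_pts, p_a, p_b))   # essentially 0: a straight line
```

Joint interpolation needs no inverse kinematics, but here the tool sweeps along an arc. The Cartesian version keeps the tool on the straight line at the cost of one IK solve per step.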

Q 3. How do you handle singularities in robot kinematics?

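Singularities are configurations in which the robot loses the ability to move its end-effector in one or more directions; mathematically, the Jacobian loses rank. Imagine a robotic arm that is fully extended. No combination of joint motions can move the tool further outward along the line of the arm, so that direction of motion is ‘locked.’ This loss of manipulability is a singularity.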

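Handling singularities is crucial because, near a singularity, even a small end-effector motion can demand very large joint velocities. The results are jerky motions, unpredictable behavior, and potential damage to the robot. Here’s how we address them: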

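- Singularity Detection: We continuously monitor the robot’s configuration using the Jacobian matrix (explained further in the next answer). For a square Jacobian, a determinant close to zero indicates a singularity. For a non-square Jacobian, the manipulability measure √det(J Jᵀ) or the smallest singular value is used instead.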

- Singularity Avoidance: Path planning algorithms can be designed to avoid configurations near singularities. This might involve adjusting the trajectory to steer clear of these problematic poses.

- Redundancy Resolution: For robots with redundant degrees of freedom (more joints than necessary for a task), we can exploit the redundancy to find alternative configurations that avoid singularities.

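- Pseudoinverse Method: Near a singularity, the pseudoinverse of the Jacobian gives a least-squares solution for the joint velocities. Its damped least-squares variant (the damped pseudoinverse) keeps joint velocities bounded, at the cost of a small error in the commanded end-effector motion.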

Essentially, the goal is to either avoid singularities altogether or manage them gracefully to ensure smooth and safe robot operation.
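The sketch below illustrates detection and the damped pseudoinverse on a planar two-link arm with hypothetical 1 m links. It uses Yoshikawa’s manipulability measure √det(J Jᵀ), which also works for non-square Jacobians. It then compares the plain inverse with a damped least-squares solve while the arm is almost fully stretched. The damping value 0.05 is an arbitrary illustrative choice; in practice it is often varied with the distance to the singularity.

```python
import numpy as np

LINK1, LINK2 = 1.0, 1.0  # hypothetical link lengths (m)


def jacobian(q1, q2):
    """Analytic 2x2 Jacobian of the planar two-link arm's (x, y) position."""
    s1, c1 = np.sin(q1), np.cos(q1)
    s12, c12 = np.sin(q1 + q2), np.cos(q1 + q2)
    return np.array([[-LINK1 * s1 - LINK2 * s12, -LINK2 * s12],
                     [LINK1 * c1 + LINK2 * c12, LINK2 * c12]])


def manipulability(J):
    """Yoshikawa's measure sqrt(det(J J^T)); approaches 0 near a singularity."""
    return float(np.sqrt(max(np.linalg.det(J @ J.T), 0.0)))


def dls_joint_velocity(J, x_dot, damping=0.05):
    """Damped least squares: q_dot = J^T (J J^T + damping^2 I)^-1 x_dot."""
    m = J.shape[0]
    return J.T @ np.linalg.solve(J @ J.T + damping ** 2 * np.eye(m), x_dot)


# Nearly outstretched arm: the elbow is bent by only 0.001 rad
J = jacobian(0.0, 1e-3)
x_dot = np.array([0.1, 0.0])  # 0.1 m/s outward, the direction being lost
print(manipulability(J))                              # about 0.001
print(np.linalg.norm(np.linalg.solve(J, x_dot)))      # plain inverse: over 200 rad/s
print(np.linalg.norm(dls_joint_velocity(J, x_dot)))   # damped: well under 1 rad/s
```

The damped solution keeps joint speeds small. In exchange, the arm barely moves in the requested direction, which is usually the safer outcome.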

Q 4. What are Jacobian matrices and their use in robotics?

The Jacobian matrix is a fundamental tool in robotics that relates the joint velocities to the end-effector velocity (ẋ = J(q) q̇). Think of it as a configuration-dependent mapping between the joint space and the Cartesian space. Element (i, j) describes how much the velocity of joint j contributes to component i of the end-effector velocity.

Uses of Jacobian matrices include:

- Inverse Kinematics: Solving the inverse kinematics problem often involves iterative methods that use the Jacobian to update the joint angles based on the error between the current and desired end-effector pose.

- Velocity Control: It enables the conversion of desired end-effector velocities into required joint velocities, allowing for precise control of the robot’s movement.

- Force/Torque Control: Through its transpose, it maps forces and torques applied at the end-effector to the corresponding joint torques (τ = Jᵀ F).

- Singularity Analysis: The determinant of the Jacobian matrix is used to detect singularities, as mentioned earlier.

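Example: A 6-DOF robotic arm’s Jacobian is a 6x6 matrix. In the common geometric form, the first three rows correspond to the end-effector’s linear velocity components (vx, vy, vz) and the last three to its angular velocity components (ωx, ωy, ωz). Each column corresponds to one joint velocity. (An analytical Jacobian uses roll, pitch, and yaw rates instead of angular velocity.)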
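A 6x6 Jacobian is hard to show compactly, so the sketch below uses the 2x2 position Jacobian of a planar two-link arm. The link lengths of 1.0 m and 0.8 m, the joint state, and the force are hypothetical. The sketch shows the velocity mapping ẋ = J q̇ and the force mapping τ = Jᵀ F. It also runs a finite-difference check of the analytic Jacobian, a useful test whenever a Jacobian is derived by hand.

```python
import numpy as np

LINK1, LINK2 = 1.0, 0.8  # hypothetical link lengths (m)


def fk(q):
    """End-effector (x, y) of a planar two-link arm."""
    return np.array([LINK1 * np.cos(q[0]) + LINK2 * np.cos(q[0] + q[1]),
                     LINK1 * np.sin(q[0]) + LINK2 * np.sin(q[0] + q[1])])


def jacobian_analytic(q):
    """J[i, j] = d(x_i)/d(q_j): column j is the tool velocity per unit speed of joint j."""
    s1, c1 = np.sin(q[0]), np.cos(q[0])
    s12, c12 = np.sin(q[0] + q[1]), np.cos(q[0] + q[1])
    return np.array([[-LINK1 * s1 - LINK2 * s12, -LINK2 * s12],
                     [LINK1 * c1 + LINK2 * c12, LINK2 * c12]])


def jacobian_numeric(q, eps=1e-6):
    """Central-difference approximation of the Jacobian."""
    J_num = np.zeros((2, len(q)))
    for j in range(len(q)):
        dq = np.zeros(len(q))
        dq[j] = eps
        J_num[:, j] = (fk(q + dq) - fk(q - dq)) / (2 * eps)
    return J_num


q = np.array([0.3, 0.9])        # joint angles (rad)
q_dot = np.array([0.5, -0.2])   # joint velocities (rad/s)
J = jacobian_analytic(q)
print(J @ q_dot)                # end-effector velocity (m/s)

F = np.array([0.0, -10.0])      # force the tool should exert: 10 N downward
print(J.T @ F)                  # static joint torques (N*m), ignoring gravity
print(np.allclose(J, jacobian_numeric(q), atol=1e-6))
```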

Q 5. Explain different robot programming languages (e.g., RAPID, ROS).

Robot programming languages vary in their level of abstraction and target applications.

- RAPID (ABB): A proprietary language used for programming ABB robots. It’s a high-level language with features for motion control, process synchronization, and complex logic. It offers excellent support for the specific functionalities of ABB robots.

- ROS (Robot Operating System): Not a language itself, but a flexible framework providing tools and libraries for building robot applications. It uses C++, Python, and other languages to create modular and reusable components. It’s highly versatile and used in diverse research and industrial settings.

- URScript (Universal Robots): Another proprietary language designed for Universal Robots. Known for its relative simplicity and ease of use, especially suitable for less complex automation tasks.

- KRL (KUKA Robot Language): Similar to RAPID, this is KUKA’s proprietary language, optimized for programming its robots.

The choice of language depends on the robot manufacturer, the complexity of the application, and the programmer’s familiarity with the language and ecosystem.

Q 6. Describe your experience with robot path planning algorithms (e.g., A*, RRT).

Robot path planning involves finding a collision-free path for a robot to move from a start to a goal configuration. Algorithms like A* and RRT are frequently used.

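- A*: A graph search algorithm that finds the lowest-cost path by expanding nodes in order of f = g + h. Here g is the cost accumulated so far and h is a heuristic estimate of the remaining cost to the goal. With an admissible heuristic (one that never overestimates), the returned path is optimal. A* is effective in low-dimensional, discretized environments such as grid maps. However, the number of cells grows exponentially with dimension, so it struggles in high-dimensional configuration spaces and with complex obstacles.

- RRT (Rapidly-exploring Random Tree): A sampling-based algorithm that builds a tree of possible robot configurations by randomly sampling the configuration space. It’s better suited for complex environments and high-dimensional spaces because it doesn’t need to discretize or explicitly explore the whole space. It is probabilistically complete but does not return optimal paths; its variant RRT* converges toward an optimal path as sampling continues. It’s particularly useful for robots with many degrees of freedom.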

My experience includes using both algorithms, selecting the appropriate one based on the complexity of the environment and the robot’s characteristics. For instance, A* might be preferable for a simple pick-and-place task in a structured warehouse, whereas RRT might be a better choice for navigating a robot through a cluttered, unstructured outdoor environment.
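Below is a compact A* sketch on a 4-connected occupancy grid. It assumes unit move costs and a Manhattan-distance heuristic, which is admissible on this grid, so the returned path is a shortest one. The grid and function name are hypothetical. Practical planners typically add diagonal moves, costmaps, and obstacle inflation.

```python
import heapq


def astar(grid, start, goal):
    """A* on a 4-connected occupancy grid (0 = free, 1 = obstacle).

    Returns the list of (row, col) cells from start to goal, or None.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: never overestimates for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk parent links back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale entry: a cheaper route to this cell was found later
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = new_g
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (new_g + h((nr, nc)), new_g, (nr, nc)))
    return None  # goal unreachable


grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
print(astar(grid, (0, 0), (2, 0)))
# [(0, 0), (0, 1), (0, 2), (0, 3), (1, 3), (2, 3), (2, 2), (2, 1), (2, 0)]
```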

Q 7. How do you ensure collision avoidance in robot programming?

Collision avoidance is paramount in robot programming to prevent damage to the robot, the environment, or personnel. Several strategies are employed:

- Geometric Modeling: Creating accurate 3D models of the robot, its workspace, and obstacles is essential. This allows for collision detection algorithms to function effectively.

- Collision Detection Algorithms: These algorithms identify potential collisions. Examples include bounding volume hierarchies (BVHs) and algorithms based on distance computations. Exact checks between detailed meshes are computationally expensive, so efficient data structures are crucial. BVHs, for instance, discard most non-colliding pairs with cheap bounding-volume tests before any exact check is run.

- Path Planning with Collision Avoidance: Incorporating collision avoidance directly into the path planning algorithm, as mentioned earlier, ensures the generated path is collision-free. Sampling-based planners such as RRT and its asymptotically optimal variant RRT* call a collision checker on every candidate configuration and edge, so only collision-free segments enter the tree.

- Safety Sensors: Physical sensors like laser scanners, proximity sensors, and cameras can provide real-time information about the robot’s surroundings, enabling reactive collision avoidance. This allows for unexpected obstacles to be handled.

- Redundancy and Safe Configurations: If possible, employing robotic designs with redundancy (extra degrees of freedom) allows for safer maneuvers to circumvent obstacles.

A layered approach, combining geometric modeling, collision detection, and safety sensors, is often the most robust strategy for ensuring collision avoidance.
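The sketch below shows the two-stage idea behind many collision checkers on a simple case: a planar two-link arm, modeled as capsules (line segments with a radius), checked against circular obstacles. A cheap broad-phase test first discards obstacles beyond the arm’s reach. An exact point-to-segment distance test then handles the remaining obstacles. It is a toy stand-in for a full BVH over meshes. The function names and the 0.05 m link radius are hypothetical.

```python
import math


def point_segment_distance(p, a, b):
    """Shortest distance from point p to segment a-b (2-D tuples)."""
    ax, ay = a
    dx, dy = b[0] - ax, b[1] - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return math.hypot(p[0] - ax, p[1] - ay)
    # Project p onto the segment, clamping the projection to the endpoints
    t = max(0.0, min(1.0, ((p[0] - ax) * dx + (p[1] - ay) * dy) / seg_len_sq))
    return math.hypot(p[0] - (ax + t * dx), p[1] - (ay + t * dy))


def arm_in_collision(q1, q2, l1, l2, obstacles, link_radius=0.05):
    """Check a planar two-link arm (links as capsules) against circles (cx, cy, r)."""
    base = (0.0, 0.0)
    elbow = (l1 * math.cos(q1), l1 * math.sin(q1))
    tool = (elbow[0] + l2 * math.cos(q1 + q2), elbow[1] + l2 * math.sin(q1 + q2))
    for cx, cy, r in obstacles:
        # Broad phase: an obstacle beyond the arm's total reach cannot be hit
        if math.hypot(cx, cy) - r > l1 + l2 + link_radius:
            continue
        # Narrow phase: exact capsule-versus-circle test for each link
        for a, b in ((base, elbow), (elbow, tool)):
            if point_segment_distance((cx, cy), a, b) < r + link_radius:
                return True
    return False


obstacles = [(1.0, 0.1, 0.1), (5.0, 5.0, 1.0)]
print(arm_in_collision(0.0, 0.0, 1.0, 1.0, obstacles))          # True: arm along the x-axis
print(arm_in_collision(math.pi / 2, 0.0, 1.0, 1.0, obstacles))  # False: arm along the y-axis
```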

Q 8. Explain the concept of workspace and its relevance to robot design.

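A robot’s workspace is the three-dimensional volume its end-effector can reach, as determined by its link lengths, joint arrangement, and joint limits. Think of it as the robot’s ‘reach.’ A distinction is often made between the reachable workspace (points reachable in at least one orientation) and the smaller dexterous workspace (points reachable in any orientation). Obstacles and self-collision further restrict the usable part of the workspace. Understanding and defining the workspace is crucial for robot design because it dictates the robot’s capabilities and limitations. A workspace that is poorly matched to the task can lead to inefficiencies, reduced productivity, and even safety hazards.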

Relevance to Robot Design: The workspace directly influences the robot’s arm length, joint configurations, and the overall design. For example, a robot designed for painting car bodies needs a large workspace to reach all parts of the vehicle, whereas a robot performing micro-surgery requires a smaller, more precise workspace. The shape and volume of the workspace also affect the robot’s ability to perform certain tasks. A robot with a complex, non-convex workspace (one with concave regions) might struggle with certain orientations or trajectories. This is often addressed during robot design through kinematic analysis and simulation to optimize the workspace for the specific application.

Example: A robotic arm used in welding a car chassis requires a large workspace that fully encompasses the chassis’s dimensions. Conversely, a pick-and-place robot in a factory setting might only need a small, defined workspace around a conveyor belt.
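The workspace of a simple arm can be estimated numerically by sampling joint angles within their limits and running forward kinematics on each sample. The sketch assumes a planar two-link arm with hypothetical link lengths (1.0 m and 0.6 m) and joint limits. Because r² = l1² + l2² + 2·l1·l2·cos θ2, the elbow limit alone sets the inner and outer radii in this case.

```python
import math
import random


def sample_workspace(l1, l2, q1_limits, q2_limits, n=20000, seed=0):
    """Estimate a planar two-link arm's reachable workspace by sampling joints.

    Joint limits are (min, max) in radians. Returns the sampled (x, y) points.
    """
    rng = random.Random(seed)
    points = []
    for _ in range(n):
        q1 = rng.uniform(*q1_limits)
        q2 = rng.uniform(*q2_limits)
        points.append((l1 * math.cos(q1) + l2 * math.cos(q1 + q2),
                       l1 * math.sin(q1) + l2 * math.sin(q1 + q2)))
    return points


pts = sample_workspace(1.0, 0.6, (-math.pi / 2, math.pi / 2), (-2.5, 2.5))
radii = [math.hypot(x, y) for x, y in pts]
# Analytic bounds from r^2 = l1^2 + l2^2 + 2*l1*l2*cos(q2) with |q2| <= 2.5
r_inner = math.sqrt(1.0 + 0.36 + 2 * 1.0 * 0.6 * math.cos(2.5))
r_outer = 1.0 + 0.6
print(min(radii), max(radii), r_inner, r_outer)
```

With full elbow rotation, the inner radius would be |l1 - l2| = 0.4 m. The ±2.5 rad elbow limit raises it to about 0.63 m, leaving a hole in the middle of the workspace.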

Q 9. What are the different types of robot sensors and their applications?

Robots utilize various sensors to perceive their environment and interact with it effectively. These sensors provide crucial feedback for control systems and enable robots to perform complex tasks. Here are some common types:

- Proximity Sensors: Detect the presence of objects without physical contact. Ultrasonic, infrared, and capacitive sensors are examples. Applications include obstacle avoidance, object detection for pick-and-place operations, and safety systems.

- Force/Torque Sensors: Measure the forces and torques applied to the robot’s end-effector. These are essential for delicate tasks requiring precise control, such as assembly or surgery. They allow the robot to adapt to unexpected forces and prevent damage.

- Vision Sensors (Cameras): Provide visual information about the environment. These can range from simple monochrome cameras to sophisticated 3D cameras. Applications include object recognition, navigation, inspection, and quality control.

- Laser Scanners (LIDAR): Measure distances by timing emitted laser pulses (or by phase shift), producing a 2D scan or a 3D point cloud of the surroundings. Used extensively in autonomous navigation and mapping, for example in self-driving vehicles and mobile robots.

- Inertial Measurement Units (IMUs): Measure linear acceleration and angular velocity. Useful for robot localization and motion tracking, especially in mobile robots. Because integrating these measurements accumulates drift, IMU data is usually fused with other sources such as wheel odometry, vision, or GNSS.

- Tactile Sensors: Provide information about contact pressure and surface texture. These are crucial for tasks such as grasping and manipulation of delicate objects.

The choice of sensors depends on the specific application. For example, a robot used in a hazardous environment might require robust proximity sensors and vision systems for obstacle avoidance, whereas a surgical robot would necessitate force/torque sensors and high-resolution vision systems for precise control.
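A 2D laser scan typically arrives as a list of ranges, one per beam at evenly spaced angles. Converting it to Cartesian points in the sensor frame is the first step toward mapping or obstacle detection. The parameter names below mirror fields of the ROS `sensor_msgs/LaserScan` message, but the function itself is a hypothetical, ROS-independent sketch.

```python
import math


def scan_to_points(ranges, angle_min, angle_increment, range_min, range_max):
    """Convert a 2-D laser scan to (x, y) points in the sensor frame.

    Beam i points at angle_min + i * angle_increment. Readings outside
    [range_min, range_max] (including inf and NaN) are dropped.
    """
    points = []
    for i, r in enumerate(ranges):
        if not (range_min <= r <= range_max):
            continue  # no return, or a reading outside the sensor's valid range
        angle = angle_min + i * angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


# Three beams at -90, 0 and +90 degrees; the middle beam got no return
print(scan_to_points([2.0, float("inf"), 1.0], -math.pi / 2, math.pi / 2, 0.1, 30.0))
```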

Q 10. Describe your experience with robot vision systems and image processing.

I have extensive experience with robot vision systems and image processing, encompassing various aspects from camera calibration and image acquisition to object recognition and scene understanding. In past projects, I’ve utilized OpenCV and ROS (Robot Operating System) to implement vision-guided robotic systems. This involved designing and implementing algorithms for tasks such as feature extraction, object detection (using techniques like SIFT, SURF, and deep learning-based object detectors), image segmentation, and pose estimation. I’ve also worked with different types of cameras, including monochrome, color, and depth cameras, and integrated them with robot control systems.

For example, in one project, we developed a robotic system for automated bin picking. This involved using a 3D camera to capture images of a bin containing randomly oriented parts. We then developed image processing algorithms to identify the location and orientation of each part, and then used this information to plan the robot’s movements to pick the parts up. This required careful calibration of the camera and robot, and also the use of robust object recognition algorithms that were tolerant to variations in lighting and part orientation.

Another project involved using a vision system to guide a robot arm in assembling electronic components. The vision system ensured accurate placement of the components, which was critical for the functionality of the assembled product.

Q 11. How do you calibrate a robot arm?

Robot arm calibration is the process of precisely determining the relationship between the robot’s joint angles and the position and orientation of its end-effector in the Cartesian coordinate system. Accurate calibration is vital for precise robot control and accurate task execution. This is typically achieved through a multi-step process:

- Geometric Calibration: This involves determining the robot’s kinematic parameters, such as link lengths, joint offsets, and the location of each joint. Conventions such as Denavit-Hartenberg (DH) parameters are commonly used to model the robot’s geometry.

- Measurement: The robot’s position and orientation are measured at various points in its workspace using a high-precision measurement system, such as a laser tracker or a CMM (Coordinate Measuring Machine). This establishes the ground truth against which the model is compared.

- Parameter Optimization: A calibration algorithm adjusts the robot’s kinematic parameters to minimize the difference between the measured and model-predicted positions. This often involves iterative optimization techniques, such as least-squares methods.

- Validation: The calibrated robot’s accuracy is verified by measuring additional points, ideally ones not used during parameter identification, and checking that the residual errors are small and consistent.
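The parameter-optimization step can be illustrated with a deliberately simple model: a planar two-link arm whose only unknowns are the two link lengths. Because the tool position is linear in the link lengths, a single linear least-squares solve recovers them from measured positions. The data below is synthetic; the true lengths, noise level, and pose count are made up. Real calibration identifies many more parameters, such as joint offsets, whose model is nonlinear and is usually solved iteratively.

```python
import numpy as np


def identify_link_lengths(joint_angles, measured_xy):
    """Least-squares estimate of (l1, l2) for a planar two-link arm.

    joint_angles: (N, 2) array of recorded (q1, q2).
    measured_xy: (N, 2) array of tool positions from an external measurement
    system. The model x = l1*cos(q1) + l2*cos(q1+q2),
    y = l1*sin(q1) + l2*sin(q1+q2) is linear in (l1, l2).
    """
    q1, q2 = joint_angles[:, 0], joint_angles[:, 1]
    # Stack the x and y equations; each row holds the coefficients of (l1, l2)
    A = np.vstack([
        np.column_stack([np.cos(q1), np.cos(q1 + q2)]),
        np.column_stack([np.sin(q1), np.sin(q1 + q2)]),
    ])
    b = np.concatenate([measured_xy[:, 0], measured_xy[:, 1]])
    params, *_ = np.linalg.lstsq(A, b, rcond=None)
    return params


# Synthetic data: true lengths differ slightly from nominal 0.5 m and 0.4 m
rng = np.random.default_rng(1)
true_l1, true_l2 = 0.503, 0.398
q = rng.uniform(-np.pi, np.pi, size=(50, 2))
xy = np.column_stack([
    true_l1 * np.cos(q[:, 0]) + true_l2 * np.cos(q[:, 0] + q[:, 1]),
    true_l1 * np.sin(q[:, 0]) + true_l2 * np.sin(q[:, 0] + q[:, 1]),
]) + rng.normal(scale=1e-4, size=(50, 2))  # simulated measurement noise (m)
print(identify_link_lengths(q, xy))  # close to [0.503, 0.398]
```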

Software Tools: Specialized software packages are frequently employed for robot calibration, as they automate the process and aid in parameter optimization.

Real-world Implications: Inaccurate calibration leads to positioning errors, potentially resulting in collisions, defective products, or failed tasks. Accurate calibration is essential for reliable automation in applications like manufacturing, assembly, and surgery.

Q 12. Explain different types of robot end-effectors and their selection criteria.

The end-effector is the robotic equivalent of a hand or a tool. It is the part of the robot that interacts directly with the environment. Different tasks require different end-effectors. Here are some common types:

- Grippers: Mechanical devices used to grasp and manipulate objects. These range from simple two-finger grippers to more complex multi-fingered hands with dexterity capabilities.

- Vacuum Cups: Often used to pick up flat or smooth objects. They are particularly effective for handling delicate or fragile items.

- Magnetic Grippers: These are used to pick up ferromagnetic objects. They are commonly used in material handling.

- Welding Torches: Used for welding operations, requiring precise control and high heat resistance.

- Spray Guns: Used for painting and coating applications.

- Tools: Robots can use a wide range of tools such as screwdrivers, drills, and saws, depending on the required task.

Selection Criteria: The choice of end-effector depends on factors such as the object’s shape, size, weight, material properties, and the task requirements. Other considerations include the required force and precision, as well as the need for adaptability and dexterity. A delicate assembly task might require a multi-fingered gripper with force sensors, whereas a heavy-duty material handling task might necessitate a powerful vacuum gripper.
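Part of end-effector selection is simple arithmetic. For vacuum grippers, a common first-pass estimate treats each cup’s theoretical holding force as the pressure difference times the cup area, then applies a safety factor. The sketch below assumes a horizontal part lifted vertically. The function name, the safety factor of 2, and the example numbers are hypothetical, and the manufacturer’s sizing data should take precedence.

```python
import math


def vacuum_cups_needed(mass_kg, cup_diameter_m, vacuum_kpa,
                       safety_factor=2.0, accel_ms2=0.0):
    """Rough count of vacuum cups for a horizontal part lifted vertically.

    Simplified static model: each cup holds F = dP * A, where dP is the
    pressure difference to ambient (Pa) and A the cup area (m^2). Ignores
    cup deformation, leakage, porous parts and sideways (shear) loads.
    """
    area = math.pi * cup_diameter_m ** 2 / 4.0
    force_per_cup = vacuum_kpa * 1000.0 * area                # N per cup
    required = safety_factor * mass_kg * (9.81 + accel_ms2)   # N in total
    return math.ceil(required / force_per_cup)


# 5 kg sheet, 40 mm cups, 60 kPa below ambient, lifted at 5 m/s^2
print(vacuum_cups_needed(5.0, 0.040, 60.0, safety_factor=2.0, accel_ms2=5.0))  # 2
```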

Q 13. What is a robot teach pendant and how is it used?

A robot teach pendant is a handheld device that allows a human operator to manually guide a robot through a sequence of movements, thereby teaching it a specific task. It is essentially a control panel with buttons, joysticks, and a display screen used to interact with the robot controller. Think of it as a user-friendly interface for programming the robot without needing complex programming languages.

How it’s used: The operator moves the robot arm or end-effector to the desired positions and orientations, and the teach pendant records these positions and orientations. The sequence of recorded movements forms a program that the robot can later execute autonomously. Many teach pendants also allow the operator to adjust parameters such as speed, acceleration, and force limits during the programming process. This allows for fine-tuning of the robot’s movements for optimal performance. Modern teach pendants often incorporate advanced features such as graphical programming interfaces, which can make the process easier and more intuitive.

Example: In a manufacturing setting, a technician might use a teach pendant to guide a robotic arm through the steps involved in assembling a product. The pendant records the robot’s movements, creating a program that the robot can repeat consistently and accurately, increasing productivity and reducing manual labor.
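When the recorded program runs, the controller has to generate smooth motion between the taught points. The sketch below shows one simple way to do this in joint space, using a cubic time scaling so that each segment starts and ends at rest. The function name, segment time, and waypoints are hypothetical. Real controllers provide several motion types (for example joint and linear moves) and can blend through points instead of stopping at each one.

```python
def replay_taught_points(waypoints, segment_time, dt):
    """Generate joint setpoints that visit taught waypoints in order.

    waypoints: list of joint-angle tuples, as recorded with the pendant.
    Each segment follows s(u) = 3u^2 - 2u^3 with u = t / segment_time, so the
    joints start and stop every segment with zero velocity.
    """
    setpoints = [tuple(waypoints[0])]
    steps = max(1, round(segment_time / dt))
    for start, goal in zip(waypoints, waypoints[1:]):
        for k in range(1, steps + 1):
            u = k / steps
            s = 3 * u ** 2 - 2 * u ** 3  # cubic time scaling, 0 -> 1
            setpoints.append(tuple(a + s * (b - a) for a, b in zip(start, goal)))
    return setpoints


taught = [(0.0, 0.0, 0.0), (0.5, -0.3, 1.0), (1.0, 0.2, 0.0)]  # radians
path = replay_taught_points(taught, segment_time=2.0, dt=0.5)
print(len(path), path[-1])
```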

Q 14. Describe your experience with robot simulation software (e.g., Gazebo, V-REP).

I have substantial experience using robot simulation software, primarily Gazebo and V-REP (CoppeliaSim). These tools are indispensable for developing, testing, and debugging robot control algorithms without the need for physical hardware. This reduces development time, costs, and risks. My experience encompasses various aspects, including:

- Robot Modeling: Creating accurate digital models of robots, including their physical dimensions, kinematics, and dynamics. This involves importing CAD models or using built-in modeling tools.

- Environment Creation: Designing and implementing realistic simulations of the robot’s operational environment. This includes obstacles, objects, and other elements that the robot may interact with.

- Sensor Simulation: Simulating the behavior of various sensors, such as cameras, laser scanners, and force/torque sensors, to provide realistic sensory data to the robot control algorithms. This is crucial for testing the algorithms’ robustness before they face real-world conditions.

- Algorithm Development and Testing: Developing and testing robot control algorithms in a simulated environment before deploying them on physical robots. This helps to identify and correct errors early in the development process.

- Path Planning and Trajectory Generation: Simulating robot movements to ensure smooth and collision-free trajectories.

Example: In a recent project, I used Gazebo to simulate a mobile robot navigating a warehouse environment. This allowed me to thoroughly test the robot’s navigation algorithms in a variety of scenarios before deploying it in the actual warehouse. The simulation allowed for testing different algorithms in a controlled environment and identifying potential problems without risking damage to the physical robot. The visualization capabilities of these platforms are also extremely useful for debugging.

Q 15. How do you validate and verify robot programs?

Validating and verifying robot programs is crucial for ensuring safe and reliable operation. It’s a two-step process:

- Verification: This confirms that the program is built correctly, that is, that it conforms to its specification. We achieve this largely through simulation, using software like RobotStudio or Gazebo, which lets us test the program in a virtual environment before deploying it to the physical robot. This avoids costly errors and potential harm to equipment or personnel. For instance, we can simulate a pick-and-place operation, verifying that the robot’s movements are accurate and collision-free.

- Validation: This step confirms that the program meets the application’s actual requirements in its real operating environment. We deploy the program to the physical robot in a controlled environment and measure the robot’s accuracy, speed, and repeatability against real-world expectations. Any discrepancies between the simulated and real-world performance are analyzed and addressed. For example, we might compare the payload the robot actually handles with the design specifications.

We use a combination of techniques including unit testing, integration testing, and system testing. We may also employ formal methods verification to mathematically prove the correctness of certain program sections. Thorough documentation is critical throughout the entire process, enabling easy troubleshooting and future modifications.

Q 16. Explain the importance of safety protocols in robot programming and operation.

Safety is paramount in robotics. A single malfunction can have devastating consequences. Safety protocols are implemented at multiple levels:

- Hardware Safety: This involves using safety-rated sensors (e.g., light curtains, emergency stops), actuators, and controllers that meet relevant safety standards (like ISO 10218). These components automatically halt robot operation in hazardous situations.

- Software Safety: This includes programming safety features like speed and torque limits, collision detection and avoidance algorithms, and zone monitoring. We meticulously design our code to incorporate checks and fail-safes to mitigate potential risks. For example, a pick-and-place routine might incorporate a force sensor that stops the operation if excessive resistance is detected.

- Operational Procedures: Clear and concise operational procedures, including lockout/tagout procedures for maintenance, are crucial. Operators need to be properly trained on safe robot operation and emergency response protocols.

- Risk Assessment: A thorough risk assessment is performed to identify potential hazards and implement appropriate mitigation strategies. This involves considering various scenarios and determining the likelihood and severity of potential accidents.

Neglecting safety protocols can lead to injuries, equipment damage, and costly downtime. Prioritizing safety is not just a best practice; it’s a legal and ethical obligation.
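The software-level checks described above, zone monitoring and a force limit, can be sketched as a function that scales the programmed speed. All thresholds are hypothetical placeholders that would come from the risk assessment. This only illustrates the decision logic. Protective stops and speed monitoring relied on for personnel safety must be implemented in the safety-rated parts of the control system, not in ordinary application code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SafetyLimits:
    # Hypothetical placeholder values; real ones come from the risk assessment
    stop_distance_m: float = 0.5
    slow_distance_m: float = 1.5
    reduced_speed_scale: float = 0.25
    max_contact_force_n: float = 50.0


DEFAULT_LIMITS = SafetyLimits()


def speed_scale(separation_m, contact_force_n, limits=DEFAULT_LIMITS):
    """Return the fraction of programmed speed the motion may use (0.0 to 1.0)."""
    if contact_force_n > limits.max_contact_force_n:
        return 0.0  # excessive resistance: stop, as in the pick-and-place example
    if separation_m <= limits.stop_distance_m:
        return 0.0  # a person is inside the protective zone
    if separation_m <= limits.slow_distance_m:
        return limits.reduced_speed_scale  # a person is inside the warning zone
    return 1.0      # clear: full programmed speed


print(speed_scale(3.0, 5.0), speed_scale(1.0, 5.0), speed_scale(0.3, 5.0))
```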

Q 17. Describe your experience with industrial communication protocols (e.g., ProfiNet, EtherCAT).

I have extensive experience with industrial communication protocols, including ProfiNet and EtherCAT. These protocols are vital for integrating robots into complex automation systems.

- ProfiNet: I’ve used ProfiNet in various projects to connect robots, PLCs (Programmable Logic Controllers), and other devices in a deterministic and reliable network. Its ability to handle both real-time control and non-real-time data exchange is invaluable in sophisticated industrial environments. I’m familiar with its configuration and troubleshooting, using tools like TIA Portal (Totally Integrated Automation Portal).

- EtherCAT: I’ve also worked extensively with EtherCAT, renowned for its high speed and low latency. Its distributed clocking capability makes it ideal for synchronizing multiple robots or axes in high-precision applications. I’ve utilized its capabilities in applications demanding precise coordination, like multi-robot assembly lines.

Understanding these protocols, including their configuration, diagnostics, and potential limitations, is essential for effective robot integration and system-wide optimization.

Q 18. How do you troubleshoot robot malfunctions?

Troubleshooting robot malfunctions requires a systematic approach. I typically follow these steps:

- Identify the Problem: Carefully observe the robot’s behavior and note any error messages displayed on the controller. Document the exact nature of the malfunction.

- Check the Obvious: Examine the robot’s physical condition for any mechanical issues such as loose connections, damaged cables, or obstructions. Ensure power supply is stable and communication lines are intact.

- Review Program Code: Scrutinize the robot program for logical errors, syntax issues, or unexpected input values. Use debugging tools like breakpoints to step through the code and identify problem areas.

- Consult Logs and Diagnostics: Examine the robot controller’s logs for error messages and diagnostic information. These logs often provide crucial clues about the cause of the malfunction.

- Check Sensors and Actuators: Verify the functionality of sensors and actuators, ensuring they are correctly calibrated and functioning as expected. This might involve testing sensor outputs or replacing faulty components.

- Consult Documentation and Support: Refer to the robot manufacturer’s documentation and contact technical support if needed. They may have encountered similar problems before and can provide valuable insights.

For example, if the robot exhibits erratic movements, we would check for issues in the motor drivers, encoder readings, or the robot’s kinematic model used in the program. A systematic approach allows us to pinpoint the root cause and implement an effective solution.

Q 19. What are the advantages and disadvantages of different robot manipulators?

Different robot manipulators (arms) have various advantages and disadvantages depending on the application:

- Articulated Arms (Revolute): These are the most common type, offering high dexterity and reach. They’re highly versatile but their complex kinematics can make programming more challenging.

- Cartesian Robots (Gantry): These robots move along three linear axes, ideal for tasks requiring precise positioning in a defined workspace. They’re simpler to program but have limited reach and dexterity.

- SCARA Robots (Selective Compliance Assembly Robot Arm): These are well-suited for assembly tasks requiring high speed and accuracy in a largely planar workspace, combining horizontal X-Y motion with a vertical Z stroke and a wrist rotation. They are less versatile than articulated arms.

- Delta Robots (Parallel): These are incredibly fast and precise, particularly well-suited for pick-and-place applications with high throughput. They have a limited workspace and typically a low payload.

The choice of manipulator depends on factors like the application’s workspace, required dexterity, speed, payload capacity, and the level of precision needed. For example, a delta robot would be ideal for high-speed packaging but unsuitable for welding due to its limited reach.

Q 20. Explain your experience with robot dynamics and control.

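Robot dynamics and control are fundamental aspects of robotics. Understanding robot dynamics involves modeling how joint torques produce motion, including inertia, Coriolis and centrifugal effects, friction, and gravity. The rigid-body model is commonly written as M(q)q̈ + C(q, q̇)q̇ + g(q) = τ, with friction added as a separate term. This knowledge is essential for designing accurate and efficient control algorithms.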

My experience includes working with different control techniques such as:

- PID Control (Proportional-Integral-Derivative): This classic control method is widely used for regulating robot joint positions and velocities. I have experience tuning PID controllers to balance stability, responsiveness, and overshoot.

- Advanced Control Techniques: I have experience implementing more advanced control algorithms like adaptive control, which adapts to changing robot dynamics, and force/torque control, enabling robots to interact with their environment in a compliant manner.

For instance, in a collaborative robot application, we would use force/torque control to enable safe interaction between the robot and a human worker. Precise modeling of robot dynamics is crucial for accurate trajectory tracking, for planning motions that respect torque limits, and for predicting stopping distances in collision avoidance.
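The sketch below shows a PID position loop on a single simulated joint. The joint is modeled as inertia × acceleration = torque - damping × velocity, with no gravity or friction. The inertia, damping, gains, and torque limit are hypothetical values chosen for illustration. Production joint controllers typically add derivative filtering, anti-windup, and feedforward terms, which this sketch omits apart from a simple output clamp.

```python
class PID:
    """Textbook PID controller with a clamped output (a simple actuator limit)."""

    def __init__(self, kp, ki, kd, out_limit):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_limit = out_limit
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement, dt):
        error = setpoint - measurement
        # Skip the derivative on the first call to avoid a spike from prev_error = 0
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        self.integral += error * dt
        u = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(-self.out_limit, min(self.out_limit, u))


def simulate_joint(pid, target, inertia=0.05, damping=0.1, dt=0.001, duration=3.0):
    """Simulate inertia * acc = torque - damping * vel; return the final angle."""
    angle, velocity = 0.0, 0.0
    for _ in range(int(duration / dt)):
        torque = pid.update(target, angle, dt)
        acceleration = (torque - damping * velocity) / inertia
        velocity += acceleration * dt  # semi-implicit Euler integration
        angle += velocity * dt
    return angle


pid = PID(kp=20.0, ki=5.0, kd=2.0, out_limit=10.0)
print(simulate_joint(pid, target=1.0))  # close to the 1.0 rad target
```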

Q 21. How do you handle robot programming errors and debugging?

Debugging robot programs is part of daily work. I employ several techniques:

- Systematic Debugging: I use a systematic approach, breaking down the code into smaller modules and testing each module individually. This allows me to isolate the source of errors more efficiently.

- Using Debugging Tools: I use the robot controller’s integrated debugging tools, such as breakpoints, stepping through code, and inspecting variables. This provides insights into the program’s execution flow and the values of variables at different points.

- Simulation: I use robot simulation software extensively to test and debug programs in a virtual environment before deploying them to the real robot. This minimizes the risk of damaging equipment or causing safety hazards.

- Code Reviews: Peer code reviews are invaluable for catching errors and improving code quality. A fresh pair of eyes can often identify subtle errors that I might have missed.

- Logging and Monitoring: I employ thorough logging to track the program’s execution, including sensor readings, motor commands, and other relevant data. This helps in identifying the root cause of errors during runtime.

For example, if a robot fails to grasp an object, I would check the program’s logic for grasping, verify sensor readings, and analyze the robot’s trajectory. Logging can reveal subtle issues such as unexpected sensor noise or minor discrepancies in the robot’s position.

Q 22. Describe your experience with different types of robot actuators.

Robot actuators are the muscles of a robot, responsible for generating movement. My experience encompasses a wide range of actuator types, each with its own strengths and weaknesses. These include:

- Electric Motors: These are prevalent due to their precision, controllability, and ease of integration. I’ve worked extensively with servo motors, stepper motors, and brushless DC motors in applications ranging from precise assembly tasks to larger-scale material handling. For example, I used servo motors in a collaborative robot arm for delicate assembly operations, requiring precise positioning and torque control. Stepper motors were ideal for a pick-and-place application demanding repeatable movements.

- Hydraulic Actuators: These are powerful and suitable for heavy-duty applications needing high force and torque. I’ve been involved in projects using hydraulic actuators for large industrial robots in manufacturing settings, particularly for tasks like heavy lifting and material forming. A challenge here is often managing the complexity of the hydraulic system, including pressure regulation and leak prevention.

- Pneumatic Actuators: Simpler and often cheaper than hydraulic actuators, pneumatics suit applications that need rapid, forceful motion rather than precise positioning. I’ve used pneumatic cylinders for tasks such as automated clamping mechanisms. Maintaining air pressure and minimizing leaks remain crucial aspects of their operation.

- Piezoelectric Actuators: These offer extremely fine positioning capabilities, making them ideal for micro-manipulation and nanotechnology applications. Although I haven’t worked directly with them in large-scale industrial settings, I’ve studied their application and integration in precision assembly scenarios, where incredibly small movements are required.

Choosing the right actuator depends heavily on the specific application requirements, considering factors like payload capacity, speed, precision, power consumption, cost, and environmental conditions.

Q 23. What are the ethical considerations in robotics?

Ethical considerations in robotics are becoming increasingly important as robots are integrated more deeply into society. Key ethical concerns include:

- Safety: Ensuring robots operate safely around humans is critical. This involves designing robots with inherent safety features, implementing robust safety protocols, and considering potential failure modes. For instance, I’ve been involved in designing emergency stop mechanisms and implementing safety zones to prevent collisions.

- Bias and Discrimination: AI algorithms used to control robots can inherit biases present in the training data, leading to unfair or discriminatory outcomes. Mitigating this requires careful data selection, algorithm design, and ongoing monitoring for bias.

- Job Displacement: Automation through robotics can lead to job displacement in certain sectors. Addressing this requires proactive measures like retraining programs and considering the societal impact of automation.

- Privacy: Robots equipped with sensors may collect data that could compromise personal privacy. Clear data governance policies and robust security measures are crucial.

- Accountability: Determining responsibility in case of accidents or malfunctions involving robots is complex. Clear guidelines and legal frameworks are needed to define liability.

- Autonomous Weapons Systems: The development of lethal autonomous weapons raises significant ethical concerns about accountability, potential for unintended harm, and the dehumanization of warfare. This is a complex issue requiring international cooperation and careful consideration.

Addressing these ethical concerns requires a multidisciplinary approach involving engineers, ethicists, policymakers, and the public. It’s crucial to develop ethical guidelines and regulations that govern the design, development, and deployment of robots.

Q 24. Explain your experience with integrating robots into existing production lines.

Integrating robots into existing production lines requires a methodical approach. My experience involves careful planning, risk assessment, and collaborative teamwork. Here’s a breakdown of my process:

- Needs Assessment: First, a thorough assessment identifies bottlenecks, inefficiencies, and areas where automation can improve productivity and quality. This involves analyzing the current production line, understanding the existing equipment and processes, and defining specific tasks for the robot.

- Robot Selection: Choosing the appropriate robot is critical. This depends on factors like payload capacity, reach, speed, precision, and the environmental conditions of the production line. I’ve considered factors such as the available workspace, the type of parts being handled, and any safety requirements.

- Integration Planning: This stage focuses on the physical integration of the robot into the production line. This includes designing the robot’s workspace, selecting the necessary end-effectors (grippers, tools), developing safety protocols, and designing the communication interface between the robot and the existing machinery. Simulation software plays a key role here, allowing virtual testing and optimization before physical implementation.

- Programming and Testing: Once the robot is physically integrated, it must be programmed to perform the desired tasks. This usually involves writing code to control the robot’s movements, coordinate its actions with other machines on the line, and integrate with existing supervisory control systems. Thorough testing is essential to ensure that the robot performs accurately and reliably.

- Training and Support: Finally, training for operators and maintenance personnel is critical. This ensures that they can effectively operate and maintain the robotic system.

In one project, I integrated a robotic arm into a packaging line, significantly improving throughput and reducing manual labor. This involved careful planning of the robot’s workspace to avoid collisions with existing conveyors and other equipment.
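The communication interface between a robot and existing line equipment is often a simple I/O handshake. The Python sketch below models one as a state machine. The signal names, the states, and `next_state` are hypothetical. On a real line this logic usually lives in the PLC or the robot program, and it complements the hardware safety circuit rather than replacing it.

```python
from enum import Enum, auto


class CellState(Enum):
    WAIT_FOR_PART = auto()   # robot idle, waiting for the conveyor's part-present signal
    PICKING = auto()         # conveyor stopped, robot working in the shared zone
    WAIT_FOR_CLEAR = auto()  # robot has left; waiting for the part sensor to clear
    FAULT = auto()           # unexpected signal combination; needs an operator reset


def next_state(state: CellState, part_present: bool, conveyor_stopped: bool,
               pick_done: bool) -> CellState:
    """Return the next cell state from the current state and the (hypothetical) I/O signals."""
    if state is CellState.WAIT_FOR_PART:
        # Enter the shared zone only when a part is there AND the conveyor is stopped.
        if part_present and conveyor_stopped:
            return CellState.PICKING
        return state
    if state is CellState.PICKING:
        if not conveyor_stopped:
            return CellState.FAULT  # conveyor moved while the robot was in its zone
        return CellState.WAIT_FOR_CLEAR if pick_done else state
    if state is CellState.WAIT_FOR_CLEAR:
        # Start the next cycle only after the picked part is no longer detected.
        return CellState.WAIT_FOR_PART if not part_present else state
    return state  # FAULT is latched until a reset handled outside this function


state = CellState.WAIT_FOR_PART
for signals in [(False, False, False), (True, True, False), (True, True, True), (False, False, False)]:
    state = next_state(state, *signals)
    print(state.name)
```

Any unexpected signal combination latches into `FAULT` rather than guessing. This makes integration problems visible during commissioning instead of hiding them.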

Q 25. How do you optimize robot performance?

Optimizing robot performance involves a multi-faceted approach that focuses on both hardware and software aspects. Key strategies include:

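- Motion Planning Optimization: Efficient path planning can significantly reduce cycle times and improve energy efficiency. I often use search- and sampling-based planners to plan collision-free paths that minimize unnecessary joint motion. These include A* search, which returns an optimal path on a discretized graph when given an admissible heuristic, and Rapidly-exploring Random Trees (RRT), which find feasible rather than optimal paths. RRT* converges toward optimal ones.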

- Control System Tuning: Fine-tuning the robot’s control parameters, such as gains in Proportional-Integral-Derivative (PID) controllers, can improve accuracy, stability, and responsiveness. This often involves iterative adjustments and real-time monitoring of the robot’s performance. I use data analysis and visualization tools to identify areas for improvement.

- Hardware Upgrades: Sometimes, optimizing performance requires upgrading hardware components. This might involve using faster processors, more powerful actuators, or more precise sensors. For example, I upgraded a robot’s end-effector to increase its gripping force, thus enabling it to handle heavier parts.

- Software Optimization: Streamlining the robot’s control software can improve its efficiency. This can involve code optimization, reducing unnecessary computations, and improving data processing speeds.

- Predictive Maintenance: Using sensor data and machine learning algorithms to predict potential equipment failures allows for proactive maintenance, reducing unplanned downtime.
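The A* part of motion planning can be made concrete with a minimal grid-based sketch in Python. It plans for a point moving on a 2D occupancy grid with 4-connected, unit-cost moves, which is a simplifying assumption. An arm planner would instead search joint space with a collision checker, and sampling-based planners such as RRT are usually preferred when that space is high-dimensional. The function name `astar` and the grid encoding ('#' for obstacles) are illustrative choices.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def astar(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on an occupancy grid ('#' = obstacle), or None if unreachable."""
    rows, cols = len(grid), len(grid[0])

    def h(c: Cell) -> int:
        # Manhattan distance is admissible for unit-cost 4-connected moves,
        # so the first time the goal is popped, the path is optimal.
        return abs(c[0] - goal[0]) + abs(c[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from: Dict[Cell, Cell] = {}
    g_cost: Dict[Cell, int] = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:  # walk parent links back to the start
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry: a cheaper route to this cell was found later
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                ng = g + 1
                if ng < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = ng
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None


workcell = [
    "....",
    ".##.",
    "....",
]
path = astar(workcell, (0, 0), (2, 3))
print(path)  # a 5-move path around the two blocked cells
```

The heuristic is what makes A* faster than plain Dijkstra here. It steers expansion toward the goal while still guaranteeing the shortest path, because it never overestimates the remaining cost.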

Optimizing robot performance is an iterative process. Continuous monitoring, data analysis, and adjustments are crucial to achieving optimal results.
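The iterative gain tuning described under Control System Tuning can be rehearsed in simulation before touching hardware. Below is a minimal Python sketch: a PID loop with derivative on measurement, driving a toy single-joint model that carries a constant gravity torque. The plant parameters, the gains, and the helper `step_response` are made-up illustrative values, not tuning rules for any real robot.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class PID:
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_y: float = 0.0

    def update(self, ref: float, y: float, dt: float) -> float:
        """Return the control effort for setpoint `ref` and measurement `y`."""
        error = ref - y
        self.integral += error * dt
        # Differentiate the measurement, not the error, so a setpoint step causes no kick.
        d_meas = (y - self.prev_y) / dt
        self.prev_y = y
        return self.kp * error + self.ki * self.integral - self.kd * d_meas


def step_response(kp: float, ki: float, kd: float,
                  t_end: float = 5.0, dt: float = 0.001) -> Tuple[float, float]:
    """Unit step on a toy joint J*y'' + b*y' = u + tau_g; return (overshoot, final error).

    J, b and the constant gravity torque tau_g are made-up illustrative values.
    """
    J, b, tau_g = 1.0, 1.0, -5.0
    pid = PID(kp, ki, kd)
    y = v = peak = 0.0
    for _ in range(round(t_end / dt)):
        u = pid.update(1.0, y, dt)
        v += (u + tau_g - b * v) / J * dt  # semi-implicit Euler integration
        y += v * dt
        peak = max(peak, y)
    return max(0.0, peak - 1.0), 1.0 - y


for name, gains in [("P", (100.0, 0.0, 0.0)),
                    ("PD", (100.0, 0.0, 19.0)),
                    ("PID", (100.0, 200.0, 19.0))]:
    overshoot, final_error = step_response(*gains)
    print(f"{name:>3}: overshoot={overshoot:.3f} rad, final error={final_error:.4f} rad")
```

With these numbers the printout shows the usual progression:

- Proportional control alone overshoots heavily, because the only damping comes from the joint's own friction.
- Adding the derivative term removes the overshoot but leaves a steady offset of |tau_g| / Kp = 0.05 rad under the gravity load.
- Adding the integral term drives that offset to zero.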

Q 26. Describe your experience with ROS (Robot Operating System).

ROS (Robot Operating System) is a powerful framework that simplifies robot software development. My experience with ROS includes:

- Node Development: I’ve extensively developed ROS nodes using C++ and Python to perform various tasks, such as controlling robot actuators, processing sensor data, and implementing robot control algorithms. For example, I’ve written a node to control a robotic arm using inverse kinematics and another to fuse data from multiple sensors to create a more robust perception system.

- Topic and Service Communication: I have a strong understanding of ROS communication mechanisms. I use topics for continuous data streams such as sensor readings, and services for request/response calls that trigger specific actions. This allowed for modular and efficient software design, where individual nodes handle specific tasks and communicate through ROS mechanisms.

- ROS Packages and Libraries: I’m proficient in utilizing various ROS packages and libraries, leveraging existing tools and functionalities to reduce development time and improve code reliability. I’ve used navigation stacks, visualization tools (RViz), and control libraries to simplify complex tasks.

- ROS Simulation: I’ve used ROS simulation tools like Gazebo to simulate robot behavior in virtual environments. This enabled testing and debugging of robot software before deploying it to the physical robot, reducing risk and improving development efficiency.

- ROS Integration with Other Systems: My experience extends to integrating ROS with other software systems and hardware components, ensuring seamless communication and data exchange. For example, I’ve integrated ROS with industrial control systems and cloud-based platforms for data logging and remote monitoring.
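For an IK-based arm node like the one mentioned under Node Development, a common design choice is to keep the kinematics free of ROS. The math can then be unit-tested without a running system, and the node only handles subscriptions and publications. The sketch below shows such a core for the planar two-link arm from Q 1, in Python. The function names `fk` and `ik` and the link lengths are illustrative assumptions, and the ROS wrapper (for example an `rclpy` node) is deliberately omitted.

```python
import math
from typing import List, Tuple


def fk(theta1: float, theta2: float, l1: float, l2: float) -> Tuple[float, float]:
    """Forward kinematics of a planar two-link arm: joint angles -> end-effector (x, y)."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def ik(x: float, y: float, l1: float, l2: float) -> List[Tuple[float, float]]:
    """Closed-form inverse kinematics: return both elbow configurations as (theta1, theta2).

    Returns an empty list when (x, y) lies outside the reachable workspace.
    """
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)  # law of cosines
    if abs(c2) > 1.0:
        return []  # unreachable: no real solution for theta2
    solutions = []
    for s2 in (math.sqrt(1 - c2 * c2), -math.sqrt(1 - c2 * c2)):
        theta2 = math.atan2(s2, c2)
        theta1 = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
        solutions.append((theta1, theta2))
    return solutions


for sol in ik(1.2, 0.5, 1.0, 1.0):
    print(sol, fk(*sol, 1.0, 1.0))  # each solution maps back to (1.2, 0.5)
```

The function returns both solutions rather than one, so the calling node can choose the elbow configuration, for example the one closest to the current joint state. This avoids sudden flips between configurations.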

ROS has significantly accelerated my robot development process, enabling faster prototyping, easier debugging, and more efficient code reuse.

Q 27. What are the challenges of deploying robots in unstructured environments?

Deploying robots in unstructured environments presents significant challenges compared to controlled industrial settings. The key difficulties include:

- Perception: Unstructured environments are unpredictable and lack the fixed layouts, fixtures, and controlled conditions of a factory cell. Robots need robust perception systems to accurately sense and interpret their surroundings, overcoming challenges like variable lighting, clutter, and dynamic obstacles. This often requires integrating multiple sensors, such as cameras, LiDAR, and ultrasonic sensors, with sophisticated data fusion algorithms.

- Navigation: Navigating unstructured environments requires advanced algorithms that allow robots to plan paths, avoid obstacles, and adapt to changing conditions. Techniques like Simultaneous Localization and Mapping (SLAM) are crucial. The robustness of these algorithms is critical in handling unexpected events and uncertain environments.
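Sensor fusion is easiest to see in its simplest case: a scalar Kalman filter that fuses LiDAR and ultrasonic range readings to one obstacle. This Python sketch assumes a static obstacle, Gaussian noise, and made-up sensor variances, and the class name `ScalarKalman` is illustrative. Real perception stacks fuse multi-dimensional states, often with extended or unscented Kalman filters.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """1-D Kalman filter for a slowly changing quantity such as the distance to an obstacle."""
    x: float          # state estimate [m]
    p: float          # estimate variance [m^2]
    q: float = 1e-4   # process noise added per step [m^2] (assumed)

    def predict(self) -> None:
        # Constant-position model: the estimate stays the same, its uncertainty grows by q.
        self.p += self.q

    def update(self, z: float, r: float) -> None:
        """Fuse one measurement z with variance r; noisier sensors get smaller gains."""
        k = self.p / (self.p + r)
        self.x += k * (z - self.x)
        self.p *= (1.0 - k)


LIDAR_VAR = 0.02 ** 2       # hypothetical: 2 cm standard deviation
ULTRASONIC_VAR = 0.10 ** 2  # hypothetical: 10 cm standard deviation

kf = ScalarKalman(x=0.0, p=100.0)  # vague prior: the distance is essentially unknown
readings = [(2.03, 1.95), (1.99, 2.12), (2.01, 1.90)]  # (lidar, ultrasonic) pairs [m]
for lidar, sonar in readings:
    kf.predict()
    kf.update(lidar, LIDAR_VAR)
    kf.update(sonar, ULTRASONIC_VAR)
print(f"distance = {kf.x:.3f} m, std = {kf.p ** 0.5:.3f} m")
```

Each reading is weighted by its variance, so the LiDAR dominates while the ultrasonic sensor still contributes. If one sensor drops out, for instance LiDAR on a glass surface, the filter keeps working: its update is simply skipped.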

Addressing these challenges often involves utilizing advanced AI techniques, including machine learning and deep learning, to enable robots to learn from experience and adapt to new situations. For example, in a search-and-rescue scenario, the robot may need to navigate debris and uneven terrain, requiring robust navigation and manipulation capabilities.

Q 28. Explain your experience with AI/ML in robotics.

AI and machine learning (ML) are transforming robotics, enabling robots to perform more complex tasks and adapt to dynamic environments. My experience includes:

- Reinforcement Learning: I’ve used reinforcement learning to train robots to perform complex manipulation tasks, such as grasping objects of various shapes and sizes. This involved designing reward functions, training agents in simulation, and transferring the learned policies to real robots.

- Computer Vision: I’ve integrated computer vision algorithms to enable robots to perceive and understand their surroundings. This included object detection, image segmentation, and pose estimation. For instance, I used convolutional neural networks to train a robot to identify and locate specific objects in a cluttered workspace.

- Deep Learning for Control: I’ve utilized deep learning techniques to develop more robust and adaptive robot control systems. This allowed robots to learn complex behaviors from data, rather than relying on explicitly programmed rules.

- Data-driven Modeling: I’ve used machine learning to build data-driven models of robot dynamics and uncertainties. This improved the accuracy of robot control and allowed for more efficient motion planning.

- Robotics Simulation with AI: I extensively use simulation environments such as Gazebo to accelerate the training of AI models and to test their performance under varied scenarios before deployment on real robots.
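The reward design and training loop mentioned under Reinforcement Learning can be illustrated with a deliberately tiny toy problem. The Python sketch below runs tabular Q-learning for a gripper moving along a 1-D track of six cells toward a grasp pose. The environment, rewards, hyperparameters, and the helpers `step` and `train` are all hypothetical. Real manipulation policies use continuous states and physics simulators, but value-based deep RL methods such as DQN build on the same temporal-difference update.

```python
import random
from typing import List

N_CELLS = 6          # cells 0..5 along a toy 1-D track; cell 5 is the grasp pose
ACTIONS = (-1, +1)   # move left, move right


def step(state: int, action: int):
    """Toy environment: returns (next_state, reward, done)."""
    nxt = min(max(state + ACTIONS[action], 0), N_CELLS - 1)
    if nxt == N_CELLS - 1:
        return nxt, 1.0, True   # reached the target: positive reward, episode ends
    return nxt, -0.01, False    # small step cost encourages short paths


def train(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.1, seed: int = 0) -> List[List[float]]:
    """Tabular Q-learning with an epsilon-greedy behaviour policy."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_CELLS)]
    for _ in range(episodes):
        s = 0
        for _ in range(100):  # cap the episode length
            if rng.random() < epsilon:
                a = rng.randrange(2)  # explore
            else:
                a = max(range(2), key=lambda i: q[s][i])  # exploit (ties -> first action)
            s2, r, done = step(s, a)
            target = r if done else r + gamma * max(q[s2])
            q[s][a] += alpha * (target - q[s][a])  # temporal-difference update
            s = s2
            if done:
                break
    return q


q_table = train()
policy = ["right" if q_table[s][1] > q_table[s][0] else "left" for s in range(N_CELLS - 1)]
print(policy)  # the greedy policy should head right toward the target from every cell
```

The small per-step penalty is a reward-shaping choice. Without it, the discount factor alone would have to make the agent prefer short paths.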

The integration of AI and ML significantly expands the capabilities of robots, allowing them to handle more complex and unpredictable tasks, adapt to changing environments, and learn from experience. This is critical for enabling robots to operate successfully in real-world settings.

Key Topics to Learn for Robot Programming and Simulation Interview

- Robot Kinematics and Dynamics: Understanding robot joint configurations, forward and inverse kinematics, and dynamic modeling is crucial for optimizing robot movements and performance. Practical application includes trajectory planning and collision avoidance.

- Robot Programming Languages: Familiarity with languages like RAPID (ABB), KRL (KUKA), or others relevant to your target roles. This includes understanding syntax, control structures, and debugging techniques. Practical application involves writing programs for specific tasks like pick-and-place or welding.

- Simulation Software and Environments: Experience with simulation platforms such as Gazebo (often used together with ROS) or others. This includes setting up virtual environments, creating robot models, and simulating robot tasks. Practical application includes testing programs before deployment on real robots, optimizing robot designs, and training AI for robotic control.

- Path Planning and Motion Control: Algorithms for generating efficient and collision-free robot paths. This encompasses techniques like A*, RRT, and others. Practical application includes optimizing robot movements in complex environments and minimizing execution time.

- Sensors and Integration: Understanding the role of various sensors (e.g., cameras, force sensors) in robot control and the methods for integrating them into robotic systems. Practical application includes developing perception-based control strategies and implementing sensor fusion techniques.

- Troubleshooting and Debugging: The ability to diagnose and solve problems in robot programs and hardware. This includes identifying errors, using debugging tools, and applying systematic troubleshooting methodologies. Practical application involves resolving issues during robot operation and improving the reliability of robotic systems.

- Safety and Standards: Knowledge of safety protocols and industry standards related to robotics. This includes understanding risk assessment and implementing safety measures in robot programming and operation. Practical application ensures compliance with regulations and minimizes potential hazards.
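Trajectory planning, listed under Robot Kinematics and Dynamics, often starts with time-parameterizing a single move. A common textbook choice is the trapezoidal velocity profile: accelerate at a fixed limit, cruise at maximum speed, then decelerate. Below is a minimal single-axis sketch in Python, assuming rest-to-rest motion and symmetric limits; the function name `trapezoidal_profile` is illustrative. Industrial controllers typically add jerk limits (S-curve profiles) and synchronize all joints.

```python
import math
from typing import Tuple


def trapezoidal_profile(distance: float, v_max: float, a_max: float,
                        t: float) -> Tuple[float, float]:
    """Return (position, velocity) at time t for a rest-to-rest move of `distance` > 0.

    Uses a trapezoidal velocity profile, falling back to a triangular one when the
    move is too short to reach v_max.
    """
    if distance <= 0.0:
        return 0.0, 0.0
    t_acc = v_max / a_max                    # time needed to reach cruise speed
    if a_max * t_acc ** 2 > distance:        # accel + decel distance would exceed the move
        t_acc = math.sqrt(distance / a_max)  # triangular profile: peak stays below v_max
    v_peak = a_max * t_acc
    d_acc = 0.5 * a_max * t_acc ** 2         # distance covered while accelerating
    t_cruise = max(0.0, (distance - 2.0 * d_acc) / v_peak)
    t_total = 2.0 * t_acc + t_cruise
    t = min(max(t, 0.0), t_total)            # hold still before the start and after arrival
    if t < t_acc:                            # acceleration phase
        return 0.5 * a_max * t ** 2, a_max * t
    if t < t_acc + t_cruise:                 # constant-velocity phase
        return d_acc + v_peak * (t - t_acc), v_peak
    t_left = t_total - t                     # deceleration phase mirrors acceleration
    return distance - 0.5 * a_max * t_left ** 2, a_max * t_left


# Example: a 1 m move with v_max = 0.5 m/s and a_max = 1 m/s^2 takes 2.5 s.
for t in (0.0, 0.5, 1.25, 2.0, 2.5):
    pos, vel = trapezoidal_profile(1.0, 0.5, 1.0, t)
    print(f"t={t:4.2f} s  pos={pos:.3f} m  vel={vel:.3f} m/s")
```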

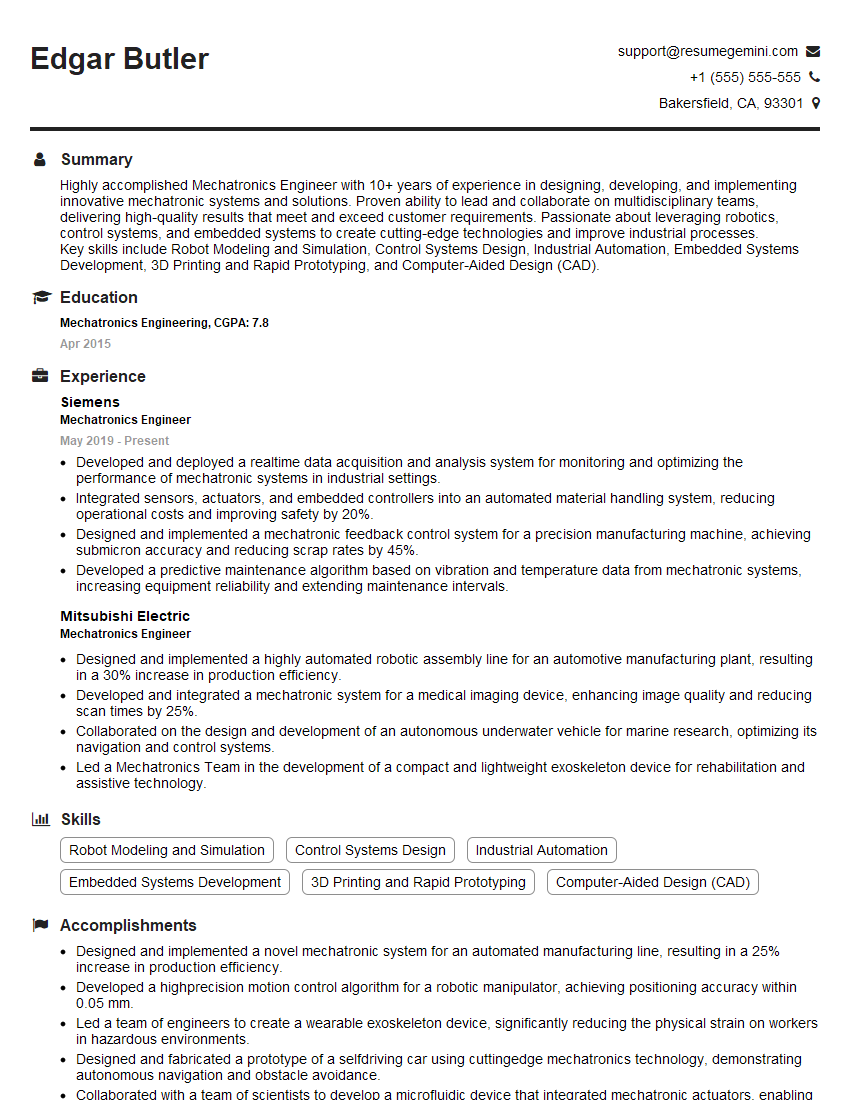

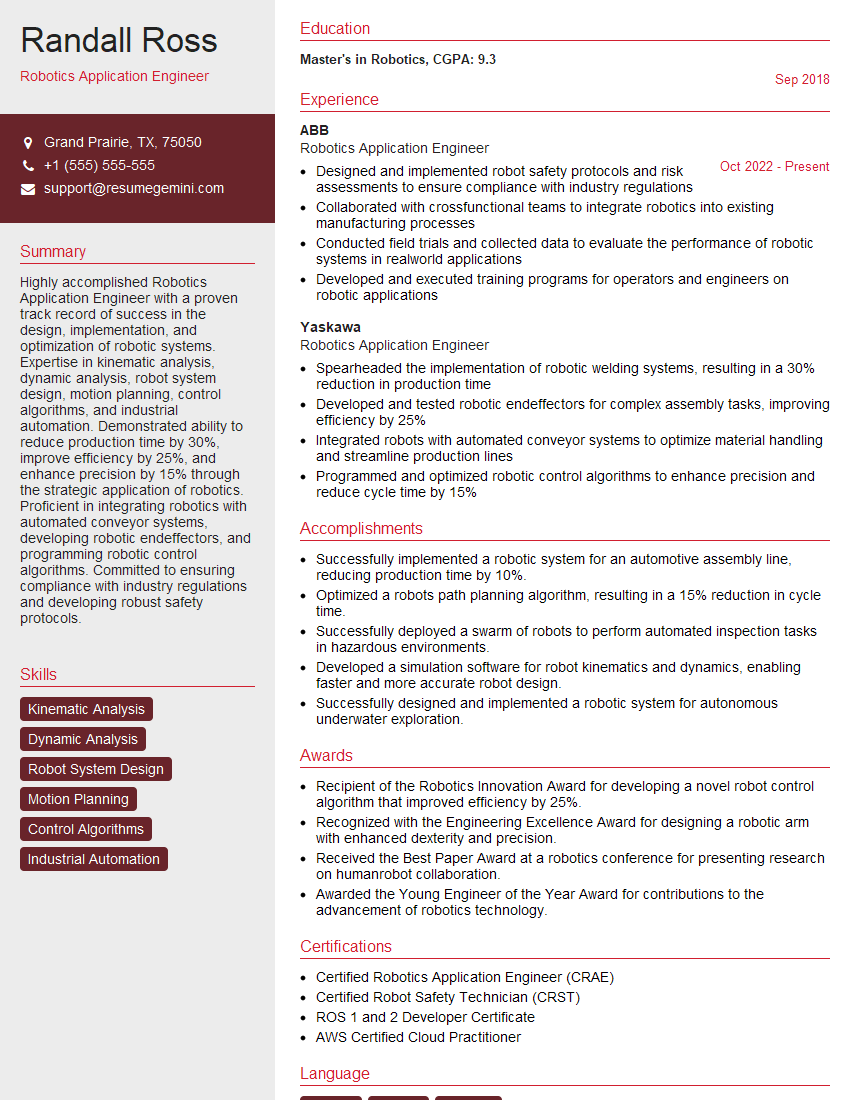

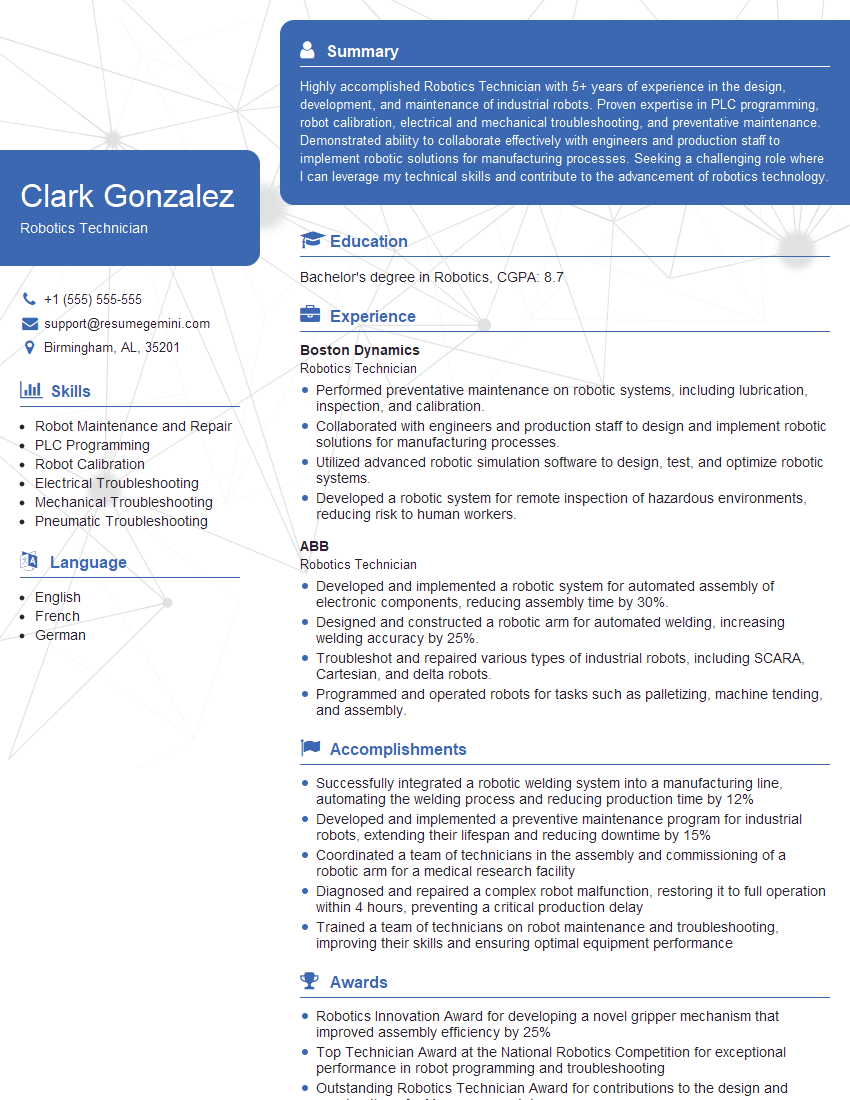

Next Steps

Mastering Robot Programming and Simulation opens doors to exciting and rewarding careers in automation, manufacturing, and research. To maximize your job prospects, create a compelling and ATS-friendly resume that highlights your skills and experience. ResumeGemini is a trusted resource that can help you build a professional resume that showcases your abilities effectively. We provide examples of resumes tailored specifically to Robot Programming and Simulation to help guide you. Invest time in crafting a strong resume – it’s your first impression on potential employers.
