Preparation is the key to success in any interview. In this post, we’ll explore crucial scikit-image interview questions and equip you with strategies to craft impactful answers. Whether you’re a beginner or a pro, these tips will elevate your preparation.

Questions Asked in scikit-image Interviews

Q 1. Explain the difference between scikit-image and OpenCV.

Scikit-image and OpenCV are both powerful image processing libraries, but they cater to different needs and have distinct philosophies. Scikit-image is part of the SciPy ecosystem, emphasizing a clean, consistent, and well-documented API, prioritizing scientific accuracy and reproducibility. It focuses on providing building blocks for image analysis algorithms, making it ideal for research and applications requiring precise control over the processing steps. OpenCV, on the other hand, is a comprehensive library with a vast range of functionalities, including real-time processing capabilities, optimized for speed and efficiency. It’s often preferred for applications requiring high performance, such as computer vision in robotics or real-time video processing. Think of it this way: Scikit-image is like a high-quality toolbox with precisely engineered tools, while OpenCV is a fully equipped workshop with tools for every conceivable task, some of which may be less refined but significantly faster.

Q 2. Describe the role of filters in image processing and list common filter types available in scikit-image.

Filters in image processing modify pixel values to enhance or extract information from an image. They’re like specialized lenses for your image data, each designed to highlight certain aspects. Scikit-image offers a wide array of filters categorized by their function. Common types include:

- Smoothing filters: These reduce noise and detail. Examples include Gaussian filters (skimage.filters.gaussian), median filters (skimage.filters.median), and bilateral filters (skimage.restoration.denoise_bilateral). Imagine blurring an image to remove graininess: that’s a smoothing filter at work.

- Sharpening filters: These enhance edges and details by increasing local contrast. Examples include unsharp masking (skimage.filters.unsharp_mask) and Laplacian filters (skimage.filters.laplace). Think of sharpening a blurry photograph to make details stand out.

- Edge detection filters: These highlight regions of rapid intensity change, revealing edges in an image. The Sobel (skimage.filters.sobel) and Canny (skimage.feature.canny) operators are popular choices. This is crucial for object recognition, where identifying boundaries is paramount.

Choosing the right filter depends on the specific application and desired outcome. For instance, a Gaussian filter might be used to pre-process an image before edge detection to reduce noise that could lead to false edge detection.
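The effect of smoothing before edge detection can be sketched on a synthetic image. The step-edge test image, the noise level, and sigma=2 below are assumptions chosen for illustration, not recommended settings:

```python
import numpy as np
from skimage import filters

# Synthetic test image (an assumption for illustration): a vertical step edge plus noise.
rng = np.random.default_rng(0)
clean = np.zeros((64, 64))
clean[:, 32:] = 1.0
noisy = clean + rng.normal(scale=0.2, size=clean.shape)

# Edge strength with and without Gaussian pre-smoothing.
edges_raw = filters.sobel(noisy)
edges_smooth = filters.sobel(filters.gaussian(noisy, sigma=2))

# Compare the spurious response in a flat region, far from the true edge.
flat_raw = edges_raw[:, :20].mean()
flat_smooth = edges_smooth[:, :20].mean()
print(f"mean response in flat region: raw={flat_raw:.3f}, smoothed={flat_smooth:.3f}")
```

The smoothed version should respond far less in the flat region while still peaking at the true edge, which is the reason for the pre-processing step.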

Q 3. How would you use scikit-image to perform image segmentation?

Image segmentation is the process of partitioning an image into meaningful regions. Scikit-image provides several techniques for this. One common approach is thresholding, where pixels are classified based on their intensity values. skimage.filters.threshold_otsu automatically finds an optimal threshold using Otsu’s method. For more complex scenarios, region-growing methods (though not directly implemented as a single function, readily achievable using combinations of morphological operations and connectivity analysis) and watershed algorithms (skimage.segmentation.watershed) can be employed. Watershed, for example, treats the image as a topographic map, where each basin represents a segment. Let’s say you want to segment cells in a microscopic image: thresholding might work if cells have distinct intensities, but a watershed algorithm might be better if cells are touching or overlapping.

import skimage.io as io

from skimage.filters import threshold_otsu

from skimage.segmentation import watershed

# ... load and process your image ...

thresh = threshold_otsu(image)

binary = image > thresh  # simple thresholding example

# ... further processing or watershed segmentation ...

Q 4. Explain the concept of feature extraction in image processing and how scikit-image can be used.

Feature extraction is the process of identifying and quantifying relevant information from an image. It transforms raw pixel data into numerical representations that algorithms can easily interpret. This is crucial for tasks like object recognition and classification. Scikit-image provides many tools for this. For example, you can calculate texture features using functions like skimage.feature.graycomatrix (for Gray-Level Co-occurrence Matrices) which captures textural properties. You can extract local binary patterns (LBPs) using skimage.feature.local_binary_pattern, which are robust descriptors of local image texture. For shape analysis, you can use region properties (skimage.measure.regionprops) after segmentation to extract features like area, perimeter, and eccentricity. Imagine you’re building a system to automatically identify different types of leaves: feature extraction would involve quantifying leaf shape, vein patterns, and texture to create a numerical description that helps distinguish between them.
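As a minimal sketch of shape features, the snippet below labels a synthetic binary image and reads a few region properties. The two shapes are made up for the example; on real data the binary image would come from a segmentation step:

```python
import numpy as np
from skimage import measure

# Synthetic binary image (assumed for illustration): one rectangle and one square.
binary = np.zeros((50, 50), dtype=bool)
binary[5:15, 5:35] = True    # 10 x 30 rectangle, elongated
binary[30:40, 30:40] = True  # 10 x 10 square

# Label connected components, then measure each one.
labels = measure.label(binary)
features = [
    (region.label, region.area, region.eccentricity)
    for region in measure.regionprops(labels)
]
for label, area, ecc in features:
    print(f"region {label}: area={area}, eccentricity={ecc:.2f}")
```

The elongated rectangle gets a higher eccentricity than the square, so even these two numbers already separate the shapes.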

Q 5. How do you handle different image file formats using scikit-image?

Scikit-image relies on the powerful skimage.io module for image input/output. This module provides a consistent interface for handling various image file formats, including PNG, JPG, TIFF, and more, abstracting away the underlying complexities. Loading an image is as simple as:

import skimage.io as io

image = io.imread('my_image.png')

Similarly, saving an image is straightforward:

io.imsave('processed_image.jpg', processed_image)

The library picks the file format from the extension, freeing you from most low-level file format details. Floating-point results usually need converting (for example with skimage.util.img_as_ubyte) before being saved to 8-bit formats such as JPEG.

Q 6. What are the advantages and disadvantages of using scikit-image compared to other image processing libraries?

Scikit-image offers several advantages: Its focus on scientific accuracy, clear documentation, and well-defined interfaces makes it ideal for research and applications where reproducibility and understanding the underlying algorithms are crucial. Its integration with the SciPy ecosystem is a big plus. However, it might not be the fastest option for real-time applications compared to optimized libraries like OpenCV. The range of functionalities is also narrower than OpenCV’s. So, Scikit-image excels in research, analysis-focused projects, and situations where clarity and accuracy are paramount, while OpenCV is better suited for high-performance, real-time applications demanding a wider array of features.

Q 7. Describe how you would use scikit-image to perform image registration.

Image registration involves aligning two or more images of the same scene taken from different viewpoints or at different times. Scikit-image doesn’t have a single function to perform all registration tasks, but it provides the building blocks necessary to implement various registration methods. The process usually involves these steps:

- Feature detection: Identify corresponding points or regions in the images using detectors such as ORB (skimage.feature.ORB) or SIFT (skimage.feature.SIFT, available in recent scikit-image releases). SURF is not part of scikit-image and would have to come from an external library such as OpenCV.

- Feature matching: Match the detected features between images.

- Transformation estimation: Estimate a geometric transformation (e.g., translation, rotation, scaling) that maps one image to another. Scikit-image’s skimage.transform module offers functions for various transformations.

- Image warping: Apply the estimated transformation to warp one image onto the other, achieving alignment.

For example, aligning medical images from different scans for comparison or aligning satellite images taken at different times requires careful image registration. The choice of specific registration techniques depends on the nature of the images and the type of transformation needed.
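The transformation-estimation and warping steps can be sketched as follows. The matched points here are fabricated from a known similarity transform, purely so the result can be checked; in practice they would come from a detector and matcher such as ORB with match_descriptors:

```python
import numpy as np
from skimage import transform

# Hypothetical matched keypoints as (x, y) pairs in the reference image.
src = np.array([[10, 10], [80, 15], [75, 90], [20, 70], [50, 50]], dtype=float)

# Ground-truth motion, used only to fabricate the matching points for this demo.
true_tform = transform.SimilarityTransform(
    scale=1.0, rotation=np.deg2rad(10), translation=(12, -7)
)
dst = true_tform(src)

# Transformation estimation from the point pairs.
estimated = transform.estimate_transform('similarity', src, dst)
print("rotation (deg):", np.rad2deg(estimated.rotation))
print("translation:", estimated.translation)

# Image warping: warp() expects a map from output (reference) coordinates to
# input (moving) coordinates, which is what `estimated` provides here.
moving = np.random.default_rng(0).random((100, 100))
aligned = transform.warp(moving, estimated)
```

With noisy real matches, a robust fit (for example skimage.measure.ransac) is usually placed around the estimation step to reject outliers.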

Q 8. Explain the process of image color space conversion in scikit-image.

Scikit-image provides efficient tools for converting images between different color spaces. This is crucial because different color spaces highlight different aspects of an image. For instance, RGB is great for display, while HSV emphasizes hue, saturation, and value, making it useful for color segmentation. The conversion process typically involves a mathematical transformation of the pixel values.

Scikit-image utilizes the color.rgb2hsv, color.rgb2gray, color.hsv2rgb functions, among others, for these conversions. The functions take the image array as input and return the transformed image.

Example: Converting an RGB image to grayscale:

from skimage import color, io

import matplotlib.pyplot as plt

image = io.imread('image.jpg')

grayscale_image = color.rgb2gray(image)

plt.imshow(grayscale_image, cmap='gray')

plt.show()

This code reads an image, converts it to grayscale using color.rgb2gray, and then displays the result using Matplotlib. In a professional setting, this might be used for preprocessing before object detection, where grayscale simplifies the computational load. Similarly, converting to HSV can help in isolating objects based on their color properties.
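To make the "mathematical transformation" concrete, here is a small sketch showing that color.rgb2gray is a weighted sum of the R, G, and B channels. The three-pixel image is invented for the example; the weights are the ones given in the scikit-image documentation:

```python
import numpy as np
from skimage import color

# Three pure-color pixels (red, green, blue) as a 1 x 3 RGB image.
rgb = np.array([[[1.0, 0.0, 0.0],
                 [0.0, 1.0, 0.0],
                 [0.0, 0.0, 1.0]]])

gray = color.rgb2gray(rgb)
print(gray)  # one luminance value per pixel

# The same result as an explicit weighted sum over the channel axis.
weights = np.array([0.2125, 0.7154, 0.0721])
manual = rgb @ weights
```

Green contributes most to the result and blue least, which mirrors how bright each primary appears to the eye.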

Q 9. How would you detect edges in an image using scikit-image?

Edge detection is a fundamental image processing technique used to identify boundaries between regions with significant intensity changes. Scikit-image offers several edge detectors, each with its strengths and weaknesses. The Sobel operator, Canny edge detector, and Laplacian of Gaussian are commonly used.

The Sobel operator calculates the image gradient using convolution kernels, highlighting edges based on intensity changes. The Canny edge detector is a more sophisticated algorithm that uses multiple steps including Gaussian blurring, gradient calculation, non-maximum suppression, and hysteresis thresholding to produce cleaner, more accurate edges. The Laplacian of Gaussian combines Gaussian smoothing with the Laplacian operator, effectively detecting edges while suppressing noise.

Example: Using the Canny edge detector:

from skimage import feature, io

import matplotlib.pyplot as plt

image = io.imread('image.jpg', as_gray=True)

edges = feature.canny(image, sigma=1)

plt.imshow(edges, cmap='gray')

plt.show()

This code reads a grayscale image and applies the Canny edge detector with a sigma value of 1 (controlling the amount of Gaussian smoothing). The resulting edges image will show a binary representation of the detected edges, crucial in applications like medical image analysis (e.g., identifying boundaries of organs) or autonomous driving (detecting lane markings).
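The role of sigma can be explored without any image file. The sketch below uses an assumed synthetic square with mild noise and compares two sigma values; the specific numbers are illustrative only:

```python
import numpy as np
from skimage import feature

# Synthetic image (assumed): a bright square on a dark background with mild noise.
rng = np.random.default_rng(1)
image = np.zeros((80, 80))
image[20:60, 20:60] = 1.0
image += rng.normal(scale=0.02, size=image.shape)

# Larger sigma means more smoothing before gradients are computed.
edges_fine = feature.canny(image, sigma=1)
edges_coarse = feature.canny(image, sigma=3)

print("edge pixels (sigma=1):", int(edges_fine.sum()))
print("edge pixels (sigma=3):", int(edges_coarse.sum()))
```

Both results are boolean masks. A larger sigma tends to suppress noise-induced edges at the cost of rounding off corners and losing fine detail.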

Q 10. Describe the various morphological operations available in scikit-image.

Morphological operations are non-linear image processing techniques that modify the shape or structure of objects within an image. They’re commonly used for noise reduction, object segmentation, and feature extraction. Scikit-image offers a comprehensive suite of these operations.

Key morphological operations include:

- Erosion: Shrinks the boundaries of objects.

- Dilation: Expands the boundaries of objects.

- Opening: Erosion followed by dilation; removes small objects and smooths contours.

- Closing: Dilation followed by erosion; fills small holes and connects broken parts of objects.

- Skeletonization: Reduces an object to its skeletal structure.

These operations utilize a structuring element (a small binary array) to define the shape and size of the changes applied.

Example: Performing an opening operation:

from skimage import morphology, io

import matplotlib.pyplot as plt

image = io.imread('image.jpg', as_gray=True)

structuring_element = morphology.disk(3)

opened_image = morphology.opening(image, structuring_element)

plt.imshow(opened_image, cmap='gray')

plt.show()

Here, a disk-shaped structuring element with radius 3 is used. Imagine this as a tiny brush; the opening operation effectively cleans up the image by removing small irregularities. In real-world applications, this is helpful in removing noise from microscopy images or cleaning up scanned documents.
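The complementary behaviour of opening and closing can be checked on a tiny synthetic image. The shapes and the radius-1 footprint below are assumptions picked so the effect is easy to verify:

```python
import numpy as np
from skimage import morphology

# Synthetic binary-like image (assumed): a solid square, one stray bright pixel,
# and a one-pixel hole inside the square.
image = np.zeros((40, 40), dtype=np.uint8)
image[10:30, 10:30] = 255
image[3, 3] = 255   # isolated speck
image[20, 20] = 0   # small hole

footprint = morphology.disk(1)
opened = morphology.opening(image, footprint)  # removes the speck
closed = morphology.closing(image, footprint)  # fills the hole

print("speck after opening:", opened[3, 3])
print("hole after closing:", closed[20, 20])
```

Opening never adds bright pixels and closing never removes them, so the hole survives opening and the speck survives closing.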

Q 11. How can you measure image similarity using scikit-image?

Measuring image similarity is essential for tasks like image retrieval, duplicate detection, and image registration. Scikit-image offers several metrics for comparing images. The choice of metric depends on the specific application and the type of similarity being assessed.

Common similarity metrics include:

- Structural Similarity Index (SSIM): Measures perceptual similarity by considering luminance, contrast, and structure.

- Mean Squared Error (MSE): Calculates the average squared difference between pixel intensities.

- Peak Signal-to-Noise Ratio (PSNR): Relates the maximum possible power of a signal to the power of corrupting noise.

Example: Calculating SSIM:

from skimage.metrics import structural_similarity as ssim

from skimage import io

image1 = io.imread('image1.jpg', as_gray=True)

image2 = io.imread('image2.jpg', as_gray=True)

ssim_score, diff = ssim(image1, image2, full=True, data_range=1.0)  # as_gray=True yields floats in [0, 1]

print(f'SSIM score: {ssim_score}')

This code computes the SSIM between two grayscale images of the same shape. A score closer to 1 indicates higher similarity. For floating-point images, recent scikit-image releases require data_range to be given explicitly. This could be used in a system that automatically identifies near-duplicate images in a large dataset. The MSE and PSNR offer alternative perspectives on image similarity, sometimes more suited to specific applications.
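MSE and PSNR are available in skimage.metrics as well. A minimal sketch, using an assumed synthetic image and a copy brightened by a constant so the numbers can be verified by hand:

```python
import numpy as np
from skimage.metrics import mean_squared_error, peak_signal_noise_ratio

# Synthetic float image in [0, 0.8] and a copy brightened by a constant 0.1.
rng = np.random.default_rng(0)
reference = rng.random((32, 32)) * 0.8
shifted = reference + 0.1

mse = mean_squared_error(reference, shifted)
psnr = peak_signal_noise_ratio(reference, shifted, data_range=1.0)
print(f"MSE:  {mse:.4f}")
print(f"PSNR: {psnr:.1f} dB")
```

Every pixel differs by 0.1, so the MSE is 0.1² = 0.01, and with data_range=1.0 the PSNR is 10·log10(1 / 0.01) = 20 dB.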

Q 12. Explain how to use scikit-image for image thresholding.

Image thresholding is a crucial technique for segmenting images by converting a grayscale image to a binary image. It involves assigning pixels to either foreground (object) or background based on a threshold value. Scikit-image provides various thresholding methods.

Common methods include:

- Global thresholding (threshold_otsu): Automatically determines the optimal threshold using Otsu’s method.

- Adaptive thresholding (threshold_local): Computes thresholds for different image regions, adapting to local variations in intensity.

- Manual thresholding: Setting a specific threshold value.

Example: Using Otsu’s method for global thresholding:

from skimage import filters, io

import matplotlib.pyplot as plt

image = io.imread('image.jpg', as_gray=True)

threshold = filters.threshold_otsu(image)

binary_image = image > threshold

plt.imshow(binary_image, cmap='gray')

plt.show()

This code calculates the optimal threshold using threshold_otsu and then creates a binary image based on this threshold. This simple technique is remarkably effective for separating objects from a background, used in applications like document scanning (separating text from the page) or medical imaging (segmenting tissues).
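Adaptive thresholding with threshold_local can be sketched on an unevenly lit synthetic image. The brightness ramp, spot positions, block_size=35, and offset=-0.05 are all assumptions for illustration:

```python
import numpy as np
from skimage import filters

# Synthetic unevenly lit image (assumed): a left-to-right brightness ramp
# with two small bright spots, one on the dark side and one on the bright side.
ramp = np.tile(np.linspace(0.0, 0.6, 100), (100, 1))
image = ramp.copy()
image[49:52, 19:22] += 0.3   # spot on the dark side
image[49:52, 79:82] += 0.3   # spot on the bright side

# Global Otsu threshold: a single value for the whole image.
global_mask = image > filters.threshold_otsu(image)

# Local threshold: one value per pixel from a 35 x 35 neighbourhood.
# A negative offset raises the threshold slightly above the local mean.
local_thresh = filters.threshold_local(image, block_size=35, offset=-0.05)
local_mask = image > local_thresh

print("foreground pixels: global =", int(global_mask.sum()),
      " local =", int(local_mask.sum()))
```

The global mask labels most of the bright half of the ramp as foreground, while the local mask is limited to pixels that stand out from their own surroundings. block_size must be odd.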

Q 13. How would you perform image enhancement using scikit-image?

Image enhancement aims to improve the visual quality or information content of an image, making features more visible or reducing noise. Scikit-image offers several techniques for image enhancement.

Common enhancement techniques include:

- Histogram equalization (exposure.equalize_hist): Redistributes intensities so the histogram becomes approximately uniform across the full range, improving contrast.

- Contrast stretching (exposure.rescale_intensity): Maps the intensity range to a specific interval, enhancing contrast.

- Noise reduction (using filters like filters.gaussian): Smooths the image to reduce noise.

- Sharpening (using filters like filters.unsharp_mask): Enhances edges and details.

Example: Histogram equalization:

from skimage import exposure, io

import matplotlib.pyplot as plt

image = io.imread('image.jpg', as_gray=True)

equalized_image = exposure.equalize_hist(image)

plt.imshow(equalized_image, cmap='gray')

plt.show()

This code applies histogram equalization to improve contrast. This is valuable in scenarios where an image is too dark or too light, making details difficult to see. It can be crucial in areas like astronomy (enhancing faint celestial objects) or medical imaging (improving visibility of subtle details).
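Contrast stretching with exposure.rescale_intensity can be sketched in the same way. The low-contrast synthetic image and the 2nd/98th percentile limits are assumptions; percentile limits are a common choice because they ignore a few extreme pixels:

```python
import numpy as np
from skimage import exposure

# Synthetic low-contrast image (assumed): values squeezed into [0.4, 0.6].
rng = np.random.default_rng(0)
image = 0.4 + 0.2 * rng.random((64, 64))

# Contrast stretching between the 2nd and 98th percentiles.
p2, p98 = np.percentile(image, (2, 98))
stretched = exposure.rescale_intensity(image, in_range=(p2, p98), out_range=(0.0, 1.0))

# Histogram equalization for comparison; its output lies in [0, 1].
equalized = exposure.equalize_hist(image)

print("input range:    ", image.min(), image.max())
print("stretched range:", stretched.min(), stretched.max())
```

Values outside the chosen input range are clipped, so the stretched image spans the whole output interval.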

Q 14. Explain different interpolation methods in scikit-image and their applications.

Interpolation methods are used to estimate pixel values at locations where no data exists. This becomes necessary when resizing, rotating, or warping images. Scikit-image provides several interpolation methods, each offering a different balance between speed, accuracy, and smoothness.

Common interpolation methods include:

- Nearest-neighbor: The simplest method; assigns the value of the nearest pixel. Fast but can produce blocky results.

- Bilinear: Takes a distance-weighted average of the four nearest pixels. Smoother than nearest-neighbor but can blur details.

- Bicubic: Uses a weighted average of 16 neighboring pixels. Produces high-quality results but is computationally more expensive.

Example: Resizing an image using bicubic interpolation:

from skimage import transform, io

import matplotlib.pyplot as plt

image = io.imread('image.jpg')

resized_image = transform.resize(image, (256, 256), order=3) # order=3 specifies bicubic

plt.imshow(resized_image)

plt.show()

This code resizes the image to 256×256 pixels using bicubic interpolation (order=3). The choice of method affects the final image quality, with bicubic often preferred for its balance between speed and sharpness. The selection depends on the specific application; nearest-neighbor might suffice for speed-critical tasks, while bicubic is preferred for high-quality output where computational cost isn’t a major bottleneck. Applications include image scaling for web displays and creating thumbnails.
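The difference between the interpolation orders is easiest to see on a very small array. This sketch upsamples an assumed 2×2 checkerboard with order 0, 1, and 3:

```python
import numpy as np
from skimage import transform

# Tiny 2 x 2 checkerboard, upsampled to 8 x 8 with three interpolation orders.
image = np.array([[0.0, 1.0],
                  [1.0, 0.0]])

nearest = transform.resize(image, (8, 8), order=0)   # nearest-neighbor
bilinear = transform.resize(image, (8, 8), order=1)  # bilinear
bicubic = transform.resize(image, (8, 8), order=3)   # bicubic

for name, result in [("nearest", nearest), ("bilinear", bilinear), ("bicubic", bicubic)]:
    print(f"{name:9s} distinct values: {np.unique(result.round(6)).size}")
```

Nearest-neighbor only copies existing values, so the output still contains just 0 and 1, while the higher orders introduce intermediate values along the transitions.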

Q 15. How do you handle noisy images using scikit-image?

Noisy images contain unwanted variations in pixel intensity, obscuring the true image information. Scikit-image offers several methods to handle this. Think of it like trying to hear a conversation in a noisy room – you need to filter out the distractions.

One common approach is using filters. A Gaussian filter, for instance, smooths the image by averaging pixel intensities, effectively reducing high-frequency noise. A median filter replaces each pixel with the median value of its neighbors, which is particularly effective against salt-and-pepper noise (randomly scattered bright and dark pixels).

Here’s how you’d use them:

import numpy as np

import matplotlib.pyplot as plt

from skimage import io, filters

image = io.imread('noisy_image.jpg', as_gray=True)  # 2-D image, so the 2-D footprint below applies

gaussian_filtered = filters.gaussian(image, sigma=1)

median_filtered = filters.median(image, footprint=np.ones((3, 3)))  # the keyword was 'selem' in older releases

plt.figure(figsize=(10, 5))

plt.subplot(131); plt.imshow(image, cmap='gray'); plt.title('Original')

plt.subplot(132); plt.imshow(gaussian_filtered, cmap='gray'); plt.title('Gaussian Filtered')

plt.subplot(133); plt.imshow(median_filtered, cmap='gray'); plt.title('Median Filtered')

plt.show()

The choice of filter and its parameters (like sigma for the Gaussian filter) depends on the type and severity of the noise. Experimentation is often key to finding the optimal settings for a given image.
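One way to compare filters objectively is to corrupt a known image and measure the remaining error. The flat test image, the 6% noise rate, and the small mse helper below are assumptions made for this sketch:

```python
import numpy as np
from skimage import filters

# Synthetic example (assumed): a flat gray image corrupted by salt-and-pepper noise.
rng = np.random.default_rng(0)
clean = np.full((64, 64), 0.5)
noisy = clean.copy()
corrupt = rng.random(clean.shape)
noisy[corrupt < 0.03] = 0.0   # pepper
noisy[corrupt > 0.97] = 1.0   # salt

gaussian_filtered = filters.gaussian(noisy, sigma=1)
median_filtered = filters.median(noisy, np.ones((3, 3)))  # 3 x 3 neighbourhood

def mse(a, b):
    """Mean squared error between two arrays (helper for this example only)."""
    return float(np.mean((a - b) ** 2))

print("MSE noisy:   ", mse(noisy, clean))
print("MSE gaussian:", mse(gaussian_filtered, clean))
print("MSE median:  ", mse(median_filtered, clean))
```

On this kind of impulse noise the median filter should leave the smallest error, because isolated outliers rarely reach the middle of the sorted neighbourhood.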

Q 16. Describe the process of image restoration in scikit-image.

Image restoration aims to recover a degraded image to its original, or a close approximation of its original, state. Think of it like restoring a damaged painting – you need to carefully fill in the missing parts and correct any distortions. Scikit-image provides tools for various restoration tasks.

A common technique is deconvolution, which reverses the blurring effect caused by factors like camera motion or lens imperfections. This involves estimating the point spread function (PSF), which describes the blurring process, and then using it to deblur the image. Scikit-image’s restoration.unsupervised_wiener function is a powerful tool for this purpose. Another approach is inpainting, filling in missing parts of an image based on surrounding information. This can be useful for removing scratches or other defects.

Example (simplified):

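import numpy as np

import matplotlib.pyplot as plt

from skimage import restoration, io, img_as_float

image = img_as_float(io.imread('blurred_image.jpg', as_gray=True))  # 2-D image to match the 2-D PSF

psf = np.ones((5, 5)) / 25  # assumed blur kernel: a 5x5 box

deconvolved = restoration.richardson_lucy(image, psf, num_iter=10)  # the keyword was 'iterations' in older releases

plt.imshow(deconvolved, cmap='gray')

plt.show()

This example uses Richardson-Lucy deconvolution with an assumed 5×5 box PSF rather than unsupervised_wiener. Remember that successful image restoration depends heavily on the nature of the degradation. Careful consideration of the blurring mechanism and the use of appropriate algorithms are essential.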
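Inpainting, mentioned above, can be sketched with restoration.inpaint_biharmonic. The smooth ramp image and the rectangular "scratch" are assumptions chosen so the result can be compared against a known original:

```python
import numpy as np
from skimage import restoration

# Synthetic smooth image (assumed): a horizontal intensity ramp.
image = np.tile(np.linspace(0.0, 1.0, 64), (64, 1))

# Mark a small "scratch" as missing and blank it out.
mask = np.zeros(image.shape, dtype=bool)
mask[30:34, 20:44] = True
damaged = image.copy()
damaged[mask] = 0.0

# Fill the masked pixels from their surroundings.
restored = restoration.inpaint_biharmonic(damaged, mask)

error = np.abs(restored - image)[mask].max()
print(f"largest error inside the scratch: {error:.4f}")
```

Smooth content like this ramp is the easy case. Textured regions or large gaps are much harder to fill convincingly.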

Q 17. How would you implement image compression using scikit-image?

Image compression reduces the size of an image file without significant loss of visual quality. This is crucial for efficient storage and transmission. Scikit-image itself doesn’t directly handle file compression in the same way as libraries like Pillow or OpenCV. However, it offers tools to prepare images for compression.

The core idea is to reduce the amount of data needed to represent the image. This can be done through techniques like quantization (reducing the number of color levels) and transform coding (representing the image using a smaller set of coefficients). After preprocessing the image with scikit-image (e.g., noise reduction, sharpening), you would typically use external libraries to handle the actual compression (e.g., JPEG, PNG, WebP).

Example (preprocessing with scikit-image):

from skimage import io, transform, img_as_ubyte

import imageio

image = io.imread('large_image.jpg')

downsampled = img_as_ubyte(transform.resize(image, (image.shape[0] // 2, image.shape[1] // 2), anti_aliasing=True))  # Downsample for size reduction

imageio.imwrite('compressed_image.jpg', downsampled, quality=75)  # External library for compression; quality is passed to the JPEG writer

Q 18. Explain the concept of watershed segmentation and how it’s implemented in scikit-image.

Watershed segmentation is a powerful technique to segment images based on the concept of watersheds in a topographic landscape. Imagine pouring water onto a surface with peaks and valleys; the water will flow downhill and collect in catchment basins. Similarly, in watershed segmentation, we treat image intensity values as elevation, with high-intensity regions as peaks and low-intensity regions as valleys.

The process involves identifying local minima (valleys) in the image and then assigning pixels to the catchment basin of the nearest minimum. This effectively separates regions based on their intensity differences. Scikit-image provides the watershed function for this purpose. It often requires a marker image to specify initial regions or seeds for the algorithm.

Example:

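import numpy as np

import matplotlib.pyplot as plt

from scipy import ndimage

from skimage import color

from skimage.feature import peak_local_max

from skimage.filters import threshold_otsu

from skimage.io import imread

from skimage.segmentation import watershed

gray = imread('image.jpg', as_gray=True)

image = gray > threshold_otsu(gray)  # binary mask of the objects (assumes bright objects)

distance = ndimage.distance_transform_edt(image)

coords = peak_local_max(distance, footprint=np.ones((3, 3)), labels=image)

local_maxi = np.zeros(distance.shape, dtype=bool)

local_maxi[tuple(coords.T)] = True  # peak_local_max returns coordinates in current releases

markers = ndimage.label(local_maxi)[0]

labels = watershed(-distance, markers, mask=image)

plt.imshow(color.label2rgb(labels, gray, kind='avg'))

plt.show()

The peak_local_max function finds potential watershed markers, which are then used in the watershed function to segment the image. The results are often refined by preprocessing (e.g., noise reduction or gradient calculation) to improve segmentation accuracy.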
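The same pipeline can be tried without an image file. The sketch below separates two overlapping discs; the disc geometry and min_distance=10 are assumptions chosen so that exactly one marker falls near each centre:

```python
import numpy as np
from scipy import ndimage as ndi
from skimage.feature import peak_local_max
from skimage.segmentation import watershed

# Synthetic binary image (assumed): two overlapping discs of radius 20.
rows, cols = np.indices((80, 110))
disc1 = (rows - 40) ** 2 + (cols - 40) ** 2 < 20 ** 2
disc2 = (rows - 40) ** 2 + (cols - 68) ** 2 < 20 ** 2
image = disc1 | disc2

# Distance to the background: largest near each disc centre.
distance = ndi.distance_transform_edt(image)

# One marker per disc: local maxima of the distance map, at least 10 px apart.
coords = peak_local_max(distance, min_distance=10, labels=image)
peaks = np.zeros(distance.shape, dtype=bool)
peaks[tuple(coords.T)] = True
markers, num_markers = ndi.label(peaks)

# Flood the inverted distance map from the markers, restricted to the objects.
labels = watershed(-distance, markers, mask=image)
print("segments found:", labels.max())
```

The two discs form a single connected component, so plain labelling would merge them. The watershed splits them along the narrow waist where the distance map has its saddle.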

Q 19. Describe how to use scikit-image for image feature extraction.

Image feature extraction involves identifying and quantifying relevant characteristics of an image, transforming raw pixel data into meaningful representations for further processing. Scikit-image offers a rich set of tools for this.

Features can be low-level (e.g., color histograms, texture features) or high-level (e.g., object detection). For example, you can calculate textures using functions like skimage.feature.graycomatrix or skimage.feature.local_binary_pattern. Edge detection using functions like skimage.feature.canny can highlight boundaries between regions. Histograms provide a summary of the intensity distribution. These features can be used for tasks like image classification, object recognition, or image retrieval.

Example (texture features):

from skimage import io, img_as_ubyte

from skimage.feature import graycomatrix, graycoprops  # spelled greycomatrix / greycoprops in older releases

image = img_as_ubyte(io.imread('image.jpg', as_gray=True))  # graycomatrix needs an integer image

gcomatrix = graycomatrix(image, distances=[5], angles=[0], levels=256, symmetric=True, normed=True)

contrast = graycoprops(gcomatrix, 'contrast')[0, 0]

print(f'Contrast: {contrast}')

The choice of features depends on the specific application. Careful feature selection is crucial for effective performance in downstream tasks.
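Local binary patterns, mentioned above, are usually summarised as a histogram that serves as the feature vector. A minimal sketch on an assumed striped texture, with P=8 and R=1 as illustrative parameters:

```python
import numpy as np
from skimage.feature import local_binary_pattern

# Synthetic 8-bit texture (assumed): vertical stripes, 4 pixels wide.
image = np.zeros((64, 64), dtype=np.uint8)
image[:, (np.arange(64) // 4) % 2 == 0] = 255

# Uniform LBP with P sampling points on a circle of radius R.
P, R = 8, 1
lbp = local_binary_pattern(image, P, R, method='uniform')

# 'uniform' codes take values 0 .. P + 1, so the histogram has P + 2 bins.
hist, _ = np.histogram(lbp, bins=np.arange(P + 3), density=True)
print(np.round(hist, 3))
```

The normalised histogram has a fixed length regardless of image size, which makes it convenient as input to a classifier.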

Q 20. How do you perform image transformations (e.g., rotation, scaling) in scikit-image?

Image transformations change the spatial arrangement or orientation of pixels. Scikit-image’s transform module provides functions for various transformations, including rotation, scaling, and translation.

Rotation changes the image’s orientation, scaling changes its size, and translation shifts its position. These transformations can be useful for data augmentation (creating variations of images for training machine learning models), image alignment, or correcting geometric distortions.

Example (rotation and scaling):

from skimage import io, transform

import matplotlib.pyplot as plt

image = io.imread('image.jpg')

rotated = transform.rotate(image, angle=45)

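scaled = transform.rescale(image, scale=0.5, channel_axis=-1)  # assumes an RGB image; keeps the color axis unscaled (multichannel=True before 0.19)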

plt.figure(figsize=(10, 5))

plt.subplot(131); plt.imshow(image); plt.title('Original')

plt.subplot(132); plt.imshow(rotated); plt.title('Rotated')

plt.subplot(133); plt.imshow(scaled); plt.title('Scaled')

plt.show()

The transform module offers various options for interpolation (how pixel values are determined during transformation), ensuring smooth results or preserving sharp edges as needed.
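How these functions affect the output size is worth knowing before chaining them. This sketch uses an assumed synthetic grayscale image and only inspects the resulting shapes:

```python
import numpy as np
from skimage import transform

# Synthetic grayscale image (assumed): a bright rectangle on a dark background.
image = np.zeros((64, 64))
image[16:48, 24:40] = 1.0

rotated = transform.rotate(image, angle=45)                     # same canvas, corners cropped
rotated_full = transform.rotate(image, angle=45, resize=True)   # canvas grows to fit
scaled = transform.rescale(image, 0.5, anti_aliasing=True)      # half size in each dimension

print("original:     ", image.shape)
print("rotated:      ", rotated.shape)
print("rotated_full: ", rotated_full.shape)
print("scaled:       ", scaled.shape)
```

By default rotate keeps the original canvas and crops whatever rotates out of view. Passing resize=True trades a larger output for keeping the whole image.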

Q 21. Explain the difference between different types of image filters (e.g., Gaussian, median).

Image filters modify pixel intensities based on their neighborhood, enhancing or suppressing certain features. Different filters have different characteristics and are suited for different tasks. Think of them as different brushes an artist might use for different effects.

A Gaussian filter is a smoothing filter that averages pixel intensities using a Gaussian kernel. It’s effective at reducing noise but can also blur sharp edges. The sigma parameter controls the amount of smoothing. The larger the sigma, the more smoothing.

A median filter replaces each pixel with the median value of its neighbors. It’s particularly robust against salt-and-pepper noise (randomly scattered bright and dark pixels) because the median is less sensitive to outliers than the mean (used in Gaussian filtering).

Other filters include: Laplacian filter (edge detection), Sobel filter (edge detection and gradient calculation), etc. The choice depends on the specific goal – smoothing, sharpening, or edge detection.

Example (Gaussian vs. Median):

import numpy as np

import matplotlib.pyplot as plt

from skimage import io, filters

image = io.imread('noisy_image.jpg', as_gray=True)  # 2-D image, so the 2-D footprint below applies

gaussian_filtered = filters.gaussian(image, sigma=1)

median_filtered = filters.median(image, footprint=np.ones((3, 3)))  # the keyword was 'selem' in older releases

plt.figure(figsize=(10, 5))

plt.subplot(131); plt.imshow(image, cmap='gray'); plt.title('Original')

plt.subplot(132); plt.imshow(gaussian_filtered, cmap='gray'); plt.title('Gaussian Filtered')

plt.subplot(133); plt.imshow(median_filtered, cmap='gray'); plt.title('Median Filtered')

plt.show()

Q 22. How to handle different color spaces within scikit-image?

Scikit-image offers robust support for various color spaces through its color module. color.rgb2gray() is the most familiar function, but the module goes well beyond grayscale conversion and handles transformations between many color spaces. Think of it like a universal translator for image colors.

For instance, you might start with an image in RGB (Red, Green, Blue) format, the most common for displaying images on screens. But for certain image processing tasks, other color spaces like HSV (Hue, Saturation, Value), LAB (CIE L*a*b*), or even YUV might be more suitable. HSV is great for isolating colors based on their hue, LAB offers perceptually uniform color differences, and YUV is widely used in video compression.

Here’s how you’d convert between RGB and HSV:

from skimage import color, io

import matplotlib.pyplot as plt

image = io.imread('image.jpg') # Load your image

image_hsv = color.rgb2hsv(image)

image_rgb = color.hsv2rgb(image_hsv)

plt.imshow(image_hsv)  # the H, S, V channels are drawn as if they were R, G, B, so colors look unusual

plt.show()

plt.imshow(image_rgb)

plt.show()

Scikit-image handles the mathematical transformations seamlessly. The key is understanding which color space best fits the specific algorithm or analysis you’re performing. For instance, thresholding based on intensity is easier in grayscale, while object segmentation based on color might be easier in HSV.
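Color-based segmentation in HSV can be sketched with a simple hue mask. The two-color test image and the hue and saturation limits below are assumptions for illustration:

```python
import numpy as np
from skimage import color

# Synthetic RGB image (assumed): left half red, right half blue, with a darker
# lower half to show that hue is unaffected by brightness.
image = np.zeros((20, 40, 3))
image[:, :20] = (1.0, 0.0, 0.0)   # red
image[:, 20:] = (0.0, 0.0, 1.0)   # blue
image[10:] *= 0.5                 # darker lower half

hsv = color.rgb2hsv(image)
hue, saturation = hsv[..., 0], hsv[..., 1]

# Red sits at both ends of the hue circle (near 0 and near 1).
red_mask = ((hue < 0.05) | (hue > 0.95)) & (saturation > 0.5)
print("red pixels:", int(red_mask.sum()))
```

The mask picks up both the bright and the dark red areas, which would need two separate ranges per channel if the test were written directly in RGB.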

Q 23. Explain the process of image binarization using scikit-image.

Image binarization is a fundamental image processing technique that simplifies an image into two colors: black and white (or 0 and 1). It’s like creating a digital sketch from a photo. Scikit-image provides several ways to achieve this, primarily using thresholding methods.

The most common approach is global thresholding. This involves choosing a single threshold value. Pixels with intensity values above the threshold are set to white (1), and those below are set to black (0). Scikit-image’s threshold_otsu() function automatically determines the optimal threshold using Otsu’s method, minimizing intra-class variance. This is often the easiest and most effective method for images with distinct foreground and background.

from skimage import io, filters

import matplotlib.pyplot as plt

image = io.imread('image.jpg', as_gray=True) #Load as grayscale

thresh = filters.threshold_otsu(image)

binary_image = image > thresh

plt.imshow(binary_image, cmap='gray')

plt.show()

For more complex images, adaptive thresholding methods are preferred. These adjust the threshold locally based on neighborhood intensity variations, which is useful for unevenly lit images. Scikit-image offers several local methods, such as threshold_local, threshold_niblack, and threshold_sauvola. (threshold_mean, by contrast, returns a single global threshold.)

Choosing the right method depends on your image characteristics. If the image has uniform lighting, global thresholding is suitable. If there’s significant variation in lighting, adaptive thresholding is a better choice.

Q 24. What are the limitations of scikit-image?

While scikit-image is a powerful library, it has limitations. Primarily, it focuses on core image processing algorithms and doesn’t offer advanced deep learning capabilities like object detection or image segmentation using convolutional neural networks (CNNs). Think of it as a strong foundation, but you might need other libraries for more specialized, cutting-edge tasks.

Another limitation is its potential performance bottleneck with extremely large images. For massive datasets, specialized libraries optimized for distributed computing or GPU acceleration might be necessary for efficiency. Scikit-image shines with medium-sized images and common image processing tasks, but for huge datasets or computationally intense applications, optimization or alternative solutions may be needed.

Finally, while it covers a broad spectrum of image processing techniques, some highly specialized or niche algorithms might not be included. In such cases, you might need to look for alternative libraries or implement the algorithms yourself.

Q 25. How would you debug errors related to image loading or processing in scikit-image?

Debugging image loading or processing errors in scikit-image involves a systematic approach. First, verify that the image file path is correct and the file exists. Printing the path, or checking it with os.path.exists(), catches this early. If the path is correct but you still receive an error, examine the image format. Scikit-image supports many formats, but incompatibilities can occur.

Next, check the image itself. Are there unusual artifacts? Is it corrupted? Tools outside scikit-image, like image viewers, can help diagnose image problems. If the issue arises during processing, isolate the problematic function. Step-through debugging, using print() statements or a debugger, can pinpoint the exact line causing the problem. Check the image’s shape and data type using image.shape and image.dtype to ensure it’s compatible with the function you’re using. Incorrect data types are a common source of errors.
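A small diagnostic routine along these lines can be sketched as follows. The `describe` helper and the file name are hypothetical, and a constant array stands in for a loaded image so the snippet runs without a file on disk.

```python
import os
import numpy as np
from skimage import util


def describe(image):
    """Hypothetical helper: summarize the properties that most often explain errors."""
    return {
        "shape": image.shape,
        "dtype": str(image.dtype),
        "min": float(image.min()),
        "max": float(image.max()),
    }


path = "image.jpg"  # hypothetical path, used only for the existence check
print(path, "exists:", os.path.exists(path))

# Stand-in for a loaded 8-bit RGB image (an assumption for illustration).
image = np.full((4, 6, 3), 200, dtype=np.uint8)
print(describe(image))

# Many functions expect floats in [0, 1]; img_as_float rescales integer data.
as_float = util.img_as_float(image)
print(describe(as_float))
```

Comparing the two printed summaries shows the typical pitfall: the same pixel reads 200 as uint8 but about 0.78 as float, so mixing the two ranges in one pipeline silently produces wrong results.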

Finally, consult the scikit-image documentation and look for error messages online. Many common issues have well-documented solutions. If you’re dealing with a complex scenario, consider providing a minimal reproducible example on platforms like Stack Overflow to get faster help from the community.

Q 26. What are some advanced techniques in image processing that scikit-image supports?

Scikit-image supports several advanced techniques. One powerful area is image registration, which aligns multiple images taken from different viewpoints or at different times. This is critical in medical imaging, satellite imagery, and microscopy. Scikit-image provides tools for various registration methods, including feature-based and intensity-based techniques.
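As a minimal intensity-based example, the sketch below recovers a known translation with `skimage.registration.phase_cross_correlation`. The random test image and the shift are assumptions for illustration; only the first element of the return value (the shift) is used.

```python
import numpy as np
from scipy import ndimage as ndi
from skimage.registration import phase_cross_correlation

# Synthetic reference image: smoothed random noise (assumption for illustration).
rng = np.random.default_rng(0)
reference = ndi.gaussian_filter(rng.random((128, 128)), sigma=2)

# Create a "moving" image by circularly shifting the reference.
applied_shift = (5, -3)  # (rows, cols)
moving = np.roll(reference, applied_shift, axis=(0, 1))

# The first returned element is the shift that registers `moving`
# back onto `reference`, so it is the negative of the applied shift.
estimated_shift = phase_cross_correlation(reference, moving)[0]
print("estimated shift (rows, cols):", estimated_shift)

# Apply the estimated shift to align the moving image.
aligned = np.roll(moving, tuple(int(round(s)) for s in estimated_shift), axis=(0, 1))
print("aligned matches reference:", np.allclose(aligned, reference))
```

This handles pure translation only. Rotation, scaling, or perspective changes call for a feature-based approach, for example matching keypoints and fitting a transform.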

Another area is image segmentation, although less advanced than deep learning solutions. Techniques like watershed segmentation and region growing are available for separating objects in an image based on intensity or texture differences. These methods can be very effective when combined with other preprocessing steps, like binarization.

Furthermore, scikit-image facilitates advanced morphological operations, like erosion, dilation, opening, and closing, which are crucial for shape analysis and feature extraction. These techniques can remove noise, smooth boundaries, or fill holes in images.
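The effect of opening and closing is easiest to see on a tiny mask. This is a sketch on synthetic data, with a 3x3 square footprint chosen as an assumption; the generic `opening` and `closing` operators are used here on a boolean mask.

```python
import numpy as np
from skimage import morphology

# Synthetic binary mask (assumption for illustration).
mask = np.zeros((40, 40), dtype=bool)
mask[10:30, 10:30] = True  # one square object
mask[20, 20] = False       # a one-pixel hole inside the object
mask[3, 3] = True          # an isolated noise pixel

footprint = np.ones((3, 3), dtype=bool)

# Opening (erosion then dilation) removes structures smaller than the footprint.
opened = morphology.opening(mask, footprint)

# Closing (dilation then erosion) fills holes smaller than the footprint.
cleaned = morphology.closing(opened, footprint)

print("noise pixel kept:", bool(cleaned[3, 3]))
print("hole filled:     ", bool(cleaned[20, 20]))
print("object area:     ", int(cleaned.sum()))
```

The order matters: opening first removes the speck so that the later dilation inside closing cannot grow it.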

Finally, the library supports sophisticated filtering techniques beyond simple averaging, including wavelet denoising, total-variation denoising, and non-local means (all in skimage.restoration), for noise reduction that preserves edges.
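As one concrete instance of filtering beyond simple averaging, here is a sketch of total-variation denoising with `skimage.restoration.denoise_tv_chambolle`. The test image, noise level, and `weight` are assumptions for illustration.

```python
import numpy as np
from skimage import restoration

# Synthetic piecewise-constant image with additive Gaussian noise
# (assumption for illustration).
rng = np.random.default_rng(0)
clean = np.zeros((64, 64))
clean[16:48, 16:48] = 1.0
noisy = clean + rng.normal(scale=0.2, size=clean.shape)

# A larger weight removes more noise at the cost of flattening fine detail.
denoised = restoration.denoise_tv_chambolle(noisy, weight=0.2)

mse_noisy = np.mean((noisy - clean) ** 2)
mse_denoised = np.mean((denoised - clean) ** 2)
print(f"MSE before: {mse_noisy:.4f}, after: {mse_denoised:.4f}")
```

Total-variation denoising favors flat regions separated by sharp edges, which suits this kind of image well. Textured images may be better served by non-local means.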

Q 27. How would you optimize scikit-image code for speed and efficiency?

Optimizing scikit-image code involves several strategies. First, use NumPy effectively, since scikit-image represents every image as a NumPy array. Avoid unnecessary Python loops and use NumPy's vectorized operations instead. Vectorization lets NumPy operate on entire arrays at once in compiled code, rather than processing each element individually in the interpreter, which leads to significant speed improvements.
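The sketch below contrasts a per-pixel Python loop with the equivalent vectorized expression for a simple min-max rescale. Both function names are hypothetical, and timings will vary by machine.

```python
import time
import numpy as np


def rescale_loop(image):
    """Min-max rescale to [0, 1] with explicit Python loops (slow)."""
    lo, hi = image.min(), image.max()
    out = np.empty(image.shape, dtype=float)
    for r in range(image.shape[0]):
        for c in range(image.shape[1]):
            out[r, c] = (image[r, c] - lo) / (hi - lo)
    return out


def rescale_vectorized(image):
    """The same rescale as one whole-array expression (fast)."""
    lo, hi = image.min(), image.max()
    return (image - lo) / (hi - lo)


rng = np.random.default_rng(0)
image = rng.random((64, 64))

start = time.perf_counter()
slow = rescale_loop(image)
loop_time = time.perf_counter() - start

start = time.perf_counter()
fast = rescale_vectorized(image)
vector_time = time.perf_counter() - start

print("results match:", np.allclose(slow, fast))
print(f"loop: {loop_time:.5f}s, vectorized: {vector_time:.5f}s")
```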

Secondly, choose the appropriate algorithms. Some algorithms are inherently more computationally expensive than others. For instance, adaptive thresholding is more computationally expensive than global thresholding. If speed is paramount, prioritize less intensive methods where possible.

Thirdly, if you’re dealing with very large images, explore techniques like tiling. Process the image in smaller chunks (tiles) instead of loading the entire image into memory at once. This reduces memory usage and can significantly improve performance.
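A minimal tiling sketch follows. The `process_in_tiles` helper is hypothetical, and the approach as written is only exact for per-pixel operations such as gamma correction; neighborhood filters would need overlapping tiles to avoid seams at tile borders.

```python
import numpy as np
from skimage import exposure


def process_in_tiles(image, tile_size, func):
    """Hypothetical helper: apply a per-pixel function one tile at a time."""
    out = np.empty_like(image)
    for r in range(0, image.shape[0], tile_size):
        for c in range(0, image.shape[1], tile_size):
            # Slices past the image edge are clipped, so edge tiles are smaller.
            window = (slice(r, r + tile_size), slice(c, c + tile_size))
            out[window] = func(image[window])
    return out


def brighten(tile):
    """Per-pixel gamma correction; gamma < 1 brightens the image."""
    return exposure.adjust_gamma(tile, gamma=0.5)


rng = np.random.default_rng(0)
image = rng.random((100, 130))  # deliberately not a multiple of the tile size

tiled = process_in_tiles(image, tile_size=32, func=brighten)
whole = brighten(image)
print("tiled result matches whole-image result:", np.allclose(tiled, whole))
```

In practice the input could be a memory-mapped array (for example `np.memmap`) so that only the current tile is read from disk.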

Finally, for extremely large datasets or computationally demanding tasks, consider using libraries like Dask, which provide parallel processing capabilities to distribute the workload across multiple cores or machines.

Q 28. Describe a time you used scikit-image to solve a complex image processing problem.

I once used scikit-image to analyze microscopic images of cells to automatically quantify cell density and size distribution. The images were noisy and had variations in lighting. My solution involved several steps:

1. **Preprocessing:** I used adaptive thresholding (threshold_local) to account for the uneven lighting, followed by morphological operations (binary_opening to remove small noise artifacts, then binary_closing to fill holes in the cells).

2. **Segmentation:** I employed the watershed algorithm (watershed) to segment individual cells, separating them from each other. This required careful parameter tuning to obtain optimal results.

3. **Feature Extraction:** After segmentation, I measured the area and circularity of each segmented cell region. These features were used to characterize cell morphology.

4. **Analysis:** Finally, I used pandas to organize the feature data and generate summary statistics, providing the cell density and size distribution. This automation greatly improved efficiency compared to manual analysis and produced consistent, reproducible measurements.

This project showed how scikit-image's combination of preprocessing, segmentation, and morphological tools supports efficient and precise image analysis on a complex, real-world problem.
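The segmentation and measurement steps of a pipeline like this can be sketched as below. This is not the original project code: two overlapping synthetic disks stand in for an already-binarized cell mask, and the marker selection follows the common distance-transform pattern from the scikit-image watershed example.

```python
import numpy as np
from scipy import ndimage as ndi
from skimage import measure
from skimage.feature import peak_local_max
from skimage.segmentation import watershed

# Stand-in for a binarized micrograph: two touching "cells"
# (assumption for illustration).
rows, cols = np.indices((80, 80))
cell_a = (rows - 28) ** 2 + (cols - 28) ** 2 < 16 ** 2
cell_b = (rows - 44) ** 2 + (cols - 52) ** 2 < 20 ** 2
mask = cell_a | cell_b

# Markers: local maxima of the distance to the background, one per cell center.
distance = ndi.distance_transform_edt(mask)
peaks = peak_local_max(distance, footprint=np.ones((3, 3)),
                       labels=measure.label(mask))
markers = np.zeros(mask.shape, dtype=int)
markers[tuple(peaks.T)] = np.arange(1, len(peaks) + 1)

# Watershed on the inverted distance map splits the touching cells.
labels = watershed(-distance, markers, mask=mask)

# Per-cell features; circularity is derived as 4*pi*area / perimeter**2.
table = measure.regionprops_table(
    labels, properties=("label", "area", "perimeter"))
circularity = 4 * np.pi * table["area"] / table["perimeter"] ** 2

print("cells found:", int(labels.max()))
print("areas:", table["area"])
print("circularity:", np.round(circularity, 2))
```

The dictionary returned by `regionprops_table` can be passed directly to `pandas.DataFrame(table)` for the summary statistics described in the analysis step.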

Key Topics to Learn for scikit-image Interview

- Image Filtering and Enhancement: Understand various filtering techniques (e.g., Gaussian, median, bilateral) and their applications in noise reduction, sharpening, and edge detection. Consider the trade-offs between different methods.

- Feature Extraction and Detection: Master techniques for extracting meaningful features from images, such as edges, corners, and textures. Explore Harris corner detection and the ORB, SIFT, and BRIEF algorithms available in skimage.feature, along with their practical applications in object recognition and image registration. (SURF is not part of scikit-image.)

- Image Segmentation: Learn different segmentation methods, including thresholding, region growing, watershed, and more advanced techniques like graph cuts. Understand the strengths and weaknesses of each approach and how to choose the appropriate method for a given task.

- Color Spaces and Transformations: Familiarize yourself with different color spaces (RGB, HSV, LAB) and their properties. Understand color space transformations and their impact on image processing tasks.

- Image Morphology: Grasp the concepts of erosion, dilation, opening, and closing and their applications in image cleaning and shape analysis.

- Image Registration and Alignment: Learn about techniques for aligning images, such as affine and projective transformations. Understand the challenges and approaches to registering images with different viewpoints or scales.

- Practical Application: Be prepared to discuss how you’d apply scikit-image to solve real-world problems. Think about applications in medical imaging, remote sensing, or computer vision.

- Performance Optimization: Understand strategies for optimizing the performance of your scikit-image code, including efficient data structures and algorithm choices.

Next Steps

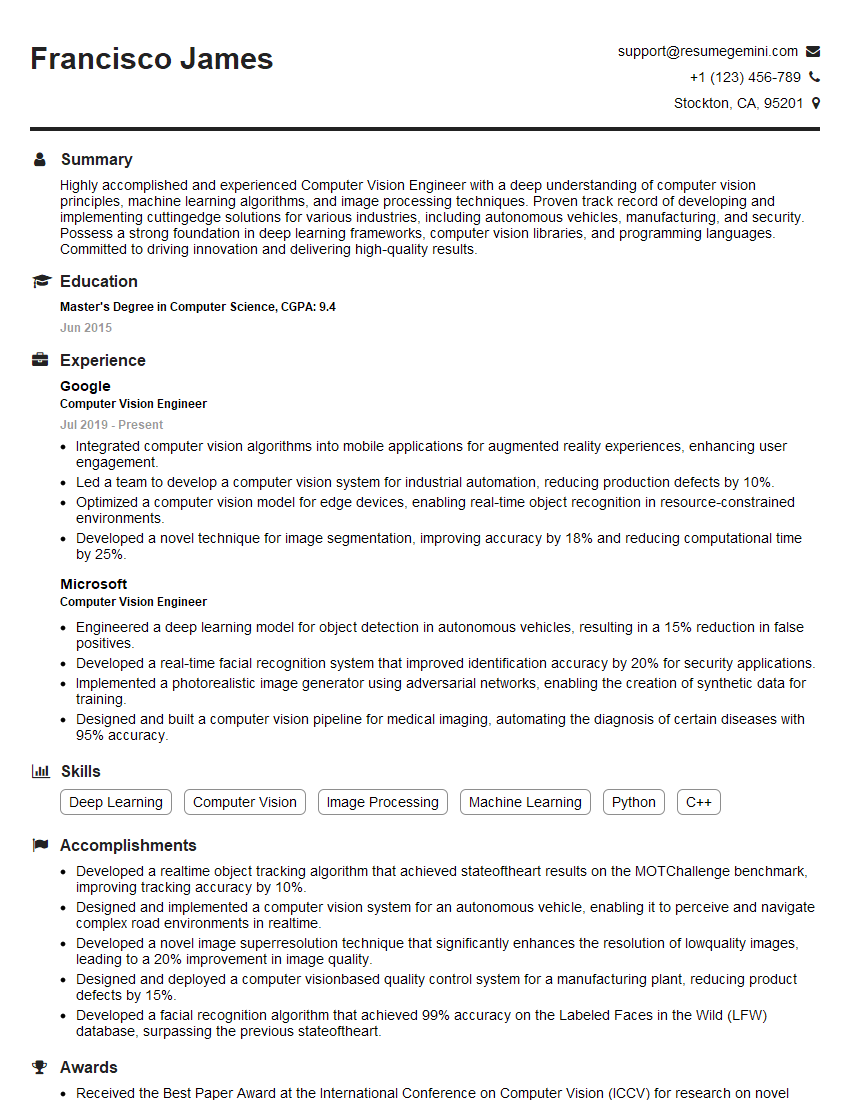

Mastering scikit-image opens doors to exciting careers in image processing and computer vision. Demonstrating proficiency in this library significantly enhances your job prospects. To maximize your chances, create a compelling and ATS-friendly resume that highlights your skills and experience. ResumeGemini is a trusted resource that can help you build a professional and effective resume. They provide examples of resumes tailored to scikit-image to guide you, ensuring your application stands out from the competition.
