Interviews are opportunities to demonstrate your expertise, and this guide is here to help you shine. Explore the essential Syntactic Analysis interview questions that employers frequently ask, paired with strategies for crafting responses that set you apart from the competition.

Questions Asked in Syntactic Analysis Interview

Q 1. Explain the difference between context-free grammars and dependency grammars.

Context-free grammars (CFGs) and dependency grammars represent distinct approaches to syntactic analysis. CFGs, based on phrase structure rules, describe sentence structure using hierarchical tree-like structures, showing how phrases combine to form larger phrases and ultimately, sentences. Think of it like a recipe where phrases are ingredients and the rules specify how to combine them.

Dependency grammars, conversely, focus on the relationships between individual words (dependencies). They represent sentence structure as a directed graph where words are nodes and dependencies are edges showing which words modify or depend on others. This resembles a network where each word is connected to the word it directly modifies.

Example: Consider the sentence “The cat sat on the mat.”

CFG Representation: Might involve rules like S → NP VP, NP → Det N, VP → V PP, PP → P NP, etc., resulting in a parse tree with hierarchical structure.

Dependency Representation: Would represent it as a graph where ‘sat’ is the root, ‘cat’ depends on ‘sat’, ‘on’ depends on ‘sat’, ‘mat’ depends on ‘on’, and ‘the’ depends on both ‘cat’ and ‘mat’. This is simpler and focuses on direct relationships.

In essence, CFGs emphasize constituency (grouping words into phrases), while dependency grammars highlight the relationships between words.

Q 2. Describe the Chomsky hierarchy and its significance in syntactic analysis.

The Chomsky hierarchy is a classification of formal grammars based on their generative power. It’s crucial in syntactic analysis as it provides a framework for understanding the complexity of different grammars and the types of languages they can describe. Imagine it like a ladder where each level represents grammars capable of producing more complex languages.

- Type 0 (Unrestricted Grammars): The most powerful, capable of describing any recursively enumerable language. These are generally too complex for practical natural language processing.

- Type 1 (Context-Sensitive Grammars): Rules are of the form αAβ → αγβ where A is a non-terminal, α, β, and γ are strings of terminals and non-terminals, and the length of γ is at least 1. These are still quite powerful but less so than type 0.

- Type 2 (Context-Free Grammars): Rules are of the form A → γ, where A is a non-terminal and γ is a string of terminals and non-terminals. These are widely used in NLP for parsing.

- Type 3 (Regular Grammars): Rules are of the form A → a or A → aB, where A and B are non-terminals and ‘a’ is a terminal. These describe the simplest languages, suitable for tasks like lexical analysis.

The significance lies in understanding the trade-off between expressiveness and computational tractability. While more powerful grammars can describe more complex languages, parsing them is often computationally harder. Context-free grammars strike a good balance between descriptive power and efficient parsing algorithms, making them popular in syntactic analysis.

Q 3. What are the key challenges in parsing ambiguous sentences?

Ambiguous sentences pose significant challenges in parsing because they can have multiple valid syntactic analyses. This means the same sentence can be interpreted in different ways, leading to multiple parse trees or dependency graphs. This ambiguity arises from factors such as:

- Lexical Ambiguity: A word can have multiple meanings (e.g., “bank” can be a financial institution or the side of a river).

- Syntactic Ambiguity: The same sentence structure can be interpreted differently due to grammatical flexibility (e.g., “I saw the man with the telescope” – who had the telescope?).

- Attachment Ambiguity: A phrase can be attached to different points in the sentence structure (e.g., “He ate the apple with a fork” – was the apple or the eating done with a fork?).

Handling this requires sophisticated parsing techniques like probabilistic parsing, which assigns probabilities to different parses based on word frequencies, context, and grammar rules. Disambiguation often requires semantic and contextual information beyond pure syntax.

Q 4. How does Part-of-Speech (POS) tagging contribute to syntactic analysis?

Part-of-speech (POS) tagging, the process of assigning grammatical tags (noun, verb, adjective, etc.) to each word in a sentence, is fundamental to syntactic analysis. It provides crucial information that greatly simplifies parsing.

Think of it as providing a roadmap for the parser. Knowing the POS of each word dramatically reduces the search space during parsing. For example, if you know a word is a verb, you can immediately constrain the potential syntactic roles it can play. This reduces ambiguity and speeds up the parsing process. Many parsing algorithms (both CFG and dependency) rely heavily on POS tags as input.

Example: Without POS tags, parsing “The cat sat on the mat” is more challenging. With POS tags (Det-N-V-P-Det-N), the parser knows exactly what grammatical roles each word can play, significantly aiding the process.

Q 5. Explain the concept of head-modifier relationships in dependency parsing.

In dependency parsing, head-modifier relationships describe the grammatical links between words. The “head” is the central word of a phrase, while the “modifier” provides additional information about the head. The relationship is directional, indicating that the modifier depends on the head.

Example: In the phrase “the fluffy cat,” ‘cat’ is the head (the central noun) and ‘the’ and ‘fluffy’ are modifiers. ‘the’ modifies ‘cat’ indicating definiteness, while ‘fluffy’ modifies ‘cat’ by describing its attributes.

Understanding head-modifier relations is crucial because they define the core structure of the sentence in dependency grammar. The head determines the syntactic function of the phrase, and modifiers add details or specifications.

Q 6. Compare and contrast top-down and bottom-up parsing algorithms.

Top-down and bottom-up parsing are two fundamental approaches in syntactic analysis. Top-down parsing starts with the root of the parse tree (usually the sentence symbol ‘S’) and tries to expand it down to match the input sentence. It’s like working from a general blueprint to the specifics. Bottom-up parsing starts with the words of the input and tries to combine them into larger units, ultimately reaching the root symbol. This is like building a house from its individual parts.

Top-Down: Efficient for grammars without excessive ambiguity. Can suffer from ‘left recursion’ issues where the algorithm may get stuck in an infinite loop. Recursive descent parsing and LL(k) parsing are examples.

Bottom-Up: More robust to ambiguity. Can be computationally expensive for certain grammars, but techniques like shift-reduce parsing are quite effective.

In short: Top-down is faster for simple grammars but susceptible to left recursion; bottom-up is more robust but potentially slower for complex cases. The choice depends on the specific grammar and parsing needs.

Q 7. Describe the CYK algorithm and its applications.

The CYK (Cocke-Younger-Kasami) algorithm is a dynamic programming algorithm for parsing context-free grammars in Chomsky Normal Form (CNF). It’s known for its efficiency in determining whether a given string can be generated by a given CFG and, if so, providing a parse tree. Imagine it as a systematic way to check all possible combinations of sub-strings to see if they fit the grammar rules.

How it works: It constructs a table (typically a triangle) where each entry represents whether a substring of the input can be generated by a particular non-terminal. It fills the table from smaller substrings to larger ones using the grammar rules. If the top-right entry (representing the entire input string) contains the start symbol, the string is parsable. The table entries also implicitly store parse information allowing construction of the parse tree.

Applications: CYK is widely used in computational linguistics for parsing, especially when dealing with ambiguous grammars where a single parse is required. It’s also beneficial in theoretical computer science for demonstrating the decidability of certain language properties.

Q 8. What are the advantages and disadvantages of using rule-based vs. statistical parsing methods?

Rule-based and statistical parsing methods represent two distinct approaches to syntactic analysis. Rule-based parsing, also known as symbolic parsing, relies on handcrafted grammar rules to analyze sentences. Statistical parsing, on the other hand, uses probabilistic models trained on large corpora of text to predict the most likely parse tree for a given sentence.

- Rule-based Advantages: More transparent and easier to debug; better control over the grammar; excellent for domains with limited training data.

- Rule-based Disadvantages: Building and maintaining the grammar rules is time-consuming and labor-intensive; difficult to adapt to new domains or dialects; may not handle ambiguous sentences gracefully.

- Statistical Advantages: Can handle large and complex grammars; automatically adapts to different writing styles and domains; generally more robust to noisy or ungrammatical input.

- Statistical Disadvantages: Requires large amounts of annotated training data; may struggle with rare or unseen sentence structures; black-box nature makes debugging more challenging.

Imagine building a car: rule-based parsing is like meticulously designing each component based on engineering principles, while statistical parsing is like analyzing thousands of cars to identify patterns and build a model that approximates the best design. Both approaches have their merits, and the best choice often depends on the specific application and available resources.

Q 9. Explain the concept of constituent structure in phrase structure grammars.

Constituent structure, in the context of phrase structure grammars, refers to the hierarchical organization of words into phrases and clauses. It represents the underlying syntactic relationships within a sentence, showing how words group together to form meaningful units. This hierarchical structure is typically represented as a tree-like diagram called a parse tree or syntax tree.

For example, in the sentence “The quick brown fox jumps over the lazy dog,” the constituent structure might be represented as follows: The words group into noun phrases (“The quick brown fox”), and verb phrases (“jumps over the lazy dog”), and these phrases combine to form the complete sentence.

S / \ NP VP /|\ /|\ Det Adj Adj N V PP / / / / / / \ The quick brown fox jumps over Det Adj N / / / the lazy dog

Understanding constituent structure is crucial for tasks like machine translation, information extraction, and question answering, as it provides a structured representation of the sentence’s meaning and grammatical relationships.

Q 10. How do you handle unknown words during syntactic analysis?

Handling unknown words (out-of-vocabulary words) during syntactic analysis is a significant challenge. Several strategies are employed to address this:

- Using morphological analysis: Breaking down unknown words into their constituent morphemes (smallest meaningful units) can sometimes reveal clues about their part of speech. For example, a word ending in ‘-ing’ might be a gerund or present participle.

- Employing contextual information: The surrounding words and phrases provide valuable context that can help infer the unknown word’s part of speech and syntactic role. This approach leverages the power of distributional semantics.

- Leveraging Word Embeddings: Pre-trained word embeddings, like Word2Vec or GloVe, provide vector representations of words, capturing semantic similarities. These can be used to estimate the syntactic function of unknown words by comparing their embeddings to those of known words in the context.

- Treating unknown words as a special token: A simple strategy is to treat unknown words as a special token (e.g., `

`), which is then handled by the parser according to predefined rules. This is often combined with the above strategies.

The best approach often involves a combination of techniques. Imagine a detective investigating a crime scene; they’d consider all available clues (morphological structure, context, similar cases) to build a hypothesis about the perpetrator. Similarly, we combine multiple strategies to get the best guess for an unknown word’s role in the sentence’s structure.

Q 11. Describe different types of phrase structure rules (e.g., context-free, context-sensitive).

Phrase structure rules define the grammatical structure of a language by specifying how phrases and sentences can be constructed from smaller units. Different types exist, categorized by the constraints they impose:

- Context-Free Grammars (CFGs): These are the most common type, where the rules are of the form

A -> α, meaning that a non-terminal symbolAcan be replaced by a sequence of symbolsα(which can be terminals or non-terminals). The replacement is independent of the surrounding context. Example:S -> NP VP(A sentence consists of a noun phrase and a verb phrase). - Context-Sensitive Grammars (CSGs): These grammars allow the replacement of a non-terminal to depend on the context in which it appears. Rules are of the form

αAβ -> αγβ, meaningAcan be replaced byγonly when surrounded byαandβ. This allows for the expression of more complex linguistic phenomena, but also increases the complexity of parsing. - Head-Driven Phrase Structure Grammar (HPSG): This is a more sophisticated type of grammar that incorporates features and constraints into the rules, allowing for a more detailed and accurate representation of syntactic structures. It’s more computationally complex than CFGs.

The choice of grammar type depends on the complexity of the linguistic phenomena to be modeled and the computational resources available. CFGs provide a good balance between expressiveness and parsing efficiency, making them the most commonly used type in practical applications.

Q 12. Explain the role of semantic analysis in relation to syntactic analysis.

Syntactic analysis focuses on the grammatical structure of sentences, while semantic analysis focuses on their meaning. Though distinct, they are deeply intertwined. Syntactic analysis provides the structural scaffolding upon which semantic interpretation is built. The parser’s output (the parse tree) provides a structured representation of the sentence, which is then used by the semantic analyzer to interpret the meaning.

For example, consider the sentence “The bank holds money.” Syntactic analysis reveals that “bank” is the subject and “holds” is the verb. However, the semantic interpretation depends on the context. “Bank” can refer to a financial institution or the side of a river. The syntactic structure guides the semantic analyzer to disambiguate the meaning based on context and world knowledge.

In essence, syntactic analysis provides the foundational structure; semantic analysis adds the meaning. They work together to allow computers to understand human language in a more nuanced way.

Q 13. What are some common evaluation metrics for syntactic parsers?

Evaluating syntactic parsers requires comparing their output to a gold standard, typically a manually annotated corpus. Common metrics include:

- Precision: The proportion of predicted parse trees that are correct.

- Recall: The proportion of correctly parsed sentences out of all sentences in the test set.

- F1-score: The harmonic mean of precision and recall, providing a balanced measure of accuracy.

- Labeled Precision and Recall: Similar to precision and recall, but consider the accuracy of the labels assigned to each constituent in the parse tree.

- Unlabeled Precision and Recall: Measure the accuracy of the overall tree structure, ignoring the constituent labels.

- PARS (Parse Accuracy Rate): This metric looks at the overall accuracy of the parse tree compared to the gold standard parse tree. It’s sensitive to the entire parse tree structure being correctly identified.

The choice of metric depends on the specific application and priorities. For instance, if capturing fine-grained syntactic details is critical (e.g., in machine translation), labeled precision and recall are preferred. For simpler tasks, unlabeled metrics might suffice.

Q 14. How do you address the problem of long-range dependencies in parsing?

Long-range dependencies refer to grammatical relationships between words that are far apart in a sentence. These dependencies present significant challenges to parsing because the parser needs to maintain information across a large span of words. For example, in the sentence “The dog that chased the cat that sat on the mat slept soundly,” the relationship between “dog” and “slept” spans several intervening clauses.

Several techniques are employed to address this:

- Transition-based parsers: These incremental parsers maintain a stack and buffer of words, adding constituents as they process the sentence. This can help to retain information across larger distances, though efficiency can decrease with very long dependencies.

- Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks: These neural network architectures are designed to handle sequential data and can capture long-range dependencies effectively by maintaining a hidden state that reflects the context of past inputs. LSTMs, in particular, are well-suited for handling the vanishing gradient problem that often affects RNNs with long sequences.

- Attention Mechanisms: Attention mechanisms allow the model to focus on relevant parts of the sentence during processing, thereby facilitating the capturing of long-range dependencies without relying solely on the sequential processing order.

The choice of method often depends on the specific task, data, and available computational resources. LSTMs and attention mechanisms have become particularly popular in recent years due to their ability to effectively manage long-range dependencies in large-scale datasets.

Q 15. Describe different types of syntactic ambiguities and their resolution methods.

Syntactic ambiguity arises when a sentence can be parsed in multiple ways, leading to different meanings. Think of it like a poorly written instruction manual – you could interpret the steps in several ways, each leading to a different outcome. Common types include:

- Attachment Ambiguity: This occurs when a phrase can be attached to multiple parts of the sentence. For example, “I saw the man with the telescope.” Did I see the man *using* the telescope, or was the man *carrying* the telescope?

- Coordination Ambiguity: This arises from the interpretation of conjunctions like “and” or “or.” Consider, “The dog chased the cat and the bird.” Did the dog chase both the cat and the bird, or did it chase the cat and then separately chase the bird?

- Prepositional Phrase Attachment Ambiguity: This happens with prepositional phrases which can modify different parts of the sentence. For instance, “The boy hit the girl with the bat.” Did the boy use the bat to hit the girl, or did the girl have a bat?

Resolving these ambiguities often involves using contextual information, semantic knowledge, or even probabilistic methods. For example, understanding the typical usage of “with” might suggest a tool-related meaning making the first interpretation more likely in “I saw the man with the telescope.” Advanced parsing techniques leverage statistical models trained on large corpora to estimate the likelihood of each possible parse, choosing the most probable one. This is similar to how we understand ambiguous phrases in everyday speech – we use our understanding of the world and common sense to make the most logical interpretation.

Career Expert Tips:

- Ace those interviews! Prepare effectively by reviewing the Top 50 Most Common Interview Questions on ResumeGemini.

- Navigate your job search with confidence! Explore a wide range of Career Tips on ResumeGemini. Learn about common challenges and recommendations to overcome them.

- Craft the perfect resume! Master the Art of Resume Writing with ResumeGemini’s guide. Showcase your unique qualifications and achievements effectively.

- Don’t miss out on holiday savings! Build your dream resume with ResumeGemini’s ATS optimized templates.

Q 16. Explain the concept of treebanks and their use in syntactic analysis.

Treebanks are large collections of sentences annotated with their corresponding syntactic structures, typically represented as parse trees. Imagine a meticulously labeled collection of sentence diagrams. Each sentence is parsed and its grammatical relationships are explicitly marked, showing how words are grouped into phrases and clauses. This provides a gold standard for evaluating and training syntactic parsers.

Their use in syntactic analysis is crucial for:

- Parser Evaluation: Treebanks provide a benchmark for measuring the accuracy of a syntactic parser by comparing its output to the manually annotated trees. This helps determine how well the parser captures the grammatical structure of sentences.

- Parser Training: Machine learning-based parsers rely heavily on treebanks as training data. The parser learns patterns from the annotated trees and uses these patterns to parse new, unseen sentences.

- Linguistic Research: Treebanks facilitate linguistic research by providing a standardized, large-scale resource for studying grammatical phenomena across a vast corpus of text.

Popular treebanks include the Penn Treebank for English, the Negra Treebank for German, and many others, each offering a valuable resource for researchers and developers in the field.

Q 17. How can you improve the accuracy of a syntactic parser?

Improving the accuracy of a syntactic parser is a multi-faceted challenge. Here are some key strategies:

- More Data: Training on larger and more diverse treebanks leads to better generalization and improved performance. Think of it like a student – the more examples they see, the better they understand the underlying patterns.

- Better Features: Enriching the features used by the parser can lead to significant improvements. These features could include part-of-speech tags, word embeddings, dependency relations, or even contextual information from surrounding words.

- Advanced Algorithms: Employing more sophisticated parsing algorithms, such as transition-based or graph-based parsers, can enhance accuracy by effectively handling complex syntactic structures.

- Handling Ambiguity: Incorporating probabilistic models and using techniques like semantic disambiguation can help resolve syntactic ambiguities more accurately. This is akin to a detective considering contextual clues to crack a case.

- Regular Evaluation and Refinement: Continuous evaluation on held-out data allows for iterative improvement by identifying and addressing weaknesses in the parser. Regular checks for errors and improvements are similar to a writer revising their draft.

Q 18. Discuss the use of machine learning techniques in syntactic analysis.

Machine learning has revolutionized syntactic analysis. Many state-of-the-art parsers are now based on machine learning techniques. The most commonly used are:

- Hidden Markov Models (HMMs): These models were among the first to be used in parsing and are still valuable for their simplicity and efficiency.

- Conditional Random Fields (CRFs): CRFs provide a more powerful framework for sequence labeling tasks such as part-of-speech tagging and syntactic chunking, which are crucial steps in syntactic parsing.

- Recurrent Neural Networks (RNNs) and Long Short-Term Memory networks (LSTMs): These neural network architectures are particularly well-suited for handling sequential data, such as sentences, and have achieved significant success in syntactic parsing. They can capture complex long-range dependencies within sentences.

- Transformers: Models based on the transformer architecture, like BERT and its variants, have demonstrated remarkable capabilities in various NLP tasks, including syntactic parsing. Their ability to capture contextual information makes them powerful tools.

These techniques learn patterns from labeled data (like treebanks) to predict the syntactic structure of sentences. They essentially learn the rules of grammar implicitly, without explicitly being programmed with them.

Q 19. What are some common libraries or tools used for syntactic parsing (e.g., NLTK, Stanford CoreNLP)?

Several popular libraries and tools simplify syntactic parsing:

- NLTK (Natural Language Toolkit): A widely-used Python library providing various parsing algorithms and resources, suitable for educational and research purposes. It offers a range of parsers, including the

nltk.RegexpParserfor rule-based parsing and thenltk.parse.stanford.StanfordParserfor accessing the Stanford CoreNLP parser. - Stanford CoreNLP: A powerful Java-based suite offering various NLP functionalities including highly accurate syntactic parsing. It provides both dependency parsing and constituency parsing.

- spaCy: A Python library known for its speed and efficiency. It offers statistical dependency parsing.

- Stanza: A Python NLP library developed by Stanford offering multilingual support. It features robust syntactic parsing capabilities, amongst other NLP features.

The choice of library depends on the specific needs of the project, including programming language preference, performance requirements, and desired level of accuracy.

Q 20. Explain the difference between shallow and deep parsing.

The difference between shallow and deep parsing lies in the level of grammatical detail captured:

- Shallow parsing identifies basic grammatical structures like noun phrases and verb phrases. Think of it like a quick overview, picking out the main components but not the intricate details. This can be useful for tasks like information extraction, where precise grammatical structure might not be essential. It typically uses simpler techniques and is computationally less expensive.

- Deep parsing generates a complete parse tree, capturing all the grammatical relationships between words in a sentence. This is akin to a thorough analysis, understanding all the nuanced relationships. This is crucial for tasks requiring a comprehensive understanding of the grammatical structure, such as machine translation or semantic analysis. Deep parsing is generally more computationally expensive.

Imagine analyzing a sentence like, “The quick brown fox jumps over the lazy dog.” Shallow parsing might identify “The quick brown fox” as a noun phrase and “jumps over the lazy dog” as a verb phrase. Deep parsing would go further, identifying the relationships between each word, showing how “jumps” is the main verb, “over” is a preposition governing “the lazy dog,” and so on. The level of detail needed dictates the choice between shallow and deep parsing.

Q 21. How would you handle nested clauses during parsing?

Handling nested clauses during parsing requires recursive techniques. The parser needs to be able to handle sentences within sentences. Think of it as layers of boxes within boxes. The parsing algorithm must be designed to correctly identify and parse each nested clause.

Here’s a conceptual approach:

- Recursive Descent Parsing: This approach is commonly used. The parser systematically descends into the parse tree, handling nested clauses by recursively calling the parsing functions for each sub-clause. This is like systematically unwrapping the layers of boxes.

- Transition-based Parsing: This involves a stack and a buffer. When a clause boundary is detected, the parser transitions to a state specifically designed to handle the sub-clause, parsing it and then returning to the parent clause’s processing.

- Chart Parsing: This method builds a chart or table containing all possible parse trees. When a nested clause is encountered, the parser checks the chart for existing parse trees for that sub-clause, avoiding redundant parsing.

The algorithm needs to be able to identify the boundaries of nested clauses (often indicated by specific punctuation or subordinate conjunctions) and then recursively apply parsing rules to them. Each algorithm uses a different method for handling the recursion, but they are all built on this fundamental idea of recursive processing.

Consider the sentence: “The boy who saw the girl who was wearing a red dress ran away.” The parser would need to correctly identify and parse the nested clauses “who saw the girl” and “who was wearing a red dress” before completing the parse of the main clause.

Q 22. Describe different types of parsing errors and how to debug them.

Parsing errors occur when the parser encounters input that doesn’t conform to the rules defined by the grammar. These errors can significantly hinder the analysis process. Let’s explore common types and debugging strategies.

- Syntax Errors: These are violations of the grammar’s rules. For example, a missing semicolon in C++ or an unmatched parenthesis in Python. Debugging usually involves carefully examining the input around the error location, comparing it to the grammar rules, and correcting the syntax.

- Lexical Errors: These stem from problems in the lexical analysis stage (tokenization), like unrecognized keywords or invalid characters. Debugging often requires inspecting the token stream produced by the lexer, identifying the problematic tokens, and rectifying them. This might involve adjusting the lexical rules or cleaning the input data.

- Semantic Errors: While not strictly parsing errors, they can manifest during parsing. These errors occur when the input is syntactically correct but semantically meaningless (e.g., assigning a string value to an integer variable). Debugging requires deeper analysis, often involving semantic analysis tools or techniques beyond parsing.

Debugging parsing errors generally involves:

- Using a Debugger: Most parsers offer debugging features to trace the parser’s execution and identify the point of failure. This allows step-by-step examination of the input and the parser’s actions.

- Error Reporting: A good parser provides informative error messages that pinpoint the location and type of the error. This information is crucial for quick identification and correction.

- Grammar Inspection: If the errors are frequent or systemic, carefully review the grammar itself. Ambiguities or inaccuracies in the grammar can lead to parsing errors. Testing the grammar with various inputs can uncover potential issues.

- Input Validation: In many cases, pre-processing the input data to clean it and ensure it meets certain formatting standards can prevent several errors before parsing begins.

Imagine trying to assemble a complex model kit without the instructions. Syntax errors are like missing or misplaced pieces, lexical errors are like using the wrong pieces, and semantic errors are like assembling the pieces correctly but having a model that doesn’t make sense. Debugging is like carefully examining the instructions and the pieces to find and fix the problems.

Q 23. Explain the impact of different grammar formalisms on parsing complexity.

The choice of grammar formalism significantly impacts the complexity of parsing. Different formalisms have varying expressive power and associated parsing algorithms.

- Regular Grammars (Type 3): These describe regular languages and can be parsed efficiently using finite automata. They are suitable for simple patterns like identifiers or keywords but lack the power to handle nested structures.

- Context-Free Grammars (Type 2): These describe context-free languages and are more expressive, capable of representing nested structures like arithmetic expressions or programming language constructs. Parsing algorithms like recursive descent, LL(k), and LR(k) are commonly used, with varying complexities depending on the grammar’s properties and the parsing algorithm employed. LL(k) parsers, for instance, are relatively simpler to implement but have stricter grammar restrictions compared to LR(k) parsers, which are more powerful but complex.

- Context-Sensitive Grammars (Type 1): These grammars are more expressive than context-free grammars and can handle context-dependent rules. However, parsing context-sensitive grammars is generally much more complex and often requires algorithms with high computational cost.

- Unrestricted Grammars (Type 0): These are the most powerful type but are also Turing complete, meaning their parsing problem is undecidable (there’s no algorithm to guarantee parsing completion for all inputs). They are rarely used in practice due to their complexity.

For example, parsing an arithmetic expression (like 2 + 3 * (4 - 1)) requires a context-free grammar and an algorithm like recursive descent or an LR parser. A regular grammar wouldn’t be sufficient to handle the nested parentheses. Choosing the appropriate grammar formalism is crucial for balancing expressive power and parsing efficiency. Overly complex grammars can lead to inefficient or intractable parsing.

Q 24. How can you evaluate the efficiency of a parsing algorithm?

Evaluating a parsing algorithm’s efficiency involves considering both time and space complexity. We usually analyze the algorithm’s performance in terms of the size of the input (n), which represents the length of the input string or the number of tokens.

- Time Complexity: This measures how the runtime scales with the input size. We express it using Big O notation (e.g., O(n), O(n^2), O(2^n)). Linear time complexity (O(n)) is ideal, while exponential time complexity (O(2^n)) is generally unacceptable for large inputs.

- Space Complexity: This assesses the amount of memory the algorithm requires as a function of the input size. Like time complexity, we use Big O notation to represent it (e.g., O(n), O(log n), O(1)). Linear space complexity (O(n)) is often acceptable, but algorithms with exponential space complexity are impractical for large inputs.

- Empirical Testing: While theoretical analysis is crucial, practical testing with various inputs and sizes helps validate the theoretical analysis and reveals performance characteristics in realistic scenarios. Profiling tools can identify performance bottlenecks in the implementation.

For instance, an efficient parser for a context-free grammar might have a time complexity of O(n) or O(n^2) depending on the specific algorithm used (like LL(1) or LR(1) parsers). A simple recursive descent parser could sometimes have O(n^3) complexity for certain grammar structures, while an algorithm with exponential complexity might become unusable for even moderately sized inputs. Measuring runtime and memory usage with benchmarks allows a comparison between different parsing algorithms.

Q 25. What are some real-world applications of syntactic analysis?

Syntactic analysis has far-reaching applications across various fields:

- Programming Languages: Compilers and interpreters heavily rely on syntactic analysis to understand and interpret code. This enables error detection, code generation, and program execution.

- Natural Language Processing (NLP): Syntactic analysis is fundamental for understanding the grammatical structure of sentences, aiding in tasks like machine translation, text summarization, and question answering. Parsing helps determine relationships between words and phrases.

- Data Validation: Syntactic analysis can ensure data conforms to predefined formats or schemas, preventing errors and improving data quality. This is crucial in applications like database management and data entry systems.

- Software Engineering: Tools for static code analysis leverage syntactic analysis to identify potential bugs or vulnerabilities in code before runtime.

- Document Processing: Parsing helps to structure and extract information from documents like XML or HTML, enabling efficient data extraction and manipulation.

For example, a compiler uses syntactic analysis to verify that a program’s syntax conforms to the language’s grammar. This ensures that the code is structurally correct before code generation or interpretation. Similarly, a search engine uses syntactic analysis to improve search relevance, by understanding the relationships between words in a query. A grammar checker in a word processor uses syntactic analysis to identify grammatical errors in a written document.

Q 26. Discuss the challenges of applying syntactic analysis to different languages.

Applying syntactic analysis to different languages poses several challenges:

- Language Structure: Natural languages are far more complex and ambiguous than programming languages. They lack the rigid structure and well-defined rules of programming languages, making parsing more challenging. Different languages have varying grammatical structures, requiring language-specific grammars and parsing techniques.

- Ambiguity: Natural languages often exhibit syntactic ambiguity, where a sentence can have multiple possible interpretations. Resolving ambiguity requires additional linguistic knowledge and techniques beyond simple parsing.

- Informal Language: Social media text and other informal communication forms contain numerous grammatical errors, contractions, slang, and unconventional expressions, posing difficulties for parsers designed for formal language.

- Data Sparsity: For less-resourced languages, obtaining sufficient training data for accurate parsing can be a major obstacle. This is especially true for languages with limited digital resources or linguistic analysis literature.

For example, the English sentence, “I saw the man with the telescope,” is ambiguous. Did I use the telescope to see the man, or was the man holding the telescope? A parser needs additional context or disambiguation strategies to resolve this. Similarly, the diverse range of grammatical structures in languages like German, with its complex word order and verb placement, presents a greater challenge in parsing than the relatively simpler structure of languages like English.

Q 27. How would you approach the problem of parsing informal language (e.g., social media text)?

Parsing informal language, such as social media text, requires a different approach compared to parsing formal languages. The key is to acknowledge the inherent informality and adapt techniques accordingly.

- Robust Parsing Techniques: Traditional parsing algorithms may struggle with the errors and irregularities in informal language. Robust parsing techniques that can handle missing words, misspelled words, and unconventional grammar are necessary. Techniques like statistical parsing and probabilistic context-free grammars (PCFGs) are well-suited.

- Pre-processing: Cleaning and normalizing the input text are crucial. This may involve steps like removing punctuation, handling contractions, and converting text to lowercase.

- Language Models: Using language models can help predict missing words or correct misspelled words, improving the parsing accuracy.

- Custom Grammars: Creating custom grammars to accommodate the specific characteristics of informal language, such as slang or abbreviations, can enhance parsing accuracy.

- Semantic Analysis: Since syntax alone may be insufficient to interpret informal language, integrating semantic analysis and other NLP techniques is crucial for understanding the meaning and intent of the text.

For example, a tweet might contain slang, abbreviations, and grammatical errors. A robust parser might use a language model to fill in missing words or correct spelling mistakes, while a custom grammar would handle slang and abbreviations. Semantic analysis would then help to understand the overall meaning of the tweet, even if the syntax is imperfect. Imagine trying to understand a telegram; the message is often incomplete, but you can still guess the meaning based on the context.

Key Topics to Learn for Syntactic Analysis Interview

- Context-Free Grammars (CFG): Understanding CFGs, their formal definition, and how to represent them using Backus-Naur Form (BNF) or similar notations is fundamental. Practice designing CFGs for simple languages and analyzing their properties.

- Parsing Techniques: Become proficient in different parsing algorithms, including top-down (recursive descent, LL(k)) and bottom-up (LR(k), shift-reduce) parsing. Understand their strengths, weaknesses, and applicability to various grammar types.

- Parse Trees and Abstract Syntax Trees (ASTs): Learn how to construct parse trees and ASTs from input strings using your chosen parsing technique. Grasp the difference between these representations and their role in compiler design and language processing.

- Ambiguity and Resolution: Understand how ambiguity in grammars can lead to multiple parse trees for the same input. Explore techniques for resolving ambiguity, such as using precedence rules or disambiguation strategies.

- Practical Applications: Explore real-world applications of syntactic analysis, such as compiler construction, natural language processing (NLP), and formal verification. Understanding these applications will deepen your comprehension of the subject’s importance.

- Error Handling and Recovery: Learn how to handle syntax errors gracefully during parsing. Explore techniques for error recovery, such as panic mode recovery or phrase-level recovery.

- Lexical Analysis (Scanning): While a separate topic, having a solid understanding of lexical analysis and its interaction with syntactic analysis is crucial. Understand how tokens are generated and their role in the parsing process.

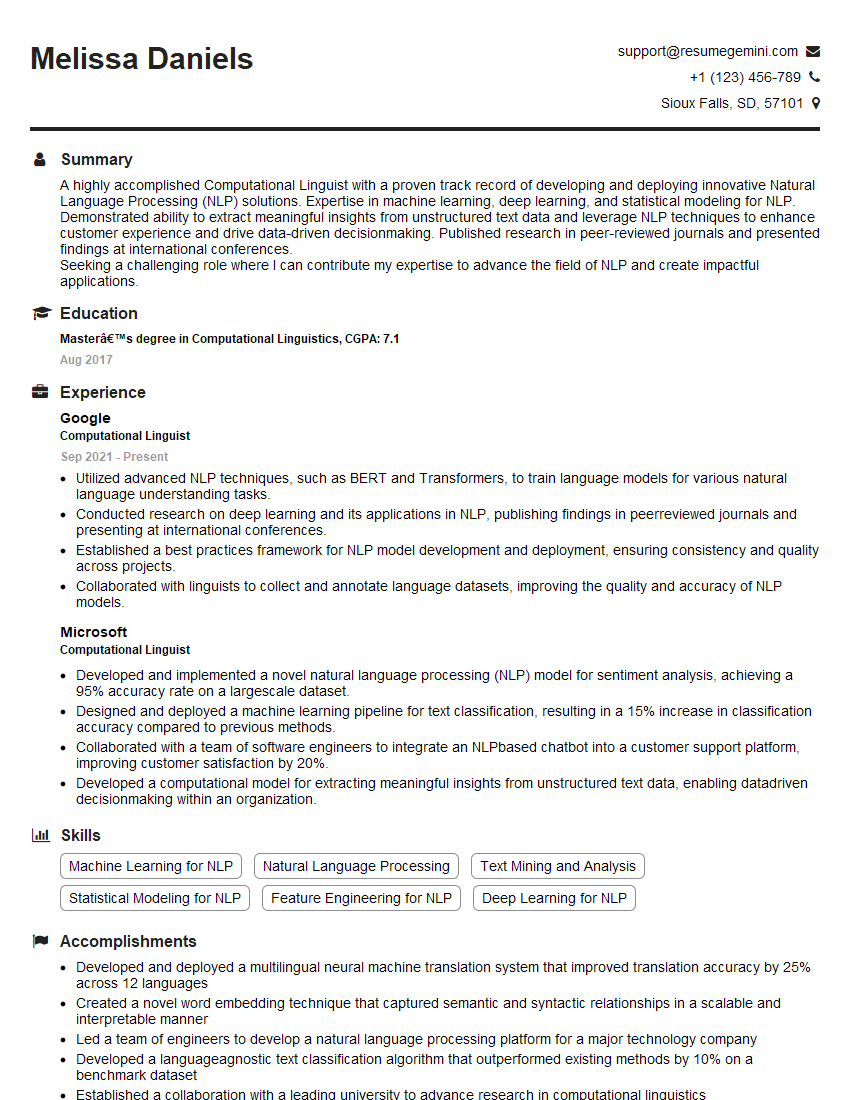

Next Steps

Mastering syntactic analysis significantly enhances your prospects in various high-demand fields, including software engineering, compiler design, and natural language processing. A strong understanding of these concepts demonstrates a solid foundation in computer science and problem-solving abilities highly valued by employers.

To maximize your chances of landing your dream role, crafting a compelling and ATS-friendly resume is paramount. ResumeGemini is a trusted resource that can help you build a professional resume tailored to highlight your skills and experience in syntactic analysis. Examples of resumes tailored to this specialization are available to guide you.

Explore more articles

Users Rating of Our Blogs

Share Your Experience

We value your feedback! Please rate our content and share your thoughts (optional).

What Readers Say About Our Blog

Live Rent Free!

https://bit.ly/LiveRentFREE

Interesting Article, I liked the depth of knowledge you’ve shared.

Helpful, thanks for sharing.

Hi, I represent a social media marketing agency and liked your blog

Hi, I represent an SEO company that specialises in getting you AI citations and higher rankings on Google. I’d like to offer you a 100% free SEO audit for your website. Would you be interested?